Probabilistic Maritime Trajectory Prediction in Complex Scenarios Using Deep Learning

Abstract

1. Introduction

2. Related Work

3. Methodology

3.1. Deep Learning

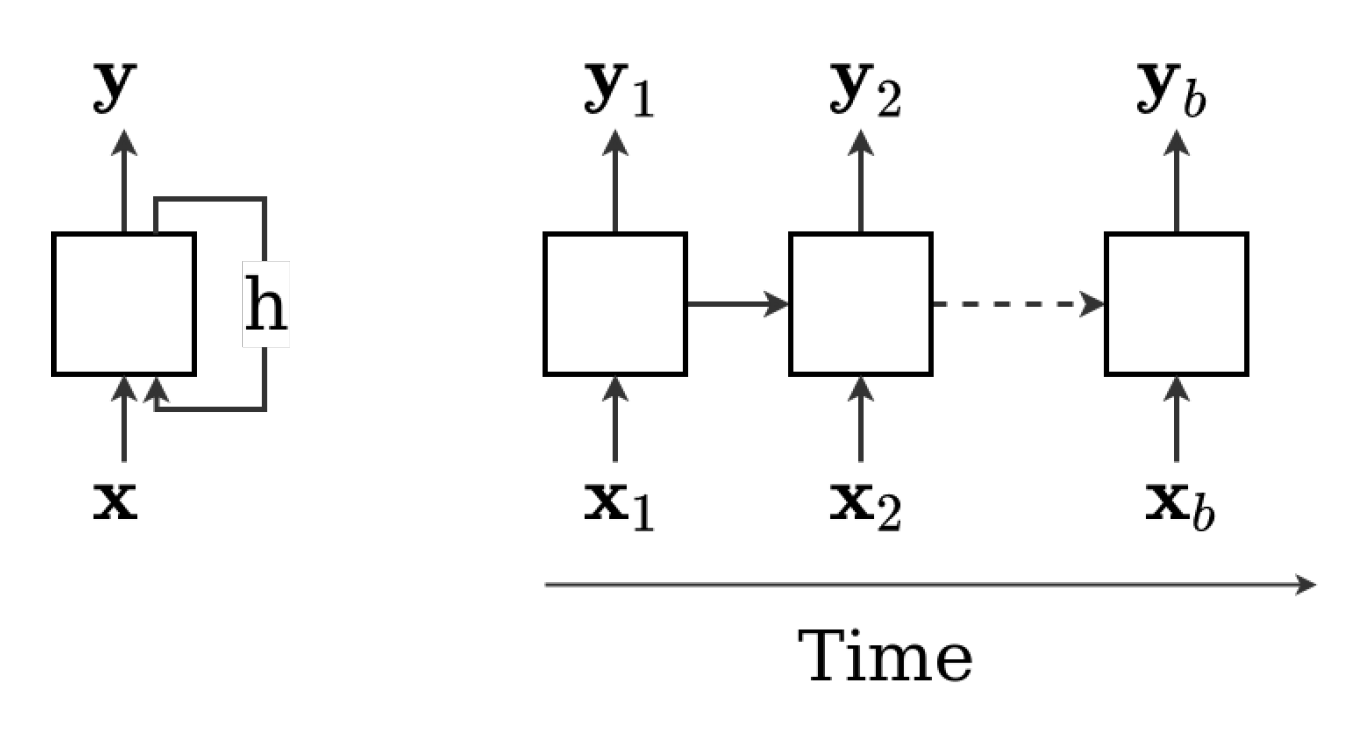

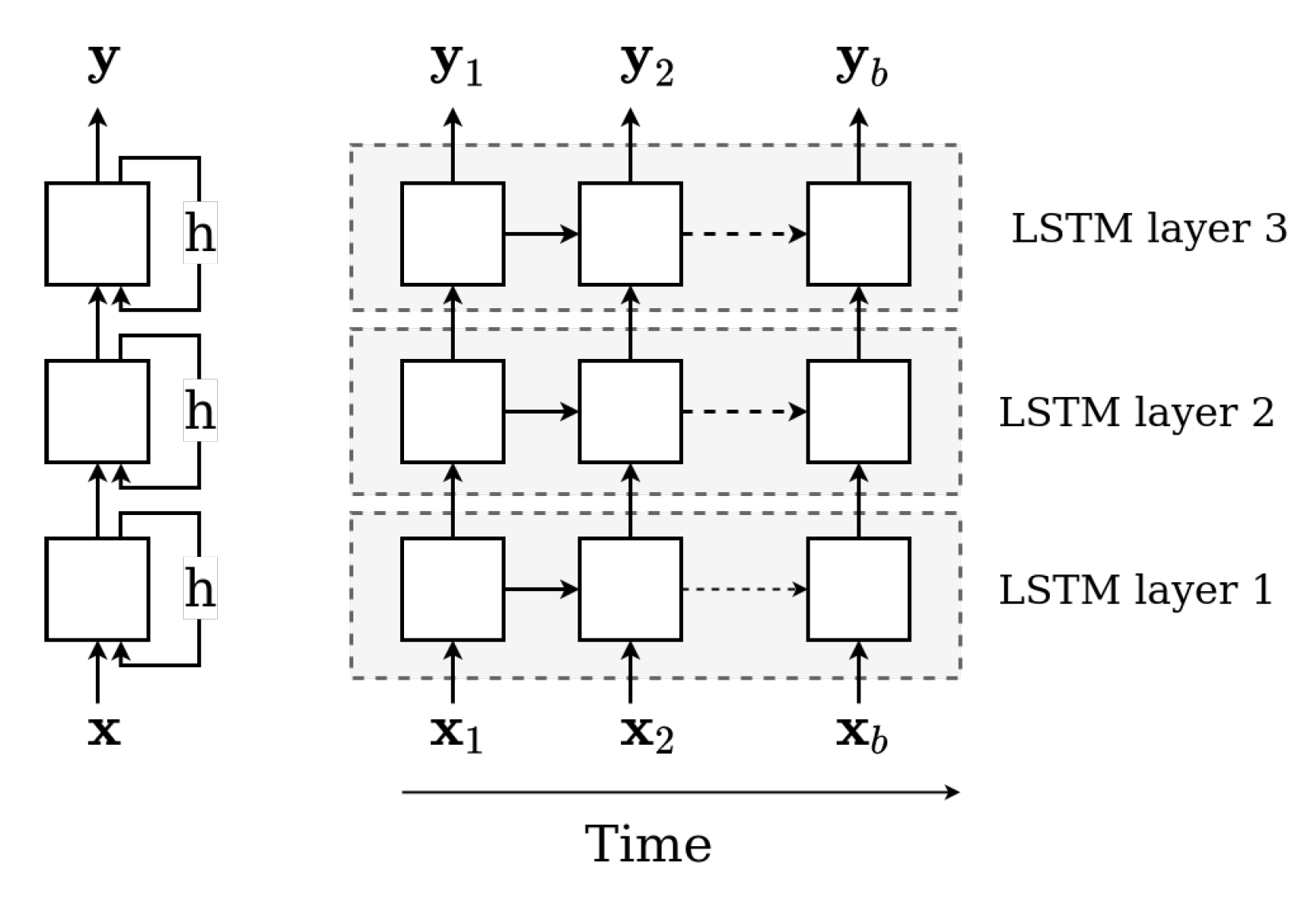

3.2. Long Short-Term Memory Networks

3.3. Mixture Density Network

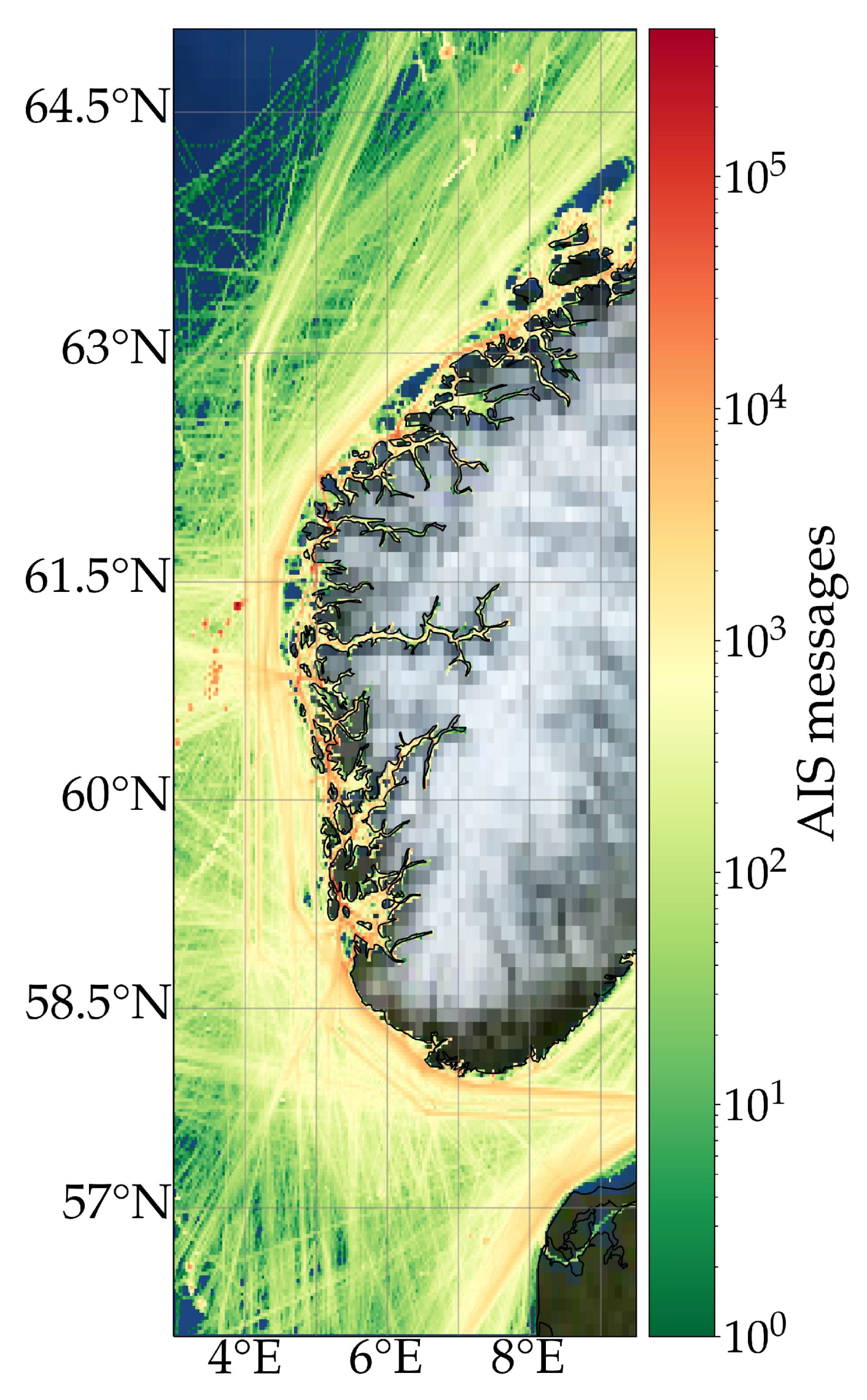

3.4. Data

3.4.1. Data Pre-Processing

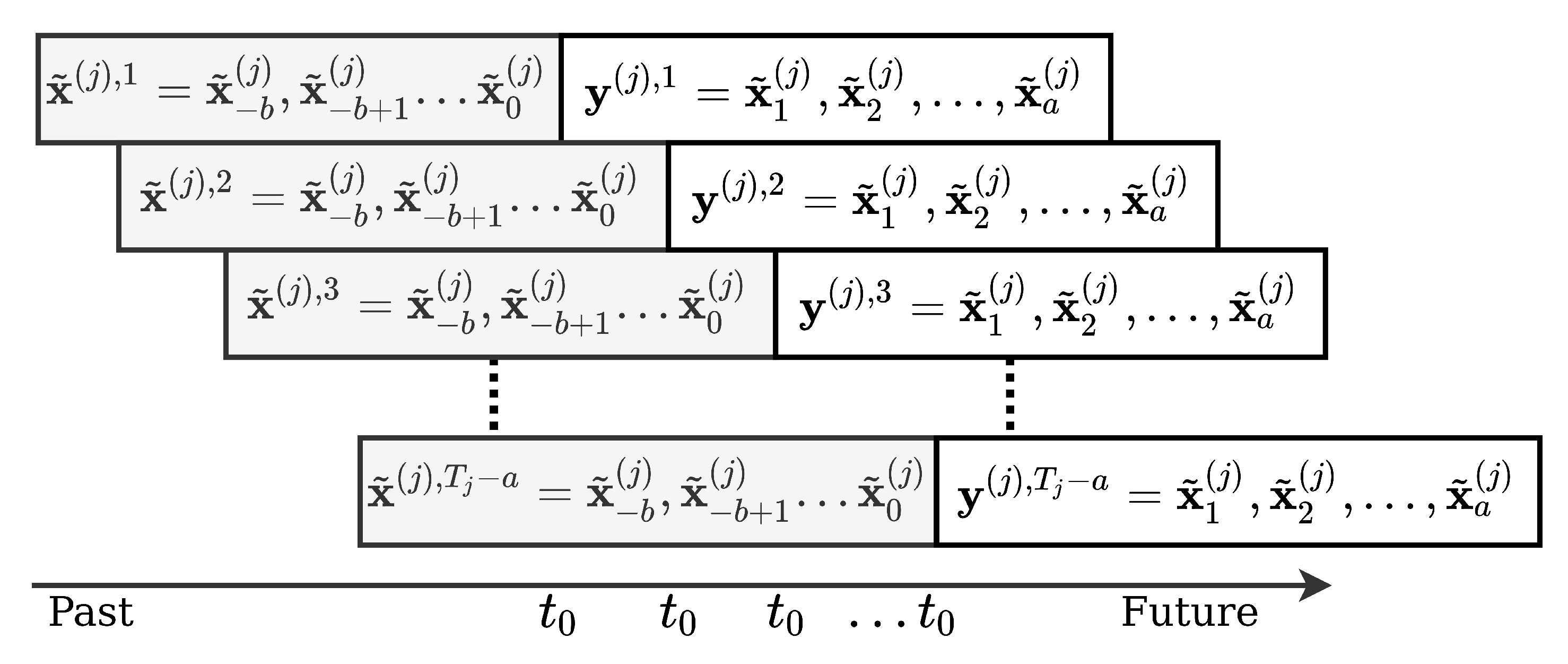

3.4.2. Data for Training

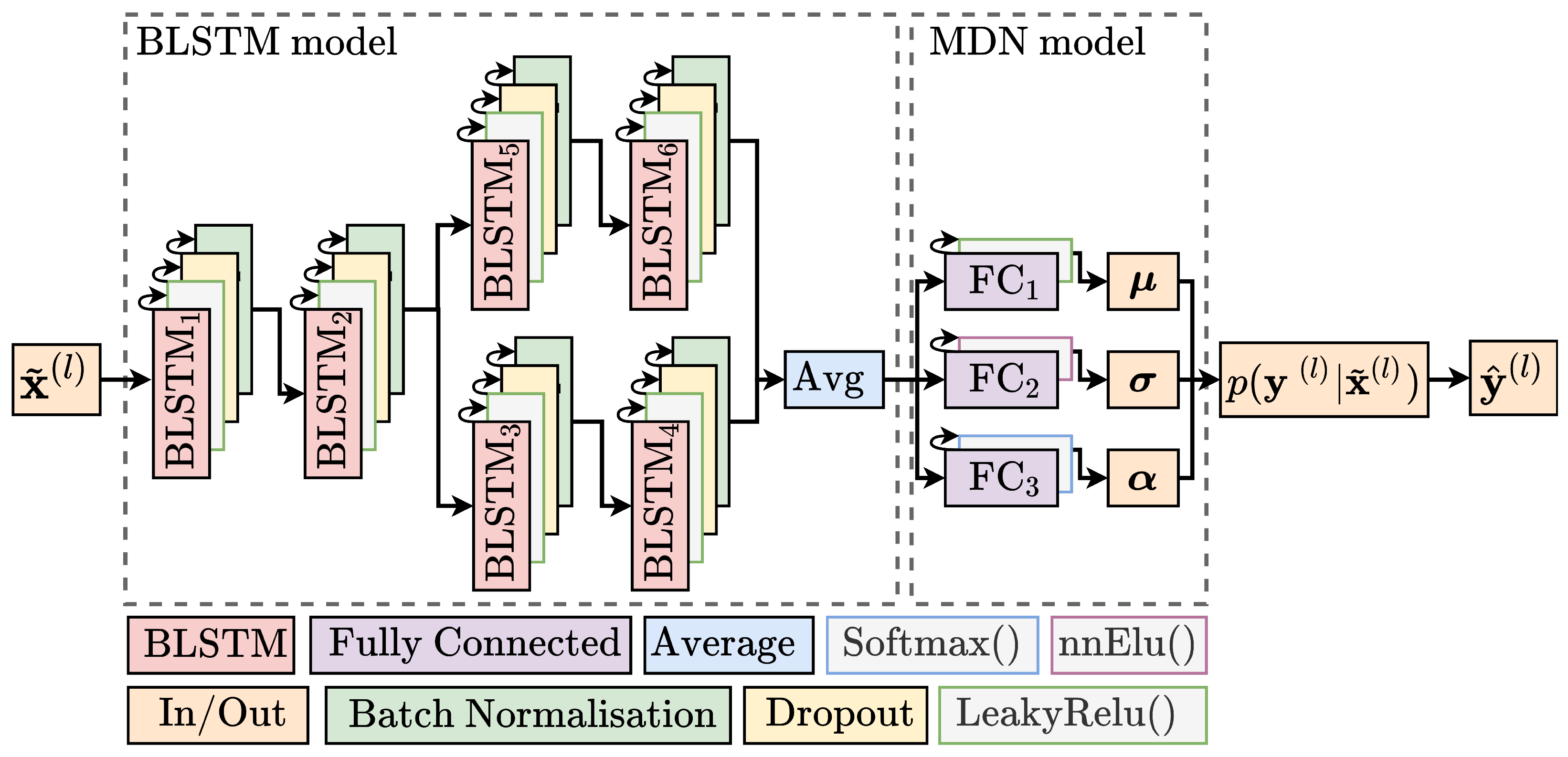

3.5. Model

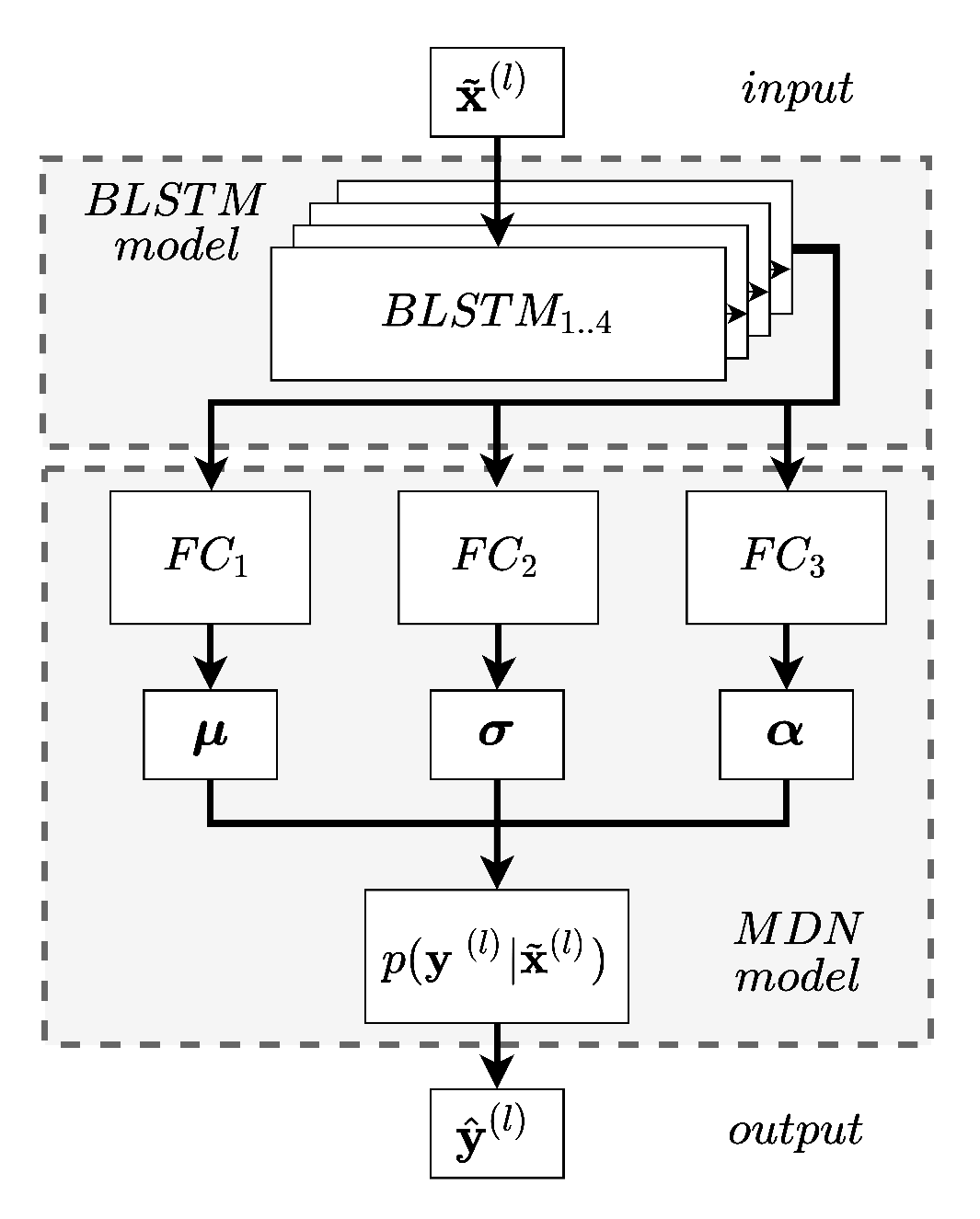

3.5.1. MDN

3.5.2. Optimiser

3.5.3. Loss Function

4. Experimental Results and Discussion

4.1. Data Set and Model Setup

4.2. Model Evaluation

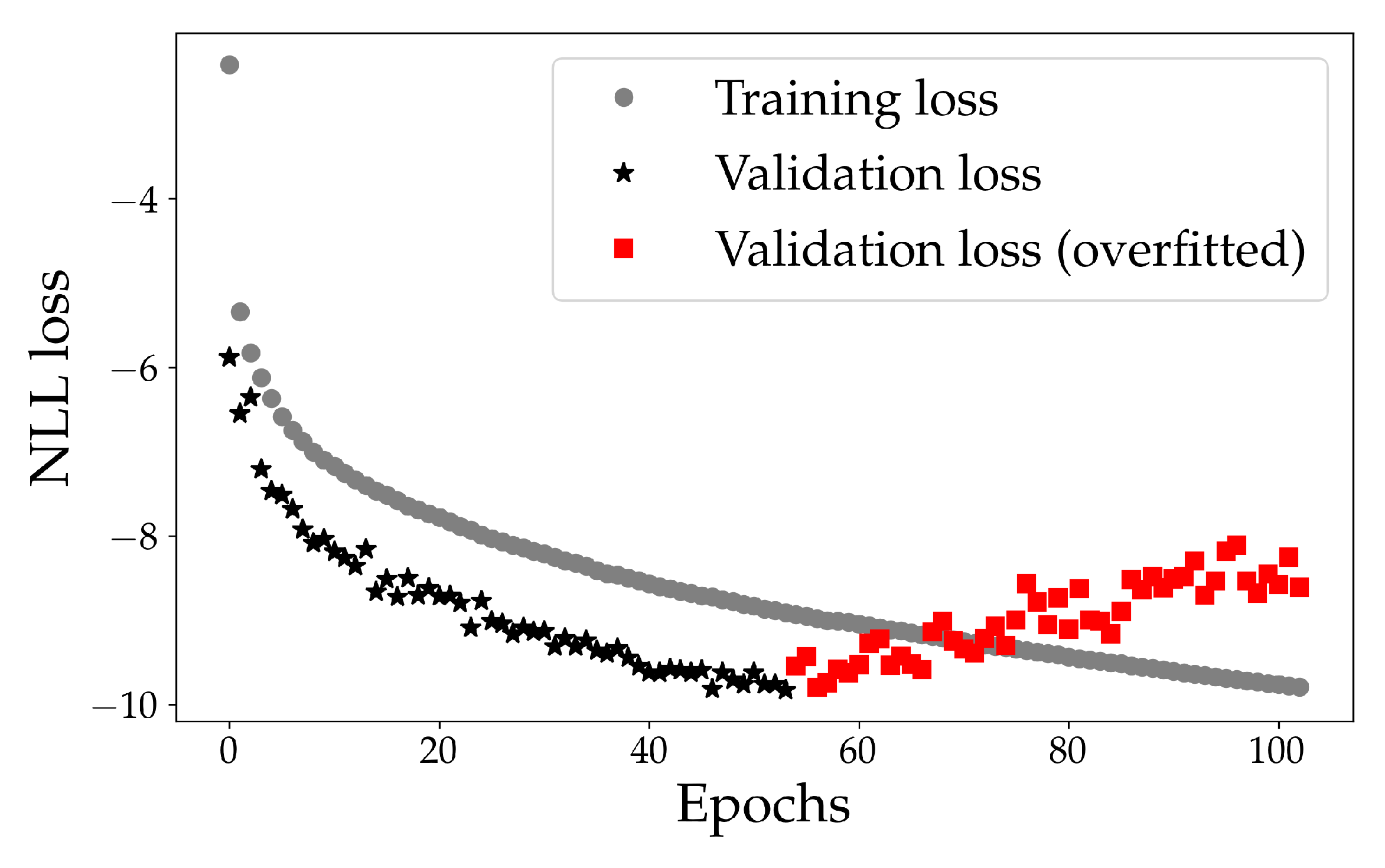

4.2.1. Quantitative Evaluation

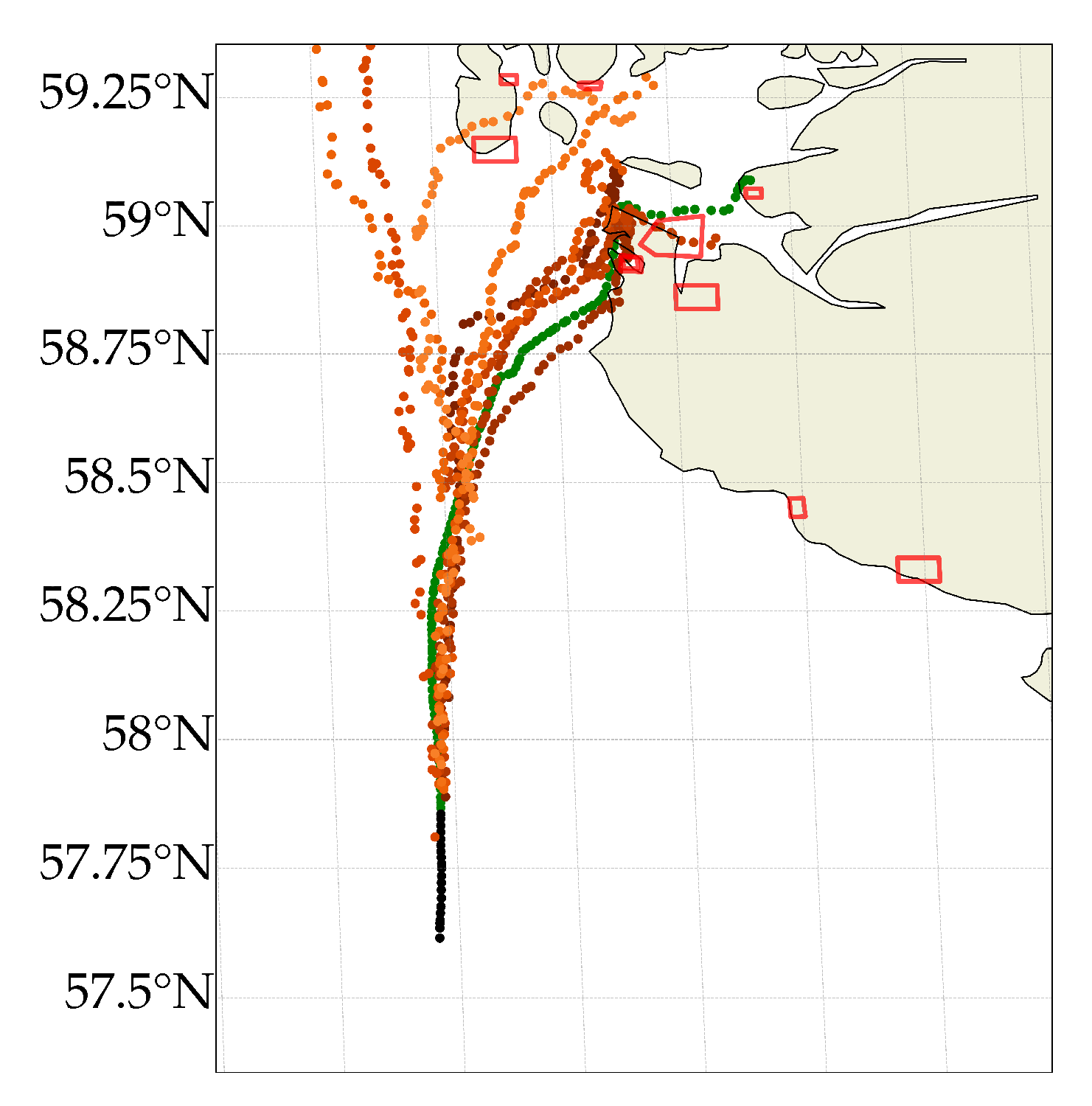

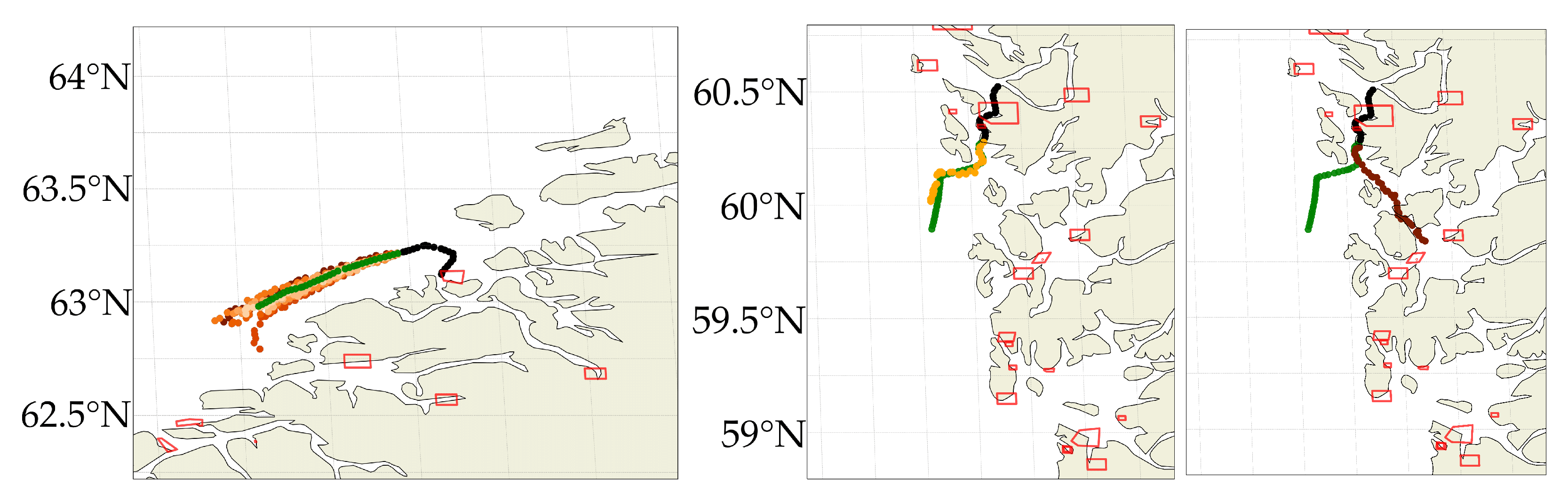

4.2.2. Qualitative Evaluation

4.3. Discussion

4.3.1. Model

4.3.2. Data

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| MSA | Maritime Situational Awareness |
| AIS | Automatic Identification System |
| BLSTM | Bidirectional Long Short-Term Memory |
| SOTA | State-Of-The-Art |
| MDN | Mixture Density Network |
| TREAD | Traffic Route Extraction and Anomaly Detection |
| MP | Multi-step Prediction |
| RMSE | Root-Mean-Squared Error |
| FC | Fully Connected |
| PDF | Probability Density Function |
| sog | Speed Over Ground |
| cog | Course Over Ground |
| NLL | Negative Log Likelihood |

Appendix A. Choice of Mixtures

| Mixtures m | Training NLL | Testing NLL |
|---|---|---|
| 1 | | |
| 2 | | |
| 3 | | |
| 4 | | |
| 5 | | |
| 6 | | |
| 7 | | |
| 8 | | |
| 9 | | |
| 10 | | |
| 11 | | |
| 12 | | |

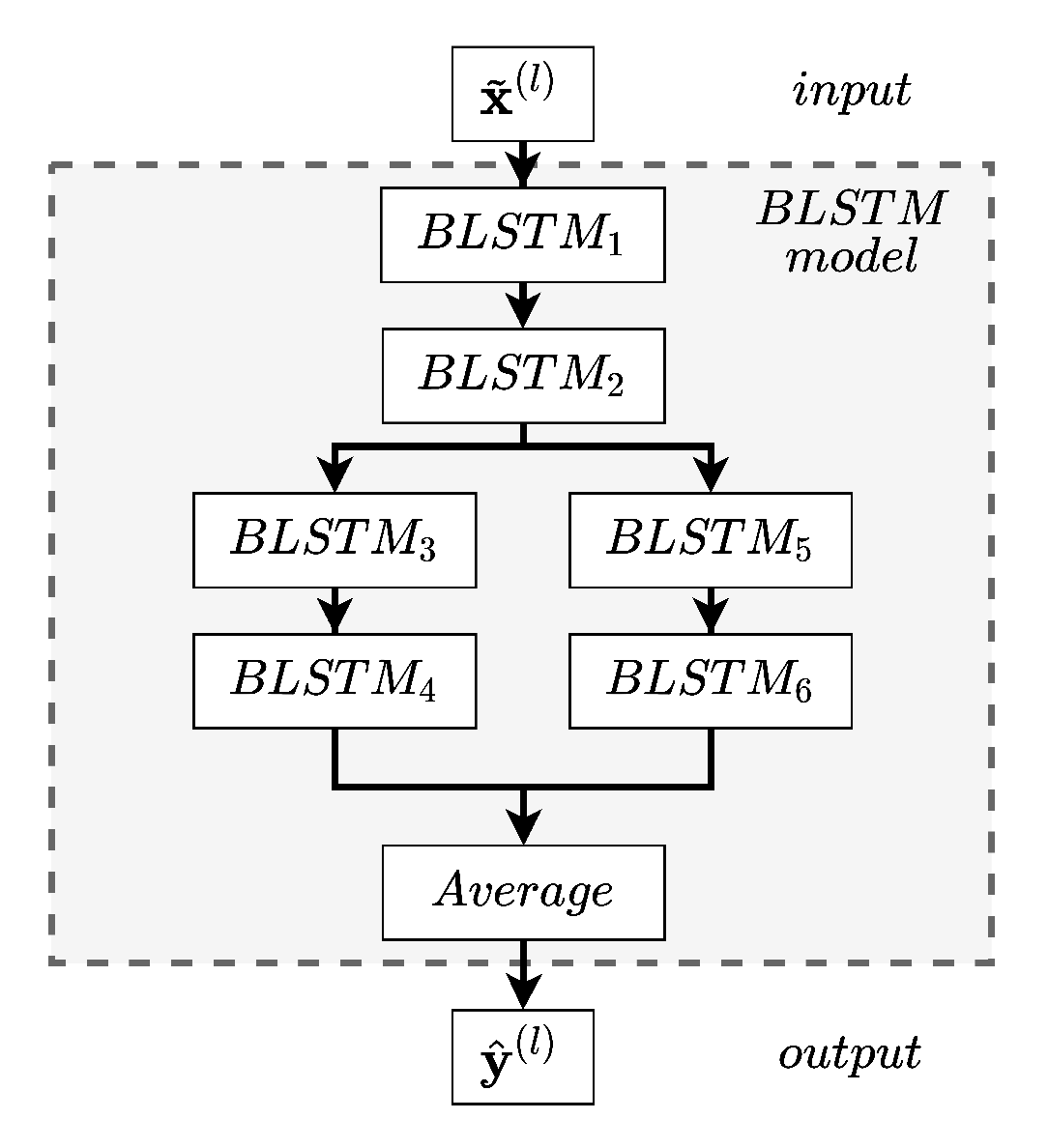

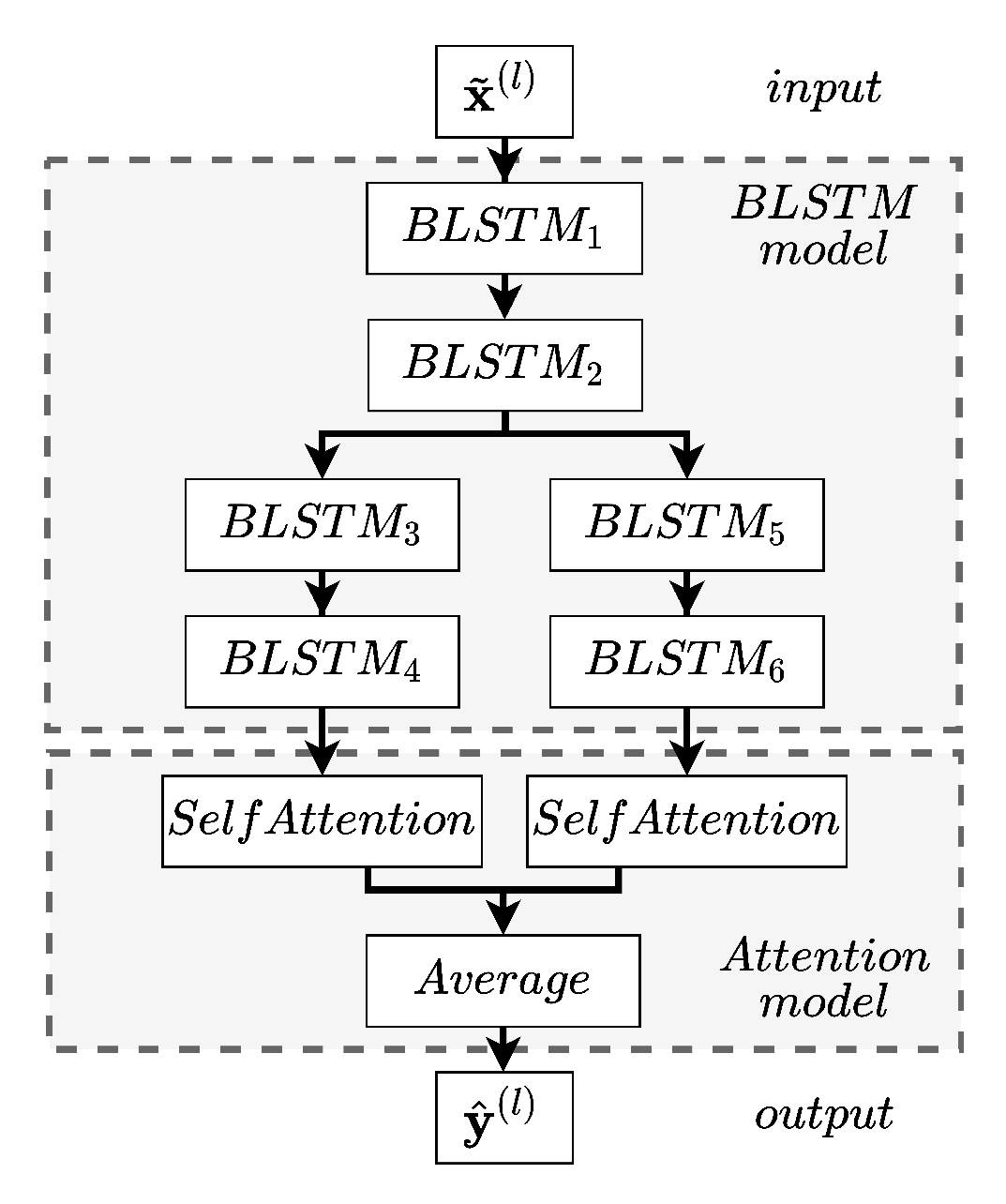
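The NLL values compared across the number of mixtures m are the negative log-likelihood of the targets under the mixture predicted by the MDN. The sketch below shows how that quantity can be computed for a mixture of Gaussians. It assumes diagonal covariances and unnormalised mixture logits; the output dimension D and the parameterisation are assumptions, not taken from the paper, and the function names (`mdn_nll`, `logsumexp`) are hypothetical. The paper's own implementation uses TensorFlow/Keras; this NumPy version only illustrates the formula.

```python
import numpy as np


def logsumexp(a, axis):
    """Numerically stable log(sum(exp(a))) along one axis."""
    m = np.max(a, axis=axis, keepdims=True)
    return np.squeeze(m, axis=axis) + np.log(np.sum(np.exp(a - m), axis=axis))


def mdn_nll(y, pi_logits, mu, log_sigma):
    """Mean negative log-likelihood of targets under a diagonal Gaussian mixture.

    Shapes, with N samples, M mixture components and D output dimensions:
      y:         (N, D)     observed targets
      pi_logits: (N, M)     unnormalised mixture weights
      mu:        (N, M, D)  component means
      log_sigma: (N, M, D)  log standard deviations (diagonal covariance assumed)
    """
    # Log mixture weights via log-softmax, so the weights sum to one.
    log_pi = pi_logits - logsumexp(pi_logits, axis=1)[:, None]
    # Standardised residual of every target against every component.
    z = (y[:, None, :] - mu) / np.exp(log_sigma)
    # Log-density of each component, summed over the independent dimensions.
    log_comp = np.sum(-0.5 * np.log(2.0 * np.pi) - log_sigma - 0.5 * z**2, axis=-1)
    # log p(y) = log sum_m pi_m N(y | mu_m, sigma_m), evaluated in log space.
    log_lik = logsumexp(log_pi + log_comp, axis=1)
    return -np.mean(log_lik)
```

Working in log space avoids underflow when a target lies far from every component, which matters when many components (the hyperparameter table lists M = 11) each carry small weights.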

Appendix B. Models for Comparison

Appendix C. RNN and LSTM

References

1. Danish Ministry of Foreign Affairs. The Cutting Edge of Blue Business. 2020. Available online: https://investindk.com/set-up-a-business/maritime (accessed on 17 December 2021).

2. Forsvarsudvalget. Forsvarsministeriets Fremtidige Opgaveløsning i Arktis; Danish Ministry of Defense: Copenhagen, Denmark, 2015; Available online: https://www.ft.dk/samling/20151/almdel/FOU/bilag/151/1650324.pdf (accessed on 17 December 2021).

3. Jakobsen, U.; í Dali, B. The Greenlandic sea areas and activity level up to 2025. In Maritime Activity in the High North—Current and Estimated Level up to 2025; Borch, O.J., Andreassen, N., Marchenko, N., Ingimundarson, V., Gunnarsdóttir, H., Iudin, I., Petrov, S., Jakobsen, U., Dali, B., Eds.; Nord Universitet Utredning, NORD Universitet: Bodø, Norway, 2016; pp. 86–111.

4. Ball, H. Satellite AIS for Dummies; Wiley: Hoboken, NJ, USA, 2013.

5. International Maritime Organization. Revised Guidelines for the Onboard Operational Use of Shipborne Automatic Identification System (AIS): Resolution A.1106(29). 2015. Available online: https://www.navcen.uscg.gov/pdf/ais/references/IMO_A1106_29_Revised_guidelines.pdf (accessed on 17 December 2021).

6. International Maritime Organization. Regulations for Carriage of AIS. 2020. Available online: https://wwwcdn.imo.org/localresources/en/OurWork/Safety/Documents/AIS/Resolution%20A.1106(29).pdf (accessed on 17 December 2021).

7. Heiselberg, H. A Direct and Fast Methodology for Ship Recognition in Sentinel-2 Multispectral Imagery. Remote Sens. 2016, 8, 1033.

8. Heiselberg, H. Ship-Iceberg Classification in SAR and Multispectral Satellite Images with Neural Networks. Remote Sens. 2020, 12, 2353.

9. Forskningsinstitutt, F. NorSat-3—Ship Surveillance with a Navigation Radar Detector. 2019. Available online: https://ffi-publikasjoner.archive.knowledgearc.net/handle/20.500.12242/2593?show=full (accessed on 17 December 2021).

10. Mozaffari, S.; Al-Jarrah, O.Y.; Dianati, M.; Jennings, P.; Mouzakitis, A. Deep Learning-Based Vehicle Behavior Prediction for Autonomous Driving Applications: A Review. IEEE Trans. Intell. Transp. Syst. 2022, 23, 33–47.

11. Lo Duca, A.; Marchetti, A. Exploiting multiclass classification algorithms for the prediction of ship routes: A study in the area of Malta. J. Syst. Inf. Technol. 2020, 22, 289–307.

12. Pallotta, G.; Vespe, M.; Bryan, K. Vessel Pattern Knowledge Discovery from AIS Data: A Framework for Anomaly Detection and Route Prediction. Entropy 2013, 15, 2218–2245.

13. Pallotta, G.; Vespe, M.; Bryan, K. Traffic knowledge discovery from AIS data. In Proceedings of the 16th International Conference on Information Fusion, Istanbul, Turkey, 9–12 July 2013; pp. 1996–2003.

14. Mazzarella, F.; Arguedas, V.F.; Vespe, M. Knowledge-based vessel position prediction using historical AIS data. In Proceedings of the 2015 Sensor Data Fusion: Trends, Solutions, Applications (SDF), Bonn, Germany, 6–8 October 2015; pp. 1–6.

15. Mazzarella, F.; Vespe, M.; Santamaria, C. SAR Ship Detection and Self-Reporting Data Fusion Based on Traffic Knowledge. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1685–1689.

16. Han, X.; Armenakis, C.; Jadidi, M. DBSCAN optimization for improving marine trajectory clustering and anomaly detection. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 43, 455–461.

17. Liraz, S.P. Ships Trajectories Prediction Using Recurrent Neural Networks Based on AIS Data; Calhoun Institutional Archive of the Naval Postgraduate School: Monterey, CA, USA, 2018; Available online: https://calhoun.nps.edu/handle/10945/60431 (accessed on 17 December 2021).

18. Charla, J.L. Vessel Trajectory Prediction Using Historical AIS Data. Master’s Thesis, Portland State University, Portland, OR, USA, 12 August 2020.

19. Zhang, S.; Wang, L.; Zhu, M.; Chen, S.; Zhang, H.; Zeng, Z. A Bi-directional LSTM Ship Trajectory Prediction Method based on Attention Mechanism. In Proceedings of the 2021 IEEE 5th Advanced Information Technology, Electronic and Automation Control Conference (IAEAC), Chongqing, China, 12–14 March 2021; Volume 5, pp. 1987–1993.

20. Park, J.; Jeong, J.; Park, Y. Ship Trajectory Prediction Based on Bi-LSTM Using Spectral-Clustered AIS Data. J. Mar. Sci. Eng. 2021, 9, 1037.

21. Gao, M.; Shi, G.; Li, S. Online Prediction of Ship Behavior with Automatic Identification System Sensor Data Using Bidirectional Long Short-Term Memory Recurrent Neural Network. Sensors 2018, 18, 4211.

22. Graves, A. Generating Sequences With Recurrent Neural Networks. arXiv 2013, arXiv:1308.0850.

23. Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

24. Gao, D.W.; Zhu, Y.S.; Zhang, J.F.; He, Y.K.; Yan, K.; Yan, B.R. A novel MP-LSTM method for ship trajectory prediction based on AIS data. Ocean Eng. 2021, 228, 108956.

25. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is All you Need. In Advances in Neural Information Processing Systems; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30.

26. Messaoud, K.; Yahiaoui, I.; Verroust-Blondet, A.; Nashashibi, F. Attention Based Vehicle Trajectory Prediction. IEEE Trans. Intell. Veh. 2021, 6, 175–185.

27. Liu, Z.; Zhang, L.; Rao, Z.; Liu, G. Attention-Based Interaction Trajectory Prediction. In Artificial Intelligence and Mobile Services—AIMS 2020; Xu, R., De, W., Zhong, W., Tian, L., Bai, Y., Zhang, L.J., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 168–175.

28. Murray, B.; Perera, L.P. An AIS-based deep learning framework for regional ship behavior prediction. Reliab. Eng. Syst. Saf. 2021, 215, 107819.

29. Rong, H.; Teixeira, A.; Guedes Soares, C. Ship trajectory uncertainty prediction based on a Gaussian Process model. Ocean Eng. 2019, 182, 499–511.

30. Hou, L.H.; Liu, H.J. An End-to-End LSTM-MDN Network for Projectile Trajectory Prediction. In Proceedings of the Intelligence Science and Big Data Engineering, Big Data and Machine Learning, Nanjing, China, 17–20 October 2019; Cui, Z., Pan, J., Zhang, S., Xiao, L., Yang, J., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 114–125.

31. Zhao, Y.; Yang, R.; Chevalier, G.; Shah, R.C.; Romijnders, R. Applying deep bidirectional LSTM and mixture density network for basketball trajectory prediction. Optik 2018, 158, 266–272.

32. Géron, A. Hands-On Machine Learning with Scikit-Learn and TensorFlow: Concepts, Tools, and Techniques to Build Intelligent Systems; O’Reilly Media: Newton, MA, USA, 2017.

33. Pascanu, R.; Mikolov, T.; Bengio, Y. On the difficulty of training recurrent neural networks. In Proceedings of the 30th International Conference on Machine Learning, Atlanta, GA, USA, 16–21 June 2013; Dasgupta, S., McAllester, D., Eds.; PMLR: Atlanta, GA, USA, 2013; Volume 28, pp. 1310–1318.

34. Bishop, C. Mixture Density Networks; Working Paper; Aston University: Birmingham, UK, 1994.

35. Bishop, C. Pattern Recognition and Machine Learning (Information Science and Statistics); Springer: Berlin/Heidelberg, Germany, 2007; ISBN 0387310738.

36. Høye, G.K.; Eriksen, T.; Mel, B.J.; Narheim, B.T. Space-based AIS for global maritime traffic monitoring. Acta Astronaut. 2008, 62, 240–245.

37. Sang, L.Z.; Yan, X.P.; Mao, Z.; Ma, F. Restoring Method of Vessel Track Based on AIS Information. In Proceedings of the 2012 11th International Symposium on Distributed Computing and Applications to Business, Engineering Science, Guilin, China, 19–22 October 2012; pp. 336–340.

38. Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

39. Abadi, M.; Barham, P.; Chen, J.; Chen, Z.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M.; et al. Tensorflow: A system for large-scale machine learning. In Proceedings of the 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI 16), Savannah, GA, USA, 2–4 November 2016; pp. 265–283.

40. Klambauer, G.; Unterthiner, T.; Mayr, A.; Hochreiter, S. Self-Normalizing Neural Networks. arXiv 2017, arXiv:1706.02515.

41. Clevert, D.A.; Unterthiner, T.; Hochreiter, S. Fast and Accurate Deep Network Learning by Exponential Linear Units (ELUs). arXiv 2015, arXiv:1511.07289.

42. Loshchilov, I.; Hutter, F. SGDR: Stochastic Gradient Descent with Warm Restarts. arXiv 2017, arXiv:1608.03983.

43. Van Rossum, G.; Drake, F.L., Jr. Python Reference Manual; Centrum voor Wiskunde en Informatica: Amsterdam, The Netherlands, 1995.

44. Chollet, F.; Zhu, Q.S.; Rahman, F.; Gardener, T.; Gardener, T.; de Marmiesse, G.; Zabluda, O.; Chenta, M.S.; Watson, M.; Santana, E.; et al. Keras. 2015. Available online: https://github.com/fchollet/keras (accessed on 17 December 2021).

45. Keras. Keras FAQ: Why Is My Training Loss Much Higher than My Testing Loss? 2021. Available online: https://keras.io/getting_started/faq/ (accessed on 17 December 2021).

| Hyperparameter | Value | Hyperparameter | Value |
|---|---|---|---|
| BLSTM 1,2,3,5 * | 456 | | |
| BLSTM 4,6 * | 256 | | 0.7 |
| Mixtures, M | 11 | | |
| FC(1,2,3) *** | () | | |
| Dropout ** | | Batch Size, l | 3000 |
| Initializer | LeCun N [40] | | 10 |

| Parameter | Value |
|---|---|
| Resampling time | 5 min |
| Minimum messages | 50 |
| Stop time | 3 h |
| Look back, b | 20 time steps |
| Look ahead, a | 1 time step |
| Features, f | 4 |
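The resampling time, look back b, look ahead a and feature count f above define how each AIS track becomes training samples. The sketch below shows one plausible way to do this: linear interpolation onto a 5 min grid, then sliding windows of b = 20 input steps and a = 1 target step. The function names, the use of linear interpolation, and the feature layout are assumptions for illustration; the minimum-messages and stop-time filters are not shown, and which four features make up f is not stated in this section.

```python
import numpy as np

RESAMPLE_S = 5 * 60  # resampling time: 5 min, in seconds
LOOK_BACK = 20       # b: input time steps
LOOK_AHEAD = 1       # a: predicted time steps


def resample(t_s, values, step_s=RESAMPLE_S):
    """Linearly interpolate irregular AIS samples onto a uniform time grid.

    t_s: (T,) increasing timestamps in seconds; values: (T, f) features.
    Naive per-feature interpolation: an angle such as course over ground wraps
    at 360 degrees and would need e.g. a sin/cos encoding first.
    """
    t_s = np.asarray(t_s, dtype=float)
    values = np.asarray(values, dtype=float)
    grid = np.arange(t_s[0], t_s[-1] + 1e-9, step_s)
    return np.column_stack([np.interp(grid, t_s, values[:, j]) for j in range(values.shape[1])])


def make_windows(track, look_back=LOOK_BACK, look_ahead=LOOK_AHEAD):
    """Slice a uniformly sampled track (T, f) into supervised input/target pairs.

    Returns X (N, look_back, f) and Y (N, look_ahead, f),
    with N = T - look_back - look_ahead + 1.
    """
    track = np.asarray(track, dtype=float)
    n = track.shape[0] - look_back - look_ahead + 1
    f = track.shape[1]
    if n <= 0:  # track too short for even one window
        return np.empty((0, look_back, f)), np.empty((0, look_ahead, f))
    X = np.stack([track[i:i + look_back] for i in range(n)])
    Y = np.stack([track[i + look_back:i + look_back + look_ahead] for i in range(n)])
    return X, Y
```

With b = 20 and a 5 min grid, each input window spans 100 min of history, and a track needs at least 21 resampled points to yield one sample.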

| Data Set | # MMSI | Targets | NLL Loss |
|---|---|---|---|
| Training | 2316 | 4,810,199 | |
| Validation | 579 | 1,137,834 | |
| Testing | 726 | 1,765,351 | |
| Total | 3631 | 7,713,384 | − |
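The table counts vessels (MMSI) per data set, which suggests the split is made per vessel rather than per window, so that windows from one ship never appear in both training and testing. A minimal sketch of such a group-wise split, assuming a random shuffle of unique MMSI numbers; the function name and the default fractions are hypothetical and not taken from the paper.

```python
import numpy as np


def split_by_mmsi(mmsi, fractions=(0.64, 0.16, 0.20), seed=0):
    """Assign whole vessels (MMSI numbers) to training/validation/testing sets.

    Returns three pairwise-disjoint Python sets of MMSI numbers, so no vessel
    contributes samples to more than one set. Fractions are illustrative.
    """
    unique = np.unique(np.asarray(mmsi))  # one entry per vessel
    rng = np.random.default_rng(seed)
    rng.shuffle(unique)  # in-place shuffle of the vessel list
    n_train = int(round(fractions[0] * len(unique)))
    n_val = int(round(fractions[1] * len(unique)))
    train = set(unique[:n_train].tolist())
    val = set(unique[n_train:n_train + n_val].tolist())
    test = set(unique[n_train + n_val:].tolist())
    return train, val, test
```

Given one MMSI per window, a boolean mask such as `np.isin(window_mmsi, list(test))` then selects the test windows. Because vessels differ in track length, the target counts per set will not follow the vessel fractions exactly.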

| Minutes | BLSTM | BLSTM-Attention | BLSTM-MDN |
|---|---|---|---|
| 25 | | | |
| 50 | | | |
| 75 | | | |
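With a 5 min resampling time, horizons of 25, 50 and 75 min correspond to 5, 10 and 15 time steps. Since the look ahead is a single step, one way to reach these horizons is to feed each prediction back into the input window. The sketch below assumes such a recursive rollout; this section does not state that the paper does it this way, and `predict_step` is a hypothetical stand-in for a trained one-step model.

```python
import numpy as np

RESAMPLE_MIN = 5  # resampling time from the pre-processing table


def horizon_steps(minutes, resample_min=RESAMPLE_MIN):
    """Convert a prediction horizon in minutes into resampled time steps."""
    if minutes % resample_min:
        raise ValueError("horizon must be a multiple of the resampling time")
    return minutes // resample_min


def rollout(predict_step, window, n_steps):
    """Recursively extend a (b, f) input window by n_steps one-step predictions.

    predict_step: hypothetical callable mapping a (b, f) window to the next (f,) state.
    Returns an (n_steps, f) array of predicted states.
    """
    window = np.array(window, dtype=float)
    preds = []
    for _ in range(n_steps):
        nxt = np.asarray(predict_step(window), dtype=float)
        preds.append(nxt)
        # Slide the window: drop the oldest step and append the prediction.
        window = np.vstack([window[1:], nxt[None, :]])
    return np.stack(preds)
```

For an MDN, `predict_step` has to reduce the predicted mixture to a single state, for example the weighted mean, the mean of the most probable component, or a random sample; sampling repeatedly yields a set of possible trajectories rather than one.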

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sørensen, K.A.; Heiselberg, P.; Heiselberg, H. Probabilistic Maritime Trajectory Prediction in Complex Scenarios Using Deep Learning. Sensors 2022, 22, 2058. https://doi.org/10.3390/s22052058
