Study of Different Deep Learning Methods for Coronavirus (COVID-19) Pandemic: Taxonomy, Survey and Insights

Abstract

1. Introduction

1.1. COVID-19

1.2. Taxonomy of Medical Imaging

1.2.1. X-ray Radiography

1.2.2. Computed Tomography

1.3. Paper Structure

2. Basics and Background

2.1. Deep Learning

2.2. Deep Learning Architectures

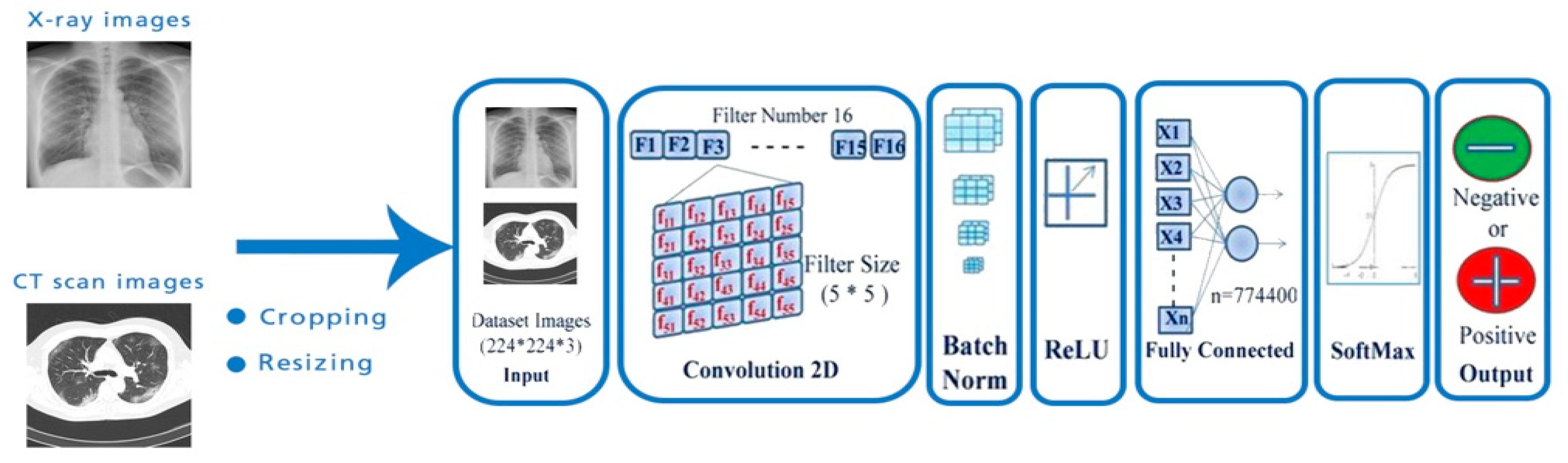

2.2.1. Convolutional Neural Networks

2.2.2. Recurrent Neural Networks

2.2.3. Deep Belief Networks

2.2.4. Reinforcement Learning

2.3. Transfer Learning

2.4. Datasets

2.5. Metrics
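The threshold-free metrics in the metrics table (ROC AUC and PR AUC/average precision) are computed from ranked classifier scores rather than from a single confusion matrix. Below is a minimal, dependency-free sketch. It assumes binary labels (1 = COVID-19 positive, 0 = negative) and one real-valued score per image. The function names are hypothetical and do not come from any of the surveyed works. ROC AUC is computed through its rank interpretation: the probability that a randomly chosen positive is scored above a randomly chosen negative. This approach is quadratic in the number of samples and is meant only for illustration.

```python
from typing import Sequence


def roc_auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """ROC AUC as P(score of a random positive > score of a random negative); ties count 0.5."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def average_precision(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """Average precision: precision at each rank where a positive is retrieved,
    weighted by the recall increment 1 / (number of positives)."""
    n_pos = sum(1 for y in y_true if y == 1)
    if n_pos == 0:
        raise ValueError("need at least one positive example")
    # Rank by descending score; tied scores keep their input order (sorted is stable).
    ranked = sorted(zip(scores, y_true), key=lambda pair: pair[0], reverse=True)
    hits = 0
    total = 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            total += hits / rank  # precision at this recall step
    return total / n_pos


# Hypothetical scores for six chest X-ray images (1 = COVID-19)
y_true = [1, 0, 1, 0, 1, 0]
scores = [0.9, 0.8, 0.7, 0.4, 0.35, 0.1]
print(round(roc_auc(y_true, scores), 4))            # 0.6667
print(round(average_precision(y_true, scores), 4))  # 0.7556
```

Library implementations such as scikit-learn's `roc_auc_score` and `average_precision_score` compute the same quantities more efficiently. They may treat tied scores differently from this sketch, which breaks ties by input order.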

3. Deep Learning Techniques for Different Image Modalities

3.1. Binary Classification

3.1.1. Pre-Trained Model with Deep Transfer Learning

3.1.2. Custom Deep Learning Techniques

3.2. Multi-Class Classification

3.2.1. Pre-Trained Model with Deep Transfer Learning

3.2.2. Custom Deep Learning Techniques

4. Discussion: Challenges and Future Research Directions

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Hu, T.; Liu, Y.; Zhao, M.; Zhuang, Q.; Xu, L.; He, Q. A comparison of COVID-19, SARS and MERS. PeerJ 2020, 8, e9725.

2. Hani, C.; Trieu, N.; Saab, I.; Dangeard, S.; Bennani, S.; Chassagnon, G.; Revel, M.-P. COVID-19 pneumonia: A review of typical CT findings and differential diagnosis. Diagn. Interv. Imaging 2020, 101, 263–268.

3. Zhang, Y.; Niu, S.; Qiu, Z.; Wei, Y.; Zhao, P.; Yao, J.; Huang, J.; Wu, Q.; Tan, M. COVID-da: Deep domain adaptation from typical pneumonia to COVID-19. arXiv 2020, arXiv:2005.01577.

4. Chowdhury, M.E.H.; Rahman, T.; Khandakar, A.; Mazhar, R.; Kadir, M.A.; Bin Mahbub, Z.; Islam, K.R.; Khan, M.S.; Iqbal, A.; Al Emadi, N.; et al. Can AI Help in Screening Viral and COVID-19 Pneumonia? IEEE Access 2020, 8, 132665–132676.

5. Al-Doori, A.N.; Ahmed, D.S.; Kadhom, M.; Yousif, E. Herbal medicine as an alternative method to treat and prevent COVID-19. Baghdad J. Biochem. Appl. Biol. Sci. 2021, 2, 1–20.

6. Galloway, S.E.; Paul, P.; MacCannell, D.R.; Johansson, M.A.; Brooks, J.T.; MacNeil, A.; Slayton, R.B.; Tong, S.; Silk, B.J.; Armstrong, G.L.; et al. Emergence of SARS-CoV-2 B.1.1.7 Lineage: United States, December 29, 2020–January 12, 2021. Morb. Mortal. Wkly. Rep. 2021, 70, 95.

7. Madhi, S.A.; Baillie, V.; Cutland, C.L.; Voysey, M.; Koen, A.L.; Fairlie, L.; Padayachee, S.D.; Dheda, K.; Barnabas, S.L.; Bhorat, Q.E.; et al. Safety and efficacy of the ChAdOx1 nCoV-19 (AZD1222) COVID-19 vaccine against the B.1.351 variant in South Africa. medRxiv 2021.

8. Naveca, F.; da Costa, C.; Nascimento, V.; Souza, V.; Corado, A.; Nascimento, F.; Costa, Á.; Duarte, D.; Silva, G.; Mejía, M.; et al. SARS-CoV-2 Reinfection by the New Variant of Concern (VOC) P.1 in Amazonas, Brazil. 2021. Available online: Virological.org (accessed on 17 December 2021).

9. Boehm, E.; Kronig, I.; Neher, R.A.; Eckerle, I.; Vetter, P.; Kaiser, L. Novel SARS-CoV-2 variants: The pandemics within the pandemic. Clin. Microbiol. Infect. 2021, 27, 1109–1117.

10. Hunter, P.R.; Brainard, J.S.; Grant, A.R. The Impact of the November 2020 English National Lockdown on COVID-19 case counts. medRxiv 2021.

11. Volz, E.; Mishra, S.; Chand, M.; Barrett, J.C.; Johnson, R.; Geidelberg, L.; Hinsley, W.R.; Laydon, D.J.; Dabrera, G.; O’Toole, Á.; et al. Transmission of SARS-CoV-2 Lineage B.1.1.7 in England: Insights from linking epidemiological and genetic data. medRxiv 2021.

12. van Oosterhout, C.; Hall, N.; Ly, H.; Tyler, K.M. COVID-19 Evolution during the Pandemic–Implications of New SARS-CoV-2 Variants on Disease Control and Public Health Policies; Taylor & Francis: Abingdon, UK, 2021.

13. Al-Falluji, R.A.; Katheeth, Z.D.; Alathari, B. Automatic Detection of COVID-19 Using Chest X-ray Images and Modified ResNet18-Based Convolution Neural Networks. Comput. Mater. Contin. 2021, 66, 1301–1313.

14. Zhang, J.; Xie, Y.; Li, Y.; Shen, C.; Xia, Y. COVID-19 screening on chest x-ray images using deep learning based anomaly detection. arXiv 2020, arXiv:2003.12338.

15. Assmus, A. Early history of X rays. Beam Line 1995, 25, 10–24.

16. Schiaffino, S.; Tritella, S.; Cozzi, A.; Carriero, S.; Blandi, L.; Ferraris, L.; Sardanelli, F. Diagnostic performance of chest X-ray for COVID-19 pneumonia during the SARS-CoV-2 pandemic in Lombardy, Italy. J. Thorac. Imaging 2020, 35, W105–W106.

17. Filler, A. The history, development and impact of computed imaging in neurological diagnosis and neurosurgery: CT, MRI, and DTI. Nat. Preced. 2009, 1.

18. Suh, C.H.; Baek, J.H.; Choi, Y.J.; Lee, J.H. Performance of CT in the preoperative diagnosis of cervical lymph node metastasis in patients with papillary thyroid cancer: A systematic review and meta-analysis. Am. J. Neuroradiol. 2017, 38, 154–161.

19. Eijsvoogel, N.G.; Hendriks, B.M.F.; Martens, B.; Gerretsen, S.C.; Gommers, S.; van Kuijk, S.M.J.; Mihl, C.; Wildberger, J.E.; Das, M. The performance of non-ECG gated chest CT for cardiac assessment–The cardiac pathologies in chest CT (CaPaCT) study. Eur. J. Radiol. 2020, 130, 109151.

20. Herpe, G.; Lederlin, M.; Naudin, M.; Ohana, M.; Chaumoitre, K.; Gregory, J.; Vilgrain, V.; Freitag, C.A.; De Margerie-Mellon, C.; Flory, V.; et al. Efficacy of Chest CT for COVID-19 Pneumonia Diagnosis in France. Radiology 2021, 298, E81–E87.

21. Kumar, P.R.; Manash, E.B.K. Deep learning: A branch of machine learning. J. Phys. Conf. Ser. 2019, 1228, 012045.

22. Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; van der Laak, J.A.; van Ginneken, B.; Sánchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88.

23. Zhang, H.; Zhang, J.; Zhang, H.; Nan, Y.; Zhao, Y.; Fu, E.; Xie, Y.; Liu, W.; Li, W.; Zhang, H.; et al. Automated detection and quantification of COVID-19 pneumonia: CT imaging analysis by a deep learning-based software. Eur. J. Nucl. Med. Mol. Imaging 2020, 47, 2525–2532.

24. Javaheri, T.; Homayounfar, M.; Amoozgar, Z.; Reiazi, R.; Homayounieh, F.; Abbas, E.; Laali, A.; Radmard, A.R.; Gharib, M.H.; Mousavi, S.A.J.; et al. COVIDCTNet: An open-source deep learning approach to diagnose COVID-19 using small cohort of CT images. NPJ Digit. Med. 2021, 4, 29.

25. Luz, E.; Silva, P.; Silva, R.; Silva, L.; Guimarães, J.; Miozzo, G.; Moreira, G.; Menotti, D. Towards an effective and efficient deep learning model for COVID-19 patterns detection in X-ray images. Res. Biomed. Eng. 2021.

26. Pathak, Y.; Shukla, P.; Tiwari, A.; Stalin, S.; Singh, S. Deep Transfer Learning Based Classification Model for COVID-19 Disease. IRBM 2020, in press.

27. Gifani, P.; Shalbaf, A.; Vafaeezadeh, M. Automated detection of COVID-19 using ensemble of transfer learning with deep convolutional neural network based on CT scans. Int. J. Comput. Assist. Radiol. Surg. 2020, 16, 115–123.

28. Lawton, S.; Viriri, S. Detection of COVID-19 from CT Lung Scans Using Transfer Learning. Comput. Intell. Neurosci. 2021, 2021, 5527923.

29. Duran-Lopez, L.; Dominguez-Morales, J.; Corral-Jaime, J.; Vicente-Diaz, S.; Linares-Barranco, A. COVID-XNet: A Custom Deep Learning System to Diagnose and Locate COVID-19 in Chest X-ray Images. Appl. Sci. 2020, 10, 5683.

30. Sakib, S.; Tazrin, T.; Fouda, M.M.; Fadlullah, Z.M.; Guizani, M. DL-CRC: Deep Learning-Based Chest Radiograph Classification for COVID-19 Detection: A Novel Approach. IEEE Access 2020, 8, 171575–171589.

31. Dhahri, H.; Rabhi, B.; Chelbi, S.; Almutiry, O.; Mahmood, A.; Alimi, A.M. Automatic Detection of COVID-19 Using a Stacked Denoising Convolutional Autoencoder. Comput. Mater. Contin. 2021, 69, 3259–3274.

32. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016; Volume 1.

33. Schmidhuber, J. Deep Learning. Scholarpedia 2015, 10, 32832.

34. Islam, Z.; Islam, M.; Asraf, A. A combined deep CNN-LSTM network for the detection of novel coronavirus (COVID-19) using X-ray images. Inform. Med. Unlocked 2020, 20, 100412.

35. Yang, B.; Guo, H.; Cao, E. Chapter Two: Design of Cyber-Physical-Social Systems with Forensic-Awareness Based on Deep Learning. In AI and Cloud Computing; Hurson, A.R., Wu, S., Eds.; Elsevier: Amsterdam, The Netherlands, 2021; Volume 120, pp. 39–79.

36. Ke, Q.; Liu, J.; Bennamoun, M.; An, S.; Sohel, F.; Boussaid, F. Chapter 5: Computer Vision for Human–Machine Interaction. In Computer Vision for Assistive Healthcare; Leo, M., Farinella, G.M., Eds.; Academic Press: Cambridge, MA, USA, 2018; pp. 127–145.

37. Hcini, G.; Jdey, I.; Heni, A.; Ltifi, H. Hyperparameter optimization in customized convolutional neural network for blood cells classification. J. Theor. Appl. Inf. Technol. 2021, 99, 5425–5435.

38. Shamshirband, S.; Fathi, M.; Dehzangi, A.; Chronopoulos, A.T.; Alinejad-Rokny, H. A Review on Deep Learning Approaches in Healthcare Systems: Taxonomies, Challenges, and Open Issues. J. Biomed. Inform. 2020, 113, 103627.

39. Hua, Y.; Guo, J.; Zhao, H. Deep belief networks and deep learning. In Proceedings of the 2015 International Conference on Intelligent Computing and Internet of Things, Harbin, China, 17–18 January 2015.

40. Hinton, G.E. Deep belief networks. Scholarpedia 2009, 4, 5947.

41. Zong, K.; Luo, C. Reinforcement learning based framework for COVID-19 resource allocation. Comput. Ind. Eng. 2022, 167, 107960.

42. Saood, A.; Hatem, I. COVID-19 lung CT image segmentation using deep learning methods: U-Net versus SegNet. BMC Med. Imaging 2021, 21, 19.

43. Paluru, N.; Dayal, A.; Jenssen, H.B.; Sakinis, T.; Cenkeramaddi, L.R.; Prakash, J.; Yalavarthy, P.K. Anam-Net: Anamorphic Depth Embedding-Based Lightweight CNN for Segmentation of Anomalies in COVID-19 Chest CT Images. IEEE Trans. Neural Networks Learn. Syst. 2021, 32, 932–946.

44. Yin, S.; Deng, H.; Xu, Z.; Zhu, Q.; Cheng, J. SD-UNet: A Novel Segmentation Framework for CT Images of Lung Infections. Electronics 2022, 11, 130.

45. Shan, F.; Gao, Y.; Wang, J.; Shi, W.; Shi, N.; Han, M.; Xue, Z.; Shen, D.; Shi, Y. Abnormal lung quantification in chest CT images of COVID-19 patients with deep learning and its application to severity prediction. Med. Phys. 2020, 48, 1633–1645.

46. Fung, D.L.X.; Liu, Q.; Zammit, J.; Leung, C.K.; Hu, P. Self-supervised deep learning model for COVID-19 lung CT image segmentation highlighting putative causal relationship among age, underlying disease and COVID-19. J. Transl. Med. 2021, 19, 1–18.

47. Suri, J.; Agarwal, S.; Pathak, R.; Ketireddy, V.; Columbu, M.; Saba, L.; Gupta, S.; Faa, G.; Singh, I.; Turk, M.; et al. COVLIAS 1.0: Lung Segmentation in COVID-19 Computed Tomography Scans Using Hybrid Deep Learning Artificial Intelligence Models. Diagnostics 2021, 11, 1405.

48. Hu, H.; Shen, L.; Guan, Q.; Li, X.; Zhou, Q.; Ruan, S. Deep co-supervision and attention fusion strategy for automatic COVID-19 lung infection segmentation on CT images. Pattern Recognit. 2021, 124, 108452.

49. Kamil, M.Y. A deep learning framework to detect COVID-19 disease via chest X-ray and CT scan images. Int. J. Electr. Comput. Eng. IJECE 2021, 11, 844–850.

50. Abdulmunem, A.A.; Abutiheen, Z.A.; Aleqabie, H.J. Recognition of corona virus disease (COVID-19) using deep learning network. Int. J. Electr. Comput. Eng. IJECE 2021, 11, 365–374.

51. Gozes, O.; Frid-Adar, M.; Sagie, N.; Zhang, H.; Ji, W.; Greenspan, H. Coronavirus detection and analysis on chest CT with deep learning. arXiv 2020, arXiv:2004.02640.

52. Kermany, D.; Zhang, K.; Goldbaum, M. Labeled optical coherence tomography (OCT) and Chest X-ray images for classification. Mendeley Data 2018, 2, 2.

53. Cohen, J.P.; Morrison, P.; Dao, L. COVID-19 Image Data Collection. arXiv 2020, arXiv:2003.11597.

54. Italian Society of Medical and Interventional Radiology (SIRM). 2020. Available online: https://www.sirm.org/en/category/articles/covid-19-database/page/1/ (accessed on 3 June 2020).

55. Manapure, P.; Likhar, K.; Kosare, H. Detecting COVID-19 in X-ray Images with Keras, TensorFlow, and Deep Learning. Available online: http://acors.org/Journal/Papers/Volume1/issue3/VOL1_ISSUE3_09.pdf (accessed on 17 December 2021).

56. ChainZ. Available online: www.ChainZ.cn (accessed on 17 December 2021).

57. Zhang, Y.-D.; Satapathy, S.C.; Liu, S.; Li, G.-R. A five-layer deep convolutional neural network with stochastic pooling for chest CT-based COVID-19 diagnosis. Mach. Vis. Appl. 2020, 32, 1–13.

58. Ahrabi, S.S.; Scarpiniti, M.; Baccarelli, E.; Momenzadeh, A. An Accuracy vs. Complexity Comparison of Deep Learning Architectures for the Detection of COVID-19 Disease. Computation 2021, 9, 3.

59. Zhu, Z.; Xingming, Z.; Tao, G.; Dan, T.; Li, J.; Chen, X.; Li, Y.; Zhou, Z.; Zhang, X.; Zhou, J.; et al. Classification of COVID-19 by Compressed Chest CT Image through Deep Learning on a Large Patients Cohort. Interdiscip. Sci. Comput. Life Sci. 2021, 13, 73–82.

60. Siddiqui, S.Y.; Abbas, S.; Khan, M.A.; Naseer, I.; Masood, T.; Khan, K.M.; Al Ghamdi, M.A.; AlMotiri, S.H. Intelligent Decision Support System for COVID-19 Empowered with Deep Learning. Comput. Mater. Contin. 2021, 66, 1719–1732.

61. Hajij, M.; Zamzmi, G.; Batayneh, F. TDA-Net: Fusion of Persistent Homology and Deep Learning Features for COVID-19 Detection in Chest X-ray Images. arXiv 2021, arXiv:2101.08398.

62. NIH. NIH Chest X-ray Dataset of 14 Common Thorax Diseases. Available online: https://www.nih.gov/news-events/news-releases/nih-clinical-center-provides-one-largestpublicly-available-chest-x-ray-datasets-scientific-community (accessed on 17 December 2021).

63. Wang, S.; Kang, B.; Ma, J.; Zeng, X.; Xiao, M.; Guo, J.; Cai, M.; Yang, J.; Li, Y.; Meng, X.; et al. A deep learning algorithm using CT images to screen for Corona Virus Disease (COVID-19). Eur. Radiol. 2021, 31, 6096–6104.

64. Rahimzadeh, M.; Attar, A.; Sakhaei, S.M. A fully automated deep learning-based network for detecting COVID-19 from a new and large lung CT scan dataset. Biomed. Signal Process. Control 2021, 68, 102588.

65. Dhiman, G.; Chang, V.; Singh, K.K.; Shankar, A. ADOPT: Automatic deep learning and optimization-based approach for detection of novel coronavirus COVID-19 disease using X-ray images. J. Biomol. Struct. Dyn. 2021, 1–13.

66. Yu, X.; Wang, S.-H.; Zhang, Y.-D. CGNet: A graph-knowledge embedded convolutional neural network for detection of pneumonia. Inf. Process. Manag. 2020, 58, 102411.

67. Wehbe, R.M.; Sheng, J.; Dutta, S.; Chai, S.; Dravid, A.; Barutcu, S.; Wu, Y.; Cantrell, D.R.; Xiao, N.; Allen, B.D.; et al. DeepCOVID-XR: An Artificial Intelligence Algorithm to Detect COVID-19 on Chest Radiographs Trained and Tested on a Large US Clinical Data Set. Radiology 2021, 299, E167–E176.

68. Sedik, A.; Hammad, M.; El-Samie, F.E.A.; Gupta, B.B.; El-Latif, A.A.A. Efficient deep learning approach for augmented detection of Coronavirus disease. Neural Comput. Appl. 2021, 1–18.

69. Saha, P.; Sadi, M.S.; Islam, M. EMCNet: Automated COVID-19 diagnosis from X-ray images using convolutional neural network and ensemble of machine learning classifiers. Inform. Med. Unlocked 2020, 22, 100505.

70. Jain, G.; Mittal, D.; Thakur, D.; Mittal, M.K. A deep learning approach to detect COVID-19 coronavirus with X-ray images. Biocybern. Biomed. Eng. 2020, 40, 1391–1405.

71. Alom, M.Z.; Rahman, M.M.; Nasrin, M.S.; Taha, T.M.; Asari, V.K. COVID_MTNet: COVID-19 detection with multi-task deep learning approaches. arXiv 2020, arXiv:2004.03747.

72. Zheng, C.; Deng, X.; Fu, Q.; Zhou, Q.; Feng, J.; Ma, H.; Liu, W.; Wang, X. Deep learning-based detection for COVID-19 from chest CT using weak label. medRxiv 2020.

73. Shah, V.; Keniya, R.; Shridharani, A.; Punjabi, M.; Shah, J.; Mehendale, N. Diagnosis of COVID-19 using CT scan images and deep learning techniques. Emerg. Radiol. 2021, 28, 497–505.

74. Clark, K.; Vendt, B.; Smith, K.; Freymann, J.; Kirby, J.; Koppel, P.; Moore, S.; Phillips, S.; Maffitt, D.; Pringle, M.; et al. The Cancer Imaging Archive (TCIA): Maintaining and Operating a Public Information Repository. J. Digit. Imaging 2013, 26, 1045–1057.

75. Desai, S.; Baghal, A.; Wongsurawat, T.; Al-Shukri, S.; Gates, K.; Farmer, P.; Rutherford, M.; Blake, G.D.; Nolan, T.; Powell, T.; et al. Data from chest imaging with clinical and genomic correlates representing a rural COVID-19 positive population. Cancer Imaging Arch. 2020.

76. Zhao, J.; Zhang, Y.; He, X.; Xie, P. COVID-CT-Dataset: A CT scan dataset about COVID-19. arXiv 2020, arXiv:2003.13865.

77. COVID-CTset. Available online: https://github.com/mr7495/COVID-CTset (accessed on 17 December 2021).

78. Chest X-ray Images (Pneumonia). 2020. Available online: https://www.kaggle.com/paultimothymooney/chest-xray-pneumonia/version/1 (accessed on 17 December 2021).

79. UCSD-AI4H. COVID-CT. Available online: https://github.com/UCSD-AI4H/COVID-CT (accessed on 9 April 2020).

80. Alqudah, A.M.; Qazan, S. Augmented COVID-19 X-ray; Volume 4. 2020. Available online: https://data.mendeley.com/datasets/2fxz4px6d8/4 (accessed on 17 December 2021).

81. COVID-19 Radiography Database. Available online: https://www.kaggle.com/tawsifurrahman/covid19-radiography-database (accessed on 17 December 2021).

82. COVID-19 Radiopaedia. 2020. Available online: https://radiopaedia.org/articles/covid-19-3?lang=us (accessed on 3 June 2020).

83. Bhatti, S.; Aziz, D.; Nadeem, D.; Usmani, I.; Aamir, P.; Khan, D. Automatic Classification of the Severity of COVID-19 Patients Based on CT Scans and X-rays Using Deep Learning. Eur. J. Mol. Clin. Med. 2021, 7, 1436–1455.

84. Afifi, A.; Hafsa, N.E.; Ali, M.A.S.; Alhumam, A.; Alsalman, S. An Ensemble of Global and Local-Attention Based Convolutional Neural Networks for COVID-19 Diagnosis on Chest X-ray Images. Symmetry 2021, 13, 113.

85. Sarker, L.; Islam, M.M.; Hannan, T.; Ahmed, Z. COVID-Densenet: A Deep Learning Architecture to Detect COVID-19 from Chest Radiology Images. 2021. Available online: https://pdfs.semanticscholar.org/c6f7/a57a37e87b52ac92402987c9b7a3df41f2db.pdf (accessed on 17 December 2021).

86. Karar, M.E.; Hemdan, E.E.-D.; Shouman, M.A. Cascaded deep learning classifiers for computer-aided diagnosis of COVID-19 and pneumonia diseases in X-ray scans. Complex Intell. Syst. 2020, 7, 235–247.

87. Zebin, T.; Rezvy, S. COVID-19 detection and disease progression visualization: Deep learning on chest X-rays for classification and coarse localization. Appl. Intell. 2021, 51, 1010–1021.

88. Ibrahim, A.U.; Ozsoz, M.; Serte, S.; Al-Turjman, F.; Yakoi, P.S. Pneumonia Classification Using Deep Learning from Chest X-ray Images During COVID-19. Cogn. Comput. 2021, 1–13.

89. Jain, R.; Gupta, M.; Taneja, S.; Hemanth, D.J. Deep learning based detection and analysis of COVID-19 on chest X-ray images. Appl. Intell. 2020, 51, 1690–1700.

90. Wang, N.; Liu, H.; Xu, C. Deep learning for the detection of COVID-19 using transfer learning and model integration. In Proceedings of the 2020 IEEE 10th International Conference on Electronics Information and Emergency Communication (ICEIEC), Beijing, China, 17–19 July 2020.

91. El Asnaoui, K.; Chawki, Y. Using X-ray images and deep learning for automated detection of coronavirus disease. J. Biomol. Struct. Dyn. 2020, 39, 3615–3626.

92. Sajid, N. COVID-19 Patients Lungs X-ray Images 10000. Available online: https://www.kaggle.com/nabeelsajid917/covid-19-x-ray-10000-images (accessed on 4 May 2020).

93. Bustos, A.; Pertusa, A.; Salinas, J.-M.; de la Iglesia-Vayá, M. PadChest: A large chest x-ray image dataset with multi-label annotated reports. arXiv 2019, arXiv:1901.07441.

94. DeGrave, A.J.; Janizek, J.D.; Lee, S.-I. AI for radiographic COVID-19 detection selects shortcuts over signal. Nat. Mach. Intell. 2021, 3, 610–619.

95. Wang, L.; Lin, Z.Q.; Wong, A. COVID-Net: A tailored deep convolutional neural network design for detection of COVID-19 cases from chest X-ray images. Sci. Rep. 2020, 10, 1–12.

96. Novel Corona Virus 2019 Dataset. Available online: https://www.kaggle.com/sudalairajkumar/novel-corona-virus-2019-dataset (accessed on 17 December 2021).

97. Patel, P. Chest X-ray (COVID-19 & Pneumonia). Available online: https://www.kaggle.com/prashant268/chest-xray-covid19-pneumonia (accessed on 17 December 2021).

98. RSNA Pneumonia Detection Challenge. 2020. Available online: https://www.kaggle.com/c/rsna-pneumonia-detection-challenge/data (accessed on 17 December 2021).

99. Zhou, T.; Lu, H.; Yang, Z.; Qiu, S.; Huo, B.; Dong, Y. The ensemble deep learning model for novel COVID-19 on CT images. Appl. Soft Comput. 2021, 98, 106885.

100. Fontanellaz, M.; Ebner, L.; Huber, A.; Peters, A.; Löbelenz, L.; Hourscht, C.; Klaus, J.; Munz, J.; Ruder, T.; Drakopoulos, D.; et al. A Deep-Learning Diagnostic Support System for the Detection of COVID-19 Using Chest Radiographs: A Multireader Validation Study. Investig. Radiol. 2021, 56, 348–356.

101. Wang, Z.; Xiao, Y.; Li, Y.; Zhang, J.; Lu, F.; Hou, M.; Liu, X. Automatically discriminating and localizing COVID-19 from community-acquired pneumonia on chest X-rays. Pattern Recognit. 2020, 110, 107613.

102. Ahmed, F.; Bukhari, S.A.C.; Keshtkar, F. A Deep Learning Approach for COVID-19 & Viral Pneumonia Screening with X-ray Images. Digit. Gov. Res. Pr. 2021, 2, 1–12.

103. Aslan, M.F.; Unlersen, M.F.; Sabanci, K.; Durdu, A. CNN-based transfer learning–BiLSTM network: A novel approach for COVID-19 infection detection. Appl. Soft Comput. 2020, 98, 106912.

104. Gupta, A.; Anjum; Gupta, S.; Katarya, R. InstaCovNet-19: A deep learning classification model for the detection of COVID-19 patients using Chest X-ray. Appl. Soft Comput. 2020, 99, 106859.

105. Karthik, R.; Menaka, R.; Hariharan, M. Learning distinctive filters for COVID-19 detection from chest X-ray using shuffled residual CNN. Appl. Soft Comput. 2020, 99, 106744.

106. Karakanis, S.; Leontidis, G. Lightweight deep learning models for detecting COVID-19 from chest X-ray images. Comput. Biol. Med. 2020, 130, 104181.

107. Canayaz, M. MH-COVIDNet: Diagnosis of COVID-19 using deep neural networks and meta-heuristic-based feature selection on X-ray images. Biomed. Signal Process. Control 2020, 64, 102257.

108. Hussain, E.; Hasan, M.; Rahman, A.; Lee, I.; Tamanna, T.; Parvez, M.Z. CoroDet: A deep learning based classification for COVID-19 detection using chest X-ray images. Chaos Solitons Fractals 2020, 142, 110495.

109. Mahdi, M.S.; Abid, Y.M.; Omran, A.H.; Abdul-Majeed, G. A Novel Aided Diagnosis Schema for COVID 19 Using Convolution Neural Network. IOP Conf. Ser. Mater. Sci. Eng. 2021, 1051, 012007.

110. Ahmed, S.; Hossain, F.; Noor, M.B.T. Convid-Net: An Enhanced Convolutional Neural Network Framework for COVID-19 Detection from X-ray Images; Springer: Berlin/Heidelberg, Germany, 2020.

111. Chakraborty, M.; Dhavale, S.V.; Ingole, J. Corona-Nidaan: Lightweight deep convolutional neural network for chest X-ray based COVID-19 infection detection. Appl. Intell. 2021, 51, 3026–3043.

112. Demir, F. DeepCoroNet: A deep LSTM approach for automated detection of COVID-19 cases from chest X-ray images. Appl. Soft Comput. 2021, 103, 107160.

113. Liang, S.; Liu, H.; Gu, Y.; Guo, X.; Li, H.; Li, L.; Wu, Z.; Liu, M.; Tao, L. Fast automated detection of COVID-19 from medical images using convolutional neural networks. Commun. Biol. 2021, 4, 1–13.

114. Xu, Y.; Lam, H.-K.; Jia, G. MANet: A two-stage deep learning method for classification of COVID-19 from Chest X-ray images. Neurocomputing 2021, 443, 96–105.

115. Toğaçar, M.; Ergen, B.; Cömert, Z. COVID-19 detection using deep learning models to exploit Social Mimic Optimization and structured chest X-ray images using fuzzy color and stacking approaches. Comput. Biol. Med. 2020, 121, 103805.

116. Zhang, J.; Xie, Y.; Pang, G.; Liao, Z.; Verjans, J.; Li, W.; Sun, Z.; He, J.; Li, Y.; Shen, C.; et al. Viral Pneumonia Screening on Chest X-Rays Using Confidence-Aware Anomaly Detection. IEEE Trans. Med. Imaging 2020, 40, 879–890.

117. Ouchicha, C.; Ammor, O.; Meknassi, M. CVDNet: A novel deep learning architecture for detection of coronavirus (COVID-19) from chest X-ray images. Chaos Solitons Fractals 2020, 140, 110245.

118. Actualmed COVID-19 Chest X-ray Dataset. 2020. Available online: https://github.com/agchung/Actualmed-COVID-chestxray-dataset (accessed on 12 July 2020).

119. Maguolo, G.; Nanni, L. A critic evaluation of methods for COVID-19 automatic detection from X-ray images. Inf. Fusion 2021, 76, 1–7.

120. Chung, A. COVID Chest X-ray Dataset. 2020. Available online: https://github.com/agchung/Figure1-COVID-chestxray-dataset (accessed on 3 June 2020).

121. SARS-CoV-2 CT-Scan Dataset. June 2020. Available online: https://www.kaggle.com/plameneduardo/sarscov2-ctscan-dataset (accessed on 17 December 2021).

122. COVID-19 X-ray Dataset (Train & Test Sets) with COVID-19CNN. April 2020. Available online: https://www.kaggle.com/khoongweihao/covid19-xray-dataset-train-test-sets (accessed on 17 December 2021).

123. Armato, S.G.; Drukker, K.; Li, F.; Hadjiiski, L.; Tourassi, G.D.; Engelmann, R.M.; Giger, M.L.; Redmond, G.; Farahani, K.; Kirby, J.S.; et al. LUNGx Challenge for computerized lung nodule classification. J. Med. Imaging 2016, 3, 044506.

124. COVID-19 Detection X-ray Dataset. Available online: https://kaggle.com/darshan1504/covid19-detection-xray-dataset (accessed on 17 December 2021).

125. Vayá, M.d.l.I.; Saborit, J.M.; Montell, J.A.; Pertusa, A.; Bustos, A.; Cazorla, M.; Galant, J.; Barber, X.; Orozco-Beltrán, D.; Garcia, F.; et al. BIMCV COVID-19+: A large annotated dataset of RX and CT images from COVID-19 patients. arXiv 2020, arXiv:2006.01174.

126. Wang, X.; Peng, Y.; Lu, L.; Lu, Z.; Bagheri, M.; Summers, R.M. ChestX-ray8: Hospital-scale chest x-ray database and benchmarks on weakly-supervised classification and localization of common thorax diseases. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017. Available online: https://openaccess.thecvf.com/content_cvpr_2017/html/Wang_ChestX-ray8_Hospital-Scale_Chest_CVPR_2017_paper.html (accessed on 17 December 2021).

127. COVID-19 X-ray Images. Available online: https://www.kaggle.com/bachrr/covid-chest-xray (accessed on 17 December 2021).

128. Setio, A.A.A.; Traverso, A.; de Bel, T.; Berens, M.S.; Bogaard, C.V.D.; Cerello, P.; Chen, H.; Dou, Q.; Fantacci, M.E.; Geurts, B.; et al. Validation, comparison, and combination of algorithms for automatic detection of pulmonary nodules in computed tomography images: The LUNA16 challenge. Med. Image Anal. 2017, 42, 1–13.

129. Jaeger, S.; Candemir, S.; Antani, S.; Wáng, Y.-X.J.; Lu, P.-X.; Thoma, G. Two public chest X-ray datasets for computer-aided screening of pulmonary diseases. Quant. Imaging Med. Surg. 2014, 4, 475–477.

130. Cohen, J.P.; Morrison, P.; Dao, L.; Roth, K.; Duong, T.Q.; Ghassemi, M. COVID-19 image data collection: Prospective predictions are the future. arXiv 2020, arXiv:2006.11988.

131. Islam, M.; Karray, F.; Alhajj, R.; Zeng, J. A Review on Deep Learning Techniques for the Diagnosis of Novel Coronavirus (COVID-19). IEEE Access 2021, 9, 30551–30572.

132. Hemanjali, A.; Revathy, S.; Anu, V.M.; MaryGladence, L.; Jeyanthi, P.; Ritika, C.G. Document Clustering on COVID literature using Machine Learning. In Proceedings of the 2021 5th International Conference on Computing Methodologies and Communication (ICCMC), Erode, India, 8–10 April 2021.

133. Shorten, C.; Khoshgoftaar, T.M. A survey on image data augmentation for deep learning. J. Big Data 2019, 6, 1–48.

| Actual class \ Predicted class | Positive | Negative |
|---|---|---|
| Positive | True Positive (TP) | False Negative (FN) |
| Negative | False Positive (FP) | True Negative (TN) |
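The four cells of the confusion matrix are simple counts over paired ground-truth and predicted labels. Below is a minimal sketch, assuming binary labels encoded as 1 (positive, e.g. COVID-19) and 0 (negative). `confusion_counts` is a hypothetical helper, not code from any surveyed study.

```python
from typing import Sequence, Tuple


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[int, int, int, int]:
    """Return (TP, FP, FN, TN) for binary labels where 1 = positive and 0 = negative."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    tp = fp = fn = tn = 0
    for actual, predicted in zip(y_true, y_pred):
        if actual not in (0, 1) or predicted not in (0, 1):
            raise ValueError("labels must be 0 or 1")
        if actual == 1 and predicted == 1:
            tp += 1  # actual positive, predicted positive
        elif actual == 0 and predicted == 1:
            fp += 1  # actual negative, predicted positive
        elif actual == 1 and predicted == 0:
            fn += 1  # actual positive, predicted negative
        else:
            tn += 1  # actual negative, predicted negative
    return tp, fp, fn, tn


# Hypothetical labels for six images
y_true = [1, 1, 1, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0]
print(confusion_counts(y_true, y_pred))  # (2, 1, 1, 2)
```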

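| Metric | Definition |
|---|---|
| Accuracy | $\frac{TP + TN}{TP + TN + FP + FN}$ |
| Precision/PPV | $\frac{TP}{TP + FP}$ |
| Recall/Sensitivity/TPR | $\frac{TP}{TP + FN}$ |
| F1 score | $2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$ |
| Specificity/TNR | $\frac{TN}{TN + FP}$ |
| AUC | The area under the curve (AUC) is an aggregate measure of a binary classifier's performance across all possible classification thresholds. |
| MCC | $\frac{TP \cdot TN - FP \cdot FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}}$ |
| IoU | Intersection over union (IoU) measures the overlap between a predicted region (bounding box or segmentation mask) and its ground-truth annotation: the area of their intersection divided by the area of their union. |
| Error | $1 - \text{Accuracy}$ |
| Kappa | Cohen's kappa measures the agreement between predicted and actual labels after correcting for agreement expected by chance: $\kappa = \frac{p_o - p_e}{1 - p_e}$, where $p_o$ is the observed agreement (accuracy) and $p_e$ is the agreement expected by chance. |
| ROC AUC/ROC | The receiver operating characteristic (ROC) curve plots the true positive rate (TPR) against the false positive rate (FPR) at various threshold values; ROC AUC is the area under this curve. |
| PR AUC/Average Precision | PR AUC summarizes the precision-recall curve; average precision computes it as the mean of the precision values obtained at each recall threshold, weighted by the increase in recall from the previous threshold. |
| NPV | $\frac{TN}{TN + FN}$ |
| FPR | $\frac{FP}{FP + TN}$ |
| FNR | $\frac{FN}{FN + TP}$ |
| NPR | Among the truly negative cases, the percentage that are incorrectly classified as positive (the same quantity as the FPR). |
| LRP | Localization recall precision (LRP) is an error metric for evaluating visual detection tasks that jointly accounts for localization error, false positives, and false negatives. |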
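Most entries in the metrics table are closed-form functions of the four confusion-matrix counts. The dependency-free sketch below computes them from TP, FP, FN, and TN. It also computes Cohen's kappa from the same counts. Returning 0.0 when a denominator is zero is an assumed convention, since libraries handle that case differently. `binary_metrics` and `safe_div` are hypothetical names.

```python
import math


def safe_div(num: float, den: float) -> float:
    """Return num / den, or 0.0 when den is zero (an assumed convention; libraries differ)."""
    return num / den if den else 0.0


def binary_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Threshold-based metrics computed from the four confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = safe_div(tp + tn, total)
    precision = safe_div(tp, tp + fp)    # PPV
    recall = safe_div(tp, tp + fn)       # sensitivity / TPR
    specificity = safe_div(tn, tn + fp)  # TNR
    f1 = safe_div(2 * precision * recall, precision + recall)
    mcc = safe_div(tp * tn - fp * fn,
                   math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)))
    # Cohen's kappa: observed agreement p_o versus chance agreement p_e,
    # where p_e is built from the predicted and actual class totals.
    p_o = accuracy
    p_e = safe_div((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn), total * total)
    kappa = safe_div(p_o - p_e, 1 - p_e)
    return {
        "accuracy": accuracy,
        "error": 1 - accuracy,
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "f1": f1,
        "mcc": mcc,
        "kappa": kappa,
        "npv": safe_div(tn, tn + fn),
        "fpr": safe_div(fp, fp + tn),
        "fnr": safe_div(fn, fn + tp),
    }


m = binary_metrics(tp=2, fp=1, fn=1, tn=2)
print(round(m["accuracy"], 4), round(m["mcc"], 4), round(m["kappa"], 4))  # 0.6667 0.3333 0.3333
```

MCC and kappa are less sensitive than accuracy to class imbalance. This matters for COVID-19 datasets such as the [49] row, which has 805 normal and 195 COVID-19 images.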

| References | Data Set | Modalities | No. of Images | Partitioning | Classifiers | Performances |
|---|---|---|---|---|---|---|
| [42] | Italian Society of Medical and Interventional Radiology | CT | 1001 lung CT images | Training (72%), Validation (10%), Testing (18%) | SegNet, U-NET | SegNet: Sensitivity 0.956, Specificity 0.9542; U-NET: Sensitivity 0.964, Specificity 0.948 |
| Paluru et al., 2021 [43] | Italian Society of Medical and Interventional Radiology and Radiopaedia | CT | 929 lung CT images | Training (70%), Testing (30%) | AnamNet | Sensitivity 0.927, Specificity 0.998, Accuracy 0.985 |
| Yin, 2022 [44] | Italian Society of Medical and Interventional Radiology | CT | 1963 lung CT images | Training (1376 CT images), Validation (196 CT images), Testing (391 CT images) | SD-Unet | Sensitivity 0.8988, Specificity 0.9932, Accuracy 0.9906 |
| Shan et al., 2021 [45] | Shanghai Public Health Clinical Center and other centers outside of Shanghai | CT | 249 images | Training (75%), Testing (25%) | DL-based segmentation system (VB-Net) | Accuracy 0.916 |
| [46] | Integrative Resource of Lung CT Images and Clinical Features (ICTCF); Med-Seg (medical segmentation) COVID-19 dataset | CT | 7586 lung CT images | Training (698 CT images), Validation (6654 CT images), Testing (117 CT images) | SSInfNet | F1 score 0.63, Recall 0.71, Precision 0.68 |
| [47] | Private dataset | CT | 5000 CT images | Training (40%), Testing (60%) | COVLIAS 1.0 (SegNet, VGG-SegNet and ResNet-SegNet) | AUC: SegNet 0.96, VGG-SegNet 0.97, ResNet-SegNet 0.98 |
| [48] | Multiple sources of datasets | CT | 4449 CT images | Training (4000 CT images), Testing (449 CT images) | ResUnet | Dice 72.81% |
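The segmentation studies in this table report overlap scores such as Dice, and the metrics table defines IoU. The following minimal sketch computes both scores for one pair of binary masks. It assumes the masks are flattened into equal-length 0/1 sequences, a representation chosen here for brevity. The helper name `dice_and_iou` is hypothetical.

```python
from typing import Sequence, Tuple


def dice_and_iou(mask_true: Sequence[int], mask_pred: Sequence[int]) -> Tuple[float, float]:
    """Dice coefficient and IoU for two binary masks given as flat 0/1 sequences."""
    if len(mask_true) != len(mask_pred):
        raise ValueError("masks must contain the same number of pixels")
    intersection = sum(1 for t, p in zip(mask_true, mask_pred) if t and p)
    size_true = sum(1 for t in mask_true if t)
    size_pred = sum(1 for p in mask_pred if p)
    union = size_true + size_pred - intersection
    if union == 0:
        # Both masks empty: treated here as perfect agreement (a common convention).
        return 1.0, 1.0
    dice = 2 * intersection / (size_true + size_pred)
    iou = intersection / union
    return dice, iou


ground_truth = [1, 1, 1, 0, 0, 0]
prediction = [0, 1, 1, 1, 0, 0]
print(dice_and_iou(ground_truth, prediction))  # Dice = 4/6 ≈ 0.667, IoU = 2/4 = 0.5
```

For a single mask pair, Dice = 2·IoU / (1 + IoU), so the two scores carry the same information about that pair but are not numerically interchangeable when results are compared across papers.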

| Authors | Data Sources | No. of Images | Name of Classes | Partitioning | Techniques | Performances (%) |
|---|---|---|---|---|---|---|
| [49] | [52,53] | 1000 chest X-ray and CT images (normal = 805, COVID-19 = 195 (23 lung CT, 172 chest X-ray)) | COVID-19, Normal | Training = 80%, Test = 20% | VGG16, VGG19, Xception, ResNet50V2, MobileNetV2, NASNetMobile, ResNet101V2, and InceptionV3 | Accuracy = 99%, Sensitivity = 97.4%, Specificity = 99.4% |
| [42] | [54] | 100 CT images | Infected, non-infected | Training = 70%, Validation = 10%, Test = 20%; 5-fold cross-validation | SegNet, U-NET | Accuracy = 95%, Sensitivity = 95.6%, Specificity = 95.42%, Dice = 74.9%, G-mean = 95.5%, F2 = 86.1% |
| [50] | X-ray COVID-19 dataset [55] | 50 X-ray images (COVID = 25, Normal = 25) | COVID, Normal | Training = 80%, Test = 20%; 5- and 10-fold cross-validation | ResNet50 | 5-fold cross-validation: Accuracy = 97.28%, Precision = 96%, Sensitivity = 96%, F-measure = 96%; 10-fold cross-validation: Accuracy = 95.99%, Precision = 95.83%, Sensitivity = 92%, F-measure = 93.87% |
| [51] | Development dataset [56]; testing dataset: Zhejiang Province, China; lung segmentation development: El Camino Hospital (CA) and University Hospitals of Geneva (HUG) | 1865 CT (normal = 1036, abnormal = 829) | Normal, COVID-19 | Training = 1725, Validation = 320, Test = 270 | ResNet-50-2D | AUC = 99.4%, Sensitivity = 94%, Specificity = 98% |
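Several of these studies (e.g. [42] and [50]) evaluate their models with 5- or 10-fold cross-validation. The following sketch is a hypothetical `k_fold_indices` helper, not any author's code. It shuffles the sample indices once using a fixed seed and returns (train, test) splits in which every sample appears in exactly one test fold.

```python
import random
from typing import List, Tuple


def k_fold_indices(n_samples: int, k: int, seed: int = 0) -> List[Tuple[List[int], List[int]]]:
    """Split sample indices into k (train, test) pairs; each index is tested exactly once."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # shuffle once so folds do not follow acquisition order
    folds = [indices[i::k] for i in range(k)]  # round-robin split; fold sizes differ by at most 1
    splits = []
    for i in range(k):
        test = sorted(folds[i])
        train = sorted(idx for j, fold in enumerate(folds) if j != i for idx in fold)
        splits.append((train, test))
    return splits


for train, test in k_fold_indices(n_samples=50, k=5):
    print(len(train), len(test))  # 40 training and 10 test indices per fold
```

When one patient contributes several images, as with CT slices, splitting patient identifiers rather than image indices prevents slices from the same patient from appearing in both the training and test folds. This sketch splits plain indices only.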

| Authors | Data Sources | No. of Images | Name of Classes | Partitioning | Techniques | Performances (%) |
|---|---|---|---|---|---|---|
| [57] | Local hospitals | 640 CT (COVID-19 = 320, healthy controls (HCs) = 320) | COVID-19, HC | 10-fold cross-validation | 5LDCNN-SP-C | Sensitivity = 93.28% ± 1.50%, Specificity = 94.00% ± 1.56%, Accuracy = 93.64% ± 1.42% |
| [58] | Data collection from Mendeley [52], The Cancer Imaging Archive (TCIA) [74], collection of COVID-19-positive X-ray and CT images [75] | 753 X-ray images (COVID-19 = 253, normal = 500) | COVID-19, Normal | Training = 653 (5-fold cross-validation), Hold-out = 100 | CNN | Hold-out test: Precision = 99%, Recall = 99%, F1 score = 99%, AUC = 99%, MCC = 99% |
| [59] | COVID-CT dataset [76], Guangxi Medical University hospitals | 2592 CT images (COVID-19 = 1357, non-infected = 1235) | COVID-19, non-infected | Training = 1867, Validation = 1400, Test = 510 | Modified ResNet50 | Specificity = 92%, Sensitivity = 93%, Accuracy = 93%, IoU = 0.85, F1 score = 92%, AUC = 93% |
| [60] | IoT | Not specified | COVID, non-COVID | Training = 70%, Validation = 30% | ID2S-COVID19-DL | Accuracy = 95.5%, Sensitivity = 94.38%, Specificity = 97.06%, Miss rate = 1.89%, PPV = 98.51%, NPV = 97.62%, FPR = 54.46%, NPR = 0.02%, LRP = 97.61%, LRN = 98.51% |
| [61] | Open-source dataset [53], dataset from Kaggle [62] | 574 CXR images (COVID = 287, viral and bacterial pneumonia = 287) | COVID, non-COVID | Training = 80%, Leave-out = 20% | TDA-Net | Accuracy = 93%, Precision = 88%, Recall = 95%, F1 score = 92%, AUC = 100%, TNR = 91% |
| [63] | Dataset collected from 3 centers: Xi’an Jiaotong University First Affiliated Hospital (center 1), Nanchang University First Hospital (center 2), Xi’an No.8 Hospital of Xi’an Medical College (center 3) | 1065 CT images (COVID-19, typical pneumonia) | COVID-19, typical pneumonia | Training = 320, Internal validation = 455, External validation = 290 | Modified Inception | Accuracy = 79.3%, Specificity = 83%, Sensitivity = 67% |
| [64] | COVID-CTset [77] | 63,849 CT scan images (normal = 48,260, COVID-19 = 15,589) | COVID-19, normal | 5-fold cross-validation | ResNet50V2 + FPN | Accuracy = 98.49% |
| [65] | Open-source repository provided by [53,78] | 100 patients (50 COVID-19, 50 normal) | COVID-19, normal | k-fold cross-validation (k = 5 and k = 10) | ResNet101 + J48 | 5-fold cross-validation: Accuracy = 97.18%, Recall = 98.64%, Specificity = 95.86%, Precision = 98.64%, F1 score = 97.05%; 10-fold cross-validation: Accuracy = 100%, Recall = 100%, Specificity = 98.89%, Precision = 100%, F1 score = 100% |
| [66] | Public COVID-19 CT dataset [76], public pneumonia dataset [78] | Public pneumonia dataset: 5856 X-ray images (normal and pneumonia); public COVID-19 CT dataset: 746 CT images (normal and pneumonia) | Pneumonia, normal | Public pneumonia dataset: Training = 5216, Validation = 16, Testing = 624; public COVID-19 CT dataset: Training = 425, Validation = 118, Testing = 203 | CGNet | Public pneumonia dataset: Accuracy = 98.72%, Sensitivity = 100%, Specificity = 97.95%; public COVID-19 CT dataset: Accuracy = 99%, Sensitivity = 100%, Specificity = 98% |
| [67] | Sites of the Northwestern Memorial Health Care System | 15,035 CXR images (COVID-19 positive = 4750, COVID-19 negative = 10,285) | COVID-positive, COVID-negative | Training = 10,470, Validation = 2686, Testing = 1879 | DeepCOVID-XR | Entire test set: Accuracy = 83%, AUC = 90%; 300 random test images: Accuracy = 82% |
| [68] | Dataset of CT images [79], dataset of X-ray images [80], COVID-19 Radiography Dataset [81] | 6130 images (COVID-19 = 3065, non-COVID-19 = 3065) | COVID-19, viral pneumonia | Training = 70%, Test = 30% | CNN + ConvLSTM | Accuracy = 100% |
| [69] | Multiple sources [53,54,62,80,82] | 4600 X-ray images (COVID-19 = 2300, Normal = 2300) | COVID-19, normal | Training = 70%, Validation = 20%, Test = 10% | EMCNet | Accuracy = 98.91%, Precision = 100%, Recall = 97.82%, F1 score = 98.89% |
| [70] | Two open-source image databases [53,78] | 1365 chest X-ray images (COVID-19 = 250, normal = 315, viral pneumonia = 350, bacterial pneumonia = 300, other = 150) | COVID-19, other | Training = 70%, Validation = 20%, Test = 10%; 5-fold cross-validation | ResNet50 + ResNet-101 | Accuracy = 97.77%, Recall = 97.14%, Precision = 97.14%; with cross-validation: Accuracy = 98.93%, Sensitivity = 98.93%, Specificity = 98.66%, Precision = 96.39%, F1 score = 98.15% |
| [71] | Joseph Paul Cohen dataset [53], publicly available dataset [78] | 5216 chest X-ray and CT images (normal = 1341, pneumonia = 3875) | COVID-19, normal | Training = 80%, Test = 20% | IRRCNN | X-ray images: Accuracy = 84.67%; CT images: Accuracy = 98.78% |
| [72] | Picture archiving and communication system (PACS) of the radiology department (Union Hospital, Tongji Medical College, Huazhong University of Science and Technology) | 540 CT images (COVID-positive = 313, COVID-negative = 229) | COVID-positive, COVID-negative | Training = 499, Test = 131 | DeCoVNet | ROC AUC = 95.9%, PR AUC = 97.6%, Sensitivity = 90.7%, Specificity = 91.1% |
| [73] | COVID-19 CT dataset [76] | 738 CT images (COVID = 349, non-COVID = 463) | COVID, non-COVID | Training = 80%, Validation = 10%, Test = 10% | CTnet-10 | Accuracy = 82.1% |
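DeCoVNet [72] is reported with both ROC AUC and PR AUC. The following minimal sketch computes both scores from predicted scores; the function names are hypothetical.

- ROC AUC is computed through its rank interpretation: the probability that a randomly chosen positive is scored above a randomly chosen negative, with ties counted as one half.
- Average precision is computed as the recall-weighted sum of precisions at each distinct score threshold. This is one common, non-interpolated definition, similar to the one documented for scikit-learn's `average_precision_score`. Other tools interpolate the curve instead.

```python
from typing import Sequence


def roc_auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """ROC AUC as P(score of a positive > score of a negative), ties counted as 0.5."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in pos:  # O(len(pos) * len(neg)) pairwise comparison, acceptable for a sketch
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def average_precision(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """Step-wise AP = sum_n (R_n - R_{n-1}) * P_n over distinct score thresholds."""
    n_pos = sum(1 for y in y_true if y == 1)
    if n_pos == 0:
        raise ValueError("need at least one positive sample")
    ranked = sorted(zip(scores, y_true), key=lambda pair: pair[0], reverse=True)
    tp = fp = 0
    prev_recall = ap = 0.0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Consume every sample tied at this score so tied samples share one threshold.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        recall = tp / n_pos
        precision = tp / (tp + fp)
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap


labels = [1, 0, 1, 1, 0]
probabilities = [0.9, 0.8, 0.7, 0.4, 0.2]
print(roc_auc(labels, probabilities), average_precision(labels, probabilities))
# For this toy example: ROC AUC = 4/6 and AP = 29/36.
```

The pairwise loop keeps the ROC AUC definition transparent. For large test sets, a sort-based rank formula is preferable.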

| Authors | Data Sources | No. of Images | Name of Classes | Partitioning | Techniques | Performances (%) |
|---|---|---|---|---|---|---|
| [83] | Two Kaggle datasets [4,92], COVID-19 image data collection [53] | 1491 chest X-rays and CT scans (normal = 1335, mild/moderate = 106, severe = 50) | Normal, mild/moderate, severe | Training = 70%, Validation = 15%, Test = 15% | AlexNet, GoogLeNet, ResNet50 | Average accuracy (non-augmented): AlexNet 81.48%, GoogLeNet 78.71%, ResNet50 82.10%; average accuracy (augmented): AlexNet 83.70%, GoogLeNet 81.60%, ResNet50 87.80% |
| [84] | BIMCV COVID-19 dataset [93], PadChest dataset [94] | 11,197 CXR (control = 7217, pneumonia = 5451, COVID-19 = 1056) | Control, pneumonia, COVID-19 | Training = 70%, Validation = 15%, Test = 15% | DenseNet161 | Average balanced accuracy = 91.2%, average precision = 92.4%, F1 score = 91.9% |
| [85] | COVIDx dataset [95] | 15,177 chest X-ray images (COVID-19 = 238, pneumonia = 6045, normal = 8851) | Two-class: COVID-19, non-COVID-19; three-class: COVID-19, pneumonia, normal | Training = 80%, Validation = 10%, Test = 10%; 10-fold cross-validation | DenseNet-121 | Two-class: Accuracy = 96%, Precision = 96%, Recall = 96%, F-score = 96%; three-class: Accuracy = 93%, Precision = 92%, Recall = 92%, F-score = 92% |
| [86] | Public dataset of X-ray images collected by [53] | 306 X-ray images (normal = 79, COVID-19 = 69, viral pneumonia = 79, bacterial pneumonia = 79) | Normal, COVID-19, viral pneumonia, bacterial pneumonia | Training = 85%, Test = 15% | Cascaded deep learning classifiers (VGG16, ResNet50V2, DenseNet169) | Accuracy = 99.9% |
| [87] | [53,78] | 673 X-ray and CT images (COVID-19 = 202, normal = 300, pneumonia = 300) | COVID-19, pneumonia, normal | Training = 80%, Test = 20% | VGG-16, ResNet50, EfficientNetB0 | Accuracy = 96.8% |
| [88] | Multiple sources [52,53,81,96] | 11,568 X-ray images (COVID-19 = 371, non-COVID-19 viral pneumonia = 4237, bacterial pneumonia = 4078, normal = 2882) | COVID-19, viral pneumonia, bacterial pneumonia, normal | Training = 70%, Test = 30% | AlexNet | Accuracy = 99.62%, Sensitivity = 90.63%, Specificity = 99.89% |
| [89] | Kaggle repository [97] | 6432 images (COVID-19 = 576, pneumonia = 4273, normal = 1583) | COVID-19, pneumonia, normal | Training = 5467, Validation = 965 | CNN models: Inception V3, Xception, ResNeXt | Accuracy = 97.97% |
| [90] | Chest X-ray dataset [53], RSNA pneumonia dataset [98] | 18,567 images (COVID-19 = 140, viral pneumonia = 9576, normal = 8851) | COVID-19, viral pneumonia, normal | Training = 16,714, Test = 1862 | ResNet101, ResNet152 | Accuracy = 96.1% |
| [91] | Publicly available image datasets (chest X-ray and CT dataset) [52,53] | 6087 chest X-ray and CT images (bacterial pneumonia = 2780, coronavirus = 1493, COVID-19 = 231, normal = 1583) | Normal, bacteria, coronavirus | Training = 80%, Validation = 20% | VGG16, VGG19, DenseNet201, Inception_ResNet_V2, Inception_V3, ResNet50, MobileNet_V2 | Accuracy = 92.18% |
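Several multi-class studies report averaged scores, such as the average balanced accuracy of [84]. One common way to compute such averages is to macro-average per-class scores from a multi-class confusion matrix. The following minimal sketch illustrates this approach; the surveyed works may average differently. It assumes integer class labels 0..n−1, for example 0 = COVID-19, 1 = pneumonia and 2 = normal; this encoding is chosen only for illustration. The helper names are hypothetical. Unlike the 2×2 table earlier in this section, this matrix is indexed as `matrix[actual][predicted]`.

```python
from typing import Dict, List, Sequence


def multiclass_confusion(y_true: Sequence[int], y_pred: Sequence[int], n_classes: int) -> List[List[int]]:
    """Return counts indexed as matrix[actual][predicted] for labels 0..n_classes-1."""
    matrix = [[0] * n_classes for _ in range(n_classes)]
    for actual, predicted in zip(y_true, y_pred):
        matrix[actual][predicted] += 1
    return matrix


def macro_scores(matrix: List[List[int]]) -> Dict[str, float]:
    """Accuracy plus macro-averaged recall (balanced accuracy), precision and F1."""
    n = len(matrix)
    recalls, precisions, f1s = [], [], []
    for c in range(n):
        tp = matrix[c][c]
        actual_c = sum(matrix[c])                          # row sum: samples whose true class is c
        predicted_c = sum(matrix[r][c] for r in range(n))  # column sum: samples predicted as c
        recall = tp / actual_c if actual_c else 0.0
        precision = tp / predicted_c if predicted_c else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        recalls.append(recall)
        precisions.append(precision)
        f1s.append(f1)
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[c][c] for c in range(n))
    return {
        "accuracy": correct / total if total else 0.0,
        "balanced_accuracy": sum(recalls) / n,
        "macro_precision": sum(precisions) / n,
        "macro_f1": sum(f1s) / n,
    }


cm = multiclass_confusion([0, 0, 1, 1, 1, 2, 2, 2, 2], [0, 1, 1, 1, 2, 2, 2, 2, 0], n_classes=3)
print(round(macro_scores(cm)["balanced_accuracy"], 3))  # → 0.639
```

Macro-averaging gives each class equal weight. This matters for datasets like those in this table, where COVID-19 images are often a small minority and plain accuracy can stay high even when that class is poorly recognized.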

| Authors | Data Sources | No. of Images | Name of Classes | Partitioning | Techniques | Performances (%) |
|---|---|---|---|---|---|---|
| [99] | Journals: ScienceDirect, Nature, Springer Link, and China CNKI; authoritative media reports: New York Times, Daily Mail (United Kingdom), The Times (United Kingdom), CNN, etc. | 2933 lung CT images | COVID, lung tumor, normal lung | Training = 6000, Test = 1500; 5-fold cross-validation | EDL-COVID | Accuracy = 99.054%, Sensitivity = 99.05%, Specificity = 99.6%, F-measure = 98.59%, MCC = 97.89% |
| [100] | Multiple sources [4,53,81,98,118] | 13,975 CXR images (normal = 7966, pneumonia = 5451, COVID-19 pneumonia = 258) | Healthy, pneumonia, COVID-19 | Training = 13,675, Test = 300 | Modified COVID-Net | Accuracy = 94.3%, Sensitivity = 94.3% ± 4.5%, Specificity = 97.2% ± 1.9%, PPV = 94.5% ± 3.3%, F-score = 94.3% ± 2.0% |
| [13] | Two open-source datasets [52,53] | 15,085 X-ray images (normal = 8851, COVID-19 = 180, pneumonia = 6054) | Normal, COVID-19, pneumonia | Cross-entropy, 3-fold cross-validation | Modified ResNet18 | Accuracy = 96.73%, Recall = 94%, Specificity = 100% |
| [101] | COVID-19 CXR dataset [53], Xiangya Hospital, RSNA Pneumonia Detection Challenge [98] | 3545 chest X-ray images (COVID-19 = 204, healthy = 1314, CAP = 2004) | COVID-19, healthy, CAP | Training = 80%, Validation = 20%, Test = 61 images | ResNet50 + FPN | Accuracy = 93.65%, Sensitivity = 90.92%, Specificity = 92.62% |
| [102] | Two Kaggle datasets [53,92] | 1389 X-ray images (COVID-19 = 289, viral pneumonia = 550, normal = 550) | COVID-19, viral pneumonia, normal | 5-fold cross-validation | CNN | Accuracy = 90.64%, F1 score = 89.8% |
| [103] | Open-access database [4] | 2905 CXR images (COVID-19 = 219, viral pneumonia = 1345, normal = 1341) | COVID-19, viral pneumonia, normal | Not specified | mAlexNet | Accuracy = 98.70%, Error = 0.0130, Recall = 98.76%, Specificity = 99.33%, Precision = 98.77%, False positive rate = 0.0067, F1 score = 98.76%, AUC = 99.00%, MCC = 98.09%, Kappa = 97.07% |
| [104] | COVID-19 Radiography Database [4], Chest X-ray dataset [119] | 3047 chest X-ray images (COVID-19 = 361, pneumonia = 1341, normal = 1345) | Two-class: COVID, non-COVID; three-class: COVID-19, pneumonia, normal | Training = 80%, Test = 20% | InstaCovNet-19 | Two-class: Accuracy = 99.53%, Precision = 100%, Recall = 99%; three-class: Accuracy = 99.08%, Recall = 99%, F1 score = 99%, Precision = 99% |
| [105] | Multiple sources [53,54,78,82,98,118,120] | 15,265 chest X-ray images (COVID-19 = 558, normal = 10,434, bacterial pneumonia = 2780, viral pneumonia = 1493) | COVID-19, normal, viral pneumonia, bacterial pneumonia | 5-fold cross-validation | CSDB CNN | Precision = 96.34%, Recall = 97.54%, F1 score = 96.90%, Accuracy = 97.94%, Specificity = 99.25%, AUC = 98.39% |
| [106] | COVID-19 dataset [53], chest X-ray images [78] | CXR (COVID-19 = 145, bacterial pneumonia = 145, normal = 145) | Two-class: COVID, non-COVID; three-class: COVID, non-COVID, bacterial pneumonia | Training = 80%, Test = 20% | Deep learning conditional generative adversarial networks | Two-class: Accuracy = 98.7%, Sensitivity = 100%, Specificity = 98.3%; three-class: Accuracy = 98.3%, Sensitivity = 99.3%, Specificity = 98.1% |
| [107] | Multiple sources [4,52,53] | 1092 X-ray images (COVID-19 = 364, normal = 364, pneumonia = 364) | Two-class: COVID-19, normal; three-class: COVID-19, normal, pneumonia | Training = 70%, Test = 30%; 5-fold cross-validation | MH-COVIDNet | Accuracy = 99.38% |
| [108] | Multiple sources [4,53,79,92,118,120,121,122] | 7390 X-ray and CT images (COVID-19 = 2843, normal = 3108, viral pneumonia + bacterial pneumonia = 1439) | Two-class: COVID, normal; three-class: COVID, normal, pneumonia; four-class: COVID, normal, viral pneumonia, bacterial pneumonia | 5-fold cross-validation | CoroDet | Two-class: Accuracy = 99.1%, Sensitivity = 95.36%, Specificity = 97.36%, Precision = 97.64%, Recall = 95.3%, F1 score = 96.88%; three-class: Accuracy = 94.2%, Sensitivity = 92.76%, Specificity = 94.56%, Precision = 94.04%, Recall = 92.5%, F1 score = 91.32%; four-class: Accuracy = 91.2%, Sensitivity = 91.76%, Specificity = 93.48%, Precision = 92.04%, Recall = 91.9%, F1 score = 90.04% |
| [24] | LUNGx Challenge for computerized lung nodule classification [123] | 16,750 CT images (COVID-19 = 5550, CAP = 5750, control = 5450) | Two-class: COVID-19, non-COVID; three-class: COVID-19, CAP, control | Training = 15,000, Validation = 750, Test = 1000 | COVIDCTNet | Sensitivity = 93%, Specificity = 100%; two-class: Accuracy = 95%; three-class: Accuracy = 85% |
| [109] | COVID-19 dataset [53] | 1184 chest X-ray images (COVID-19 = 336, MERS = 185, SARS = 141, ARDS = 130, normal = 392) | COVID-19, MERS, SARS, ARDS, normal | Training = 757, Test = 427 | CNN | Accuracy = 98%, Precision = 99%, Recall = 98%, F1 score = 98% |
| [110] | Multiple sources [53,81,92,118,122,124,125] | 6317 chest X-ray images (COVID-19 = 1440, normal = 2470, viral and bacterial pneumonia = 2407) | COVID-19, normal, pneumonia | Training = 70%, Test = 30% | Convid-Net | Accuracy = 97.99% |
| [111] | COVID-19 Image Data Collection [53], RSNA Pneumonia Detection Challenge dataset [98], COVID-19 Chest X-ray Dataset Initiative [120] | 13,862 chest X-ray samples (COVID-19 = 245, pneumonia = 5551, normal = 8066) | COVID-19, pneumonia, normal | Training = 20,907, Test = 231 | Corona-Nidaan | Three-class classification: Accuracy = 95%; COVID-19 cases: Precision = 94%, Recall = 94% |
| [112] | [78,126,127] | 1061 CXR images (COVID-19 = 361, normal = 200, pneumonia = 500) | COVID-19, pneumonia, normal | Training = 80%, Testing = 20% | DeepCoroNet | Accuracy = 100%, Sensitivity = 100%, Specificity = 100%, F-score = 100% |
| [113] | Multiple sources [53,74,98,128] | 10,377 X-ray and CT images (normal, pneumonia, COVID-19, influenza) | COVID-19, pneumonia, normal | Training = 9830, Test = 547 | CNNRF | F1 score = 98.90%, Specificity = 100% |
| [114] | Multiple sources [52,53,129,130] | 6792 CXR images (normal = 1840, COVID-19 = 433, TB = 394, BP = 2780, VP = 1345) | COVID-19, normal, tuberculosis (TB), bacterial pneumonia (BP), viral pneumonia (VP) | Training = 80%, Validation = 10%, Test = 10% | MANet | Accuracy = 96.32% |
| [115] | COVID-19 dataset [4], Joseph Paul Cohen dataset [53] | 458 X-ray images (COVID-19 = 295, pneumonia = 98, normal = 65) | COVID-19, pneumonia, normal | Training = 70%, Test = 30%; 5-fold cross-validation | MobileNetV2 + SqueezeNet | Accuracy = 99.27% |
| [116] | X-VIRAL dataset collected from 390 township hospitals through a telemedicine platform of JF Healthcare; X-COVID dataset collected from 6 institutions; COVID-19 dataset [53] | Chest X-ray images (positive viral pneumonia = 5977, non-viral pneumonia or healthy = 37,393, COVID-19 = 106, normal controls = 107) | Two-class: COVID, non-COVID; three-class: COVID, SARS, MERS | 5-fold cross-validation | CAAD | X-COVID dataset (two-class): AUC = 83.61%, Sensitivity = 71.70%; Open-COVID dataset (three-class): Accuracy = 94.93% for COVID-19 detection, Accuracy = 100% for SARS and MERS detection |
| [117] | COVID-19 Radiography Database [4] | 2905 chest X-ray images (COVID-19 = 219, viral pneumonia = 1341, normal = 1345) | COVID, viral pneumonia, normal | 5-fold cross-validation; Training = 70%, Validation = 10%, Test = 20% | CVDNet | Precision = 96.72%, Accuracy = 96.69%, Recall = 96.84%, F1 score = 96.68%; Accuracy = 97.20% for the COVID-19 class |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Awassa, L.; Jdey, I.; Dhahri, H.; Hcini, G.; Mahmood, A.; Othman, E.; Haneef, M. Study of Different Deep Learning Methods for Coronavirus (COVID-19) Pandemic: Taxonomy, Survey and Insights. Sensors 2022, 22, 1890. https://doi.org/10.3390/s22051890
