Automatic Emotion Recognition in Children with Autism: A Systematic Literature Review

Abstract

1. Introduction

2. Methods

2.1. Research Questions

- RQ1: What emotions are recognized in children with autism in these studies?

- RQ2: Which observation channels are used in emotion recognition in children with autism?

- RQ3: Which techniques are used in emotion recognition in children with autism?

- RQ4: What techniques are used for multimodal recognition?

2.2. Keywords

2.3. Inclusion/Exclusion Criteria

2.4. Search Engines and Search Strings

2.5. Papers Selection and Key Findings Extraction

3. Results

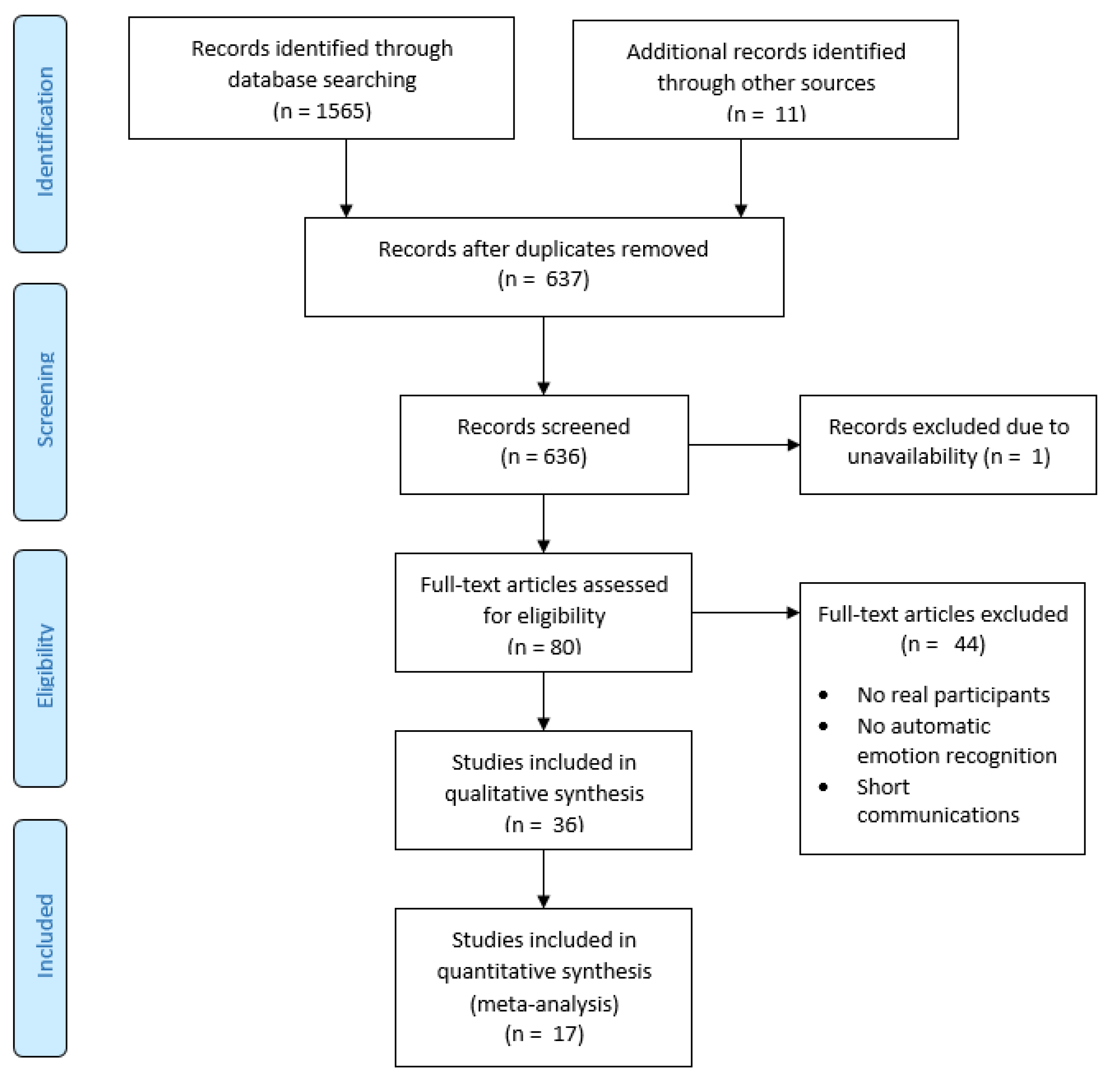

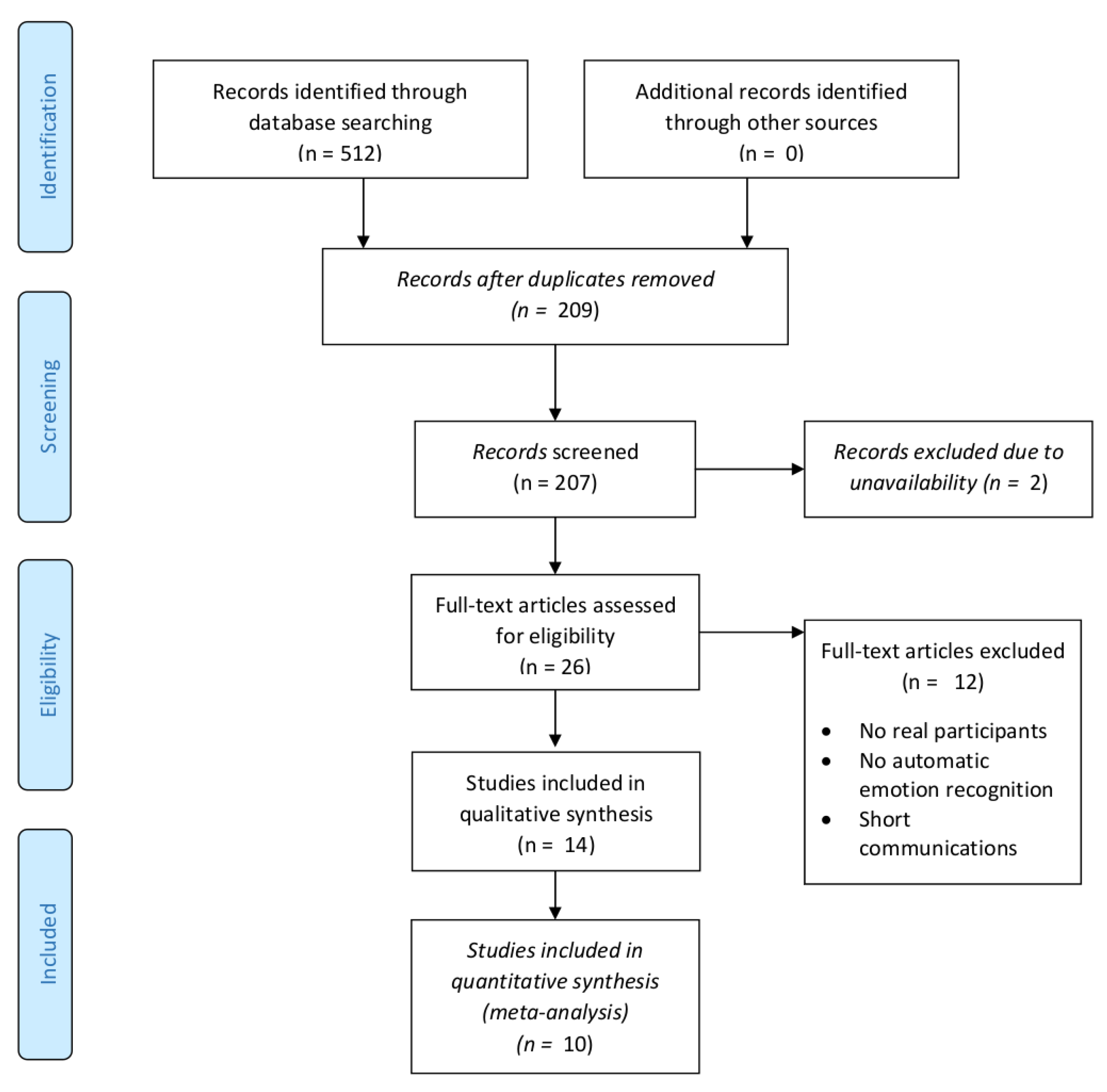

3.1. Study Selection

3.2. Quantitative Results

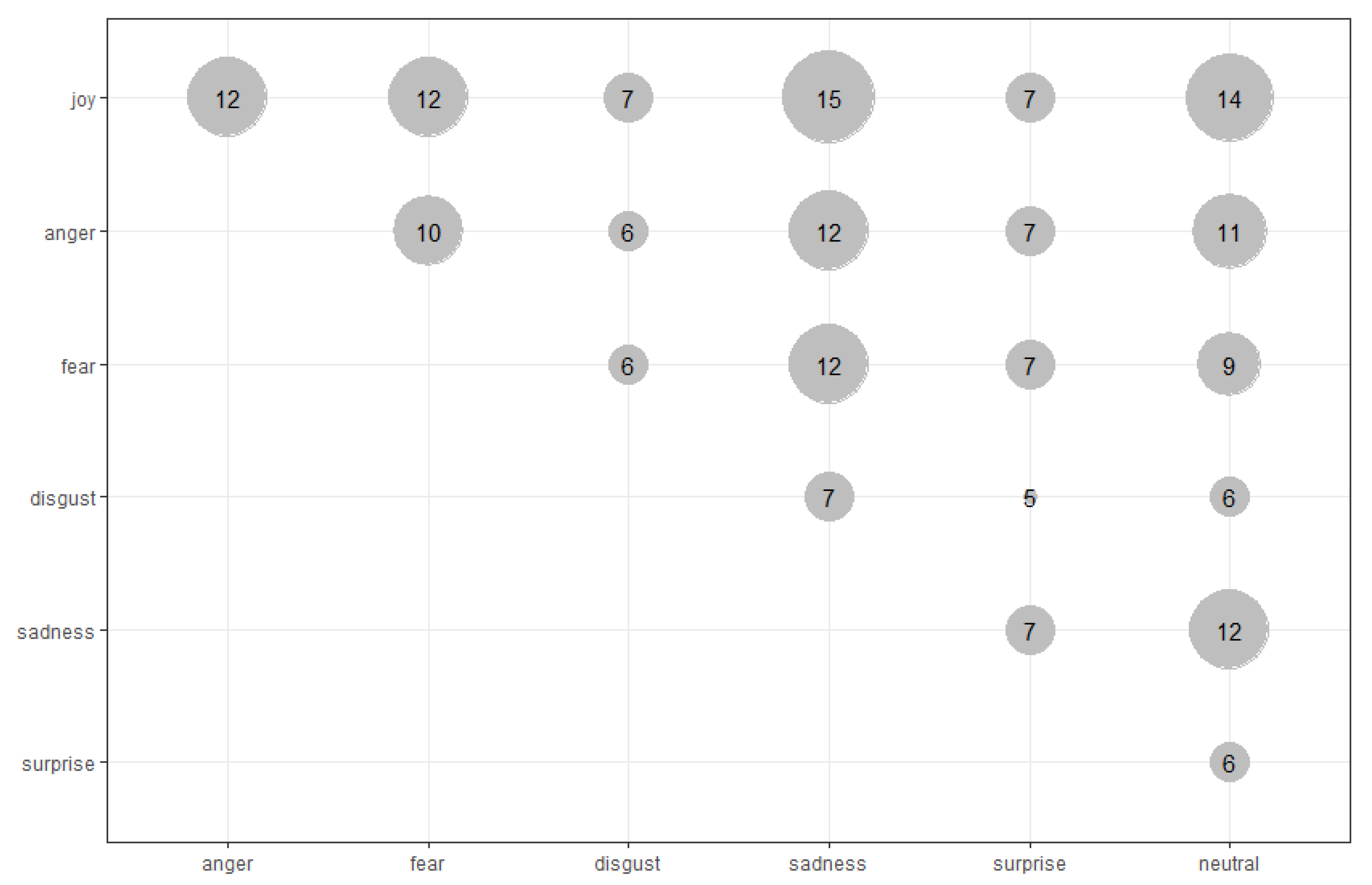

3.2.1. Emotions Recognized

- Most of the papers use two emotion models: Ekman’s basic emotions (joy, anger, fear, disgust, sadness, surprise) and/or a two-dimensional model of valence and arousal;

- For Ekman’s basic model, papers use a subset rather than the complete set of emotions;

- Papers use different wordings to describe the emotions, and additional attention should be paid to the meaning of those in a particular study;

- The set of emotions resulting from the recognition process is not limited to the ones described by these two models. Thus, all the emotions recognized within the studies described in the analyzed papers were grouped into some subsets and further discussed;

- Some notions used in the papers can be treated as moods or mental states, but all of them were included in the analysis. Emotions are analyzed in two ways: separately and in groups;

- All notions other than dimensional representation are treated as discrete emotions.
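
Because the papers use different wordings for similar emotions, grouping them is essentially a lookup from each wording to a group. The following is a minimal sketch of that step, assuming the notion-to-group assignments listed in the emotion-group table of this review; the names `NOTION_TO_GROUP`, `group_of`, and `count_papers_per_group` are hypothetical and not taken from any reviewed paper.

```python
# Wording -> group lookup. The notions and groups follow the review's
# emotion-group table; all identifiers here are illustrative assumptions.
NOTION_TO_GROUP = {
    "engagement": "attention-related",
    "involvement": "attention-related",
    "attention": "attention-related",
    "bored": "attention-related",
    "fear": "fear-related",
    "anxiety": "fear-related",
    "trepidation": "fear-related",
    "joy": "joy-related",
    "liking": "joy-related",
    "happiness": "joy-related",
    "smile": "joy-related",
    "relaxation": "joy-related",
}


def group_of(notion: str) -> str:
    """Return the emotion group for a paper's wording, or 'ungrouped'."""
    return NOTION_TO_GROUP.get(notion.strip().lower(), "ungrouped")


def count_papers_per_group(paper_notions: dict[str, list[str]]) -> dict[str, int]:
    """Count each paper once per group, even if it uses several notions of that group."""
    counts: dict[str, int] = {}
    for notions in paper_notions.values():
        for group in {group_of(n) for n in notions}:
            counts[group] = counts.get(group, 0) + 1
    return counts
```

Counting a paper once per group, rather than once per notion, is why a group total can be smaller than the sum of its per-notion counts.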

3.2.2. Discrete and Dimensional Emotion Models Used

3.2.3. Channels Used for Emotion Recognition

- movement: facial expressions, body postures, eye gaze, head movement, gestures (also called hand movements), and any other not-previously classified motion;

- sound expressions: vocalizations, the prosody of speech;

- heart activity: heart rate, HRV (heart rate variability);

- muscle activity not related to movement: muscle tension;

- perspiration: skin conductance;

- respiration: intensity and period, ECG;

- thermal regulation: peripheral temperature;

- brain activity: neural activity.
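
To make the heart-activity entries above concrete, the sketch below derives a mean heart rate and one common time-domain HRV measure (RMSSD, the root mean square of successive differences) from a list of RR intervals. It illustrates the general idea only: the reviewed papers may use other HRV features, and the function names and the choice of milliseconds as the unit are assumptions.

```python
import math


def mean_heart_rate_bpm(rr_ms):
    """Mean heart rate in beats per minute from RR intervals given in milliseconds."""
    if not rr_ms:
        raise ValueError("need at least one RR interval")
    return 60000.0 / (sum(rr_ms) / len(rr_ms))


def rmssd(rr_ms):
    """Root mean square of successive differences between RR intervals (ms)."""
    if len(rr_ms) < 2:
        raise ValueError("need at least two RR intervals")
    # Differences between each interval and the one that follows it.
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))
```

For example, `rmssd([800, 810, 790, 800])` uses the successive differences 10, -20 and 10 ms.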

3.2.4. Machine-Learning Techniques Used for Emotion Recognition

3.2.5. Analysis of Approaches Used in Emotion Recognition for Multimodal Processing
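
The tables of this review distinguish early, late, and hybrid fusion of channels. As a generic illustration (not the method of any reviewed paper), early fusion can be sketched as concatenating per-channel feature vectors before a single classifier sees them, and late fusion as combining the class probabilities produced by separate per-channel classifiers. The function names, the channel names in the usage note, and the weighted-average combination rule are assumptions chosen for brevity.

```python
def early_fusion(features_by_channel):
    """Concatenate per-channel feature vectors into one vector.

    Channels are visited in sorted name order so the layout is deterministic.
    """
    fused = []
    for channel in sorted(features_by_channel):
        fused.extend(features_by_channel[channel])
    return fused


def late_fusion(probs_by_channel, weights=None):
    """Weighted average of per-channel class probabilities.

    Returns (winning_label, fused_probabilities). Every channel is assumed
    to report a probability for the same set of labels.
    """
    channels = sorted(probs_by_channel)
    if weights is None:
        weights = {c: 1.0 for c in channels}
    total = sum(weights[c] for c in channels)
    labels = sorted(probs_by_channel[channels[0]])
    fused = {
        label: sum(weights[c] * probs_by_channel[c][label] for c in channels) / total
        for label in labels
    }
    return max(fused, key=fused.get), fused
```

With `{"face": {"joy": 0.6, "neutral": 0.4}, "eda": {"joy": 0.3, "neutral": 0.7}}` and equal weights, the fused decision is `neutral`; weighting `face` three times as much as `eda` flips it to `joy`. A hybrid scheme would combine both steps, for instance by treating an early-fused classifier as one more input to the late-fusion average.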

3.3. Qualitative Results

3.3.1. Participants

- construct the control group according to developmental age and/or intelligence factor rather than the chronological age of ASD group;

- making an openly available dataset of recordings is a highly recommended approach, as it fosters further research;

- regarding the construction of participant group, it might be hard to balance girls’/boys’ inclusion due to population skewness (autism is diagnosed in boys more frequently); similarly, it might be hard to balance the group regarding low-/high-functioning children, as low-functioning children might refuse to participate more frequently and their participation also depends on the type of activities under study.

3.3.2. Stimuli and Tagging Practices

- There are differences in reactions to positive and negative emotions presented in stimuli;

- Live videos of natural, real humans are good stimuli for tracking reaction patterns in children with ASD;

- There are differences in fixation duration time regarding different areas of interest (eyes, mouth) between children with ASD and typically developing children.

3.3.3. Modalities and Their Processing in Autism

- the measurement procedure or environment might be disturbing for a child with autism;

- the data obtained are not of high quality (hold no significant information on a child’s symptoms of emotions);

- the obtained modalities are hard to analyze due to atypical patterns of symptoms.

- children with ASD had significantly lower amplitudes of respiratory sinus arrhythmia and faster heart rates than typically developing children at baseline, suggesting lower overall vagal regulation of heart rate;

- a large percentage of children with autism had abnormally high sympathetic activity, i.e., skin conductance response;

- it is difficult to employ eye-tracking technologies with lower-functioning children because the calibration and data-collection processes require the child to sit still.

- How will the children’s emotions be displayed (in autism)?

- Does emotional affect vary with the severity of the disorder? How can this be accounted for by the model?

3.3.4. Applications

- Before automatic emotion recognition is performed, it is advisable to define a list of emotional states that would provide a value from the intervention perspective;

- Deficits in communication skills among children with autism make it hard to apply classic methods of tagging emotional states;

- Physiological signals are continuously available and are not impacted by deficits;

- For the enhancement of the reliability of tagging, a clinical observer and a caregiver who knows the participant shall be included in the study;

- A preliminary phase might be added to train recognition models before the actual tasks to be monitored are performed.

- For what purpose are emotions recognized—is it to better understand the phenomena, or support intervention, or adjust technology (robot, app) behavior?

- In what way would emotion recognition help to develop skills in children with autism?

- The training of which skill would require automatic emotion recognition?

- Which emotions are of the primary interest? Perhaps the most frequently used set of six basic emotions is not the set that we shall be looking for, as practical studies point, rather, to engagement as a crucial affective state for the therapy effectiveness.
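
The recommendation above to include both a clinical observer and a caregiver in tagging raises the question of how well their labels agree. One standard way to quantify this is Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The sketch below is an assumption about how such a check could be done; the review itself does not prescribe a specific agreement measure, and the function name is hypothetical.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two raters who labelled the same sequence of segments."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both raters must label the same non-empty set of segments")
    n = len(labels_a)
    # Fraction of segments on which the two raters agree.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected by chance, from each rater's label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        # Both raters used one and the same label everywhere.
        return 1.0
    return (observed - expected) / (1.0 - expected)
```

For instance, if the observer tags four segments as engaged, engaged, bored, bored and the caregiver tags them as engaged, bored, bored, bored, raw agreement is 0.75 but kappa is 0.5.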

4. Discussion

4.1. Answering Research Questions

4.2. Other Observations

- Participant groups used in the analyzed studies were relatively small and skewed in terms of representation of male/female and high-/low-functioning individuals. That might result in lower generalizability of the findings;

- Regarding the stimuli, various approaches were used, including emotions evoked via pictures, videos, and serious games, as well as natural interaction. The applied stimuli were frequently treated as labels, as the “ground truth” was hard to determine. Labels were also applied by self-assessment, assessment by therapist and/or caregiver, or as a combination of those. The problem of stimuli and labelling is known in affective computing; however, in autism-related and child-related studies, it is even more challenging, as children with autism might react to stimuli differently than typically developing children. Moreover, even qualified therapists might find it hard to assign a label to a child’s behavior;

- Physiological signals are most frequently used for the recognition of happiness versus a neutral state, which is surprising, as they reveal more information on arousal (intensity) of the emotional state rather than on valence (positive/negative dimension);

- All channels are prone to some disturbance, and information on a specific symptom might be temporarily or permanently unavailable. The disturbances might be divided into activity-based (depending on a task), child condition-based (depending on child deficits or current state), and setup-based ones (technical and contextual issues).

4.3. Validity Threats

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

- Jordan, R. Autistic Spectrum Disorders: An Introductory Handbook for Practitioners; David Fulton Publishers: London, UK, 1999.

- American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders, 5th ed.; American Psychiatric Association: Washington, DC, USA, 2013.

- Fridenson-Hayo, S.; Berggren, S.; Lassalle, A.; Tal, S.; Pigat, D.; Bölte, S.; Baron-Cohen, S.; Golan, O. Basic and complex emotion recognition in children with autism: Cross-cultural findings. Mol. Autism 2016, 7, 52.

- Corbett, B.A.; Carmean, V.; Ravizza, S.; Wendelken, C.; Henry, M.L.; Carter, C.; Rivera, S.M. A functional and structural study of emotion and face processing in children with autism. Psychiatry Res. 2009, 173, 196–205.

- Karpus, A.; Landowska, A.; Miler, J.; Pykala, M. Systematic Literature Review—Methods and Hints; Technical Report 1/2020; Gdansk University of Technology, Faculty of Electronics, Telecommunications and Informatics: Gdansk, Poland, 2020.

- Ackermann, P.; Kohlschein, C.; Bitsch, J.A.; Wehrle, K.; Jeschke, S. EEG-based automatic emotion recognition: Feature extraction, selection and classification methods. In Proceedings of the 2016 IEEE 18th International Conference on e-Health Networking, Applications and Services (Healthcom), Munich, Germany, 14–17 September 2016; pp. 1–6.

- Kowallik, A.E.; Schweinberger, S.R. Sensor-Based Technology for Social Information Processing in Autism: A Review. Sensors 2019, 19, 4787.

- Chaidi, I.; Drigas, A. Autism, Expression, and Understanding of Emotions: Literature Review. Int. J. Online Eng. (iJOE) 2020, 16, 94–111.

- Rashidan, M.A.; Sidek, S.N.; Yusof, H.M.; Khalid, M.; Dzulkarnain, A.A.A.; Ghazali, A.S.; Zabidi, S.A.M.; Sidique, F.A.A. Technology-Assisted Emotion Recognition for Autism Spectrum Disorder (ASD) Children: A Systematic Literature Review. IEEE Access 2021, 9, 33638–33653.

- Kitchenham, B.A.; Charters, S. Guidelines for Performing Systematic Literature Reviews in Software Engineering; Technical Report EBSE-2007-01; Keele University: Newcastle upon Tyne, UK, 2007.

- Turner, M.; Kitchenham, B.; Budgen, D.; Brereton, P. Lessons Learnt Undertaking a Large-Scale Systematic Literature Review. In Proceedings of the 12th International Conference on Evaluation and Assessment in Software Engineering, EASE’08, Bari, Italy, 26–27 June 2008; BCS Learning & Development Ltd.: Swindon, UK, 2008; pp. 110–118.

- PRISMA Transparent Reporting of Systematic Reviews and Meta-Analyses. 2017. Available online: http://www.prisma-statement.org/ (accessed on 17 February 2020).

- Gu, S.; Wang, F.; Patel, N.P.; Bourgeois, J.A.; Huang, J.H. A Model for Basic Emotions Using Observations of Behavior in Drosophila. Front. Psychol. 2019, 10, 781.

- Ekman, P. Expression and the nature of emotion. In Approaches to Emotion; Ekman, P., Scherer, K.R., Eds.; L. Erlbaum Associates: Hillsdale, NJ, USA, 1984; pp. 319–344.

- Ekman, P. Are there basic emotions? Psychol. Rev. 1992, 99, 550–553.

- Russell, J.A. A circumplex model of affect. J. Personal. Soc. Psychol. 1980, 39, 1161–1178.

- Javed, H.; Jeon, M.; Park, C.H. Adaptive Framework for Emotional Engagement in Child-Robot Interactions for Autism Interventions. In Proceedings of the 2018 15th International Conference on Ubiquitous Robots (UR), Honolulu, HI, USA, 26–30 June 2018; pp. 396–400.

- Kim, J.C.; Azzi, P.; Jeon, M.; Howard, A.M.; Park, C.H. Audio-based emotion estimation for interactive robotic therapy for children with autism spectrum disorder. In Proceedings of the 2017 14th International Conference on Ubiquitous Robots and Ambient Intelligence (URAI), Jeju, Korea, 28 June–1 July 2017; pp. 39–44.

- Liu, C.; Conn, K.; Sarkar, N.; Stone, W. Online Affect Detection and Adaptation in Robot Assisted Rehabilitation for Children with Autism. In Proceedings of the RO-MAN 2007–The 16th IEEE International Symposium on Robot and Human Interactive Communication, Jeju, Korea, 26–29 August 2007; pp. 588–593.

- Liu, C.; Conn, K.; Sarkar, N.; Stone, W. Online Affect Detection and Robot Behavior Adaptation for Intervention of Children with Autism. IEEE Trans. Robot. 2008, 24, 883–896.

- Liu, C.; Conn, K.; Sarkar, N.; Stone, W. Physiology-based affect recognition for computer-assisted intervention of children with Autism Spectrum Disorder. Int. J. Hum.-Comput. Stud. 2008, 66, 662–677.

- Liu, C.; Conn, K.; Sarkar, N.; Stone, W. Affect Recognition in Robot Assisted Rehabilitation of Children with Autism Spectrum Disorder. In Proceedings of the 2007 IEEE International Conference on Robotics and Automation, Rome, Italy, 10–14 April 2007; pp. 1755–1760.

- Rudovic, O.; Lee, J.; Dai, M.; Schuller, B.; Picard, R.W. Personalized machine learning for robot perception of affect and engagement in autism therapy. Sci. Robot. 2018, 3, eaao6760.

- Krupa, N.; Anantharam, K.; Sanker, M.; Datta, S.; Sagar, J.V. Recognition of emotions in autistic children using physiological signals. Health Technol. 2016, 6, 137–147.

- Akinloye, F.O.; Obe, O.; Boyinbode, O. Development of an affective-based e-healthcare system for autistic children. Sci. Afr. 2020, 9, e00514.

- Del Coco, M.; Leo, M.; Carcagnì, P.; Spagnolo, P.; Mazzeo, P.L.; Bernava, M.; Marino, F.; Pioggia, G.; Distante, C. A Computer Vision Based Approach for Understanding Emotional Involvements in Children with Autism Spectrum Disorders. In Proceedings of the 2017 IEEE International Conference on Computer Vision Workshops (ICCVW), Venice, Italy, 22–29 October 2017; pp. 1401–1407.

- Leo, M.; Del Coco, M.; Carcagni, P.; Distante, C.; Bernava, M.; Pioggia, G.; Palestra, G. Automatic Emotion Recognition in Robot-Children Interaction for ASD Treatment. In Proceedings of the 2015 IEEE International Conference on Computer Vision Workshop (ICCVW), Santiago, Chile, 7–13 December 2015; pp. 537–545.

- Santhoshkumar, R.; Kalaiselvi Geetha, M. Emotion Recognition System for Autism Children using Non-verbal Communication. Int. J. Innov. Technol. Explor. Eng. 2019, 8, 159–165.

- Pour, A.G.; Taheri, A.; Alemi, M.; Meghdari, A. Human–Robot Facial Expression Reciprocal Interaction Platform: Case Studies on Children with Autism. Int. J. Soc. Robot. 2018, 10, 179–198.

- Dapretto, M.; Davies, M.S.; Pfeifer, J.H.; Scott, A.A.; Sigman, M.; Bookheimer, S.Y.; Iacoboni, M. Understanding emotions in others: Mirror neuron dysfunction in children with autism spectrum disorders. Nat. Neurosci. 2006, 9, 28–30.

- Silva, V.; Soares, F.; Esteves, J. Mirroring and recognizing emotions through facial expressions for a RoboKind platform. In Proceedings of the 2017 IEEE 5th Portuguese Meeting on Bioengineering (ENBENG), Coimbra, Portugal, 16–18 February 2017; pp. 1–4.

- Guo, C.; Zhang, K.; Chen, J.; Xu, R.; Gao, L. Design and application of facial expression analysis system in empathy ability of children with autism spectrum disorder. In Proceedings of the 2021 16th Conference on Computer Science and Intelligence Systems (FedCSIS), Online, 2–5 September 2021; pp. 319–325.

- Silva, V.; Soares, F.; Esteves, J.S.; Santos, C.P.; Pereira, A.P. Fostering Emotion Recognition in Children with Autism Spectrum Disorder. Multimodal Technol. Interact. 2021, 5, 57.

- Rusli, N.; Sidek, S.N.; Yusof, H.M.; Ishak, N.I.; Khalid, M.; Dzulkarnain, A.A.A. Implementation of Wavelet Analysis on Thermal Images for Affective States Recognition of Children With Autism Spectrum Disorder. IEEE Access 2020, 8, 120818–120834.

- Landowska, A.; Robins, B. Robot Eye Perspective in Perceiving Facial Expressions in Interaction with Children with Autism. In Web, Artificial Intelligence and Network Applications; Barolli, L., Amato, F., Moscato, F., Enokido, T., Takizawa, M., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 1287–1297.

- Sukumaran, P.; Govardhanan, K. Towards voice based prediction and analysis of emotions in ASD children. J. Intell. Fuzzy Syst. 2021.

- Fadhil, T.Z.; Mandeel, A.R. Live Monitoring System for Recognizing Varied Emotions of Autistic Children. In Proceedings of the 2018 International Conference on Advanced Science and Engineering (ICOASE), Duhok, Iraq, 9–11 October 2018; pp. 151–155.

- Grossard, C.; Dapogny, A.; Cohen, D.; Bernheim, S.; Juillet, E.; Hamel, F.; Hun, S.; Bourgeois, J.; Pellerin, H.; Serret, S.; et al. Children with autism spectrum disorder produce more ambiguous and less socially meaningful facial expressions: An experimental study using random forest classifiers. Mol. Autism 2020, 11, 5.

- Rani, P. Emotion Detection of Autistic Children Using Image Processing. In Proceedings of the 2019 Fifth International Conference on Image Information Processing (ICIIP), Shimla, India, 15–17 November 2019; pp. 532–535.

- Hirokawa, M.; Funahashi, A.; Itoh, Y.; Suzuki, K. Design of affective robot-assisted activity for children with autism spectrum disorders. In Proceedings of the 23rd IEEE International Symposium on Robot and Human Interactive Communication, Edinburgh, UK, 25–29 August 2014; pp. 365–370.

- Li, J.; Bhat, A.; Barmaki, R. A Two-stage Multi-Modal Affect Analysis Framework for Children with Autism Spectrum Disorder. arXiv 2021, arXiv:2106.09199.

- Marinoiu, E.; Zanfir, M.; Olaru, V.; Sminchisescu, C. 3D Human Sensing, Action and Emotion Recognition in Robot Assisted Therapy of Children with Autism. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2158–2167.

- Sarabadani, S.; Schudlo, L.C.; Samadani, A.; Kushki, A. Physiological Detection of Affective States in Children with Autism Spectrum Disorder. IEEE Trans. Affect. Comput. 2018, 11, 588–600.

- Gay, V.; Leijdekkers, P.; Wong, F. Using sensors and facial expression recognition to personalize emotion learning for autistic children. Stud. Health Technol. Inform. 2013, 189, 71–76.

- Jarraya, S.K.; Masmoudi, M.; Hammami, M. A comparative study of Autistic Children Emotion recognition based on Spatio-Temporal and Deep analysis of facial expressions features during a Meltdown Crisis. Multimed. Tools Appl. 2020, 80, 83–125.

- Jarraya, S.K.; Masmoudi, M.; Hammami, M. Compound Emotion Recognition of Autistic Children during Meltdown Crisis Based on Deep Spatio-Temporal Analysis of Facial Geometric Features. IEEE Access 2020, 8, 69311–69326.

- Popescu, A.L.; Popescu, N. Machine Learning based Solution for Predicting the Affective State of Children with Autism. In Proceedings of the 2020 International Conference on e-Health and Bioengineering (EHB), Iasi, Romania, 29–30 October 2020; pp. 1–4.

- Banire, B.; Al Thani, D.; Makki, M.; Qaraqe, M.; Anand, K.; Connor, O.; Khowaja, K.; Mansoor, B. Attention Assessment: Evaluation of Facial Expressions of Children with Autism Spectrum Disorder. In Universal Access in Human-Computer Interaction. Multimodality and Assistive Environments; Antona, M., Stephanidis, C., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 32–48.

- Kuusikko, S.; Haapsamo, H.; Jansson-Verkasalo, E.; Hurtig, T.; Mattila, M.L.; Ebeling, H.; Jussila, K.; Bölte, S.; Moilanen, I. Emotion Recognition in Children and Adolescents with Autism Spectrum Disorders. J. Autism Dev. Disord. 2009, 39, 938–945.

- Bal, E.; Harden, E.; Lamb, D.; Van Hecke, A.V.; Denver, J.W.; Porges, S.W. Emotion Recognition in Children with Autism Spectrum Disorders: Relations to Eye Gaze and Autonomic State. J. Autism Dev. Disord. 2010, 40, 358–370.

- Tang, T.Y. Helping Neuro-Typical Individuals to “Read” the Emotion of Children with Autism Spectrum Disorder: An Internet-of-Things Approach. In Proceedings of the 15th International Conference on Interaction Design and Children, IDC’16, Manchester, UK, 21–24 June 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 666–671.

- Su, Q.; Chen, F.; Li, H.; Yan, N.; Wang, L. Multimodal Emotion Perception in Children with Autism Spectrum Disorder by Eye Tracking Study. In Proceedings of the 2018 IEEE-EMBS Conference on Biomedical Engineering and Sciences (IECBES), Sarawak, Malaysia, 3–6 December 2018; pp. 382–387.

- Pop, C.; Vanderborght, B.; David, D. Robot-Enhanced CBT for dysfunctional emotions in social situations for children with ASD. J. Evid.-Based Psychother. 2017, 17, 119–132.

- Golan, O.; Gordon, I.; Fichman, K.; Keinan, G. Specific Patterns of Emotion Recognition from Faces in Children with ASD: Results of a Cross-Modal Matching Paradigm. J. Autism Dev. Disord. 2018, 48, 844–852.

- Golan, O.; Sinai-Gavrilov, Y.; Baron-Cohen, S. The Cambridge Mindreading Face-Voice Battery for Children (CAM-C): Complex emotion recognition in children with and without autism spectrum conditions. Mol. Autism 2015, 6, 22.

- Buitelaar, J.K.; Van Der Wees, M.; Swaab-barneveld, H.; Van Der Gaag, R.J. Theory of mind and emotion-recognition functioning in autistic spectrum disorders and in psychiatric control and normal children. Dev. Psychopathol. 1999, 11, 39–58.

- Rieffe, C.; Terwogt, M.M.; Stockmann, L. Understanding Atypical Emotions Among Children with Autism. J. Autism Dev. Disord. 2000, 30, 195–203.

- Begeer, S.; Terwogt, M.M.; Rieffe, C.; Stegge, H.; Olthof, T.; Koot, H.M. Understanding emotional transfer in children with autism spectrum disorders. Autism 2010, 14, 629–640.

- Losh, M.; Capps, L. Understanding of emotional experience in autism: Insights from the personal accounts of high-functioning children with autism. Dev. Psychol. 2006, 42, 809–818.

- Capps, L.; Yirmiya, N.; Sigman, M. Understanding of Simple and Complex Emotions in Non-Retarded Children with Autism. J. Child Psychol. Psychiatry 1992, 33, 1169–1182.

- Syeda, U.H.; Zafar, Z.; Islam, Z.Z.; Tazwar, S.M.; Rasna, M.J.; Kise, K.; Ahad, M.A.R. Visual Face Scanning and Emotion Perception Analysis between Autistic and Typically Developing Children. In Proceedings of the 2017 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2017 ACM International Symposium on Wearable Computers, UbiComp ’17, Maui, HI, USA, 11–15 September 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 844–853.

- Schuller, B. What Affective Computing Reveals about Autistic Children’s Facial Expressions of Joy or Fear. Computer 2018, 51, 7–8.

- Monteiro, R.; Simoes, M.; Andrade, J.; Castelo-Branco, M. Processing of Facial Expressions in Autism: A Systematic Review of EEG/ERP Evidence. Rev. J. Autism Dev. Disord. 2017, 4, 255–276.

- Black, M.H.; Chen, N.T.; Iyer, K.K.; Lipp, O.V.; Bölte, S.; Falkmer, M.; Tan, T.; Girdler, S. Mechanisms of facial emotion recognition in autism spectrum disorders: Insights from eye tracking and electroencephalography. Neurosci. Biobehav. Rev. 2017, 80, 488–515.

- Di Palma, S.; Tonacci, A.; Narzisi, A.; Domenici, C.; Pioggia, G.; Muratori, F.; Billeci, L. Monitoring of autonomic response to sociocognitive tasks during treatment in children with Autism Spectrum Disorders by wearable technologies: A feasibility study. Comput. Biol. Med. 2017, 85, 143–152.

- Sorensen, T.; Zane, E.; Feng, T.; Narayanan, S.; Grossman, R. Cross-Modal Coordination of Face-Directed Gaze and Emotional Speech Production in School-Aged Children and Adolescents with ASD. Sci. Rep. 2019, 9, 18301.

- Haque, M.I.U.; Valles, D. A Facial Expression Recognition Approach Using DCNN for Autistic Children to Identify Emotions. In Proceedings of the 2018 IEEE 9th Annual Information Technology, Electronics and Mobile Communication Conference (IEMCON), Vancouver, BC, Canada, 1–3 November 2018; pp. 546–551.

| Database / Search Field | All Fields | Keywords | Topic | Title |
|---|---|---|---|---|
| ACM Digital Library | 126 | 8 | na | 21 |
| Elsevier Science Direct | 71,860 | 1278 | - | 106 (96) |
| IEEE Xplore | 272 | 5 | na | 44 |
| Scopus | 92,484 | 3960 | - | 561 |
| Springer Link | 46,086 | na | na | na |
| Web of Science | 10,615 | na | 8395 | 509 |
| PubMed | 6999 | na | 3349 | 324 |
| Total | 228,442 | 16,995 (Keywords and Topic combined) | | 1565 (1555) |
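
The totals row of the search-results table can be checked with simple arithmetic. The sketch below sums the per-database counts as they appear in the table. Reading 16,995 as the combined Keywords and Topic total, and attributing the bracketed values (96) and (1555) to the Title column, are inferences from the arithmetic rather than statements made in the table; the variable names are hypothetical.

```python
# Per-database hit counts copied from the search-results table.
all_fields = {
    "ACM Digital Library": 126,
    "Elsevier Science Direct": 71860,
    "IEEE Xplore": 272,
    "Scopus": 92484,
    "Springer Link": 46086,
    "Web of Science": 10615,
    "PubMed": 6999,
}
keywords = {"ACM Digital Library": 8, "Elsevier Science Direct": 1278,
            "IEEE Xplore": 5, "Scopus": 3960}
topic = {"Web of Science": 8395, "PubMed": 3349}
title = {"ACM Digital Library": 21, "Elsevier Science Direct": 106,
         "IEEE Xplore": 44, "Scopus": 561, "Web of Science": 509, "PubMed": 324}

total_all_fields = sum(all_fields.values())
total_keywords_and_topic = sum(keywords.values()) + sum(topic.values())
total_title = sum(title.values())
# Assumption: the bracketed 96 replaces Elsevier's 106 in the bracketed total.
total_title_bracketed = total_title - title["Elsevier Science Direct"] + 96

print(total_all_fields, total_keywords_and_topic, total_title, total_title_bracketed)
```

Under these assumptions the sums reproduce every figure in the totals row.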

| Emotion Group | Number of Papers | Emotions—Notions Used |
|---|---|---|
| attention-related | 6 | engagement (3): [19,22,23]; involvement (2): [21,24]; attention (1): [25]; bored (1): [25] |
| fear-related | 16 | fear (12): [17,26,27,28,29,30,31,32,33,34,35,36]; anxiety (3): [20,22,25]; trepidation (1): [37] |
| joy-related | 21 | joy (5): [21,26,27,28,29]; liking (3): [19,20,22]; happiness (12): [17,24,25,30,31,32,33,34,35,37,38,39]; smile (1): [40]; relaxation (1): [37] |

| Emotion | Number of Papers | Papers |
|---|---|---|
| joy | 21 | [17,19,20,21,22,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39] |
| anger | 12 | [17,27,28,29,30,31,32,33,35,36,38,39] |
| fear | 12 | [17,26,27,28,29,30,31,32,33,34,35,36] |
| disgust | 7 | [17,25,27,29,32,35,36] |
| sadness | 16 | [17,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39] |
| surprise | 7 | [17,27,29,31,32,33,35] |
| neutral | 15 | [21,24,25,27,28,29,30,31,32,33,35,36,38,39,41] |

| Emotions Recognized Together | Number of Papers | Papers |
|---|---|---|
| joy | 3 | [19,20,22] |
| joy, neutral | 2 | [21,24] |
| joy, sadness | 2 | [21,37] |
| joy, fear, sadness | 2 | [26,34] |
| joy, disgust, sadness, neutral | 1 | [25] |
| joy, anger, sadness, neutral | 2 | [38,39] |
| joy, anger, fear, sadness, neutral | 2 | [28,30] |
| joy, anger, fear, disgust, sadness, surprise | 1 | [17] |
| joy, anger, fear, sadness, surprise, neutral | 2 | [31,33] |
| joy, anger, fear, disgust, sadness, neutral | 1 | [36] |
| joy, anger, fear, disgust, sadness, surprise, neutral | 4 | [27,29,32,35] |

| Life Activity | Modality | Channel | Papers |
|---|---|---|---|
| movement | facial expressions | RGB video | [17,23,26,27,29,31,32,33,35,38,41] |
| | | depth video | [29,31] |
| | | images | [39] |
| | | EMG | [40] |
| | body posture | RGB video | [23,42] |
| | | depth video | [42] |
| | eye gaze | RGB video | [17] |
| | head movement | RGB video | [23,28] |
| | gestures | RGB video | [23,28,42] |
| | | depth video | [42] |
| | motion | RGB video | [17,23,42] |
| | | depth video | [17,42] |
| sound | prosody of speech | audio | [17,23,25,30,36,41] |
| | vocalization | audio | [17,23,25,36,41] |
| heart activity | heart rate | ECG | [19,20,21,22,43] |
| | | BVP | [21,23,24] |
| | HRV (heart rate variability) | ECG | [19,20,22,43] |
| | | BVP | [21,24] |
| muscle activities | muscle tension | EMG | [19,20,21,22] |
| perspiration | skin conductance | EDA | [19,20,21,22,23,24,25,37,43] |
| respiration | RESP intensity and period | chest size | [43] |
| thermal regulation | peripheral temperature | temperature | [20,21,23,34,43] |
| brain activity | neural activity | fMRI | [30] |

| Life Activity | Modality | Channel | Joy | Anger | Fear | Disgust | Sadness | Surprise | Neutral | Valence/Arousal |

|---|---|---|---|---|---|---|---|---|---|---|

| movement | facial expressions | RGB video | [17,26,27,29,31,32,33,35,38] | [17,27,29,31,32,33,35,38] | [17,26,27,29,31,32,33,35] | [17,27,29,32,35] | [17,26,27,29,31,32,33,35,38] | [17,27,29,31,32,33,35] | [27,29,31,32,33,35,38,41] | [17,23,35] |

| depth video | [29,31] | [29,31] | [29,31] | [29] | [29,31] | [29,31] | [29,31] | |||

| images | [39] | [39] | [39] | [39] | ||||||

| eye gaze | RGB video | [17] | [17] | [17] | [17] | [17] | [17] | [17] | ||

| head movement | RGB video | [28] | [28] | [28] | [28] | [28] | [23] | |||

| gestures | RGB video | [28] | [28] | [28] | [28] | [28] | [23,42] | |||

| depth video | [42] | |||||||||

| motion | RGB video | [17] | [17] | [17] | [17] | [17] | [17] | [17,23,42] | ||

| depth video | [17] | [17] | [17] | [17] | [17] | [17] | [17,42] | |||

| body posture | RGB video | [23,42] | ||||||||

| sound | prosody of speech | audio | [17,25,36] | [17,36] | [17,36] | [17,25,36] | [17,25,36] | [17] | [25,36] | [17,23] |

| vocalization | audio | [17,25,36] | [17,36] | [17,36] | [17,25,36] | [17,25,36] | [17] | [25,36] | [17,23] | |

| heart activity | heart rate | ECG | [19,20,21,22] | [21] | [43] | |||||

| BVP | [21,24] | [21,24] | [23] | |||||||

| HRV | ECG | [19,20,21,22] | [21] | [43] | ||||||

| BVP | [24] | [24] | ||||||||

| muscle activity | muscle tension | EMG | [19,20,21,22] | [21] | ||||||

| perspiration | skin conductance | EDA | [19,20,21,22,24,25,37] | [25] | [25,37] | [21,24,25] | [23,43] | |||

| thermal regulation | peripheral temperature | temperature | [20,21,34] | [34] | [34] | [21] | [23,34,43] | |||

| brain activity | neural activity | fMRI | [30] | [30] | [30] | [30] | [30] | |||

| respiration | RESP intensity and period | chest size | [43] |

| Life Activity | Modality | Channel | Attention-Related Emotions | Fear-Related Emotions | Joy-Related Emotions |

|---|---|---|---|---|---|

| movement | facial expressions | RGB video | [23] | [17,26,27,29,31,32,33,35] | [17,26,27,29,31,32,33,35,38] |

| depth video | [29,31] | [29,31] | |||

| images | [39] | ||||

| EMG | [40] | ||||

| body posture | RGB video | [23] | |||

| eye gaze | RGB video | [17] | [17] | ||

| head movement | RGB video | [23] | [28] | [28] | |

| gestures | RGB video | [23] | [28] | [28] | |

| motion | RGB video | [23] | [17] | [17] | |

| depth video | [17] | [17] | |||

| sound | prosody of speech | audio | [23,25] | [17,25,36] | [17,25,36] |

| vocalization | audio | [23,25] | [17,25,36] | [17,25,36] | |

| heart activity | heart rate | ECG | [20,21,22] | [20,22] | [19,20,21,22] |

| BVP | [21,23,24] | [21,24] | |||

| HRV | ECG | [20,21,22] | [20,22] | [19,20,21,22] | |

| BVP | [24] | [24] | |||

| muscle activities | muscle tension | EMG | [20,21,22] | [20,22] | [19,20,21,22] |

| perspiration | skin conductance | EDA | [20,21,22,23,24,25] | [20,22,25,37] | [19,20,21,22,24,25,37] |

| thermal regulation | peripheral temperature | temperature | [20,21,23] | [20,34] | [20,21,34] |

| brain activity | neural activity | fMRI | [30] | [30] |

| Emotions Recognized | NN | FC-M | SVM | RF | HMM | k-NN | LDA | FL |

|---|---|---|---|---|---|---|---|---|

| joy | [26,32,36,39] | [29] | [19,20,21,22,24,27,28,31,33,39] | [28,38] | [17] | [34] | [25] | |

| anger | [32,36,39] | [29] | [28,31,33,39] | [38] | [17] | |||

| fear | [26,32,36] | [29] | [27,28,31,33] | [28] | [17] | [34] | ||

| disgust | [32,36] | [29] | [27] | [17] | [25] | |||

| sadness | [26,32,36,39] | [29] | [27,28,31,33,39] | [28,38] | [17] | [34] | [25] | |

| surprise | [32] | [29] | [27,31,33] | [17] | ||||

| neutral | [32,36,39,41] | [29] | [21,24,27,28,31,33,39] | [28,38] | [25] | |||

| valence and arousal | [23,42] | [43] | [17] | [34,43] | [43] | |||

| attention-related emotions | [23] | [20,21,22,24] | [25] | |||||

| fear-related emotions | [26,32,36] | [29] | [20,22,27,28,31,33] | [28] | [17] | [34] | [25] | |

| joy-related emotions | [26,32,36,39,40] | [29] | [19,20,21,22,24,27,28,31,33,39] | [28,38] | [17] | [34] | [25] |

| Unimodal/ Multimodal | NN | FC-M | SVM | RF | HMM | k-NN | LDA | FL |

|---|---|---|---|---|---|---|---|---|

| single channel only | [26,32,36,39,40] | [29] | [27,31,33,39] | [38] | [34] | |||

| multiple channels separately analyzed | [23,41,42] | [43] | [17] | [43] | [43] | |||

| early fusion | [23] | [19,20,21,22,24,28] | [28] | [43] | [43] | [25] | ||

| late fusion | [43] | [43] | [43] | |||||

| hybrid fusion | [43] | [43] |

| Unimodal/Multimodal | Joy | Anger | Fear | Disgust | Sadness | Surprise | Neutral | Valence and Arousal |
|---|---|---|---|---|---|---|---|---|
| single channel only | [26,27,29,30,31,32,33,34,35,36,37,38,39] | [27,29,30,31,32,33,35,36,38,39] | [26,27,29,30,31,32,33,34,35,36] | [26,27,29,31,32,35,36] | [27,29,30,31,32,33,34,35,36,37,38,39] | [27,29,31,32,33,35] | [30,32,33,35,36,38,39] | [34,35] |
| multiple channels separately analyzed | [17] | [17] | [17] | [17] | [17] | [17] | [41] | [17,23,42,43] |
| early fusion | [19,20,21,22,24,25,28] | [21,28] | [28] | [25] | [25,28] | | [21,24,25,28] | [23,43] |
| late fusion | | | | | | | | [43] |
| hybrid fusion | | | | | | | | [43] |

| Unimodal/Multimodal | Attention-Related Emotions | Fear-Related Emotions | Joy-Related Emotions |
|---|---|---|---|
| single channel only | | [26,27,29,30,31,32,33,34,35,36,37] | [26,27,29,30,31,32,33,34,35,36,37,38,39,40] |
| multiple channels separately analyzed | [23] | [17] | [17] |
| early fusion | [20,21,22,23,24,25] | [20,22,25,28] | [19,20,21,22,24,25,28] |

| Life Activity | Modality | Channel | Single Channel Only | Multiple Channels Separately Analyzed | Early Fusion | Late Fusion | Hybrid Fusion |
|---|---|---|---|---|---|---|---|
| movement | facial expressions | RGB video | [26,27,29,31,32,33,35,38] | [17,23,41] | [23] | | |
| movement | facial expressions | depth video | [29,31] | | | | |
| movement | facial expressions | images | [39] | | | | |
| movement | facial expressions | EMG | [40] | | | | |
| movement | body posture | RGB video | | [23,42] | [23] | | |
| movement | body posture | depth video | | [42] | | | |
| movement | eye gaze | RGB video | | [17] | | | |
| movement | head movement | RGB video | | [23] | [23,28] | | |
| movement | gestures | RGB video | | [23,42] | [23,28] | | |
| movement | gestures | depth video | | [42] | | | |
| movement | motion | RGB video | | [17,23,42] | [23] | | |
| movement | motion | depth video | | [17,42] | | | |
| sound | prosody of speech | audio | [36] | [17,23,41] | [23,25] | | |
| sound | vocalization | audio | [36] | [17,23,41] | [23,25] | | |
| heart activity | heart rate | ECG | | [22,43] | [19,20,21,43] | [43] | [43] |
| heart activity | heart rate | BVP | | [23] | [21,23,24] | | |
| heart activity | HRV | ECG | | [22,43] | [19,20,21,43] | [43] | [43] |
| heart activity | HRV | BVP | | | [24] | | |
| muscle activity | muscle tension | EMG | | [22] | [19,20,21] | | |
| perspiration | skin conductance | EDA | [37] | [22,23,43] | [19,20,21,23,24,25,43] | [43] | [43] |
| respiration | RESP intensity and period | chest size | | [43] | [43] | [43] | [43] |
| thermal regulation | peripheral temperature | temperature | [34] | [23,43] | [20,21,23,43] | [43] | [43] |
| brain activity | neural activity | fMRI | [30] | | | | |

| Modalities | Number of Papers | Papers |

|---|---|---|

| HRV, skin conductance | 1 | [24] |

| head movement, gestures | 1 | [28] |

| prosody of speech, vocalization, skin conductance | 1 | [25] |

| facial expressions, prosody of speech, vocalization | 1 | [41] |

| body posture, gestures, motion | 1 | [42] |

| heart rate, HRV, muscle tension, skin conductance | 4 | [19,20,21,22] |

| heart rate, HRV, respiration, skin conductance, peripheral temperature | 1 | [43] |

| heart rate, HRV, skin conductance, RESP intensity and period | 1 | [43] |

| facial expressions, eye gaze, motion, prosody of speech, vocalization | 1 | [17] |

| facial expressions, body posture, head movement, gestures, motion, prosody of speech, vocalization, heart rate, skin conductance, temperature | 1 | [23] |
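As a rough illustration of how pairwise co-occurrence counts can be derived from modality combinations such as those listed in the table above, the following Python sketch counts, for every pair of modalities, the number of papers that combine them. The names `COMBINATIONS`, `pair_counts`, and `count` are hypothetical and introduced here only for illustration. The labels are copied verbatim from the table, so "respiration" and "RESP intensity and period" (and likewise "temperature" and "peripheral temperature") are treated as distinct strings. The counting rule used in the review is not stated in this section, so the results of this sketch need not equal the published co-occurrence values.

```python
from collections import Counter
from itertools import combinations

# (modalities, number of papers), copied from the table above.
# Assumption: each row contributes its paper count to every pair it contains.
COMBINATIONS = [
    (("HRV", "skin conductance"), 1),
    (("head movement", "gestures"), 1),
    (("prosody of speech", "vocalization", "skin conductance"), 1),
    (("facial expressions", "prosody of speech", "vocalization"), 1),
    (("body posture", "gestures", "motion"), 1),
    (("heart rate", "HRV", "muscle tension", "skin conductance"), 4),
    (("heart rate", "HRV", "respiration", "skin conductance",
      "peripheral temperature"), 1),
    (("heart rate", "HRV", "skin conductance",
      "RESP intensity and period"), 1),
    (("facial expressions", "eye gaze", "motion", "prosody of speech",
      "vocalization"), 1),
    (("facial expressions", "body posture", "head movement", "gestures",
      "motion", "prosody of speech", "vocalization", "heart rate",
      "skin conductance", "temperature"), 1),
]


def pair_counts(combos):
    """Return a Counter mapping each sorted modality pair to a paper count."""
    counts = Counter()
    for modalities, n_papers in combos:
        # Sorting gives every unordered pair a single canonical key.
        for a, b in combinations(sorted(set(modalities)), 2):
            counts[(a, b)] += n_papers
    return counts


COUNTS = pair_counts(COMBINATIONS)


def count(a, b):
    """Look up the count for a pair of modalities, in either order."""
    return COUNTS[tuple(sorted((a, b)))]


if __name__ == "__main__":
    print(count("HRV", "skin conductance"))
    print(count("head movement", "gestures"))
```

Keying the counter on sorted pairs keeps the result symmetric, which mirrors the upper-triangular layout used for co-occurrence tables.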

| | bp | eg | hm | g | m | pos | v | hr | HRV | mt | sc | RESPiap | t |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| fe | 2 | 1 | 1 | 1 | 2 | 3 | 3 | 1 | 0 | 0 | 1 | 0 | 1 |
| bp | | 0 | 1 | 2 | 2 | 1 | 1 | 1 | 0 | 0 | 1 | 0 | 1 |
| eg | | | 0 | 0 | 1 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |
| hm | | | | 2 | 1 | 1 | 1 | 1 | 0 | 0 | 1 | 0 | 1 |
| g | | | | | 2 | 1 | 1 | 1 | 0 | 0 | 1 | 0 | 1 |
| m | | | | | | 2 | 2 | 1 | 0 | 0 | 1 | 0 | 1 |
| pos | | | | | | | 3 | 1 | 0 | 0 | 2 | 0 | 1 |
| v | | | | | | | | 1 | 0 | 0 | 2 | 0 | 1 |
| hr | | | | | | | | | 6 | 4 | 6 | 2 | 2 |
| HRV | | | | | | | | | | 4 | 7 | 2 | 1 |
| mt | | | | | | | | | | | 4 | 0 | 0 |
| sc | | | | | | | | | | | | 2 | 2 |
| RESPiap | | | | | | | | | | | | | 1 |

| | Depth Video | EMG | Audio | ECG | BVP | EDA | Temp. | Chest Size |
|---|---|---|---|---|---|---|---|---|
| RGB video | 1 | 0 | 3 | 0 | 1 | 1 | 1 | 0 |
| depth video | | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| EMG | | | 0 | 4 | 1 | 4 | 2 | 0 |
| audio | | | | 0 | 1 | 2 | 1 | 0 |
| ECG | | | | | 1 | 6 | 4 | 1 |
| BVP | | | | | | 3 | 2 | 0 |
| EDA | | | | | | | 5 | 1 |
| temp. | | | | | | | | 1 |

| Notions Used | Number of Papers | Number of Occurrences |

|---|---|---|

| autistic child\children | 32 | 341 |

| child\children with autism | 49 | 474 |

| child\children with ASD | 35 | 642 |

| child\children with ASC | 2 | 29 |

| child\children on autism spectrum; child\children with autism spectrum | 41 | 117 |

| autism child\children | 4 | 13 |

| normal child\children | 15 | 52 |

| typically developing child\children | 22 | 103 |

| neurotypical child\children | 2 | 5 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Landowska, A.; Karpus, A.; Zawadzka, T.; Robins, B.; Erol Barkana, D.; Kose, H.; Zorcec, T.; Cummins, N. Automatic Emotion Recognition in Children with Autism: A Systematic Literature Review. Sensors 2022, 22, 1649. https://doi.org/10.3390/s22041649
