A Methodology for Extracting Power-Efficient and Contrast Enhanced RGB Images

Abstract

1. Introduction

2. Materials and Methods

2.1. Histogram

- Brightness Preserving Histogram Equalization with Maximum Entropy;

- Brightness Preserving Bihistogram Equalization (BPHE);

- Bihistogram Equalization (BBHE);

- Dualistic Subimage Histogram Equalization (DSHE);

- Recursive Mean-Separate Histogram Equalization;

- Minimum Within-Class Variance Multi-Histogram Equalization (MWCVMHE);

- Minimum Middle Level Squared Error Multi-Histogram Equalization (MMLSEMHE).

2.2. Proposed Approach

2.2.1. Description

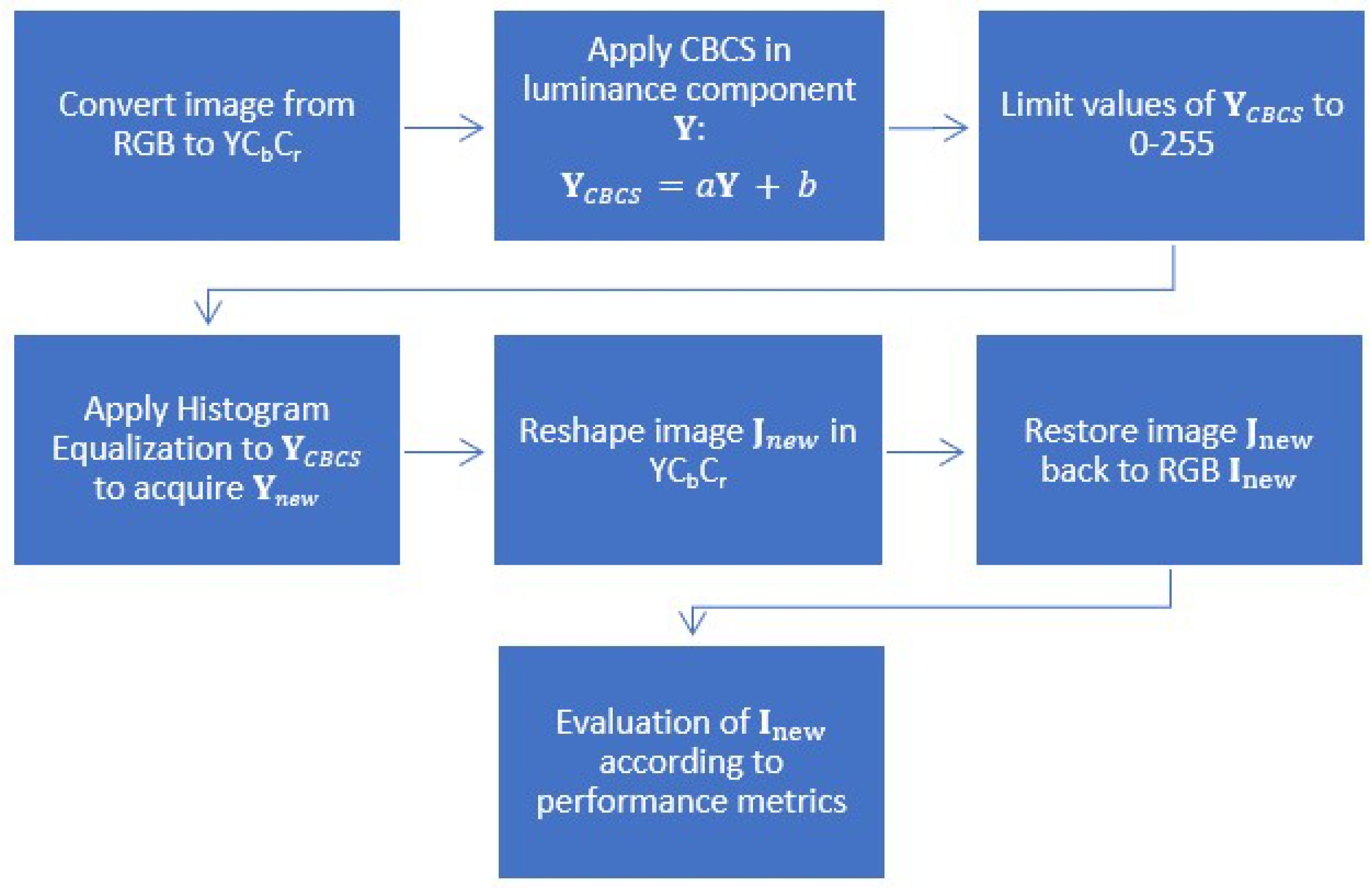

2.2.2. The Main Steps of the Algorithm

- Reads the RGB input image.

- Converts the image from RGB to YCbCr and retrieves the three components $Y$, $C_b$, and $C_r$.

- Applies the Concurrent Brightness & Contrast Scaling (CBCS) technique to the intensity component only, not to the color components. The technique is a linear function of the form $Y' = aY + b$, where $Y'$ holds the intensity pixel values of the transformed image and $Y$ denotes the intensity of the original image. The goal is to find values of $a$ and $b$ that reduce the image energy without compromising its quality. Parameter $a$ adjusts the contrast, and parameter $b$ adjusts the brightness.

- Checks whether the values resulting from the previous step lie between $0$ and $255$. Values below 0 are set to 0, and values above 255 are set to 255.

- Additionally subjects the $Y$ component (after the previous transformation) to a global histogram equalization to enhance its contrast.

- Replaces the values of the $Y$ component with the ones that emerged from the previous step, reassembles the image components in YCbCr, and converts the processed image back to RGB.

- Estimates the energy consumed by the new image, which is expected to be lower than that of the original, and evaluates the quality of the resulting image through the Peak Signal-to-Noise Ratio (PSNR), Mean Squared Error (MSE), and Structural Similarity Index (SSIM) performance metrics.
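The image-processing steps above (conversion, linear scaling, clipping, equalization, and conversion back) can be sketched in a few lines of NumPy. This is only an illustrative sketch, not the authors' implementation: the function names (`rgb_to_ycbcr`, `ycbcr_to_rgb`, `equalize`, `he_cbcs_sketch`) are hypothetical, the full-range BT.601 (JPEG-style) conversion is an assumed YCbCr variant, and the values of `a` and `b` are left to the caller because the parameter search is not reproduced here.

```python
import numpy as np

def rgb_to_ycbcr(rgb):
    """Split an RGB uint8 image into Y, Cb, Cr (assumed full-range BT.601)."""
    rgb = rgb.astype(np.float64)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128.0 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128.0 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr

def ycbcr_to_rgb(y, cb, cr):
    """Inverse of rgb_to_ycbcr; rounds and clips back to uint8."""
    r = y + 1.402 * (cr - 128.0)
    g = y - 0.344136 * (cb - 128.0) - 0.714136 * (cr - 128.0)
    b = y + 1.772 * (cb - 128.0)
    rgb = np.stack([r, g, b], axis=-1)
    return np.clip(np.rint(rgb), 0, 255).astype(np.uint8)

def equalize(channel):
    """Global histogram equalization of a uint8 channel via its CDF."""
    hist = np.bincount(channel.ravel(), minlength=256)
    cdf = np.cumsum(hist)
    cdf_min = cdf[cdf > 0][0]          # CDF value at the darkest level present
    denom = cdf[-1] - cdf_min
    if denom == 0:                     # constant channel: nothing to spread
        return channel.copy()
    lut = np.rint((cdf - cdf_min) / denom * 255.0)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[channel]

def he_cbcs_sketch(rgb, a, b):
    """Scale Y linearly (a: contrast, b: brightness), clip, equalize, rebuild RGB."""
    y, cb, cr = rgb_to_ycbcr(rgb)
    y_scaled = a * y + b                                   # linear transform of Y only
    y_clipped = np.clip(np.rint(y_scaled), 0, 255).astype(np.uint8)
    y_eq = equalize(y_clipped).astype(np.float64)          # global equalization
    return ycbcr_to_rgb(y_eq, cb, cr)                      # Cb and Cr are untouched
```

A call such as `he_cbcs_sketch(image, a=0.8, b=-10)` returns a `uint8` RGB array of the same shape as `image`; the parameter values in this call are arbitrary placeholders, not values from the paper.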

2.2.3. Histogram Equalization of $Y$ with and without Power Constraint

Algorithm 1. HE-CBCS.

2.3. Image Quality Evaluation Metrics
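Two of the quality metrics named in the algorithm, MSE and PSNR, follow directly from their standard definitions. The sketch below is illustrative: the function names `mse` and `psnr` are hypothetical, and it assumes 8-bit images, so the peak value is 255. SSIM is omitted because it requires a windowed computation; an existing implementation such as `skimage.metrics.structural_similarity` in scikit-image could be used for it.

```python
import numpy as np

def mse(reference, processed):
    """Mean squared error between two images of identical shape."""
    ref = reference.astype(np.float64)   # cast first to avoid uint8 wrap-around
    out = processed.astype(np.float64)
    return float(np.mean((ref - out) ** 2))

def psnr(reference, processed, peak=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    err = mse(reference, processed)
    if err == 0.0:
        return float("inf")
    return float(10.0 * np.log10(peak ** 2 / err))
```

A lower MSE and a higher PSNR both indicate that the processed image is closer to the original.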

2.4. Power Rating Metrics

3. Results

3.1. Experiments Environment and Data

3.2. Evaluation

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Linear | Nonlinear |
|---|---|
| Contrast stretching | Logarithmic function |
| | Exponent function |
| Thresholding | Power law: reinforce dark areas; suppress light areas; square law–exponent function; cubic law–logarithmic function |
| Multi-level thresholding | - |

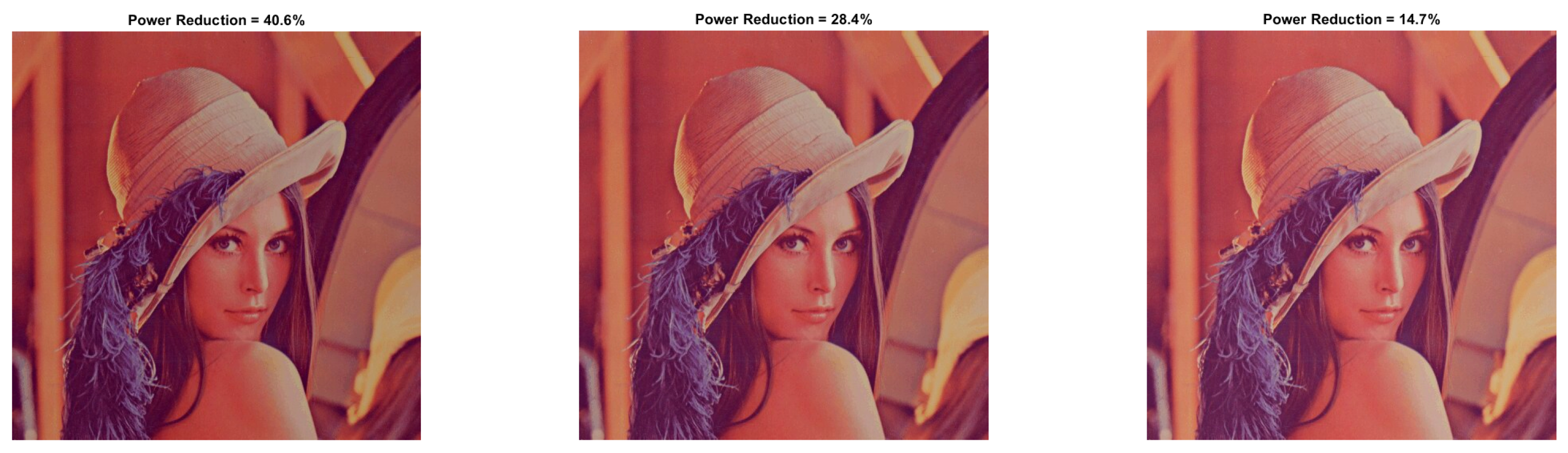

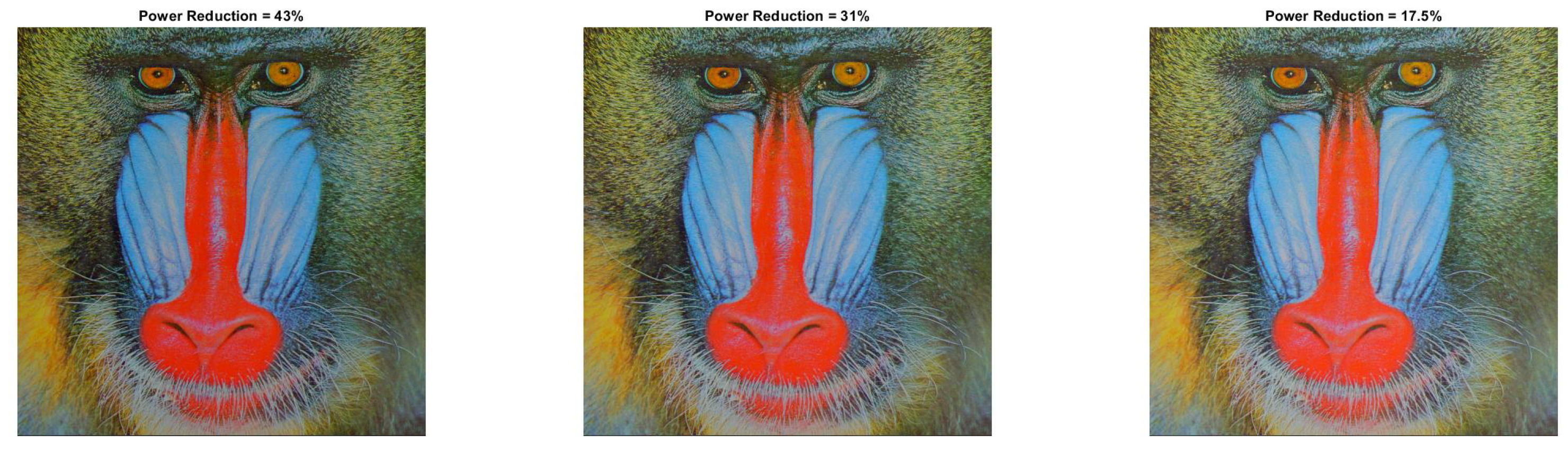

| 66.26 | 57.42 | 47.16 | |

| 0.828 | 0.893 | 0.932 | |

| 12.15 | 13.75 | 15.59 |

| 40.59 | 28.37 | 14.75 | |

| 0.959 | 0.980 | 0.986 | |

| 17.55 | 20.59 | 23.94 |

| −164.07 | −146.75 | −126.29 | −103.37 | −78.71 | −50.99 | −22.12 | 6.39 | 30.22 | 49.52 | |

| 0.383 | 0.440 | 0.461 | 0.493 | 0.546 | 0.586 | 0.592 | 0.562 | 0.513 | 0.450 | |

| 7.88 | 8.58 | 9.38 | 10.30 | 11.33 | 12.33 | 12.82 | 12.37 | 11.25 | 10.02 | |

| −107.06 | −82.69 | −57.47 | −31.19 | −4.79 | 19.55 | 40 | 56.35 | 70.42 | 81.61 | |

| 0.634 | 0.670 | 0.691 | 0.702 | 0.687 | 0.650 | 0.586 | 0.509 | 0.428 | 0.357 | |

| 10.81 | 12.07 | 13.32 | 14.29 | 14.34 | 13.26 | 11.78 | 10.46 | 9.36 | 8.47 | |

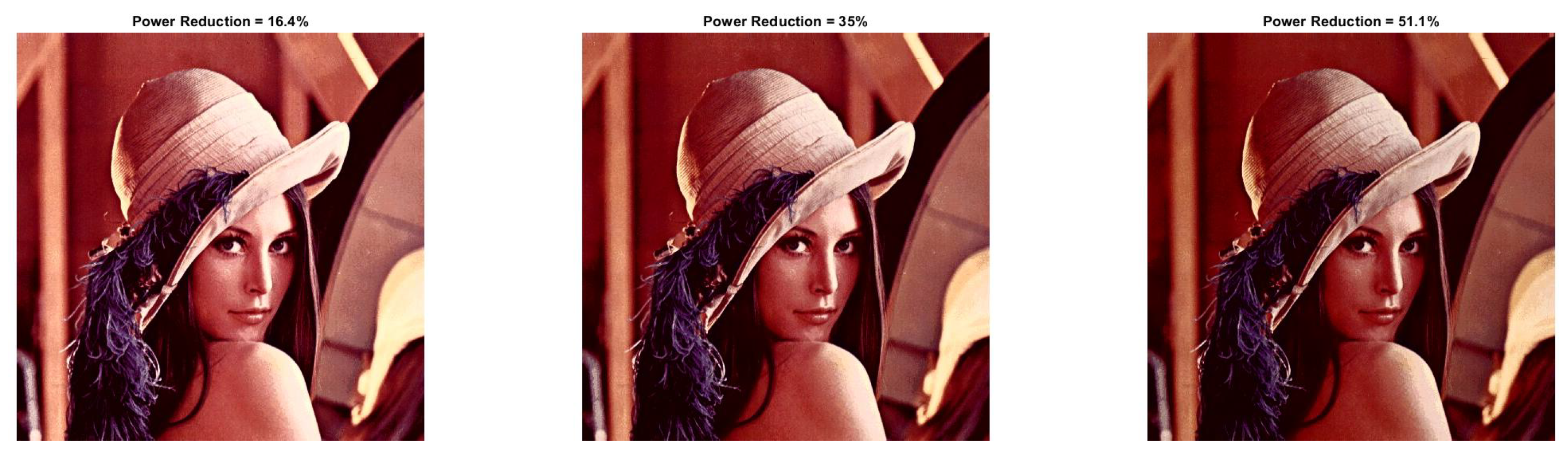

| −5.14 | 16.40 | 35.03 | 51.05 | 64.97 | 82.92 | 88.08 | 91.65 | 94.09 | 95.42 | |

| 0.834 | 0.786 | 0.722 | 0.644 | 0.560 | 0.481 | 0.408 | 0.344 | 0.289 | 0.244 | |

| 17.24 | 15.85 | 13.95 | 12.23 | 10.78 | 9.64 | 8.75 | 8.07 | 7.54 | 7.14 |

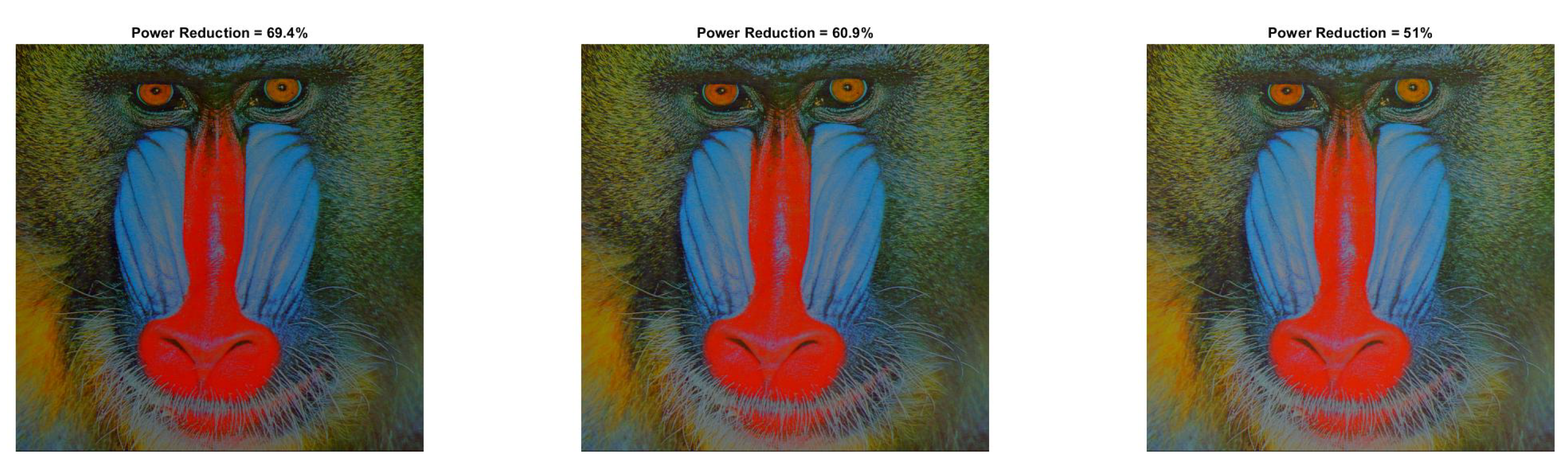

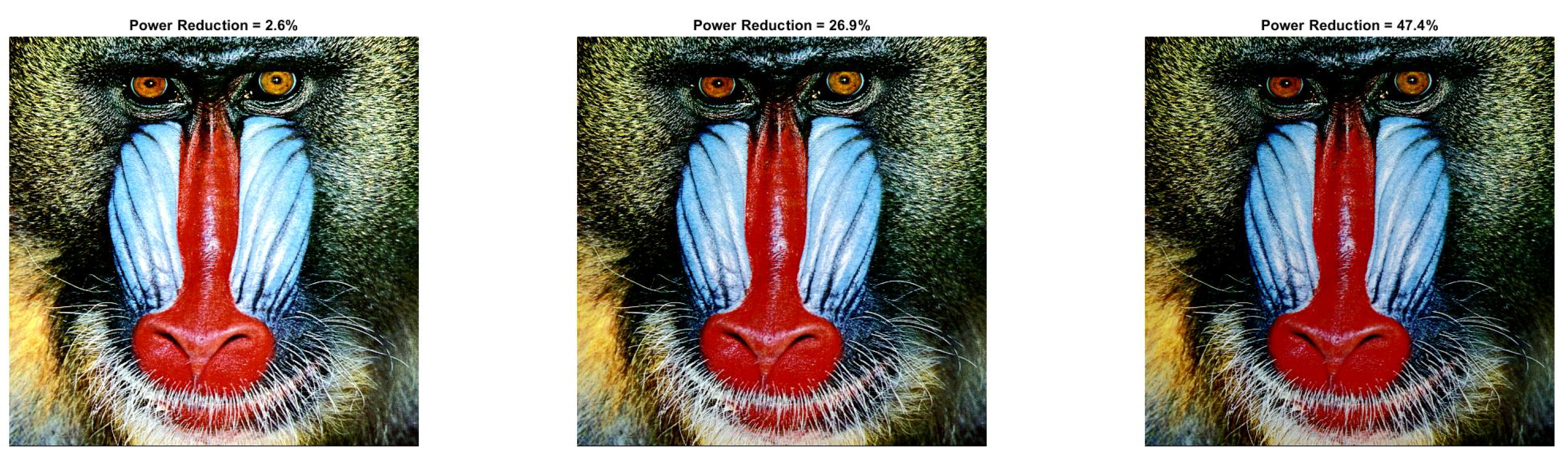

| 69.45 | 60.94 | 51.04 | |

| 0.730 | 0.805 | 0.856 | |

| 11.78 | 13.31 | 15.12 |

| 43.02 | 30.98 | 17.54 | |

| 0.928 | 0.956 | 0.967 | |

| 17.09 | 20.12 | 23.93 |

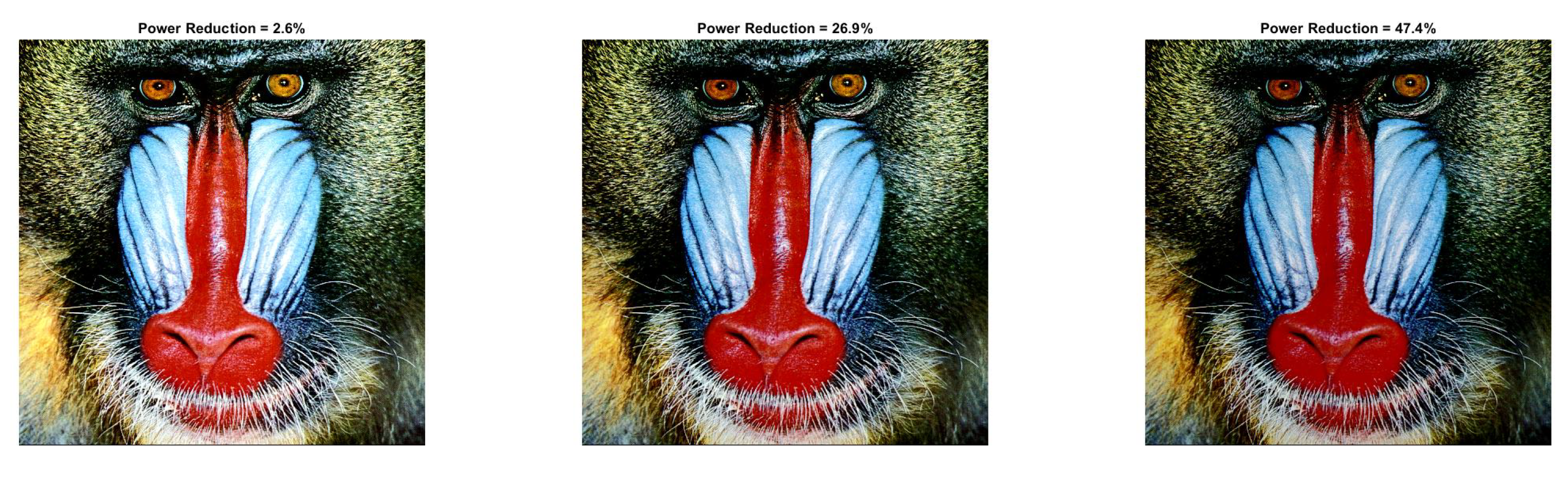

| 73.53 | 66.15 | 57.61 | |

| 0.747 | 0.813 | 0.853 | |

| 10.52 | 11.76 | 13.14 |

| 46.27 | 35.84 | 24.33 | |

| 0.932 | 0.949 | 0.954 | |

| 15.71 | 17.99 | 20.38 |

| −178.89 | −160.38 | −138.18 | −112.14 | −84.79 | −56.67 | −28.41 | −1.16 | 26.20 | 52.88 | |

| 0.416 | 0.456 | 0.495 | 0.536 | 0.560 | 0.556 | 0.523 | 0.479 | 0.428 | 0.358 | |

| 7.44 | 8.16 | 9.06 | 10.17 | 11.24 | 11.86 | 11.75 | 11.16 | 10.35 | 9.38 | |

| −112.55 | −86.69 | −60.85 | −33.42 | −5.83 | 21.36 | 46.41 | 66.59 | 81.07 | 90.13 | |

| 0.612 | 0.641 | 0.649 | 0.634 | 0.602 | 0.549 | 0.474 | 0.386 | 0.292 | 0.207 | |

| 10.63 | 11.92 | 12.95 | 13.30 | 12.83 | 11.88 | 10.65 | 9.38 | 8.23 | 7.32 | |

| 2.63 | 26.95 | 47.36 | 63.62 | 76.34 | 85.53 | 91.29 | 94.55 | 96.33 | 97.27 | |

| 0.742 | 0.678 | 0.596 | 0.502 | 0.403 | 0.307 | 0.224 | 0.159 | 0.114 | 0.085 | |

| 15.30 | 13.71 | 11.97 | 10.44 | 9.16 | 8.13 | 7.36 | 6.81 | 6.42 | 6.14 |

| −100.17 | −83.08 | −63.47 | −44.05 | −26.61 | −11.24 | 2.24 | 13.48 | 21.81 | 27.34 | |

| 0.663 | 0.691 | 0.712 | 0.715 | 0.689 | 0.634 | 0.562 | 0.488 | 0.418 | 0.355 | |

| 9.53 | 10.86 | 12.63 | 14.43 | 15.30 | 14.81 | 13.53 | 12.12 | 10.86 | 9.91 | |

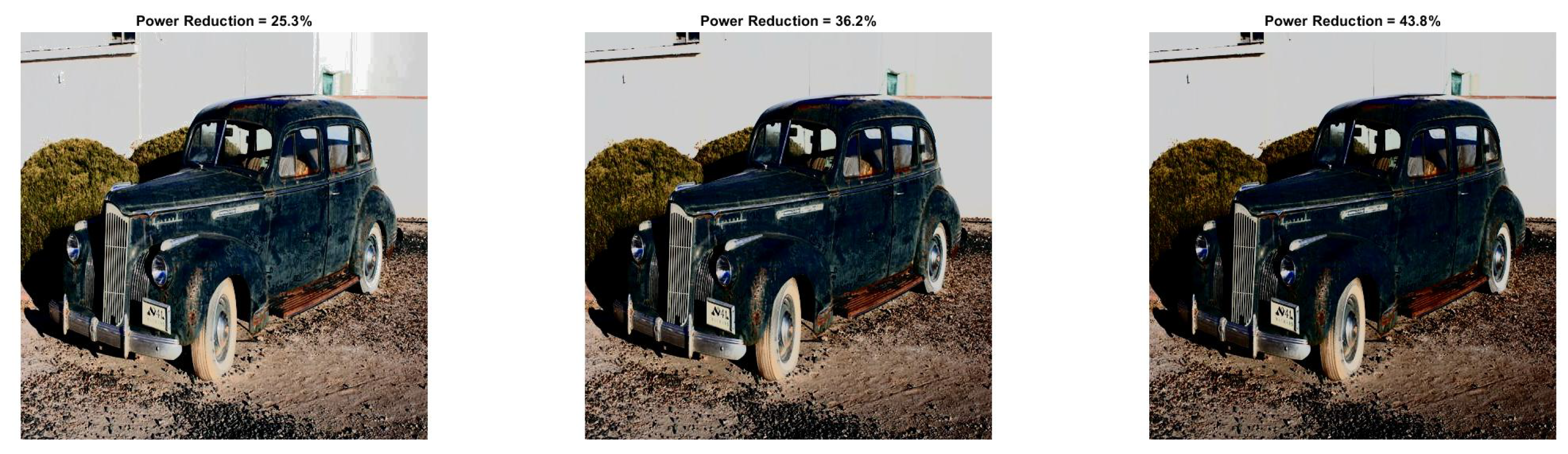

| −46.05 | −31.09 | −16.59 | −3.18 | 8.42 | 17.89 | 36.75 | 50.14 | 54.22 | 57.45 | |

| 0.796 | 0.791 | 0.760 | 0.702 | 0.624 | 0.538 | 0.433 | 0.386 | 0.330 | 0.294 | |

| 15.09 | 16.90 | 17.40 | 16.16 | 14.29 | 12.56 | 11.12 | 9.97 | 9.28 | 8.83 | |

| 25.34 | 36.21 | 43.78 | 49.72 | 54.39 | 58.15 | 62.21 | 67.58 | 74.47 | 81.52 | |

| 0.766 | 0.685 | 0.596 | 0.506 | 0.425 | 0.360 | 0.313 | 0.279 | 0.249 | 0.221 | |

| 16.54 | 14.26 | 12.52 | 11.18 | 10.16 | 9.42 | 8.87 | 8.40 | 7.89 | 7.34 |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Dritsas, E.; Trigka, M. A Methodology for Extracting Power-Efficient and Contrast Enhanced RGB Images. Sensors 2022, 22, 1461. https://doi.org/10.3390/s22041461
