Development of Multiple UAV Collaborative Driving Systems for Improving Field Phenotyping

Abstract

1. Introduction

2. Materials and Methods

2.1. System Architecture and Principles

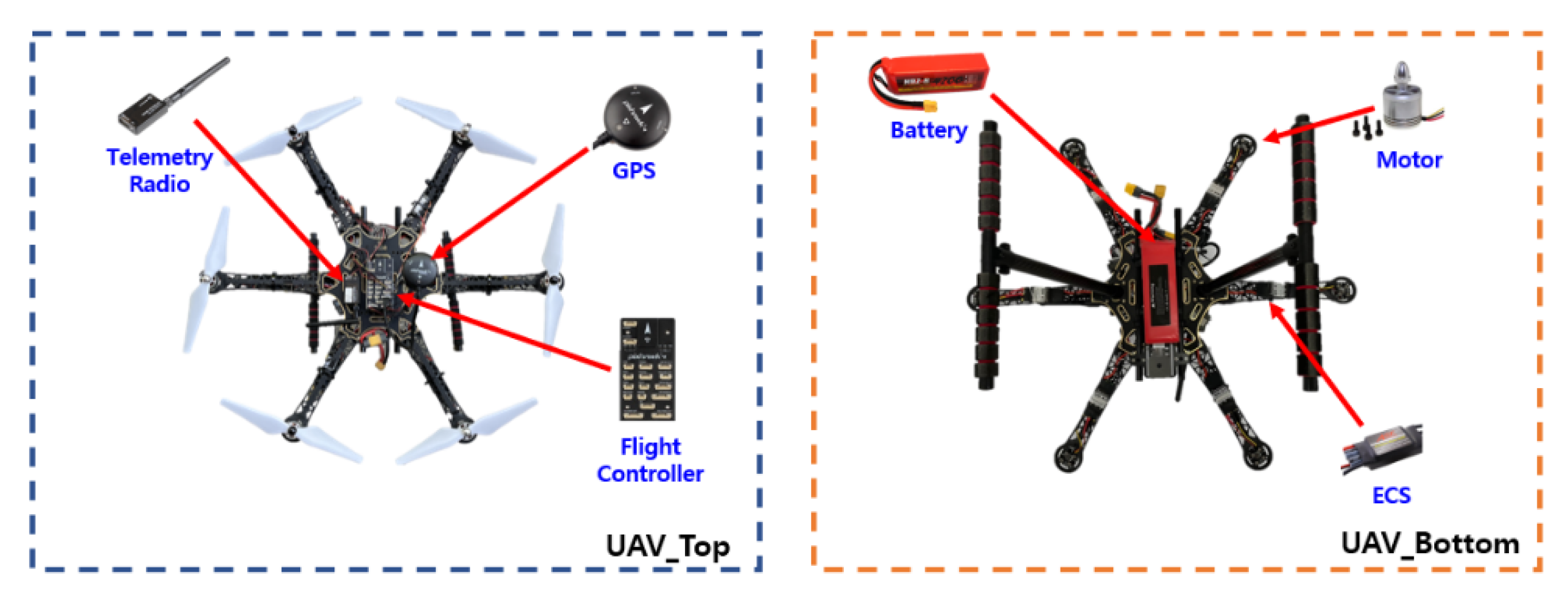

2.1.1. UAV

2.1.2. MAVLink Router

2.1.3. Ground Control Station (GCS)

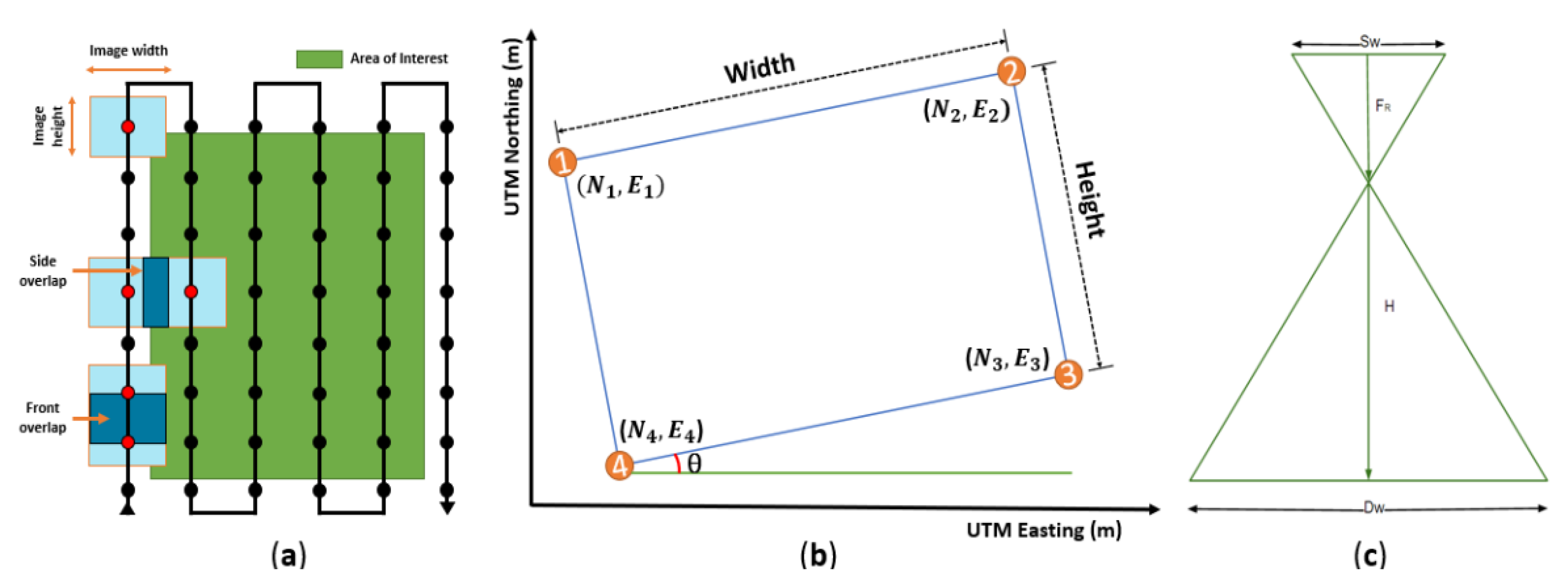

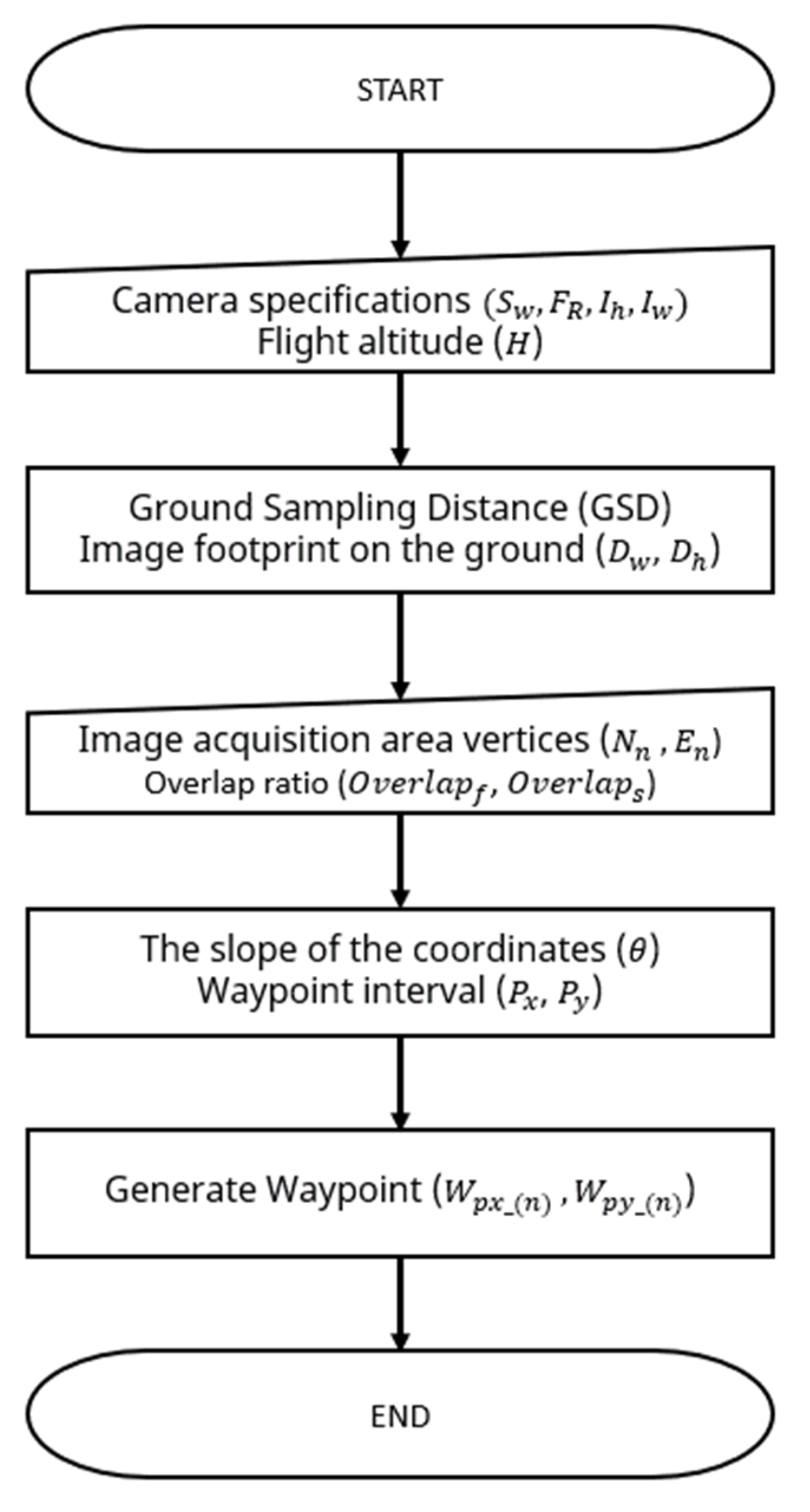

2.2. Multiple Path Generation Algorithms

2.3. Multiple UAV Collaborative Driving Algorithm

2.3.1. Driving Waypoint Update

2.3.2. Collision Avoidance

2.4. Flight Simulation

2.4.1. Flight Simulation Configuration

2.4.2. Check and Optimize the Developed Algorithm

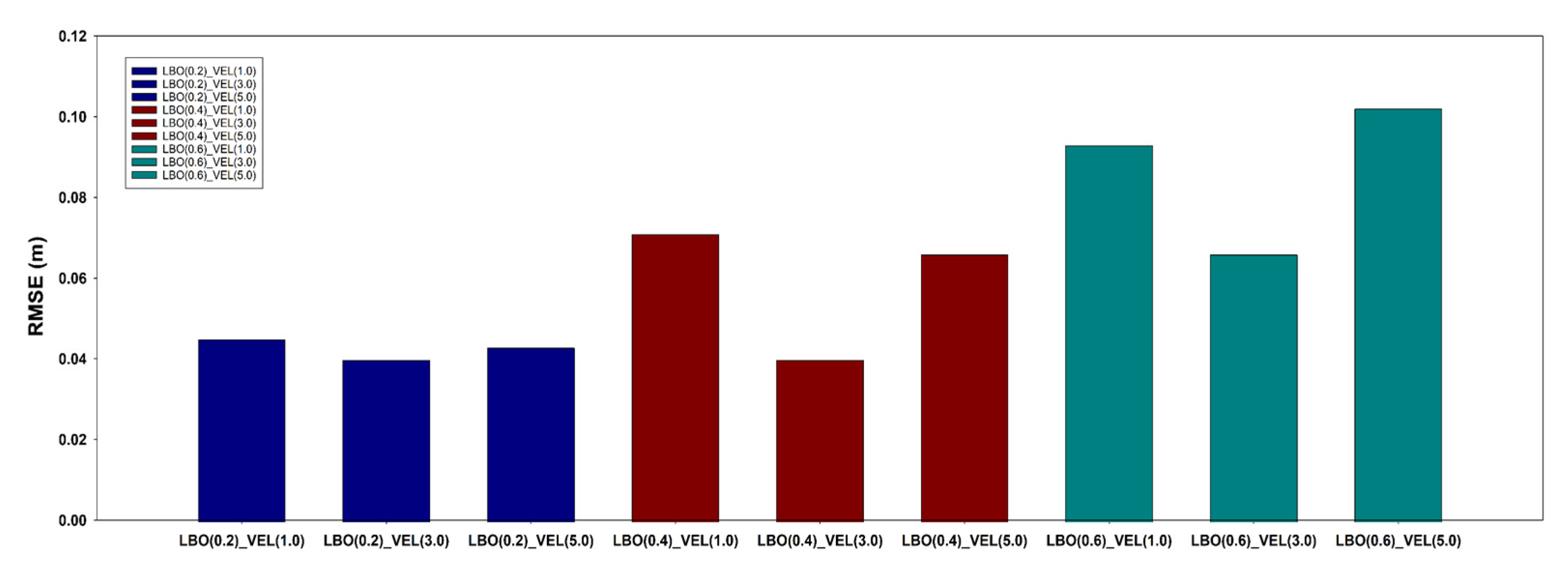

2.4.3. LBO Optimization

2.4.4. Flight Speed Optimization

2.4.5. Avoidance Algorithm Verification

2.5. Field Test

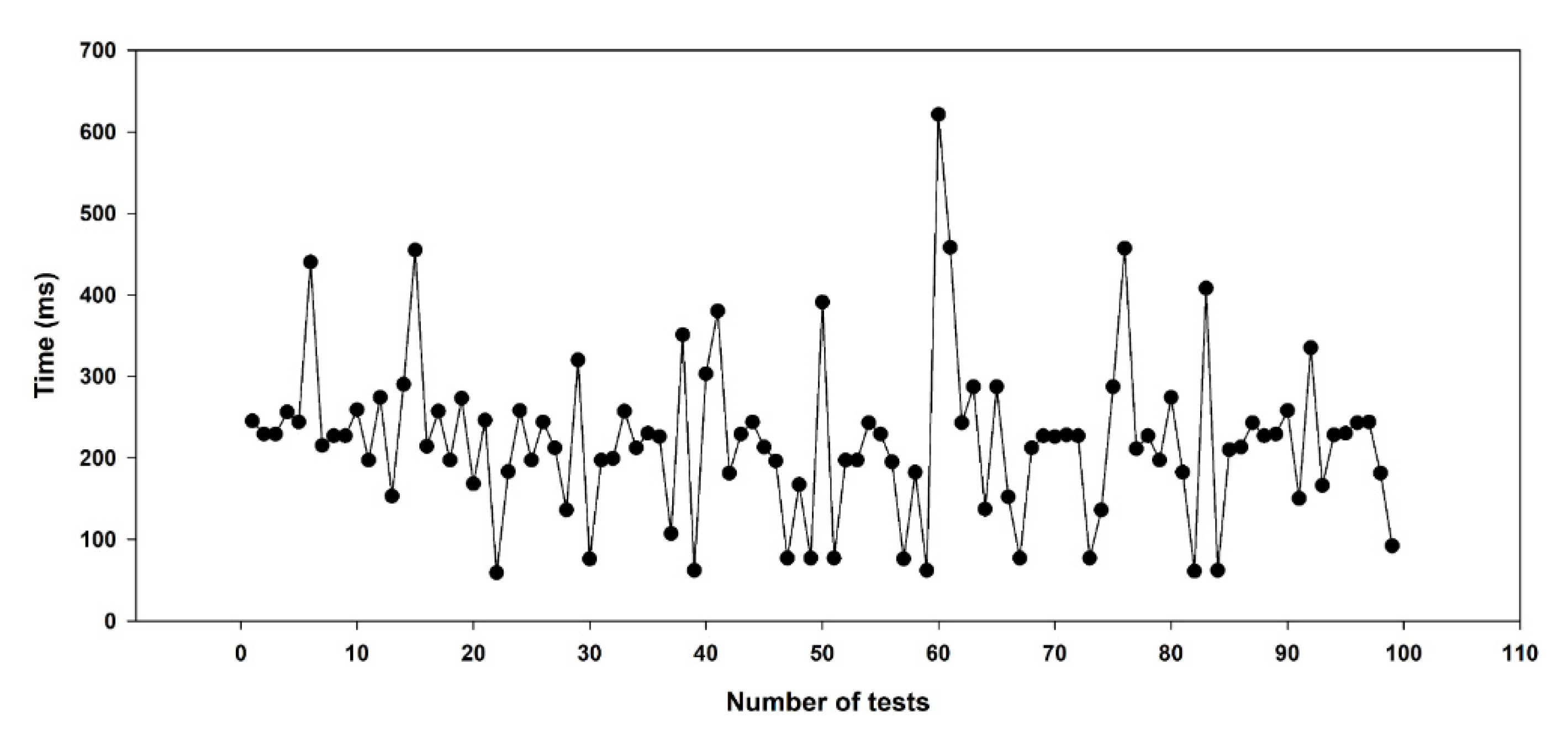

2.5.1. Long-Distance Wireless Communication Latency

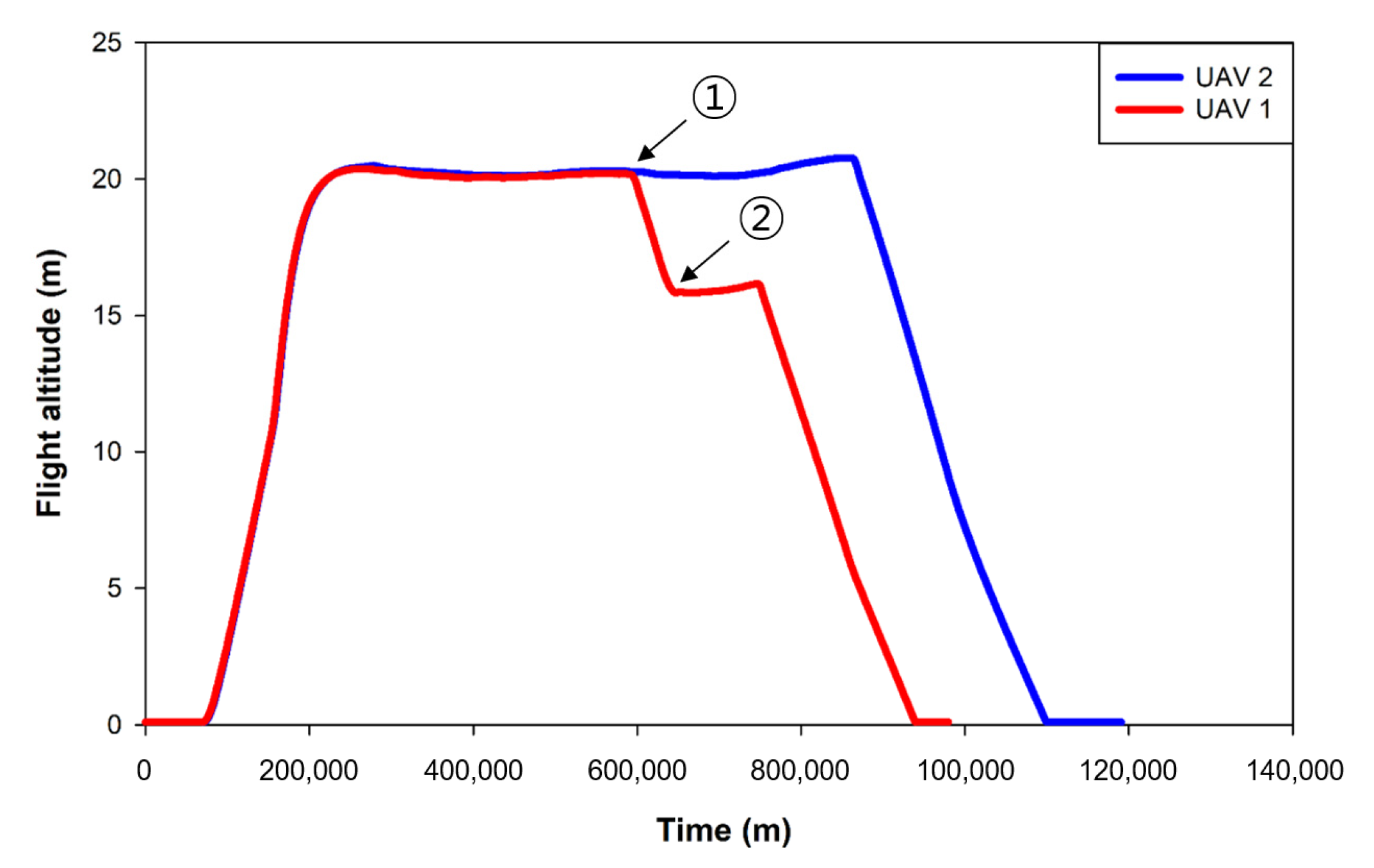

2.5.2. Multiple UAV Collaborative Driving Algorithm Verification

2.5.3. Collision Avoidance Algorithm Verification

2.5.4. Comparison with Existing Commercial Programs (GCS)

2.5.5. Comparison between Real and Simulated Environments

3. Results and Discussion

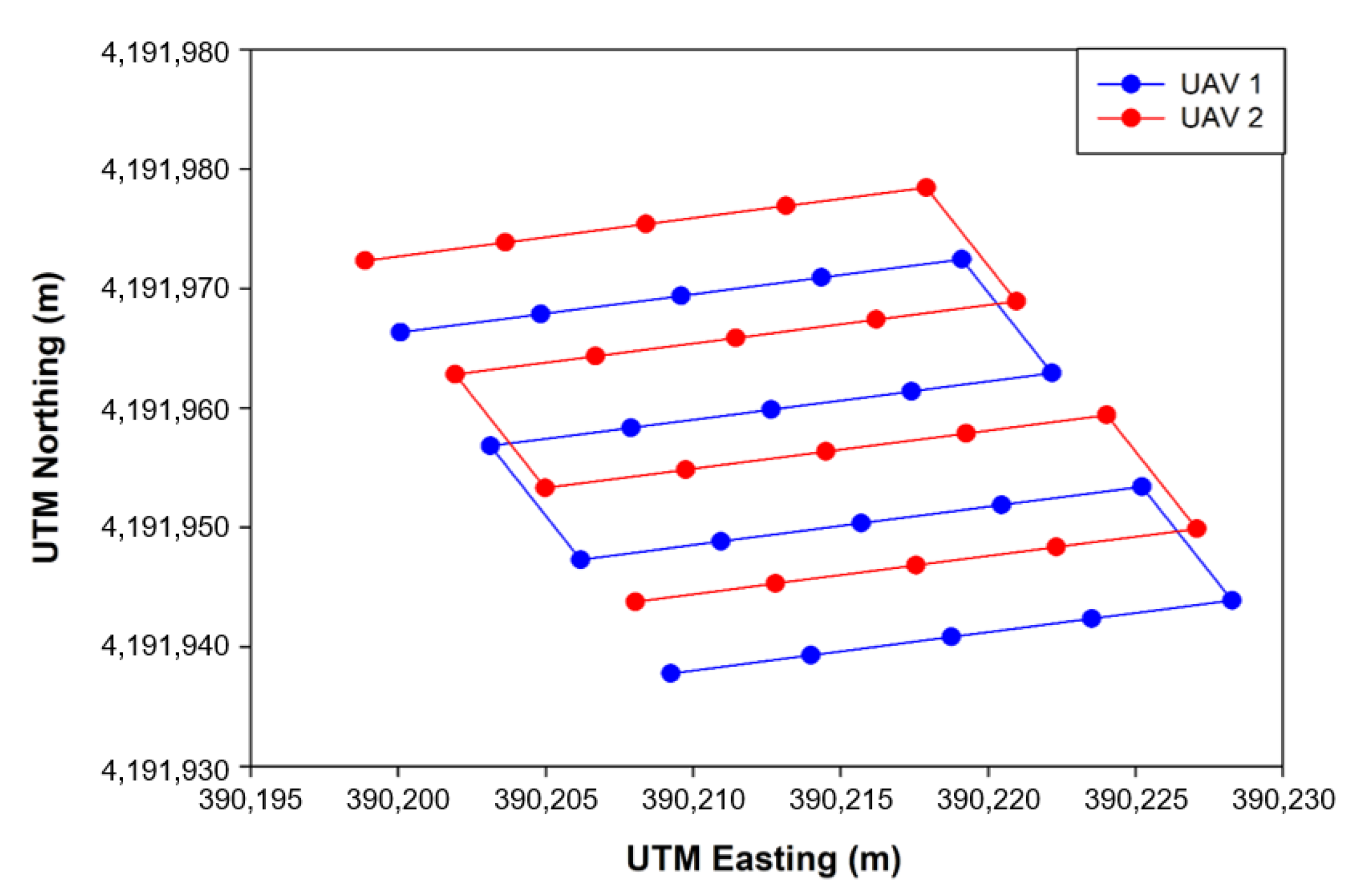

3.1. Results of Multiple Path Generation Algorithms

3.2. Results of Flight Simulation

3.2.1. Multiple UAV Collaborative Driving Algorithm Optimization

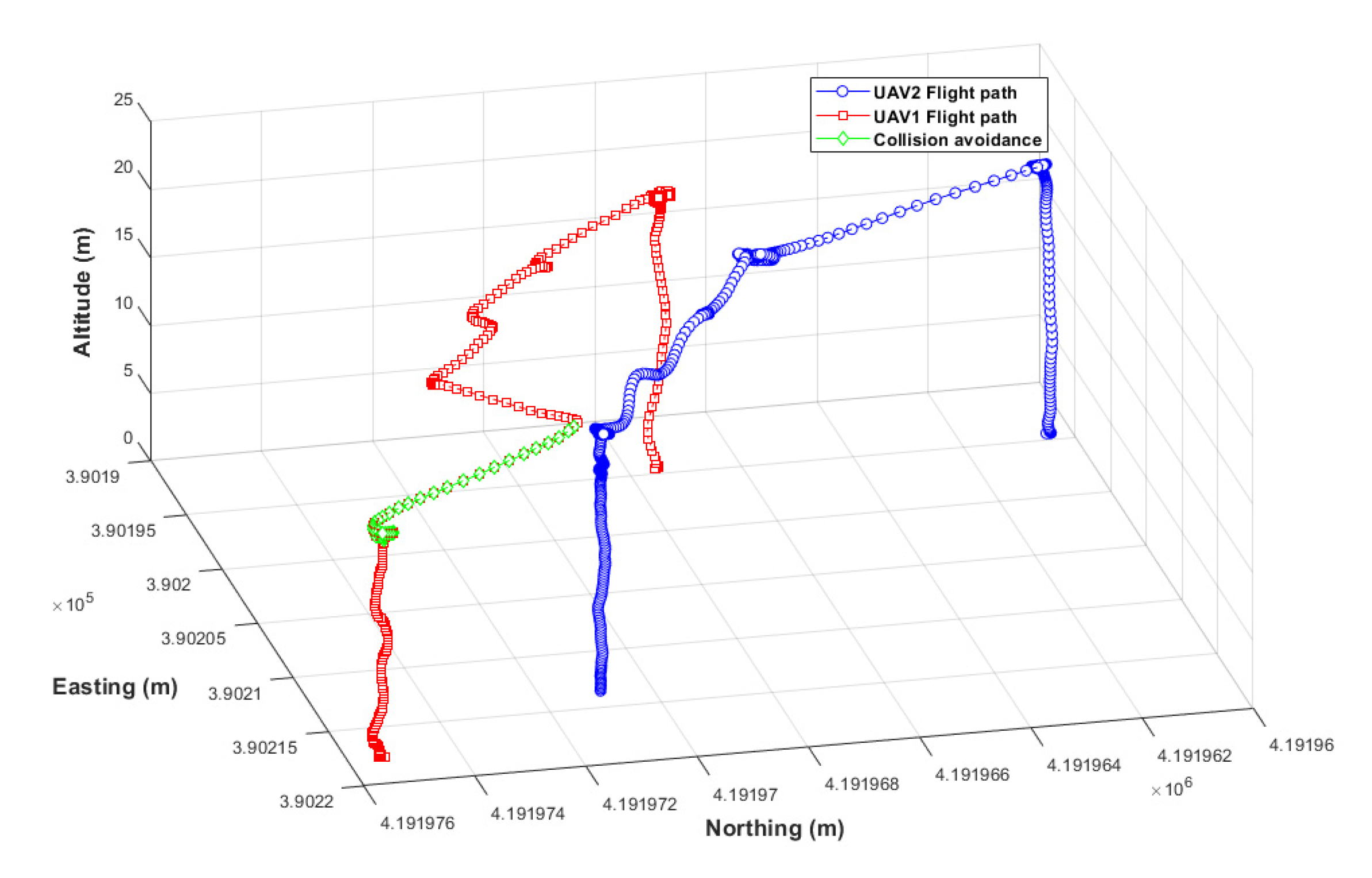

3.2.2. Results of Collision Avoidance Algorithm Verification

3.3. Results of Field Test

3.3.1. Wireless Communication Latency

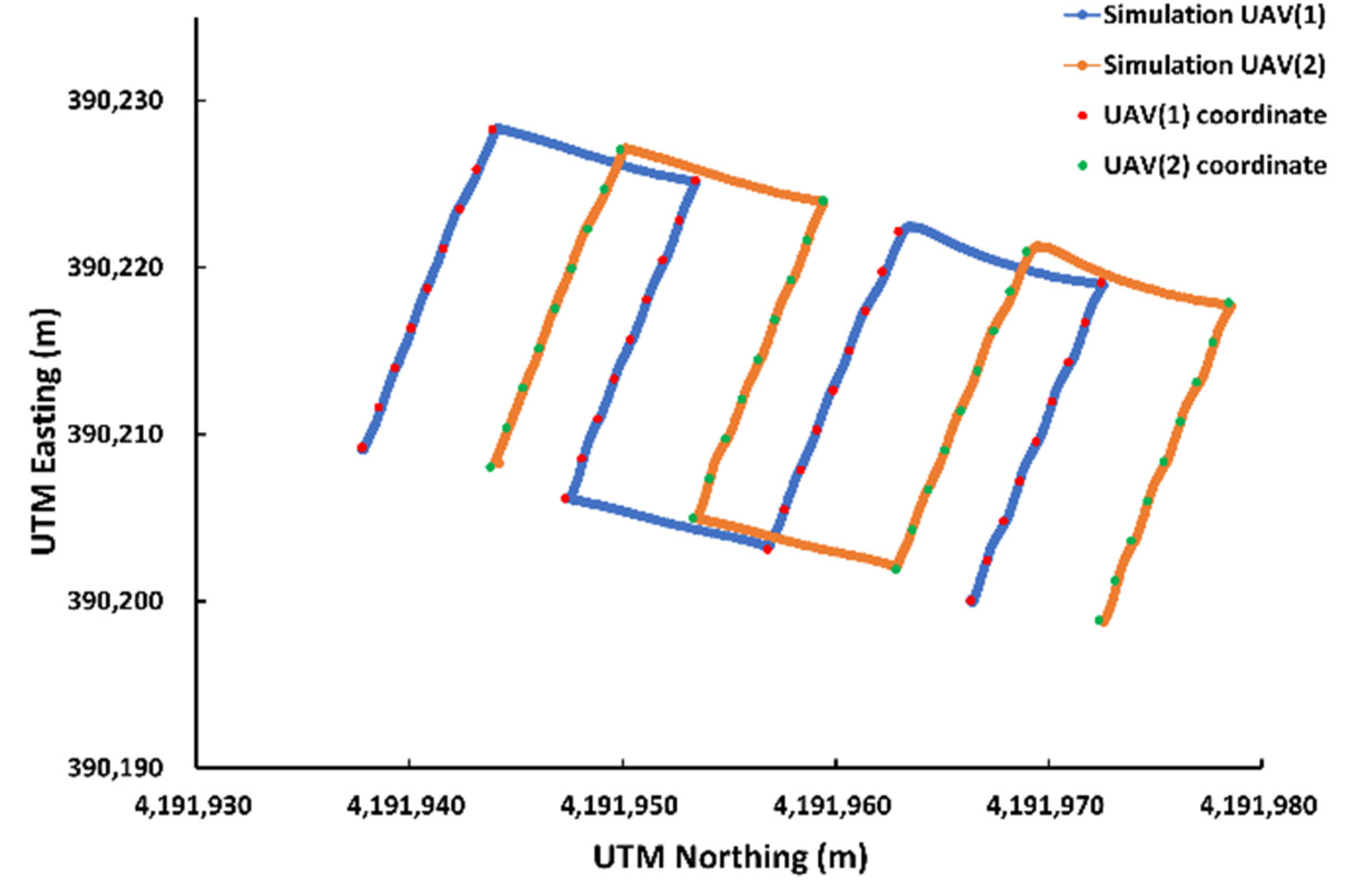

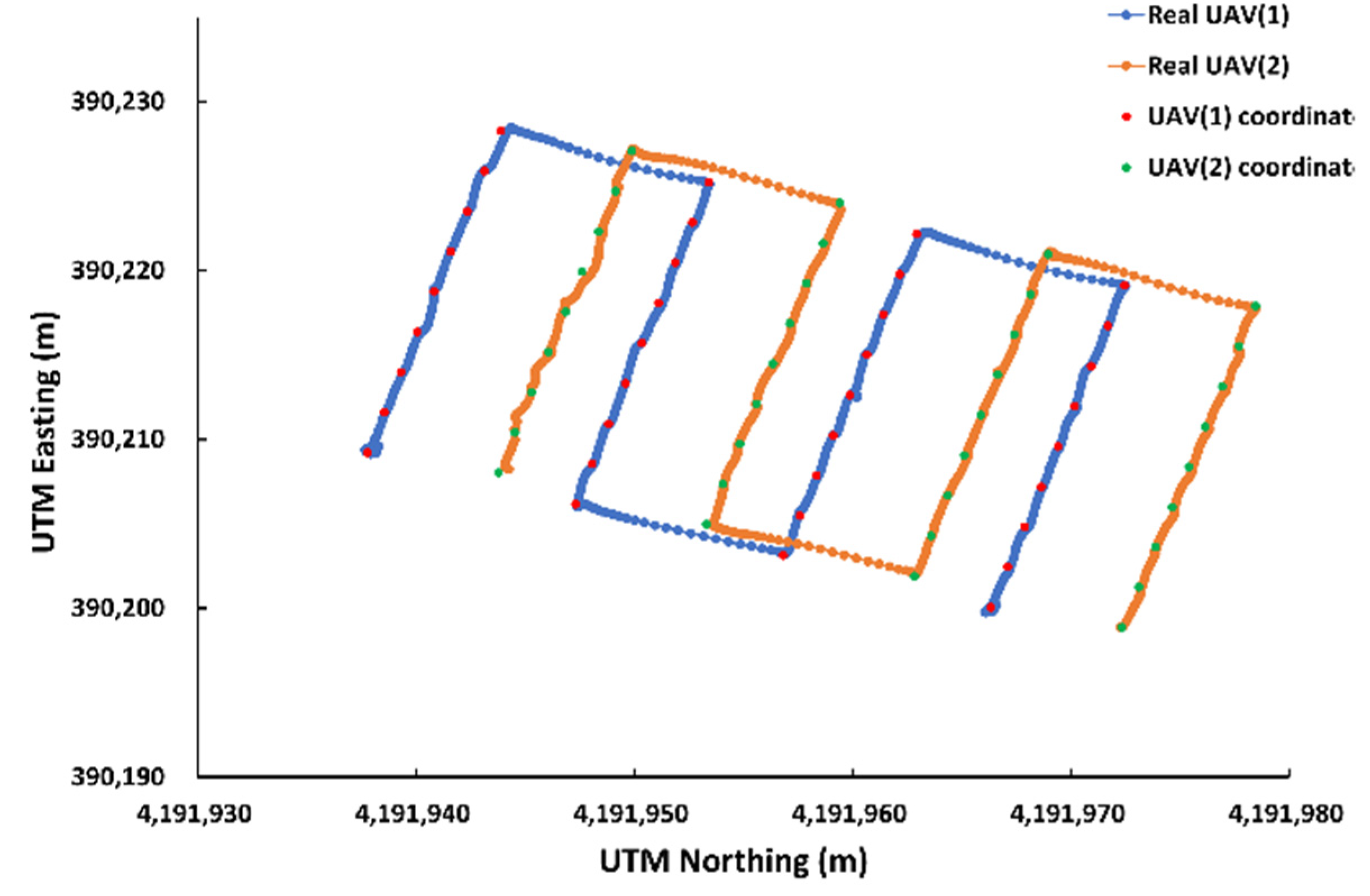

3.3.2. Comparison between Simulation and Actual Field Tests

3.3.3. Collision Avoidance in the Actual Field

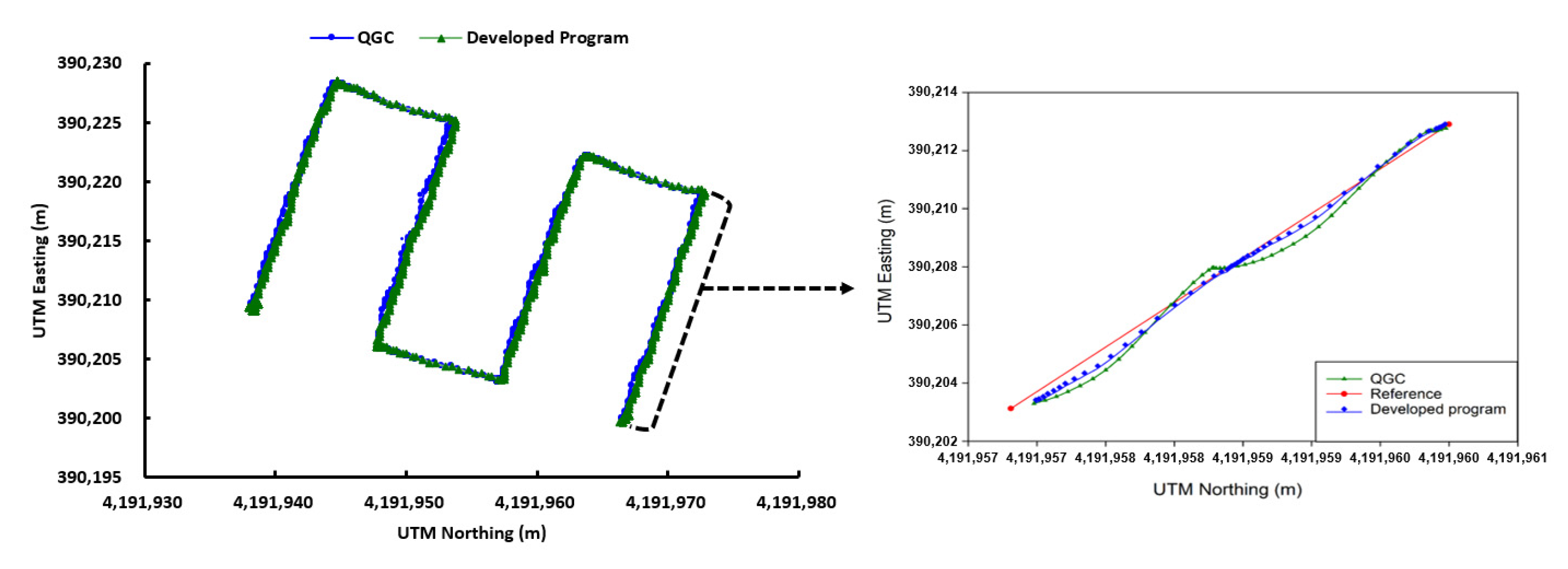

3.3.4. Comparison of Existing Commercial and Developed Program Performance

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Input Variables | Values |
|---|---|
| Site area | 641 m² |
| Flight altitude | 20 m |
| Overlap (side, front) | 75% |
| Distance between UAVs | 3 m |
| Flight speed | 4.0 m/s |
| Image size | 3280 × 2464 pixels |
| Camera focal length | 3.04 mm |
| Camera sensor size | 4.6 mm |
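The flight altitude, overlap, and camera parameters in the table above jointly determine how far apart flight lines and image captures must be. The sketch below works through that geometry with a simple pinhole-camera model. It is an illustration under stated assumptions, not the path generation algorithm used in this study: it assumes the 4.6 mm sensor size is the sensor diagonal, that pixels are square so the diagonal splits by the 3280 × 2464 aspect ratio, and that the long image side is oriented across the flight line. The function name `plan_survey` and the `SurveyPlan` fields are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class SurveyPlan:
    footprint_width_m: float   # across-track ground coverage of one image
    footprint_height_m: float  # along-track ground coverage of one image
    gsd_cm_per_px: float       # ground sampling distance
    line_spacing_m: float      # spacing between adjacent flight lines
    trigger_distance_m: float  # along-track distance between exposures
    trigger_interval_s: float  # time between exposures at the given speed


def plan_survey(altitude_m: float, focal_mm: float, sensor_diag_mm: float,
                width_px: int, height_px: int, side_overlap: float,
                front_overlap: float, speed_mps: float) -> SurveyPlan:
    # Assumption: the quoted sensor size is the diagonal; split it by the
    # pixel aspect ratio (square pixels) to get physical width and height.
    diag_px = math.hypot(width_px, height_px)
    sensor_w_mm = sensor_diag_mm * width_px / diag_px
    sensor_h_mm = sensor_diag_mm * height_px / diag_px

    # Pinhole model: ground extent = altitude * sensor extent / focal length.
    footprint_w = altitude_m * sensor_w_mm / focal_mm
    footprint_h = altitude_m * sensor_h_mm / focal_mm

    # Assumption: the long image side is perpendicular to the flight line,
    # so side overlap applies to the width and front overlap to the height.
    line_spacing = (1.0 - side_overlap) * footprint_w
    trigger_distance = (1.0 - front_overlap) * footprint_h

    return SurveyPlan(
        footprint_width_m=footprint_w,
        footprint_height_m=footprint_h,
        gsd_cm_per_px=100.0 * footprint_w / width_px,
        line_spacing_m=line_spacing,
        trigger_distance_m=trigger_distance,
        trigger_interval_s=trigger_distance / speed_mps,
    )


# Values from the input-variable table: 20 m altitude, 3.04 mm focal length,
# 4.6 mm sensor, 3280 x 2464 images, 75% overlap, 4.0 m/s.
plan = plan_survey(20.0, 3.04, 4.6, 3280, 2464, 0.75, 0.75, 4.0)
print(plan)
```

Under these assumptions, one image covers roughly 24.2 m × 18.2 m at 20 m altitude (about 0.74 cm per pixel), so 75% overlap corresponds to flight lines about 6.0 m apart and an exposure roughly every 4.5 m, or about every 1.1 s at 4.0 m/s.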

| Description | Values |
|---|---|
| Wind direction | South-west |
| Wind speed | 1.0 m/s |
| Atmospheric temperature | 30.0 °C |
| LBO | 0.4 m |
| Maximum flight speed | 3.0 m/s |

| Description | Values |
|---|---|
| Wind direction | North-east |
| Wind speed | 3.7 m/s |
| Atmospheric temperature | 28.6 °C |
| LBO | 0.4 m |
| Collision recognition distance | 2.5 m |
| Maximum flight speed | 3.0 m/s |
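The test conditions above specify a collision recognition distance of 2.5 m. As a minimal sketch of how such a threshold can be applied, the code below checks every pair of UAVs for a separation below that distance. It assumes positions are already expressed in metres in a shared local east-north-up frame, and the yield rule in `uavs_to_hold` (the UAV with the larger ID pauses) is a hypothetical placeholder rather than the avoidance strategy evaluated in this study; both function names are illustrative.

```python
import math
from itertools import combinations

# Threshold taken from the collision-avoidance test conditions table.
COLLISION_RECOGNITION_DISTANCE_M = 2.5


def conflicting_pairs(positions, threshold_m=COLLISION_RECOGNITION_DISTANCE_M):
    """Return (id_a, id_b, distance) for every UAV pair closer than threshold_m.

    Assumption: positions maps a UAV id to (east, north, up) in metres,
    all in the same local frame.
    """
    conflicts = []
    for (a, pa), (b, pb) in combinations(sorted(positions.items()), 2):
        d = math.dist(pa, pb)  # Euclidean separation in metres
        if d < threshold_m:
            conflicts.append((a, b, d))
    return conflicts


def uavs_to_hold(conflicts):
    # Hypothetical priority rule: in each conflicting pair, the UAV with the
    # larger id pauses while the other continues along its path.
    return sorted({max(a, b) for a, b, _ in conflicts})


positions = {1: (0.0, 0.0, 20.0), 2: (1.5, 1.5, 20.0), 3: (10.0, 0.0, 20.0)}
conflicts = conflicting_pairs(positions)
print(conflicts)
print(uavs_to_hold(conflicts))
```

With these sample positions, only UAVs 1 and 2 (about 2.12 m apart) fall inside the 2.5 m threshold, so UAV 2 would be asked to hold.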

| Description | Values |
|---|---|
| Flight altitude | 20 m |
| Overlap (side, front) | 75% |
| Distance between UAVs | 3 m |
| LBO | 0.4 m |
| Flight speed | 4.0 m/s |
| Image size | 3280 × 2464 pixels |
| Camera focal length | 3.04 mm |
| Camera sensor size | 4.6 mm |

| | Simulation | Actual Field |
|---|---|---|
| Flight time | 164 s | 185 s |
| Flight accuracy | 0.06 m | 0.08 m |

| | QGroundControl | Developed Program |
|---|---|---|
| Flight time | 127 s | 185 s |
| Flight accuracy | 0.2 m | 0.08 m |
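The two comparison tables are easier to read as relative changes. The short helper below (a hypothetical name, `relative_change`) expresses each value as a percentage change from a reference column, assuming that the flight accuracy figures are position errors, so smaller values are better.

```python
def relative_change(reference: float, other: float) -> float:
    """Percentage change of `other` with respect to `reference`."""
    return 100.0 * (other - reference) / reference


# Values copied from the two comparison tables: (reference, other).
comparisons = {
    "flight time, simulation -> actual field": (164.0, 185.0),
    "flight accuracy, simulation -> actual field": (0.06, 0.08),
    "flight time, QGroundControl -> developed program": (127.0, 185.0),
    "flight accuracy, QGroundControl -> developed program": (0.2, 0.08),
}

for label, (ref, other) in comparisons.items():
    print(f"{label}: {relative_change(ref, other):+.1f}%")
```

Read this way, the field flight took about 12.8% longer than the simulation, and the developed program took about 45.7% longer than QGroundControl while reducing the flight accuracy figure by about 60% (from 0.2 m to 0.08 m).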

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lee, H.-S.; Shin, B.-S.; Thomasson, J.A.; Wang, T.; Zhang, Z.; Han, X. Development of Multiple UAV Collaborative Driving Systems for Improving Field Phenotyping. Sensors 2022, 22, 1423. https://doi.org/10.3390/s22041423


Chicago/Turabian style: Lee, Hyeon-Seung, Beom-Soo Shin, J. Alex Thomasson, Tianyi Wang, Zhao Zhang, and Xiongzhe Han. 2022. "Development of Multiple UAV Collaborative Driving Systems for Improving Field Phenotyping" Sensors 22, no. 4: 1423. https://doi.org/10.3390/s22041423
