Abstract

This study evaluates the progression of visual fatigue induced by visual display terminal (VDT) use, based on automatically detected blink features. A total of 23 subjects were recruited to participate in a VDT task, during which they watched a 120-min video on a laptop and answered a questionnaire every 30 min. Face video was recorded by a camera. Blinks and incomplete blinks were recognized by automatically detecting eye parameters. The following blink features were then extracted: blink number (BN), mean blink interval (Mean_BI), mean blink duration (Mean_BD), group blink number (GBN), mean group blink interval (Mean_GBI), incomplete blink number (IBN), and mean incomplete blink interval (Mean_IBI). The results showed that BN and GBN increased significantly and that Mean_BI and Mean_GBI decreased significantly over time. Mean_BD and Mean_IBI increased and IBN decreased significantly only in the last 30 min. The blink features automatically detected in this study can be used to evaluate the progression of visual fatigue.

1. Introduction

With the development and popularization of the internet, visual display terminals (VDTs) such as computers, smartphones, and tablets have become essential parts of modern life. In particular, during the COVID-19 pandemic and the associated social isolation, many people had to work from home and communicate through video conferences, and e-learning became a new mode of study for students. The lack of outdoor recreational activities also led people to turn to VDTs for entertainment. Prolonged exposure to a VDT increases the load on the eyes [1] and causes symptoms of visual fatigue, such as blurred vision, dry eyes, burning, headache, eye pain, dizziness, and swelling [2]. Visual fatigue is increasing in prevalence among VDT users [3], producing discomfort for extended periods [4,5,6]. Accordingly, clinicians need to evaluate visual fatigue before providing guidance to minimize the severity of these symptoms [7].

Previous research on measuring visual fatigue can be categorized into subjective and objective methods [8,9]. Subjective ocular symptoms and visual fatigue have been evaluated using the ocular surface disease index (OSDI), the visual analogue scale (VAS), the computer vision syndrome (CVS) score, and the dry eye symptom score [10,11,12]. These methods can be influenced by individual perception, mental state, and daily condition, and they cannot measure visual fatigue continuously while a user is viewing a VDT. Thus, the development of objective methods for measuring visual fatigue is now an active area of research.

Visual fatigue induced by viewing 2D or 3D displays has been evaluated objectively by the autonomic nervous response measured by electrocardiogram (ECG) [13], electroencephalogram (EEG) [14], galvanic skin response (GSR), photoplethysmogram (PPG), skin temperature (SKT) [15], and magnetoencephalography (MEG) [16]. However, the accuracy of these signals can be degraded by external stimuli or environmental conditions such as loud noises and air temperature. Further, these studies required inconvenient devices or multiple electrodes to be attached to the participant’s body, which can be uncomfortable and become another factor that contributes to user fatigue.

Parameters measured from the eyes provide direct evidence for assessing visual fatigue [17,18]. Blinking is the regular opening and closing of the eyelids, which spreads the tear film coating the front of the cornea across the corneal surface [19]. Healthy people blink about 10 to 15 times per minute on average. Some studies indicated that blink rate decreased significantly as ocular symptoms increased [20,21]. Another study showed that blink rate gradually increased over time, but the trend was not significant [22]. Complete and incomplete blinks are distinguished by whether the eyelids touch [23] or by whether the eyelids cover at least 75% of the cornea [22]. An increase in incomplete blinks was associated with prolonged VDT tasks and worsening symptoms [20,21,22].

Blinks can be detected from the electrooculogram (EOG) signal recorded by skin electrodes placed on opposite sides of the eye [19,24]. However, the attached electrodes may interfere with routine VDT tasks and increase visual fatigue. A glasses-type infrared eye tracker has been used to capture eye images and compare the visual fatigue caused by 2D and 3D displays; the blink rate, calculated from the number of black pixels in the pupil area, served as an indicator of visual fatigue [8,9]. However, this device is inconvenient for people who usually wear glasses.

As a non-contact measurement, a video camera can be positioned in front of the participant to capture eyelid movement, with video analysis conducted afterwards to assess blink features [25]. In most studies, blinks were recognized and counted manually from the video recording, which takes considerable time and effort for long videos. Machine learning algorithms, such as recurrent neural networks (RNN) [26] and support vector machines (SVM) [27], have been proposed to differentiate complete blinks from incomplete blinks. However, a large number of video images and manual annotations were required to train the classifiers, and the results were not satisfactory because of the influence of eyelashes and eye size [23].

The conventional image processing methods for recognizing blinks are based on the gradient vector of eye information and local texture information [28]. The pixels of each frame are divided into two groups according to the direction and magnitude of the hybrid gradient vectors, and the distance between their centers of gravity is used to determine the blink features [29]. However, this method requires high image quality and accurate localization of the eye region. The eye aspect ratio between the height and width of the eyes has been calculated to detect eye blinks and alert drivers to drowsiness; however, the accuracy of blink rate detection is not high [30]. Moreover, as far as we know, the VDT tasks designed in previous studies were usually no longer than 60 min [23], which could not reflect the progression of visual fatigue.

The present study aims to evaluate the progression of visual fatigue with blink features. We propose an algorithm to identify blinks and incomplete blinks and to extract the blink features at different stages of the VDT task.

2. Materials and Methods

Firstly, the experiment was set up to record the face video during a VDT task. Then the eyes were located to extract blinking image frames and recognize complete and incomplete blinks. After that, the blink features were extracted and their changes with visual fatigue were analyzed statistically.

The process of blink feature extraction is summarized in Algorithm 1, where n is the total number of video frames recorded during an experiment.

| Algorithm 1. Blink feature extraction |
| --- |
| 1. for X = 1; X ≤ n; X++ |
| 2. Read video image |
| 3. Detect face and locate eyes |
| 4. Obtain blink frame |
| 5. Image enhancement and magnification |
| 6. Calculation of the distance between the upper eyelid and eye corner |
| 7. Recognize incomplete blink |
| 8. Blink features are calculated |
| 9. The features are stored in a file |
| 10. end for |

2.1. Experiment Setup

2.1.1. Subject

A total of 23 subjects (12 women, 11 men) aged between 23 and 28 years participated in this study. They were in good health and had normal or corrected-to-normal vision without eye diseases. They usually spent more than four hours a day looking at VDT screens. On the day before the experiment, drugs, coffee, strong tea, and other beverages that might affect their state were not allowed, and the subjects confirmed that they had eaten normally and slept enough. The subjects signed a consent form after being informed of the study’s aim, potential benefits, and risks. The study was approved by the Ethics Committee of Science and Technology of Beijing University of Technology and was conducted according to the Declaration of Helsinki of the World Medical Association.

A laptop with a 19-inch display screen (Patriot F907W, Beijing, China), set to a resolution of 1440 × 900 and 100% brightness, was used to play a documentary video. The documentary, “The Hunt” produced by the BBC, is calm and soothing but contains drastic changes in hue and brightness. A camera (Spedal MF934H, Guangdong, China) with a resolution of 1920 × 1080 and a frame rate of 60 frames/s was connected to the laptop via a USB port. The camera pointed at the subject’s face, was started from the laptop, and recorded the face continuously at 60 frames/s while the subject watched the documentary.

2.1.2. Questionnaire for Visual Fatigue Assessment

A questionnaire was designed to score visual fatigue. It consists of 5 questions, each with three options, ‘no’, ‘uncertain’, and ‘yes’, scored 0, 1, and 2, respectively. The higher the total score, the more severe the visual fatigue. The questionnaire, shown in Table 1, was approved by two experienced ophthalmologists.

Table 1.

The visual fatigue assessment questionnaire.

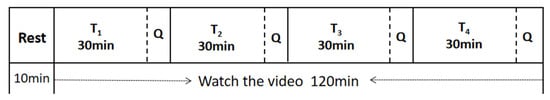

2.1.3. Experimental Procedure

The experiment was carried out at 3 p.m. in a quiet room with constant temperature and ambient light. The subject sat in a chair 50 cm in front of the display screen, facing the camera. Before the start of the experiment, the subjects answered the questionnaire orally and closed their eyes for a 10-min rest. Then, they watched the documentary video for 120 min, during which they answered the questionnaire every 30 min. The subject’s face was recorded by the camera throughout the experiment. The experimental procedure is shown in Figure 1.

Figure 1.

Experimental procedure. Q represents answering the questionnaire orally (about 10 s). T1, T2, T3, and T4 are the first, second, third, and fourth 30 min, respectively.

2.2. Blink Detection

2.2.1. Eyes Location

First, the face images were downsampled to 600 × 450 to reduce computation. Then, facial landmark detection was applied to detect the face and locate the eyes [27]; this method uses an ensemble of regression trees to estimate the landmark positions of the face from a sparse subset of pixel intensities and achieves real-time performance with high-quality predictions. A total of 68 key points of the face were extracted, as shown in Figure 2. Points 36 to 47 correspond to the eyes, so the eye region of each image was determined from the coordinates of these key points and the eye images were cropped accordingly.
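As a rough illustration of the cropping step, the sketch below derives a padded bounding box for each eye from a list of 68 landmark coordinates. The split of points 36–47 into two groups of six (one per eye), the `margin` parameter, and the function name `eye_box` are assumptions made for this example; the landmark values are synthetic and do not come from the study.

```python
from typing import List, Tuple

Point = Tuple[int, int]

# Points 36-47 cover both eyes (from the text). Splitting them into
# 36-41 and 42-47, one eye each, is an assumption of this sketch.
EYE_A = range(36, 42)
EYE_B = range(42, 48)


def eye_box(landmarks: List[Point], indices: range, margin: int = 5) -> Tuple[int, int, int, int]:
    """Return (left, top, right, bottom) of a padded box around one eye."""
    xs = [landmarks[i][0] for i in indices]
    ys = [landmarks[i][1] for i in indices]
    return (min(xs) - margin, min(ys) - margin, max(xs) + margin, max(ys) + margin)


# Synthetic landmarks, only to make the example runnable.
landmarks = [(i * 5, 100 + i % 3) for i in range(68)]
print(eye_box(landmarks, EYE_A))  # (175, 95, 210, 107)
print(eye_box(landmarks, EYE_B))  # (205, 95, 240, 107)
```

In practice the landmark list would come from a face alignment library, and the box would be used to slice the eye image out of the downsampled frame.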

Figure 2.

68 key points in a given face.

2.2.2. Extraction of Blinking Image Frames

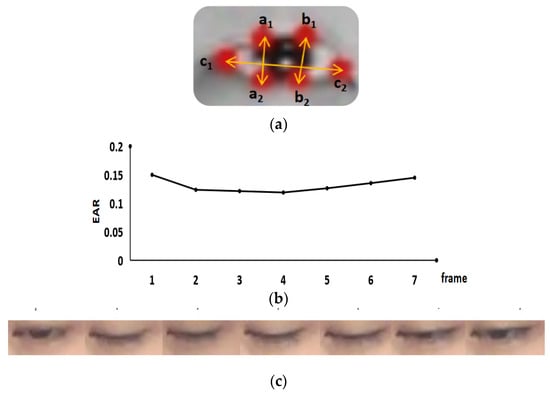

The blinking image frames were captured based on the change of the eye aspect ratio (EAR) [30]. In Figure 3a, a1, a2, b1, b2, c1, and c2 are the key points on the eye, which were taken from the 68 key points of the face.

Figure 3.

Blink image frame extraction. (a) The key points of the eye; (b) the change of EAR in a blink; (c) the image frames of a blink.

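EAR was calculated as in Equation (1):

$$\mathrm{EAR} = \frac{\lVert a_1 a_2 \rVert + \lVert b_1 b_2 \rVert}{2\,\lVert c_1 c_2 \rVert} \tag{1}$$

where a1a2 and b1b2 are the two heights of the eye, and c1c2 is the length of the eye.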

EAR was calculated for each eye image. EAR is usually larger than 0.2 when the eyes are open. Accordingly, a blink was taken to begin when EAR dropped below 0.2 and to end when EAR rose above 0.2 again. Figure 3b shows the change of EAR during a blink lasting seven frames, and Figure 3c shows the seven consecutive images of that blink.
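The thresholding rule can be sketched as follows. The example assumes that EAR takes its common form, the sum of the two eye heights divided by twice the eye length, and that each blink is a run of consecutive frames with EAR below 0.2. The function names and the sample EAR series are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(a1: Point, a2: Point, b1: Point, b2: Point,
                     c1: Point, c2: Point) -> float:
    """EAR under the assumed form: (|a1a2| + |b1b2|) / (2 * |c1c2|)."""
    heights = math.dist(a1, a2) + math.dist(b1, b2)
    return heights / (2.0 * math.dist(c1, c2))


def find_blinks(ear: Sequence[float], threshold: float = 0.2) -> List[Tuple[int, int]]:
    """Return (start_frame, n_frames) for each run of frames with EAR < threshold.

    A run that is still open at the end of the series is dropped, because
    the blink has not ended within the recorded frames.
    """
    blinks = []
    start = None
    for i, value in enumerate(ear):
        if value < threshold and start is None:
            start = i                          # blink begins
        elif value >= threshold and start is not None:
            blinks.append((start, i - start))  # blink ends
            start = None
    return blinks


# Made-up EAR series containing one three-frame blink.
series = [0.31, 0.30, 0.15, 0.08, 0.16, 0.29, 0.30]
print(find_blinks(series))  # [(2, 3)]
```

The start frame and frame count returned for each blink are the quantities the later feature definitions rely on (blink interval and blink duration).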

2.3. Detection of Incomplete Blink

To evaluate whether the incomplete blinks were associated with visual symptoms, this paper detected the incomplete blinks in the following steps.

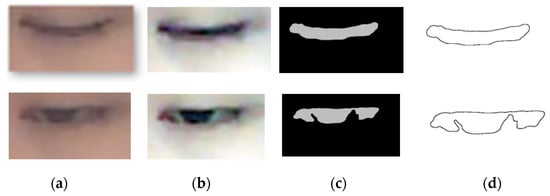

2.3.1. Extraction of the Eye Contour

Firstly, the single scale retinex (SSR) algorithm [31] was applied to improve the brightness, contrast, and sharpness of a greyscale image through a combination of spatial and spectral transformations, which resulted in dynamic range compression and a reduction in the global variance of the image. In our study, the SSR transform scale was set to 300. Then, the eye image was enlarged and enhanced using cubic interpolation with the magnification of 33. After that, the eye image was converted to a grey image and then binarized with the threshold of 139. Finally, the eye boundary was extracted using the adaptive threshold segmentation [32]. Figure 4 shows the contour extraction process.

Figure 4.

The process of contour extraction. (a) Original image; (b) SSR image; (c) binarized image; (d) eye contour.

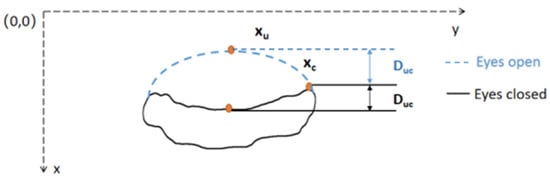

2.3.2. Calculation of the Distance between the Upper Eyelid and Eye Corner

The vertical distance between the upper eyelid and the eye corner, denoted Duc, was calculated by Equation (2), where xu and xc are the x-axis coordinates of the upper eyelid midpoint and the eye corner, respectively, as shown in Figure 5.

Figure 5.

The vertical distance between the midpoint of the upper eyelid and the eye corner. The dashed line stands for eyes open and the solid line for eyes closed.

2.3.3. Recognition of Incomplete Blink

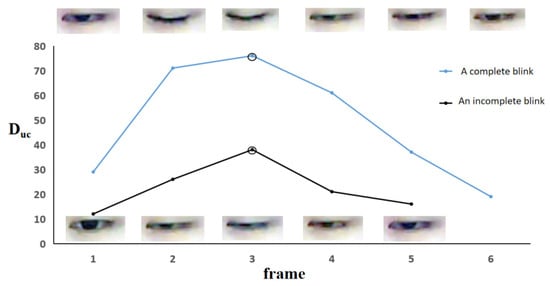

We manually labeled the complete and incomplete blinks within the first ten minutes for all subjects: approximately 3000 blinks, including 500 incomplete blinks. Duc was calculated for each frame of a blink, and the maximum Duc within each blink was denoted maxDuc. For each subject, the median of the maxDuc values of the complete blinks within the first ten minutes was taken as mmDuc. If the maxDuc of a blink was less than 75% of mmDuc (the threshold), the blink was recognized as incomplete; otherwise, it was recognized as complete. With this subject-specific threshold, complete and incomplete blinks were detected for the subsequent 110 min. Figure 6 shows the change of Duc in a blink. The upper part is a complete blink, in which the upper and lower eyelids touch completely while the eyes are closed. The lower part is an incomplete blink, in which the cornea is not completely covered by the eyelids while the eyes are closed.
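The subject-specific rule described above can be written in a few lines. This is a minimal sketch: the function names are hypothetical, and the Duc values (in arbitrary pixel units) are invented for illustration only.

```python
from statistics import median
from typing import Sequence


def subject_threshold(complete_max_duc: Sequence[float], ratio: float = 0.75) -> float:
    """Return ratio * mmDuc, where mmDuc is the median maxDuc of the
    manually labeled complete blinks of one subject."""
    return ratio * median(complete_max_duc)


def is_incomplete(duc_per_frame: Sequence[float], threshold: float) -> bool:
    """A blink is incomplete when its maxDuc is below the threshold."""
    return max(duc_per_frame) < threshold


# Invented calibration data: maxDuc of labeled complete blinks.
calibration = [40.0, 42.0, 38.0, 41.0, 39.0]
threshold = subject_threshold(calibration)             # 0.75 * 40.0 = 30.0

print(is_incomplete([5, 20, 36, 22, 6], threshold))    # False (maxDuc = 36)
print(is_incomplete([4, 15, 24, 14, 5], threshold))    # True  (maxDuc = 24)
```

Using the median rather than the mean keeps the threshold stable if a few labeled blinks have unusually large or small maxDuc values.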

Figure 6.

The change of Duc in a blink. The top half corresponds to a complete blink and the bottom half to an incomplete blink. ‘°’ marks the maxDuc of each blink.

2.4. Blink Feature Extraction

Blink features were extracted separately from the four 30-min videos (phases 1–4, i.e., T1–T4): blink number (BN), mean blink interval (Mean_BI), mean blink duration (Mean_BD), group blink number (GBN), mean group blink interval (Mean_GBI), incomplete blink number (IBN), and mean incomplete blink interval (Mean_IBI). These features were defined as follows:

BN is the total number of blinks including complete blinks and incomplete blinks within 30 min.

Mean_BI is the mean time interval between two adjacent blinks within 30 min. Figure 7 shows two adjacent blinks, where fi and fj are the start frames and fi + ni and fj + nj are the corresponding end frames. fj − fi is the frame interval between the two adjacent blinks.

Figure 7.

Adjacent blink frame.

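Mean_BD is the mean blink duration, calculated as in Equation (3):

$$\mathrm{Mean\_BD} = \frac{1}{\mathrm{BN}} \sum_{i=1}^{\mathrm{BN}} \frac{n_i}{f_r} \tag{3}$$

where ni is the number of frames recorded in the i-th blink, fr is the frame rate, and BN is the number of blinks in the phase.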

GBN is the number of group blinks, i.e., groups of successive blinks separated by a blink interval of less than one second.

Mean_GBI is the mean value of the time interval between the adjacent group blinks.

IBN is the number of incomplete blinks.

Mean_IBI is the mean value of the time interval between the adjacent incomplete blinks.
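The seven definitions above can be sketched as one function over the list of detected blinks in a phase. Several choices here are assumptions rather than details confirmed by the text: intervals are measured start to start (fj − fi, as in Figure 7), a group blink is a run of two or more blinks whose successive intervals are all under one second, and each group is timed by the start of its first blink. The `Blink` class, the function names, and the sample data are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List, Optional


@dataclass
class Blink:
    start: int        # start frame f_i
    n_frames: int     # number of frames n_i in the blink
    incomplete: bool  # result of the incomplete-blink check


def mean_interval(starts: List[int], fr: float) -> Optional[float]:
    """Mean start-to-start interval in seconds; None with fewer than two events."""
    if len(starts) < 2:
        return None
    return mean((b - a) / fr for a, b in zip(starts, starts[1:]))


def blink_features(blinks: List[Blink], fr: float = 60.0,
                   group_gap_s: float = 1.0) -> Dict[str, Optional[float]]:
    """Compute the seven features for one phase; blinks must be sorted by start."""
    starts = [b.start for b in blinks]
    # Assumed grouping rule: a new group opens when two adjacent blinks are
    # closer than group_gap_s and the previous pair was not.
    group_starts: List[int] = []
    in_group = False
    for a, b in zip(starts, starts[1:]):
        close = (b - a) / fr < group_gap_s
        if close and not in_group:
            group_starts.append(a)
        in_group = close
    incomplete_starts = [b.start for b in blinks if b.incomplete]
    return {
        "BN": len(blinks),
        "Mean_BI": mean_interval(starts, fr),
        "Mean_BD": mean(b.n_frames / fr for b in blinks) if blinks else None,
        "GBN": len(group_starts),
        "Mean_GBI": mean_interval(group_starts, fr),
        "IBN": len(incomplete_starts),
        "Mean_IBI": mean_interval(incomplete_starts, fr),
    }


# Invented example: six blinks at 60 frames/s, two of them incomplete.
demo = [Blink(0, 6, False), Blink(30, 6, True), Blink(300, 12, False),
        Blink(600, 6, False), Blink(640, 6, True), Blink(1200, 6, False)]
print(blink_features(demo))
```

For this invented input, BN is 6, GBN is 2 (the pairs starting at frames 0 and 600), and IBN is 2; the mean intervals are returned in seconds.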

2.5. Statistical Analysis

In this study, the scores of the visual fatigue questionnaire and all blink features were not normally distributed. The questionnaire scores were analyzed by the paired Wilcoxon signed-rank test. All blink features from the four phases were first analyzed as a whole by Friedman’s test, and then pair-wise multiple comparisons were performed. The analysis was performed using SPSS 24 (IBM Corp., Armonk, NY, USA). p < 0.05 was considered a significant difference and p < 0.01 a very significant difference.
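To make the repeated-measures comparison concrete, the sketch below computes the Friedman chi-square statistic for a table with one row per subject and one column per phase. It is only an illustration of the test, not the SPSS procedure used in the study: it omits the tie correction and the p-value, and the data are invented.

```python
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values from 1 to k, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        avg = (pos + end) / 2.0 + 1.0       # average of the 1-based positions
        for j in range(pos, end + 1):
            ranks[order[j]] = avg
        pos = end + 1
    return ranks


def friedman_statistic(table: Sequence[Sequence[float]]) -> float:
    """Friedman chi-square statistic (no tie correction) for n rows and k columns."""
    n = len(table)
    k = len(table[0])
    rank_sums = [0.0] * k
    for row in table:                        # rank within each subject
        for j, r in enumerate(average_ranks(row)):
            rank_sums[j] += r
    return 12.0 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3.0 * n * (k + 1)


# Invented blink numbers for three subjects over phases T1-T4.
bn = [[10, 12, 15, 20],
      [8, 9, 14, 18],
      [11, 13, 16, 19]]
print(friedman_statistic(bn))  # every subject increases monotonically
```

With k = 4 phases the statistic is compared against a chi-square distribution with 3 degrees of freedom; a statistics package would normally supply the p-value and the follow-up pair-wise comparisons.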

3. Results

3.1. Analysis of Questionnaire Score

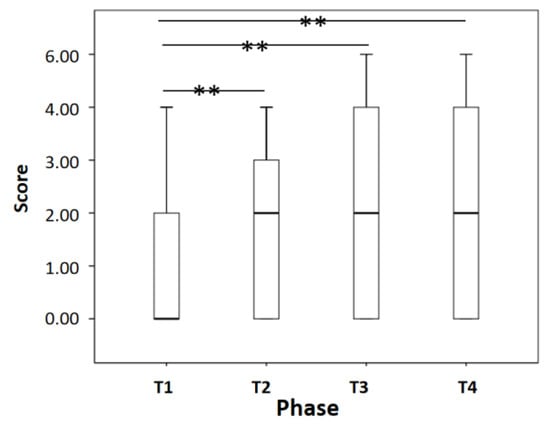

As shown in Figure 8, the questionnaire scores increased very significantly from phase T1 to phases T2, T3, and T4 (p < 0.01). That is, the symptoms of visual fatigue worsened with the time spent watching the video.

Figure 8.

Questionnaire scores. ** p < 0.01.

3.2. Analysis of Blink Features

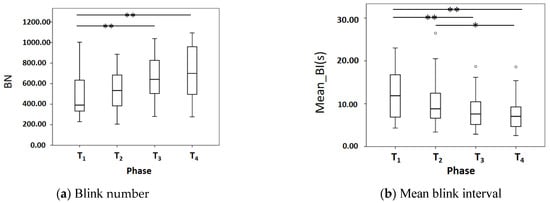

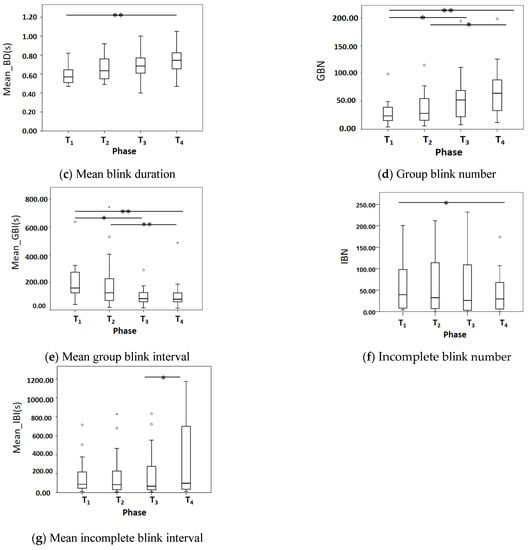

Figure 9a shows that BN in T3 and T4 is very significantly higher than in T1 (p < 0.01). Figure 9b shows that Mean_BI gradually decreases from T1 to T4, with T3 and T4 very significantly lower than T1 (p < 0.01) and T4 significantly lower than T2 (p < 0.05). Figure 9c shows that Mean_BD gradually increases from T1 to T4, with T4 very significantly higher than T1 (p < 0.01). Figure 9d shows that GBN gradually increases from T1 to T4, with T4 very significantly higher than T1 (p < 0.01), and with T3 and T4 significantly higher than T1 and T2, respectively (p < 0.05). Figure 9e shows that Mean_GBI in T4 is very significantly lower than in T1 and T2 (p < 0.01) and that T3 is significantly lower than T1 (p < 0.05). Figure 9f shows that IBN in T4 is significantly lower than in T1 (p < 0.05). Figure 9g shows that Mean_IBI in T4 is significantly higher than in T3 (p < 0.05).

Figure 9.

Comparison of blink features. * p < 0.05 and ** p < 0.01.

3.3. Summary of the Blink Features at Different Phases

The blink features are summarized in Table 2. The result of Friedman’s test is presented as well to indicate the overall difference between phases T1 and T4.

Table 2.

The median of blink features at different phases.

4. Discussion

This study designed a long-term VDT task with face video recordings to track the progression of visual fatigue. It did not cause any inconvenience and was more convincing than a short-term task [23]. The blink features extracted from the eye images could reflect the visual fatigue objectively.

A questionnaire was simplified to investigate the subjective feeling without interrupting the VDT task. The increase of the questionnaire score over time was associated with a worsening of visual fatigue.

We proposed algorithms that use the parameters EAR and Duc to detect blinks and mmDuc and maxDuc to detect incomplete blinks; the subject-specific parameters reduce the effect of individual differences. Computation was kept low by relying on simple measurements of eye size, which makes the algorithm simple and easy to implement.

In the present study, seven blink features were measured and compared over the 120-min VDT task to indicate the progression of visual fatigue objectively. As the VDT task went on, blink number and mean blink duration increased significantly and mean blink interval decreased significantly, which agrees with previous work. It has been reported that a high blink rate usually occurs at a high degree of visual fatigue [33]. When a person stares at a VDT for a long period, the tear film evaporates excessively, causing the external type of visual fatigue [24]. To relieve the discomfort, people refresh the tear film by blinking more frequently and prolonging blink duration [34], which in turn reduces the blink interval. In addition, the increased group blink number and reduced mean group blink interval indicate that single blinks were insufficient to restore the excessively evaporated tear film, so more group blinks contributed to maintaining the tear film and relieving eyestrain.

With respect to incomplete blinks, no significant difference was found in either the number or the interval among phases 1–3. In phase 4, the incomplete blink number decreased significantly and the mean interval increased. However, it has been reported that the percentage of incomplete blinks increased with visual symptoms during VDT tasks [22,25], which is inconsistent with our results. We speculate that the subjects in our study reduced their incomplete blinks and increased their complete blinks to counter visual fatigue.

Young subjects were recruited in this study because they usually spend more time on computers or smartphones and are therefore susceptible to VDT-induced visual fatigue. In older adults, visual fatigue may instead be due to aging of the eyes. Therefore, this study could be applied to monitor visual fatigue in office workers.

Future work should aim to improve the image quality and the accuracy of incomplete blink detection. In addition, more eye movement parameters could be explored to recognize and even grade visual fatigue. Further, dry eye and mental fatigue will be investigated to clarify their influence on visual fatigue.

5. Conclusions

We designed a long-term VDT task and recorded face video that could capture the progression of visual fatigue without causing any inconvenience to the participants. Blinks were first detected from the ratio of the height to the length of the eye. For each blink, the vertical distance from the midpoint of the upper eyelid to the corner of the eye was then calculated to identify incomplete blinks. These automatic blink recognition algorithms have a low computational cost and are subject-specific.

We analyzed the blink features statistically and found the blink number, mean blink duration, and group blink number increased, and the mean blink interval and mean group blink interval decreased significantly over time. In terms of incomplete blink, no significant difference was found in the first 90 min; however, the incomplete blink number decreased, and the mean interval increased significantly in the last 30 min.

With the development of visual fatigue, the blink features proposed in this study changed significantly. Therefore, the blink features automatically detected in this study could be used to evaluate the progression of VDT-induced visual fatigue objectively.

Author Contributions

Conceptualization, D.H. and B.L.; methodology, Z.Y., D.H. and B.L.; software, Z.Y.; validation, Z.Y., D.H. and B.L.; formal analysis, L.Y.; investigation, B.L., Y.F. and L.Y.; resources, Z.Y., D.H. and L.Y.; data curation, Z.Y.; writing—original draft preparation, Z.Y.; writing—review and editing, D.H., B.L. and L.Y.; visualization, Z.Y.; supervision, D.H. and B.L.; project administration, D.H. and L.Y.; funding acquisition, D.H. and L.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key R&D Program, grant number 2019YFC0119700, and the National Natural Science Foundation of China, grant number U20A20388.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Ethics Committee of Science and Technology of Beijing University of Technology.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data used to support the findings of this study are included in the article.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Hirota, M.; Morimoto, T.; Kanda, H.; Endo, T.; Miyoshi, T.; Miyagawa, S.; Hirohara, Y.; Yamaguchi, T.; Saika, M.; Fujikado, T. Objective evaluation of visual fatigue using binocular fusion maintenance. Transl. Vis. Sci. Technol. 2018, 7, 9.

2. Blehm, C.; Vishnu, S.; Khattak, A.; Mitra, S.; Yee, R.W. Computer Vision Syndrome: A Review. Surv. Ophthalmol. 2005, 50, 253–262.

3. Zhu, R.; Liang, S. Progress in the treatment of visual fatigue syndrome on video terminals. Chin. J. Clin. (Electron. Ed.) 2019, 13, 702–706.

4. Agarwal, S.; Goel, D.; Sharma, A. Evaluation of the Factors which Contribute to the Ocular Complaints in Computer Users. J. Clin. Diagn. Res. JCDR 2013, 7, 331–335.

5. Tilborg, M.; Murphy, P.J.; Evans, K.S. Impact of Dry Eye Symptoms and Daily Activities in a Modern Office. Optom. Vis. Sci. 2017, 94, 688–693.

6. Ganne, P.; Najeeb, S.; Chaitanya, G.; Sharma, A.; Krishnappa, N.C. Digital Eye Strain Epidemic amid COVID-19 Pandemic—A Cross-sectional Survey. Ophthalmic Epidemiol. 2021, 28, 285–292.

7. Portello, J.K.; Rosenfield, M.; Bababekova, Y.; Estrada, J.M.; Leon, A. Computer-related visual symptoms in office workers. Ophthalmic Physiol. Opt. 2012, 32, 375–382.

8. Lee, E.C.; Heo, H.; Park, K.R. The comparative measurements of eyestrain caused by 2D and 3D displays. IEEE Trans. Consum. Electron. 2010, 56, 1677–1683.

9. Heo, H.; Lee, W.O.; Shin, K.Y.; Park, K.R. Quantitative measurement of eyestrain on 3D stereoscopic display considering the eye foveation model and edge information. Sensors 2014, 14, 8577–8604.

10. Seguí, M.D.M.; Cabrero-García, J.; Crespo, A.; Verdú, J.; Ronda, E. A reliable and valid questionnaire was developed to measure computer vision syndrome at the workplace. J. Clin. Epidemiol. 2015, 68, 662–673.

11. Bhargava, R.; Kumar, P.; Kumar, M.; Mehra, N.; Mishra, A. A randomized controlled trial of omega-3 fatty acids in dry eye syndrome. Int. J. Ophthalmol. (Engl. Ed.) 2013, 6, 811–816.

12. Han, C.J.; Ying, L.; Ho, K.S.; Rujun, J.; Kim, Y.H.; Choi, W.; You, I.C.; Yoon, K.C. The influences of smartphone use on the status of the tear film and ocular surface. PLoS ONE 2018, 13, e0206541.

13. Park, S.J.; Oh, S.B.; Subramaniyam, M.; Lim, D.H.K. Human impact assessment of watching 3D television by electrocardiogram and subjective evaluation. In Proceedings of the XX IMEKO World Congress—Metrology for Green Growth, Busan, Korea, 9–14 September 2012; pp. 9–14.

14. Kim, Y.J.; Lee, E.C. EEG Based Comparative Measurement of Visual Fatigue Caused by 2D and 3D Displays. In Proceedings of the HCI International 2011-Posters’ Extended Abstracts-International Conference, HCI International 2011, Orlando, FL, USA, 9–14 July 2011.

15. Kim, C.J.; Park, S.; Won, M.J.; Whang, M.; Lee, E.C. Autonomic Nervous System Responses Can Reveal Visual Fatigue Induced by 3D Displays. Sensors 2013, 13, 13054–13062.

16. Hagura, H.; Nakajima, M. Study of asthenopia caused by the viewing of stereoscopic images: Measurement by MEG and other devices. International Society for Optics and Photonics. In Proceedings of the Electronic Imaging 2006, San Jose, CA, USA, 15–19 January 2006.

17. Yu, J.H.; Lee, B.H.; Kim, D.H. EOG based eye movement measure of visual fatigue caused by 2D and 3D displays. In Proceedings of the 2012 IEEE-EMBS International Conference on Biomedical and Health Informatics, Hong Kong, China, 5–7 January 2012; pp. 305–308.

18. Lee, E.C.; Park, K.R. Measuring eyestrain from LCD TV according to adjustment factors of image. IEEE Trans. Consum. Electron. 2009, 55, 1447–1452.

19. Bulling, A.; Ward, J.A.; Gellersen, H.; Tröster, G. Eye movement analysis for activity recognition using electrooculography. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 741–753.

20. Cardona, G.; Carles, G.; Carme, S.; Vilaseca, M.; Gispets, J. Blink rate, blink amplitude, and tear film integrity during dynamic visual display terminal tasks. Curr. Eye Res. 2011, 36, 190–197.

21. Portello, J.K.; Rosenfield, M.; Chu, C.A. Blink rate, incomplete blinks and computer vision syndrome. Optom. Vis. Sci. Off. Publ. Am. Acad. Optom. 2013, 90, 482–487.

22. Golebiowski, B.; Long, J.; Harrison, K.; Lee, A.; Chidi-Egboka, N.; Asper, L. Smartphone Use and Effects on Tear Film, Blinking and Binocular Vision. Curr. Eye Res. 2020, 45, 428–434.

23. Fogelton, A.; Benesova, W. Eye blink completeness detection. Comput. Vis. Image Underst. 2018, 176, 78–85.

24. Li, S.; Hao, D.; Liu, B.; Yin, Z.; Yang, L.; Yu, J. Evaluation of eyestrain with vertical electrooculogram. Comput. Methods Programs Biomed. 2021, 208, 106171.

25. Argilés, M.; Cardona, G.; Cabre, E.P.; Rodríguez, M. Blink rate and incomplete blinks in six different controlled hard-copy and electronic reading conditions. Investig. Opthalmology Vis. Sci. 2015, 56, 6679–6685.

26. Yeung, S.; Russakovsky, O.; Mori, G.; Fei-Fei, L. End-to-End learning of action detection from frame glimpses in videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2678–2687.

27. Kazemi, V.; Sullivan, J. One millisecond face alignment with an ensemble of regression trees. In Proceedings of the IEEE Conference on Computer Vision & Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1867–1874.

28. Song, F.; Tan, X.; Liu, X.; Chen, S. Eyes closeness detection from still images with multi-scale histograms of principal oriented gradients. Pattern Recognit. 2014, 47, 2825–2838.

29. Radlak, K.; Smolka, B. A novel approach to the eye movement analysis using a high speed camera. In Proceedings of the 2012 2nd International Conference on Advances in Computational Tools for Engineering Applications (ACTEA), Beirut, Lebanon, 12–15 December 2012.

30. Raipurkar, A.R.; Chandak, M.B. Driver eye blink rate detection and alert system. Int. J. Innov. Technol. Explor. Eng. 2020, 9, 2278–3075.

31. Mahmood, Z.; Muhammad, N.; Bibi, N.; Malik, Y.M.; Ahmed, N. Human visual enhancement using Multi Scale Retinex. Inform. Med. Unlocked 2018, 13, 9–20.

32. Kiragu, H.; Mwangi, E. An improved enhancement of degraded binary text document images using morphological and single scale retinex operations. In Proceedings of the IET Conference on Image Processing (IPR 2012), London, UK, 3–4 July 2012; pp. 1–6.

33. Sheedy, J.E. The physiology of eyestrain. J. Mod. Opt. 2007, 54, 1333–1341.

34. Kasprzak, H.T.; Licznerski, T.J. Influence of the characteristics of tear film break-up on the point spread function of an eye model. In Proceedings of the SPIE—The International Society for Optical Engineering, Stara Lesna, Slovakia, 14 July 1999; Volume 3820, pp. 390–396.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).