Lightweight Software Architecture Evaluation for Industry: A Comprehensive Review

Abstract

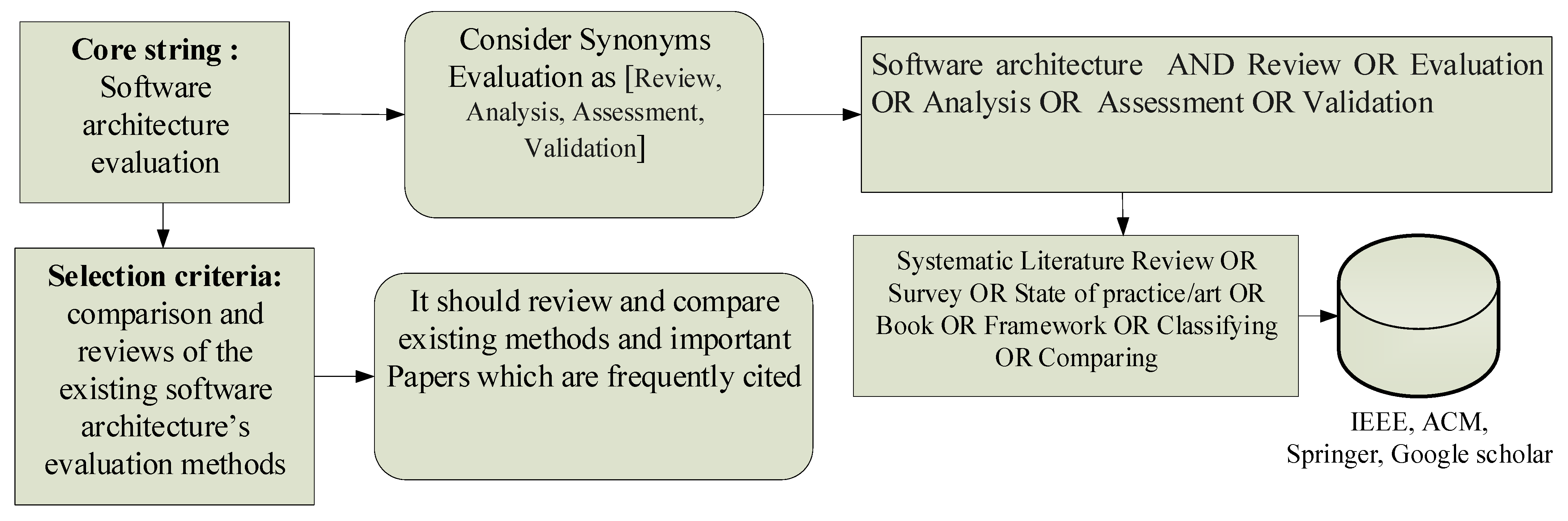

1. Introduction

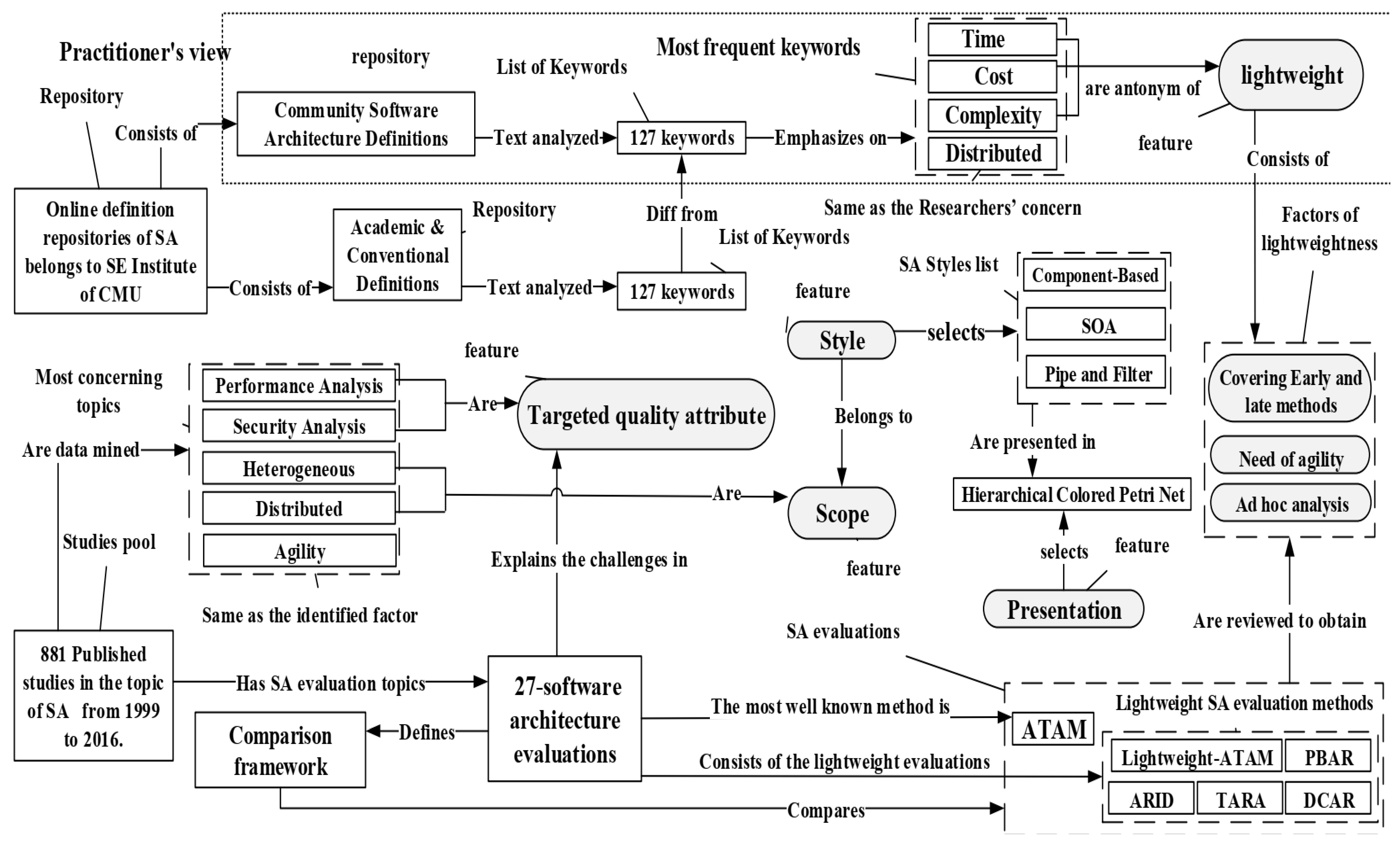

2. Software Architecture Definition Differences

3. Software Architecture Design

4. Software Architecture Evaluation

- The first technique is experience-based, in which SA plays a vital role in design and evaluation [66]. It is the technique most practiced in industry [56]. Empirically Based Architecture Evaluation (EBAE) is performed late in development, whereas Attribute-Based Architectural Styles (ABAS) can be applied at design time and integrated with ATAM [49,60,67]. Decision-Centric Architecture Reviews (DCAR) analyzes a set of architectural decisions to determine whether each decision taken is valid. Being lightweight, it is more suitable for agile projects [62].

- The second most popular technique is prototyping, which collects early feedback from the stakeholders and enables architecture analysis under close-to-real conditions. It may answer questions that cannot be resolved by other approaches [68].

- The third technique is scenario-based evaluation. SAAM is the earliest method using scenarios and multiple SA candidates. Later, ATAM extended SAAM with trade-off analysis between QAs, using both qualitative and quantitative techniques. The Architecture-Level Modifiability Analysis (ALMA) and Performance Assessment of Software Architecture (PASA) combine scenarios with quantitative methods to strengthen the results [69,70].

- The fourth technique is checklists, which consist of detailed questions that assess the various requirements of an architecture. Software Review Architecture (SAR) uses checklists tailored to the stakeholders’ criteria and the system’s characteristics. The Framework of Evaluation of Reference Architectures (FERA) exploits the opinions of experts in SA and reference architectures. Creating a checklist requires a precise understanding of the requirements [71,72].

- The fifth technique is simulation-based methods, which are highly tool-dependent. The Architecture Recovery, Change, and Decay Evaluator/Reference Architecture Representation Environment (ARCADE/RARE) evaluates an architecture through automatic simulation and interpretation of the SA [73]. An architecture description is created using a subset of the toolset called Software Engineering Process Activities (SEPA), and descriptions of usage scenarios are input to the ARCADE tool [74]. Many tools and toolkits transform an architecture into layered queuing networks (LQN) [75]. This transformation requires special knowledge of component interactions and behavioral information, execution times, and resource requirements [76]. The Formal Systematic Software Architecture Specification and Analysis Methodology (SAM) follows formal methods and supports an executable SA specification using time Petri nets and temporal logic [77]. It facilitates scalable SA specification through hierarchical architectural decomposition.

- The sixth category is metrics-based techniques, which are not powerful enough on their own and need to be combined with other techniques [78]. Examples include the Software Architecture Evaluation on Model (SAEM), which uses the Goal/Question/Metric (GQM) paradigm to organize its metrics, and Metrics of Software Architecture Changes based on Structural Metrics (SACMM), which measures the distances between SA endpoints using graph kernel functions [79]. Lindvall et al. [60] introduced a late, metrics-based approach that compares the actual SA with the planned architecture.

- The seventh technique, mathematical-model-based methods, receives attention in research but is largely neglected by industry. Software Performance Engineering (SPE) and path- and state-based methods are used to improve performance and reliability. These modeling methods use mathematical equations to produce architectural statistics, such as the mean execution time of a component, and can be combined with simulation [80,81].
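
To make the idea of a mathematical-model-based statistic concrete, the following minimal sketch estimates a mean execution time with a simple path-based model: each execution path has a probability and visits a sequence of components, and the estimate is the probability-weighted sum of the path times. The component names, timings, and path probabilities are hypothetical values chosen for illustration; they do not come from the reviewed methods.

```python
from typing import Dict, List, Tuple

# Hypothetical mean execution time of each component, in milliseconds.
COMPONENT_TIME_MS: Dict[str, float] = {
    "ui": 5.0,
    "logic": 20.0,
    "cache": 2.0,
    "db": 50.0,
}

# Hypothetical execution paths: (probability of the path, components visited).
PATHS: List[Tuple[float, List[str]]] = [
    (0.7, ["ui", "logic", "cache"]),
    (0.3, ["ui", "logic", "db"]),
]


def expected_execution_time(
    paths: List[Tuple[float, List[str]]], times: Dict[str, float]
) -> float:
    """Return the probability-weighted sum of per-path execution times."""
    if abs(sum(p for p, _ in paths) - 1.0) > 1e-9:
        raise ValueError("path probabilities must sum to 1")
    return sum(p * sum(times[c] for c in comps) for p, comps in paths)


print(f"{expected_execution_time(PATHS, COMPONENT_TIME_MS):.1f} ms")
```

In practice the per-component times would come from measurement or simulation, which is one way such models can be combined with simulation-based evaluation.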

| Techniques | Methods | Quality Attribute | Remarks |
|---|---|---|---|
| Experience-based: Experts have encountered the software system’s requirements and domain. | EBAE (Empirically Based Architecture Evaluation) | Maintainability | The most common technique applied to review architecture in the industry [5], based on experts’ knowledge and documents. |
| | ABAS (Attribute-Based Architectural Styles) | Specific QAs | |
| | DCAR (Decision-Centric Architecture Reviews) | All | |
| Prototyping-based: Incrementally prototyping before developing a product to get to know the problem better. | Exploratory, Experimental, and Evolutionary | Performance and modifiability | These techniques have been applied to review architecture in the industry; an evolutionary prototype can possibly be developed into a final product. |
| Scenario-based: A specific quality attribute is evaluated by creating a scenario profile that gives a concrete description of the quality requirement. | SAAM (Software Architecture Analysis Method) | All | A scenario is a short description of stakeholders’ interaction with a system. Scenario-based methods are widely used and well known [49]. |
| | ATAM (Architecture Trade-off Analysis Method) | | |
| | Lightweight-ATAM (derived from ATAM) | | |
| | ARID (Active Reviews for Intermediate Designs) | | |
| | PBAR (Pattern-Based Architecture Reviews) | | |
| | ALMA (Architecture Level Modifiability Analysis) | Modifiability | |
| | PASA (Performance Assessment of Software Architecture) | Performance | |
| Checklist-based: A checklist includes a list of detailed questions to evaluate an architecture. | Software Review Architecture (SAR) | All | It depends on the domain, meaning that a new checklist must be created for each new evaluation. |
| | FERA (Framework of Evaluation of Reference Architectures) | | |
| Simulation-based: It provides answers to specific questions, such as the system’s behavior under load. | ARCADE/RARE (Architecture Recovery, Change, and Decay Evaluator/Reference Architecture Representation Environment) | Performance | There is a tendency to mix simulation and mathematical modeling to extend the evaluation framework. |
| | LQN (Layered Queuing Networks) | | |
| | SAM (Formal Systematic Software Architecture Specification and Analysis Methodology) | | |
| Metrics-based: It uses quality metrics to evaluate architecture and its representation. | SACMM (Metrics of Software Architecture Changes based on Structural Metrics) | Modifiability | It is not widespread in the industry. Other methods commonly use metrics to extend their functionality. |
| | SAEM (Software Architecture Evaluation on Model) | | |
| | Design pattern, conformance with design and violations. | Inter-module coupling violation. | |
| | TARA (Tiny Architectural Review Approach) | Functional and non-functional | |
| Math model-based: Operational quality requirements such as performance and reliability are evaluated through mathematical proofs and methods. | Path and state-based methods | Reliability | It is combined with scenario-based and simulation-based evaluation to obtain more accurate results. |
| | SPE (Software Performance Engineering) | Performance | |
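
The metrics-based rows above mention conformance with the design and inter-module coupling violations. A minimal sketch of such a check compares the dependencies allowed by the planned architecture with those found in the actual system and reports every dependency that was not planned. The module names and both dependency maps are hypothetical; a real evaluation would extract the actual dependencies from the code base with a tool of the team's choice.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical planned architecture: module -> modules it may depend on.
PLANNED: Dict[str, Set[str]] = {
    "ui": {"service"},
    "service": {"repository"},
    "repository": set(),
}

# Hypothetical dependencies recovered from the implemented system.
ACTUAL: Dict[str, Set[str]] = {
    "ui": {"service", "repository"},
    "service": {"repository"},
    "repository": set(),
}


def coupling_violations(
    planned: Dict[str, Set[str]], actual: Dict[str, Set[str]]
) -> List[Tuple[str, str]]:
    """Return (module, dependency) pairs present in actual but not planned."""
    violations = []
    for module, deps in actual.items():
        allowed = planned.get(module, set())
        violations.extend((module, dep) for dep in deps - allowed)
    return sorted(violations)


print(coupling_violations(PLANNED, ACTUAL))
```

The count of reported pairs can serve as a simple conformance metric, which fits the remark that metrics are usually combined with other evaluation methods.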

4.1. Categorizing of Software Architecture Evaluation

- C1: The main goal of the method.

- C2: The evaluation technique(s).

- C3: Covered QAs.

- C4: Stakeholders’ engagement.

- C5: How the applied techniques are arranged and performed to achieve the method goal.

- C6: How user experience interferes with the method.

- C7: Method validation.
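
One way to apply the criteria C1 to C7 consistently is to record each method as a structured profile. The sketch below is an assumption about how such a record could look, not a structure defined by the review; the class and field names are hypothetical, and the three example profiles only fill in the technique (C2) and covered QAs (C3) as listed for ALMA, PASA, and SACMM in the techniques table.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class MethodProfile:
    """Hypothetical record of one evaluation method against C1 to C7."""

    name: str
    goal: Optional[str] = None                            # C1
    techniques: List[str] = field(default_factory=list)   # C2
    covered_qas: List[str] = field(default_factory=list)  # C3
    stakeholder_engagement: Optional[str] = None          # C4
    process_steps: List[str] = field(default_factory=list)  # C5
    user_experience: Optional[str] = None                 # C6
    validation: Optional[str] = None                      # C7


PROFILES = [
    MethodProfile("ALMA", techniques=["Scenario-based"], covered_qas=["Modifiability"]),
    MethodProfile("PASA", techniques=["Scenario-based"], covered_qas=["Performance"]),
    MethodProfile("SACMM", techniques=["Metrics-based"], covered_qas=["Modifiability"]),
]


def methods_covering(profiles: List[MethodProfile], qa: str) -> List[str]:
    """Return the names of the methods whose C3 entry includes the given QA."""
    return [p.name for p in profiles if qa in p.covered_qas]


print(methods_covering(PROFILES, "Modifiability"))
```

Fields left as `None` or empty mark criteria that have not yet been assessed for a method, which keeps incomplete profiles distinguishable from negative findings.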

4.2. Identifying Factors for Lightweight Evaluation Method

4.2.1. Architecture Tradeoff Analysis Method

| ATAM Step (C5) | Stakeholders’ Engagement (C4) | How User Experience Interferes with the Method (C6) | Outputs |
|---|---|---|---|
| First Phase: Presentation | | | |
| 1. Briefing of the ATAM | Evaluation team and all stakeholders | The stakeholders will understand the ATAM process and related techniques. | – |
| 2. Introduction of the business drivers | Evaluation team and all stakeholders | The stakeholders will understand the business goals and the architectural drivers (the non-functional qualities that affect the SA). | – |
| 3. Introduction of SAs | Evaluation team and those who make significant project decisions | The evaluation team will review the targeted SA. | – |
| 4. Identifying the architectural approaches | Evaluation team and software architects | The team and architects will highlight architectural patterns, tactics, and SA design. | List of the candidate SA designs |
| 5. Producing the quality attribute tree | Evaluation team and those who make significant project decisions | The decision-makers will prioritize their decisions based on the quality attribute goals. | The first version of the prioritized quality scenarios and the quality attribute tree |
| 6. Analyzing the architectural approaches | Evaluation team and software architects | They will link the SA to the primary quality attribute goals to develop an initial analysis, resulting in non-risks, risks, and sensitivity/trade-off points. | The first version of non-risks, risks, risk themes, trade-off points, and sensitivity points |
| Second Phase: Testing | | | |
| 7. Brainstorming and prioritizing scenarios | Evaluation team and all stakeholders | It will use the involved stakeholders’ knowledge to expand the quality requirements. | The last version of prioritized quality scenarios |
| 8. Analyzing the architectural approaches | Evaluation team and software architects | The earlier results are revised. | The revised version of non-risks, risks, risk themes, trade-off points, and sensitivity points |
| Third Phase: Reporting | | | |
| 9. Conclusion | All stakeholders | It summarizes the evaluation’s achievements with respect to the business drivers introduced in the second step. | Evaluation report with final results |
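
Steps 5 and 7 above both end in a prioritized list of quality scenarios. The following sketch shows one possible way to hold the leaves of a quality attribute tree and order them. It assumes that each scenario is rated High/Medium/Low for importance and for difficulty, a rating scheme that is commonly described for ATAM utility trees but is not stated in the table; the scenarios themselves are hypothetical.

```python
from dataclasses import dataclass
from typing import List

# Assumed rating scale for importance and difficulty.
RANK = {"H": 3, "M": 2, "L": 1}


@dataclass(frozen=True)
class Scenario:
    quality_attribute: str
    description: str
    importance: str  # "H", "M", or "L"
    difficulty: str  # "H", "M", or "L"


# Hypothetical leaves of a quality attribute tree.
SCENARIOS: List[Scenario] = [
    Scenario("Performance", "Search responds within 2 s at peak load", "H", "M"),
    Scenario("Security", "Stolen credentials cannot be replayed", "H", "H"),
    Scenario("Modifiability", "A new payment provider is added in a week", "M", "L"),
]


def prioritize(scenarios: List[Scenario]) -> List[Scenario]:
    """Order scenarios by importance first, then by difficulty, highest first."""
    return sorted(
        scenarios,
        key=lambda s: (RANK[s.importance], RANK[s.difficulty]),
        reverse=True,
    )


for s in prioritize(SCENARIOS):
    print(f"({s.importance},{s.difficulty}) {s.quality_attribute}: {s.description}")
```

The scenarios at the top of the ordering are the natural candidates for the analysis in steps 6 and 8.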

4.2.2. Lightweight ATAM

4.2.3. ARID

- Phase 1: Meeting for preparation.

  - Step 1: Appointing reviewers.

  - Step 2: Presenting the SA designs.

  - Step 3: Preparing seed scenarios.

  - Step 4: Arranging the meeting.

- Phase 2: Review meeting.

  - Step 5: Presenting ARID.

  - Step 6: Presenting the designed SA.

  - Step 7: Brainstorming and prioritizing the scenarios.

  - Step 8: Conducting the SA evaluation.

  - Step 9: Results.

4.2.4. PBAR

- Elicitation of essential quality requirements from user stories with the assistance of developers.

- Establishing SA’s structure by a discussion with developers.

- Nominating architectural styles.

- Analyzing the effects of the nominated architectural styles on the qualities.

- Recognizing and discussing the final results.
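
The analysis step in the list above, relating nominated styles to the required qualities, can be sketched as a lookup against a catalogue of pattern effects. The catalogue below is an illustrative placeholder, not data from PBAR or from this review: the pattern names are well known, but the +1/-1 ratings and the function names are assumptions made for the example.

```python
from typing import Dict, List, Tuple

# Illustrative placeholder catalogue: +1 tends to help, -1 tends to hurt.
PATTERN_EFFECTS: Dict[str, Dict[str, int]] = {
    "Layers": {"maintainability": +1, "performance": -1},
    "Pipes and Filters": {"modifiability": +1, "performance": -1},
}


def review(patterns: List[str], important_qas: List[str]) -> List[Tuple[str, str, int]]:
    """List the known effect of each nominated pattern on each important QA."""
    findings = []
    for pattern in patterns:
        for qa in important_qas:
            effect = PATTERN_EFFECTS.get(pattern, {}).get(qa)
            if effect is not None:
                findings.append((pattern, qa, effect))
    return findings


def issues(findings: List[Tuple[str, str, int]]) -> List[Tuple[str, str]]:
    """Keep only the pattern/QA pairs where the pattern tends to hurt the QA."""
    return [(pattern, qa) for pattern, qa, effect in findings if effect < 0]


findings = review(["Layers"], ["performance", "maintainability"])
print(issues(findings))
```

The pairs returned by `issues` are the points a reviewer would raise for discussion in the final step.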

4.2.5. TARA

- The evaluator elicits essential requirements and system context.

- The evaluator designs the SA based on the previous step.

- The implementation techniques are assessed.

- The results of the previous step should be linked to the requirements. Expert judgment techniques are applied in this step.

- The evaluation’s results should be collected and related based on the predefined forms.

- Present findings and recommendations.

4.2.6. DCAR

- Preparation: The SA styles and related technologies are presented to management and customer representatives.

- DCAR Introduction.

- Management presentation: The management/customer representative will be exposed to a brief presentation to elicit the potential decision forces (the list of architectural decisions was produced in the first step).

- Architecture presentation: The lead architect will present potential decision forces and potential design decisions to all participants in a very brief and interactive session to revise the list of architectural choices.

- Forces and decision completion: The decision forces and design decisions will be verified so that all stakeholders use the same terminology.

- Decision prioritization: The decisions will be prioritized based on the participants’ votes.

- Decision documentation: The most important decisions will be documented in terms of the applied architectural solution, the addressed problem, the alternative solutions, and the forces that must be considered to evaluate the decision.

- Decision evaluation: Through discussion among all stakeholders, the potential risks and issues are identified, and the decisions are revised based on decision approval.

- Retrospective and reporting: The review team will scrutinize all the artifacts and produce the final report.
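
The prioritization and documentation steps above can be sketched as a vote tally followed by the creation of one record per selected decision. The record fields mirror the four items named in the documentation step (solution, problem, alternatives, forces); the class name, function names, and the sample votes are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class DecisionRecord:
    """Hypothetical template for documenting one architectural decision."""

    name: str
    problem: str = ""
    solution: str = ""
    alternatives: List[str] = field(default_factory=list)
    forces: List[str] = field(default_factory=list)


def prioritize(votes: List[str]) -> List[Tuple[str, int]]:
    """Rank decisions by vote count, breaking ties alphabetically."""
    counts = Counter(votes)
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))


def select_for_documentation(votes: List[str], limit: int) -> List[DecisionRecord]:
    """Create empty records for the top-voted decisions."""
    return [DecisionRecord(name) for name, _ in prioritize(votes)[:limit]]


# Hypothetical votes cast by the review participants.
VOTES = ["use message broker", "single database", "use message broker", "REST API"]
print(prioritize(VOTES))
```

The empty records returned by `select_for_documentation` are then filled in during the documentation step and discussed during decision evaluation.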

4.3. Factors for Lightweight Evaluation Method

4.3.1. Covering Early and Late Methods

4.3.2. Need of Agility

4.3.3. Ad Hoc Analysis

5. Targeted Quality Attributes: Performance and Security in Software Architecture Evaluation

5.1. Performance

- Predicting the system’s performance in the early stages of the software life cycle.

- Testing performance goals.

- Comparing the performance of architectural designs.

- Finding bottlenecks and possible timing problems.
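
As a small illustration of early performance prediction and bottleneck finding, the sketch below applies two standard results from operational analysis: the utilization law (utilization equals throughput times service demand) and the bound that throughput cannot exceed the reciprocal of the largest service demand. The resources and their service demands are hypothetical values, not measurements from any system discussed in this review.

```python
from typing import Dict

# Hypothetical service demand per request at each resource, in seconds.
SERVICE_DEMAND_S: Dict[str, float] = {"cpu": 0.010, "disk": 0.025, "network": 0.005}


def utilization(throughput_rps: float, demands: Dict[str, float]) -> Dict[str, float]:
    """Utilization law: U = X * D for every resource."""
    return {name: throughput_rps * demand for name, demand in demands.items()}


def bottleneck(demands: Dict[str, float]) -> str:
    """The resource with the largest service demand saturates first."""
    return max(demands, key=lambda name: demands[name])


def throughput_bound(demands: Dict[str, float]) -> float:
    """Upper bound on throughput in requests per second: 1 / max demand."""
    return 1.0 / max(demands.values())


print(bottleneck(SERVICE_DEMAND_S))
print(utilization(20.0, SERVICE_DEMAND_S))
```

Comparing the bound for two candidate designs gives a quick, coarse way to compare architectures before any detailed simulation is built.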

5.2. Security

- Omission flaws result from decisions that were never made (e.g., ignoring a security requirement or potential threats). Experience-based, prototype-based, or even scenario-based methods can help the architect detect this type of flaw. Still, such flaws mainly concern the requirement elicitation step, which is outside the scope of this research.

- Commission flaws refer to design decisions that were made and could lead to undesirable consequences. An example of such a flaw is “using weak cryptography for passwords” to achieve better performance while maintaining data confidentiality. DCAR is designed to address such problems.

- Realization flaws arise when the design decisions are correct (i.e., they satisfy the software’s security requirements) but their implementation suffers from coding mistakes. These mistakes can lead to many consequences, such as crashes or bypassed mechanisms. TARA and SA evaluation methods can mitigate these problems.
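
The commission-flaw example above, weak cryptography for passwords chosen for performance, can be made concrete with a short contrast. The sketch below sets a fast, unsalted single hash against a salted, iterated key-derivation function from the Python standard library. PBKDF2 and the iteration count are assumptions chosen for illustration, not recommendations made by this review; the function names are hypothetical.

```python
import hashlib
import hmac
import os
from typing import Tuple

ITERATIONS = 600_000  # assumed work factor; tune to the deployment's latency budget


def weak_hash(password: str) -> str:
    """Fast and unsalted: cheap to compute, and therefore cheap to brute-force."""
    return hashlib.sha256(password.encode("utf-8")).hexdigest()


def hash_password(password: str, iterations: int = ITERATIONS) -> Tuple[bytes, bytes]:
    """Derive a salted, deliberately slow hash with PBKDF2-HMAC-SHA256."""
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    return salt, digest


def verify_password(
    password: str, salt: bytes, expected: bytes, iterations: int = ITERATIONS
) -> bool:
    """Recompute the hash and compare it in constant time."""
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    return hmac.compare_digest(digest, expected)
```

The trade-off is visible in the work factor: raising `ITERATIONS` costs login latency, which is the kind of performance-versus-security decision that a decision-centric review is meant to surface and question.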

6. Identified Features and Factors

7. Achievement and Results

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Symbol | Meaning |
|---|---|
| AABI | Andolfi et al. |
| ABAS | Attribute-Based Architectural Styles |
| ABI | Aquilani et al. |
| ACP | Algebra of Communicating Processes |
| ADL | Architecture Description Language |
| AELB | Atomic Energy Licensing Board |
| ARCADE | Architecture Recovery, Change, and Decay Evaluator |
| ARID | Active Reviews for Intermediate Designs |
| ATAM | Architecture Tradeoff Analysis Method |
| BIM | Balsamo et al. |
| CCS | Calculus of Communicating Systems |
| CM | Cortellessa and Mirandola |
| CMMI | Capability Maturity Model Integration |
| CPN | Colored Petri Nets |
| CSP | Communicating Sequential Processes |
| DCAR | Decision-Centric Architecture Reviews |
| EBAE | Empirically Based Architecture Evaluation |
| FERA | Framework of Evaluation of Reference Architectures |
| GQM | Goal/Question/Metric Paradigm |
| GUI | Graphical User Interface |
| HCPN | Hierarchical Colored Petri Nets |
| HM | Heuristic Miner |
| LQN | Layered Queuing Networks |
| LTL | Linear Temporal Logic |
| LTS | Labeled Transition System |
| MVC | Model View Controller |
| OWL | Web Ontology Language |
| PAIS | Process Aware Information System |
| PASA | Performance Assessment of Software Architecture |
| PBAR | Pattern-Based Architecture Reviews |
| PM | Process Mining |
| QNM | Queuing Network Model |
| RARE | Reference Architecture Representation Environment |
| REST API | Representational State Transfer Application Programming Interface |
| SA | Software Architecture |
| SAAM | Software Architecture Analysis Method |
| SACMM | Metrics of Software Architecture Changes based on Structural Metrics |
| SADL | Simulation Architecture Description Language |
| SAM | Formal Systematic Software Architecture Specification and Analysis Methodology |
| SAR | Software Review Architecture |
| SOA | Service Oriented Architecture |
| SPE | Software Performance Engineering |
| TARA | Tiny Architectural Review Approach |
| UML | Unified Modeling Language |
| WS | Williams and Smith |
| SPL | Software Product Line |
| OO | Object Oriented |
| CBS | Component-Based Software |

References

- Feldgen, M.; Clua, O. Promoting design skills in distributed systems. In Proceedings of the 2012 Frontiers in Education Conference Proceedings, Seattle, WA, USA, 3–6 October 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 1–6.

- Heidmann, E.F.; von Kurnatowski, L.; Meinecke, A.; Schreiber, A. Visualization of Evolution of Component-Based Software Architectures in Virtual Reality. In Proceedings of the 2020 Working Conference on Software Visualization (VISSOFT), Adelaide, Australia, 28 September–2 October 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 12–21.

- Mekni, M.; Buddhavarapu, G.; Chinthapatla, S.; Gangula, M. Software Architectural Design in Agile Environments. J. Comput. Commun. 2017, 6, 171–189.

- Kil, B.-H.; Park, J.-S.; Ryu, M.-H.; Park, C.-Y.; Kim, Y.-S.; Kim, J.-D. Cloud-Based Software Architecture for Fully Automated Point-of-Care Molecular Diagnostic Device. Sensors 2021, 21, 6980.

- Lagsaiar, L.; Shahrour, I.; Aljer, A.; Soulhi, A. Modular Software Architecture for Local Smart Building Servers. Sensors 2021, 21, 5810.

- Ungurean, I.; Gaitan, N.C. A software architecture for the Industrial Internet of Things—A conceptual model. Sensors 2020, 20, 5603.

- Piao, Y.C.K.; Ezzati-Jivan, N.; Dagenais, M.R. Distributed Architecture for an Integrated Development Environment, Large Trace Analysis, and Visualization. Sensors 2021, 21, 5560.

- Dickerson, C.E.; Wilkinson, M.; Hunsicker, E.; Ji, S.; Li, M.; Bernard, Y.; Bleakley, G.; Denno, P. Architecture definition in complex system design using model theory. IEEE Syst. J. 2020, 15, 1847–1860.

- Yang, C.; Liang, P.; Avgeriou, P.; Eliasson, U.; Heldal, R.; Pelliccione, P. Architectural assumptions and their management in industry—An exploratory study. In Proceedings of the European Conference on Software Architecture, Canterbury, UK, 11–15 September 2017; Springer: Berlin/Heidelberg, Germany, 2017; pp. 191–207.

- Harrison, N.; Avgeriou, P. Pattern-based architecture reviews. IEEE Softw. 2010, 28, 66–71.

- Yang, T.; Jiang, Z.; Shang, Y.; Norouzi, M. Systematic review on next-generation web-based software architecture clustering models. Comput. Commun. 2020, 167, 63–74.

- Allian, A.P.; Sena, B.; Nakagawa, E.Y. Evaluating variability at the software architecture level: An overview. In Proceedings of the 34th ACM/SIGAPP Symposium on Applied Computing, Limassol, Cyprus, 8–12 April 2019; pp. 2354–2361.

- Sedaghatbaf, A.; Azgomi, M.A. SQME: A framework for modeling and evaluation of software architecture quality attributes. Softw. Syst. Model. 2019, 18, 2609–2632.

- Venters, C.C.; Capilla, R.; Betz, S.; Penzenstadler, B.; Crick, T.; Crouch, S.; Nakagawa, E.Y.; Becker, C.; Carrillo, C. Software sustainability: Research and practice from a software architecture viewpoint. J. Syst. Softw. 2018, 138, 174–188.

- van Heesch, U.; Eloranta, V.-P.; Avgeriou, P.; Koskimies, K.; Harrison, N. Decision-centric architecture reviews. IEEE Softw. 2013, 31, 69–76.

- Zalewski, A. Modelling and evaluation of software architectures. In Prace Naukowe Politechniki Warszawskiej. Elektronika; Warsaw University of Technology Publishing Office: Warsaw, Poland, 2013.

- Amirat, A.; Anthony, H.-K.; Oussalah, M.C. Object-oriented, component-based, agent oriented and service-oriented paradigms in software architectures. Softw. Archit. 2014, 1.

- Richards, M. Software Architecture Patterns; O’Reilly Media: Newton, MA, USA, 2015; Available online: https://www.oreilly.com/library/view/software-architecture-patterns/9781491971437/ (accessed on 1 December 2021).

- Shatnawi, A. Supporting Reuse by Reverse Engineering Software Architecture and Component from Object-Oriented Product Variants and APIs. Ph.D. Thesis, University of Montpellier, Montpellier, France, 2015.

- Brown, S. Is Software Architecture important. In Software Architecture for Developers; Leanpub: Victoria, BC, Canada, 2015.

- Link, D.; Behnamghader, P.; Moazeni, R.; Boehm, B. Recover and RELAX: Concern-oriented software architecture recovery for systems development and maintenance. In Proceedings of the 2019 IEEE/ACM International Conference on Software and System Processes (ICSSP), Montreal, QC, Canada, 25–26 May 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 64–73.

- Medvidovic, N.; Taylor, R.N. Software architecture: Foundations, theory, and practice. In Proceedings of the 2010 ACM/IEEE 32nd International Conference on Software Engineering, Cape Town, South Africa, 1–8 May 2010; IEEE: Piscataway, NJ, USA, 2010; Volume 2, pp. 471–472.

- Kazman, R.; Bass, L.; Klein, M.; Lattanze, T.; Northrop, L. A basis for analyzing software architecture analysis methods. Softw. Qual. J. 2005, 13, 329–355.

- Tibermacine, C.; Sadou, S.; That, M.T.T.; Dony, C. Software architecture constraint reuse-by-composition. Future Gener. Comput. Syst. 2016, 61, 37–53.

- Muccini, H. Exploring the Temporal Aspects of Software Architecture. In Proceedings of the ICSOFT-EA 2016, Lisbon, Portugal, 24–26 July 2016; p. 9.

- Aboutaleb, H.; Monsuez, B. Measuring complexity of system/software architecture using Higraph-based model. In Proceedings of the International Multiconference of Engineers and Computer Scientists, Hong Kong, China, 15–17 March 2017; Newswood Limited: Hong Kong, China, 2017; Volume 1, pp. 92–96.

- Garcés, L.; Oquendo, F.; Nakagawa, E.Y. Towards a taxonomy of software mediators for systems-of-systems. In Proceedings of the VII Brazilian Symposium on Software Components, Architectures, and Reuse, Sao Carlos, Brazil, 17–21 September 2018; pp. 53–62.

- Magableh, A.; Shukur, Z. Comprehensive Aspectual UML approach to support AspectJ. Sci. World J. 2014, 2014, 327808.

- Kanade, A. Event-Based Concurrency: Applications, Abstractions, and Analyses. In Advances in Computers; Elsevier: Amsterdam, The Netherlands, 2019; Volume 112, pp. 379–412.

- Al Rawashdeh, H.; Idris, S.; Zin, A.M. Using Model Checking Approach for Grading the Semantics of UML Models. In Proceedings of the International Conference Image Processing, Computers and Industrial Engineering (ICICIE’2014), Kuala Lumpur, Malaysia, 15–16 January 2014.

- Rodriguez-Priego, E.; García-Izquierdo, F.J.; Rubio, Á.L. Modeling issues: A survival guide for a non-expert modeler. In Proceedings of the International Conference on Model Driven Engineering Languages and Systems, Oslo, Norway, 3–8 October 2010; Springer: Berlin/Heidelberg, Germany, 2010; pp. 361–375.

- Medvidovic, N.; Taylor, R.N. A classification and comparison framework for software architecture description languages. IEEE Trans. Softw. Eng. 2000, 26, 70–93.

- Jensen, K.; Kristensen, L.M. Introduction to Modelling and Validation. In Coloured Petri Nets; Springer: Berlin/Heidelberg, Germany, 2009; pp. 1–12.

- Emadi, S.; Shams, F. Mapping Annotated Use Case and Sequence Diagrams to a Petri Net Notation for Performance Evaluation. In Proceedings of the Second International Conference on Computer and Electrical Engineering (ICCEE’09), Dubai, United Arab Emirates, 28–30 December 2009; pp. 67–81.

- Sahlabadi, M.; Muniyandi, R.C.; Shukor, Z.; Sahlabadi, A. Heterogeneous Hierarchical Coloured Petri Net Software/Hardware Architectural View of Embedded System based on System Behaviours. Procedia Technol. 2013, 11, 925–932.

- Sievi-Korte, O.; Richardson, I.; Beecham, S. Software architecture design in global software development: An empirical study. J. Syst. Softw. 2019, 158, 110400.

- Jaiswal, M. Software Architecture and Software Design. Int. Res. J. Eng. Technol. (IRJET) 2019, 6, 2452–2454.

- Hofmeister, C.; Kruchten, P.; Nord, R.L.; Obbink, H.; Ran, A.; America, P. Generalizing a model of software architecture design from five industrial approaches. In Proceedings of the 5th Working IEEE/IFIP Conference on Software Architecture (WICSA’05), Pittsburgh, PA, USA, 6–10 November 2005; IEEE: Piscataway, NJ, USA, 2005; pp. 77–88.

- Wu, X.-W.; Li, C.; Wang, X.; Yang, H.-J. A creative approach to reducing ambiguity in scenario-based software architecture analysis. Int. J. Autom. Comput. 2019, 16, 248–260.

- Iacob, M.-E. Architecture analysis. In Enterprise Architecture at Work; Springer: Berlin/Heidelberg, Germany, 2017; pp. 215–252.

- Al-Tarawneh, F.; Baharom, F.; Yahaya, J.H. Toward quality model for evaluating COTS software. Int. J. Adv. Comput. Technol. 2013, 5, 112.

- Khatchatoorian, A.G.; Jamzad, M. Architecture to improve the accuracy of automatic image annotation systems. IET Comput. Vis. 2020, 14, 214–223.

- Júnior, A.A.; Misra, S.; Soares, M.S. A systematic mapping study on software architectures description based on ISO/IEC/IEEE 42010: 2011. In Proceedings of the International Conference on Computational Science and Its Applications, Saint Petersburg, Russia, 1–4 July 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 17–30.

- Weinreich, R.; Miesbauer, C.; Buchgeher, G.; Kriechbaum, T. Extracting and facilitating architecture in service-oriented software systems. In Proceedings of the 2012 Joint Working IEEE/IFIP Conference on Software Architecture and European Conference on Software Architecture, Helsinki, Finland, 20–24 August 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 81–90.

- Cabac, L.; Haustermann, M.; Mosteller, D. Software development with Petri nets and agents: Approach, frameworks and tool set. Sci. Comput. Program. 2018, 157, 56–70.

- Cabac, L.; Mosteller, D.; Wester-Ebbinghaus, M. Modeling organizational structures and agent knowledge for Mulan applications. In Transactions on Petri Nets and Other Models of Concurrency IX; Springer: Berlin/Heidelberg, Germany, 2014; pp. 62–82.

- Siefke, L.; Sommer, V.; Wudka, B.; Thomas, C. Robotic Systems of Systems Based on a Decentralized Service-Oriented Architecture. Robotics 2020, 9, 78.

- Hasselbring, W. Software architecture: Past, present, future. In The Essence of Software Engineering; Springer: Cham, Switzerland, 2018; pp. 169–184.

- Breivold, H.P.; Crnkovic, I. A systematic review on architecting for software evolvability. In Proceedings of the 2010 21st Australian Software Engineering Conference, Auckland, New Zealand, 6–9 April 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 13–22.

- Barcelos, R.F.; Travassos, G.H. Evaluation Approaches for Software Architectural Documents: A Systematic Review. In Proceedings of the CIbSE 2006, La Plata, Argentina, 24–28 April 2006; pp. 433–446.

- Patidar, A.; Suman, U. A survey on software architecture evaluation methods. In Proceedings of the 2015 2nd International Conference on Computing for Sustainable Global Development (INDIACom), New Delhi, India, 11–13 March 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 967–972.

- Shanmugapriya, P.; Suresh, R. Software architecture evaluation methods—A survey. Int. J. Comput. Appl. 2012, 49, 19–26.

- Roy, B.; Graham, T.N. Methods for evaluating software architecture: A survey. Sch. Comput. TR 2008, 545, 82. [Google Scholar]

- Mattsson, M.; Grahn, H.; Mårtensson, F. Software architecture evaluation methods for performance, maintainability, testability, and portability. In Proceedings of the Second International Conference on the Quality of Software Architectures, Västerås, Sweden, 27–29 June 2006; Citeseer: Princeton, NJ, USA, 2006. [Google Scholar]

- Christensen, H.B.; Hansen, K.M. An empirical investigation of architectural prototyping. J. Syst. Softw. 2010, 83, 133–142. [Google Scholar] [CrossRef]

- Babar, M.A.; Gorton, I. Comparison of scenario-based software architecture evaluation methods. In Proceedings of the 11th Asia-Pacific Software Engineering Conference, Busan, Korea, 30 November–3 December 2004; IEEE: Piscataway, NJ, USA, 2004; pp. 600–607. [Google Scholar]

- Maranzano, J.F.; Rozsypal, S.A.; Zimmerman, G.H.; Warnken, G.W.; Wirth, P.E.; Weiss, D.M. Architecture reviews: Practice and experience. IEEE Softw. 2005, 22, 34–43. [Google Scholar] [CrossRef]

- Babar, M.A. Making software architecture and agile approaches work together: Foundations and approaches. In Agile Software Architecture; Elsevier: Amsterdam, The Netherlands, 2014; pp. 1–22. [Google Scholar]

- Sharma, T.; Suryanarayana, G.; Samarthyam, G. Refactoring for Software Design Smells: Managing Technical Debt; Morgan Kaufmann Publishers: Burlington, MA, USA, 2015. [Google Scholar]

- Lindvall, M.; Tvedt, R.T.; Costa, P. An empirically-based process for software architecture evaluation. Empir. Softw. Eng. 2003, 8, 83–108. [Google Scholar] [CrossRef]

- Santos, J.F.M.; Guessi, M.; Galster, M.; Feitosa, D.; Nakagawa, E.Y. A Checklist for Evaluation of Reference Architectures of Embedded Systems (S). SEKE 2013, 13, 1–4. [Google Scholar]

- De Oliveira, L.B.R. Architectural design of service-oriented robotic systems. Ph.D. Thesis, Universite de Bretagne-Sud, Lorient, France, 2015. [Google Scholar]

- Nakamura, T.; Basili, V.R. Metrics of software architecture changes based on structural distance. In Proceedings of the 11th IEEE International Software Metrics Symposium (METRICS’05), Como, Italy, 19–22 September 2005; IEEE: Piscataway, NJ, USA, 2005; p. 24. [Google Scholar]

- Knodel, J.; Naab, M. How to Evaluate Software Architectures: Tutorial on Practical Insights on Architecture Evaluation Projects with Industrial Customers. In Proceedings of the 2017 IEEE International Conference on Software Architecture Workshops (ICSAW), Gothenburg, Sweden, 5–7 April 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 183–184. [Google Scholar]

- Babar, M.A.; Gorton, I. Software architecture review: The state of practice. Computer 2009, 42, 26–32. [Google Scholar] [CrossRef]

- Tekinerdoğan, B.; Akşit, M. Classifying and evaluating architecture design methods. In Software Architectures and Component Technology; Springer: Berlin/Heidelberg, Germany, 2002; pp. 3–27. [Google Scholar]

- Klein, M.H.; Kazman, R.; Bass, L.; Carriere, J.; Barbacci, M.; Lipson, H. Attribute-based architecture styles. In Proceedings of the Working Conference on Software Architecture, San Antonio, TX, USA, 22–24 February 1999; Springer: Berlin/Heidelberg, Germany, 1999; pp. 225–243. [Google Scholar]

- Arvanitou, E.M.; Ampatzoglou, A.; Chatzigeorgiou, A.; Galster, M.; Avgeriou, P. A mapping study on design-time quality attributes and metrics. J. Syst. Softw. 2017, 127, 52–77.

- Fünfrocken, M.; Otte, A.; Vogt, J.; Wolniak, N.; Wieker, H. Assessment of ITS architectures. IET Intell. Transp. Syst. 2018, 12, 1096–1102.

- Babar, M.A.; Shen, H.; Biffl, S.; Winkler, D. An Empirical Study of the Effectiveness of Software Architecture Evaluation Meetings. IEEE Access 2019, 7, 79069–79084.

- Savold, R.; Dagher, N.; Frazier, P.; McCallam, D. Architecting cyber defense: A survey of the leading cyber reference architectures and frameworks. In Proceedings of the 2017 IEEE 4th International Conference on Cyber Security and Cloud Computing (CSCloud), New York, NY, USA, 26–28 June 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 127–138.

- de Jong, P.; van der Werf, J.M.E.; van Steenbergen, M.; Bex, F.; Brinkhuis, M. Evaluating design rationale in architecture. In Proceedings of the 2019 IEEE International Conference on Software Architecture Companion (ICSA-C), Hamburg, Germany, 25–26 March 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 145–152.

- Shahbazian, A.; Lee, Y.K.; Le, D.; Brun, Y.; Medvidovic, N. Recovering architectural design decisions. In Proceedings of the 2018 IEEE International Conference on Software Architecture (ICSA), Seattle, WA, USA, 30 April–4 May 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 95–9509.

- Krusche, S.; Bruegge, B. CSEPM-a continuous software engineering process metamodel. In Proceedings of the 2017 IEEE/ACM 3rd International Workshop on Rapid Continuous Software Engineering (RCoSE), Buenos Aires, Argentina, 22–23 May 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 2–8.

- Arcelli, D. Exploiting queuing networks to model and assess the performance of self-adaptive software systems: A survey. Procedia Comput. Sci. 2020, 170, 498–505.

- Palensky, P.; van der Meer, A.A.; Lopez, C.D.; Joseph, A.; Pan, K. Cosimulation of intelligent power systems: Fundamentals, software architecture, numerics, and coupling. IEEE Ind. Electron. Mag. 2017, 11, 34–50.

- Szmuc, W.; Szmuc, T. Towards Embedded Systems Formal Verification Translation from SysML into Petri Nets. In Proceedings of the 2018 25th International Conference “Mixed Design of Integrated Circuits and System” (MIXDES), Gdynia, Poland, 21–23 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 420–423.

- Coulin, T.; Detante, M.; Mouchère, W.; Petrillo, F. Software Architecture Metrics: A literature review. arXiv 2019, arXiv:1901.09050.

- Soares, M.A.C.; Parreiras, F.S. A literature review on question answering techniques, paradigms and systems. J. King Saud Univ.-Comput. Inf. Sci. 2020, 32, 635–646.

- Düllmann, T.F.; Heinrich, R.; van Hoorn, A.; Pitakrat, T.; Walter, J.; Willnecker, F. CASPA: A platform for comparability of architecture-based software performance engineering approaches. In Proceedings of the 2017 IEEE International Conference on Software Architecture Workshops (ICSAW), Gothenburg, Sweden, 5–7 April 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 294–297.

- Walter, J.; Stier, C.; Koziolek, H.; Kounev, S. An expandable extraction framework for architectural performance models. In Proceedings of the 8th ACM/SPEC on International Conference on Performance Engineering Companion, L’Aquila, Italy, 22–26 April 2017; pp. 165–170.

- Singh, H. Secure Software Architecture and Design: Security Evaluation for Hybrid Approach. INROADS Int. J. Jaipur Natl. Univ. 2019, 8, 82–88.

- Sujay, V.; Reddy, M.B. Advanced Architecture-Centric Software Maintenance. i-Manager’s J. Softw. Eng. 2017, 12, 1.

- Hassan, A.; Oussalah, M.C. Evolution Styles: Multi-View/Multi-Level Model for Software Architecture Evolution. JSW 2018, 13, 146–154.

- Dobrica, L.; Niemela, E. A survey on software architecture analysis methods. IEEE Trans. Softw. Eng. 2002, 28, 638–653.

- Plauth, M.; Feinbube, L.; Polze, A. A performance evaluation of lightweight approaches to virtualization. Cloud Comput. 2017, 2017, 14.

- Li, Z.; Zheng, J. Toward industry friendly software architecture evaluation. In Proceedings of the European Conference on Software Architecture, Montpellier, France, 1–5 July 2013; Springer: Berlin/Heidelberg, Germany, 2013; pp. 328–331.

- Abrahão, S.; Insfran, E. Evaluating software architecture evaluation methods: An internal replication. In Proceedings of the 21st International Conference on Evaluation and Assessment in Software Engineering, Karlskrona, Sweden, 15–16 June 2017; pp. 144–153.

- Zalewski, A.; Kijas, S. Beyond ATAM: Early architecture evaluation method for large-scale distributed systems. J. Syst. Softw. 2013, 86, 683–697.

- Alsaqaf, W.; Daneva, M.; Wieringa, R. Quality requirements in large-scale distributed agile projects—A systematic literature review. In Proceedings of the International Working Conference on Requirements Engineering: Foundation for Software Quality, Essen, Germany, 27 February–2 March 2017; Springer: Berlin/Heidelberg, Germany, 2017; pp. 219–234.

- Bass, L.; Nord, R.L. Understanding the context of architecture evaluation methods. In Proceedings of the 2012 Joint Working IEEE/IFIP Conference on Software Architecture and European Conference on Software Architecture, Helsinki, Finland, 20–24 August 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 277–281.

- Kasauli, R.; Knauss, E.; Horkoff, J.; Liebel, G.; Neto, F.G.d. Requirements engineering challenges and practices in large-scale agile system development. J. Syst. Softw. 2021, 172, 110851.

- Zhang, D.; Yu, F.R.; Yang, R. A machine learning approach for software-defined vehicular ad hoc networks with trust management. In Proceedings of the 2018 IEEE Global Communications Conference (GLOBECOM), Abu Dhabi, United Arab Emirates, 9–13 December 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–6.

- Cruz-Benito, J.; Garcia-Penalvo, F.J.; Theron, R. Analyzing the software architectures supporting HCI/HMI processes through a systematic review of the literature. Telemat. Inform. 2019, 38, 118–132.

- Poularakis, K.; Qin, Q.; Nahum, E.M.; Rio, M.; Tassiulas, L. Flexible SDN control in tactical ad hoc networks. Ad Hoc Netw. 2019, 85, 71–80.

- Li, X.-Y.; Liu, Y.; Lin, Y.-H.; Xiao, L.-H.; Zio, E.; Kang, R. A generalized petri net-based modeling framework for service reliability evaluation and management of cloud data centers. Reliab. Eng. Syst. Saf. 2021, 207, 107381.

- Varshosaz, M.; Beohar, H.; Mousavi, M.R. Basic behavioral models for software product lines: Revisited. Sci. Comput. Program. 2018, 168, 171–185.

- Ozkaya, M. Do the informal & formal software modeling notations satisfy practitioners for software architecture modeling? Inf. Softw. Technol. 2018, 95, 15–33.

- Bhat, M.; Shumaiev, K.; Hohenstein, U.; Biesdorf, A.; Matthes, F. The evolution of architectural decision making as a key focus area of software architecture research: A semi-systematic literature study. In Proceedings of the 2020 IEEE International Conference on Software Architecture (ICSA), Salvador, Brazil, 16–20 March 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 69–80.

- Seifermann, S.; Heinrich, R.; Reussner, R. Data-driven software architecture for analyzing confidentiality. In Proceedings of the 2019 IEEE International Conference on Software Architecture (ICSA), Hamburg, Germany, 25–29 March 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–10.

- Landauer, C.; Bellman, K.L. An architecture for self-awareness experiments. In Proceedings of the 2017 IEEE International Conference on Autonomic Computing (ICAC), Columbus, OH, USA, 17–21 July 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 255–262.

- Ferraiuolo, A.; Xu, R.; Zhang, D.; Myers, A.C.; Suh, G.E. Verification of a practical hardware security architecture through static information flow analysis. In Proceedings of the Twenty-Second International Conference on Architectural Support for Programming Languages and Operating Systems, Xi’an, China, 1–8 April 2017; pp. 555–568.

- van Engelenburg, S.; Janssen, M.; Klievink, B. Design of a software architecture supporting business-to-government information sharing to improve public safety and security. J. Intell. Inf. Syst. 2019, 52, 595–618.

- Bánáti, A.; Kail, E.; Karóczkai, K.; Kozlovszky, M. Authentication and authorization orchestrator for microservice-based software architectures. In Proceedings of the 2018 41st International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO), Opatija, Croatia, 21–25 May 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1180–1184.

- Tuma, K.; Scandariato, R.; Balliu, M. Flaws in flows: Unveiling design flaws via information flow analysis. In Proceedings of the 2019 IEEE International Conference on Software Architecture (ICSA), Hamburg, Germany, 25–29 March 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 191–200.

- Santos, J.C.; Tarrit, K.; Mirakhorli, M. A catalog of security architecture weaknesses. In Proceedings of the 2017 IEEE International Conference on Software Architecture Workshops (ICSAW), Gothenburg, Sweden, 5–7 April 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 220–223.

- Ouma, W.Z.; Pogacar, K.; Grotewold, E. Topological and statistical analyses of gene regulatory networks reveal unifying yet quantitatively different emergent properties. PLoS Comput. Biol. 2018, 14, e1006098.

- Dissanayake, N.; Jayatilaka, A.; Zahedi, M.; Babar, M.A. Software security patch management—A systematic literature review of challenges, approaches, tools and practices. Inf. Softw. Technol. 2021, 144, 106771.

- De Vita, F.; Bruneo, D.; Das, S.K. On the use of a full stack hardware/software infrastructure for sensor data fusion and fault prediction in industry 4.0. Pattern Recognit. Lett. 2020, 138, 30–37.

| Reference Study | Focus |
|---|---|
| Breivold et al. [49] | The search identified 58 studies that were cataloged as primary studies for this review after a multi-step selection process. The studies are classified into five main categories: techniques supporting quality considerations during SA design, architectural quality evaluation, economic valuation, architectural knowledge management, and modeling techniques. |
| Barcelos et al. [50] | A total of 11 evaluation methods based on measuring techniques are used, mainly focusing on simulation and metrics to analyze the architecture. |
| Suman et al. [51] | This paper presents a comparative analysis of eight scenario-based SA evaluation methods using a taxonomy. |
| Shanmugapriya et al. [52] | It compares 14 scenario-based evaluation methods and five of the latest SA evaluation methods. |
| Roy et al. [53] | The taxonomy is used to distinguish architectural evaluations based on the artifacts on which the methods are applied and two phases of the software life cycle. |
| Mattsson et al. [54] | The paper compares 11 evaluation methods from technical, quality attribute, and usage views. |
| Hansen et al. [55] | The research reports three studies of architectural prototyping in practice: ethnographic research, a focus group on architectural prototyping involving architects from four companies, and a survey of 20 practicing software architects and developers. |
| Gorton et al. [56] | This paper compares four well-known scenario-based SA evaluation methods. It uses an evaluation framework that considers each method for context, stakeholders, structure, and reliability. |
| Weiss et al. [57] | It conducted a survey based on architectural experience, which was organized into six categories. The architecture reviews found more than 1000 issues between 1989 and 2000. |
| Babar et al. [58] | It discusses agility in SA evaluation methods. |
| Suryanarayana et al. [59] | It states refactoring adaptation for architecture evaluation methods. |
| Lindvall et al. [60]; Santos et al. [61] | These reviewed papers lack stated knowledge that is needed in this paper or that requires more investigation. |
| Oliveira et al. [62] | It reviews agile SA evaluation. |
| Martensson et al. [63] | It reviews scenario-based SA evaluation based on industrial cases. |

Comparison Framework

| Component | Elements | Brief Explanation | Taxonomic Comparison |
|---|---|---|---|
| Context | SA definition | Does the method overtly consider a specific definition of SA? | NA |
| | Specific goal | What is the specific aim of the methods? | Need for Evolution: Corrective, Perfective, Adaptive, Preventive, All applicable; Means of Evaluation (Static): Transformation, Refactoring, Refinement, Restructuring, Pattern change; Means of Evaluation (Dynamic): Reconfiguration, Adaptation |
| | Quality attributes | How many and which QAs are covered by the method? | QAs |
| | Applicable stage | Which is the most suitable development phase for applying the method? | Early; Late |
| | Input and output | What are the required inputs and produced outputs? | In: coarse-grained, medium, or fine SA design, views; Out: risks, issues |
| | Application domain | What is/are the application domain(s) in which the method is often applied? | Development Paradigm: SPL, OO, SOA, CBS; Traditional: Embedded, Real-time, Process-aware, Distributed, Event-based, Concurrent, Mechatronic, Mobile, Robotic, Grid computing; Emerging: Cloud computing, Smart grid, Autonomic computing, Critical system, Ubiquitous |
| | Benefits | What are the advantages of the method to the stakeholders? | NA |
| Stakeholder | Involved Stakeholders | Which groups of stakeholders are needed to take part in the evaluation? | NA |
| | Process support | How much support is supplied by the method to perform various activities? | NA |
| | Socio-technical issues | How does the method handle non-technical (e.g., social, organizational) issues? | NA |
| | Required resources | How many man-days are needed? What is the size of the evaluation team? | NA |
| Contents | Method’s activities | What are the activities to be accomplished, and in which order, to reach the aims? | Means of Evolution: Static, Dynamic; Support activity: Change impact, Change history |
| | SA description | What form of SA description is needed (e.g., formal, informal, ADL, views, etc.)? | Type of Formalism; Description of Language; UML Specification; Description Aspect |
| | Evaluation approaches | What are the types of evaluation approaches applied by the method? | Experience-based, Prototyping-based, Scenario-based, Checklist-based, Simulation-based, Metrics-based, Math Model-based |
| | Tool support | Are there tools or experience repositories for supporting the method and its artifacts? | Need for Tool Support Analysis; Usage of Tool Support; Level of Automation |
| Reliability | Maturity of the method | What is the level of maturity (inception, refinement, development, or dormant)? | Overview or Survey, Formalism for constraint specification, Formalism for architectural analysis, Formalism for arch and evolution, Formalism for code generation |
| | Method’s validation | Has the method been validated? How has the method been validated? | Case study, Mathematical proof, Example application, Industrial validation |
| Time | Time of evaluation | Stage of evolution | Early, Middle, and Post-deployment |
| | | SDLC | Analysis/Design, Implementation, Integration/provisioning, Deployment, Evolution |
| | | Specification-time | Design-Time, Run-Time |

Complementary table

| Taxonomic Element | Values |
|---|---|
| Means of Evolution | Static: Transformation, Refactoring, Refinement, Restructuring, Pattern change; Dynamic: Reconfiguration, Adaptation |
| Support activity | Change impact: Consistency checking, Impact analysis, Propagation; Change history: Evolution analysis, Versioning |
| Type of Formalism | Modeling language: ADL, Programming languages, Domain-specific language, Type systems, Archface, Model-based; Formal models: Graph theory, Petri-net, Ontology, State machine, Constraint automata, CHAM; Process algebra: FSP, CSP, π-calculus; Logic (Constraint language): OCL, CCL, FOL, Grammars, Temporal logics, Rules, Description logic, Z, Alloy, Larch |
| Description of Language | Process algebra: Darwin, Wright, LEDA, PiLar; Standards: UML, Ex.-UML, SysML, AADL; Others: ACME, Aesop, C2, MetaH, Rapide, SADL, UniCon, Weaves, Koala, xADL, ADML, AO-ADL, xAcme |
| UML Specification | Static: Class, Component, Object; Dynamic: Activity, State, Sequence, Transition, Communication |
| Description Aspect | Structural, Behavioral, Semantic |
| Need for Tool Support Analysis | Architecture lifecycle: Business case, Creating architecture, Documenting, Analyzing, Evolving |
| Usage of Tool Support | Simulation, Dependence analysis, Model checking, Conformance testing, Interface consistency, Inspection, and Review-based |
| Level of Automation | Fully automated, Partially automated, Manual |

| Aspect | Category | Approaches |
|---|---|---|
| The goal of the evaluation method | Assessment against requirements | Lightweight-ATAM |
| | Architectural flaws detection | PBAR, TARA, ARID |
| SA description | Architectural decisions | DCAR |
| | Full SA description (views) | Lightweight-ATAM |
| | SA patterns, tactics | PBAR |
| | There is no specific form of SA designs or documents | ARID, PBAR, TARA |
| Time of evaluation | Early | DCAR |
| | Middle | ARID, PBAR, Lightweight-ATAM |
| | Post-deployment | TARA |
| Method’s validation based on case study number | 0 | Lightweight-ATAM |
| | 1 | TARA |
| | 3 to 6 | PBAR, DCAR |
| | 6+ | ATAM |
| Tool Support | Conformance testing | TARA |
| | Review-based | DCAR |
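The classification above can also be read as a lookup structure for shortlisting methods. The following is a minimal sketch under stated assumptions: the dictionary layout, the shortened key names (`goal`, `sa_description`, `time`), and the helper `candidate_methods` are hypothetical and introduced here only for illustration; the method names and categories are copied from the table, and only three of its aspects are encoded.

```python
# Illustrative encoding of three aspects of the classification table.
# The structure and names below are assumptions, not part of any reviewed method.
CLASSIFICATION = {
    "goal": {
        "Assessment against requirements": {"Lightweight-ATAM"},
        "Architectural flaws detection": {"PBAR", "TARA", "ARID"},
    },
    "sa_description": {
        "Architectural decisions": {"DCAR"},
        "Full SA description (views)": {"Lightweight-ATAM"},
        "SA patterns, tactics": {"PBAR"},
        "No specific form": {"ARID", "PBAR", "TARA"},
    },
    "time": {
        "Early": {"DCAR"},
        "Middle": {"ARID", "PBAR", "Lightweight-ATAM"},
        "Post-deployment": {"TARA"},
    },
}


def candidate_methods(**criteria):
    """Return the methods listed under every requested aspect/category pair."""
    result = None
    for aspect, category in criteria.items():
        methods = CLASSIFICATION[aspect][category]
        # Intersect so that a method must satisfy all criteria at once.
        result = set(methods) if result is None else result & methods
    return sorted(result or [])


# Methods aimed at flaw detection that are applied in the middle of development.
print(candidate_methods(goal="Architectural flaws detection", time="Middle"))
```

An empty result simply means that no method in the table is listed under all the requested categories; it says nothing about methods outside the table.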

| Features | Lightweight Factors |
|---|---|
| The excess of the SA Evaluation work | Lightweight: covering early and late methods; need for agility; ad hoc analysis |
| Scope of the SA Evaluation | Distributed and heterogeneous system |
| Style of evaluated SA | SOA, Component-based pipe and filter |
| SA presentation | Petri nets |
| Targeted quality attributes | Performance and security |
| Tools and technique | Should be investigated |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sahlabadi, M.; Muniyandi, R.C.; Shukur, Z.; Qamar, F. Lightweight Software Architecture Evaluation for Industry: A Comprehensive Review. Sensors 2022, 22, 1252. https://doi.org/10.3390/s22031252
