1. Introduction

Skin cancer is an invasive disease caused by the abnormal growth of melanocyte cells in the body, which tend to replicate and spread through lymph nodes to destroy surrounding tissues [1]. The damaged cells form a mole on the outer skin layer, which can be categorized as malignant or benign; melanoma is the malignant form and is considered dangerous and life-threatening. Skin cancer is a widespread and dangerous disease globally, with 300,000 newly diagnosed cases and over 1 million deaths each month worldwide in 2018 [2]. Melanoma is prevalent globally; it is the 19th most common cancer and has the highest mortality rate among skin cancers [2]. According to the statistics of the International Agency for Research on Cancer (IARC) [3], 19.3 million new cancer cases were diagnosed and about 10 million people died of cancer in 2020. Moreover, there were 100,350 new melanoma cases in the United States, and approximately 6850 people died of melanoma in 2020. According to the American Cancer Society [4], 106,110 new melanoma cases were expected to be diagnosed in 2021 (nearly 62,260 in men and 43,850 in women), and about 7180 melanoma patients were expected to die. Environmental and genetic factors such as fair complexion, pollution, family history, and sunburn may lead to the formation of skin cancer. Controlling the mortality rate of cancer is challenging; however, recent developments in image processing and artificial intelligence may help diagnose melanoma early, and early detection and prognosis can increase the survival rate. Moreover, computer-aided diagnostic (CAD) tools save time and effort compared with existing clinical approaches.

During diagnosis, an expert dermatologist performs a series of steps, starting with a visual inspection of the skin lesion with the naked eye, followed by dermoscopy, which uses a magnifying lens to view lesion patterns in detail, and finally a biopsy [5]. These conventional methods are time-consuming, expensive, and laborious. An accurate diagnosis depends heavily on the expert's skill set, so it is subjective and predictions vary between experts. Many experts analyze lesions based on the ABCDE [6] criteria: asymmetry, border irregularity, color, diameter above 6 mm, and evolution over time. However, applying these criteria requires extensive knowledge and proficiency that might not be available in all clinical settings. The accuracy of dermatologists in correctly identifying skin lesions has been found to be less than 80% [7]. Additionally, only a limited number of expert dermatologists are available globally.

To diagnose skin lesions at the earliest stage and address the complexities mentioned above, many research solutions using computer vision algorithms have been proposed in the literature [8]. The classification methods vary and include decision trees (DT) [9], support vector machines (SVM) [10], and artificial neural networks (ANN) [11]. A detailed review of these methods is given in [12]. Many machine learning methods have constraints on the data they can process; for example, they require high-contrast, noise-free, and clean images, which is not the case for skin cancer data. Moreover, skin lesion classification depends on color, texture, and structural features. Poor feature sets may lead to erroneous results, because skin lesions exhibit a high degree of inter-class homogeneity and intra-class heterogeneity [13]. The traditional approaches are parametric and require the training data to be normally distributed, whereas skin cancer data are uncontrolled. Each lesion has a different pattern; thus, these methods are inadequate. For these reasons, deep learning techniques are very effective for skin lesion classification and can assist dermatologists in diagnosing lesions with high accuracy. Several detailed surveys describe the application of deep learning in medicine [14].

There are mainly three types of skin cancer: basal, squamous, and melanocyte [

15]. The most commonly occurring type of cancer, basal cell carcinoma, grows very slowly and does not spread to other parts of the body. It tends to recur, so eradicating it from the body is important. Squamous cell carcinoma is another type of skin cancer that is more likely to spread to other body parts than basal cell carcinoma and penetrates deeper into the skin. Melanocytes, the cells involved in the last type, produce melanin when exposed to sunlight, giving the skin its brown or tan color. The melanin in these cells protects the skin from sunlight, but if it accumulates in the body, it forms cancerous moles, also known as melanoma cancer. Based on their tendency to cause minimal damage to surrounding tissues, basal and squamous cancers are considered benign, whereas melanocyte-based cancers are considered malignant and can be life-threatening. The most popular datasets employed in this work is from the International Skin Imaging Collaboration (ISIC) [

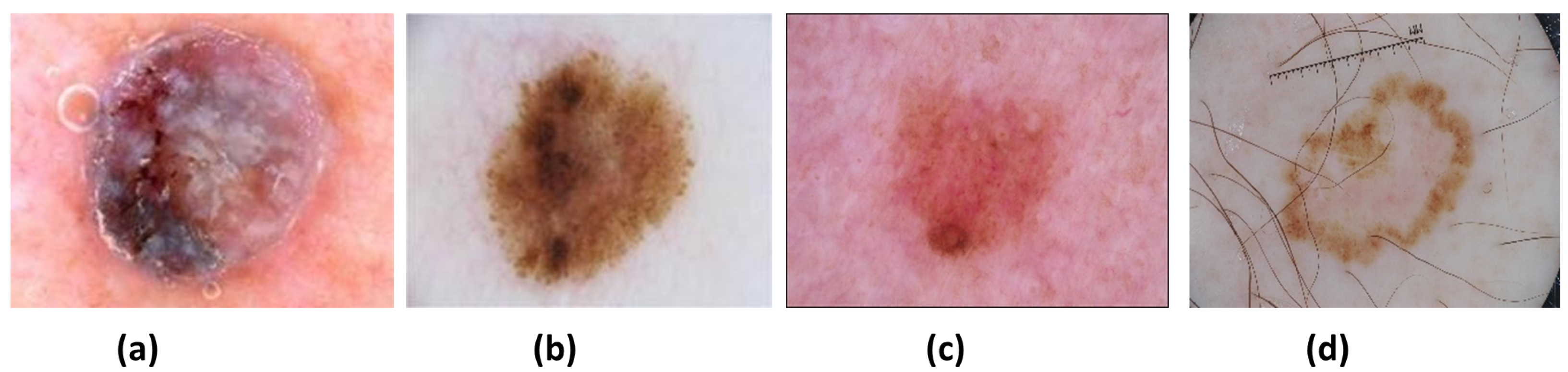

16], which contains different skin lesions. There are mainly four types of lesions (see

Figure 1) in the ISIC 2016, 2017, and 2020 data: (a) Nevus (NV), (b) Seborrheic keratosis (SK), (c) Benign (BEN) (d) Melanoma (MEL). NV cancer has distinct edges that primarily appear on the arms, legs, and trunk in pink, brown, and tan colors. Next is the SK, of which its non-cancerous appearance is waxy brown, black, or tan colors. Another non-cancerous lesion type is BEN, which does not invade surrounding tissues or spread into the body. Both NV and SK lesion types are considered BEN. Lastly, MEL is a large brown mole with dark speckles; it sometimes bleeds or changes color over time. It is a dangerous type of cancer that quickly spreads to other organs of the body. MEL is further divided into many types: acral, nodular, superficial, and lentigo. This research aims to identify and distinguish between MEL and BEN cancers.

Although deep learning approaches are highly effective at processing complex data, skin lesion classification remains challenging for several reasons:

- (a) Skin lesion classes in the given datasets are highly imbalanced. For example, NV contains more samples than SK and MEL in the ISIC 2017 set, and BEN samples are more common than MEL in the ISIC 2020 set.
- (b) Lesion images contain noisy artefacts such as hairlines, gel bubbles, ruler marks, and poor contrast.
- (c) Lesion types are difficult to distinguish due to high intra-class differences and inter-class similarities.

Moreover, designing classification approaches involves several challenges, such as (a) achieving a high prediction rate despite the class imbalance problem, (b) keeping the network architecture simple and lightweight, and (c) achieving a low inference time. Popular pre-trained deep learning networks cannot generally be applied directly to skin cancer problems, as they are trained on different datasets such as ImageNet. Hence, this research aims to develop, implement, and evaluate a highly efficient deep learning-based network for melanoma vs. benign classification. The contributions of this work are as follows:

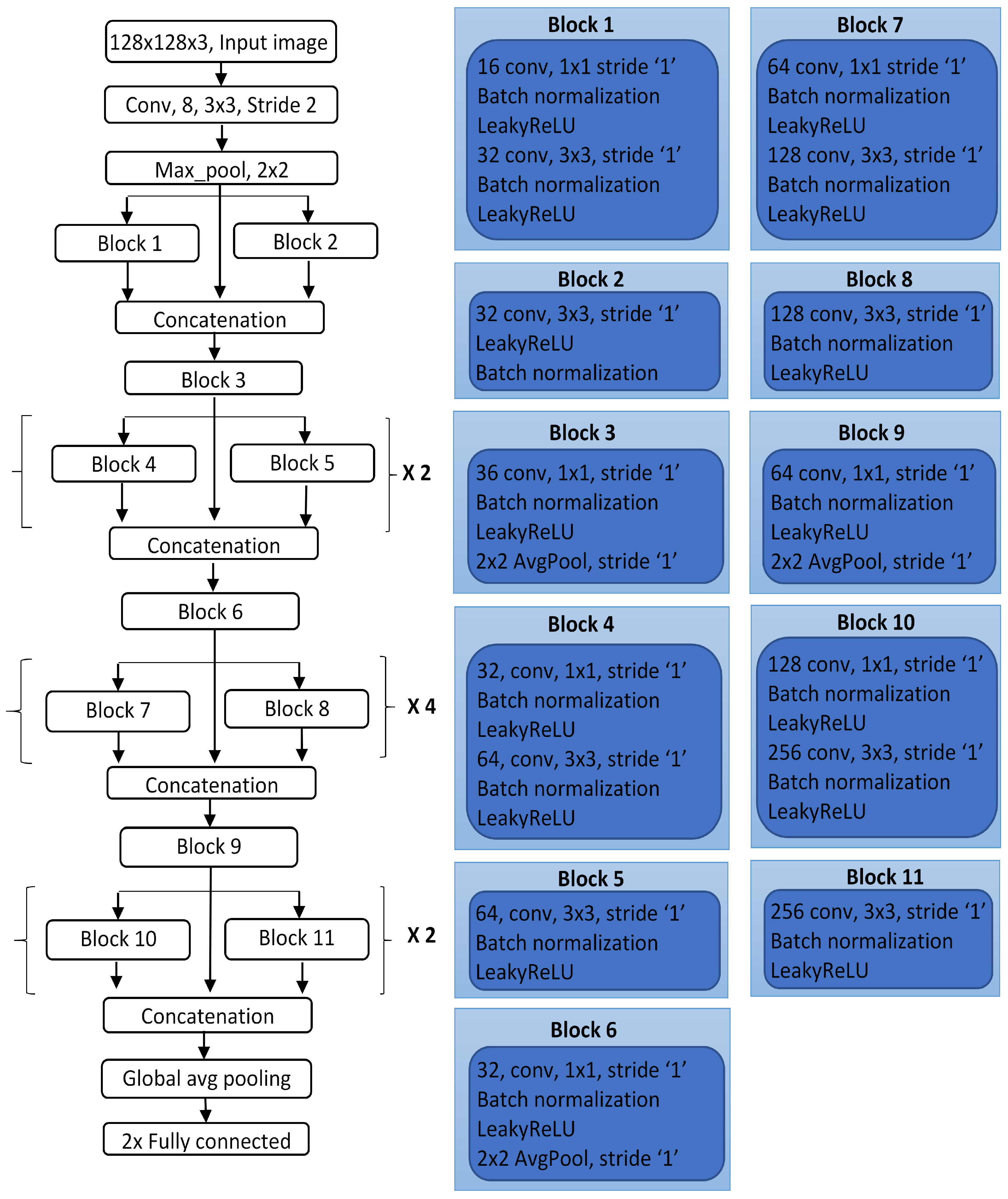

A new DCNN model design for classifying skin lesions on dermoscopic images as malignant or benign is proposed; it builds multiple connected blocks that allow rich feature information to flow directly through the network.

The depth of the network is optimized through several experimental trials on the validation set, repeating sub-blocks in a specific ratio to form a deep neural network.

Each block of the network uses different parameters, such as the number of kernels, filter size, and stride, to extract low- and high-level feature information from lesions.

The proposed model achieves higher performance than other state-of-the-art methods on the adopted ISIC datasets with fewer filters and learnable parameters; thus, it is a lightweight network for classifying large skin cancer datasets.
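The lightweight claim rests on counting learnable parameters. As a rough illustration only (this is not the LCNet architecture; the toy layer sizes below are hypothetical and batch-normalisation parameters are ignored), the sketch counts the weights and biases of standard 2D convolutions with square kernels and of a fully connected layer.

```python
def conv2d_params(in_channels: int, out_channels: int, kernel_size: int, bias: bool = True) -> int:
    """Learnable parameters of a standard 2D convolution with a square kernel."""
    weights = kernel_size * kernel_size * in_channels * out_channels
    return weights + (out_channels if bias else 0)


def dense_params(in_features: int, out_features: int) -> int:
    """Learnable parameters of a fully connected layer (weights + biases)."""
    return in_features * out_features + out_features


# Hypothetical toy stack (NOT the published LCNet configuration):
# RGB input -> 16 filters of 3x3 -> 32 filters of 3x3 -> global pooling -> 2 classes.
layers = [
    conv2d_params(3, 16, 3),    # 3*3*3*16 + 16 = 448
    conv2d_params(16, 32, 3),   # 3*3*16*32 + 32 = 4640
    dense_params(32, 2),        # 32*2 + 2 = 66
]
total = sum(layers)
print(f"total learnable parameters: {total}")  # 5154
```

Because a convolution's weight count grows with kernel area times input channels times output channels, reducing the number of filters in consecutive blocks shrinks the parameter count roughly quadratically in the channel width.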

2. Related Work

Skin cancer is prevalent around the world and causes a large number of deaths each year [17]. It is an aggressive disease; thus, early detection is vital to save lives. Clinical experts visually examine lesions based on the ABCDE [6] criteria, followed by histopathological tests. To automate the classification process, several artificial intelligence-based algorithms have been proposed that comprise standard phases such as preprocessing, feature extraction, segmentation, and classification. Many classification approaches [18,19] depended heavily on handcrafted feature sets, which generalize poorly to dermoscopic skin images because designing them requires a deep understanding of biological patterns. Lesions have a substantial visual resemblance and are highly correlated because of their similar colors, shapes, and sizes, leading to poor feature information [20]. Thus, handcrafted feature-based approaches are not well suited to skin classification problems. An advantage of deep learning techniques is that they can be applied directly to classification with little or no preprocessing. Deep networks are also more effective than shallow networks at computing the detailed features needed for accurate lesion classification. The first breakthrough in applying DCNNs to skin cancer came from Esteva et al. [5], who used a pre-trained Inceptionv3 model on 129,450 clinical images covering 2032 different diseases. Their network was compared against 21 board-certified dermatologists on two binary classification tasks, keratinocyte carcinomas versus benign seborrheic keratoses and malignant melanomas versus benign nevi, and performed on par with the experts. Another work by Y. Li et al. [21] proposed a lesion index calculation unit (LICU) that computes heat maps to refine the coarse classification outcomes of an FCRN model. This unit measures the contribution of each pixel of the segmented map towards classification. The framework was evaluated on the ISIC 2017 dataset. J. Zhang et al. [22] proposed an attention residual learning CNN (ARL-CNN) for skin classification, consisting of multiple ARL blocks followed by global average pooling and classification layers.

The network exploits the intrinsic self-attention ability of a deep convolutional neural network (DCNN): each ARL block uses a residual learning mechanism and generates attention maps at lower layers to improve classification performance. Iqbal et al. [23] designed a DCNN model for multi-class skin lesion classification on the ISIC 2017-2019 datasets. Their model uses 68 convolutional layers arranged in multiple connected blocks that pass feature information from the top to the bottom of the network. Jinnai et al. [24] employed a faster region-based CNN (FRCNN) to classify melanoma from 5846 clinical images rather than dermoscopic images. They manually created bounding boxes around lesion regions to prepare the training dataset. The FRCNN achieved higher accuracy than ten board-certified dermatologists and ten dermatology trainees.

Barata et al. [18] investigated improving model performance in terms of the area under the curve (AUC), accuracy, and other metrics by creating ensemble CNN models. The outputs of the classification layers of four different networks, namely GoogleNet, AlexNet, VGG, and ResNet, were fused to form an ensemble model for three-class classification. Jordan Yap et al. [25] proposed a method that combines several image modalities, together with patient metadata, to improve the classification results. The ResNet50 network was applied separately to dermoscopic and macroscopic images, and their features were fused to perform the final classification. Their multimodal classifier achieved an AUC of 0.866, outperforming the baseline model that used only macroscopic images. Similarly, Gessert et al. [26] presented an ensemble of EfficientNets, SENet, and ResNeXt WSL models for multi-class classification on the ISIC 2019 dataset. They applied a cropping strategy to handle the different input resolutions of the models, and a loss-balancing approach to tackle the dataset imbalance. Srinivasu et al. [27] presented a DCNN based on MobileNetV2 and long short-term memory (LSTM) for lesion classification on the HAM10000 dataset. Compared with other CNN models, MobileNetV2 offers a low computational cost, a small network size, and compatibility with mobile devices. The LSTM network retained timestamp information about the features computed by MobileNetV2, and combining the two raised the system accuracy to 85.34%.

Wang et al. [28] proposed a self-supervised topology clustering network (STCN) that classifies unlabelled data without requiring any prior class information. A clustering algorithm organizes the unlabelled data into clusters by maximizing modularity, and the STCN model considers features learned under different variations such as illumination, viewpoint, and background. Some studies [29,30] applied pre-trained networks such as Xception, AlexNet, VGGNet, and ResNet via transfer learning and compared their performance. The fully connected layers of these existing networks were replaced to adapt them to skin lesion classification, and the hyperparameters had to be fine-tuned to achieve the best performance. The systematic reviews in [14,31] provide detailed insights into deep learning approaches for skin cancer classification. The survey in [32] discussed possible solutions for automatic skin cancer detection systems, considered various challenges of the skin cancer problem, and provided research directions.

4. Results and Discussion

Several experiments for skin lesion classification were conducted on different dermoscopic lesion images to evaluate the performance of the LCNet. It was tested on three different sets, the ISIC 2016, ISIC 2017, ISIC 2020, and

for two classes, MEL and BEN. Other state-of-the-art methods depend heavily on noise-removal preprocessing steps and on region of interest (ROI)-specific feature computation to achieve a high classification rate. In contrast, the LCNet does not require extensive preprocessing or lesion feature extraction; it is trained end-to-end on dermoscopic images to distinguish melanoma from other lesion types. The hyperparameters (see Table 4) were finalized after several experiments by monitoring the network's highest performance on the validation data. The network was trained on a GeForce GTX 1080 Ti with a compute capability of '7.5'. The inference time was 3.77 s on the 344 ISIC 2016 test images, 15.7 s on the 835 ISIC 2017 test images, and 61.6 s on the 2014 ISIC 2020 test images. Several classification performance metrics, namely precision (PRE), recall (REC), accuracy (ACC), specificity (SPE), and F1-Score [43,44], as well as the number of learnable parameters, were used to evaluate the model. These metrics are calculated as follows:

$$\mathrm{ACC} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\mathrm{PRE} = \frac{TP}{TP + FP}$$

$$\mathrm{REC} = \frac{TP}{TP + FN}$$

$$\mathrm{SPE} = \frac{TN}{TN + FP}$$

$$\text{F1-Score} = 2 \times \frac{\mathrm{PRE} \times \mathrm{REC}}{\mathrm{PRE} + \mathrm{REC}}$$

In a confusion matrix, TP, FP, TN, and FN represent true positives, false positives, true negatives, and false negatives, respectively. TP is the number of lesion samples correctly classified as melanoma, TN is the number correctly classified as benign, FP is the number of benign samples incorrectly classified as melanoma, and FN is the number of melanoma samples incorrectly classified as benign. ACC is the ratio of correctly classified samples to the total number of predictions. PRE and REC are key metrics for evaluating the model's performance: PRE measures the fraction of samples predicted as positive that are truly positive, whereas REC measures the fraction of truly positive samples that are correctly identified. SPE measures the model's ability to identify true negatives, i.e., the fraction of negative samples correctly classified as negative. Lastly, the F1-Score is the harmonic mean of PRE and REC and therefore accounts for both FP and FN; a value close to 1 indicates both high PRE and high REC.
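These definitions can be checked with a small helper that derives all five metrics from the four confusion-matrix counts, treating MEL as the positive class. This is a plain-Python sketch; the class and function names are illustrative, and the counts in the example are made up rather than taken from Table 6.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    tp: int  # melanoma correctly predicted as melanoma
    fp: int  # benign wrongly predicted as melanoma
    tn: int  # benign correctly predicted as benign
    fn: int  # melanoma wrongly predicted as benign


def binary_metrics(c: ConfusionCounts) -> dict:
    """ACC, PRE, REC, SPE and F1-Score for MEL (positive) vs. BEN (negative)."""
    total = c.tp + c.fp + c.tn + c.fn
    acc = (c.tp + c.tn) / total
    pre = c.tp / (c.tp + c.fp) if (c.tp + c.fp) else 0.0
    rec = c.tp / (c.tp + c.fn) if (c.tp + c.fn) else 0.0
    spe = c.tn / (c.tn + c.fp) if (c.tn + c.fp) else 0.0
    f1 = 2 * pre * rec / (pre + rec) if (pre + rec) else 0.0  # harmonic mean
    return {"ACC": acc, "PRE": pre, "REC": rec, "SPE": spe, "F1": f1}


# Illustrative counts only (not the paper's results).
m = binary_metrics(ConfusionCounts(tp=40, fp=10, tn=45, fn=5))
print({k: round(v, 4) for k, v in m.items()})
```

The zero-division guards return 0.0 when a ratio is undefined (for example, no positive predictions at all), which is one common convention but not the only one.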

To prevent bias caused by the scarcity of lesion samples in some classes, data augmentation and oversampling were applied. The impact of data oversampling on the network's performance is shown in Table 5: the metric values increase on all datasets, with the most drastic change on ISIC 2017, because the original ISIC 2017 dataset was highly imbalanced and gave poor results. After data extension, the LCNet was first trained on the augmented training set using the fine-tuned hyperparameters, and the training progress was monitored on the validation sets of the ISIC datasets. The validation set contains a different proportion of lesion samples from each class, and the hyperparameters were tuned on it to improve performance; the final values were selected as those giving the lowest validation loss and highest validation accuracy. Finally, the trained model with fine-tuned parameters was evaluated on the test set, which was unseen by the network.
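The exact oversampling procedure is not detailed in this section, so the following is only a sketch of one common option, plain random oversampling, in which minority-class images are duplicated at random until every class matches the size of the largest one. The function name, seed, and file names are hypothetical.

```python
import random
from collections import Counter


def random_oversample(samples, labels, seed=0):
    """Duplicate randomly chosen samples of the smaller classes until every
    class has as many samples as the largest class."""
    rng = random.Random(seed)
    by_class = {}
    for x, y in zip(samples, labels):
        by_class.setdefault(y, []).append(x)
    target = max(len(xs) for xs in by_class.values())
    out_x, out_y = [], []
    for y, xs in by_class.items():
        extra = [rng.choice(xs) for _ in range(target - len(xs))]  # sampled with replacement
        for x in xs + extra:
            out_x.append(x)
            out_y.append(y)
    return out_x, out_y


# Hypothetical imbalanced training split: 8 benign images vs. 2 melanoma images.
files = [f"ben_{i}.jpg" for i in range(8)] + ["mel_0.jpg", "mel_1.jpg"]
labels = ["BEN"] * 8 + ["MEL"] * 2
xs, ys = random_oversample(files, labels)
print(Counter(ys))  # Counter({'BEN': 8, 'MEL': 8})
```

Oversampling of this kind should be applied to the training split only; duplicating images into the validation or test sets would leak information and inflate the reported metrics. In practice, the duplicated images are usually also passed through random augmentation so that the copies are not pixel-identical.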

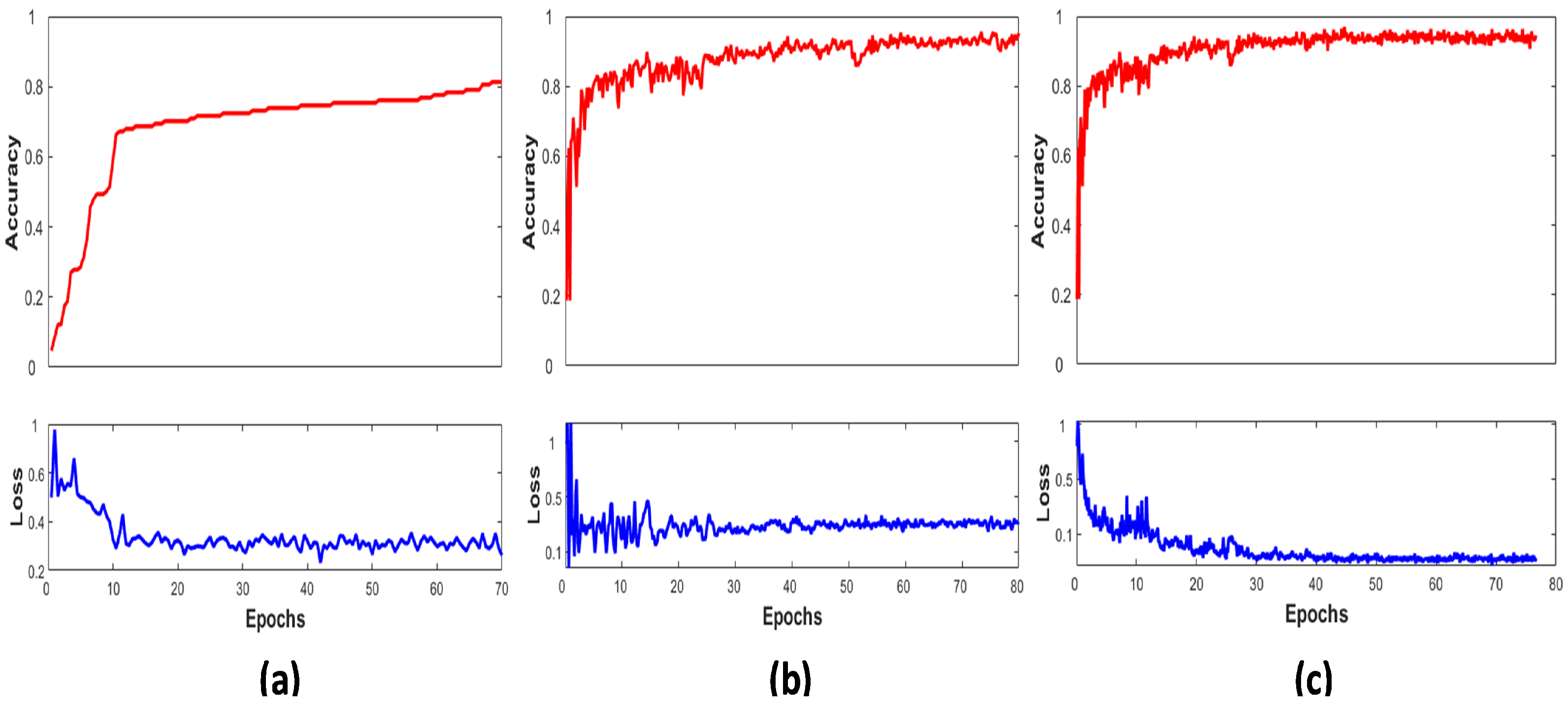

Figure 4 shows the accuracy of the LCNet on the ISIC 2016, 2017, and 2020 validation sets as a function of the number of epochs. The network's accuracy increases progressively as training proceeds. Early stopping was used to halt training once the validation accuracy no longer improved and the corresponding loss no longer decreased; with this criterion, the LCNet converged after 80 epochs. The highest performance is observed on the ISIC 2020 dataset, owing to the large number of samples it contains.
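The stopping rule described above can be expressed as a patience counter that resets whenever either the validation accuracy improves or the validation loss decreases. The patience value and the toy curves below are assumptions for illustration; the patience actually used for LCNet is not stated here.

```python
def early_stopping_epoch(val_acc, val_loss, patience=3):
    """Return the 1-based epoch at which training stops under a rule that
    halts once validation accuracy has not improved AND validation loss has
    not decreased for `patience` consecutive epochs. If the rule never
    fires, training runs to the last epoch and len(val_acc) is returned."""
    best_acc, best_loss = float("-inf"), float("inf")
    stale_epochs = 0  # consecutive epochs with no improvement in either metric
    for epoch, (acc, loss) in enumerate(zip(val_acc, val_loss), start=1):
        improved = False
        if acc > best_acc:
            best_acc, improved = acc, True
        if loss < best_loss:
            best_loss, improved = loss, True
        stale_epochs = 0 if improved else stale_epochs + 1
        if stale_epochs >= patience:
            return epoch
    return len(val_acc)


# Toy validation curves (hypothetical, not LCNet's training log).
acc = [0.60, 0.70, 0.75, 0.78, 0.78, 0.77, 0.78, 0.76]
loss = [0.90, 0.70, 0.60, 0.55, 0.56, 0.57, 0.56, 0.58]
print(early_stopping_epoch(acc, loss, patience=3))  # 7
```

Built-in early-stopping callbacks in common frameworks typically monitor a single quantity, so a two-metric rule like this one would usually need a small custom callback.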

Similarly,

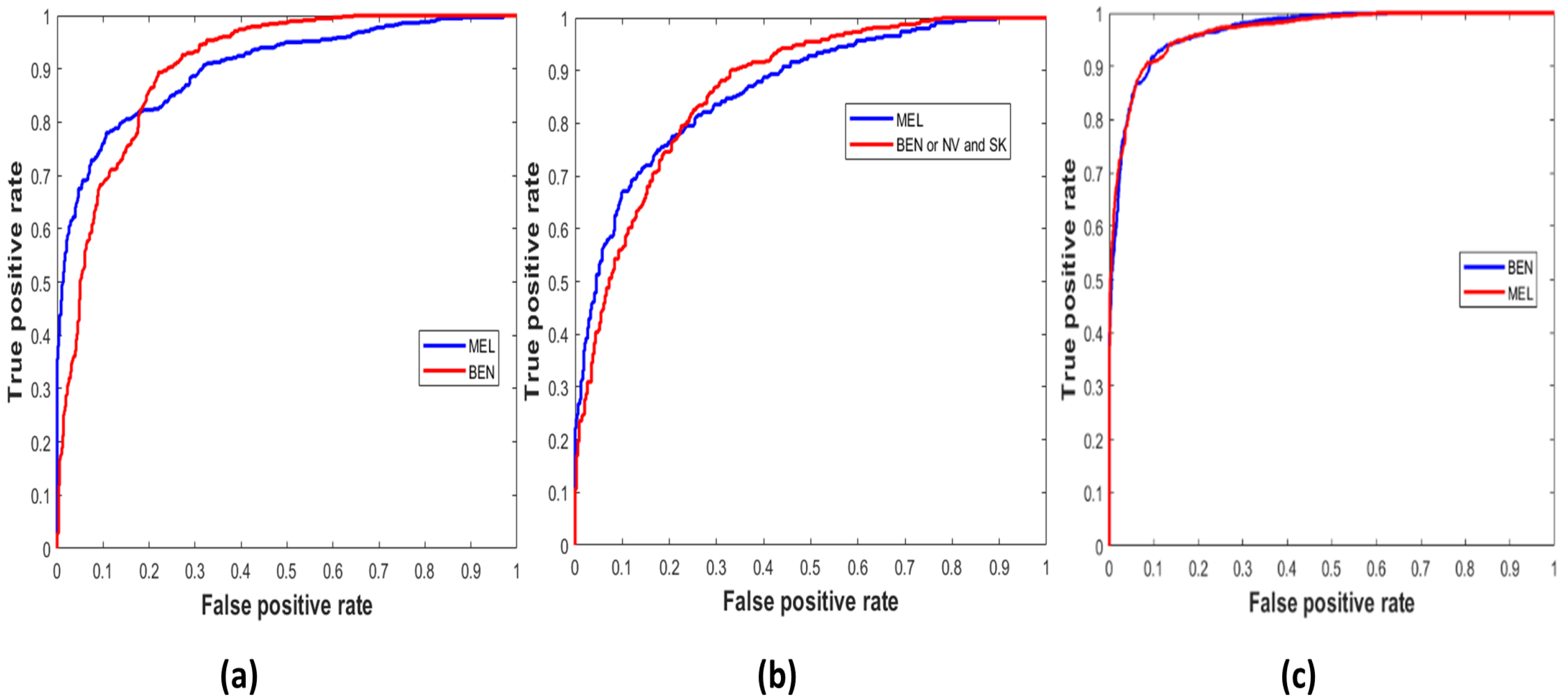

Figure 5 demonstrates the true positive vs. false positive curves [

45], illustrating the trade-off between sensitivity and specificity achieved by the model with the area under the curve (AUC) as ‘0.9033’, ‘0.8658’, and ’0.9671’ on the ISIC 2016, 2017, and 2020 test sets. In

Table 6, the performance of the LCNet is illustrated on all datasets based on the classification metrics explained above. The LCNet model obtained ACC, PRE, and REC of 81.41%, 81.88%, and 81.30%, respectively, for the binary classification of MEL vs. BEN on the ISIC 2016 dataset. At the same time, the values for these metrics on the ISIC 2017 test set for the classification of classes, i.e., MEL vs. NV and SK, were 88.23%, 78.55%, and 87.86%, respectively. Furthermore, on the ISIC 2020 and

sets, the ACC, PRE, and REC achieved by the model were 90.42%, 90.48%, and 90.39%, and 76.0%, 67.8%, and 75.3%, respectively. Moreover, the LCNet surpassed other state-of-the-art approaches for skin lesion classification, as shown in Table 7, which compares the methods on ACC, PRE, REC, SPE, F1-Score, and learnable parameters, with the best results highlighted in bold. Only the ACC and SPE reported in [46] on ISIC 2017 were higher than those of the proposed model, whereas the PRE of the LCNet is the highest among all the compared studies. In addition, the LCNet has fewer learnable parameters, making it a lightweight and less complex network.
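The AUC values above summarise each ROC curve in a single number. One equivalent way to compute AUC, shown here as a sketch, is the normalised Mann-Whitney statistic: the probability that a randomly chosen melanoma image receives a higher score than a randomly chosen benign image, with ties counted as one half. The section does not say which tool produced the reported AUCs; the function name and toy scores are hypothetical.

```python
def roc_auc(labels, scores):
    """ROC AUC for binary labels (1 = MEL, 0 = BEN) and classifier scores,
    computed as the fraction of (positive, negative) pairs in which the
    positive sample is scored higher, counting ties as one half."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical melanoma probabilities for six test images.
y_true = [1, 1, 0, 0, 1, 0]
y_score = [0.9, 0.8, 0.7, 0.3, 0.4, 0.2]
print(round(roc_auc(y_true, y_score), 4))  # 0.8889
```

The nested loop costs O(P x N) comparisons for P positives and N negatives, which is manageable for test sets of the sizes reported here; a sort-based rank computation scales better for much larger sets.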

Table 8 reports the performance of baseline CNN models such as ResNet18, Inceptionv3, and AlexNet. These popular networks were fine-tuned on the adopted datasets and compared with the LCNet model, using the same hyperparameter settings as for the proposed model (see Table 4). As Table 8 shows, the proposed model outperformed these networks in most cases. The metrics ACC, PRE, and REC represent the prediction scores of the models on the ISIC 2016, 2017, and 2020 test sets and give insight into correctly classified and misclassified samples. On the ISIC 2016 dataset, the proposed network achieved 0.5% higher ACC than ResNet18, 1.5% higher than Inceptionv3, and 16% higher than AlexNet. Similarly, on the ISIC 2017 dataset, LCNet gained 13.2% higher ACC than ResNet18, 10.8% higher than Inceptionv3, and 14.2% higher than AlexNet. Lastly, on the ISIC 2020 dataset, the ACC of ResNet18 was slightly higher than that of LCNet, by 0.4%, whereas LCNet outperformed Inceptionv3 and AlexNet by a larger margin. Overall, the proposed LCNet achieved the highest accuracy on the ISIC 2016 and 2017 datasets and remained competitive with the best baseline on ISIC 2020.

The experimental outcomes show that the proposed model performs well on binary skin cancer classification tasks. PRE, REC, and ACC are relatively high on the ISIC 2020 dataset, whereas these metrics are lower on ISIC 2017 and 2016 because of the smaller number of samples in each class. This suggests that the deep learning-based LCNet model requires a large dataset for efficient training. The primary advantages of the proposed model are its very low inference time on the test sets and its small number of learnable parameters.
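The reported inference times translate into per-image latencies as computed below, assuming each total covers only the forward passes over the listed test images (batch size and whether data loading is included are not stated). This gives roughly 11 ms, 19 ms, and 31 ms per image, within the 0.1 s per image mentioned in the Conclusions.

```python
# Inference times reported in this section: (number of test images, total seconds).
reported = {
    "ISIC 2016": (344, 3.77),
    "ISIC 2017": (835, 15.7),
    "ISIC 2020": (2014, 61.6),
}

# Per-image latency in seconds, assuming the totals cover only inference.
per_image = {name: secs / n for name, (n, secs) in reported.items()}
for name, t in per_image.items():
    print(f"{name}: {t * 1000:.1f} ms per image, {1 / t:.0f} images/s")
```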

5. Conclusions

Skin cancer is a global health problem, and automatic melanoma detection systems play a major role in its early diagnosis. The proposed LCNet model, a deep convolutional neural network for skin cancer classification, was trained end-to-end on dermoscopic skin cancer images. Three different datasets from the ISIC challenge were used for the experiments, and an additional set was used for testing. Establishing an automatic framework to classify different lesions is challenging due to high inter-class similarities and intra-class variations. With a few preprocessing steps, such as image resizing, oversampling, and augmentation, an accurate model was designed for MEL lesion classification. The experimental results showed that the proposed model achieved higher performance than the selected studies and pre-trained classification models. Overall, LCNet achieved ACC, PRE, and REC of 81.41%, 81.88%, and 81.30% on ISIC 2016; 88.23%, 78.55%, and 87.86% on ISIC 2017; and 90.42%, 90.48%, and 90.39% on ISIC 2020. The proposed model predicts the correct lesion category reliably, with a high true positive rate, which supports the use of AI as a diagnostic tool for medical problems. It was found that an image size of with three channels and an inference time of 0.1 s per image yield a high processing speed. The results also indicate that the proposed method performs better on large skin cancer datasets, such as ISIC 2020, than on ISIC 2016 and 2017. The designed DCNN model can be further extended to multi-class classification to predict other types of skin cancer.