A Pyramid Semi-Autoregressive Transformer with Rich Semantics for Sign Language Production

Abstract

1. Introduction

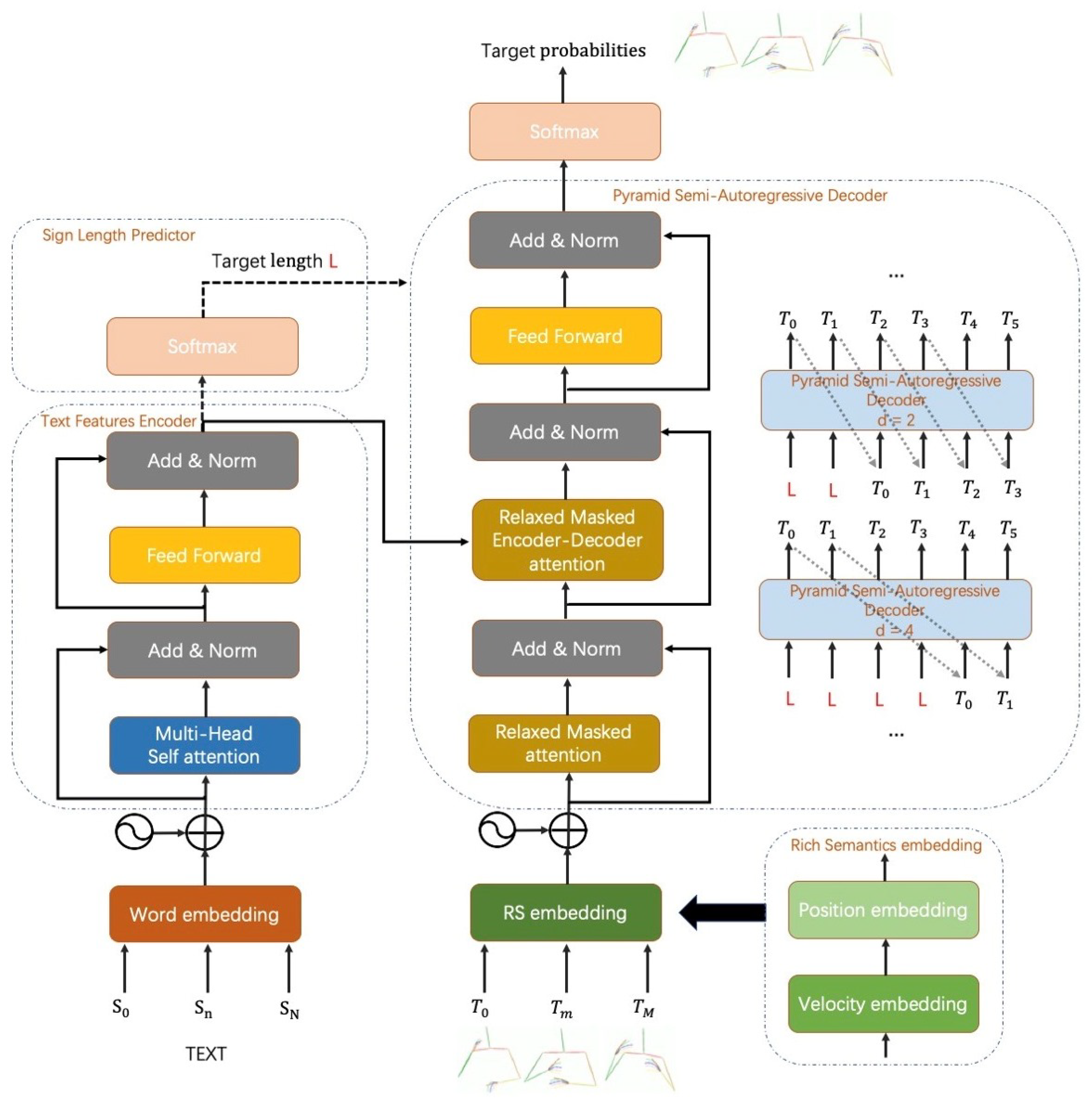

- We introduce PSAT-RS, a novel semi-autoregressive SLP model that is autoregressive at the global level and generates sign poses in parallel at the local level.

- To produce realistic and accurate sign pose sequences, we present a Rich Semantics (RS) embedding module that exploits spatial displacements and temporal correlations at the frame level.

- Experiments on the RWTH-PHOENIX-Weather-2014T dataset and a Chinese sign language dataset demonstrate that our method outperforms competing methods.
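The "globally autoregressive, locally parallel" property can be illustrated with the generic semi-autoregressive formulation of Wang et al. [8]: target frames are split into consecutive groups of k, every frame may attend to all frames in its own group and in earlier groups (a relaxed causal mask, with decoder inputs shifted right by k), and inference needs only ceil(T/k) sequential decoder passes instead of T. The sketch below assumes that generic formulation; the group size `k` and the helpers `relaxed_causal_mask` and `decoding_steps` are hypothetical names, and how PSAT combines its per-layer group sizes (e.g. d = {8, 4, 2, 1}) is not modeled here.

```python
import math
from typing import List


def relaxed_causal_mask(seq_len: int, k: int) -> List[List[bool]]:
    """mask[i][j] is True if target position i may attend to position j.

    Generic semi-autoregressive relaxed mask (assumed, after Wang et al.):
    positions are grouped into blocks of k, and position i sees every
    position up to the end of its own block. k = 1 gives the ordinary
    causal (fully autoregressive) mask.
    """
    return [[j < (i // k + 1) * k for j in range(seq_len)] for i in range(seq_len)]


def decoding_steps(seq_len: int, k: int) -> int:
    """Sequential decoder passes needed when k frames are emitted per pass."""
    return math.ceil(seq_len / k)


if __name__ == "__main__":
    for row in relaxed_causal_mask(6, 2):
        print("".join("1" if allowed else "0" for allowed in row))
    print(decoding_steps(100, 1), decoding_steps(100, 4))
```

With k = 2, rows 0 and 1 both print `110000` and rows 2 and 3 print `111100`; a 100-frame sequence needs 100 passes at k = 1 but 25 at k = 4. This matches the latency table trend, where d = {1, 1, 1, 1} has the same 387 ms latency as autoregressive decoding.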

2. Related Work

2.1. Human Action Recognition

2.2. Sign Language Translation

2.3. Sign Language Production

2.4. AT vs. NAT vs. SAT

3. Methodology

3.1. Rich Semantics Embedding Module

3.2. Relaxed Mask

3.3. Pyramid Semi-Autoregressive Transformer

4. Experiments

4.1. Datasets and Evaluation Criteria

4.2. Implementation Details

4.3. Ablation Studies

- The effectiveness of the RS embedding can be seen by comparing PT (GN) with PT (GN & RS). Embedding RS into PT (GN) improves the BLEU-1 and ROUGE scores on the DEV SET by 0.15 and 0.38, respectively. This confirms the importance of combining position features with motion features.
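The ablation attributes the RS gain to combining position features with motion features. A minimal sketch of that idea follows, under the assumption that "motion" means the frame-to-frame displacement of each joint coordinate; `position_motion_features` is a hypothetical helper and not the paper's RS embedding module, which also models temporal correlations that are not shown here.

```python
from typing import List

Frame = List[float]  # flattened joint coordinates of one frame (assumed layout)


def position_motion_features(poses: List[Frame]) -> List[Frame]:
    """Concatenate each frame's positions with its displacement from the previous frame.

    Hypothetical illustration: motion_t = pose_t - pose_{t-1}, and the first
    frame gets zero motion because it has no predecessor.
    """
    features: List[Frame] = []
    prev = None
    for pose in poses:
        if prev is None:
            motion = [0.0] * len(pose)
        else:
            if len(pose) != len(prev):
                raise ValueError("all frames must have the same number of coordinates")
            motion = [cur - old for cur, old in zip(pose, prev)]
        features.append(list(pose) + motion)
        prev = pose
    return features


if __name__ == "__main__":
    print(position_motion_features([[0.0, 1.0], [0.5, 1.0], [1.5, 0.0]]))
```

Each output frame is twice as wide as its input: the first half carries where the joints are, and the second half carries how far they moved since the previous frame.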

- Comparing PT (GN) with our PSAT, although the scores drop slightly on the DEV SET, the BLEU-1 and ROUGE scores on the TEST SET improve by 0.68 and 0.79, respectively, since our method alleviates error accumulation during decoding. Table 2 further shows that PSAT achieves a 3.6× speedup, substantially reducing inference latency.
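The improvements and speedup quoted in the ablation can be recomputed directly from the tables in this section. The short check below copies the relevant scores and latencies; the helper names `gains` and `speedup` are illustrative only.

```python
# Scores copied from the ablation table: PT (GN) vs. PT (GN & RS) on the DEV SET,
# PT (GN) vs. PSAT on the TEST SET. Latencies come from the AT/NAT/PSAT table.
pt_gn_dev = {"BLEU-1": 32.25, "ROUGE": 33.09}
pt_gn_rs_dev = {"BLEU-1": 32.40, "ROUGE": 33.47}
pt_gn_test = {"BLEU-1": 26.59, "ROUGE": 27.31}
psat_test = {"BLEU-1": 27.27, "ROUGE": 28.10}


def gains(base: dict, new: dict) -> dict:
    """Absolute per-metric improvement of `new` over `base`, rounded to 2 decimals."""
    return {metric: round(new[metric] - base[metric], 2) for metric in base}


def speedup(baseline_ms: float, model_ms: float) -> float:
    """Latency ratio: how many times faster the model is than the baseline."""
    return baseline_ms / model_ms


if __name__ == "__main__":
    print(gains(pt_gn_dev, pt_gn_rs_dev))  # {'BLEU-1': 0.15, 'ROUGE': 0.38}
    print(gains(pt_gn_test, psat_test))    # {'BLEU-1': 0.68, 'ROUGE': 0.79}
    print(round(speedup(387, 108), 2))     # 3.58
```

The ratio of the rounded mean latencies (387 ms / 108 ms) gives about 3.58×, close to the reported 3.60×; the small gap is presumably due to rounding of the reported latencies.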

- When our PSAT and the RS embedding module are used together (PSAT & RS), the BLEU score increases by 0.64 and the ROUGE score by 0.63, confirming the effectiveness of the proposed algorithm.

4.4. Quantitative Evaluation

4.5. Qualitative Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Xiao, Q.; Qin, M.; Yin, Y. Skeleton-based Chinese sign language recognition and generation for bidirectional communication between deaf and hearing people. Neural Netw. 2020, 125, 41–55.

2. Saunders, B.; Camgoz, N.C.; Bowden, R. Progressive Transformers for End-to-End Sign Language Production. In Proceedings of the 16th European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020.

3. Saunders, B.; Camgoz, N.C.; Bowden, R. Continuous 3D multi-channel sign language production via progressive transformers and mixture density networks. Int. J. Comput. Vis. 2021, 129, 2113–2135.

4. Saunders, B.; Camgoz, N.C.; Bowden, R. Mixed SIGNals: Sign Language Production via a Mixture of Motion Primitives. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 1919–1929.

5. Tang, S.; Hong, R.; Guo, D.; Wang, M. Gloss Semantic-Enhanced Network with Online Back-Translation for Sign Language Production. In Proceedings of the ACM International Conference on Multimedia (ACM MM), Lisbon, Portugal, 10–14 October 2022.

6. Hwang, E.; Kim, J.H.; Park, J.C. Non-Autoregressive Sign Language Production with Gaussian Space. In Proceedings of the 32nd British Machine Vision Conference (BMVC 21), British Machine Vision Conference (BMVC), Virtual Event, 22–25 November 2021.

7. Huang, W.; Pan, W.; Zhao, Z.; Tian, Q. Towards Fast and High-Quality Sign Language Production. In Proceedings of the 29th ACM International Conference on Multimedia, Virtual Event, 20–24 October 2021; pp. 3172–3181.

8. Wang, C.; Zhang, J.; Chen, H. Semi-autoregressive neural machine translation. arXiv 2018, arXiv:1808.08583.

9. Zhang, P.; Lan, C.; Zeng, W.; Xing, J.; Zheng, N. Semantics-Guided Neural Networks for Efficient Skeleton-Based Human Action Recognition. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

10. Cui, R.; Hu, L.; Zhang, C. Recurrent Convolutional Neural Networks for Continuous Sign Language Recognition by Staged Optimization. In Proceedings of the IEEE Conference on Computer Vision & Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

11. Shi, F.; Lee, C.; Qiu, L.; Zhao, Y.; Shen, T.; Muralidhar, S.; Han, T.; Zhu, S.C.; Narayanan, V. STAR: Sparse Transformer-based Action Recognition. arXiv 2021, arXiv:2107.07089.

12. Ghosh, P.; Song, J.; Aksan, E.; Hilliges, O. Learning human motion models for long-term predictions. In Proceedings of the 2017 International Conference on 3D Vision (3DV), Qingdao, China, 10–12 October 2017; pp. 458–466.

13. Cho, S.; Maqbool, M.H.; Liu, F.; Foroosh, H. Self-Attention Network for Skeleton-based Human Action Recognition. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 4–8 January 2019.

14. Camgoz, N.C.; Hadfield, S.; Koller, O.; Ney, H.; Bowden, R. Neural Sign Language Translation. In Proceedings of the IEEE conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018.

15. Zhao, J.; Qi, W.; Zhou, W.; Duan, N.; Zhou, M.; Li, H. Conditional Sentence Generation and Cross-Modal Reranking for Sign Language Translation. IEEE Trans. Multimed. 2022, 24, 2662–2672.

16. Pu, J.; Zhou, W.; Li, H. Dilated convolutional network with iterative optimization for continuous sign language recognition. In Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence (IJCAI-18), Stockholm, Sweden, 13–19 July 2018.

17. Tang, S.; Guo, D.; Hong, R.; Wang, M. Graph-Based Multimodal Sequential Embedding for Sign Language Translation. IEEE Trans. Multimed. 2022, 24, 4433–4445.

18. Camgoz, N.C.; Koller, O.; Hadfield, S.; Bowden, R. Sign Language Transformers: Joint End-to-end Sign Language Recognition and Translation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020.

19. Saunders, B.; Camgoz, N.C.; Bowden, R. Adversarial training for multi-channel sign language production. arXiv 2020, arXiv:2008.12405.

20. Ventura, L.; Duarte, A.; Giró-i Nieto, X. Can everybody sign now? Exploring sign language video generation from 2D poses. arXiv 2020, arXiv:2012.10941.

21. Saunders, B.; Camgöz, N.C.; Bowden, R. Signing at Scale: Learning to Co-Articulate Signs for Large-Scale Photo-Realistic Sign Language Production. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 5141–5151.

22. Stoll, S.; Camgoz, N.C.; Hadfield, S.; Bowden, R. Text2Sign: Towards Sign Language Production Using Neural Machine Translation and Generative Adversarial Networks. Int. J. Comput. Vis. 2020, 128, 891–908.

23. Datta, D.; David, P.E.; Mittal, D.; Jain, A. Neural machine translation using recurrent neural network. Int. J. Eng. Adv. Technol. 2020, 9, 1395–1400.

24. Chen, M.X.; Firat, O.; Bapna, A.; Johnson, M.; Macherey, W.; Foster, G.; Jones, L.; Parmar, N.; Schuster, M.; Chen, Z.; et al. The best of both worlds: Combining recent advances in neural machine translation. arXiv 2018, arXiv:1804.09849.

25. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems 30, Long Beach, CA, USA, 4–9 December 2017; Curran Associates Inc.: Red Hook, NY, USA, 2017.

26. Wang, Y.; Tian, F.; Di, H.; Tao, Q.; Liu, T.Y. Non-Autoregressive Machine Translation with Auxiliary Regularization. In Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence (AAAI-19), Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 5377–5384.

27. Lee, J.; Mansimov, E.; Cho, K. Deterministic non-autoregressive neural sequence modeling by iterative refinement. arXiv 2018, arXiv:1802.06901.

28. Zhou, Y.; Zhang, Y.; Hu, Z.; Wang, M. Semi-Autoregressive Transformer for Image Captioning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual Event, 11–17 October 2021; pp. 3139–3143.

29. Wang, M.; Jiaxin, G.; Wang, Y.; Chen, Y.; Chang, S.; Shang, H.; Zhang, M.; Tao, S.; Yang, H. How Length Prediction Influence the Performance of Non-Autoregressive Translation? In Proceedings of the Fourth BlackboxNLP Workshop on Analyzing and Interpreting Neural Networks for NLP, Punta Cana, Dominican Republic, 11 November 2021; pp. 205–213.

30. Forster, J.; Schmidt, C.; Koller, O.; Bellgardt, M.; Ney, H. Extensions of the sign language recognition and translation corpus RWTH-PHOENIX-Weather. In Proceedings of the Ninth International Conference on Language Resources and Evaluation (LREC’14), Reykjavik, Iceland, 26–31 May 2014; pp. 1911–1916.

31. Pu, J.; Zhou, W.; Li, H. Iterative Alignment Network for Continuous Sign Language Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2019, Long Beach, CA, USA, 16–20 June 2019; pp. 4165–4174.

32. Chen, W.; Jiang, Z.; Guo, H.; Ni, X. Fall detection based on key points of human-skeleton using openpose. Symmetry 2020, 12, 744.

33. Kingma, D.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.

34. Gotmare, A.; Keskar, N.S.; Xiong, C.; Socher, R. A closer look at deep learning heuristics: Learning rate restarts, warmup and distillation. arXiv 2018, arXiv:1810.13243.

| Approach | BLEU-4 | BLEU-3 | BLEU-2 | BLEU-1 | ROUGE | Mean Latency (ms) | Speedup |
|---|---|---|---|---|---|---|---|
| Transformer N = 2 | 7.09 | 8.44 | 12.08 | 19.20 | 20.00 | 192 | 1× |
| PSAT d = {2, 2} | 6.74 | 8.13 | 11.90 | 19.11 | 19.76 | 101 | 1.90× |
| PSAT d = {4, 4} | 6.17 | 7.62 | 11.05 | 17.21 | 18.70 | 50 | 3.86× |
| PSAT d = {8, 8} | 5.66 | 7.00 | 10.22 | 14.36 | 16.61 | 27 | 7.32× |
| PSAT d = {1, 1, 1, 1} | 11.38 | 14.59 | 20.35 | 32.25 | 33.09 | 387 | 1× |
| PSAT d = {2, 2, 2, 2} | 11.04 | 14.38 | 19.92 | 32.13 | 32.90 | 210 | 1.84× |
| PSAT d = {8, 6, 4, 2} | 10.16 | 13.37 | 18.43 | 29.62 | 30.51 | 81 | 4.75× |
| PSAT d = {8, 4, 2, 1} | 11.02 | 14.47 | 20.08 | 32.10 | 33.01 | 108 | 3.60× |

| Approach | DEV BLEU-4 | DEV BLEU-3 | DEV BLEU-2 | DEV BLEU-1 | DEV ROUGE | TEST BLEU-4 | TEST BLEU-3 | TEST BLEU-2 | TEST BLEU-1 | TEST ROUGE |
|---|---|---|---|---|---|---|---|---|---|---|
| *Autoregressive models* | | | | | | | | | | |
| PT (GN) [2] | 11.38 | 14.59 | 20.35 | 32.25 | 33.09 | 9.45 | 12.52 | 17.08 | 26.59 | 27.31 |
| PT (GN & RS) | 12.00 | 15.27 | 21.11 | 32.40 | 33.47 | 9.68 | 12.78 | 17.28 | 26.86 | 28.01 |
| *Non-Autoregressive models* | | | | | | | | | | |
| NAT [6,7] | 6.90 | 10.10 | 13.76 | 22.76 | 24.43 | 4.86 | 7.11 | 10.28 | 18.94 | 19.97 |
| *Our models* | | | | | | | | | | |
| PSAT | 11.02 | 14.47 | 20.28 | 32.10 | 33.01 | 9.72 | 12.78 | 17.35 | 27.27 | 28.10 |
| PSAT & RS | 11.39 | 14.85 | 21.04 | 32.33 | 33.51 | 10.01 | 13.19 | 18.62 | 27.50 | 28.73 |
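The tables report BLEU-1 to BLEU-4 and ROUGE. In SLP work building on [2], these scores are usually computed on text back-translated from the generated poses, and ROUGE is usually the LCS-based ROUGE-L F-score; both points are assumptions here, since the evaluation protocol is not restated in this section. A minimal ROUGE-L sketch on whitespace-tokenized sentences, with hypothetical helper names:

```python
from typing import List


def lcs_length(a: List[str], b: List[str]) -> int:
    """Length of the longest common subsequence, via a rolling dynamic-programming row."""
    prev = [0] * (len(b) + 1)
    for x in a:
        cur = [0]
        for j, y in enumerate(b, start=1):
            # extend the diagonal on a match, otherwise carry the best neighbour
            cur.append(prev[j - 1] + 1 if x == y else max(prev[j], cur[j - 1]))
        prev = cur
    return prev[-1]


def rouge_l(hypothesis: str, reference: str, beta: float = 1.0) -> float:
    """ROUGE-L F-score in [0, 1]; beta = 1 weights precision and recall equally."""
    hyp, ref = hypothesis.split(), reference.split()
    lcs = lcs_length(hyp, ref)
    if lcs == 0:
        return 0.0
    precision = lcs / len(hyp)
    recall = lcs / len(ref)
    return (1 + beta ** 2) * precision * recall / (recall + beta ** 2 * precision)


if __name__ == "__main__":
    print(rouge_l("the cat sat on mat", "the cat sat on the mat"))
```

Here the LCS has 5 tokens, so precision is 5/5 and recall is 5/6, giving an F-score of 10/11 (about 0.909). The tables appear to report such scores multiplied by 100, and some toolkits use a β other than 1 to weight recall more heavily.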

| Approach | Mean Latency (ms) | Speedup | Complexity |
|---|---|---|---|
| AT | 387 | 1× | |
| NAT | 21 | 18.4× | |
| PSAT | 108 | 3.60× | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cui, Z.; Chen, Z.; Li, Z.; Wang, Z. A Pyramid Semi-Autoregressive Transformer with Rich Semantics for Sign Language Production. Sensors 2022, 22, 9606. https://doi.org/10.3390/s22249606
