Inhalation Injury Grading Using Transfer Learning Based on Bronchoscopy Images and Mechanical Ventilation Period

Abstract

1. Introduction

1.1. Related Work

1.2. Contribution

- We propose a novel method for grading the severity of inhalation injury. Conventional inhalation injury diagnostic methods focused on the percentage of inhalation injury, whereas our method determines the severity of inhalation injury from bronchoscopy images using the proposed deep learning algorithm. Moreover, unlike the current manual grading system, whose results depend on the examiner, the proposed method gives quantitative and consistent results that do not rely on subjective examiner decisions.

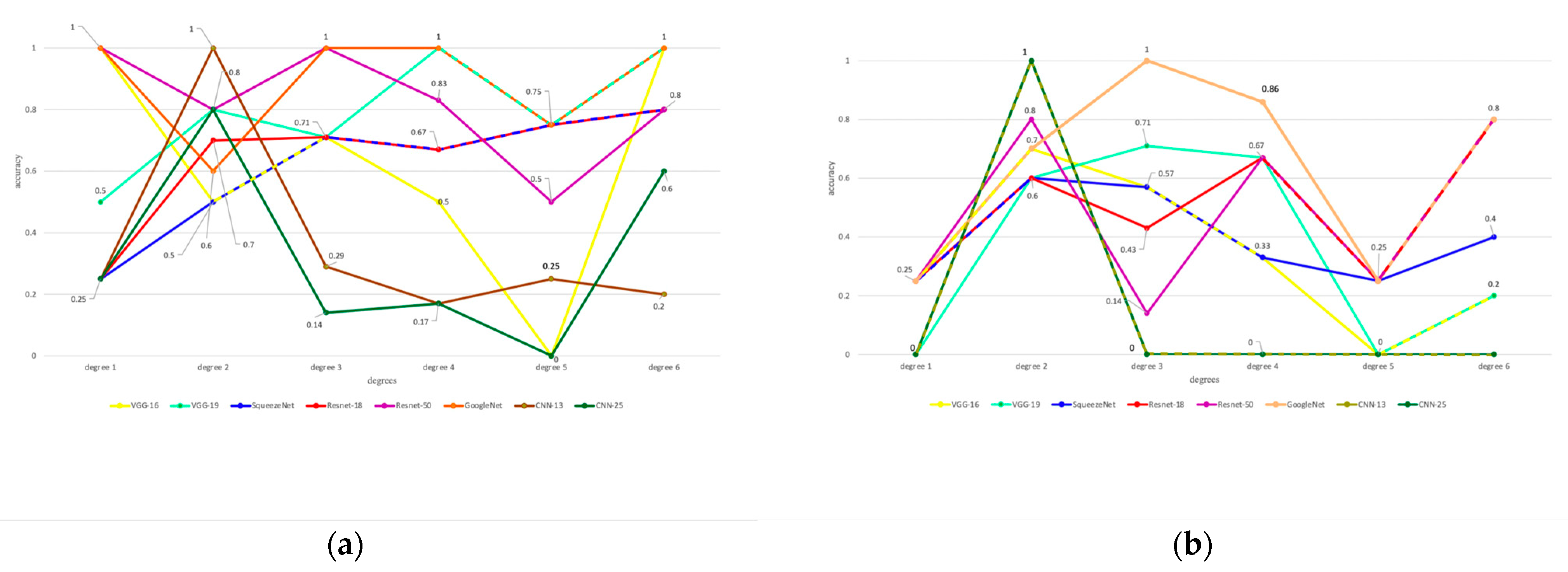

- Our proposed algorithm optimizes the hyperparameters of its deep learning model, such as the learning rate, learning rate drop period, max epochs, and mini-batch size, to maximize the accuracy of grading inhalation injury severity. Data augmentation and conventional CNN-based models were also implemented, both for comparison with the proposed transfer learning method and to explore further performance gains. As a result, the proposed algorithm achieves an average testing accuracy of 86.11%, which shows its potential for predicting the severity of inhalation injuries.

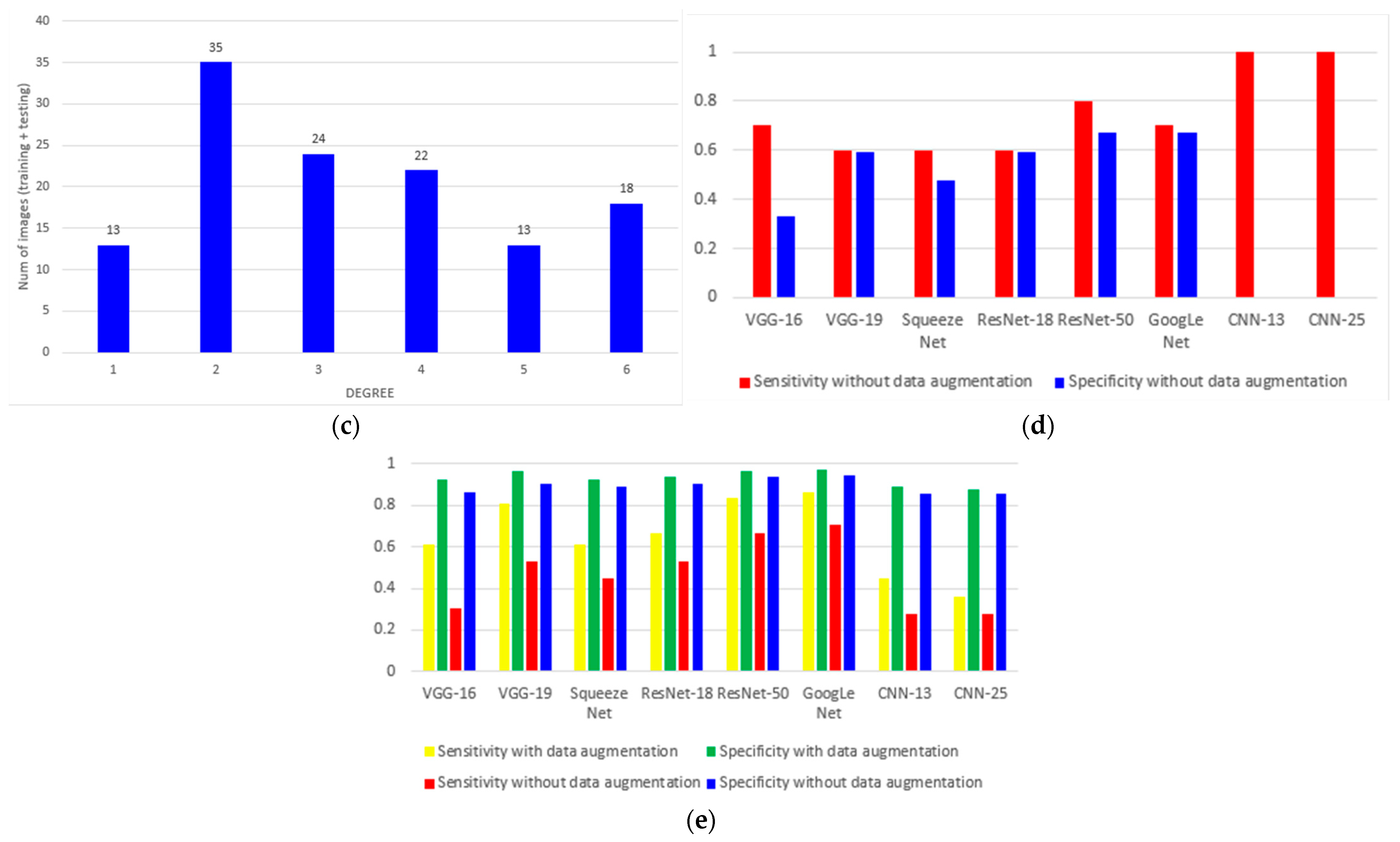

- We analyze the impact of data augmentation and transfer learning by including or excluding each of them in the algorithm. Specifically, we evaluate accuracy for the following four combinations: (1) transfer learning with data augmentation, (2) transfer learning without data augmentation, (3) non-transfer learning with data augmentation, and (4) non-transfer learning without data augmentation.

1.3. Paper Organization

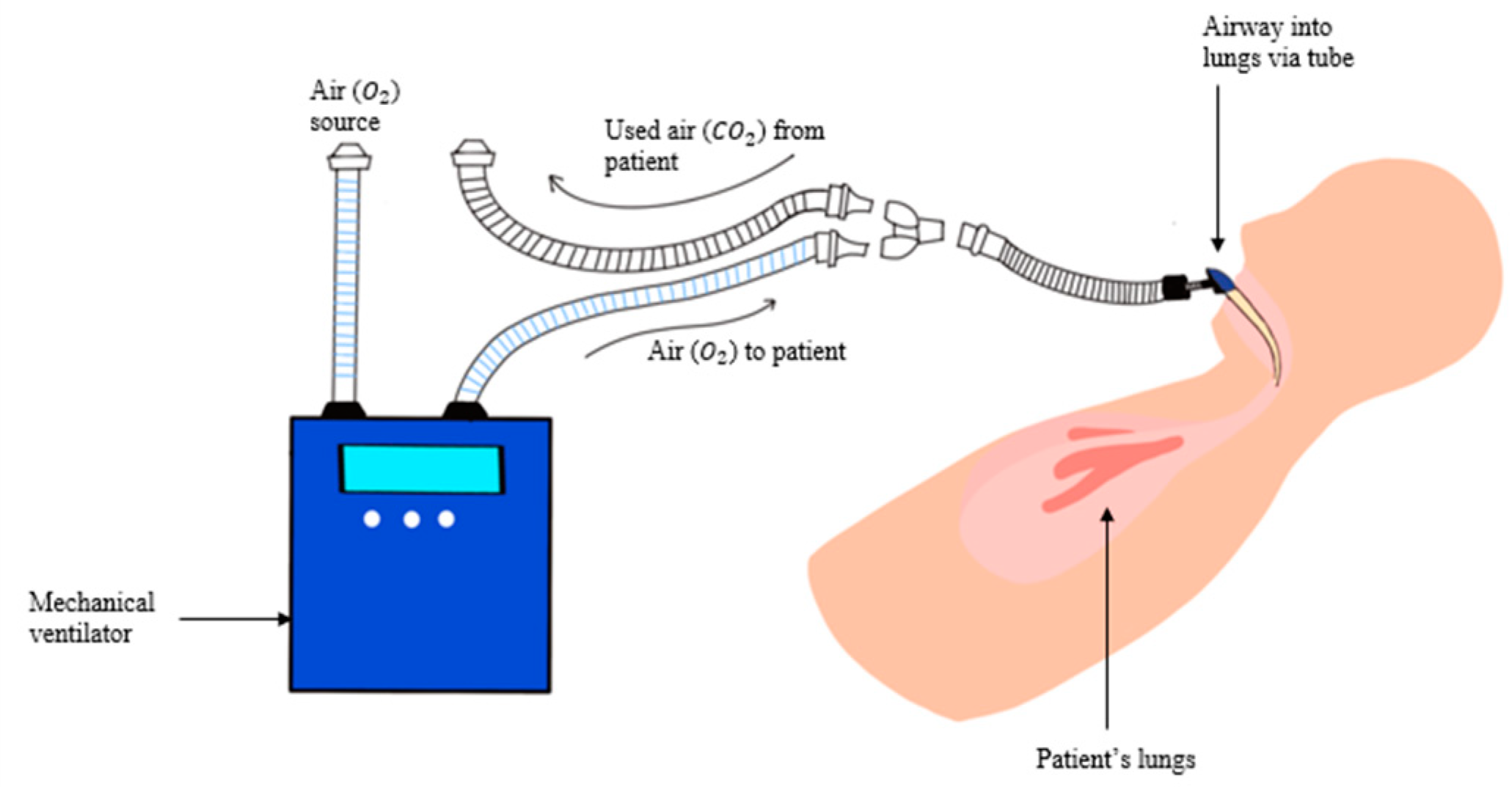

2. Materials and Methods

2.1. Dataset Development

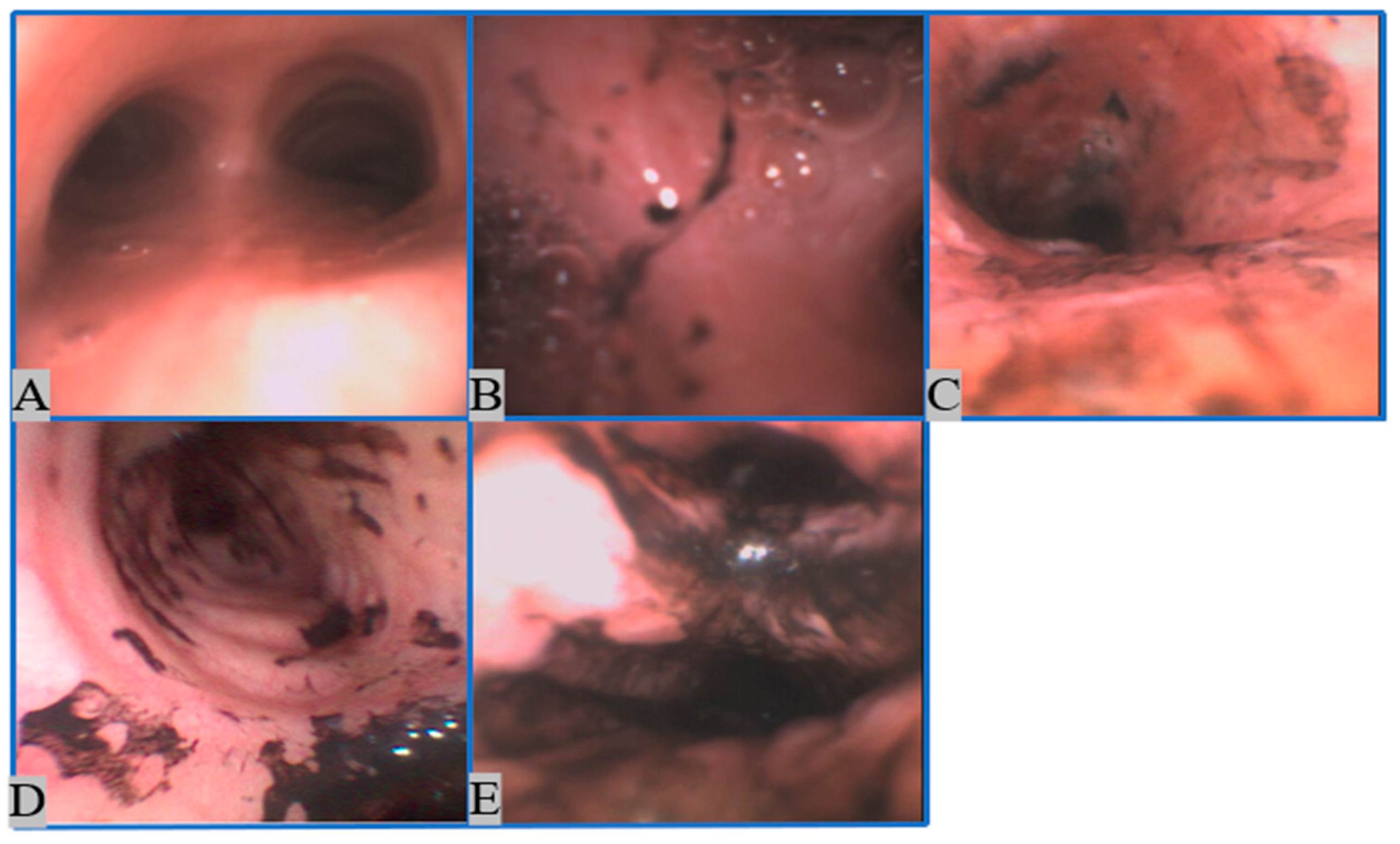

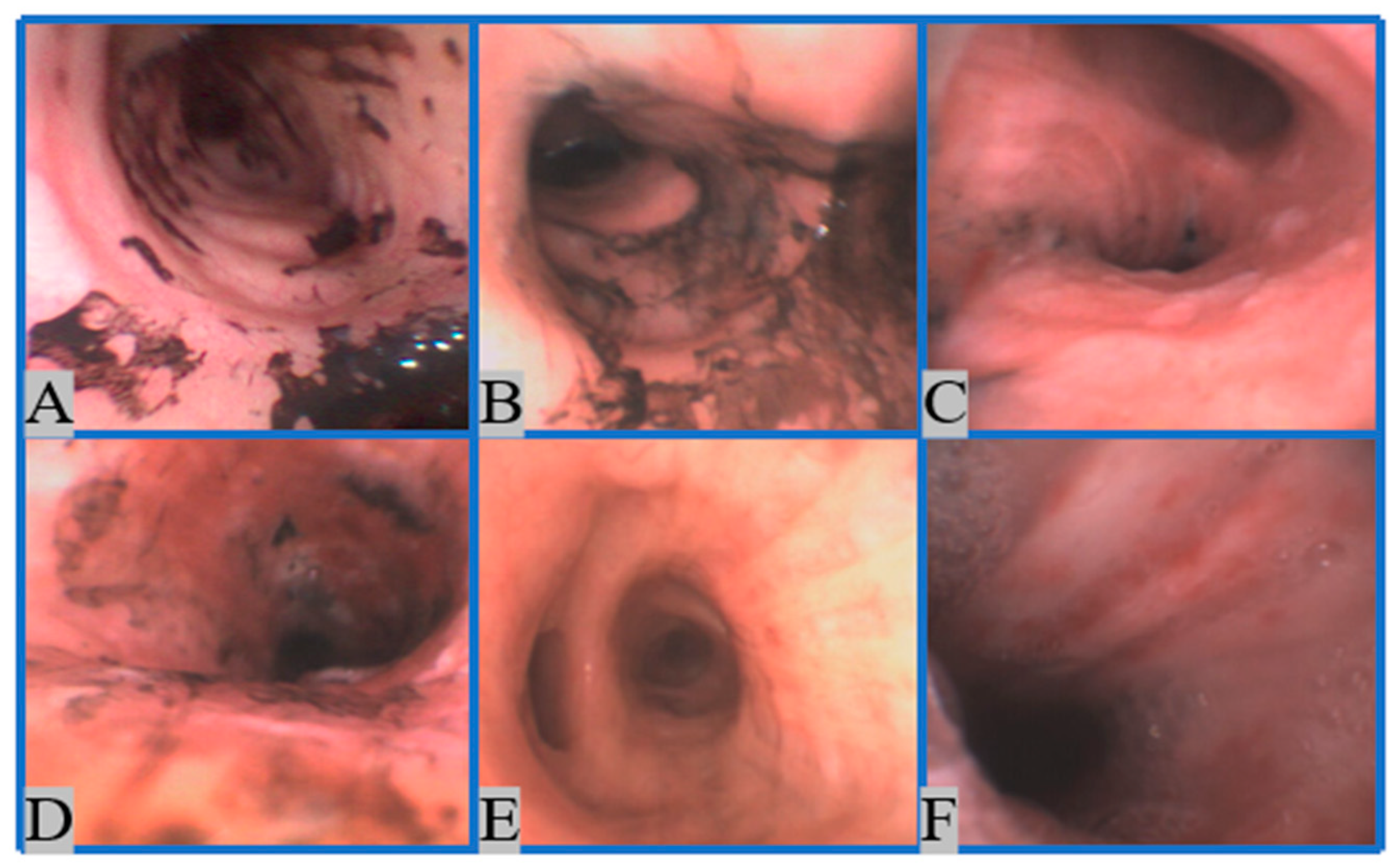

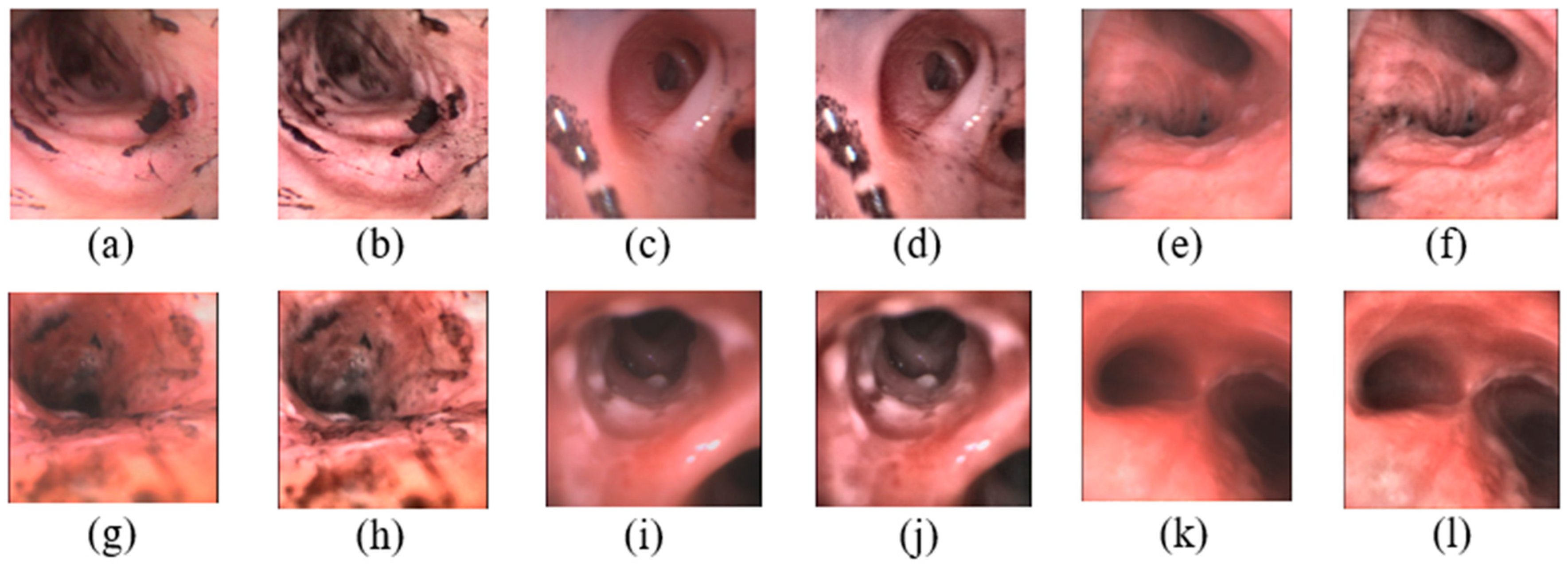

2.1.1. Image Collection

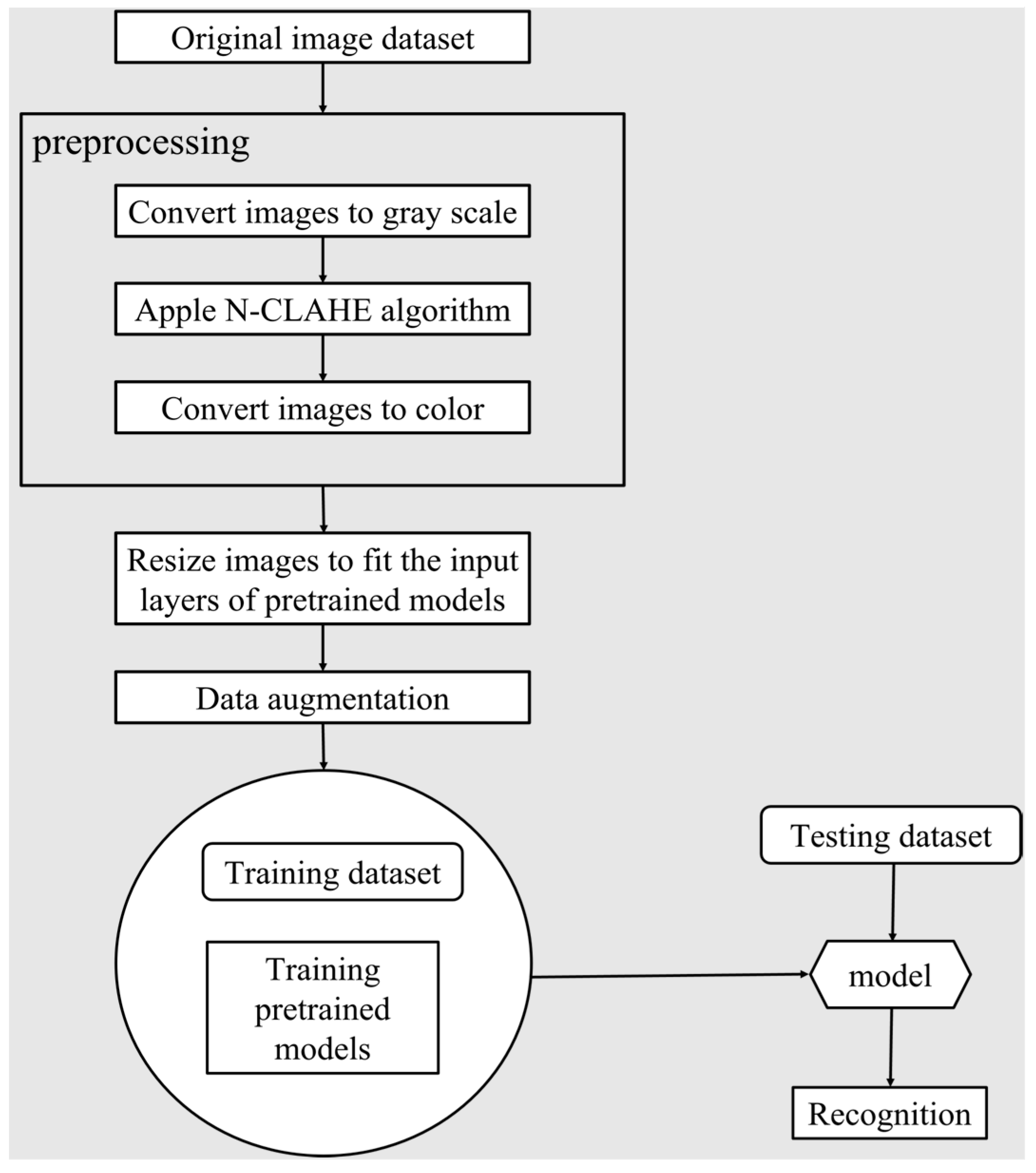

2.1.2. Image Preprocessing

1. Normalization
2. Contrast-Limited Adaptive Histogram Equalization (CLAHE) [41]
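The two preprocessing steps can be illustrated with a minimal, dependency-free Python sketch. It assumes 8-bit grayscale images stored as lists of rows and uses min-max scaling as the normalization (the source does not specify which normalization was applied). For CLAHE it shows only the clip-and-redistribute histogram mapping applied inside a single tile; full CLAHE [41] also splits the image into tiles and bilinearly interpolates between neighboring tile mappings, as library implementations such as OpenCV's `createCLAHE` do. The helper names and any `clip_limit` value are hypothetical.

```python
from typing import List

Image = List[List[int]]


def min_max_normalize(img: Image, out_max: int = 255) -> Image:
    """Linearly rescale pixel values so they span [0, out_max]."""
    flat = [p for row in img for p in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:  # flat image: nothing to stretch
        return [[0 for _ in row] for row in img]
    scale = out_max / (hi - lo)
    return [[round((p - lo) * scale) for p in row] for row in img]


def clipped_equalization_lut(tile: Image, clip_limit: int, levels: int = 256) -> List[int]:
    """Contrast-limited histogram-equalization lookup table for one tile.

    clip_limit is the maximum count allowed in any histogram bin.
    """
    hist = [0] * levels
    for row in tile:
        for p in row:
            hist[p] += 1

    # Clip every bin at clip_limit and collect the excess counts.
    excess = 0
    for i in range(levels):
        if hist[i] > clip_limit:
            excess += hist[i] - clip_limit
            hist[i] = clip_limit

    # Redistribute the excess uniformly (leftover counts go to the lowest bins).
    share, remainder = divmod(excess, levels)
    for i in range(levels):
        hist[i] += share + (1 if i < remainder else 0)

    # The cumulative histogram, scaled to [0, levels - 1], is the intensity mapping.
    total = sum(hist)
    lut, cumulative = [], 0
    for count in hist:
        cumulative += count
        lut.append(round((levels - 1) * cumulative / total))
    return lut


def apply_lut(img: Image, lut: List[int]) -> Image:
    """Map every pixel through the lookup table."""
    return [[lut[p] for p in row] for row in img]
```

Typical use is `apply_lut(norm, clipped_equalization_lut(norm, clip_limit))` with `norm = min_max_normalize(img)`. A smaller `clip_limit` limits contrast amplification, and hence noise amplification, in nearly uniform regions.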

2.2. Method

2.2.1. Learning and Testing Pipeline

2.2.2. Data Augmentation

3. Image rotation:
4. Image scaling:

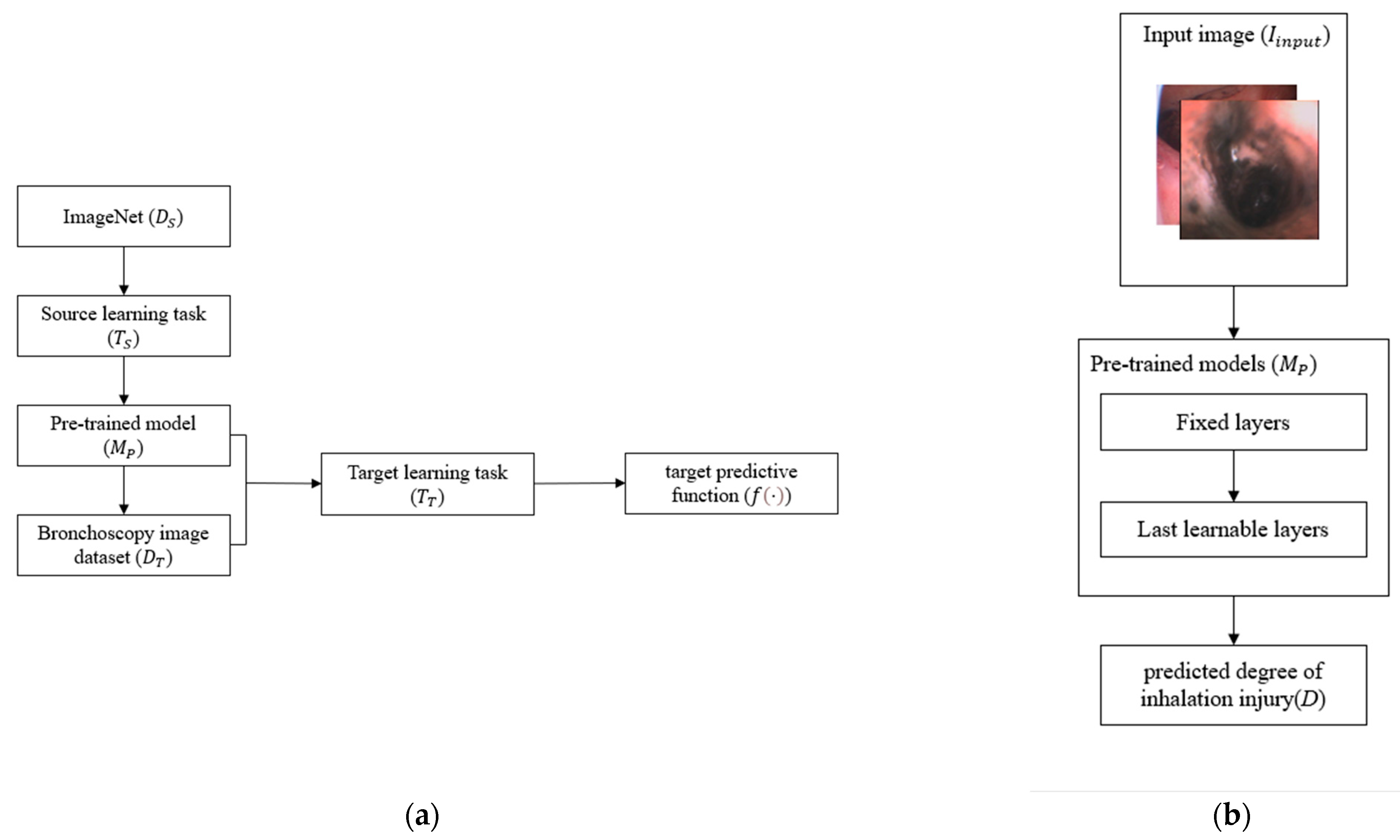
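Rotation and scaling can be combined into a single geometric transform about the image center. The following dependency-free Python sketch uses inverse mapping with nearest-neighbor sampling on images stored as lists of rows. The sampling method, the zero fill value, and the ±20° and 0.9–1.1 ranges in `random_augment` are hypothetical choices, since the source does not give the augmentation parameters.

```python
import math
import random
from typing import List, Tuple

Image = List[List[int]]


def rotate_and_scale(img: Image, angle_deg: float, scale: float, fill: int = 0) -> Image:
    """Rotate by angle_deg and zoom by scale about the image center.

    Uses inverse mapping with nearest-neighbor sampling; output pixels whose
    source location falls outside the input are set to fill. The output has
    the same size as the input.
    """
    h, w = len(img), len(img[0])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Undo the scaling, then apply the inverse rotation R(-theta).
            dx, dy = (x - cx) / scale, (y - cy) / scale
            sx = cos_t * dx + sin_t * dy + cx
            sy = -sin_t * dx + cos_t * dy + cy
            ix, iy = round(sx), round(sy)
            if 0 <= ix < w and 0 <= iy < h:
                out[y][x] = img[iy][ix]
    return out


def random_augment(img: Image, rng: random.Random,
                   max_angle: float = 20.0,
                   scale_range: Tuple[float, float] = (0.9, 1.1)) -> Image:
    """Apply one random rotation and scaling (ranges are hypothetical)."""
    angle = rng.uniform(-max_angle, max_angle)
    scale = rng.uniform(*scale_range)
    return rotate_and_scale(img, angle, scale)
```

Mapping each output pixel back to its source, rather than pushing source pixels forward, guarantees that every output pixel receives a value and leaves no holes.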

2.2.3. Transfer Learning

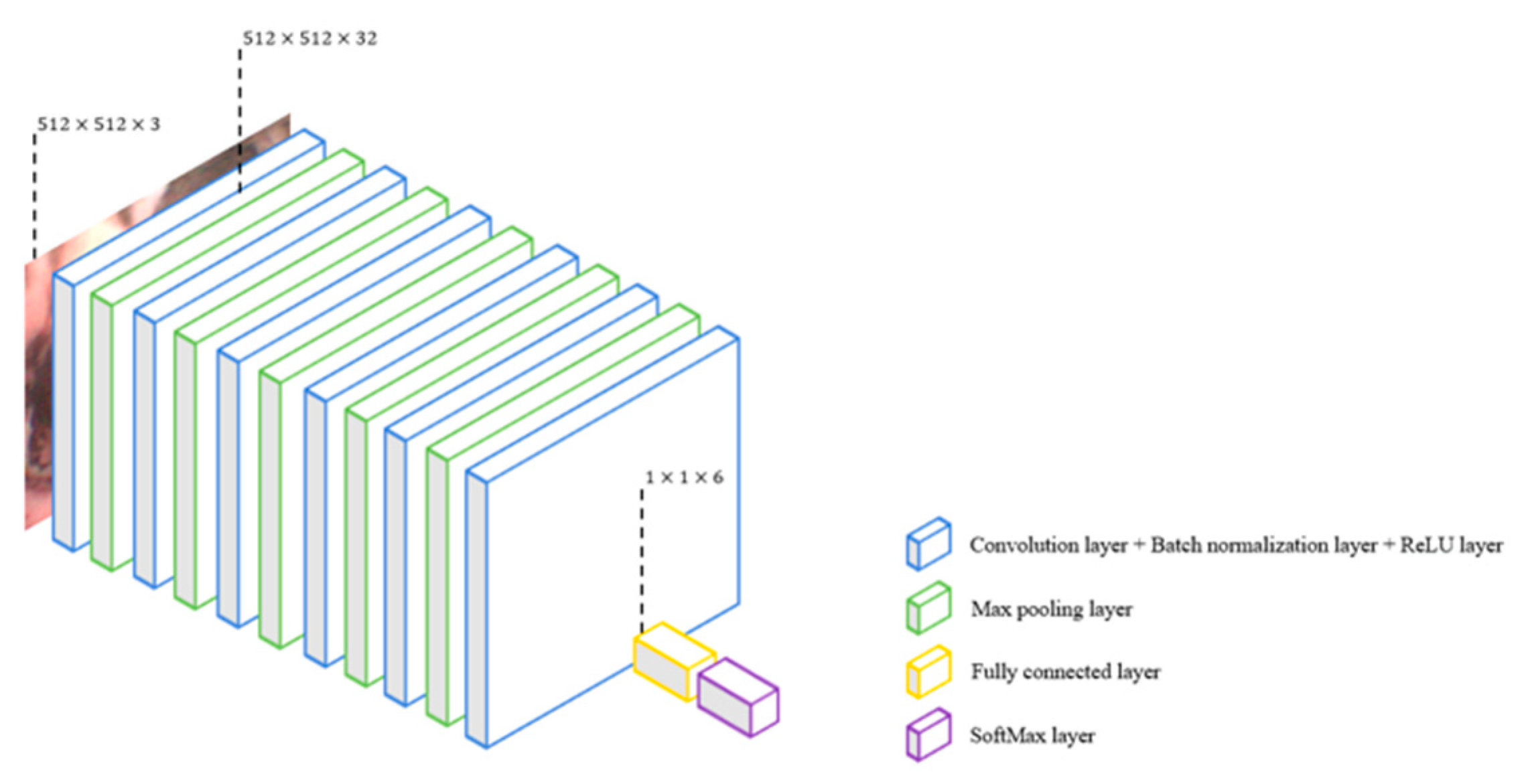

2.2.4. Model Selection

1. VGG-16
2. VGG-19
3. SqueezeNet
4. ResNet-18
5. ResNet-50
6. GoogLeNet
7. CNN-13
8. CNN-25

2.2.5. Experiment Set Up

3. Results

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

1. American Burn Association. National Burn Repository: 2002 Report Dataset Version 8; American Burn Association: Chicago, IL, USA, 2002.
2. Veeravagu, A.; Yoon, B.C.; Jiang, B.; Carvalho, C.M.; Rincon, F.; Maltenfort, M.; Jallo, J.; Ratliff, J.K. National trends in burn and inhalation injury in burn patients: Results of analysis of the nationwide inpatient sample database. J. Burn Care Res. 2015, 36, 258–265.
3. American Burn Association. National Burn Repository: 2019 Update Dataset Version 14.0; American Burn Association: Chicago, IL, USA, 2019.
4. Merrel, P.; Mayo, D. Inhalation injury in the burn patient. Crit. Care Nurs. Clin. 2004, 16, 27–38.
5. Traber, D.; Hawkins, H.; Enkhbaatar, P.; Cox, R.; Schmalstieg, F.; Zwischenberger, J.; Traber, L. The role of the bronchial circulation in acute lung injury resulting from burn and smoke inhalation. Pulm. Pharmacol. Ther. 2007, 20, 163–166.
6. Shirani, K.Z.; Pruitt, B.A., Jr.; Mason, A.D., Jr. The influence of inhalation injury and pneumonia on burn mortality. Ann. Surg. 1987, 205, 82.
7. Herlihy, J.; Vermeulen, M.; Joseph, P.; Hales, C. Impaired alveolar macrophage function in smoke inhalation injury. J. Cell. Physiol. 1995, 163, 1–8.
8. Al Ashry, H.S.; Mansour, G.; Kalil, A.C.; Walters, R.W.; Vivekanandan, R. Incidence of ventilator associated pneumonia in burn patients with inhalation injury treated with high frequency percussive ventilation versus volume control ventilation: A systematic review. Burns 2016, 42, 1193–1200.
9. Mlcak, R.P.; Suman, O.E.; Herndon, D.N. Respiratory management of inhalation injury. Burns 2007, 33, 2–13.
10. Endorf, F.W.; Gamelli, R.L. Inhalation injury, pulmonary perturbations, and fluid resuscitation. J. Burn Care Res. 2007, 28, 80–83.
11. Albright, J.M.; Davis, C.S.; Bird, M.D.; Ramirez, L.; Kim, H.; Burnham, E.L.; Gamelli, R.L.; Kovacs, E.J. The acute pulmonary inflammatory response to the graded severity of smoke inhalation injury. Crit. Care Med. 2012, 40, 1113.
12. Jones, S.W.; Williams, F.N.; Cairns, B.A.; Cartotto, R. Inhalation injury: Pathophysiology, diagnosis, and treatment. Clin. Plast. Surg. 2017, 44, 505–511.
13. Mosier, M.J.; Pham, T.N.; Park, D.R.; Simmons, J.; Klein, M.B.; Gibran, N.S. Predictive value of bronchoscopy in assessing the severity of inhalation injury. J. Burn Care Res. 2012, 33, 65–73.
14. Horry, M.J.; Chakraborty, S.; Paul, M.; Ulhaq, A.; Pradhan, B.; Saha, M.; Shukla, N. COVID-19 detection through transfer learning using multimodal imaging data. IEEE Access 2020, 8, 149808–149824.
15. Mazurowski, M.A.; Buda, M.; Saha, A.; Bashir, M.R. Deep learning in radiology: An overview of the concepts and a survey of the state of the art with focus on MRI. J. Magn. Reson. Imaging 2019, 49, 939–954.
16. Yamashita, R.; Nishio, M.; Do, R.K.G.; Togashi, K. Convolutional neural networks: An overview and application in radiology. Insights Into Imaging 2018, 9, 611–629.
17. Walker, P.F.; Buehner, M.F.; Wood, L.A.; Boyer, N.L.; Driscoll, I.R.; Lundy, J.B.; Cancio, L.C.; Chung, K.K. Diagnosis and management of inhalation injury: An updated review. Crit. Care 2015, 19, 351.
18. Yadav, D.; Sharma, A.; Singh, M.; Goyal, A. Feature extraction based machine learning for human burn diagnosis from burn images. IEEE J. Transl. Eng. Health Med. 2019, 7, 1800507.
19. Rangel-Olvera, B.; Rosas-Romero, R. Detection and classification of burnt skin via sparse representation of signals by over-redundant dictionaries. Comput. Biol. Med. 2021, 132, 104310.
20. Suha, S.A.; Sanam, T.F. A deep convolutional neural network-based approach for detecting burn severity from skin burn images. Mach. Learn. Appl. 2022, 9, 100371.
21. Lee, S.; Lukan, J.; Boyko, T.; Zelenova, K.; Makled, B.; Parsey, C.; Norfleet, J.; De, S. A deep learning model for burn depth classification using ultrasound imaging. J. Mech. Behav. Biomed. Mater. 2022, 125, 104930.
22. Chauhan, J.; Goyal, P. BPBSAM: Body part-specific burn severity assessment model. Burns 2020, 46, 1407–1423.
23. Rangaraju, L.P.; Kunapuli, G.; Every, D.; Ayala, O.D.; Ganapathy, P.; Mahadevan-Jansen, A. Classification of burn injury using Raman spectroscopy and optical coherence tomography: An ex-vivo study on porcine skin. Burns 2019, 45, 659–670.
24. Rowland, R.A.; Ponticorvo, A.; Baldado, M.L.; Kennedy, G.T.; Burmeister, D.M.; Christy, R.J.; Bernal, N.P.; Durkin, A.J. Burn wound classification model using spatial frequency-domain imaging and machine learning. J. Biomed. Opt. 2019, 24, 056007.
25. Liu, N.T.; Salinas, J. Machine learning in burn care and research: A systematic review of the literature. Burns 2015, 41, 1636–1641.
26. Chauhan, N.K.; Asfahan, S.; Dutt, N.; Jalandra, R.N. Artificial intelligence in the practice of pulmonology: The future is now. Lung India Off. Organ Indian Chest Soc. 2022, 39, 1.
27. Feng, P.H.; Lin, Y.T.; Lo, C.M. A machine learning texture model for classifying lung cancer subtypes using preliminary bronchoscopic findings. Med. Phys. 2018, 45, 5509–5514.
28. Ravishankar, H.; Sudhakar, P.; Venkataramani, R.; Thiruvenkadam, S.; Annangi, P.; Babu, N.; Vaidya, V. Understanding the mechanisms of deep transfer learning for medical images. In Deep Learning and Data Labeling for Medical Applications; Springer: Berlin/Heidelberg, Germany, 2016; pp. 188–196.
29. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.
30. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.
31. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.
32. Huang, S.; Dang, J.; Sheckter, C.C.; Yenikomshian, H.A.; Gillenwater, J. A systematic review of machine learning and automation in burn wound evaluation: A promising but developing frontier. Burns 2021, 47, 1691–1704.
33. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
34. Wang, X.; Peng, Y.; Lu, L.; Lu, Z.; Bagheri, M.; Summers, R.M. ChestX-ray8: Hospital-scale chest x-ray database and benchmarks on weakly-supervised classification and localization of common thorax diseases. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2097–2106.
35. Maghdid, H.S.; Asaad, A.T.; Ghafoor, K.Z.; Sadiq, A.S.; Mirjalili, S.; Khan, M.K. Diagnosing COVID-19 pneumonia from X-ray and CT images using deep learning and transfer learning algorithms. In Multimodal Image Exploitation and Learning 2021; SPIE: Bellingham, WA, USA, 2021; Volume 11734, pp. 99–110.
36. Sajja, T.; Devarapalli, R.; Kalluri, H. Lung Cancer Detection Based on CT Scan Images by Using Deep Transfer Learning. Traitement Du Signal 2019, 36, 339–344.
37. Alzubaidi, L.; Al-Amidie, M.; Al-Asadi, A.; Humaidi, A.J.; Al-Shamma, O.; Fadhel, M.A.; Zhang, J.; Santamaría, J.; Duan, Y. Novel transfer learning approach for medical imaging with limited labeled data. Cancers 2021, 13, 1590.
38. Koonsanit, K.; Thongvigitmanee, S.; Pongnapang, N.; Thajchayapong, P. Image enhancement on digital x-ray images using N-CLAHE. In Proceedings of the 2017 10th Biomedical Engineering International Conference (BMEICON), Hokkaido, Japan, 31 August–2 September 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–4.
39. Shin, H.; Shin, H.; Choi, W.; Park, J.; Park, M.; Koh, E.; Woo, H. Sample-Efficient Deep Learning Techniques for Burn Severity Assessment with Limited Data Conditions. Appl. Sci. 2022, 12, 7317.
40. Volety, R.; Jeeva, J. Classification of Burn Images into 1st, 2nd, and 3rd Degree Using State-of-the-Art Deep Learning Techniques. ECS Trans. 2022, 107, 18323.
41. Zuiderveld, K.J. Contrast Limited Adaptive Histogram Equalization. In Graphics Gems; Elsevier: Amsterdam, The Netherlands, 1994; pp. 474–485.
42. Weiss, K.; Khoshgoftaar, T.M.; Wang, D. A survey of transfer learning. J. Big Data 2016, 3, 9.
43. Shorten, C.; Khoshgoftaar, T.M. A survey on image data augmentation for deep learning. J. Big Data 2019, 6, 60.
44. Lin, Y.-P.; Jung, T.-P. Improving EEG-based emotion classification using conditional transfer learning. Front. Hum. Neurosci. 2017, 11, 334.
45. Zhao, X.; Qi, S.; Zhang, B.; Ma, H.; Qian, W.; Yao, Y.; Sun, J. Deep CNN models for pulmonary nodule classification: Model modification, model integration, and transfer learning. J. X-Ray Sci. Technol. 2019, 27, 615–629.
46. Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5 MB model size. arXiv 2016, arXiv:1602.07360.
47. Ballester, P.; Araujo, R.M. On the performance of GoogLeNet and AlexNet applied to sketches. In Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016.
48. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning (Adaptive Computation and Machine Learning Series); MIT Press: Cambridge, MA, USA, 2017.
49. Li, M.; Zhang, T.; Chen, Y.; Smola, A.J. Efficient mini-batch training for stochastic optimization. In Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, New York, NY, USA, 24–27 August 2014; pp. 661–670.
50. Lalkhen, A.G.; McCluskey, A. Clinical tests: Sensitivity and specificity. Contin. Educ. Anaesth. Crit. Care Pain 2008, 8, 221–223.

| Hyperparameters | Range |
|---|---|
| Initial learning rate (l) | – |
| Learning rate drop period (LP) | 5–15 |
| Learning rate drop factor (LF) | 0.05–0.2 |
| Max epochs (ME) | 10–50 |
| Mini-batch size (MB) | 2–6 |
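The drop period (LP) and drop factor (LF) in the table presumably parameterize a step-decay (piecewise) learning-rate schedule: starting from the initial learning rate l, the rate is multiplied by LF once every LP epochs, so for a 0-indexed epoch e the rate is l · LF^floor(e / LP). The Python sketch below implements that schedule together with a random search over the tabulated ranges. Random search is a stand-in chosen for illustration, since the source does not say how the search was performed; the initial-learning-rate range (no range is listed in the table) and the `evaluate` callback, which would train a model and return its validation accuracy, are hypothetical.

```python
import math
import random
from typing import Callable, Dict, Tuple

# Ranges from the hyperparameter table. The table lists no range for the
# initial learning rate, so LR_RANGE is a hypothetical placeholder.
LR_RANGE: Tuple[float, float] = (1e-4, 1e-2)
DROP_PERIOD_RANGE = (5, 15)      # LP, in epochs
DROP_FACTOR_RANGE = (0.05, 0.2)  # LF
MAX_EPOCHS_RANGE = (10, 50)      # ME
MINI_BATCH_RANGE = (2, 6)        # MB


def step_decay_lr(initial_lr: float, drop_factor: float, drop_period: int, epoch: int) -> float:
    """Learning rate at a 0-indexed epoch: multiply by drop_factor every drop_period epochs."""
    return initial_lr * drop_factor ** (epoch // drop_period)


def sample_config(rng: random.Random) -> Dict[str, float]:
    """Draw one configuration; the learning rate is sampled log-uniformly."""
    lo, hi = LR_RANGE
    return {
        "initial_lr": 10 ** rng.uniform(math.log10(lo), math.log10(hi)),
        "drop_period": rng.randint(*DROP_PERIOD_RANGE),
        "drop_factor": rng.uniform(*DROP_FACTOR_RANGE),
        "max_epochs": rng.randint(*MAX_EPOCHS_RANGE),
        "mini_batch": rng.randint(*MINI_BATCH_RANGE),
    }


def random_search(evaluate: Callable[[Dict[str, float]], float],
                  n_trials: int, seed: int = 0) -> Tuple[Dict[str, float], float]:
    """Return the sampled configuration with the highest score reported by evaluate."""
    rng = random.Random(seed)
    best_cfg, best_score = None, float("-inf")
    for _ in range(n_trials):
        cfg = sample_config(rng)
        score = evaluate(cfg)
        if score > best_score:
            best_cfg, best_score = cfg, score
    return best_cfg, best_score
```

For example, with l = 0.01, LF = 0.1 and LP = 5, epochs 0–4 train at 0.01 and epochs 5–9 at 0.001. Sampling the learning rate log-uniformly spreads trials evenly across orders of magnitude, which a uniform draw over the same interval would not.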

| Model | Precision | Sensitivity | Specificity | Accuracy | F1 Score |
|---|---|---|---|---|---|
| VGG-16 | 61.11% | 61.11% | 92.22% | 61.11% | 61.11% |
| VGG-19 | 80.56% | 80.56% | 96.11% | 80.56% | 80.56% |
| SqueezeNet | 61.11% | 61.11% | 92.22% | 61.11% | 44.44% |
| ResNet-18 | 66.67% | 66.67% | 93.33% | 66.67% | 66.67% |
| ResNet-50 | 83.33% | 83.33% | 96.67% | 83.33% | 83.33% |
| GoogLeNet | 86.11% | 86.11% | 97.22% | 86.11% | 86.11% |
| CNN-13 | 44.44% | 44.44% | 88.89% | 44.44% | 44.44% |
| CNN-25 | 36.11% | 36.11% | 87.22% | 36.11% | 36.11% |

| Model | Precision | Sensitivity | Specificity | Accuracy | F1 Score |
|---|---|---|---|---|---|
| VGG-16 | 30.56% | 30.56% | 86.11% | 30.56% | 30.56% |
| VGG-19 | 52.78% | 52.78% | 90.56% | 52.78% | 52.78% |
| SqueezeNet | 44.44% | 44.44% | 88.89% | 44.44% | 44.44% |
| ResNet-18 | 52.78% | 52.78% | 90.56% | 52.78% | 52.78% |
| ResNet-50 | 66.67% | 66.67% | 93.33% | 66.67% | 66.67% |
| GoogLeNet | 70.27% | 70.27% | 94.05% | 70.27% | 70.27% |
| CNN-13 | 27.78% | 27.78% | 85.56% | 27.78% | 27.78% |
| CNN-25 | 27.78% | 27.78% | 85.56% | 27.78% | 27.78% |
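In both results tables, Precision, Sensitivity, and Accuracy coincide in every row, and Specificity equals 1 - (1 - Accuracy)/5. That pattern is what micro-averaging over six classes produces for single-label classification: every misclassified image counts once as a false positive and once as a false negative. The source does not state how the metrics were averaged, so the Python sketch below is an illustration of micro-averaged metrics computed from a confusion matrix under that assumption, not the authors' evaluation code; the function name is hypothetical.

```python
from typing import Dict, List


def micro_averaged_metrics(confusion: List[List[int]]) -> Dict[str, float]:
    """Micro-averaged metrics for single-label multiclass classification.

    confusion[i][j] counts test images whose true grade is i and predicted grade is j.
    """
    k = len(confusion)
    n = sum(sum(row) for row in confusion)
    tp = sum(confusion[i][i] for i in range(k))
    # Column sum minus diagonal: images wrongly predicted as class c.
    fp = sum(sum(confusion[r][c] for r in range(k)) - confusion[c][c] for c in range(k))
    # Row sum minus diagonal: images of class c predicted as another class.
    fn = sum(sum(confusion[c]) - confusion[c][c] for c in range(k))
    # Per class, TN_c = n - TP_c - FP_c - FN_c; summing over all k classes gives:
    tn = k * n - tp - fp - fn
    precision = tp / (tp + fp)
    sensitivity = tp / (tp + fn)
    f1 = (2 * precision * sensitivity / (precision + sensitivity)
          if precision + sensitivity else 0.0)
    return {
        "precision": precision,
        "sensitivity": sensitivity,
        "specificity": tn / (tn + fp),
        "accuracy": tp / n,
        "f1": f1,
    }
```

With a hypothetical six-class matrix holding 31 of 36 test images on its diagonal, this returns 86.11% accuracy and 97.22% specificity, the same pair listed for GoogLeNet in the first results table.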

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, Y.; Pang, A.W.; Zeitouni, J.; Zeitouni, F.; Mateja, K.; Griswold, J.A.; Chong, J.W. Inhalation Injury Grading Using Transfer Learning Based on Bronchoscopy Images and Mechanical Ventilation Period. Sensors 2022, 22, 9430. https://doi.org/10.3390/s22239430
