Industrial Anomaly Detection with Skip Autoencoder and Deep Feature Extractor

Abstract

1. Introduction

- The MVTec AD dataset and two production-line datasets (furniture wood and smartphone glass covers) were used to train and verify the proposed model, which was then compared with previous anomaly detection models.

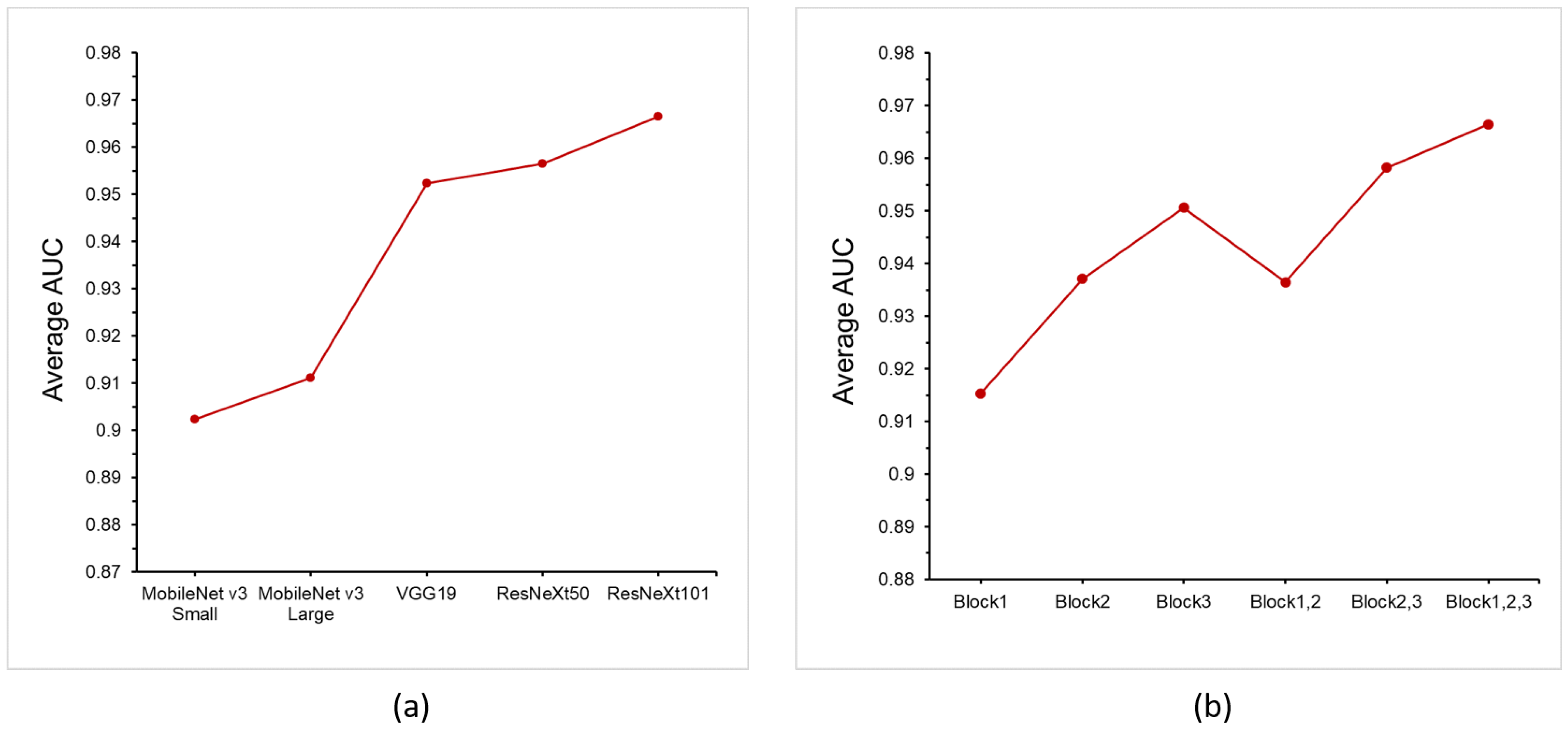

- The proposed model was trained with different feature extractors, and the selection of an optimal feature extractor for different requirements was discussed.

- The proposed model was trained with features from different extraction layers, and the corresponding effects were discussed.
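Training with different feature extractors and extraction layers implies combining feature maps of different resolutions into one tensor before reconstruction. The sketch below shows one common way to do this, in the style of DFR: resize every selected block's map to a shared resolution and concatenate along the channel axis. It is an illustration under stated assumptions, not the paper's implementation: the hypothetical helpers `upsample_nearest` and `fuse_blocks` are introduced here, "Block 1-3" are assumed to be the first three stages of a ResNeXt-style backbone (256, 512 and 1024 channels), nearest-neighbour resizing is used only to avoid dependencies (bilinear interpolation is a frequent alternative), and random arrays stand in for real activations.

```python
from typing import Sequence

import numpy as np


def upsample_nearest(fmap: np.ndarray, size: int) -> np.ndarray:
    """Nearest-neighbour upsampling of a (C, H, W) map to (C, size, size).

    Assumes `size` is an integer multiple of both H and W.
    """
    _, h, w = fmap.shape
    if size % h or size % w:
        raise ValueError("target size must be a multiple of the map size")
    return fmap.repeat(size // h, axis=1).repeat(size // w, axis=2)


def fuse_blocks(fmaps: Sequence[np.ndarray], size: int) -> np.ndarray:
    """Resize every block's feature map to size x size and stack along channels."""
    return np.concatenate([upsample_nearest(f, size) for f in fmaps], axis=0)


# Assumed shapes for a 128 x 128 input to a ResNeXt-style backbone:
# block 1 -> (256, 32, 32), block 2 -> (512, 16, 16), block 3 -> (1024, 8, 8).
rng = np.random.default_rng(0)
blocks = [
    rng.standard_normal((256, 32, 32)).astype(np.float32),
    rng.standard_normal((512, 16, 16)).astype(np.float32),
    rng.standard_normal((1024, 8, 8)).astype(np.float32),
]
fused = fuse_blocks(blocks, size=32)
print(fused.shape)  # (1792, 32, 32)
```

Dropping an entry from `blocks` gives the single-block and two-block variants compared in the layer ablation; the channel count of the fused tensor, and therefore the autoencoder input width, changes accordingly.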

2. Related Works

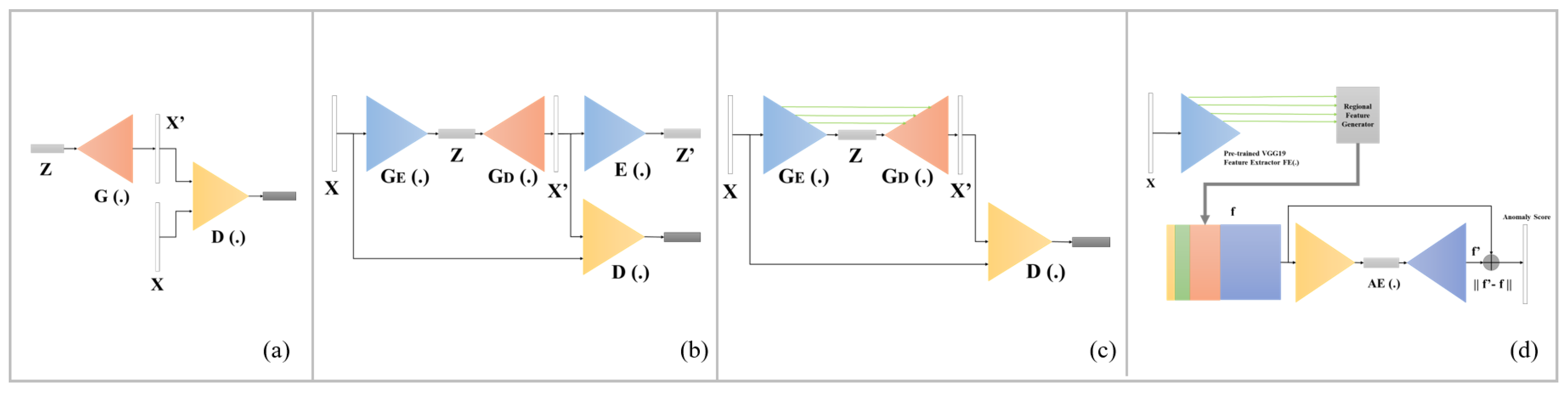

2.1. AnoGAN

2.2. GANomaly

2.3. Skip-GANomaly

2.4. Deep Feature Reconstruction (DFR)

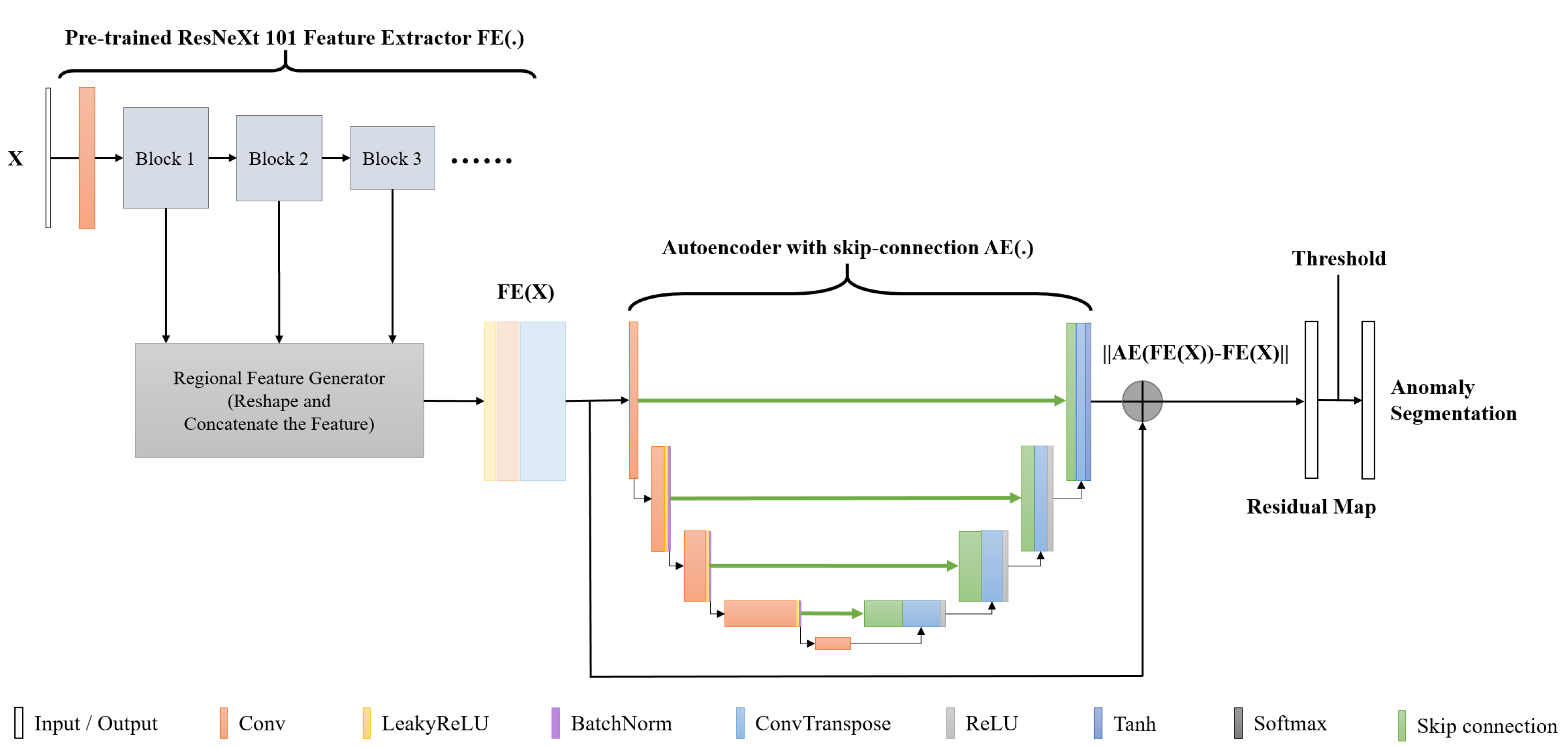

3. Proposed Method

3.1. Model Architecture

3.2. Training Process

3.3. Detection Process

4. Experimental Setup

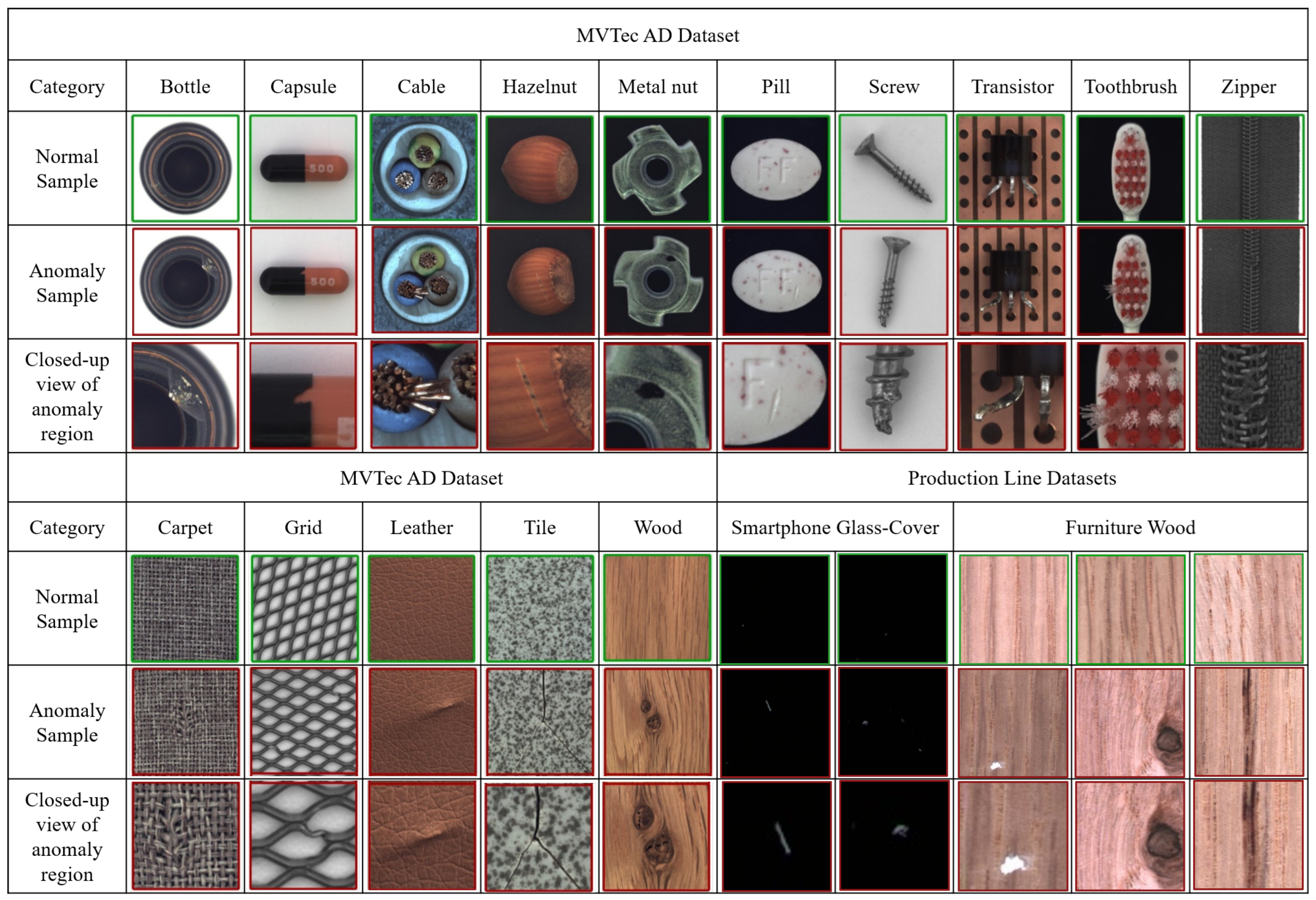

4.1. Datasets

4.1.1. MVTec AD

4.1.2. Production Line Smartphone Glass-Cover Dataset

4.1.3. Production Line Furniture Wood Dataset

4.2. Training Process

4.3. Evaluation Method

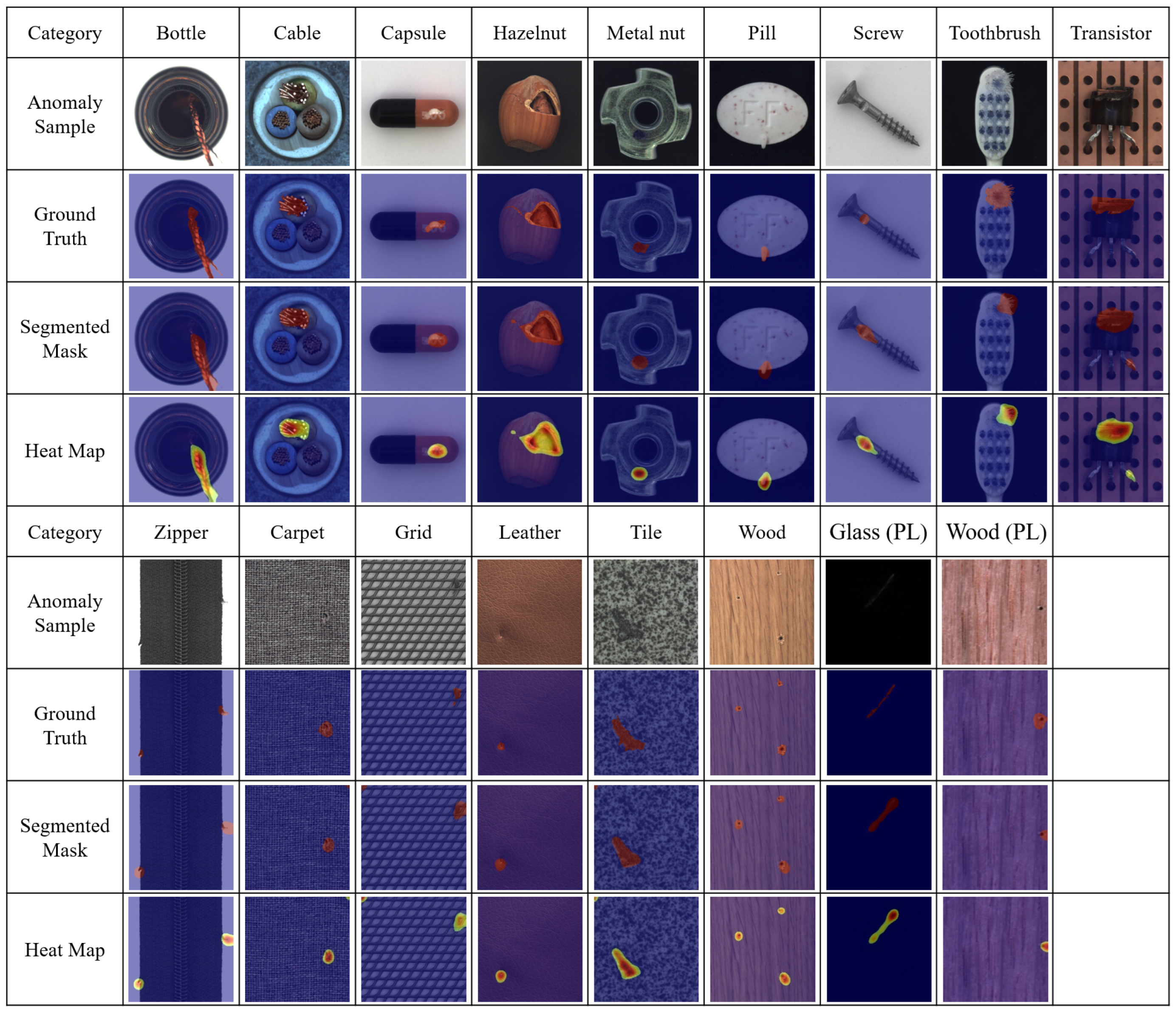

5. Experimental Results

5.1. MVTec AD Dataset

5.2. Production Line Smartphone Glass-Cover and Furniture Wood Datasets

5.3. Discussion of Inference Time

5.4. Discussion of Feature Extractor

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated Residual Transformations for Deep Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1492–1500.

- Chen, Y.; Li, J.; Xiao, H.; Jin, X.; Yan, S.; Feng, J. Dual Path Networks. arXiv 2017, arXiv:1707.01629.

- Tan, M.; Le, Q. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; Volume 97, pp. 6105–6114.

- Avdelidis, N.P.; Tsourdos, A.; Lafiosca, P.; Plaster, R.; Plaster, A.; Droznika, M. Defects Recognition Algorithm Development from Visual UAV Inspections. Sensors 2022, 22, 4682.

- Huang, Y.; Xiang, Z. RPDNet: Automatic Fabric Defect Detection Based on a Convolutional Neural Network and Repeated Pattern Analysis. Sensors 2022, 22, 6226.

- Gubins, I.; Chaillet, M.L.; van der Schot, G.; Veltkamp, R.C.; Förster, F.; Hao, Y.; Bunyak, F. SHREC 2020: Classification in cryo-electron tomograms. Comput. Graph. 2020, 279–289.

- Badmos, O.; Kopp, A.; Bernthaler, T.; Schneider, G. Image-based defect detection in lithium-ion battery electrode using convolutional neural networks. J. Intell. Manuf. 2020, 31, 885–897.

- Chen, H.; Pang, Y.; Hu, Q.; Liu, K. Solar cell surface defect inspection based on multispectral convolutional neural network. J. Intell. Manuf. 2020, 31, 453–468.

- Yuan, R.; Lv, Y.; Wang, T.; Li, S.; Li, H. Looseness monitoring of multiple M1 bolt joints using multivariate intrinsic multiscale entropy analysis and Lorentz signal-enhanced piezoelectric active sensing. Struct. Health Monit. 2022, 21, 2851–2873.

- Yuan, R.; Lv, Y.; Lu, Z.; Li, S.; Li, H. Robust fault diagnosis of rolling bearing via phase space reconstruction of intrinsic mode functions and neural network under various operating conditions. Struct. Health Monit. 2022, 14759217221091131.

- Dunphy, K.; Fekri, M.N.; Grolinger, K.; Sadhu, A. Data Augmentation for Deep-Learning-Based Multiclass Structural Damage Detection Using Limited Information. Sensors 2022, 22, 6193.

- Wang, L.; Tang, D.; Liu, C.; Nie, Q.; Wang, Z.; Zhang, L. An Augmented Reality-Assisted Prognostics and Health Management System Based on Deep Learning for IoT-Enabled Manufacturing. Sensors 2022, 22, 6472.

- Zhang, H.; Cao, J.; Zheng, D.; Yao, X.; Ling, B.W.K. Deep Learning-Based Synthesized View Quality Enhancement with DIBR Distortion Mask Prediction Using Synthetic Images. Sensors 2022, 22, 8127.

- Stern, M.L.; Schellenberger, M. Fully Convolutional Networks for Chip-wise Defect Detection Employing Photoluminescence Images. J. Intell. Manuf. 2021, 32, 113–126.

- An, J.; Cho, S. Variational Autoencoder based Anomaly Detection using Reconstruction Probability. SNU Data Min. Cent. 2015, 2, 1–18.

- Zenati, H.; Foo, C.S.; Lecouat, B.; Manek, G.; Chandrasekhar, V.R. Efficient GAN-Based Anomaly Detection. arXiv 2019, arXiv:1802.06222.

- Schlegl, T.; Seeböck, P.; Waldstein, S.M.; Schmidt-Erfurth, U.; Langs, G. Unsupervised Anomaly Detection with Generative Adversarial Networks to Guide Marker Discovery. In Proceedings of the International Conference on Information Processing in Medical Imaging, Boone, NC, USA, 25–30 June 2017; pp. 147–157.

- Akçay, S.; Atapour-Abarghouei, A.; Breckon, T.P. GANomaly: Semi-supervised Anomaly Detection via Adversarial Training. In Proceedings of the Asian Conference on Computer Vision, Perth, WA, Australia, 2–6 December 2018; pp. 622–637.

- Akçay, S.; Atapour-Abarghouei, A.; Breckon, T.P. Skip-GANomaly: Skip Connected and Adversarially Trained Encoder-Decoder Anomaly Detection. In Proceedings of the International Joint Conference on Neural Networks, Budapest, Hungary, 14–19 July 2019.

- Yang, J.; Shi, Y.; Qi, Z. DFR: Deep Feature Reconstruction for Unsupervised Anomaly Segmentation. arXiv 2020, arXiv:2012.07122.

- Tang, T.W.; Kuo, W.H.; Lan, J.H.; Ding, C.F.; Hsu, H.; Young, H.T. Anomaly Detection Neural Network with Dual Auto-Encoders GAN and Its Industrial Inspection Applications. Sensors 2020, 20, 3336.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Fei-Fei, L. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Bergmann, P.; Fauser, M.; Sattlegger, D.; Steger, C. MVTec AD—A Comprehensive Real-World Dataset for Unsupervised Anomaly Detection. Int. J. Comput. Vis. 2021, 129, 1038–1059.

- Liznerski, P.; Ruff, L.; Vandermeulen, R.A.; Franks, B.J.; Kloft, M.; Müller, K.R. Explainable Deep One-Class Classification. arXiv 2021, arXiv:2007.01760.

- Dehaene, D.; Frigo, O.; Combrexelle, S.; Eline, P. Iterative energy-based projection on a normal data manifold for anomaly localization. arXiv 2020, arXiv:2002.03734.

- Zavrtanik, V.; Kristan, M.; Skočaj, D. Reconstruction by inpainting for visual anomaly detection. Pattern Recognit. 2021, 112, 107706.

- Dehaene, D.; Eline, P. Anomaly localization by modeling perceptual features. arXiv 2020, arXiv:2008.05369.

- Kim, J.H.; Kim, D.H.; Yi, S.; Lee, T. Semi-orthogonal Embedding for Efficient Unsupervised Anomaly Segmentation. arXiv 2021, arXiv:2105.21737.

- Chen, Y.; Tian, Y.; Pang, G.; Carneiro, G. Deep One-Class Classification via Interpolated Gaussian Descriptor. arXiv 2022, arXiv:2101.10043.

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.C.; Chen, B.; Tan, M.; Adam, H. Searching for MobileNetV3. arXiv 2019, arXiv:1905.02244.

| | AnoGAN | GANomaly | Skip-GANomaly | DFR |
|---|---|---|---|---|
| Advantages | Can be trained without abnormal data. | Much shorter inspection time than AnoGAN. | Better feature reconstruction ability. | Strong feature extraction ability. |
| Limitations | Requires substantial computing resources. | Limited feature extraction and reconstruction abilities. | Cannot extract complex image features. | Limited feature reconstruction ability. |
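The comparison above turns on how well each method extracts and reconstructs features. In feature-reconstruction approaches such as DFR, a region is flagged as anomalous when the autoencoder fails to reproduce its deep features. The following NumPy sketch illustrates that scoring step under assumptions: the hypothetical `anomaly_map` uses the per-position squared L2 error across channels, `image_score` takes the maximum of the map, and neither is stated here as the paper's exact detection rule. Random arrays stand in for extracted and reconstructed features.

```python
import numpy as np


def anomaly_map(features: np.ndarray, reconstruction: np.ndarray) -> np.ndarray:
    """Squared L2 distance between features and reconstruction at every (h, w) position.

    Both inputs are (C, H, W); the result is (H, W).
    """
    if features.shape != reconstruction.shape:
        raise ValueError("features and reconstruction must have the same shape")
    return ((features - reconstruction) ** 2).sum(axis=0)


def image_score(amap: np.ndarray) -> float:
    """Image-level anomaly score taken as the map maximum (an assumed, common choice)."""
    return float(amap.max())


rng = np.random.default_rng(1)
features = rng.standard_normal((8, 4, 4))
reconstruction = features.copy()   # decoder reproduces normal regions exactly
reconstruction[:, 2, 3] += 1.0     # simulated defect the decoder fails to reproduce
amap = anomaly_map(features, reconstruction)
print(np.unravel_index(amap.argmax(), amap.shape))  # location of the simulated defect
print(round(image_score(amap), 6))
```

Because the error is computed per spatial position, the same map serves for localization (thresholding `amap`) and for image-level decisions (thresholding the score); a decoder that reconstructs features poorly raises the error on normal regions as well, which is the limitation listed for DFR.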

| Category | AnoGAN | GANomaly | Skip-GANomaly | DFR | Proposed Method |
|---|---|---|---|---|---|
| Bottle | 0.82 | 0.82 | 0.91 | 0.94 | 0.98 |
| Cable | 0.77 | 0.64 | 0.65 | 0.87 | 0.95 |
| Capsule | 0.85 | 0.75 | 0.72 | 0.97 | 0.99 |
| Carpet | 0.55 | 0.83 | 0.52 | 0.98 | 0.98 |
| Grid | 0.59 | 0.89 | 0.85 | 0.96 | 0.91 |
| Hazelnut | 0.40 | 0.94 | 0.83 | 0.98 | 0.98 |
| Leather | 0.64 | 0.81 | 0.82 | 0.99 | 0.99 |
| Metal nut | 0.44 | 0.65 | 0.67 | 0.92 | 0.98 |
| Pill | 0.76 | 0.67 | 0.80 | 0.95 | 0.98 |
| Screw | 0.79 | 0.90 | 0.92 | 0.97 | 0.98 |
| Tile | 0.52 | 0.65 | 0.68 | 0.89 | 0.97 |
| Toothbrush | 0.88 | 0.85 | 0.78 | 0.97 | 0.99 |
| Transistor | 0.78 | 0.70 | 0.81 | 0.78 | 0.87 |
| Wood | 0.65 | 0.95 | 0.92 | 0.94 | 0.97 |
| Zipper | 0.77 | 0.67 | 0.67 | 0.95 | 0.98 |
| Average | 0.68 | 0.78 | 0.77 | 0.94 | 0.97 |
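The Average row of the MVTec AD table is consistent with an unweighted mean over the 15 categories, so every category counts equally regardless of its test-set size. The short check below recomputes it for DFR and the proposed method from the values in the table and lists where the two differ; the variable names are illustrative.

```python
# Per-category scores copied from the MVTec AD table (same category order as the table).
categories = ["Bottle", "Cable", "Capsule", "Carpet", "Grid", "Hazelnut", "Leather",
              "Metal nut", "Pill", "Screw", "Tile", "Toothbrush", "Transistor",
              "Wood", "Zipper"]
dfr = [0.94, 0.87, 0.97, 0.98, 0.96, 0.98, 0.99, 0.92, 0.95, 0.97, 0.89, 0.97, 0.78, 0.94, 0.95]
proposed = [0.98, 0.95, 0.99, 0.98, 0.91, 0.98, 0.99, 0.98, 0.98, 0.98, 0.97, 0.99, 0.87, 0.97, 0.98]


def unweighted_mean(values):
    """Plain arithmetic mean: every category counts equally."""
    return sum(values) / len(values)


print(round(unweighted_mean(dfr), 2), round(unweighted_mean(proposed), 2))  # 0.94 0.97

# Per-category change of the proposed method relative to DFR.
gains = {c: p - d for c, p, d in zip(categories, proposed, dfr)}
print(max(gains, key=gains.get))               # Transistor
print([c for c, g in gains.items() if g < 0])  # ['Grid']
```

The largest gain over DFR is on Transistor (0.78 to 0.87), and Grid is the only category where DFR scores higher.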

| Category | AnoGAN | GANomaly | Skip-GANomaly | DFR | Proposed Method |
|---|---|---|---|---|---|
| Glass (PL) | 0.65 | 0.64 | 0.82 | 0.99 | 0.99 |
| Wood (PL) | 0.71 | 0.84 | 0.80 | 0.92 | 0.94 |
| Average | 0.68 | 0.74 | 0.81 | 0.96 | 0.97 |
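This excerpt does not name the metric behind the per-category scores in the tables above. If they are image-level ROC AUC values, the usual choice for MVTec AD, each one can be computed from per-image anomaly scores as the normalized Mann-Whitney statistic: the probability that a randomly chosen defective image scores higher than a randomly chosen normal one. The sketch below assumes that metric; the function name `roc_auc` and the toy scores are illustrative.

```python
from itertools import product


def roc_auc(normal_scores, anomalous_scores):
    """ROC AUC as the fraction of (anomalous, normal) pairs ranked correctly; ties count 1/2."""
    if not normal_scores or not anomalous_scores:
        raise ValueError("need at least one normal and one anomalous score")
    wins = 0.0
    for a, n in product(anomalous_scores, normal_scores):
        if a > n:
            wins += 1.0
        elif a == n:
            wins += 0.5
    return wins / (len(normal_scores) * len(anomalous_scores))


# 5 of the 6 (anomalous, normal) pairs are ranked correctly, so the AUC is 5/6.
print(roc_auc([0.1, 0.2, 0.3], [0.25, 0.4]))
```

The pairwise loop is quadratic in the number of test images, which is fine for illustration; for large test sets a sort-based implementation such as scikit-learn's `roc_auc_score` gives the same value more efficiently.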

| | AnoGAN | GANomaly | Skip-GANomaly | DFR | Proposed Method |
|---|---|---|---|---|---|
| Inference time (ms) | 7025 | 2.68 | 2.82 | 10.10 | 11.20 |

| Category | MobileNet (S) | MobileNet (L) | VGG19 | ResNeXt50 | ResNeXt101 |
|---|---|---|---|---|---|
| Bottle | 0.94 | 0.92 | 0.96 | 0.98 | 0.98 |
| Cable | 0.87 | 0.88 | 0.92 | 0.94 | 0.95 |
| Capsule | 0.94 | 0.94 | 0.98 | 0.99 | 0.99 |
| Carpet | 0.88 | 0.90 | 0.98 | 0.95 | 0.98 |
| Grid | 0.78 | 0.86 | 0.98 | 0.90 | 0.91 |
| Hazelnut | 0.95 | 0.95 | 0.98 | 0.98 | 0.98 |
| Leather | 0.98 | 0.98 | 0.98 | 0.98 | 0.99 |
| Metal nut | 0.87 | 0.95 | 0.94 | 0.98 | 0.98 |
| Pill | 0.92 | 0.92 | 0.97 | 0.96 | 0.98 |
| Screw | 0.92 | 0.95 | 0.98 | 0.98 | 0.98 |
| Tile | 0.98 | 0.95 | 0.91 | 0.97 | 0.97 |
| Toothbrush | 0.95 | 0.94 | 0.98 | 0.98 | 0.99 |
| Transistor | 0.74 | 0.66 | 0.79 | 0.82 | 0.87 |
| Wood | 0.93 | 0.94 | 0.95 | 0.97 | 0.97 |
| Zipper | 0.78 | 0.88 | 0.97 | 0.96 | 0.98 |
| Glass (PL) | 0.99 | 0.99 | 0.99 | 0.99 | 0.99 |
| Wood (PL) | 0.92 | 0.88 | 0.93 | 0.93 | 0.94 |
| Average | 0.90 | 0.91 | 0.95 | 0.96 | 0.97 |

| Category | Block 1 | Block 2 | Block 3 | Blocks 1, 2 | Blocks 2, 3 | Blocks 1, 2, 3 |
|---|---|---|---|---|---|---|
| Bottle | 0.84 | 0.96 | 0.97 | 0.94 | 0.97 | 0.98 |
| Cable | 0.88 | 0.92 | 0.95 | 0.91 | 0.95 | 0.95 |
| Capsule | 0.93 | 0.97 | 0.98 | 0.96 | 0.98 | 0.99 |
| Carpet | 0.93 | 0.92 | 0.95 | 0.94 | 0.96 | 0.98 |
| Grid | 0.88 | 0.91 | 0.87 | 0.92 | 0.90 | 0.91 |
| Hazelnut | 0.97 | 0.96 | 0.97 | 0.97 | 0.98 | 0.98 |
| Leather | 0.98 | 0.99 | 0.97 | 0.98 | 0.97 | 0.99 |
| Metal nut | 0.96 | 0.96 | 0.95 | 0.96 | 0.97 | 0.98 |
| Pill | 0.92 | 0.95 | 0.97 | 0.95 | 0.98 | 0.98 |
| Screw | 0.97 | 0.97 | 0.96 | 0.97 | 0.97 | 0.98 |
| Tile | 0.97 | 0.97 | 0.95 | 0.97 | 0.96 | 0.97 |
| Toothbrush | 0.92 | 0.96 | 0.98 | 0.96 | 0.98 | 0.99 |
| Transistor | 0.66 | 0.71 | 0.88 | 0.71 | 0.87 | 0.87 |
| Wood | 0.96 | 0.96 | 0.94 | 0.96 | 0.96 | 0.97 |
| Zipper | 0.92 | 0.94 | 0.96 | 0.94 | 0.97 | 0.98 |
| Glass (PL) | 0.99 | 0.99 | 0.99 | 0.99 | 0.99 | 0.99 |
| Wood (PL) | 0.88 | 0.89 | 0.92 | 0.89 | 0.93 | 0.94 |
| Average | 0.92 | 0.94 | 0.95 | 0.94 | 0.96 | 0.97 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tang, T.-W.; Hsu, H.; Huang, W.-R.; Li, K.-M. Industrial Anomaly Detection with Skip Autoencoder and Deep Feature Extractor. Sensors 2022, 22, 9327. https://doi.org/10.3390/s22239327
