An Entropy Analysis-Based Window Size Optimization Scheme for Merging LiDAR Data Frames

Abstract

1. Introduction

2. Related Works

2.1. Mobile LiDAR Systems

2.2. Point Cloud Data Merging

3. Entropy Analysis-Based Window Size Optimization Scheme

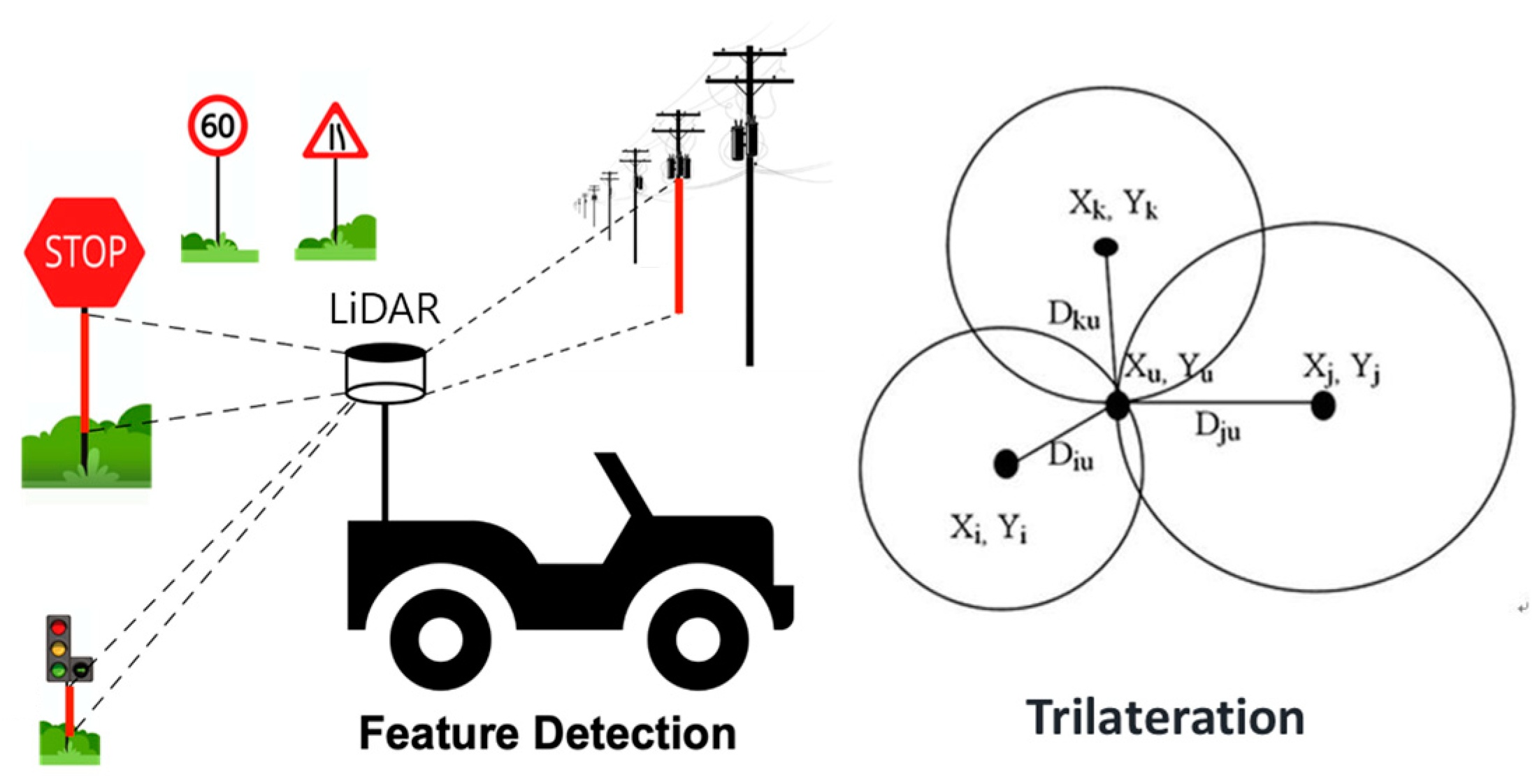

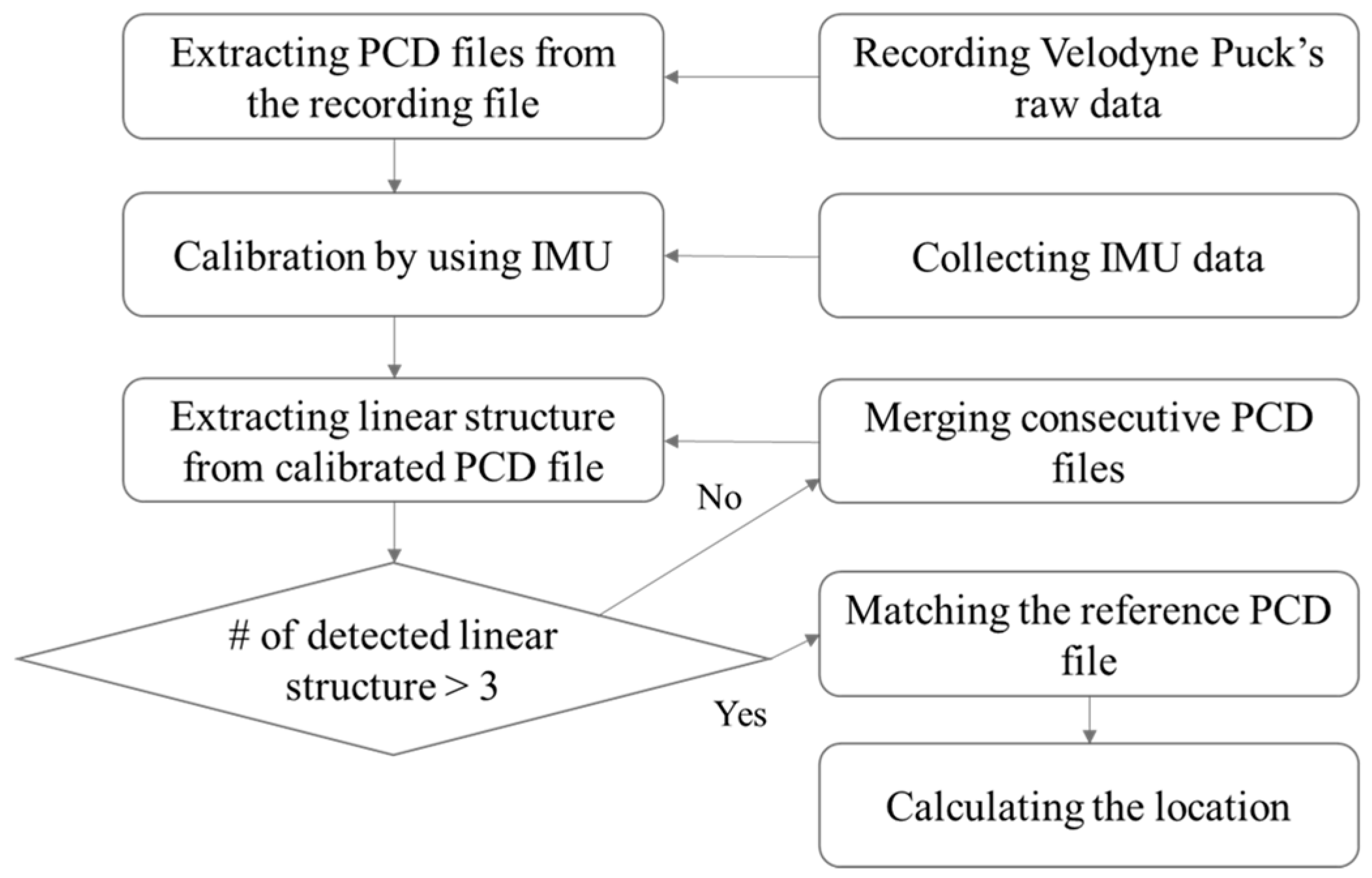

3.1. Application Scenario

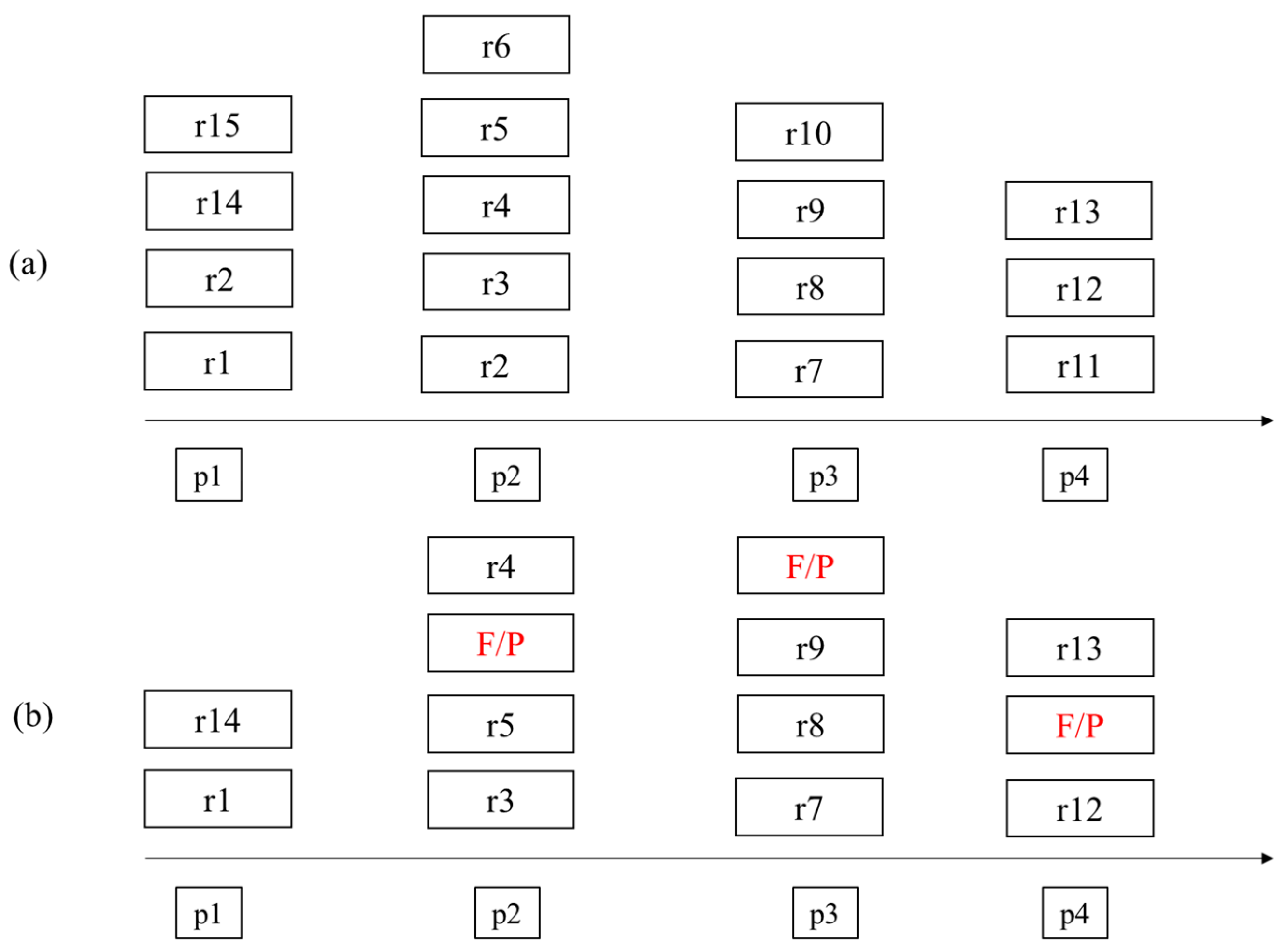

3.2. System Modeling

3.3. Window Size Optimization Algorithm

| Algorithm 1 Finding Optimal Window Size | |
|---|---|
| Input: I, TI, R, N | |
| Output: Optimal window size ω | |
| 1 | Calculate ER(X) |
| 2 | Vmin = INF |
| 3 | for i = 2 to 10 do |
| 4 | Ei(X) = 0 |
| 5 | for j = 1 to TI do |
| 6 | Ei(X) += |
| 7 | end for |
| 8 | if < Vmin then |
| 9 | Vmin |
| 10 | ω = i |
| 11 | end if |
| 12 | end for |
| 13 | return ω |
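
The search in Algorithm 1 can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: the expressions in steps 6, 8, and 9 are not recoverable from the listing, so the per-point term defaults to a Shannon-style −p·log2(p) on the probability of correct detection, and the comparison is assumed to be the absolute gap between the indicator at window size i and the ideal indicator ER(X). The names `shannon_term`, `entropy_indicator`, `find_optimal_window`, and the `probs_by_window` input layout are hypothetical.

```python
import math
from typing import Callable, Dict, List, Optional


def shannon_term(p: float) -> float:
    """Assumed per-point term: -p * log2(p), taken as 0 when p <= 0."""
    if p <= 0.0:
        return 0.0
    return -p * math.log2(p)


def entropy_indicator(
    probs: List[float],
    term: Callable[[float], float] = shannon_term,
) -> float:
    """Sum the per-point terms over all points of interest (steps 4-7)."""
    return sum(term(p) for p in probs)


def find_optimal_window(
    probs_by_window: Dict[int, List[float]],
    ideal_probs: List[float],
    term: Callable[[float], float] = shannon_term,
) -> Optional[int]:
    """Return the window size in 2..10 whose indicator is closest to the ideal.

    probs_by_window maps a window size to the per-point probabilities of
    correct detection measured at that window size (hypothetical layout).
    """
    e_ref = entropy_indicator(ideal_probs, term)      # step 1: ER(X)
    v_min = math.inf                                  # step 2
    omega: Optional[int] = None
    for w in range(2, 11):                            # step 3: i = 2..10
        if w not in probs_by_window:
            continue                                  # no measurements for this size
        e_w = entropy_indicator(probs_by_window[w], term)
        gap = abs(e_w - e_ref)                        # assumed form of steps 8-9
        if gap < v_min:
            v_min = gap
            omega = w                                 # step 10
    return omega                                      # step 13
```

For instance, with `probs_by_window = {2: [0.5, 0.5], 3: [1.0, 1.0]}` and `ideal_probs = [1.0, 1.0]`, the sketch selects window size 3, since its indicator equals the ideal value of 0. Note that the default term also evaluates to 0 when p = 0, so a different `term` can be passed in if that behavior does not match the intended indicator.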

4. Evaluation Results

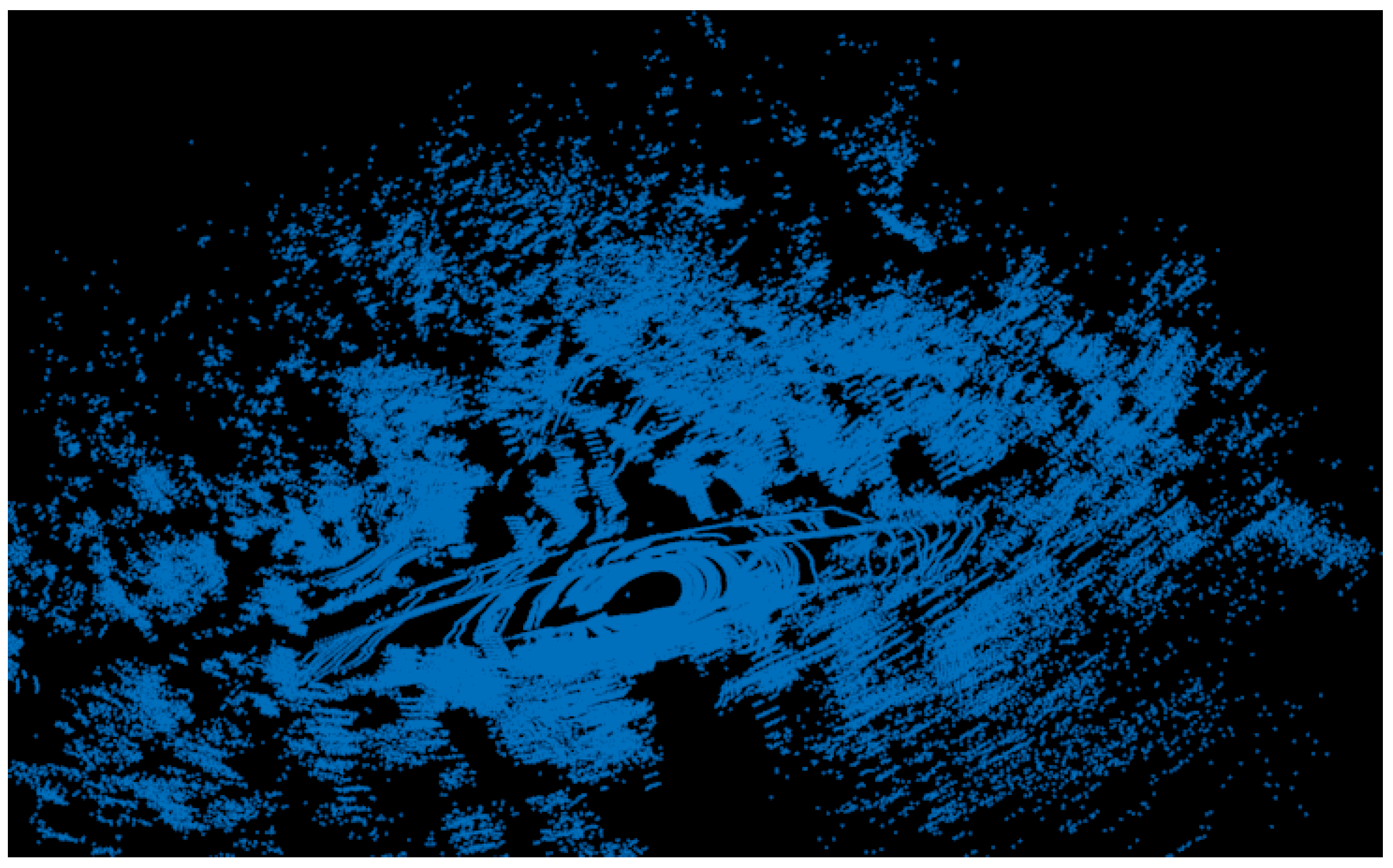

4.1. Experimental Environment

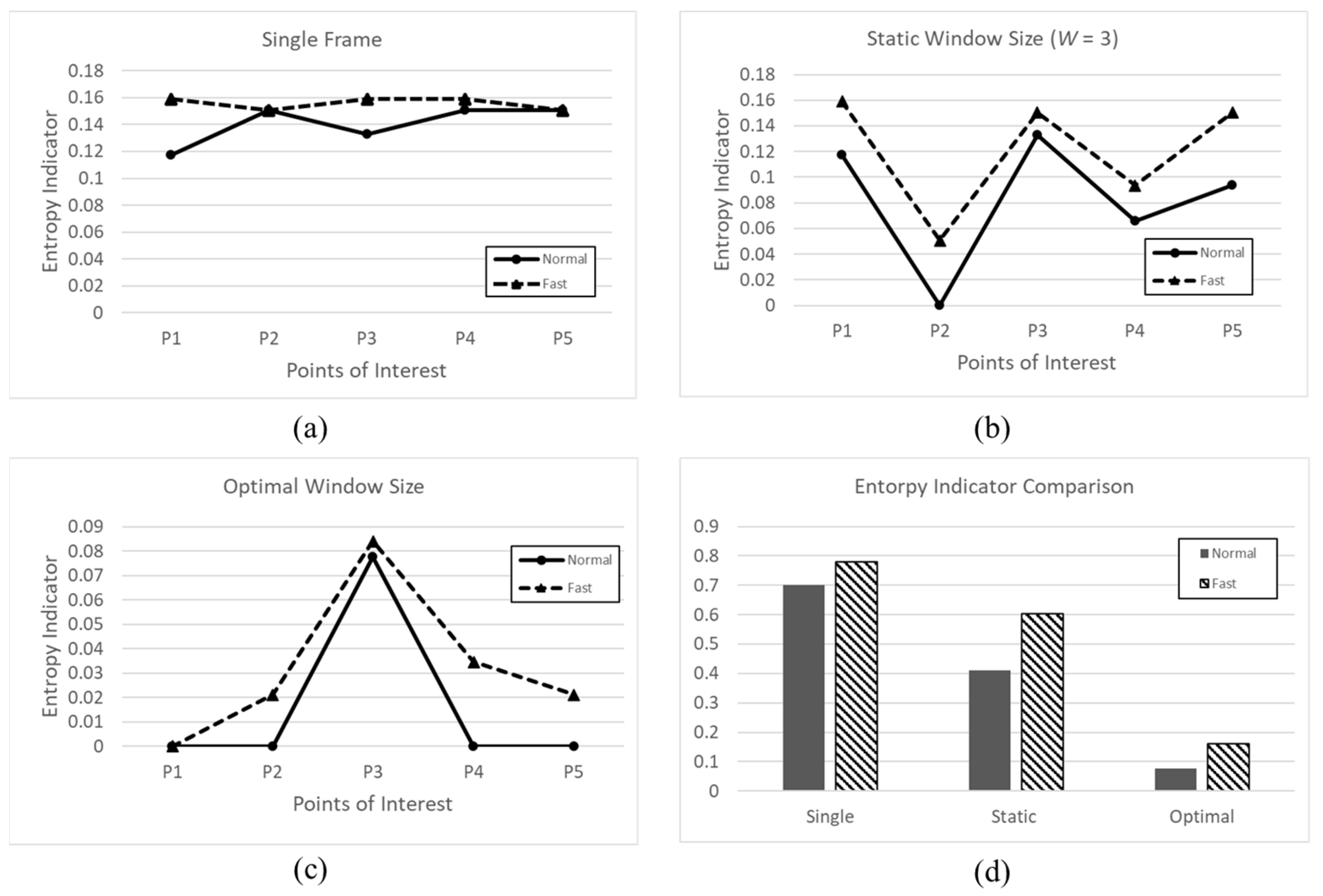

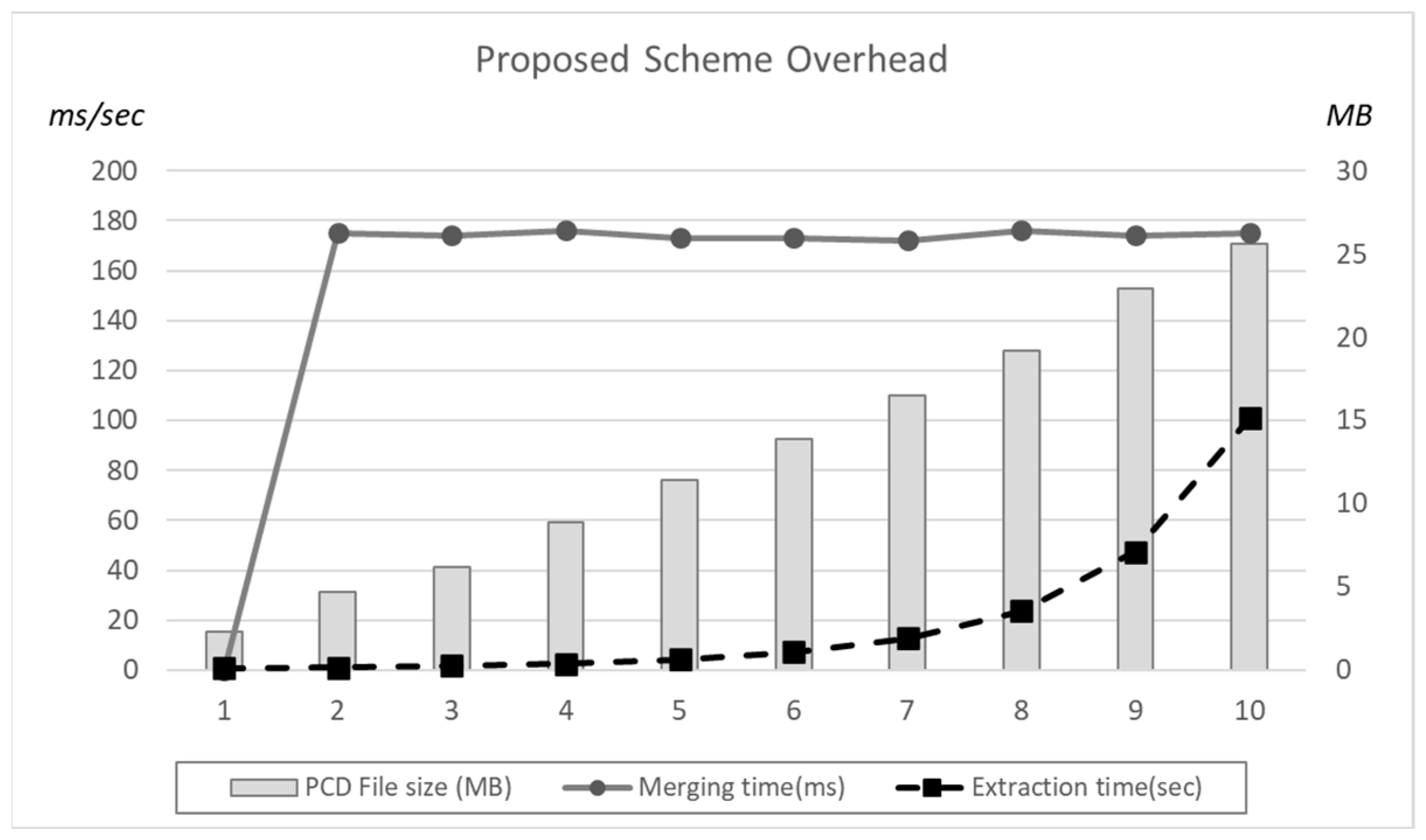

4.2. Effects of the Proposed Window Mechanism

4.3. Entropy Indicator Comparison

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

| | Velodyne Puck | Velodyne Ultra Puck |
|---|---|---|
| # of channels | 16 | 32 |
| Max range | 100 m | 200 m |
| Accuracy | ±3 cm | ±3 cm |
| FoV | 30° (−15° to +15°) | 40° (−25° to +15°) |
| Rotation rate | 5–20 Hz | 5–20 Hz |
| Vertical angular resolution | 2° | 0.33° |
| Horizontal angular resolution | 0.1–0.4° | 0.1–0.4° |
| # of frames | 10 | 10 |
| Weight | 830 g | 925 g |

| Notation | Description |
|---|---|
| E(X) | Indicator of system X’s entropy |
| I | Set of points of interest |
| TI | Total number of points of interest |
| R | Set of IDs of the referenced linear structures |
| W | Window size (2 ≤ W ≤ 10) |
| N | Total number of extracted linear structures at each point of interest in the ideal result (e.g., N = 16 in Figure 5a) |
| | The number of detected linear structures at point i (pi) |
| | The number of incorrectly detected linear structures at point i (pi) |
| | The probability of correctly detected linear structures at point i (pi) |
| ER(X) | Entropy indicator of the ideal result |
| EW(X) | Entropy indicator of the actual result at window size W |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kim, T.; Jung, J.; Min, H.; Jung, Y.-H. An Entropy Analysis-Based Window Size Optimization Scheme for Merging LiDAR Data Frames. Sensors 2022, 22, 9293. https://doi.org/10.3390/s22239293
