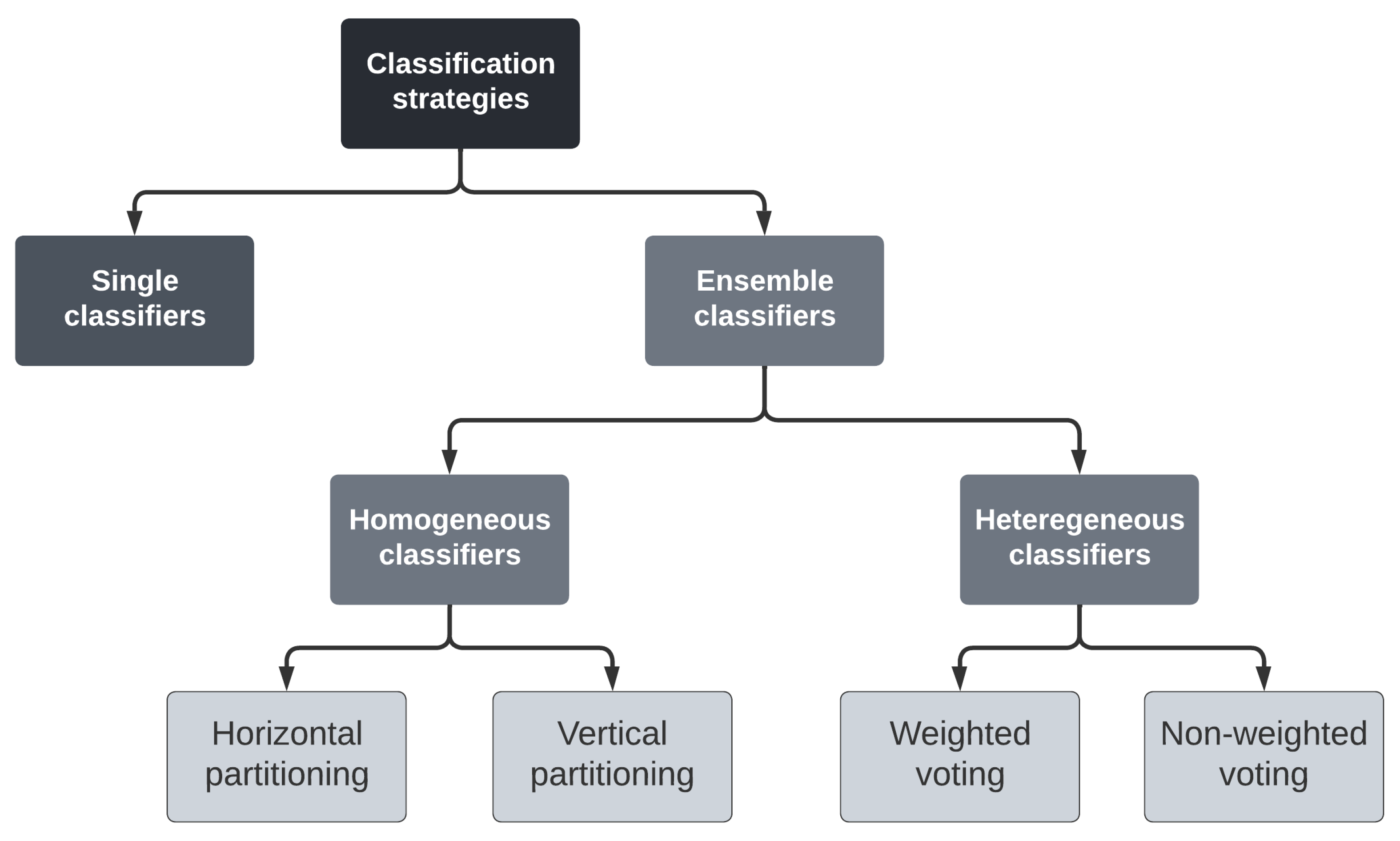

In the case of ensemble classifiers, two options are possible. The first option is called Heterogeneous Ensemble Classifiers, which consists of training different classifiers using the same training dataset. The second option is called Homogeneous Ensemble Classifiers, which consists of training different instances of the same classifier using different partitions of the training dataset.

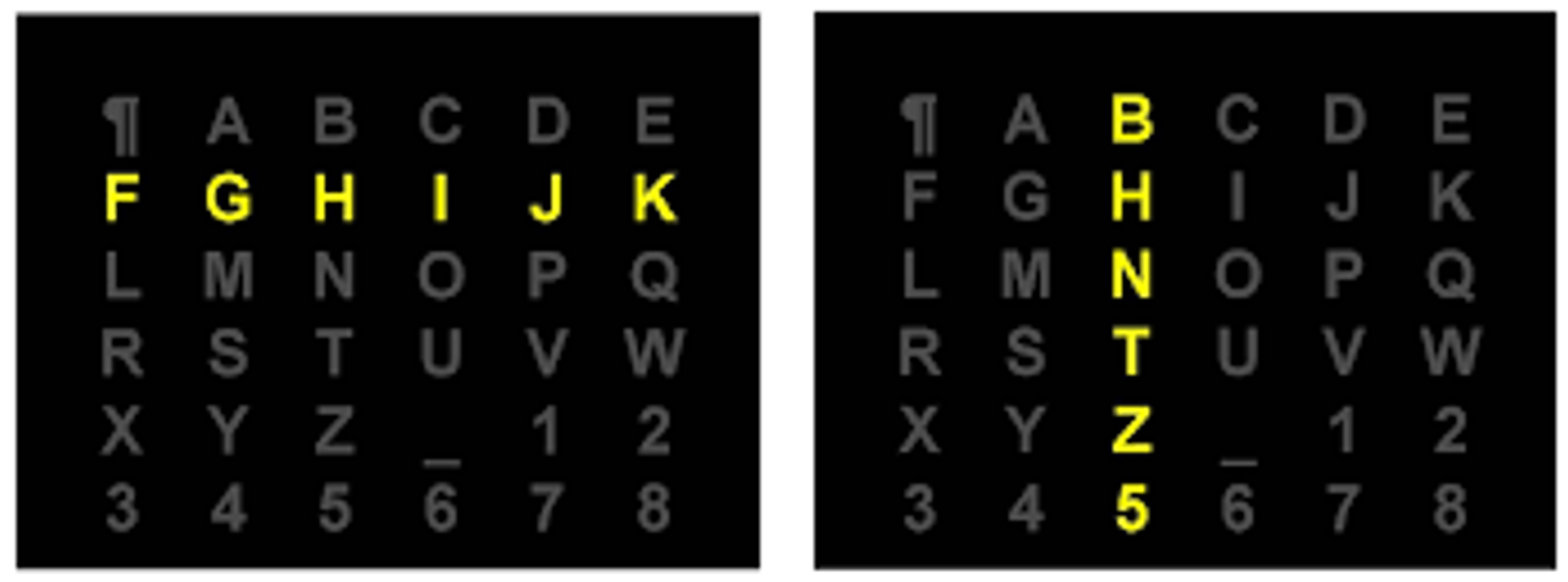

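Let us consider a training dataset, denoted D, comprising the post-stimulus signals recorded during the selection of a set of symbols (commands) of M. Every selection is made up of several sequences of intensifications, so each element of D can be identified as the ith sequence that occurs during the jth selection. The dataset D therefore consists of the post-stimulus training signals of all sequences of all selections.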

4.2.1. Heterogeneous Ensemble Classifiers Strategy

This step is often known as decision-level fusion, where different modalities are used to train separate models and an aggregation function combines the models' prediction results into the final decision. Here, the whole training dataset D is used to train N different 2-class classifiers, leading to an ensemble of distinct classifiers. Every classifier is trained to predict whether a signal contains a P300 response or not. Every classifier builds its own prediction model and customizes the prediction method according to that model. Since every classifier is trained on the whole of D, the total number of trials used to train and build the model of every classifier equals the number of post-stimulus signals in D.

There are two different approaches to building the final decision of this classification strategy: non-weighted voting and weighted voting.

- a. Non-Weighted Voting

In this case, the different classifiers play the same role in predicting the selected symbol/command. Given a selection, its sequences of intensifications are parsed simultaneously by the N distinct classifiers. Every classifier applies its prediction method to the selection and returns a row vector of scores, one per row/column of M. The row vectors obtained by the different classifiers are then combined, each with the same importance, to calculate the global decision. The global prediction function returns a row vector whose jth entry is the probability that the intensification which occurs in the jth row/column of M has elicited a P300 response.

Given a selection, we can determine the user’s desired symbol by maximizing the results of the global prediction function. Using the values of the returned row vector, we identify the column and the row that have most probably elicited P300 responses. Let y be the number of the column of M that has most probably elicited P300 responses; y is thus the column number that maximizes the score. Likewise, let x be the number of the row of M that has most probably elicited P300 responses; x is the row number that maximizes the score.

Thus, we consider the symbol of M located at row x and column y to be the user’s most probable desired symbol.
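As an illustration of the non-weighted voting step, the following is a minimal sketch rather than the exact formulation used here. It assumes that the per-classifier row vectors are combined by a plain entry-wise average, and that the first entries of each vector score the columns of M while the remaining entries score the rows. The function names `combine_unweighted` and `pick_symbol`, the 2 x 2 matrix, and the score values are hypothetical.

```python
from typing import List, Tuple


def combine_unweighted(scores: List[List[float]]) -> List[float]:
    """Average the per-classifier score vectors entry by entry.

    Assumption: a plain mean is used as the aggregation function.
    """
    n_classifiers = len(scores)
    return [sum(entries) / n_classifiers for entries in zip(*scores)]


def pick_symbol(global_scores: List[float], n_cols: int) -> Tuple[int, int]:
    """Return (x, y): the 1-based row and column with the highest scores.

    Assumption: the first n_cols entries score the columns of M,
    and the remaining entries score its rows.
    """
    col_scores = global_scores[:n_cols]
    row_scores = global_scores[n_cols:]
    y = col_scores.index(max(col_scores)) + 1
    x = row_scores.index(max(row_scores)) + 1
    return x, y


# Hypothetical scores from N = 3 classifiers for a 2 x 2 matrix M:
# entries 0-1 score the columns, entries 2-3 score the rows.
classifier_scores = [
    [0.2, 0.7, 0.6, 0.1],
    [0.3, 0.8, 0.5, 0.4],
    [0.1, 0.6, 0.7, 0.1],
]
global_scores = combine_unweighted(classifier_scores)
x, y = pick_symbol(global_scores, n_cols=2)
print(x, y)  # prints "1 2": row 1, column 2
```

Because every classifier contributes equally, a single poorly performing classifier can shift the averaged scores as much as an accurate one.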

- b. Weighted Voting

In this case, we apply the same strategy as the non-weighted voting approach, except that the decisions of the involved classifiers are weighted to generate the global decision. Every classifier is assigned a weight that corresponds to its accuracy during the training phase. The results obtained by the different classifiers are then combined, according to these weights, by a prediction function that calculates the global decision. This function returns a row vector whose jth entry is the probability that the intensification that occurs in the jth row/column of M has elicited a P300 response.

Given a selection, we can determine the user’s desired symbol by maximizing the results of the prediction function. Using the values of the returned row vector, we identify the column and the row that have most probably elicited P300 responses. Let y and x be the numbers of the column and the row of M that have most probably elicited P300 responses, respectively; y and x are obtained by applying Equations (22) and (23), respectively.

Thus, we consider the symbol of M located at row x and column y to be the user’s most probable desired symbol.
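The effect of weighting can be sketched as follows. This is only an illustrative example: it assumes that the global score is the accuracy-weighted mean of the classifiers' row vectors, normalized by the sum of the weights. The function name `combine_weighted`, the score values, and the training accuracies are hypothetical.

```python
from typing import List


def combine_weighted(scores: List[List[float]], weights: List[float]) -> List[float]:
    """Combine per-classifier score vectors using one weight per classifier.

    Assumption: the weighted sum is normalized by the total weight.
    """
    if len(scores) != len(weights):
        raise ValueError("one weight is required per classifier")
    total = sum(weights)
    n_entries = len(scores[0])
    return [
        sum(w * s[j] for w, s in zip(weights, scores)) / total
        for j in range(n_entries)
    ]


# Hypothetical scores of three classifiers for two candidate rows/columns.
classifier_scores = [
    [0.8, 0.2],
    [0.4, 0.6],
    [0.4, 0.6],
]
# Hypothetical training accuracies used as weights.
accuracies = [0.5, 0.9, 0.9]

weighted = combine_weighted(classifier_scores, accuracies)
unweighted = combine_weighted(classifier_scores, [1.0, 1.0, 1.0])
print(weighted, unweighted)
```

With equal weights the first entry obtains the highest score, whereas with the accuracy weights the two more accurate classifiers prevail and the second entry wins, which is the behaviour weighted voting is meant to provide.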

4.2.2. Homogeneous Ensemble Classifiers Strategy

In this case, the training dataset D is split into disjoint partitions (portions). Every partition is used to train an instance of the same 2-class classifier, leading to an ensemble of homogeneous classifiers. Every classifier is thus trained to predict whether a signal contains a P300 response or not. The training dataset D can be split using two different approaches: vertical or horizontal partitioning.

- a. Horizontal Partitioning

Recall that the dataset contains a set of selections. Every selection is composed of sequences of intensifications, each of which is identified as the jth sequence of the ith selection.

In the horizontal partitioning strategy, the signals of the training dataset D are spread over as many partitions as there are sequences per selection. The ith partition contains the ith sequence of intensifications of every selection: the first partition contains all first sequences of the selections, the second partition contains all second sequences of the selections, and so on. The obtained partitions must be pairwise disjoint and must together cover D.

Every partition is composed of one sequence of intensifications per selection. Hence, a partition contains the post-stimulus signals of all the intensifications in those sequences.

Every partition is used to train a single 2-class classifier, leading to an ensemble with one classifier per partition. Every classifier is thus trained to predict whether a signal corresponds to a P300 response or not. The total number of trials used to train every classifier is therefore the number of post-stimulus signals in its partition.

Given a selection, its sequences of intensifications are parsed simultaneously by the classifiers. Every classifier processes a single sequence of the selection: the ith classifier parses the ith sequence. Every classifier applies its prediction method to that sequence and returns a row vector whose jth entry is the probability that the intensification, whatever its order/rank, of the jth row/column of M that happens during the ith sequence of intensifications has elicited a P300 response. The row vectors calculated by the different classifiers are then combined to calculate the global decision. The global prediction function returns a row vector whose jth entry is the probability that the intensifications that occur in the jth row/column of M have elicited P300 responses.

Given a selection, we can identify the column and the row that have most probably elicited P300 responses by maximizing the results of the prediction function. Let y and x be the numbers of the column and the row of M that have most probably elicited P300 responses, respectively; y and x are obtained by applying Equations (22) and (23), respectively. Thus, we consider the symbol of M located at row x and column y to be the user’s most probable desired symbol.
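The horizontal split can be sketched as follows. This is a minimal example under stated assumptions: the training dataset is represented as a nested list in which `dataset[s][q]` holds the signals of sequence q of selection s, and every selection has the same number of sequences. The function name `horizontal_partitions` and the placeholder labels are hypothetical.

```python
from typing import List, TypeVar

T = TypeVar("T")


def horizontal_partitions(dataset: List[List[T]]) -> List[List[T]]:
    """Group the sequences of all selections by their rank.

    Partition q collects sequence q of every selection, so there is
    one partition (and later one classifier) per sequence rank.
    """
    n_sequences = len(dataset[0])
    if any(len(selection) != n_sequences for selection in dataset):
        raise ValueError("every selection must have the same number of sequences")
    return [[selection[q] for selection in dataset] for q in range(n_sequences)]


# Hypothetical dataset: 3 selections, each with 2 sequences.
# Strings stand in for the post-stimulus signals of one sequence.
dataset = [
    ["sel1-seq1", "sel1-seq2"],
    ["sel2-seq1", "sel2-seq2"],
    ["sel3-seq1", "sel3-seq2"],
]
partitions = horizontal_partitions(dataset)
for rank, partition in enumerate(partitions, start=1):
    print(rank, partition)
```

Each partition holds one sequence per selection, so every classifier is trained on a fraction of D whose size shrinks as the number of sequences per selection grows.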

- b. Vertical Partitioning

In the vertical partitioning strategy, the selections are spread over a set of classifiers. We define a collection composed of N different instances of the same classifier and split the selections equally over these instances. Thus, every classifier is trained on a subset of the training dataset composed of an equal share of the selections, and every instance of the classifier is assigned the corresponding partition.

A partition contains successive selections and satisfies the properties described by expression (33).

Every partition contains the post-stimulus signals of all the sequences of its selections.

Every partition is used to train a 2-class classifier, leading to an ensemble of N classifiers. Every classifier is thus trained to predict whether a signal contains a P300 response or not. The total number of trials used to train every classifier is therefore the number of post-stimulus signals in its partition.

Given a selection, its sequences of intensifications are parsed simultaneously by the N classifiers. Every classifier applies its prediction method and returns a row vector (Equation (18)) whose values are calculated using Equation (19). The row vectors calculated by the different classifiers are then combined to calculate the global decision. The global prediction function returns a row vector whose jth entry is the probability that the intensification that occurs in the jth row/column of M has elicited a P300 response.

Given a selection, we can identify the column and the row that have most probably elicited P300 responses by maximizing the results of the prediction function. Let y and x be the numbers of the column and the row of M that have most probably elicited P300 responses, respectively; y and x are obtained by applying Equations (22) and (23), respectively. Thus, we consider the symbol of M located at row x and column y to be the user’s most probable desired symbol.
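The vertical split can be sketched in the same spirit. This is an illustrative example only: it assumes that the dataset is a list with one entry per selection and that the number of selections is divisible by N, so that the split is exactly equal. The function name `vertical_partitions` and the placeholder labels are hypothetical.

```python
from typing import List, TypeVar

T = TypeVar("T")


def vertical_partitions(dataset: List[T], n_classifiers: int) -> List[List[T]]:
    """Split the selections into N blocks of successive selections.

    Assumption: the number of selections is a multiple of N, so every
    classifier instance receives the same number of selections.
    """
    if n_classifiers <= 0 or len(dataset) % n_classifiers != 0:
        raise ValueError("selections must divide evenly over the classifiers")
    size = len(dataset) // n_classifiers
    return [dataset[k * size:(k + 1) * size] for k in range(n_classifiers)]


# Hypothetical dataset: 6 selections, each standing for all of its sequences.
dataset = ["sel1", "sel2", "sel3", "sel4", "sel5", "sel6"]
partitions = vertical_partitions(dataset, n_classifiers=3)
for k, partition in enumerate(partitions, start=1):
    print(k, partition)
```

Unlike the horizontal split, every classifier here sees all sequences of its selections, so at prediction time each of the N classifiers can parse the complete selection.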