Achieving Adaptive Visual Multi-Object Tracking with Unscented Kalman Filter

Abstract

1. Introduction

- Through an in-depth study of image motion characteristics, we propose a general accelerated motion model for multiple objects that resembles variable-acceleration motion. On top of this model, we build a multi-object tracking system based on the unscented Kalman filter to improve tracking accuracy.

- To handle occlusion during tracking, we design an improved DeepSORT algorithm with an adaptive factor to improve tracking robustness. The algorithm adapts better to fast object motion and reduces the observation noise caused by occlusion.

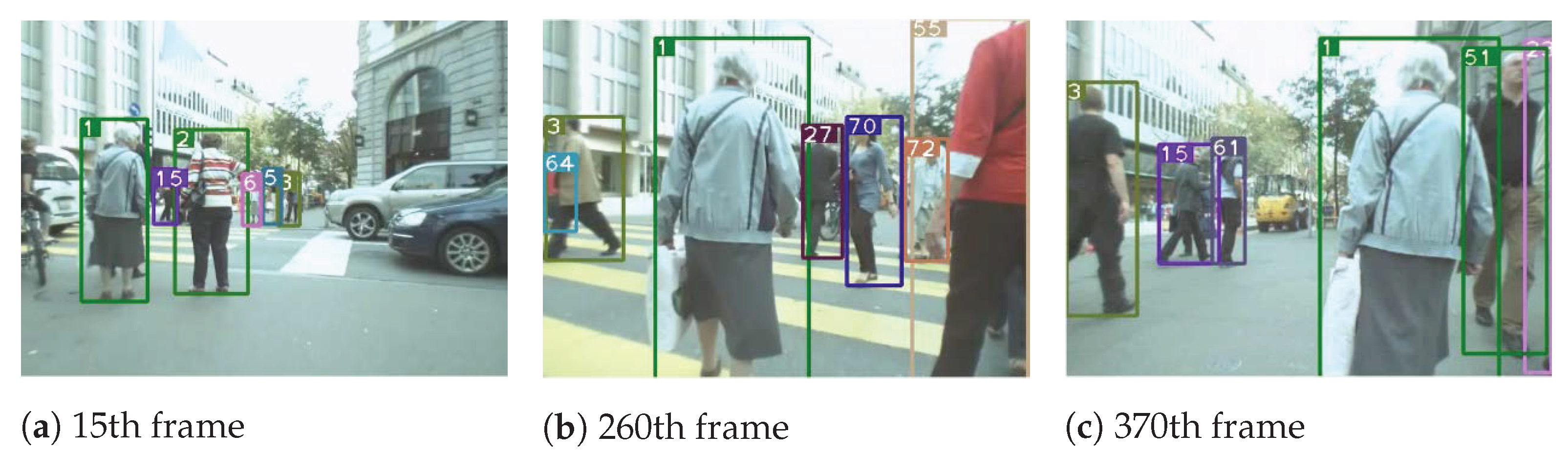

- We conduct extensive experiments to evaluate tracking performance, comparing the improved DeepSORT algorithm with DeepSORT on the MOT16 dataset. The results indicate that the improved DeepSORT achieves better tracking speed and accuracy, especially with dynamic cameras.
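
The first two contributions can be made concrete with a minimal sketch of one unscented Kalman filter step. Everything specific here is an assumption for illustration and not the paper's formulation: the state is a single image coordinate `[position, velocity, acceleration]`, the detector is assumed to report position only, and the adaptive factor `lam` is a simple innovation-based rule that inflates the observation noise `R` when a measurement is far from the prediction (as might happen under occlusion). The names `sigma_points`, `motion`, `measure`, `weighted_cov`, and `ukf_step` are hypothetical.

```python
import numpy as np


def sigma_points(x, P, kappa=0.0):
    """Basic unscented transform: 2n+1 sigma points and their weights."""
    n = x.size
    L = np.linalg.cholesky((n + kappa) * P)   # L @ L.T == (n + kappa) * P
    pts = np.vstack([x, x + L.T, x - L.T])    # rows of L.T are the columns of L
    w = np.full(2 * n + 1, 0.5 / (n + kappa))
    w[0] = kappa / (n + kappa)
    return pts, w


def motion(x, dt):
    """Assumed accelerated motion model for one coordinate: [p, v, a]."""
    p, v, a = x
    return np.array([p + v * dt + 0.5 * a * dt**2, v + a * dt, a])


def measure(x):
    """Assumed observation model: the detector reports position only."""
    return x[:1]


def weighted_cov(da, db, w):
    """Weighted (cross-)covariance of two sets of sigma-point deviations."""
    return da.T @ (w[:, None] * db)


def ukf_step(x, P, z, Q, R, dt=1.0, adapt=True):
    """One predict/update cycle; returns the new state, covariance, and factor."""
    # Predict: push sigma points through the motion model.
    pts, w = sigma_points(x, P)
    prop = np.array([motion(p, dt) for p in pts])
    x_pred = w @ prop
    P_pred = weighted_cov(prop - x_pred, prop - x_pred, w) + Q

    # Update: redraw sigma points around the prediction and project them.
    pts, w = sigma_points(x_pred, P_pred)
    obs = np.array([measure(p) for p in pts])
    z_pred = w @ obs
    dz, dx = obs - z_pred, pts - x_pred
    S0 = weighted_cov(dz, dz, w)              # innovation covariance without R
    nu = z - z_pred                           # innovation

    # Hypothetical adaptive factor: scale R by the normalized innovation
    # squared whenever it exceeds its nominal expected value (1 per dimension).
    lam = 1.0
    if adapt:
        nis = float(nu @ np.linalg.solve(S0 + R, nu))
        lam = max(1.0, nis / nu.size)

    S = S0 + lam * R
    K = weighted_cov(dx, dz, w) @ np.linalg.inv(S)
    return x_pred + K @ nu, P_pred - K @ S @ K.T, lam


# Usage: a consistent measurement versus an outlier far from the prediction.
x0, P0 = np.array([0.0, 1.0, 0.0]), np.eye(3)
Q0, R0 = 0.01 * np.eye(3), np.array([[1.0]])
_, _, lam_consistent = ukf_step(x0, P0, np.array([1.0]), Q0, R0)
_, _, lam_outlier = ukf_step(x0, P0, np.array([50.0]), Q0, R0)
print(lam_consistent, lam_outlier)
```

With this rule the factor stays at 1 for measurements that agree with the prediction and grows for outliers, so a disturbed detection pulls the track less. Other adaptive schemes (for example, fading-memory or covariance-matching factors) would slot into the same place.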

2. Related Work

2.1. The Object Detection Methods

2.2. The Object Tracking Algorithm

2.3. YOLO and DeepSORT Applications

3. Existing Detection-Based Multi-Object Tracking Method

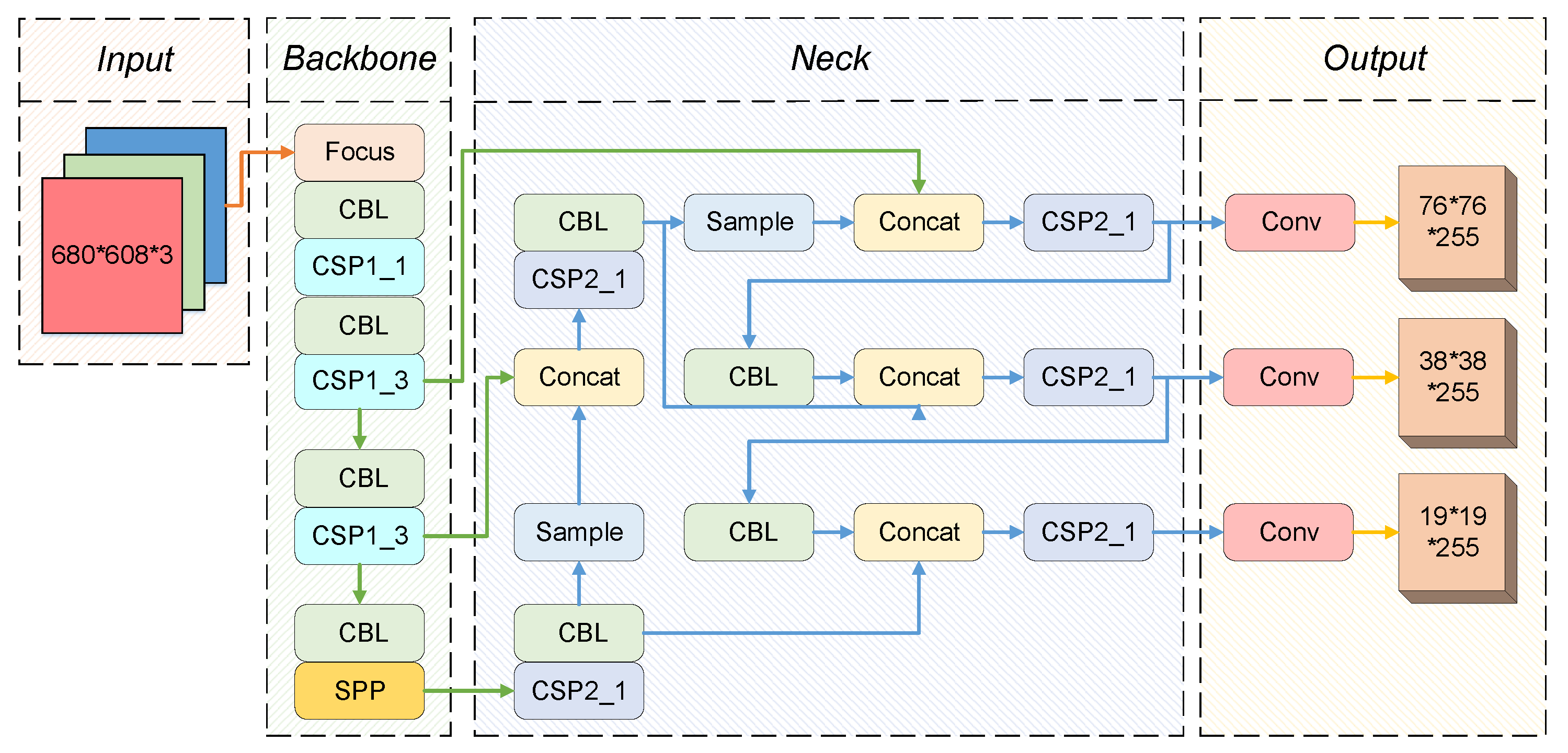

3.1. The Object Detection of YOLOv5

3.2. DeepSORT Object Tracking Algorithm

3.3. Classical Tracking Model

4. The Proposed General Object Tracking Model

4.1. General Motion Model

4.2. Multi-Object Tracking System

5. The Improved Multi-Object Tracking Algorithm

5.1. Unscented Kalman Filter-Based Object Tracking Algorithm

5.2. Improved Unscented Kalman Filter Algorithm

6. Experimental Evaluation

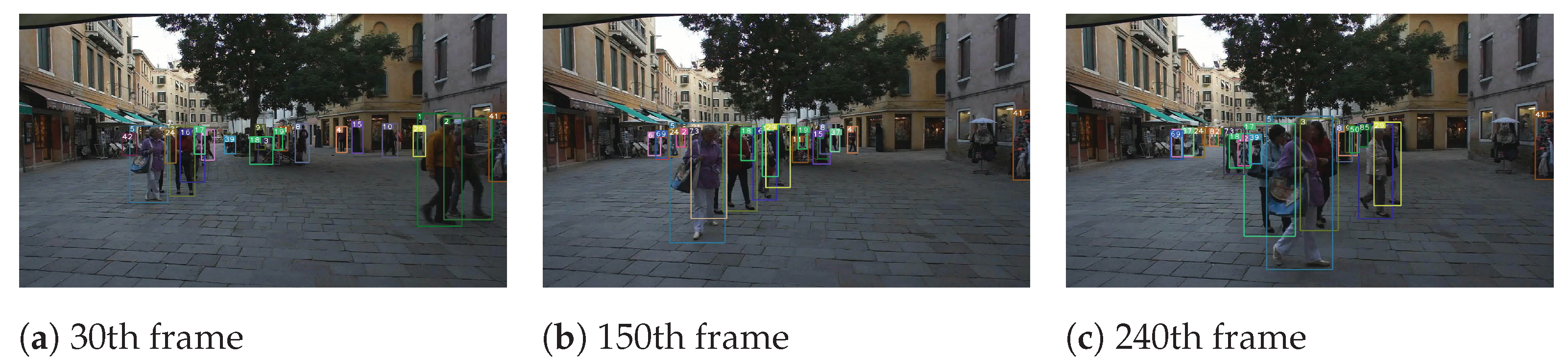

6.1. MOT16 Dataset Evaluation

6.2. Tracking Performance Comparison under Different Detection Models

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Sequence | Method | MOTA↑ | FP↓ | FN↓ | IDS↓ | Hz↑ |
|---|---|---|---|---|---|---|
| MOT16-02 | Baseline | 23.8 | 2992 | 14,982 | 86 | 46 |
| MOT16-02 | DeepSORT | 22.9 | 2952 | 14,593 | 96 | 61 |
| MOT16-02 | improved DeepSORT | 23.5 | 2976 | 14,613 | 83 | 59 |
| MOT16-04 | Baseline | 33.5 | 4277 | 35,355 | 89 | 52 |
| MOT16-04 | DeepSORT | 25.9 | 4677 | 37,059 | 91 | 81 |
| MOT16-04 | improved DeepSORT | 26.4 | 4093 | 36,509 | 70 | 83 |
| MOT16-05 | Baseline | 39.4 | 3313 | 4472 | 128 | 81 |
| MOT16-05 | DeepSORT | 45.7 | 3146 | 4349 | 104 | 147 |
| MOT16-05 | improved DeepSORT | 49.4 | 2920 | 4170 | 96 | 152 |
| MOT16-09 | Baseline | 56.9 | 2633 | 3098 | 64 | 97 |
| MOT16-09 | DeepSORT | 61.0 | 1927 | 2925 | 50 | 112 |
| MOT16-09 | improved DeepSORT | 61.3 | 1419 | 2440 | 48 | 116 |
| MOT16-10 | Baseline | 37.2 | 2820 | 8274 | 87 | 66 |
| MOT16-10 | DeepSORT | 39.3 | 2937 | 7827 | 80 | 85 |
| MOT16-10 | improved DeepSORT | 41.6 | 2672 | 7638 | 68 | 86 |
| MOT16-11 | Baseline | 51.3 | 3270 | 5179 | 27 | 56 |
| MOT16-11 | DeepSORT | 49.2 | 3893 | 5408 | 30 | 95 |
| MOT16-11 | improved DeepSORT | 50.6 | 3081 | 4623 | 28 | 94 |
| MOT16-13 | Baseline | 19.2 | 3240 | 9993 | 114 | 17 |
| MOT16-13 | DeepSORT | 21.3 | 2659 | 9328 | 105 | 29 |
| MOT16-13 | improved DeepSORT | 24.8 | 2145 | 8929 | 90 | 31 |
| Total | Baseline | 37.4 | 22,545 | 81,353 | 595 | 59 |
| Total | DeepSORT | 37.9 | 22,191 | 81,489 | 556 | 87 |
| Total | improved DeepSORT | 39.7 | 19,306 | 78,922 | 483 | 89 |
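
As a reading aid for the MOTA, FP, FN, and IDS columns: assuming the table follows the standard CLEAR MOT definition adopted by the MOT16 benchmark, MOTA combines the three error counts relative to the total number of ground-truth boxes. The sketch below shows that relation. The function name `mota` is hypothetical, and the counts in the usage line are made up for illustration, because the ground-truth totals are not listed in the table.

```python
def mota(fp, fn, ids, num_gt):
    """CLEAR MOT accuracy: 1 - (FN + FP + IDS) / number of ground-truth boxes."""
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    return 1.0 - (fp + fn + ids) / num_gt


# Hypothetical counts, not taken from the table.
score = mota(fp=100, fn=300, ids=10, num_gt=1000)
print(f"MOTA = {100 * score:.1f}%")
```

Because all three error types share one denominator, fewer false positives or identity switches raise MOTA even when the number of missed objects is unchanged.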

| Detection Model | mAP | Hz | Model Size |
|---|---|---|---|
| YOLOv5x | 49.6 | 145 | 89.0 M |
| YOLOv5l | 47.2 | 201 | 40.2 M |
| YOLOv5m | 44.5 | 294 | 21.8 M |
| YOLOv5s | 37.0 | 416 | 7.5 M |

| Detection Input | Method | MOTA | FP | FN | IDS | Hz |
|---|---|---|---|---|---|---|
| YOLOv5x | DeepSORT | 40.3 | 20,555 | 77,307 | 504 | 65 |
| YOLOv5x | improved DeepSORT | 41.1 | 19,494 | 76,494 | 454 | 62 |
| YOLOv5m | DeepSORT | 35.2 | 22,902 | 77,936 | 590 | 77 |
| YOLOv5m | improved DeepSORT | 36.1 | 19,991 | 75,243 | 528 | 83 |
| YOLOv5s | DeepSORT | 33.9 | 20,728 | 80,335 | 514 | 120 |
| YOLOv5s | improved DeepSORT | 34.8 | 19,570 | 78,375 | 464 | 122 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, G.; Yin, J.; Deng, P.; Sun, Y.; Zhou, L.; Zhang, K. Achieving Adaptive Visual Multi-Object Tracking with Unscented Kalman Filter. Sensors 2022, 22, 9106. https://doi.org/10.3390/s22239106
