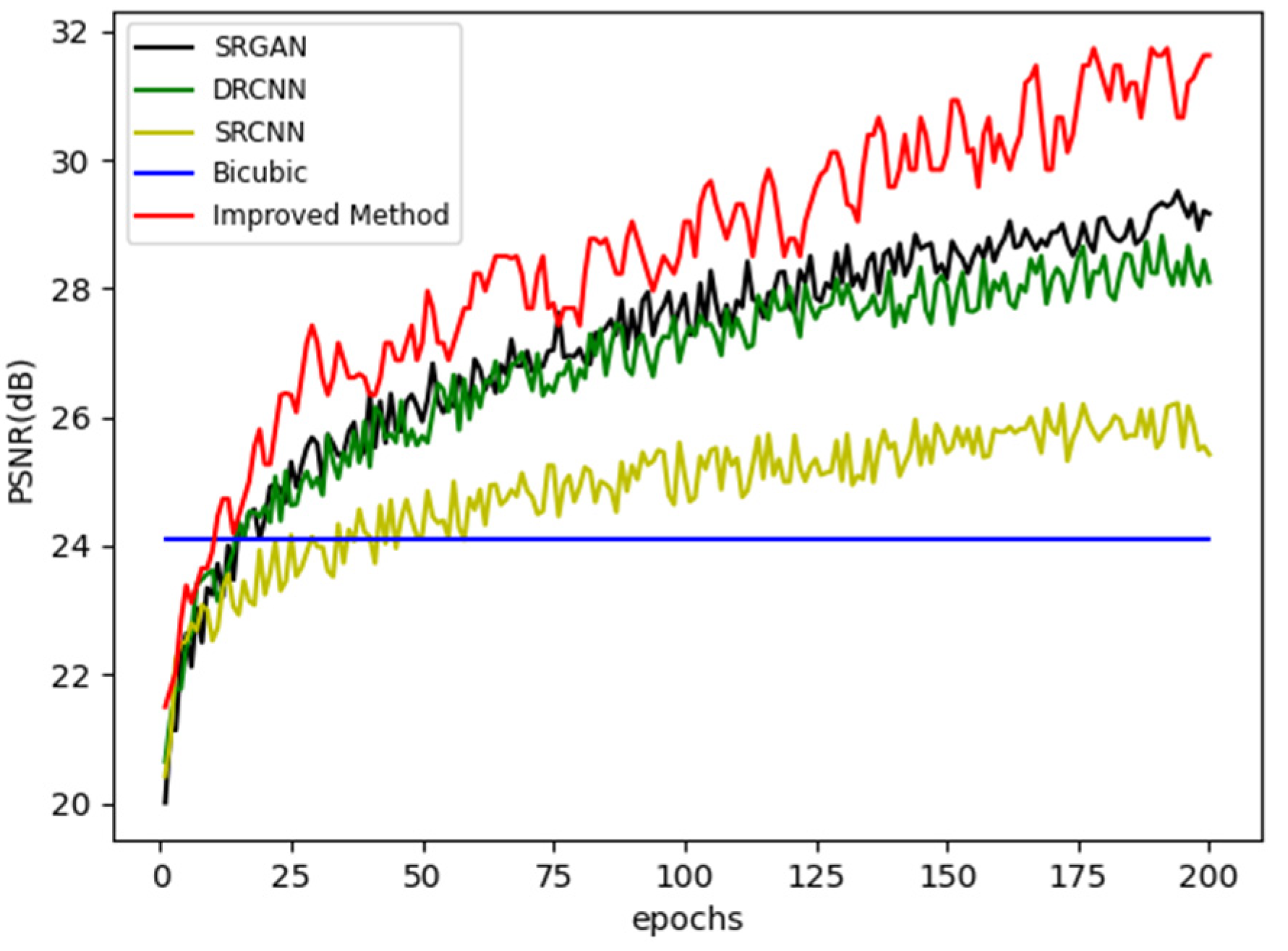

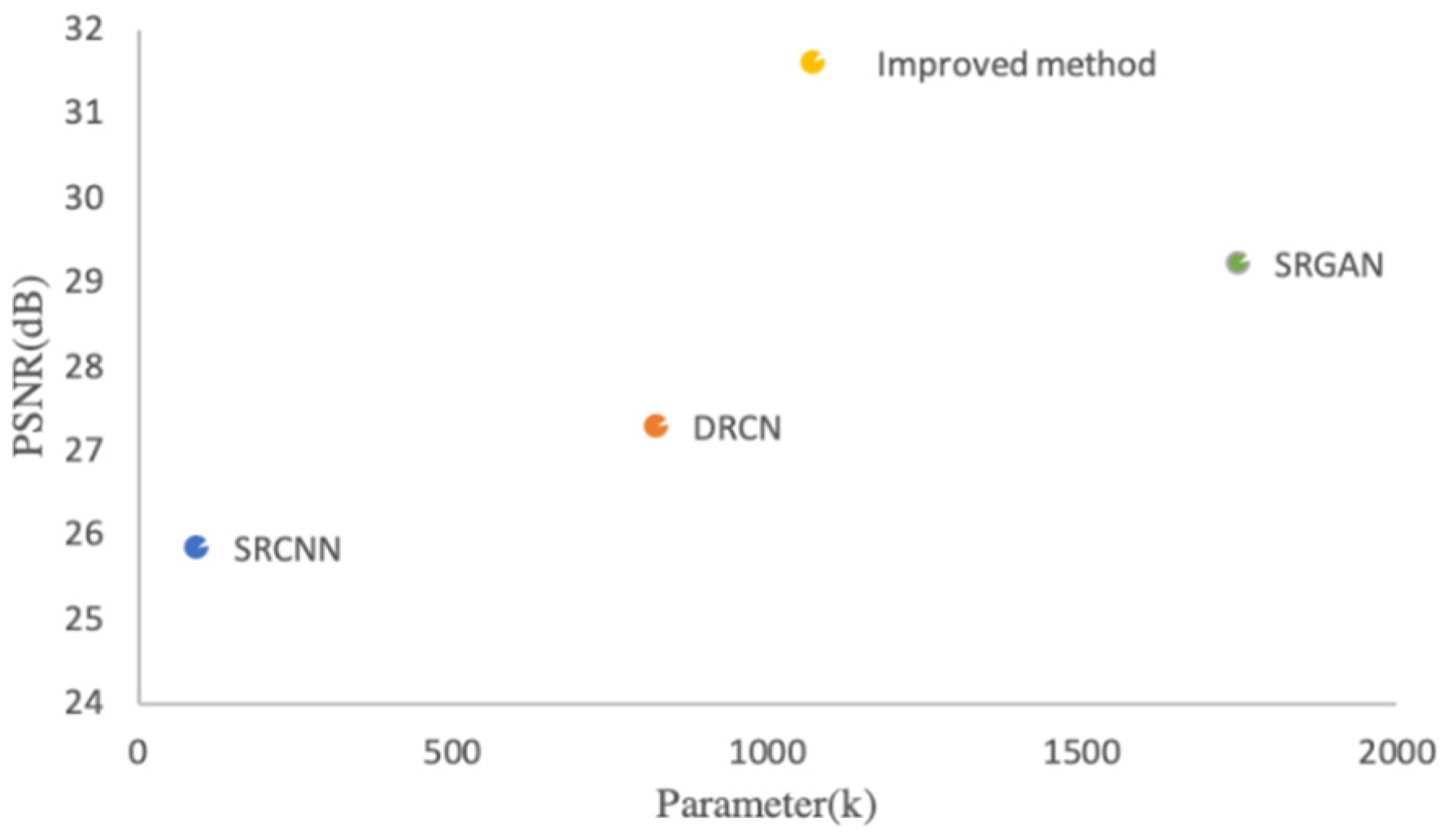

This research incorporates an attention mechanism into the SRGAN model to address the model's weaknesses. To control the parameter count, a recursive structure shares network parameters so that the parameter scale does not grow dramatically as the network deepens. To improve image detail, features are extracted from the low-resolution image, and the attention mechanism is used to distinguish low-frequency information from high-frequency information.
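The effect of parameter sharing can be illustrated with a simple count. The sketch below is only an illustration: the 3 × 3 kernel, the 64 channels, and the helper names are assumptions, not values taken from the model. It compares a stack in which every step has its own convolution with a recursive structure that reuses one convolution.

```python
def conv_params(in_ch: int, out_ch: int, kernel: int = 3) -> int:
    """Weights plus biases of one 2D convolution layer."""
    return in_ch * out_ch * kernel * kernel + out_ch


def stacked_params(depth: int, channels: int = 64) -> int:
    """Parameter count when every step owns its own convolution."""
    return depth * conv_params(channels, channels)


def recursive_params(depth: int, channels: int = 64) -> int:
    """Parameter count when one convolution is reused at every step.

    The depth argument is deliberately unused: with shared weights the
    count does not depend on how many times the layer is applied.
    """
    return conv_params(channels, channels)


if __name__ == "__main__":
    # Assumed depths, chosen only to show the trend.
    for depth in (1, 4, 8):
        print(depth, stacked_params(depth), recursive_params(depth))
```

Under these assumptions the stacked count grows linearly with depth, while the recursive count stays fixed.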

2.2. Generator Design

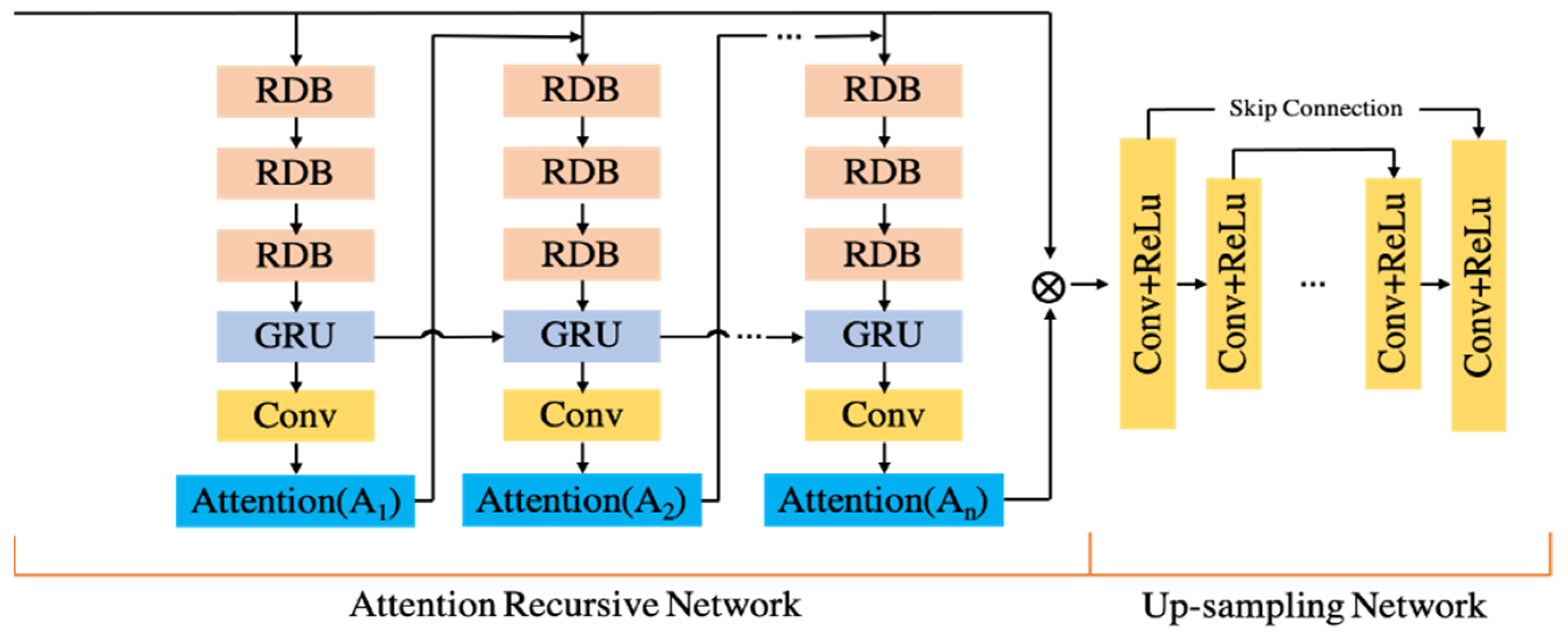

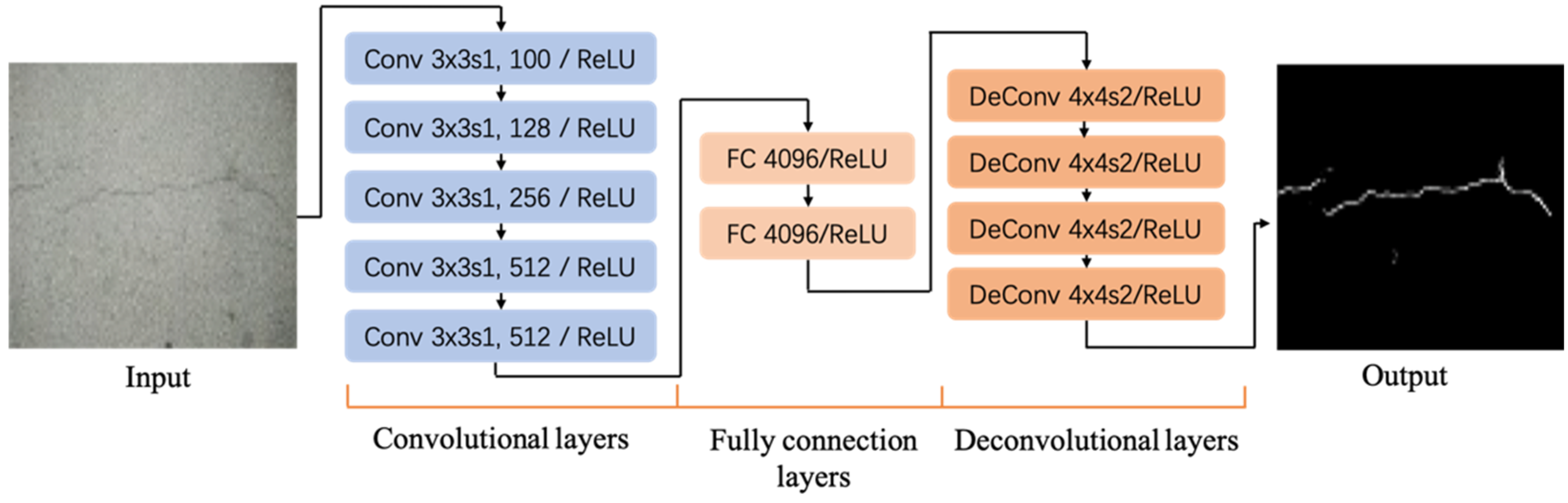

The generator consists of three main components: a shallow feature extraction network, a nonlinear mapping network, and an up-sampling network. As illustrated in Figure 2, the nonlinear mapping network is an attention recurrent network. The generator takes a low-resolution image as input and outputs the reconstructed image. The low-resolution input first passes through the shallow feature extraction network, which consists mainly of two convolutional layers (Conv). The first Conv layer extracts features from the low-resolution image, and the second performs further shallow feature extraction on the output of the first, as shown in Equations (1) and (2), respectively.

Both operations in Equations (1) and (2) are convolutions of the shallow feature extraction network. The output of shallow feature extraction is sent to the attention recurrent network as its input. The structure of the attention recurrent network is shown in Figure 3. Its main purpose is to extract from the input image the texture details that need to be recovered. The extracted features are enhanced by the subsequent nonlinear mapping and used to generate the high-resolution image. The quality of the reconstructed image therefore depends on how well the attention recurrent network extracts texture details.

(1) RDB: The redesigned dense residual block (RDB), which replaces the original structure, is shown in Figure 3. When the statistics of the training and test datasets differ significantly, the BN layer creates pseudo-textures in the output images and reduces generalization ability. The BN layer is therefore removed, which stabilizes training and reduces computational cost. The RDB achieves feature fusion and dimensionality reduction by merging residual blocks with skip connections [23], resulting in dense blocks. To boost network capacity, the dense residual block not only preserves the feedforward information but also fully extracts local feature information.

(2) GRU: Gated recurrent units (GRUs) are introduced into the attention recurrent network. As illustrated in Equations (3)–(6), the most significant structures are the gates, namely the reset gate $r_t$ and the update gate $z_t$.
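For reference, one common convolutional form of the GRU gates is sketched below. Apart from $r_t$ and $z_t$, the symbols are assumptions that follow common usage and may differ from the notation of Equations (3)–(6): $x_t$ is the input at step $t$, $h_{t-1}$ and $h_t$ are the previous and current hidden states, $\tilde{h}_t$ is the candidate state, $W_z$, $W_r$, and $W$ are convolution kernels, $\sigma$ is the sigmoid function, $*$ is convolution, and $\odot$ is element-wise multiplication.

```latex
\begin{aligned}
z_t &= \sigma\left(W_z * [h_{t-1}, x_t]\right) \\
r_t &= \sigma\left(W_r * [h_{t-1}, x_t]\right) \\
\tilde{h}_t &= \tanh\left(W * [r_t \odot h_{t-1}, x_t]\right) \\
h_t &= (1 - z_t) \odot h_{t-1} + z_t \odot \tilde{h}_t
\end{aligned}
```

In this form the reset gate controls how much of the previous state enters the candidate state, and the update gate controls how much of the candidate replaces the previous state. Some references swap the roles of $z_t$ and $1 - z_t$ in the last line.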

In the hidden layer, the equations involve the state at the previous time step, the state at the current time step, and the updated state at the current time step. Separate weights are associated with the update state and the reset state, and * denotes the convolution operation. To produce 2D attention maps, the GRU's output features are fed to successive convolutional layers. During training, the resulting attention map is concatenated with the input image at each time step and used as the input to the next layer of the recurrent network. Equation (7) gives the output of the recurrent attention network after n layers.

In Equation (7), the output is expressed in terms of the recurrent attention network function and the number of recursion layers n. After the attention recurrent network extracts the texture detail features, feature mapping is performed. The feature mapping network consists of eight Conv + ReLU blocks, with skip connections added to improve training stability, as shown in the right half of Figure 3. The feature mapping network is expressed in Equation (8):

$F_{NMN}$ is the output of the nonlinear mapping network function. Before the high-resolution image is generated, the up-sampling network applies convolutional layers to produce the super-resolution output, scaled by a factor of 4, as given in Equation (9), which relates the up-sampling function to the super-resolution image output.
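The generator described in this section can be summarized as a composition of its stages. The sketch below is an illustration only. Except for $F_{NMN}$, all symbols are assumed names and not necessarily the notation of Equations (1), (2), and (7)–(9): $I_{LR}$ and $I_{SR}$ are the low-resolution input and super-resolution output, $H_{SF1}$ and $H_{SF2}$ are the two shallow convolutions, $H_{ARN}$ is the attention recurrent network applied over $n$ recursions, $H_{NMN}$ is the feature mapping network, and $H_{UP}$ is the up-sampling network.

```latex
\begin{aligned}
F_{-1} &= H_{SF1}(I_{LR}) \\
F_{0} &= H_{SF2}(F_{-1}) \\
F_{n} &= H_{ARN}^{(n)}(F_{0}) \\
F_{NMN} &= H_{NMN}(F_{n}) \\
I_{SR} &= H_{UP}(F_{NMN})
\end{aligned}
```

Each line feeds the next, so with a scale factor of 4 the output $I_{SR}$ has four times the height and width of $I_{LR}$.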

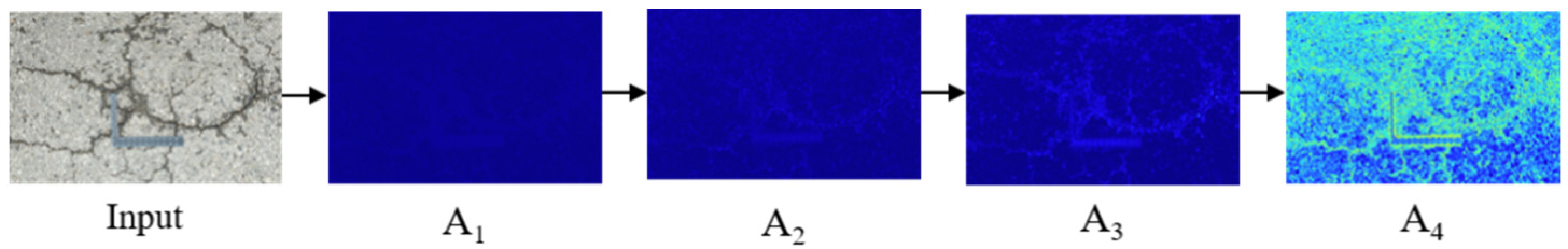

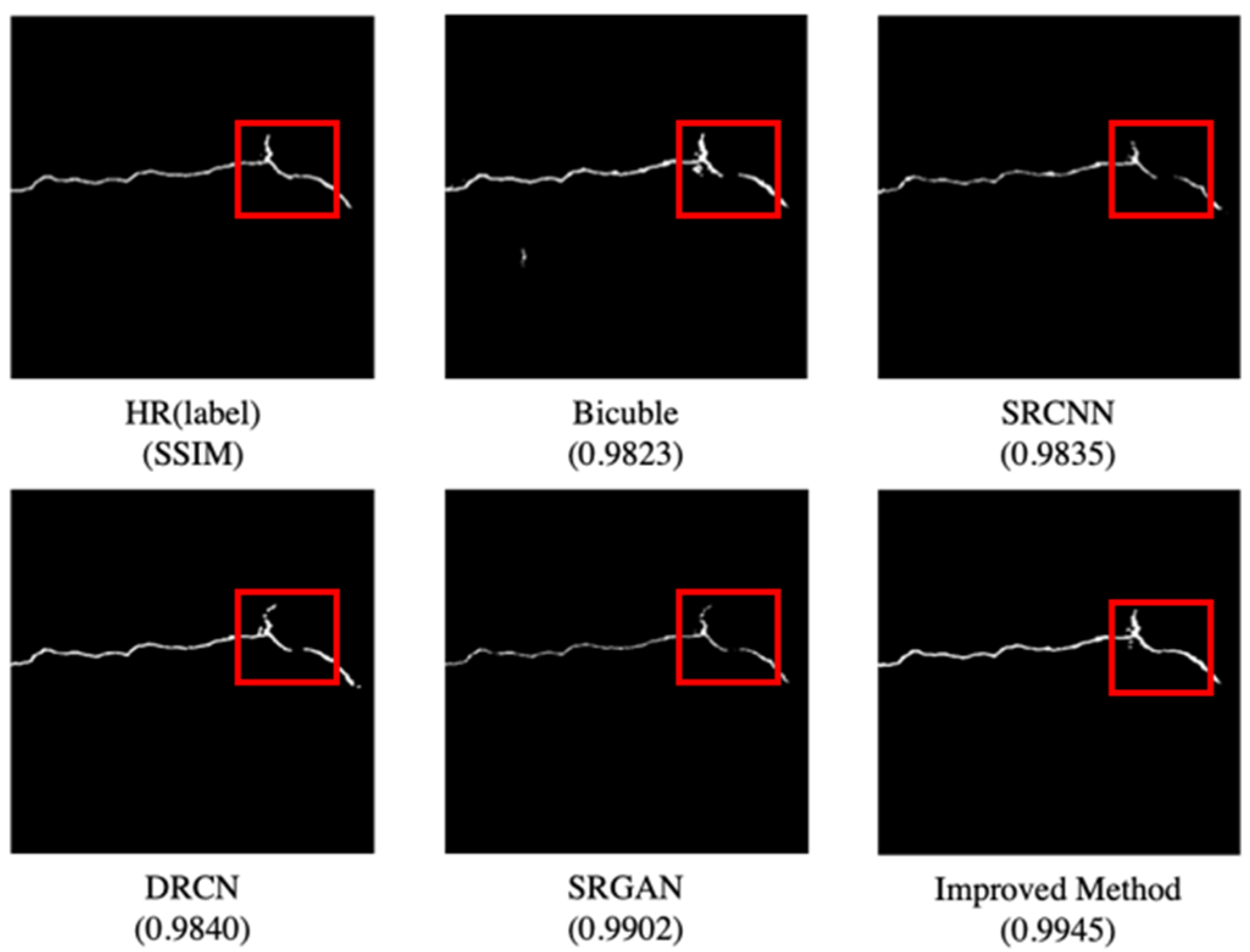

(3) Attention: The attention feature of each layer of the recurrent network is a matrix with values between 0 and 1. The higher an element's value, the stronger the associated attention. The outputs of the first four attention modules (A1–A4) in the attention recurrent network are selected as attention feature maps and rendered in pseudocolor, as shown in Figure 4, which presents the generated visual attention maps. As the recursion depth increases, the attention feature maps increasingly highlight texture details and edges.

The visual attention map output by the first attention module, A1, is almost entirely blue. By the fourth module, A4, the region corresponding to the crack target in the input is yellow, while the background remains blue. In the feature maps output by the four attention modules, blue therefore indicates weak attention and yellow indicates strong attention.
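The pseudocolor rendering can be illustrated with a minimal sketch. The colormap actually used for Figure 4 is not specified here, so the linear blue-to-yellow ramp and the function names below are assumptions chosen only to match the description (weak attention in blue, strong attention in yellow).

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]


def pseudocolor(value: float) -> RGB:
    """Map an attention value in [0, 1] to an RGB colour.

    Assumed two-colour ramp: 0.0 -> blue (0, 0, 255),
    1.0 -> yellow (255, 255, 0).
    """
    v = min(max(value, 0.0), 1.0)  # clamp out-of-range values
    red_green = round(255 * v)     # red and green rise together towards yellow
    blue = round(255 * (1.0 - v))  # blue fades as attention grows
    return (red_green, red_green, blue)


def colorize(attention: List[List[float]]) -> List[List[RGB]]:
    """Apply the ramp to every element of a 2D attention matrix."""
    return [[pseudocolor(v) for v in row] for row in attention]


if __name__ == "__main__":
    # Hypothetical 2 x 2 attention matrix: background low, target high.
    print(colorize([[0.0, 0.1], [0.9, 1.0]]))
```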

2.3. Discriminator Design

After redesigning the generator, the discriminator is also improved to match the new generator, as shown in Figure 5. A GAN uses a discriminator to distinguish real images from generated ones, and its judgment is fed back to the generator. The visual features of the high-resolution image under assessment are extracted by seven convolutional layers and then flattened. Finally, a fully connected (FC) layer and the Sigmoid function produce the discrimination result.
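To make the seven-convolution, flatten, FC, Sigmoid pipeline concrete, the sketch below traces the feature-map size through the convolutional layers and computes the length of the flattened vector that the FC layer would receive. The kernel size, padding, strides, channel widths, and the 96 × 96 input are hypothetical values chosen for illustration, not settings taken from Figure 5.

```python
import math
from typing import List, Tuple

# Hypothetical (out_channels, stride) for the seven convolutional layers.
ASSUMED_LAYERS: List[Tuple[int, int]] = [
    (64, 1), (64, 2), (128, 1), (128, 2), (256, 1), (256, 2), (512, 1),
]


def conv_out(size: int, kernel: int = 3, stride: int = 1, padding: int = 1) -> int:
    """Spatial output size of a convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def flattened_size(height: int, width: int,
                   layers: List[Tuple[int, int]] = ASSUMED_LAYERS) -> int:
    """Length of the vector obtained by flattening the last feature map."""
    channels = 0
    for out_channels, stride in layers:
        height = conv_out(height, stride=stride)
        width = conv_out(width, stride=stride)
        channels = out_channels
    return channels * height * width


def sigmoid(x: float) -> float:
    """Squash the FC output into a (0, 1) real-versus-generated score."""
    return 1.0 / (1.0 + math.exp(-x))


if __name__ == "__main__":
    print(flattened_size(96, 96))  # input size is an assumption
    print(sigmoid(0.0))
```

With these assumed settings, three stride-2 layers reduce each spatial dimension by a factor of 8 before flattening.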

In the first training run, the original generator performed considerably worse than the discriminator, causing training to collapse. To prevent this, the loss function is improved: the original loss function is replaced with a new one based on the L1 norm, which includes the discriminator's reconstruction error. The new loss function improves the generator's learning ability as well as the image quality.

The pixel-level image error is assumed to follow a Gaussian distribution, and the loss function based on the L1 norm is defined in Equation (10).

The two arguments of Equation (10) are the high-resolution images and the low-resolution images. The optimization rules for the generator and discriminator loss functions, which are applied iteratively, are expressed in Equations (11)–(15):

In these equations, x denotes a high-resolution image, and the remaining symbols denote the low-resolution image, the super-resolution image, the discriminator's loss on a high-resolution image, and its loss on a low-resolution image. A coefficient is updated at every iteration by a fixed increment, and the change in its value is used to improve the learning ability of the generator. A further parameter is the ratio of the expected error of the super-resolution image to the expected error of the high-resolution image. The value of this parameter affects the quality of the generated image.
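The description above is consistent with a boundary-equilibrium style objective, in which the discriminator's L1 reconstruction error serves as the loss and a coefficient balances generator and discriminator. Assuming that form, a minimal sketch of the update rules follows. The names `k`, `gamma`, and `step`, their default values, and the clamping of `k` to [0, 1] are assumptions for illustration and are not taken from Equations (10)–(15).

```python
from typing import Sequence


def l1_loss(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean absolute error between an image and its reconstruction."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def discriminator_loss(loss_real: float, loss_fake: float, k: float) -> float:
    """Assumed form: real-image error minus k times generated-image error."""
    return loss_real - k * loss_fake


def generator_loss(loss_fake: float) -> float:
    """Assumed form: the discriminator's error on the generated image."""
    return loss_fake


def update_balance(k: float, loss_real: float, loss_fake: float,
                   gamma: float = 0.5, step: float = 0.001) -> float:
    """One iteration of the balance coefficient, kept inside [0, 1].

    gamma is the target ratio of generated-image error to real-image
    error; step is the increment applied per iteration.
    """
    k_next = k + step * (gamma * loss_real - loss_fake)
    return min(max(k_next, 0.0), 1.0)


if __name__ == "__main__":
    k = 0.0
    # Hypothetical per-iteration losses, for illustration only.
    for loss_real, loss_fake in [(1.0, 0.2), (0.9, 0.3), (0.8, 0.5)]:
        k = update_balance(k, loss_real, loss_fake, step=0.1)
        print(round(k, 4), discriminator_loss(loss_real, loss_fake, k))
```

Under this assumed rule, the coefficient rises when the generated-image error is below `gamma` times the real-image error, so the discriminator puts more weight on generated images. It falls in the opposite case, which keeps either network from dominating.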