A Generalized Robot Navigation Analysis Platform (RoNAP) with Visual Results Using Multiple Navigation Algorithms

Abstract

1. Introduction

2. Framework Structure

2.1. Graphic User Interface

- 1.

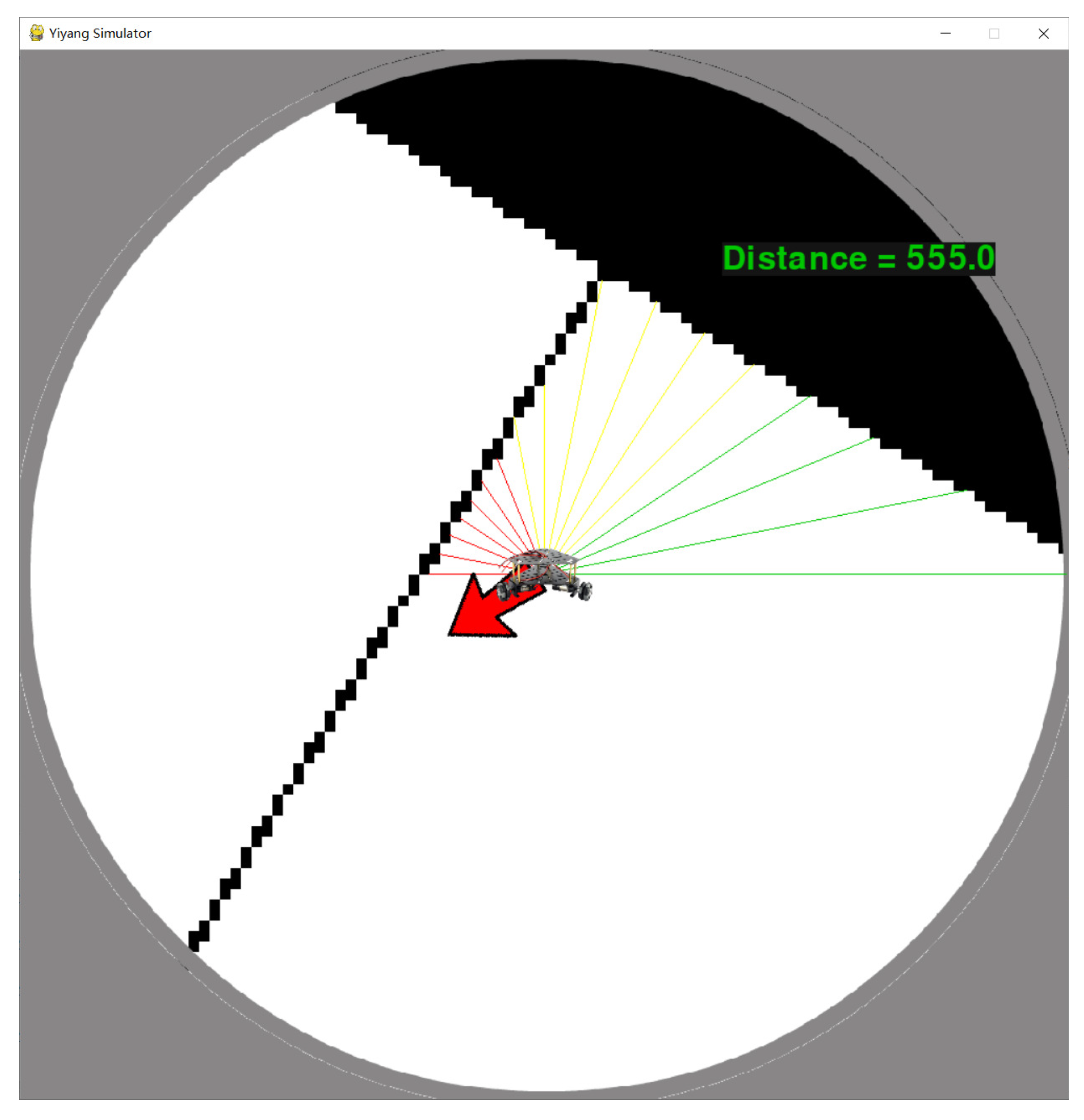

- Start: This function button offers an interface which displays the real-time motion pose of the test robot, as shown in Figure 1b. This interface is built from the first-person perspective of the robot; the scene within the detectable range of the robot is provided, which changes as the robot moves. In addition, the distance of laser detection is indicated by different color rays; the red arrow directly points to the destination position, while the green digits on the upper right indicate the distance to the destination position.

- 2.

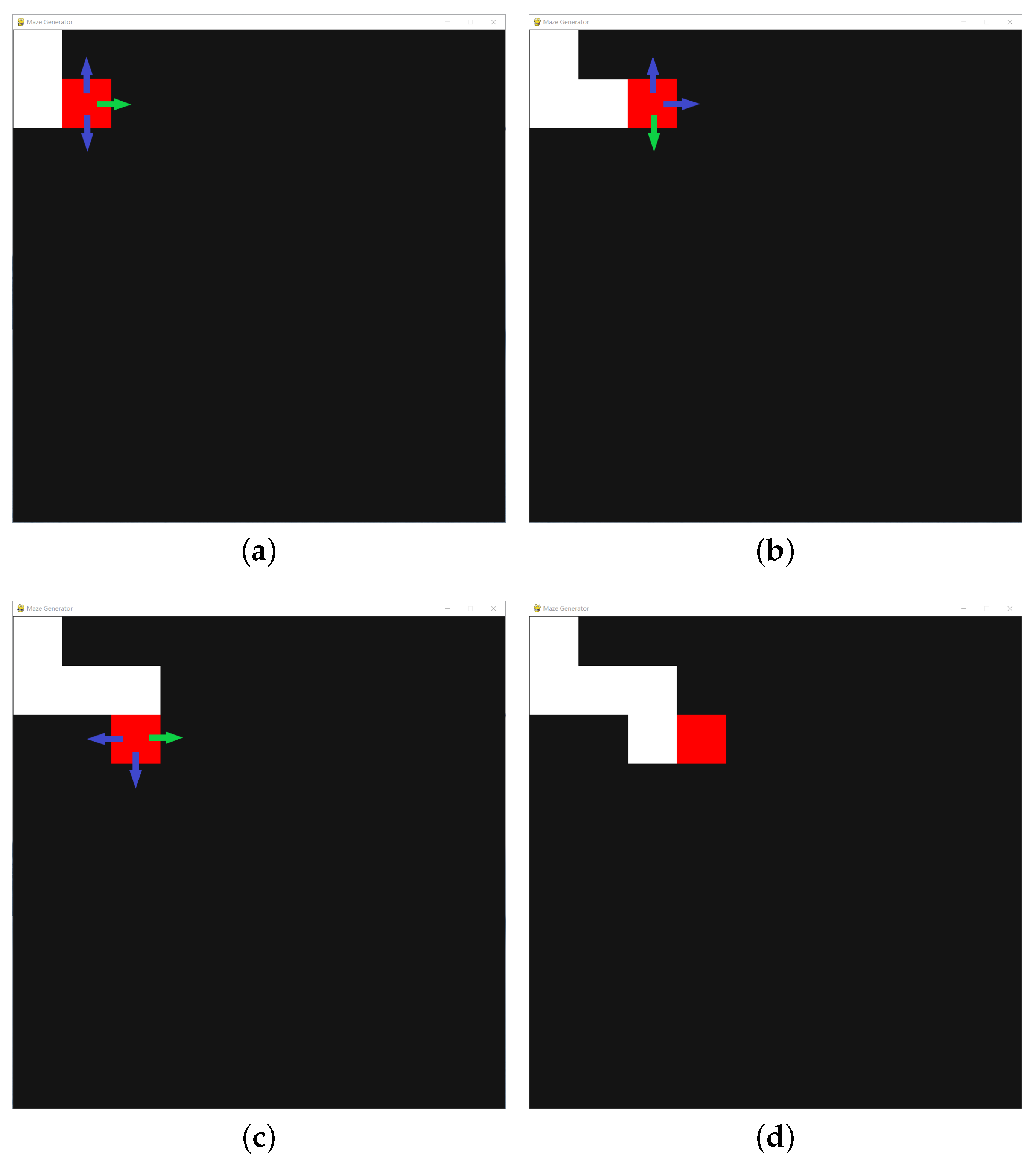

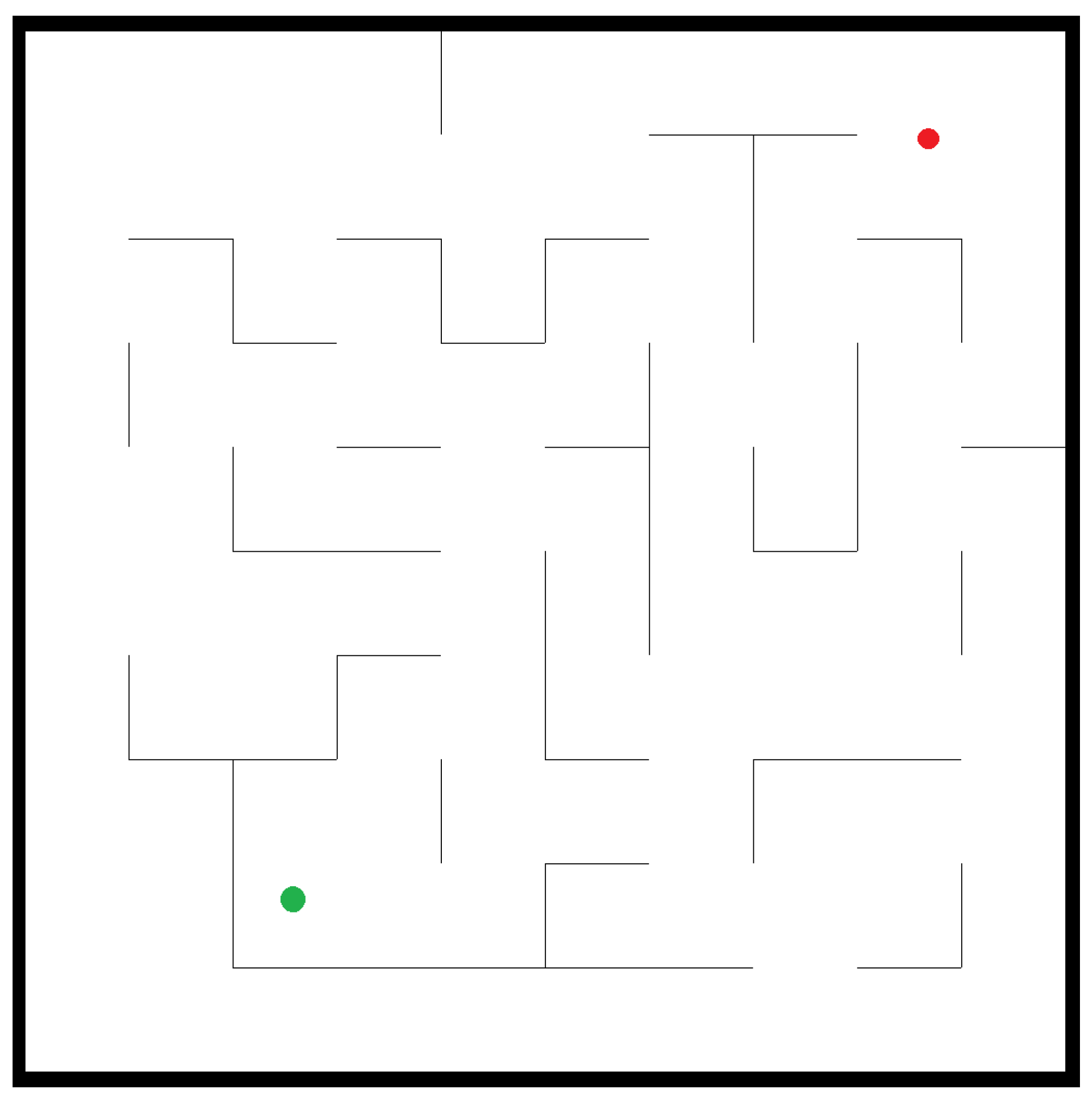

- Maze Generator: This function button is used to generate a new scene with randomly placed obstacles, as shown in Figure 1c. When the user clicks on “Load Map”, the new scene map is loaded as a background picture for the next robot navigation analysis.

- Configurations: This button allows users to modify parameters such as movement speed, rotation speed, and user name. As shown in Figure 1d, entering a value in the correct format in a field replaces the corresponding initial setting.

- Save Data: This button saves the data generated during the analysis, including the laser detection distances, the distance to the destination, the robot's direction of motion, the direction to the destination, and the action performed. All information is saved in a .csv file named in the format “username + usage date”.

- Collect Data: This button opens an interface similar to that of Start, in which the robot is controlled with the keyboard. It is used to collect human decision-making data in the scene.

- Developer: This button displays information about the developer, allowing researchers in the same field to contact the developer for further discussion and exchange.
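The Maze Generator's behavior can be illustrated with a minimal sketch. It assumes, hypothetically, that a scene is an occupancy grid (1 = obstacle, 0 = free) surrounded by a wall, with a chosen fraction of interior cells turned into obstacles uniformly at random; the function name `generate_scene` and the `obstacle_ratio` parameter are illustrative, and RoNAP's actual map format and generation rules may differ.

```python
import numpy as np


def generate_scene(height, width, obstacle_ratio=0.2, seed=None):
    """Hypothetical scene generator: border walls plus random interior obstacles."""
    if height < 3 or width < 3:
        raise ValueError("the scene needs at least one interior cell")
    if not 0.0 <= obstacle_ratio < 1.0:
        raise ValueError("obstacle_ratio must be in [0, 1)")
    rng = np.random.default_rng(seed)
    grid = np.zeros((height, width), dtype=np.uint8)
    # Surround the scene with walls so the robot cannot leave the map.
    grid[0, :] = 1
    grid[-1, :] = 1
    grid[:, 0] = 1
    grid[:, -1] = 1
    # Pick distinct interior cells uniformly at random and mark them as obstacles.
    inner_shape = (height - 2, width - 2)
    n_inner = inner_shape[0] * inner_shape[1]
    n_obstacles = int(obstacle_ratio * n_inner)
    flat = rng.choice(n_inner, size=n_obstacles, replace=False)
    rows, cols = np.unravel_index(flat, inner_shape)
    grid[rows + 1, cols + 1] = 1
    return grid


scene = generate_scene(10, 12, obstacle_ratio=0.25, seed=0)
print(scene)
```

This sketch does not check that a free path exists between a start and a destination; a practical generator would typically reject or repair scenes where the two are disconnected.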

2.2. Features of RoNAP

| Keyboard | ← | ↑ | → |
| --- | --- | --- | --- |
| Action | Turn left | Go ahead | Turn right |

| 135.73 | 67.84 | 10.90 | AHEAD |
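The keyboard control table and the Save Data behavior described in Section 2.1 can be combined in a short sketch: each arrow key maps to an action, and each control step becomes one row of a .csv file named from the user name and the usage date. The record fields mirror the quantities listed for Save Data, but the column names, the date format, the `LEFT`/`RIGHT` labels, and the helper functions are hypothetical; only the `AHEAD` label appears in the document's sample row.

```python
import csv
import datetime
import os
import tempfile

# Arrow-key mapping from the control table. "AHEAD" matches the label in the
# document's sample row; "LEFT" and "RIGHT" are hypothetical labels.
KEY_TO_ACTION = {"Left": "LEFT", "Up": "AHEAD", "Right": "RIGHT"}

# Hypothetical column names for the quantities listed under Save Data.
FIELDS = ["laser_distances", "distance_to_goal", "heading", "goal_direction", "action"]


def log_filename(username, day):
    """File name built from user name + usage date (date format assumed)."""
    return f"{username}{day.strftime('%Y%m%d')}.csv"


def make_record(laser, dist_goal, heading, goal_dir, key):
    """One row per control step; laser readings are joined into a single field."""
    return {
        "laser_distances": ";".join(f"{d:.2f}" for d in laser),
        "distance_to_goal": f"{dist_goal:.2f}",
        "heading": f"{heading:.2f}",
        "goal_direction": f"{goal_dir:.2f}",
        "action": KEY_TO_ACTION[key],
    }


def save_records(records, username, day, directory="."):
    """Write all recorded steps to <directory>/<username><date>.csv."""
    path = os.path.join(directory, log_filename(username, day))
    with open(path, "w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        writer.writeheader()
        writer.writerows(records)
    return path


# Usage: record two keyboard steps and write them to a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    steps = [make_record([2.0, 1.5], 8.0, 0.0, 30.0, "Left"),
             make_record([2.1, 1.9], 7.6, 15.0, 15.0, "Up")]
    path = save_records(steps, "alice", datetime.date(2022, 11, 21), tmp)
    with open(path, newline="") as f:
        print(list(csv.DictReader(f)))
```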

3. Instructions for Using RoNAP

3.1. Running Environment of RoNAP

3.2. Operation Process of RoNAP

4. Mobile Robot Navigation Approaches Analysis Based on RoNAP

4.1. Problem Formulation

4.2. Deep Q-Learning Network Algorithm

- a collision penalty function, which discourages collisions with obstacles;

- a distance reward function, which encourages the robot to move toward the destination;

- a turning penalty function, which keeps the robot from spinning in place;

- a destination reward function, which rewards the robot for completing the task.
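How the four reward terms listed above might fit together can be shown with a small sketch. It assumes the terms are simply summed, and every functional form and constant (collision penalty, distance scale, turning penalty, arrival bonus, and the two distance thresholds) is a hypothetical placeholder rather than a value used in RoNAP.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RewardConfig:
    # Every constant here is a hypothetical placeholder, not a RoNAP setting.
    collision_penalty: float = -10.0
    distance_scale: float = 1.0
    turn_penalty: float = -0.1
    arrival_reward: float = 20.0
    collision_range: float = 0.5   # closest laser reading treated as a collision
    arrival_range: float = 1.0     # distance treated as reaching the destination


DEFAULT_REWARD = RewardConfig()


def step_reward(prev_goal_dist, goal_dist, min_laser, action, cfg=DEFAULT_REWARD):
    """Assumed additive reward: collision + distance + turning + destination terms."""
    r_collision = cfg.collision_penalty if min_laser < cfg.collision_range else 0.0
    # Positive when the step brought the robot closer to the destination.
    r_distance = cfg.distance_scale * (prev_goal_dist - goal_dist)
    # Small cost for every turn to discourage spinning in place.
    r_turn = cfg.turn_penalty if action in ("LEFT", "RIGHT") else 0.0
    r_goal = cfg.arrival_reward if goal_dist < cfg.arrival_range else 0.0
    return r_collision + r_distance + r_turn + r_goal


print(step_reward(5.0, 4.0, 3.0, "AHEAD"))  # 1.0: moved 1.0 closer, no other terms
```

Using the change in distance, rather than the distance itself, keeps the distance term small and signed, so the robot is rewarded for progress and penalized for moving away.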

| Algorithm 1 DQN training procedure. |
| --- |
| Input: Total training episodes , experience pool capacity D, target network update frequency F, training batch size B, attenuation coefficient , greedy value and maximum total penalty . |
| Output: Target neural network with . |
| 1: Initialization: set initial episode , initial state , total reward of each episode , value neural network Q with random weights , target network with . |
| 2: while do |
| 3: while do |
| 4: Select an action through . |
| 5: Execute and obtain and . |
| 6: Store into D. |
| 7: Randomly select B samples from D, and perform |
| 8: Compute the mean squared error loss through |
| 9: Use gradient descent to update . |
| 10: After F steps, perform . |
| 11: Perform . |
| 12: end while |
| 13: Perform and . |
| 14: end while |
| 15: return target neural network with . |
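The core steps of Algorithm 1 (experience pool, greedy action selection, target computation, mean-squared-error update, and periodic target-network synchronization) can be illustrated with a minimal NumPy sketch. Several parts are assumptions: the greedy value is read as an ε-greedy exploration rate, the standard DQN target y = r + γ max Q_target(s′, a′) stands in for the formula missing from step 7, a linear Q-function replaces the value neural network, and random transitions replace the RoNAP simulator. The state size, action count, and hyperparameter values are hypothetical.

```python
from collections import deque

import numpy as np


class ReplayBuffer:
    """Experience pool D with fixed capacity; the oldest transitions are dropped first."""

    def __init__(self, capacity, rng):
        self.data = deque(maxlen=capacity)
        self.rng = rng

    def push(self, s, a, r, s_next, done):
        self.data.append((s, a, r, s_next, float(done)))

    def sample(self, batch_size):
        idx = self.rng.choice(len(self.data), size=batch_size, replace=False)
        s, a, r, s_next, done = zip(*(self.data[int(i)] for i in idx))
        return (np.array(s), np.array(a), np.array(r, dtype=float),
                np.array(s_next), np.array(done))

    def __len__(self):
        return len(self.data)


class LinearQ:
    """Linear stand-in for the value network Q (the paper uses a neural network)."""

    def __init__(self, state_dim, n_actions, rng):
        self.W = rng.normal(scale=0.1, size=(state_dim, n_actions))
        self.b = np.zeros(n_actions)

    def __call__(self, s):
        return s @ self.W + self.b

    def copy_from(self, other):
        self.W, self.b = other.W.copy(), other.b.copy()


def epsilon_greedy(q_values, epsilon, rng):
    """Random action with probability epsilon, otherwise the greedy action."""
    if rng.random() < epsilon:
        return int(rng.integers(len(q_values)))
    return int(np.argmax(q_values))


def td_targets(r, s_next, done, target_net, gamma):
    """y = r at terminal steps, else r + gamma * max_a' Q_target(s', a')."""
    return r + gamma * (1.0 - done) * target_net(s_next).max(axis=1)


def train_step(q_net, s, a, y, lr):
    """One gradient-descent step on mean((Q(s, a) - y)^2); returns the loss."""
    err = q_net(s)[np.arange(len(a)), a] - y
    # Only the column of the action actually taken receives a gradient.
    delta = np.eye(q_net.b.size)[a] * (2.0 * err / len(a))[:, None]
    q_net.W -= lr * (s.T @ delta)
    q_net.b -= lr * delta.sum(axis=0)
    return float(np.mean(err ** 2))


# Usage with random stand-in transitions (a real loop would step the simulator).
rng = np.random.default_rng(0)
q_net, target = LinearQ(4, 3, rng), LinearQ(4, 3, rng)
target.copy_from(q_net)
buffer = ReplayBuffer(capacity=100, rng=rng)
B, F, gamma, lr = 8, 10, 0.9, 0.01
for step in range(1, 51):
    s = rng.normal(size=4)
    a = epsilon_greedy(q_net(s), epsilon=0.1, rng=rng)
    r, s_next, done = rng.normal(), rng.normal(size=4), rng.random() < 0.1
    buffer.push(s, a, r, s_next, done)
    if len(buffer) >= B:
        bs, ba, br, bs_next, bdone = buffer.sample(B)
        y = td_targets(br, bs_next, bdone, target, gamma)
        train_step(q_net, bs, ba, y, lr)
    if step % F == 0:
        target.copy_from(q_net)  # target network <- value network every F steps
```

Keeping a separate, periodically synchronized target network means the regression targets y stay fixed between synchronizations, which is the usual reason DQN trains more stably than bootstrapping from the network being updated.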

4.3. Deep Learning Algorithm

| Algorithm 2 DL training procedure. |
| --- |
| Input: Data set , training data number , training batch size B, total training epoch number and learning rate . |
| Output: A neural network with . |
| 1: Initialization: set the first training epoch number and a neural network Q with random weights . |
| 2: Randomly select data from as the training data set and assign the rest to the testing data set . |
| 3: while do |
| 4: Randomly select B data from , and compute the sum of the cross-entropy loss. |
| 5: Compute the gradients of . |
| 6: Perform Adaptive Moment Estimation (Adam) gradient descent training to update using the obtained gradients. |
| 7: Calculate the accuracy of the neural network in replicating human behavior on the training data set . |
| 8: Perform |
| 9: end while |
| 10: Calculate the accuracy of the neural network in replicating human behavior on the testing data set . |
| 11: return the trained neural network with . |
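The steps of Algorithm 2 can be traced in a compact NumPy sketch: a random training/testing split, one random mini-batch per iteration, the summed cross-entropy loss and its gradients, a bias-corrected Adam update, training accuracy after each update, and accuracy on the held-out testing data at the end. For brevity it assumes a softmax-regression model in place of the neural network and synthetic clustered data in place of recorded human decisions; the function names and hyperparameter values are hypothetical, and the Adam constants are the commonly used defaults.

```python
import numpy as np


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # shift logits for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def accuracy(X, y, W, b):
    """Fraction of samples whose predicted action matches the recorded one."""
    return float(np.mean(np.argmax(X @ W + b, axis=1) == y))


def train_behavior_cloning(X, y, n_classes, train_ratio=0.8, batch_size=32,
                           epochs=200, lr=0.05, seed=0):
    rng = np.random.default_rng(seed)
    # Step 2: random split into training and testing sets.
    perm = rng.permutation(len(X))
    n_train = int(train_ratio * len(X))
    tr, te = perm[:n_train], perm[n_train:]
    W = rng.normal(scale=0.01, size=(X.shape[1], n_classes))
    b = np.zeros(n_classes)
    params = [W, b]
    m = [np.zeros_like(p) for p in params]  # Adam first-moment estimates
    v = [np.zeros_like(p) for p in params]  # Adam second-moment estimates
    beta1, beta2, eps = 0.9, 0.999, 1e-8
    train_history, losses = [], []
    for t in range(1, epochs + 1):
        # Step 4: random mini-batch of B samples and summed cross-entropy loss.
        idx = rng.choice(tr, size=min(batch_size, len(tr)), replace=False)
        xb, onehot = X[idx], np.eye(n_classes)[y[idx]]
        p = softmax(xb @ W + b)
        losses.append(float(-np.sum(onehot * np.log(p + 1e-12))))
        # Step 5: gradients of the summed loss with respect to W and b.
        dz = p - onehot
        grads = [xb.T @ dz, dz.sum(axis=0)]
        # Step 6: Adam update with bias-corrected moments (applied in place).
        for i, (param, g) in enumerate(zip(params, grads)):
            m[i] = beta1 * m[i] + (1 - beta1) * g
            v[i] = beta2 * v[i] + (1 - beta2) * g ** 2
            m_hat = m[i] / (1 - beta1 ** t)
            v_hat = v[i] / (1 - beta2 ** t)
            param -= lr * m_hat / (np.sqrt(v_hat) + eps)
        # Step 7: accuracy on the training set after this update.
        train_history.append(accuracy(X[tr], y[tr], W, b))
    # Final step: accuracy on the held-out testing set.
    return W, b, train_history, losses, accuracy(X[te], y[te], W, b)


# Usage on synthetic, well-separated data standing in for recorded human actions.
rng = np.random.default_rng(42)
centers = np.array([[5.0, 0.0], [0.0, 5.0], [-5.0, -5.0]])
labels = rng.integers(0, 3, size=300)
X = centers[labels] + rng.normal(scale=0.5, size=(300, 2))
W, b, train_hist, losses, test_acc = train_behavior_cloning(X, labels, n_classes=3)
print(f"training accuracy {train_hist[-1]:.2f}, testing accuracy {test_acc:.2f}")
```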

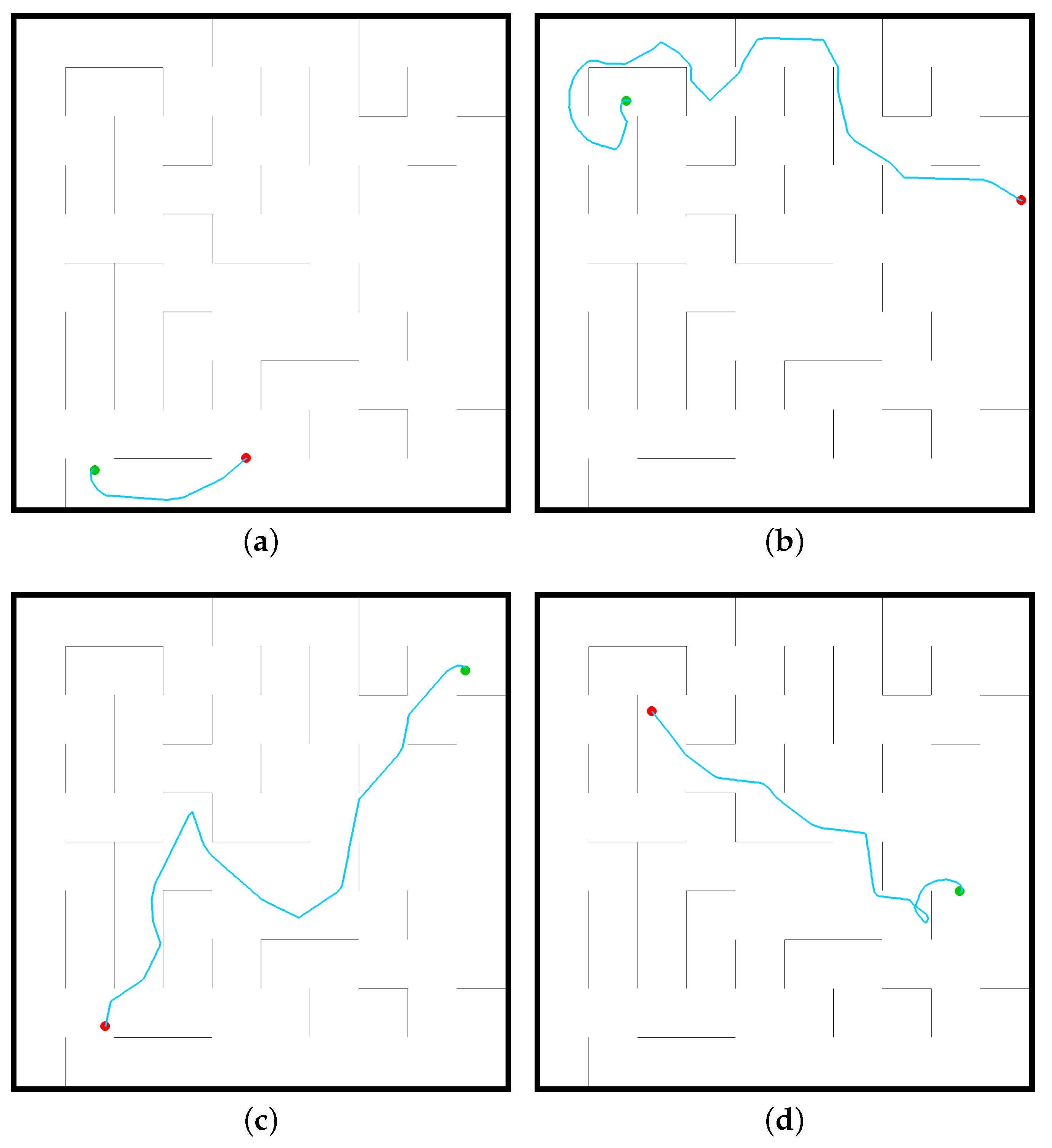

4.4. Artificial Potential Field Method

| Algorithm 3 APF method implementation procedure. |
| --- |
| Input: Scene map , random start position , random destination position , positions set . |
| Output: A path between and . |
| 1: Initialization: set current position , and apply grayscale conversion and binarization to the map . |
| 2: Determine the locations of obstacles and calculate the potential energy of each position. |
| 3: while do |
| 4: Record current position into . |
| 5: Find the position with the smallest potential energy among the eight positions around . |
| 6: Perform . |
| 7: end while |
| 8: return A path with all positions in . |
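Algorithm 3 can be sketched on an occupancy grid. The attractive potential (distance to the destination), the repulsive potential (a common textbook form that grows as an obstacle gets closer within an influence radius), the gains, the radius, and the binarization threshold are all assumptions rather than RoNAP's specific choices. The sketch also adds a safeguard that Algorithm 3 does not state: greedy descent on a potential field can stall at a local minimum, so the loop stops when no neighbor has lower potential.

```python
import numpy as np


def binarize(gray, threshold=128):
    """Grayscale map -> occupancy grid; dark pixels are assumed to be obstacles (1)."""
    return (np.asarray(gray) < threshold).astype(np.uint8)


def potential_field(occ, goal, k_att=1.0, k_rep=50.0, d0=3.0):
    """Attractive (distance to goal) plus repulsive (near obstacles) potential."""
    rows, cols = np.indices(occ.shape)
    u = k_att * np.hypot(rows - goal[0], cols - goal[1])
    obstacles = np.argwhere(occ == 1)
    if len(obstacles):
        # Distance from every cell to its nearest obstacle (brute force; small maps).
        d = np.hypot(rows[..., None] - obstacles[:, 0],
                     cols[..., None] - obstacles[:, 1]).min(axis=-1)
        near = (d > 0) & (d < d0)
        u[near] += k_rep * (1.0 / d[near] - 1.0 / d0) ** 2
    u[occ == 1] = np.inf  # obstacle cells can never be entered
    return u


def apf_path(occ, start, goal, max_steps=10_000):
    """Greedy descent over the eight neighbors; returns (path, reached_goal)."""
    u = potential_field(occ, goal)
    current, goal, path = tuple(start), tuple(goal), []
    while current != goal:
        path.append(current)
        r, c = current
        neighbors = [(r + dr, c + dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1)
                     if (dr or dc) and 0 <= r + dr < occ.shape[0] and 0 <= c + dc < occ.shape[1]]
        best = min(neighbors, key=lambda p: u[p])
        # Safeguard not in Algorithm 3: stop at a local minimum or after max_steps.
        if u[best] >= u[current] or len(path) > max_steps:
            return path, False
        current = best
    path.append(goal)
    return path, True


free = binarize(np.full((7, 7), 255))
print(apf_path(free, (0, 0), (6, 6)))   # diagonal descent that reaches the goal

trap = free.copy()
trap[3, 1:6] = 1                        # wall between start and goal
print(apf_path(trap, (6, 3), (0, 3)))   # stalls at a local minimum
```

The second call shows the well-known weakness of plain APF: the repulsive field in front of the wall raises every neighbor above the current cell, so the robot stops before reaching the destination.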

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| RoNAP | Robot navigation analysis platform |
| GPU | Graphics processing unit |
| CPU | Central processing unit |
| DQN | Deep Q-learning network |
| DL | Deep learning |
| APF | Artificial potential field |


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cheng, C.; Duan, S.; He, H.; Li, X.; Chen, Y. A Generalized Robot Navigation Analysis Platform (RoNAP) with Visual Results Using Multiple Navigation Algorithms. Sensors 2022, 22, 9036. https://doi.org/10.3390/s22239036
