Advances in Visual Simultaneous Localisation and Mapping Techniques for Autonomous Vehicles: A Review

Abstract

1. Introduction

- Develop a hierarchy of existing V-SLAM methods with a focus on their respective implementation techniques and perceived advantages over their counterparts;

- Discuss key characteristics of V-SLAM techniques in literature and highlight their advantages and limitations;

- Perform a comparative analysis of recent V-SLAM technologies and identify their strengths and shortcomings;

- Identify open issues and propose future research directions in V-SLAM schemes for AVs.

2. Simultaneous Localisation and Mapping (SLAM)

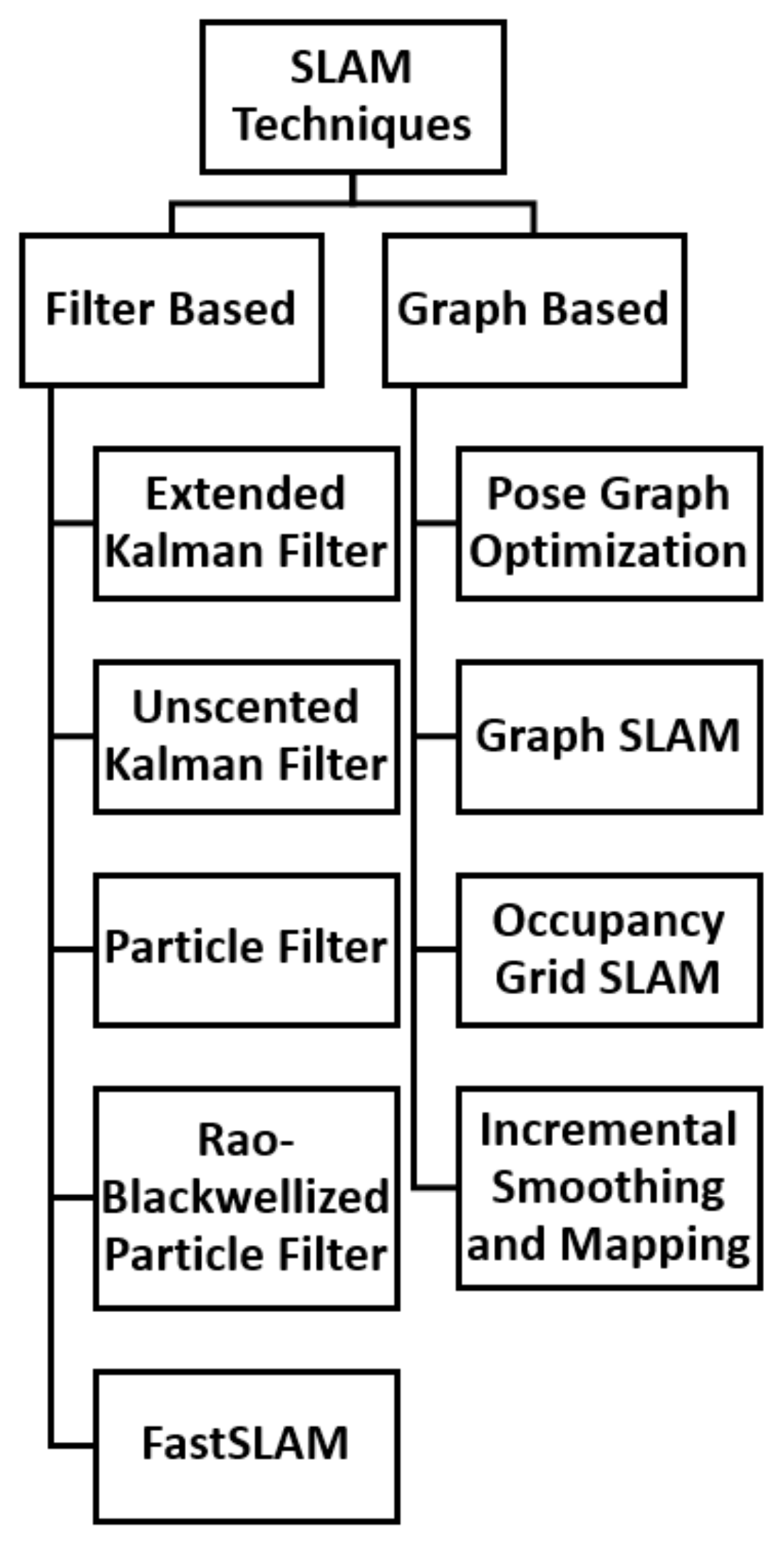

2.1. SLAM Techniques

2.2. Sensors Used in SLAM

3. Visual Simultaneous Localisation and Mapping (V-SLAM)

3.1. Background of V-SLAM

- Acquire, read, and pre-process the data from the camera and other devices;

- Estimate camera motion and a local map of the scene from adjacent camera frames;

- Optimise and adjust camera poses;

- Detect loops to eliminate errors and complete the map.
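
The motion-estimation and loop-detection steps above can be sketched in two dimensions. The sketch assumes the front end already supplies a relative pose (dx, dy, dθ) for each pair of adjacent frames, expressed in the earlier frame's body coordinates; the function names are hypothetical. Chaining these estimates gives the trajectory, and small per-step errors accumulate as drift. When a loop is detected, the translational residual is spread linearly along the trajectory. This linear redistribution is a deliberately crude stand-in for the pose-graph optimisation used in practical systems and is not the method of any work reviewed here.

```python
import math
from typing import List, Tuple

Pose = Tuple[float, float, float]  # (x, y, theta) in the world frame


def compose(pose: Pose, delta: Pose) -> Pose:
    """Apply a relative motion (dx, dy, dtheta) given in the body frame of `pose`."""
    x, y, th = pose
    dx, dy, dth = delta
    c, s = math.cos(th), math.sin(th)
    new_th = math.atan2(math.sin(th + dth), math.cos(th + dth))  # wrap to [-pi, pi]
    return (x + c * dx - s * dy, y + s * dx + c * dy, new_th)


def integrate(deltas: List[Pose], start: Pose = (0.0, 0.0, 0.0)) -> List[Pose]:
    """Chain frame-to-frame motion estimates into a trajectory (dead reckoning)."""
    trajectory = [start]
    for d in deltas:
        trajectory.append(compose(trajectory[-1], d))
    return trajectory


def close_loop(trajectory: List[Pose]) -> List[Pose]:
    """Crude loop closure: assume the last pose revisits the first and spread the
    translational residual linearly along the path (headings are left unchanged)."""
    n = len(trajectory) - 1
    if n < 1:
        return list(trajectory)
    ex = trajectory[-1][0] - trajectory[0][0]
    ey = trajectory[-1][1] - trajectory[0][1]
    return [(x - ex * i / n, y - ey * i / n, th)
            for i, (x, y, th) in enumerate(trajectory)]


# Drive a 4 m x 4 m square in 1 m steps with a small heading bias of 0.01 rad per step.
deltas = []
for _ in range(4):
    deltas += [(1.0, 0.0, 0.01)] * 3 + [(1.0, 0.0, math.pi / 2 + 0.01)]

raw = integrate(deltas)
corrected = close_loop(raw)
print("drift before loop closure: %.3f m" % math.hypot(raw[-1][0], raw[-1][1]))
print("drift after loop closure:  %.3f m" % math.hypot(corrected[-1][0], corrected[-1][1]))
```

Without the bias the square closes exactly; with it, the end point misses the start, which is the drift that loop detection is meant to remove.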

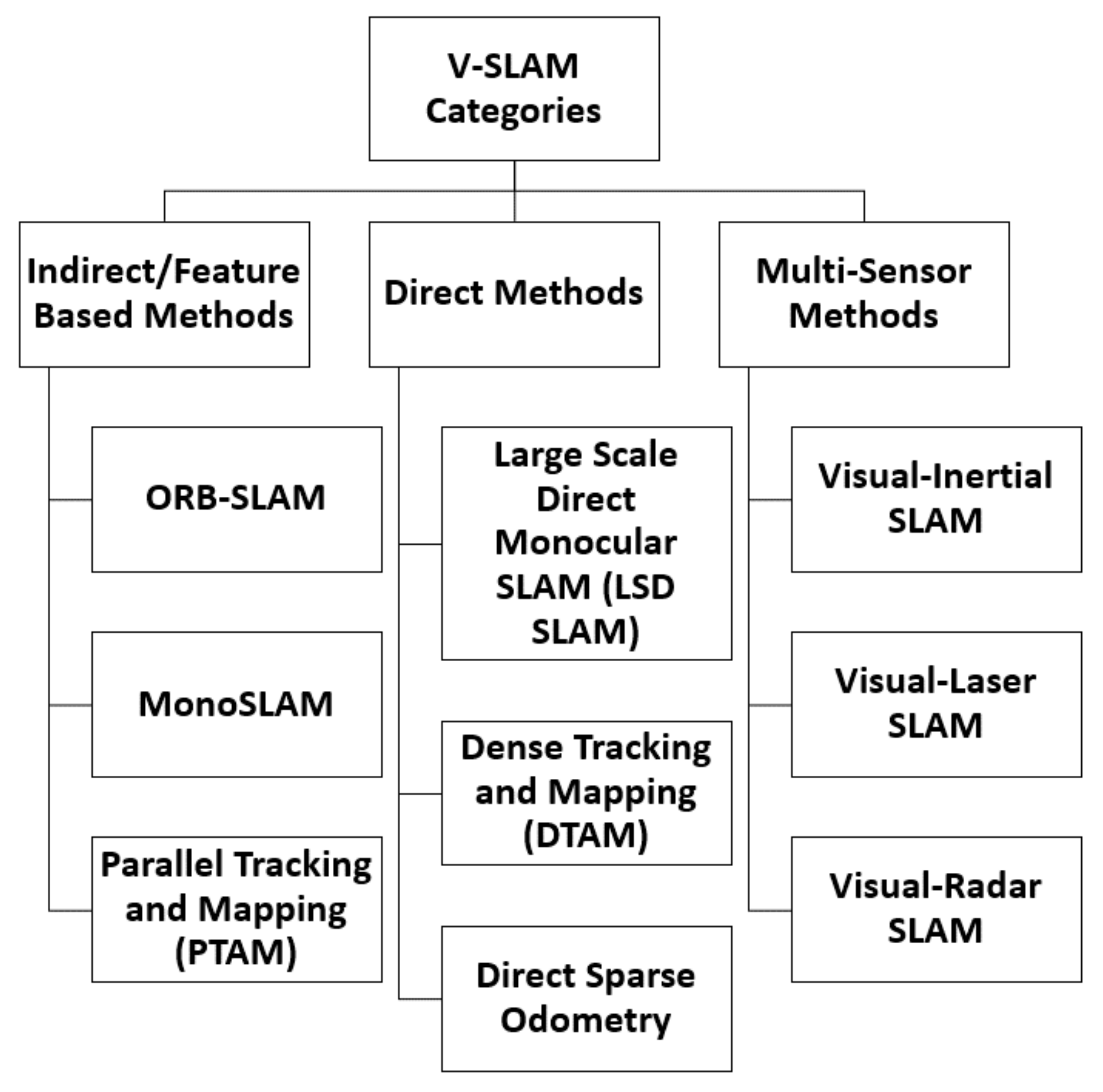

3.2. V-SLAM Categories

3.3. Deep Learning Applications in V-SLAM

4. Challenges and Open Issues in V-SLAM

- (a) Reliability in Outdoor Environments: There is room for improvement in the reliability of V-SLAM implementations, especially in outdoor environments. The ineffectiveness of lidar and radar sensors in extreme weather conditions, coupled with their high cost, makes them unsuitable for outdoor deployment [13,102,103]. Additionally, despite the high precision and strong anti-interference ability of laser scans, they provide no semantic information about the environment [58]. V-SLAM techniques address these limitations; however, they are susceptible to unpredictable and uncontrollable environmental conditions such as illumination changes [104].

- (b) Operability in Dynamic Scenes: Traditional SLAM and V-SLAM techniques assume a static environment, which is not always the case, and V-SLAM techniques based on static scenes fail when deployed in dynamic environments [57]. A dynamic environment contains moving objects, which need to be taken into account in localisation and mapping operations. In ORB-SLAM, for instance, it is not possible to determine whether the extracted feature points belong to static or dynamic objects [58]. Although significant research has been carried out in object detection [91] and semantic segmentation [55], V-SLAM implementations in highly dynamic scenes such as road networks and highways have not been exhaustively explored. Autonomous systems still need to fully comprehend dynamic scenarios and cope with dynamic objects [55,99].

- (c) Robustness in Challenging Scenes: V-SLAM techniques need to be robust enough to handle a wide variety of scenarios. V-SLAM systems tend to fail in situations involving fast motion [57,99]. Additionally, conventional V-SLAM systems rely on stable visual landmarks, which makes implementation difficult in scenes where such landmarks are scarce or change over time [104]. Achieving robust performance in challenging scenes is therefore paramount to the success of V-SLAM techniques [105].

- (d) Real-time Deployment: Deployment onto embedded hardware is another open issue for V-SLAM implementations [106]. Existing techniques have high computational requirements and limited real-time performance, resulting in high deployment costs. With a high demand for unmanned systems in sectors such as agriculture, oil and gas, and the military, there is a need for V-SLAM methods that can be deployed on microcontrollers and microcomputer systems.

- (e) Control Scheme for Navigation: The majority of the reviewed works lack an effective control technique for navigation based on the V-SLAM output. Since path planning and control are major modules in AV deployment [8,9], an effective control mechanism is needed to navigate the AV in relation to the perceived environment. Such a mechanism would contribute significantly to the advancement towards fully autonomous vehicles.
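
Item (b) notes that ORB-SLAM cannot tell whether a feature lies on a static or a moving object. A common remedy in the reviewed literature is to use semantic segmentation [55,96,99] or object detection [91] to discard features on potentially dynamic objects before pose estimation. The sketch below shows only the simplest bounding-box variant, under stated assumptions: keypoints are pixel coordinates, detections come from some object detector as labelled axis-aligned boxes, and the set of classes treated as dynamic is a hypothetical choice. Some of the cited works also add geometric checks (e.g. multi-view geometry in [55]), since a box-only filter would also discard features on, say, a parked car.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Sequence, Tuple

Keypoint = Tuple[float, float]  # pixel coordinates (u, v)


@dataclass(frozen=True)
class Detection:
    """An axis-aligned bounding box produced by an object detector."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, u: float, v: float) -> bool:
        return self.x_min <= u <= self.x_max and self.y_min <= v <= self.y_max


# Hypothetical set of classes treated as potentially dynamic.
DYNAMIC_CLASSES: FrozenSet[str] = frozenset(
    {"person", "bicycle", "car", "motorcycle", "bus", "truck"})


def filter_static_keypoints(keypoints: Sequence[Keypoint],
                            detections: Sequence[Detection],
                            dynamic_classes: FrozenSet[str] = DYNAMIC_CLASSES) -> List[Keypoint]:
    """Keep only keypoints lying outside every box whose label is a dynamic class."""
    dynamic_boxes = [d for d in detections if d.label in dynamic_classes]
    return [(u, v) for (u, v) in keypoints
            if not any(box.contains(u, v) for box in dynamic_boxes)]


keypoints = [(10.0, 10.0), (120.0, 80.0), (300.0, 200.0)]
detections = [Detection("person", 100, 50, 150, 150),
              Detection("traffic light", 290, 190, 310, 210)]
print(filter_static_keypoints(keypoints, detections))  # [(10.0, 10.0), (300.0, 200.0)]
```

The keypoint on the person is dropped, while the one on the traffic light is kept because that class is not in the dynamic set.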
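
The real-time requirement in item (d) can be made concrete with a frame budget: at a camera rate of f frames per second, each frame must on average be processed within 1000/f ms (about 33.3 ms at 30 fps). The sketch below checks per-stage latencies against that budget; the stage names and timings are hypothetical placeholders, not measurements of any reviewed system.

```python
from typing import Dict


def frame_budget_ms(fps: float) -> float:
    """Average processing time available per frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def fits_real_time(stage_ms: Dict[str, float], fps: float) -> bool:
    """True if the per-frame stages, run one after another, fit in the frame period."""
    return sum(stage_ms.values()) <= frame_budget_ms(fps)


# Hypothetical per-frame latencies (ms) on an embedded board.
stages = {"feature_extraction": 18.0, "tracking": 9.0, "semantic_detection": 40.0}
print(round(frame_budget_ms(30), 1))  # 33.3
print(fits_real_time(stages, 30))     # False: 67 ms > 33.3 ms
print(fits_real_time({"feature_extraction": 18.0, "tracking": 9.0}, 30))  # True: 27 ms
```

Summing the stages assumes they run sequentially. ORB-SLAM splits tracking, local mapping and loop closing into parallel threads, and [101] moves semantic detection into a parallel thread, so on multi-core hardware the sequential sum is a conservative bound.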
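
Item (e) calls for a control layer that acts on the V-SLAM output. As one illustration only (it is not a scheme proposed in the reviewed works), the classic pure pursuit path-tracking law turns the pose estimated by V-SLAM and a planned path into a steering angle for a kinematic bicycle model, using δ = atan(2L sin α / l_d), where L is the wheelbase, α the bearing to a look-ahead point and l_d its distance. The sketch assumes the pose refers to the rear axle; the wheelbase, look-ahead distance and path are hypothetical values.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Pose = Tuple[float, float, float]  # (x, y, theta) of the rear axle, e.g. from V-SLAM


def find_lookahead(path: List[Point], x: float, y: float, lookahead: float) -> Point:
    """First path point, at or after the closest one, that is at least
    `lookahead` metres from the vehicle; falls back to the last point."""
    closest = min(range(len(path)),
                  key=lambda i: math.hypot(path[i][0] - x, path[i][1] - y))
    for px, py in path[closest:]:
        if math.hypot(px - x, py - y) >= lookahead:
            return (px, py)
    return path[-1]


def pure_pursuit_steering(pose: Pose, path: List[Point],
                          wheelbase: float = 2.7, lookahead: float = 5.0) -> float:
    """Steering angle in radians (positive = turn left)."""
    x, y, theta = pose
    gx, gy = find_lookahead(path, x, y, lookahead)
    ld = math.hypot(gx - x, gy - y)
    if ld < 1e-9:  # already at the goal point
        return 0.0
    # Bearing of the goal point relative to the heading; sin() makes wrapping unnecessary.
    alpha = math.atan2(gy - y, gx - x) - theta
    return math.atan2(2.0 * wheelbase * math.sin(alpha), ld)


path = [(float(i), 0.0) for i in range(30)]  # straight reference path along the x-axis
print(pure_pursuit_steering((0.0, 1.0, 0.0), path))   # negative: steer right, back to the path
print(pure_pursuit_steering((0.0, -1.0, 0.0), path))  # positive: steer left
```

Pure pursuit is used here only because it is short; model predictive control, as in [4], is a common alternative for high-speed path tracking.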

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Du, H.; Leng, S.; Wu, F.; Chen, X.; Mao, S. A new vehicular fog computing architecture for cooperative sensing of autonomous driving. IEEE Access 2020, 8, 10997–11006.

- Jeong, Y.; Kim, S.; Yi, K. Surround vehicle motion prediction using LSTM-RNN for motion planning of autonomous vehicles at multi-lane turn intersections. IEEE Open J. Intell. Transp. Syst. 2020, 1, 2–14.

- Mellucci, C.; Menon, P.P.; Edwards, C.; Challenor, P.G. Environmental feature exploration with a single autonomous vehicle. IEEE Trans. Control. Syst. Technol. 2019, 28, 1349–1362.

- Tang, L.; Yan, F.; Zou, B.; Wang, K.; Lv, C. An improved kinematic model predictive control for high-speed path tracking of autonomous vehicles. IEEE Access 2020, 8, 51400–51413.

- Dixit, S.; Montanaro, U.; Dianati, M.; Oxtoby, D.; Mizutani, T.; Mouzakitis, A.; Fallah, S. Trajectory planning for autonomous high-speed overtaking in structured environments using robust MPC. IEEE Trans. Intell. Transp. Syst. 2019, 21, 2310–2323.

- Gu, X.; Han, Y.; Yu, J. A novel lane-changing decision model for autonomous vehicles based on deep autoencoder network and XGBoost. IEEE Access 2020, 8, 9846–9863.

- Yao, Q.; Tian, Y.; Wang, Q.; Wang, S. Control strategies on path tracking for autonomous vehicle: State of the art and future challenges. IEEE Access 2020, 8, 161211–161222.

- Chae, H.; Yi, K. Virtual target-based overtaking decision, motion planning, and control of autonomous vehicles. IEEE Access 2020, 8, 51363–51376.

- Liao, J.; Liu, T.; Tang, X.; Mu, X.; Huang, B.; Cao, D. Decision-making strategy on highway for autonomous vehicles using deep reinforcement learning. IEEE Access 2020, 8, 177804–177814.

- Benterki, A.; Boukhnifer, M.; Judalet, V.; Maaoui, C. Artificial intelligence for vehicle behavior anticipation: Hybrid approach based on maneuver classification and trajectory prediction. IEEE Access 2020, 8, 56992–57002.

- Yan, F.; Wang, K.; Zou, B.; Tang, L.; Li, W.; Lv, C. Lidar-based multi-task road perception network for autonomous vehicles. IEEE Access 2020, 8, 86753–86764.

- Guo, H.; Li, W.; Nejad, M.; Shen, C.C. Proof-of-event recording system for autonomous vehicles: A blockchain-based solution. IEEE Access 2020, 8, 182776–182786.

- Ort, T.; Gilitschenski, I.; Rus, D. Autonomous navigation in inclement weather based on a localizing ground penetrating radar. IEEE Robot. Autom. Lett. 2020, 5, 3267–3274.

- Zhan, Z.; Jian, W.; Li, Y.; Yue, Y. A slam map restoration algorithm based on submaps and an undirected connected graph. IEEE Access 2021, 9, 12657–12674.

- Saeedi, S.; Bodin, B.; Wagstaff, H.; Nisbet, A.; Nardi, L.; Mawer, J.; Melot, N.; Palomar, O.; Vespa, E.; Spink, T.; et al. Navigating the landscape for real-time localization and mapping for robotics and virtual and augmented reality. Proc. IEEE 2018, 106, 2020–2039.

- Zhao, X.; Wang, C.; Ang, M.H. Real-time visual-inertial localization using semantic segmentation towards dynamic environments. IEEE Access 2020, 8, 155047–155059.

- Zheng, Y.; Chen, S.; Cheng, H. Real-time cloud visual simultaneous localization and mapping for indoor service robots. IEEE Access 2020, 8, 16816–16829.

- Yusefi, A.; Durdu, A.; Aslan, M.F.; Sungur, C. Lstm and filter based comparison analysis for indoor global localization in uavs. IEEE Access 2021, 9, 10054–10069.

- Mur-Artal, R.; Tardós, J.D. Orb-slam2: An open-source slam system for monocular, stereo, and rgb-d cameras. IEEE Trans. Robot. 2017, 33, 1255–1262.

- Rusli, I.; Trilaksono, B.R.; Adiprawita, W. RoomSLAM: Simultaneous localization and mapping with objects and indoor layout structure. IEEE Access 2020, 8, 196992–197004.

- Steenbeek, A. CNN Based Dense Monocular Visual SLAM for Indoor Mapping And Autonomous Exploration. Master’s Thesis, University of Twente, Enschede, The Netherlands, 2020.

- Ozaki, M.; Kakimuma, K.; Hashimoto, M.; Takahashi, K. Laser-based pedestrian tracking in outdoor environments by multiple mobile robots. Sensors 2012, 12, 14489–14507.

- Himmelsbach, M.; Mueller, A.; Lüttel, T.; Wünsche, H.J. LIDAR-based 3D object perception. In Proceedings of the 1st International Workshop on Cognition for Technical Systems, München, Germany, 6–7 October 2008; Volume 1.

- Wang, W.; Wu, Y.; Jiang, Z.; Qi, J. A clutter-resistant SLAM algorithm for autonomous guided vehicles in dynamic industrial environment. IEEE Access 2020, 8, 109770–109782.

- Zhao, J.; Huang, S.; Zhao, L.; Chen, Y.; Luo, X. Conic feature based simultaneous localization and mapping in open environment via 2D lidar. IEEE Access 2019, 7, 173703–173718.

- Luo, J.; Qin, S. A fast algorithm of simultaneous localization and mapping for mobile robot based on ball particle filter. IEEE Access 2018, 6, 20412–20429.

- Bavle, H.; De La Puente, P.; How, J.P.; Campoy, P. VPS-SLAM: Visual planar semantic SLAM for aerial robotic systems. IEEE Access 2020, 8, 60704–60718.

- Teixeira, B.; Silva, H.; Matos, A.; Silva, E. Deep learning for underwater visual odometry estimation. IEEE Access 2020, 8, 44687–44701.

- Evers, C.; Naylor, P.A. Optimized self-localization for SLAM in dynamic scenes using probability hypothesis density filters. IEEE Trans. Signal Process. 2017, 66, 863–878.

- Thrun, S. Probabilistic robotics. Commun. ACM 2002, 45, 52–57.

- Kaess, M.; Ranganathan, A.; Dellaert, F. iSAM: Incremental smoothing and mapping. IEEE Trans. Robot. 2008, 24, 1365–1378.

- Kaess, M.; Johannsson, H.; Roberts, R.; Ila, V.; Leonard, J.J.; Dellaert, F. iSAM2: Incremental smoothing and mapping using the Bayes tree. Int. J. Robot. Res. 2012, 31, 216–235.

- Smith, R.C.; Cheeseman, P. On the representation and estimation of spatial uncertainty. Int. J. Robot. Res. 1986, 5, 56–68.

- Ullah, I.; Shen, Y.; Su, X.; Esposito, C.; Choi, C. A localization based on unscented Kalman filter and particle filter localization algorithms. IEEE Access 2019, 8, 2233–2246.

- Thrun, S. Particle Filters in Robotics. In UAI; Citeseer: Princeton, NJ, USA, 2002; Volume 2, pp. 511–518.

- Thrun, S.; Fox, D.; Burgard, W.; Dellaert, F. Robust Monte Carlo localization for mobile robots. Artif. Intell. 2001, 128, 99–141.

- Llofriu, M.; Andrade, F.; Benavides, F.; Weitzenfeld, A.; Tejera, G. An embedded particle filter SLAM implementation using an affordable platform. In Proceedings of the 2013 16th International Conference on Advanced Robotics (ICAR), Montevideo, Uruguay, 25–29 November 2013; pp. 1–6.

- Yatim, N.M.; Buniyamin, N. Particle filter in simultaneous localization and mapping (Slam) using differential drive mobile robot. J. Teknol. 2015, 77, 91–97.

- Slowak, P.; Kaniewski, P. Stratified particle filter monocular SLAM. Remote Sens. 2021, 13, 3233.

- Grisetti, G.; Stachniss, C.; Burgard, W. Improving grid-based slam with rao-blackwellized particle filters by adaptive proposals and selective resampling. In Proceedings of the 2005 IEEE International Conference on Robotics and Automation, Barcelona, Spain, 18–22 April 2005; pp. 2432–2437.

- Murphy, K.P. Bayesian map learning in dynamic environments. Adv. Neural Inf. Process. Syst. 1999, 12, 1015–1021.

- Murphy, K.; Russell, S. Rao-Blackwellised particle filtering for dynamic Bayesian networks. In Sequential Monte Carlo Methods in Practice; Springer: Berlin/Heidelberg, Germany, 2001; pp. 499–515.

- Montemerlo, M.; Thrun, S.; Koller, D.; Wegbreit, B. FastSLAM 2.0: An improved particle filtering algorithm for simultaneous localization and mapping that provably converges. In Proceedings of the IJCAI, Acapulco, Mexico, 9–15 August 2003; Volume 3, pp. 1151–1156.

- Montemerlo, M.; Thrun, S.; Koller, D.; Wegbreit, B. FastSLAM: A factored solution to the simultaneous localization and mapping problem. Aaai/iaai 2002, 593598, 593–598.

- Duymaz, E.; Oğuz, A.E.; Temeltaş, H. Exact flow of particles using for state estimations in unmanned aerial systems navigation. PLoS ONE 2020, 15, e0231412.

- Wang, J.; Takahashi, Y. Particle filter based landmark mapping for SLAM of mobile robot based on RFID system. In Proceedings of the 2016 IEEE International Conference on Advanced Intelligent Mechatronics (AIM), Banff, AB, Canada, 12–15 July 2016; pp. 870–875.

- Javanmardi, E.; Javanmardi, M.; Gu, Y.; Kamijo, S. Factors to evaluate capability of map for vehicle localization. IEEE Access 2018, 6, 49850–49867.

- Blanco-Claraco, J.L.; Mañas-Alvarez, F.; Torres-Moreno, J.L.; Rodriguez, F.; Gimenez-Fernandez, A. Benchmarking particle filter algorithms for efficient velodyne-based vehicle localization. Sensors 2019, 19, 3155.

- Niu, Z.; Zhao, X.; Sun, J.; Tao, L.; Zhu, B. A continuous positioning algorithm based on RTK and VI-SLAM with smartphones. IEEE Access 2020, 8, 185638–185650.

- Fairfield, N.; Kantor, G.; Wettergreen, D. Towards particle filter SLAM with three dimensional evidence grids in a flooded subterranean environment. In Proceedings of the 2006 IEEE International Conference on Robotics and Automation, ICRA 2006, Orlando, FL, USA, 15–19 May 2006; pp. 3575–3580.

- Adams, M.; Zhang, S.; Xie, L. Particle filter based outdoor robot localization using natural features extracted from laser scanners. In Proceedings of the IEEE International Conference on Robotics and Automation, ICRA’04, New Orleans, LA, USA, 26 April–1 May 2004; Volume 2, pp. 1493–1498.

- Pei, F.; Wu, M.; Zhang, S. Distributed SLAM using improved particle filter for mobile robot localization. Sci. World J. 2014, 2014, 239531.

- Xie, D.; Xu, Y.; Wang, R. Obstacle detection and tracking method for autonomous vehicle based on three-dimensional LiDAR. Int. J. Adv. Robot. Syst. 2019, 16, 1729881419831587.

- Lu, H.; Yang, S.; Zhao, M.; Cheng, S. Multi-robot indoor environment map building based on multi-stage optimization method. Complex Syst. Model. Simul. 2021, 1, 145–161.

- Ai, Y.; Rui, T.; Lu, M.; Fu, L.; Liu, S.; Wang, S. DDL-SLAM: A robust RGB-D SLAM in dynamic environments combined with deep learning. IEEE Access 2020, 8, 162335–162342.

- Zhang, S.; Zheng, L.; Tao, W. Survey and evaluation of RGB-D SLAM. IEEE Access 2021, 9, 21367–21387.

- Yang, D.; Bi, S.; Wang, W.; Yuan, C.; Wang, W.; Qi, X.; Cai, Y. DRE-SLAM: Dynamic RGB-D encoder SLAM for a differential-drive robot. Remote Sens. 2019, 11, 380.

- Li, F.; Chen, W.; Xu, W.; Huang, L.; Li, D.; Cai, S.; Yang, M.; Xiong, X.; Liu, Y.; Li, W. A mobile robot visual SLAM system with enhanced semantics segmentation. IEEE Access 2020, 8, 25442–25458.

- Zeng, F.; Wang, C.; Ge, S.S. A survey on visual navigation for artificial agents with deep reinforcement learning. IEEE Access 2020, 8, 135426–135442.

- Cheng, J.; Wang, C.; Meng, M.Q.H. Robust visual localization in dynamic environments based on sparse motion removal. IEEE Trans. Autom. Sci. Eng. 2019, 17, 658–669.

- Davison, A.J. Real-time simultaneous localisation and mapping with a single camera. In Proceedings of the Computer Vision, IEEE International Conference on. IEEE Computer Society, Nice, France, 13–16 October 2003; Volume 3, p. 1403.

- Klein, G.; Murray, D. Parallel tracking and mapping for small AR workspaces. In Proceedings of the 2007 6th IEEE and ACM International Symposium on Mixed and Augmented Reality, Nara, Japan, 13–16 November 2007; pp. 225–234.

- Mur-Artal, R.; Montiel, J.M.M.; Tardos, J.D. ORB-SLAM: A versatile and accurate monocular SLAM system. IEEE Trans. Robot. 2015, 31, 1147–1163.

- Lu, S.; Zhi, Y.; Zhang, S.; He, R.; Bao, Z. Semi-Direct Monocular SLAM With Three Levels of Parallel Optimizations. IEEE Access 2021, 9, 86801–86810.

- Fan, M.; Kim, S.W.; Kim, S.T.; Sun, J.Y.; Ko, S.J. Simple But Effective Scale Estimation for Monocular Visual Odometry in Road Driving Scenarios. IEEE Access 2020, 8, 175891–175903.

- Nobis, F.; Papanikolaou, O.; Betz, J.; Lienkamp, M. Persistent map saving for visual localization for autonomous vehicles: An orb-slam 2 extension. In Proceedings of the 2020 Fifteenth International Conference on Ecological Vehicles and Renewable Energies (EVER), Monte-Carlo, Monaco, 10–12 September 2020; pp. 1–9.

- Li, G.; Liao, X.; Huang, H.; Song, S.; Liu, B.; Zeng, Y. Robust stereo visual slam for dynamic environments with moving object. IEEE Access 2021, 9, 32310–32320.

- Chien, C.H.; Hsu, C.C.J.; Wang, W.Y.; Chiang, H.H. Indirect visual simultaneous localization and mapping based on linear models. IEEE Sens. J. 2019, 20, 2738–2747.

- Hempel, T.; Al-Hamadi, A. Pixel-wise motion segmentation for SLAM in dynamic environments. IEEE Access 2020, 8, 164521–164528.

- Li, C.; Zhang, X.; Gao, H.; Wang, R.; Fang, Y. Bridging the gap between visual servoing and visual SLAM: A novel integrated interactive framework. IEEE Trans. Autom. Sci. Eng. 2021, 19, 2245–2255.

- Yang, S.; Fan, G.; Bai, L.; Li, R.; Li, D. MGC-VSLAM: A meshing-based and geometric constraint VSLAM for dynamic indoor environments. IEEE Access 2020, 8, 81007–81021.

- Li, D.; Liu, S.; Xiang, W.; Tan, Q.; Yuan, K.; Zhang, Z.; Hu, Y. A SLAM System Based on RGBD Image and Point-Line Feature. IEEE Access 2021, 9, 9012–9025.

- Wu, R.; Pike, M.; Lee, B.G. DT-SLAM: Dynamic Thresholding Based Corner Point Extraction in SLAM System. IEEE Access 2021, 9, 91723–91729.

- Zhang, C. PL-GM: RGB-D SLAM with a novel 2D and 3D geometric constraint model of point and line features. IEEE Access 2021, 9, 9958–9971.

- Wang, Q.; Yan, Z.; Wang, J.; Xue, F.; Ma, W.; Zha, H. Line Flow Based Simultaneous Localization and Mapping. IEEE Trans. Robot. 2021, 37, 1416–1432.

- Forster, C.; Pizzoli, M.; Scaramuzza, D. SVO: Fast semi-direct monocular visual odometry. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 15–22.

- Engel, J.; Schöps, T.; Cremers, D. LSD-SLAM: Large-scale direct monocular SLAM. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 834–849.

- Cremers, D. Direct methods for 3d reconstruction and visual slam. In Proceedings of the 2017 Fifteenth IAPR International Conference on Machine Vision Applications (MVA), Nagoya, Japan, 8–12 May 2017; pp. 34–38.

- Zhu, Z.; Kaizu, Y.; Furuhashi, K.; Imou, K. Visual-Inertial RGB-D SLAM With Encoders for a Differential Wheeled Robot. IEEE Sens. J. 2021, 22, 5360–5371.

- Zhang, M.; Han, S.; Wang, S.; Liu, X.; Hu, M.; Zhao, J. Stereo visual inertial mapping algorithm for autonomous mobile robot. In Proceedings of the 2020 3rd International Conference on Intelligent Robotic and Control Engineering (IRCE), Oxford, UK, 10–12 August 2020; pp. 97–104.

- Wu, R. A Low-Cost SLAM Fusion Algorithm for Robot Localization; University of Alberta: Edmonton, Canada, 2019.

- Chen, C.H.; Wang, C.C.; Lin, S.F. A Navigation Aid for Blind People Based on Visual Simultaneous Localization and Mapping. In Proceedings of the 2020 IEEE International Conference on Consumer Electronics-Taiwan (ICCE-Taiwan), Taoyuan, Taiwan, 28–30 September 2020; pp. 1–2.

- Haavardsholm, T.V.; Skauli, T.; Stahl, A. Multimodal Multispectral Imaging System for Small UAVs. IEEE Robot. Autom. Lett. 2020, 5, 1039–1046.

- Cui, L.; Ma, C. SDF-SLAM: Semantic depth filter SLAM for dynamic environments. IEEE Access 2020, 8, 95301–95311.

- Jiang, F.; Chen, J.; Ji, S. Panoramic visual-inertial SLAM tightly coupled with a wheel encoder. ISPRS J. Photogramm. Remote Sens. 2021, 182, 96–111.

- Canovas, B.; Nègre, A.; Rombaut, M. Onboard dynamic RGB-D simultaneous localization and mapping for mobile robot navigation. ETRI J. 2021, 43, 617–629.

- Huang, C. Wheel Odometry Aided Visual-Inertial Odometry in Winter Urban Environments. Master’s Thesis, Schulich School of Engineering, Calgary, AB, Canada, 2021.

- Xu, Z.; Haroutunian, M.; Murphy, A.J.; Neasham, J.; Norman, R. An Integrated Visual Odometry System With Stereo Camera for Unmanned Underwater Vehicles. IEEE Access 2022, 10, 71329–71343.

- Kuang, Z.; Wei, W.; Yan, Y.; Li, J.; Lu, G.; Peng, Y.; Li, J.; Shang, W. A Real-time and Robust Monocular Visual Inertial SLAM System Based on Point and Line Features for Mobile Robots of Smart Cities Towards 6G. IEEE Open J. Commun. Soc. 2022, 3, 1950–1962.

- Lee, S.J.; Choi, H.; Hwang, S.S. Real-time depth estimation using recurrent CNN with sparse depth cues for SLAM system. Int. J. Control. Autom. Syst. 2020, 18, 206–216.

- Soares, J.C.V.; Gattass, M.; Meggiolaro, M.A. Crowd-SLAM: Visual SLAM Towards Crowded Environments using Object Detection. J. Intell. Robot. Syst. 2021, 102, 1–16.

- Liu, Y.; Miura, J. RDS-SLAM: Real-time dynamic SLAM using semantic segmentation methods. IEEE Access 2021, 9, 23772–23785.

- Liu, Y.; Miura, J. RDMO-SLAM: Real-time visual SLAM for dynamic environments using semantic label prediction with optical flow. IEEE Access 2021, 9, 106981–106997.

- Yang, S.; Zhao, C.; Wu, Z.; Wang, Y.; Wang, G.; Li, D. Visual SLAM Based on Semantic Segmentation and Geometric Constraints for Dynamic Indoor Environments. IEEE Access 2022, 10, 69636–69649.

- Shao, C.; Zhang, C.; Fang, Z.; Yang, G. A deep learning-based semantic filter for ransac-based fundamental matrix calculation and the ORB-slam system. IEEE Access 2019, 8, 3212–3223.

- Han, S.; Xi, Z. Dynamic scene semantics SLAM based on semantic segmentation. IEEE Access 2020, 8, 43563–43570.

- Tu, X.; Xu, C.; Liu, S.; Xie, G.; Huang, J.; Li, R.; Yuan, J. Learning depth for scene reconstruction using an encoder-decoder model. IEEE Access 2020, 8, 89300–89317.

- Liu, W.; Mo, Y.; Jiao, J.; Deng, Z. EF-Razor: An Effective Edge-Feature Processing Method in Visual SLAM. IEEE Access 2020, 8, 140798–140805.

- Li, D.; Yang, W.; Shi, X.; Guo, D.; Long, Q.; Qiao, F.; Wei, Q. A visual-inertial localization method for unmanned aerial vehicle in underground tunnel dynamic environments. IEEE Access 2020, 8, 76809–76822.

- Tomită, M.A.; Zaffar, M.; Milford, M.J.; McDonald-Maier, K.D.; Ehsan, S. Convsequential-slam: A sequence-based, training-less visual place recognition technique for changing environments. IEEE Access 2021, 9, 118673–118683.

- Su, P.; Luo, S.; Huang, X. Real-Time Dynamic SLAM Algorithm Based on Deep Learning. IEEE Access 2022, 10, 87754–87766.

- Yue, J.; Wen, W.; Han, J.; Hsu, L.T. LiDAR data enrichment using deep learning based on high-resolution image: An approach to achieve high-performance LiDAR SLAM using Low-cost LiDAR. arXiv 2020, arXiv:2008.03694.

- Hu, T.; Sun, X.; Su, Y.; Guan, H.; Sun, Q.; Kelly, M.; Guo, Q. Development and performance evaluation of a very low-cost UAV-LiDAR system for forestry applications. Remote Sens. 2020, 13, 77.

- Cheng, J.; Zhang, H.; Meng, M.Q.H. Improving visual localization accuracy in dynamic environments based on dynamic region removal. IEEE Trans. Autom. Sci. Eng. 2020, 17, 1585–1596.

- Alkendi, Y.; Seneviratne, L.; Zweiri, Y. State of the art in vision-based localization techniques for autonomous navigation systems. IEEE Access 2021, 9, 76847–76874.

- Yu, J.; Gao, F.; Cao, J.; Yu, C.; Zhang, Z.; Huang, Z.; Wang, Y.; Yang, H. CNN-based Monocular Decentralized SLAM on embedded FPGA. In Proceedings of the 2020 IEEE International Parallel and Distributed Processing Symposium Workshops (IPDPSW), New Orleans, LA, USA, 18–22 May 2020; pp. 66–73.

| Sensor | Advantages | Limitations |
|---|---|---|
| Lidar | | |
| Radar | | |
| Sonar | | |
| Camera | | |
| Inertial Measurement Unit | | |
| Wheel Encoders | | |
| Laser | | |
| GPS | | |
| GNSS | | |

| Ref. | Work | Observations |
|---|---|---|
| [57] | Uses measurements from an RGB-D camera and wheel encoders to produce robot poses and an octo-map; relies on the CPU rather than a GPU; works in both static and dynamic indoor environments. | Not designed for outdoor environments; wheel slip causes inaccuracy; unable to track dynamic objects. |
| [81] | The technique showed agreement between the system's pose estimates and ground truth. | Performance in dynamic outdoor environments could not be determined, and the Kinect depth camera is blinded by sunlight during daytime. |
| [49] | Uses visual-inertial SLAM to compensate for limitations of RTK such as blockage of satellite signals by buildings and trees. This was achieved with a common smartphone instead of additional specialised devices. | Further work is required to evaluate the model more accurately; not designed for dynamic outdoor environments. RTK systems rely on additional infrastructure, which comes with additional costs according to [66]. |
| [82] | The system uses a pre-existing map and compares captured images against it to estimate the user's position within the map. | Reliance on a pre-existing map is not suitable for dynamic outdoor environments. |
| [83] | The study presents the development of a new V-SLAM-based processing chain for UAVs. | Real-time data processing performance is low, and the technique focuses on aerial motion; thus, no information was provided for ground movement. |
| [84] | The authors developed a semantic depth filter for RGB-D SLAM, making it more accurate in dynamic environments. | Evaluated on the TUM dataset; thus, performance in dynamic outdoor environments could not be determined. |
| [85] | The study presents a novel panoramic visual-inertial SLAM that uses a wheel encoder to achieve improved robustness and localisation accuracy. | Evaluated on the University of Michigan North Campus Long-Term Vision and LiDAR (NCLT) dataset; thus, performance in dynamic outdoor environments could not be determined. |
| [86] | The study presents a SLAM algorithm coupled with wheel-encoder measurements to enhance localisation. A low-cost map was generated to improve speed and memory efficiency. | Despite its ability to handle tracking in dynamic scenarios, the system only considered indoor settings, and no information on outdoor performance was provided. |
| [87] | The study uses wheel-odometer measurements and a monocular camera to develop a visual-inertial odometry model with non-holonomic constraints. | Evaluated on the KITTI and KAIST Complex Urban datasets; thus, real-time performance in dynamic outdoor environments could not be accurately determined. |
| [88] | This article presents Integrated Visual Odometry with a Stereo Camera (IVO-S), a novel low-cost underwater visual navigation approach. Unlike pure visual odometry, the approach combines data from inertial sensors and a sonar to function in context-sparse situations. | The approach performs effectively and with high precision in sparse-feature underwater settings, where existing visual SLAM and odometry systems such as ORB-SLAM2 and OKVIS do not. However, the technique does not include loop closure detection or map reconstruction. |
| [89] | This research presents a real-time and robust point-line-based monocular visual-inertial SLAM (VINS) system for smart-city mobile robots towards 6G. EDLines with adaptive gamma correction is used to extract a higher proportion of long line features among all extracted line features, and to do so faster. | The experimental findings show that the VINS system outperforms other advanced systems in localisation accuracy and robustness in challenging situations. However, its performance in outdoor scenes could not be accurately determined, since the model was not deployed in outdoor settings. |
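
Several of the works in the table above [57,85,86,87] fuse wheel-encoder or wheel-odometer measurements with visual data. The sketch below shows the textbook differential-drive dead-reckoning update that turns encoder tick counts into a pose increment, the kind of quantity such systems feed into their estimators. It is not the fusion scheme of any listed work, and the encoder resolution, wheel radius and track width are hypothetical defaults. As noted for [57], wheel slip corrupts this estimate, which is one reason it is combined with vision rather than used alone.

```python
import math
from typing import Tuple

Pose = Tuple[float, float, float]  # (x, y, theta)


def wheel_odometry_step(pose: Pose, ticks_left: int, ticks_right: int,
                        ticks_per_rev: int = 1024, wheel_radius: float = 0.1,
                        track_width: float = 0.5) -> Pose:
    """Differential-drive dead reckoning from encoder ticks counted since the last update.
    Uses the midpoint heading of the step; the returned heading is not wrapped."""
    x, y, theta = pose
    metres_per_tick = 2.0 * math.pi * wheel_radius / ticks_per_rev
    d_left = ticks_left * metres_per_tick
    d_right = ticks_right * metres_per_tick
    d_centre = 0.5 * (d_left + d_right)         # distance travelled by the axle midpoint
    d_theta = (d_right - d_left) / track_width  # heading change (positive = left turn)
    mid_heading = theta + 0.5 * d_theta
    return (x + d_centre * math.cos(mid_heading),
            y + d_centre * math.sin(mid_heading),
            theta + d_theta)


pose = (0.0, 0.0, 0.0)
pose = wheel_odometry_step(pose, 1024, 1024)  # one full wheel revolution on each side
print(pose)                                    # about 0.628 m straight ahead
pose = wheel_odometry_step(pose, -512, 512)   # spin in place to the left
print(pose)
```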

| Ref. | Work | Observations |
|---|---|---|
| [20] | This study presents a SLAM technique that uses objects and walls as elements of the environment model. The objects are identified using YOLOv3. The system performed better than RGB-D SLAM and comparably to ORB-SLAM. | The technique was tested in a static indoor environment; thus, performance in dynamic outdoor environments could not be determined. |
| [95] | In this study, a semantic filter based on Faster R-CNN is used to improve RANSAC-based fundamental matrix calculation in ORB-SLAM. This method reduced the trajectory error, the number of low-quality feature correspondences, and the position error. | Evaluated on the KITTI and ETH datasets; thus, performance in dynamic outdoor environments could not be determined. |
| [55] | Developed a novel RGB-D SLAM method combined with deep learning to reduce the impact of moving objects on camera pose estimation. This was achieved using semantic segmentation and multi-view geometry. | Real-time performance needs to be improved; not designed for outdoor environments. |
| [96] | The technique combines ORB-SLAM2 with PSPNet-based semantic segmentation to identify and eliminate dynamic points, which reduced trajectory and pose errors. | Evaluated on the TUM RGB-D dataset; thus, performance in dynamic outdoor environments could not be determined. |
| [97] | A Decoder-Encoder Model (DEM) was developed that uses CNNs to improve depth estimation performance. Additionally, a loss function was developed to enhance the training of the DEM. | Evaluated on the indoor NYU-Depth-v2 and outdoor KITTI datasets; thus, performance in dynamic outdoor environments could not be determined. |
| [98] | In this study, YOLOv3 was used to provide semantic information in order to distinguish edge features and reduce the effect of unstable features. This process improved the positioning accuracy of the system. | Evaluated on the public TUM RGB-D dataset; thus, performance in dynamic outdoor environments could not be determined. |
| [16] | The study uses CNN-based semantic segmentation and multi-view geometric constraints to identify and avoid using feature points on dynamic objects. | Evaluated on the ADVIO dataset; thus, performance in dynamic outdoor environments could not be determined. |
| [99] | The study presents a dynamic point detection and rejection algorithm based on neural-network semantic segmentation, which eliminates dynamic-object interference during pose estimation. | The technique was evaluated on the EuRoC dataset and on images collected in an underground tunnel. However, real-time performance could not be evaluated in dynamic outdoor ground environments. |
| [100] | This study presents a novel visual place recognition (VPR) technique capable of operating under changing viewpoint and appearance conditions. The system avoids CNNs, which have high computational requirements. | The system was evaluated on various public VPR datasets but focused mainly on static environments. |
| [101] | A deep learning-based real-time visual SLAM technique is proposed in this work. A parallel semantic thread uses the lightweight object detection network YOLOv5s to obtain semantic information about the scene more quickly. | The experimental findings suggest that the system improves both accuracy and real-time performance. However, for practical use, the map generation process and computation speed need to be improved. |
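
Entry [100] describes sequence-based, training-free visual place recognition. The general idea behind sequence matching, in the spirit of SeqSLAM, is to compare short runs of consecutive frames rather than single images, so that one ambiguous frame does not trigger a false loop closure. The sketch below illustrates only that idea and is not the ConvSequential-SLAM algorithm. It assumes a precomputed difference matrix `D`, where `D[i][j]` is the dissimilarity between query frame i and database frame j (how image descriptors are computed is left open), and that both traversals moved at the same speed.

```python
from typing import List, Optional, Tuple


def best_sequence_match(D: List[List[float]], seq_len: int) -> Optional[Tuple[int, float]]:
    """Sequence-based place matching on a difference matrix.

    Query frame (newest - k) is paired with database frame (j - k), i.e. a
    constant-velocity assumption. Returns (j, summed difference) for the database
    index j that best matches the newest query frame, or None if there are not
    enough frames to form a sequence.
    """
    if seq_len < 1 or len(D) < seq_len or not D[0]:
        return None
    newest = len(D) - 1
    best: Optional[Tuple[int, float]] = None
    for j in range(seq_len - 1, len(D[0])):
        score = sum(D[newest - k][j - k] for k in range(seq_len))
        if best is None or score < best[1]:
            best = (j, score)
    return best


# Query frames 0-2 were taken at database places 1-3, but the newest query frame
# on its own also looks very similar to database frame 0 (perceptual aliasing).
D = [[5.0, 0.0, 5.0, 5.0, 5.0],
     [5.0, 5.0, 0.0, 5.0, 5.0],
     [0.0, 5.0, 5.0, 1.0, 5.0]]
print(min(range(5), key=lambda j: D[2][j]))  # 0: single-frame matching picks the wrong place
print(best_sequence_match(D, seq_len=3))      # (3, 1.0): the sequence picks the right one
```

A practical system would also compare the best score against a threshold (and against the runner-up) before accepting a loop closure, and would search over speed ratios other than 1; both are omitted here for brevity.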

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bala, J.A.; Adeshina, S.A.; Aibinu, A.M. Advances in Visual Simultaneous Localisation and Mapping Techniques for Autonomous Vehicles: A Review. Sensors 2022, 22, 8943. https://doi.org/10.3390/s22228943

Bala JA, Adeshina SA, Aibinu AM. Advances in Visual Simultaneous Localisation and Mapping Techniques for Autonomous Vehicles: A Review. Sensors. 2022; 22(22):8943. https://doi.org/10.3390/s22228943

Chicago/Turabian Style: Bala, Jibril Abdullahi, Steve Adetunji Adeshina, and Abiodun Musa Aibinu. 2022. "Advances in Visual Simultaneous Localisation and Mapping Techniques for Autonomous Vehicles: A Review" Sensors 22, no. 22: 8943. https://doi.org/10.3390/s22228943

APA Style: Bala, J. A., Adeshina, S. A., & Aibinu, A. M. (2022). Advances in Visual Simultaneous Localisation and Mapping Techniques for Autonomous Vehicles: A Review. Sensors, 22(22), 8943. https://doi.org/10.3390/s22228943