Sensor-Based Motion Tracking System Evaluation for RULA in Assembly Task

Abstract

1. Introduction

- We propose a geometry-based method for measuring wrist kinematics that derives radial–ulnar deviation and wrist twist from the flexion–extension angle and forearm pronation–supination in hand-tracking sensor data.

- We present an automated, comprehensive ergonomic assessment (i.e., RULA) of the assembly process that combines the derived wrist posture measures with body posture measures.

- We present extensive experiments demonstrating personalized ergonomic assessment with multimodal unobtrusive sensors (i.e., body-tracking and hand-tracking sensors).

2. Related Work

2.1. Ergonomics Assessment

2.2. Wrist Kinematics

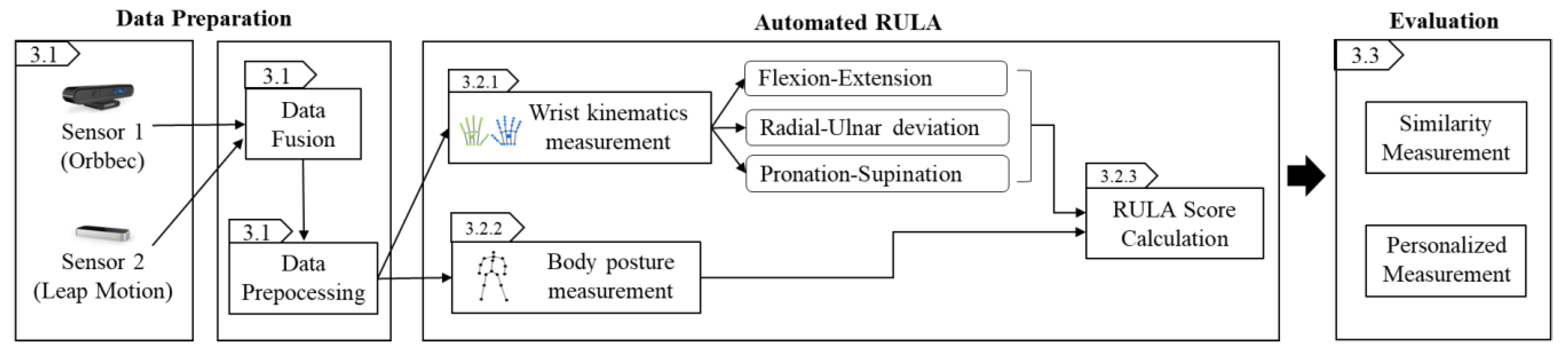

3. Method

3.1. Data Preparation

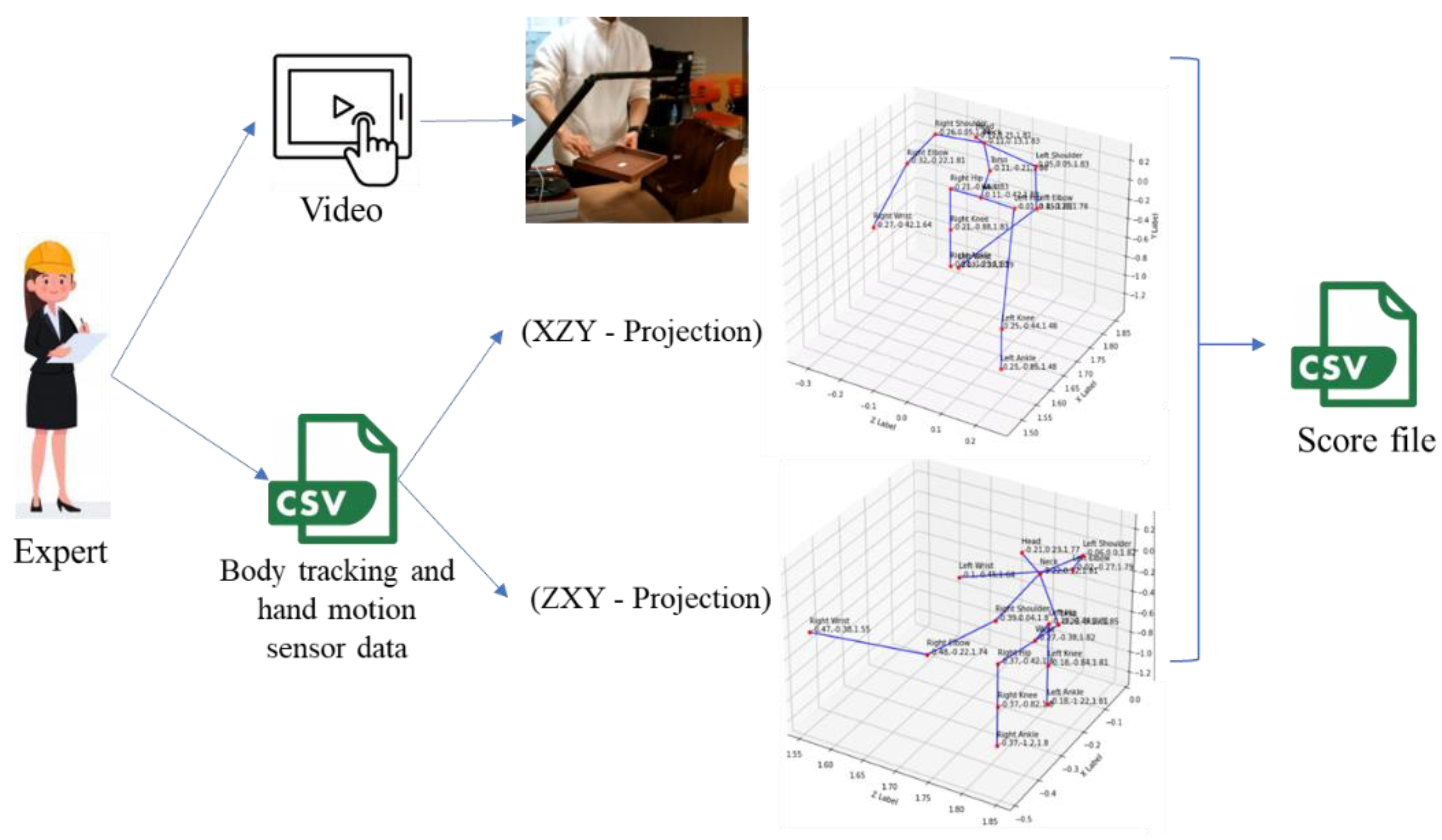

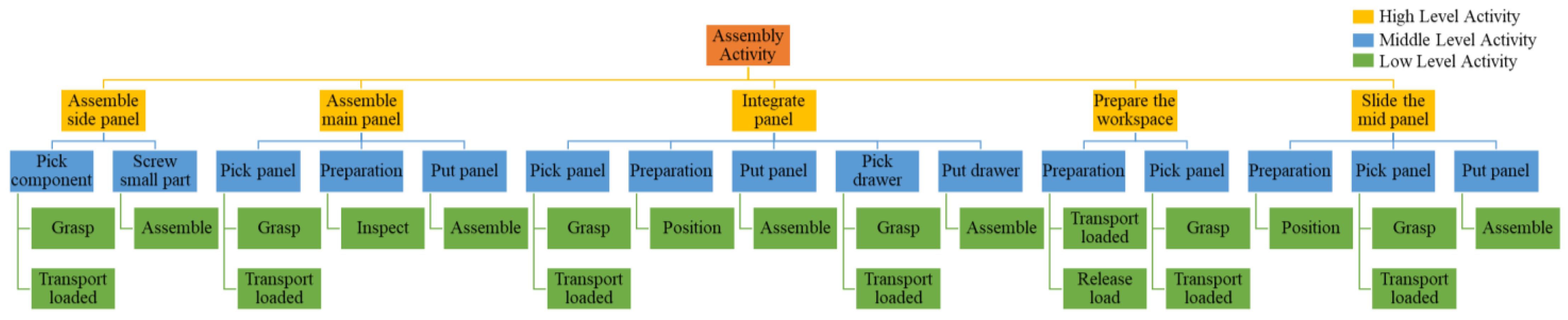

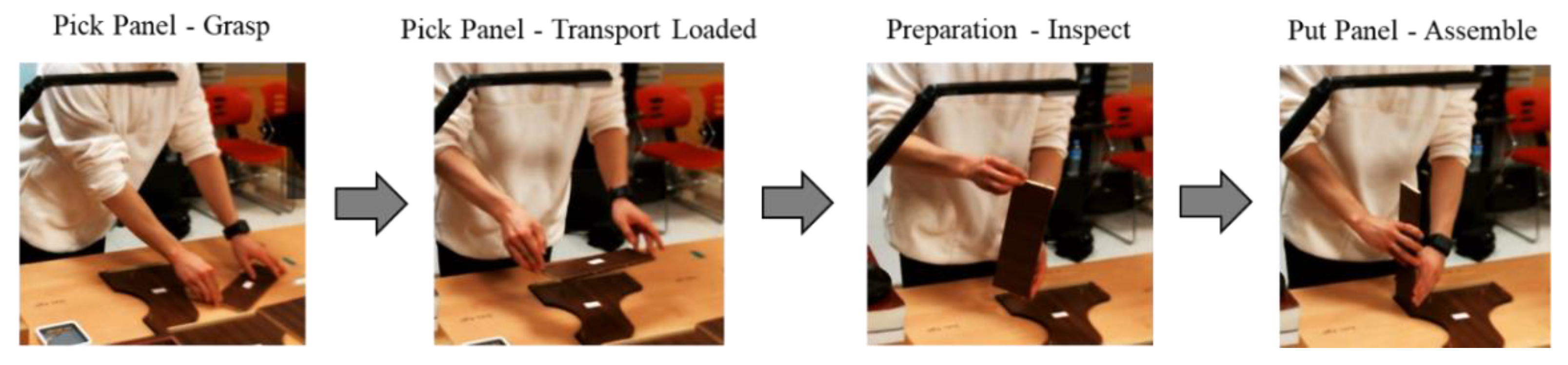

3.2. Labeling by Expert

3.3. Automated RULA

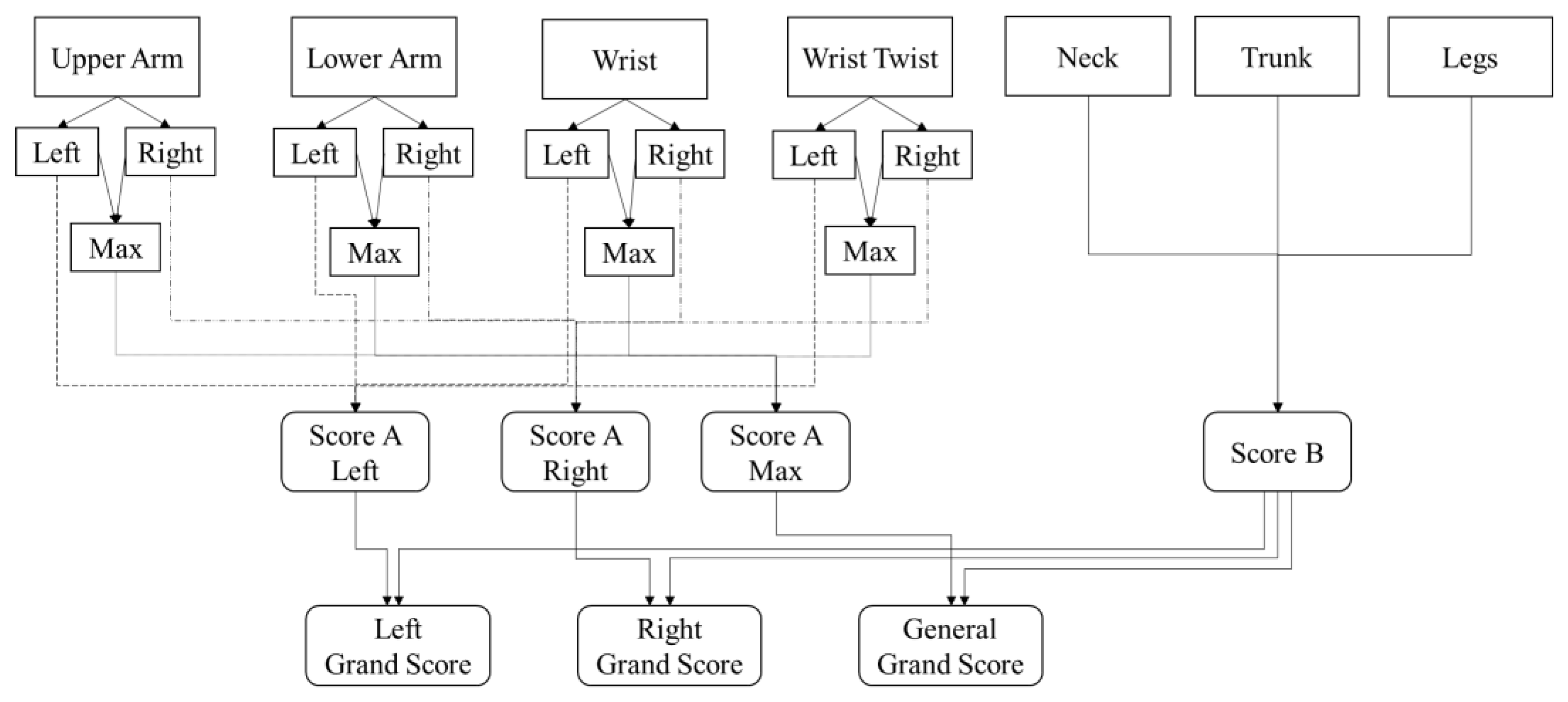

3.3.1. RULA Score Calculation

3.3.2. Wrist Kinematics Measurement

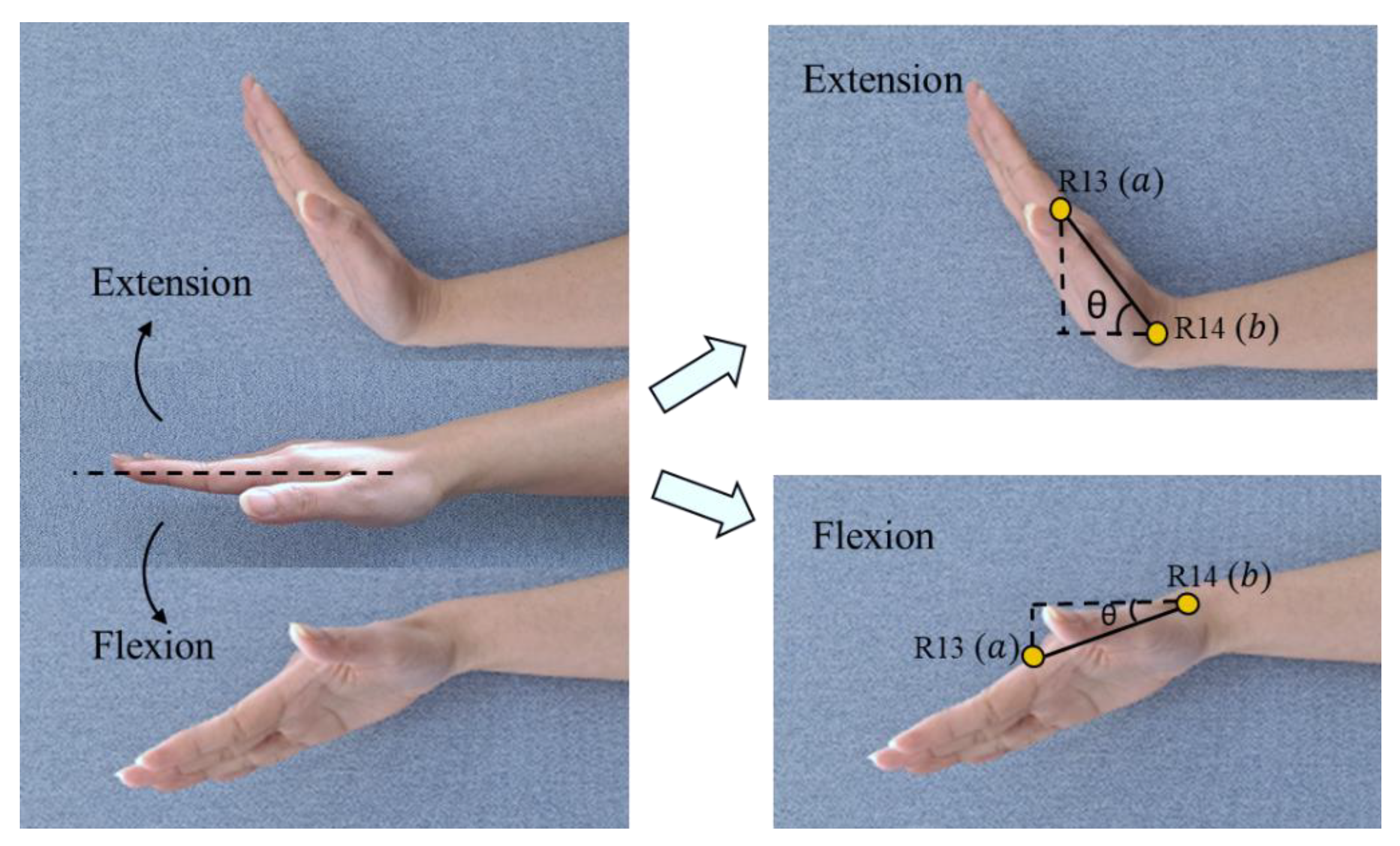

- Wrist flexion–extension

- 2.

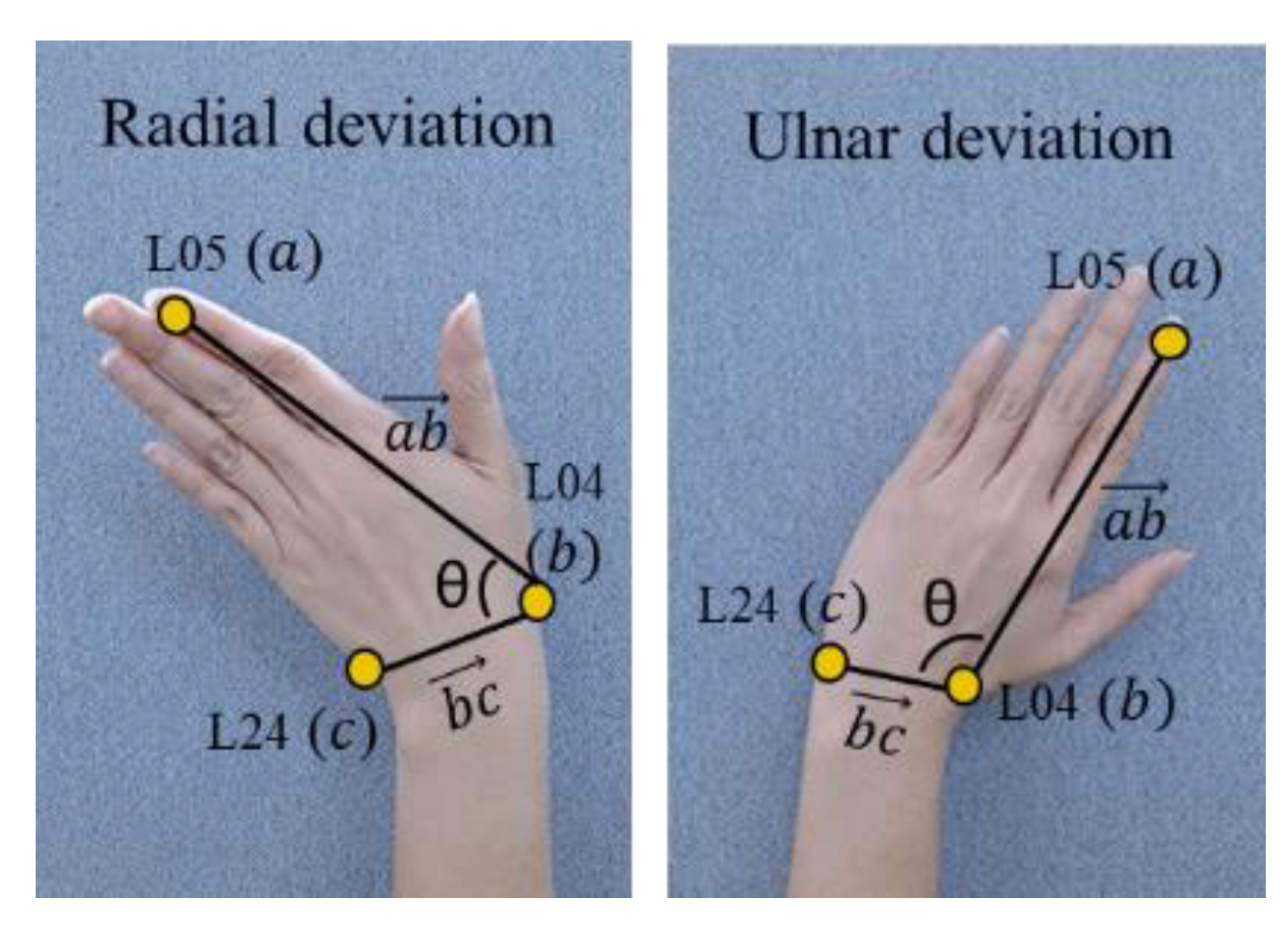

- Wrist radial–ulnar deviation

- 3.

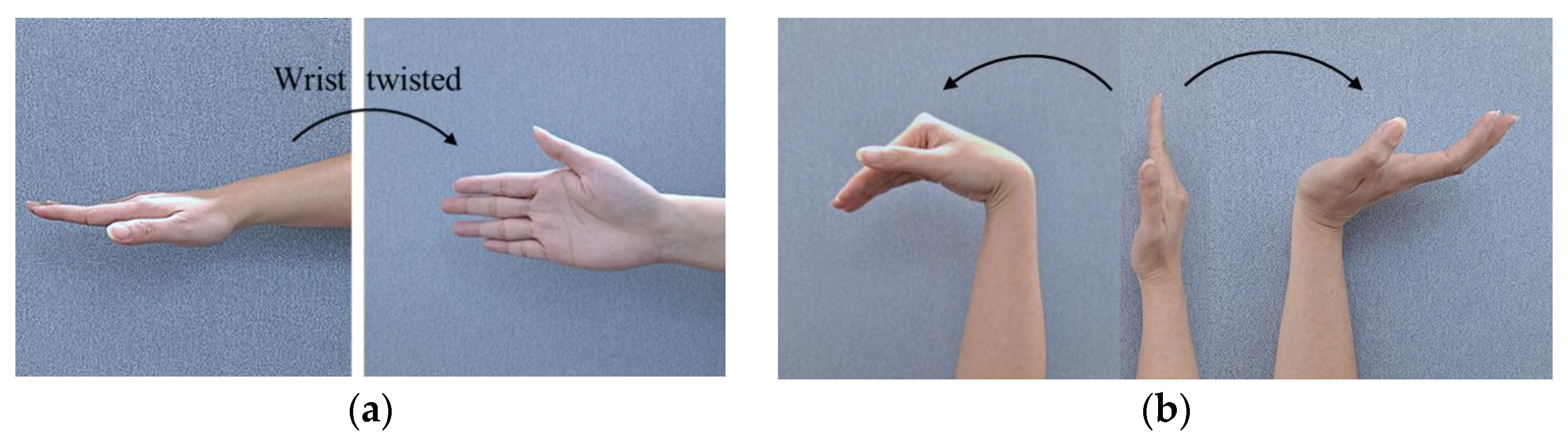

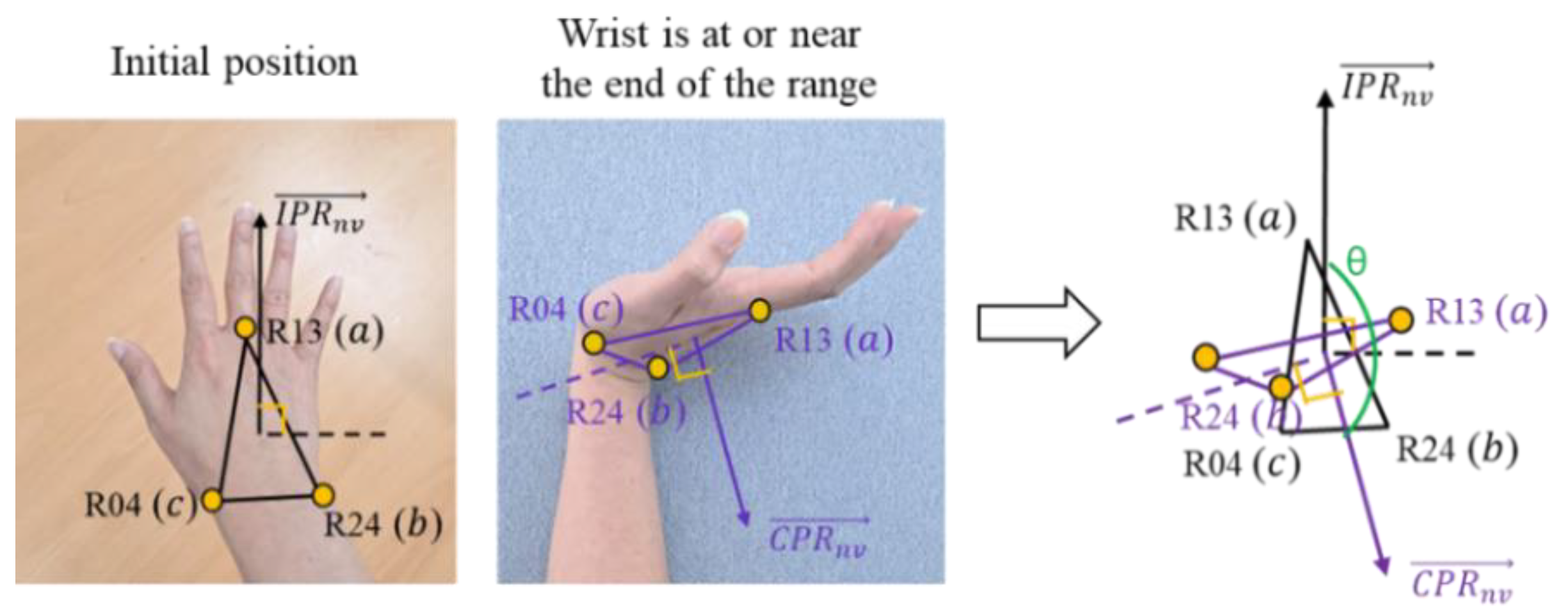

- Forearm pronation–supination
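The wrist angles above are obtained geometrically from tracked joint positions. The sketch below shows one basic geometric building block, the angle at a middle joint formed by three tracked joints. It is not the authors' implementation. It assumes that a three-label entry in the Formula column of the wrist scoring table, such as R05, R04, R24, denotes the angle at the middle joint. The function name `joint_angle` and the example coordinates are hypothetical.

```python
import math
from typing import Sequence


def joint_angle(a: Sequence[float], b: Sequence[float], c: Sequence[float]) -> float:
    """Return the angle in degrees at joint b between segments b->a and b->c."""
    u = [ai - bi for ai, bi in zip(a, b)]
    v = [ci - bi for ci, bi in zip(c, b)]
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(x * x for x in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("the middle joint must differ from both end joints")
    cos_theta = sum(x * y for x, y in zip(u, v)) / (norm_u * norm_v)
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))


# Hypothetical coordinates (metres) for three hand joints, e.g. R05, R04, R24,
# under the assumption that R04 is the vertex of the measured angle.
r05 = (0.10, 0.00, 0.00)
r04 = (0.00, 0.00, 0.00)
r24 = (0.00, 0.10, 0.00)
print(f"{joint_angle(r05, r04, r24):.1f}")  # 90.0
```

Clamping the cosine keeps nearly collinear joints from raising a domain error in `math.acos`. The function is also written for arbitrary 3D points, so the same helper could serve the three-joint body formulas.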

3.3.3. Body Posture Measurement

3.4. Evaluation

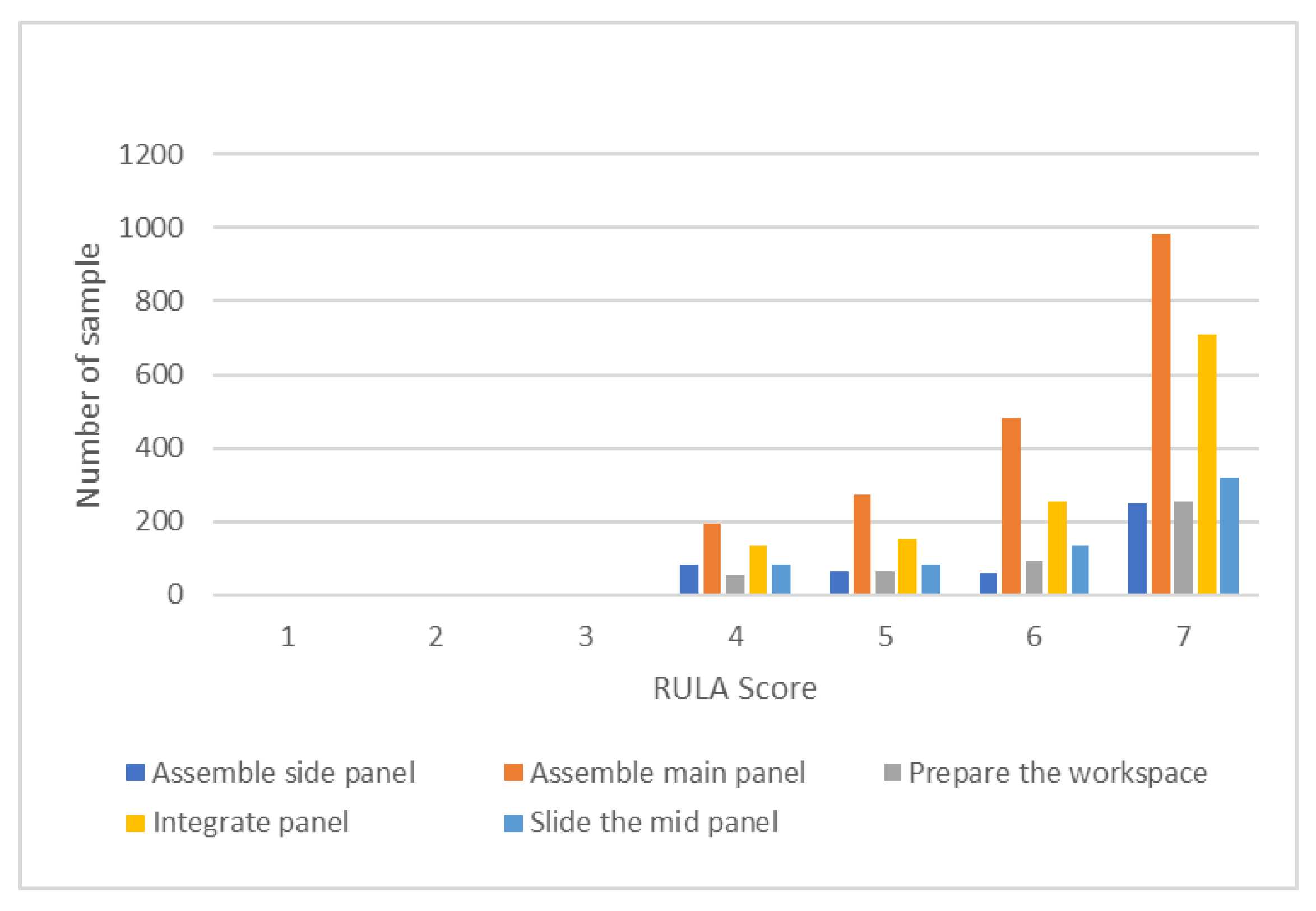

4. Experiment and Results

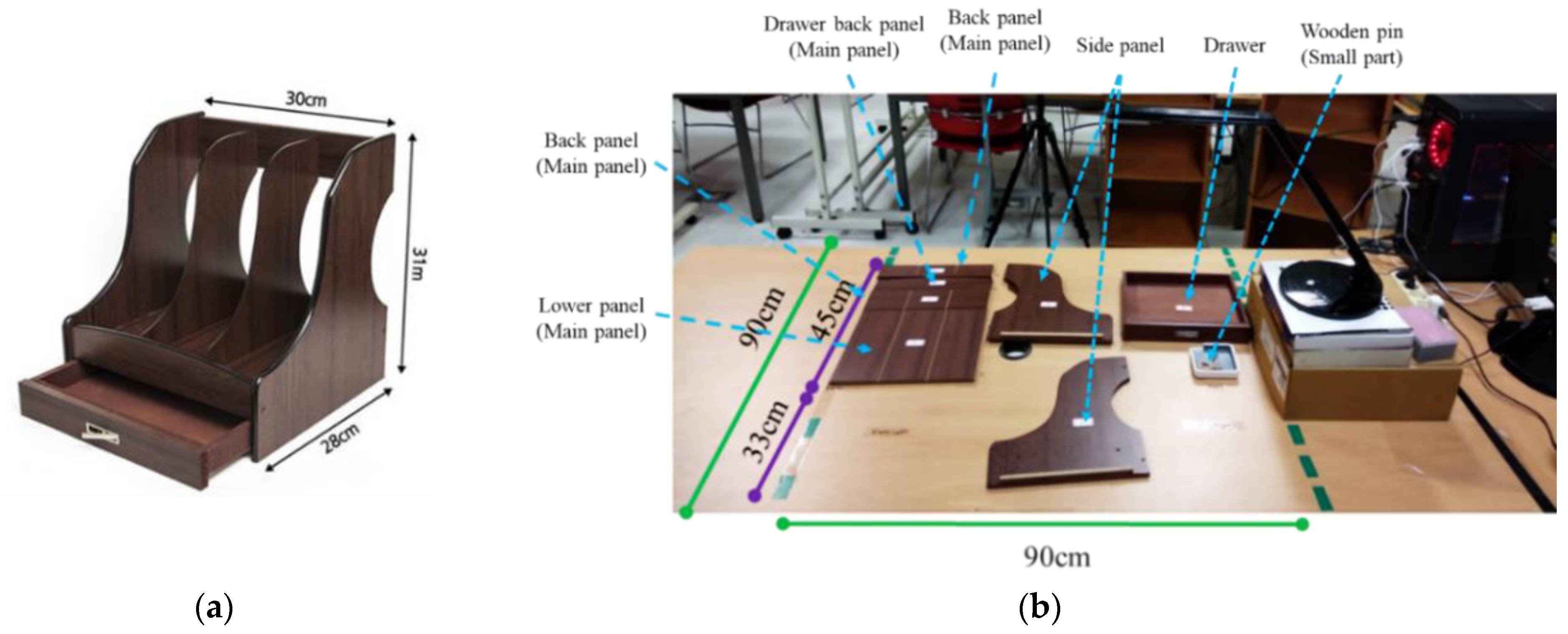

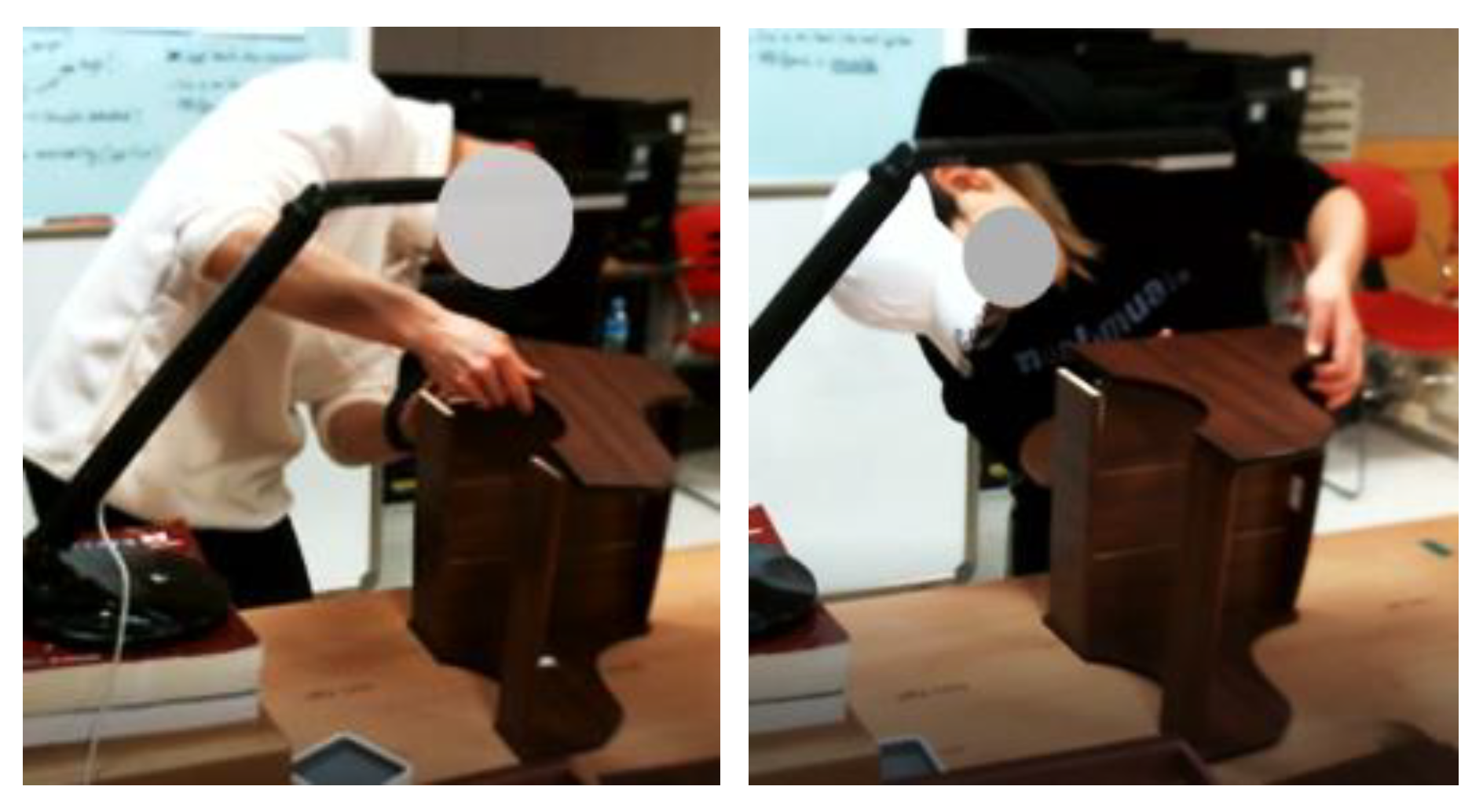

4.1. Laboratory Setup

4.2. Evaluation Results

4.2.1. Similarity Measurement

4.2.2. Personalized Measurement

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

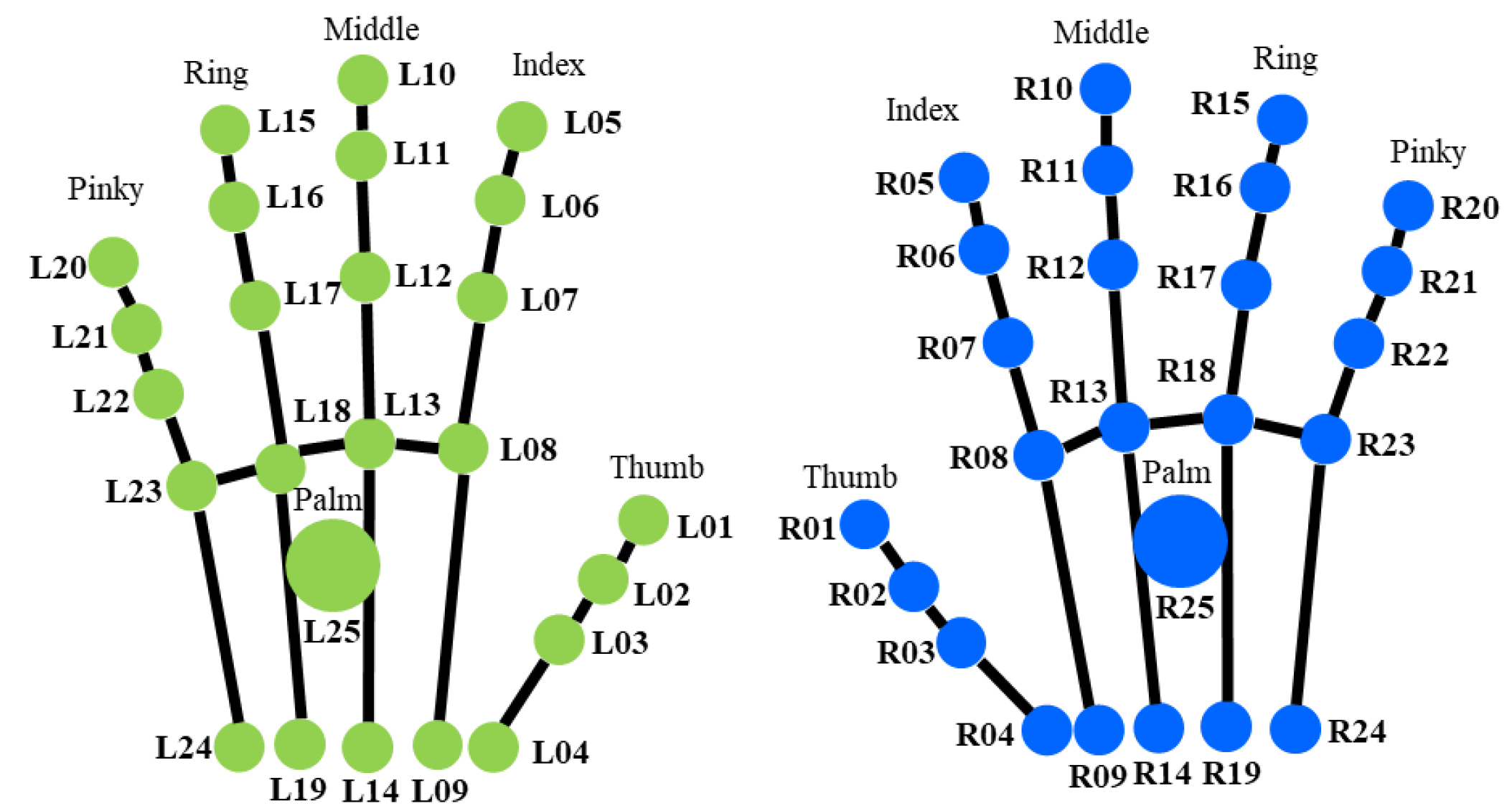

Appendix A

| Joint Label | Joint Name |
|---|---|
| L01–L04 | Left thumb joints |
| L05–L09 | Left index finger joints |
| L10–L14 | Left middle finger joints |
| L15–L19 | Left ring finger joints |
| L20–L24 | Left pinky finger joints |
| L25 | Left palm joint |
| R01–R04 | Right thumb joints |
| R05–R09 | Right index finger joints |
| R10–R14 | Right middle finger joints |
| R15–R19 | Right ring finger joints |
| R20–R24 | Right pinky finger joints |
| R25 | Right palm joint |

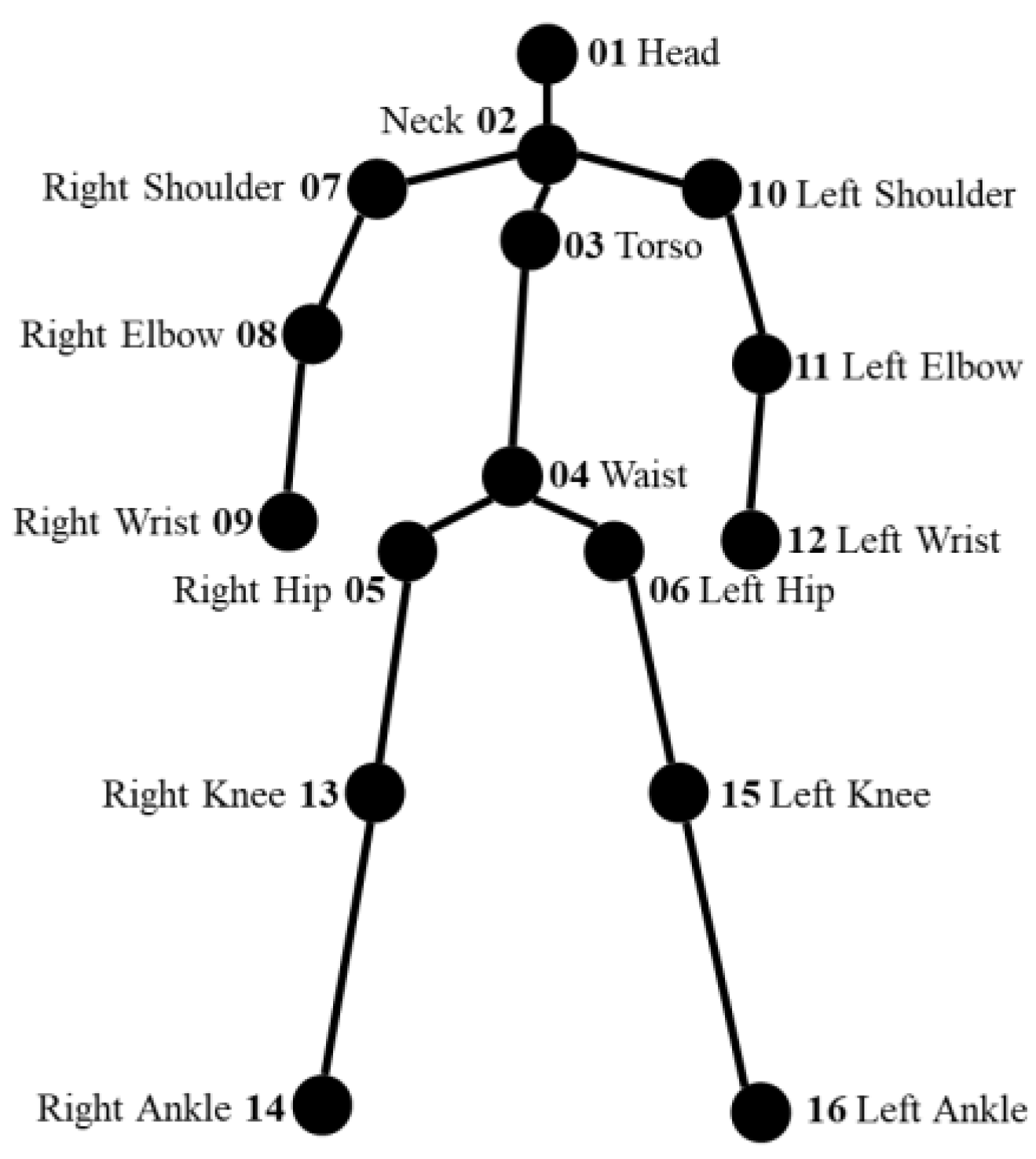

| Joint Label | Joint Name |
|---|---|
| 01 | Head |
| 02 | Neck |
| 03 | Torso |
| 04 | Waist |
| 05 | Right hip |
| 06 | Left hip |
| 07 | Right shoulder |
| 08 | Right elbow |
| 09 | Right wrist |
| 10 | Left shoulder |
| 11 | Left elbow |
| 12 | Left wrist |
| 13 | Right knee |
| 14 | Right ankle |
| 15 | Left knee |
| 16 | Left ankle |

| Body Section | All Data (Seconds) | Per Pose (Seconds) |
|---|---|---|
| Upper arm | 378.3397958 | 0.00696489 |
| Lower arm | 430.0206666 | 0.007916288 |
| Wrist | 461.6637599 | 0.008498808 |
| Neck | 322.8410137 | 0.005943208 |
| Trunk | 297.4668169 | 0.005476092 |
| Section A (Left) | 70.83219051 | 0.001303956 |
| Section A (Right) | 63.70874691 | 0.00117282 |
| Section A (Max) | 76.78008223 | 0.001413451 |
| Section B | 64.08647466 | 0.001179773 |
| Section C (Left) | 56.95984936 | 0.001048579 |
| Section C (Right) | 51.78826308 | 0.000953375 |
| Section C (Max) | 69.78941703 | 0.001284759 |
| Total | 2344.277077 | 0.043156 |
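The Total row matches the sum of the section rows in both columns. The check below simply transcribes the computation-time table. It assumes, since the table does not say so, that each per-pose value is the all-data time divided by the number of processed poses. Under that assumption, the ratio of the two totals implies roughly 54,321 poses. The names `TIMES` and `column_totals` are hypothetical.

```python
# Rows of the computation-time table: section -> (all data [s], one pose [s]).
TIMES = {
    "Upper arm": (378.3397958, 0.00696489),
    "Lower arm": (430.0206666, 0.007916288),
    "Wrist": (461.6637599, 0.008498808),
    "Neck": (322.8410137, 0.005943208),
    "Trunk": (297.4668169, 0.005476092),
    "Section A (Left)": (70.83219051, 0.001303956),
    "Section A (Right)": (63.70874691, 0.00117282),
    "Section A (Max)": (76.78008223, 0.001413451),
    "Section B": (64.08647466, 0.001179773),
    "Section C (Left)": (56.95984936, 0.001048579),
    "Section C (Right)": (51.78826308, 0.000953375),
    "Section C (Max)": (69.78941703, 0.001284759),
}


def column_totals(times):
    """Sum the all-data and per-pose columns over every section."""
    total_all = sum(all_data for all_data, _ in times.values())
    total_pose = sum(per_pose for _, per_pose in times.values())
    return total_all, total_pose


total_all, total_pose = column_totals(TIMES)
# Assumption: per-pose time = all-data time / number of poses.
implied_poses = total_all / total_pose
print(f"{total_all:.6f} s, {total_pose:.6f} s, ~{implied_poses:,.0f} poses")
```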

References

1. Humadi, A.; Nazarahari, M.; Ahmad, R.; Rouhani, H. In-field instrumented ergonomic risk assessment: Inertial measurement units versus Kinect V2. Int. J. Ind. Ergon. 2021, 84, 103147.

2. Kee, D. Development and evaluation of the novel postural loading on the entire body assessment. Ergonomics 2021, 64, 1555–1568.

3. Li, L.; Xu, X. A deep learning-based RULA method for working posture assessment. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2019, 63, 1090–1094.

4. Nath, N.D.; Chaspari, T.; Behzadan, A.H. Automated ergonomic risk monitoring using body-mounted sensors and machine learning. Adv. Eng. Inform. 2018, 38, 514–526.

5. Merikh-Nejadasl, A.; El Makrini, I.; Van De Perre, G.; Verstraten, T.; Vanderborght, B. A generic algorithm for computing optimal ergonomic postures during working in an industrial environment. Int. J. Ind. Ergon. 2021, 84, 103145.

6. Wang, J.; Han, S.; Li, X. 3D fuzzy ergonomic analysis for rapid workplace design and modification in construction. Autom. Constr. 2020, 123, 103521.

7. Donisi, L.; Cesarelli, G.; Coccia, A.; Panigazzi, M.; Capodaglio, E.; D’Addio, G. Work-Related Risk Assessment According to the Revised NIOSH Lifting Equation: A Preliminary Study Using a Wearable Inertial Sensor and Machine Learning. Sensors 2021, 21, 2593.

8. Colim, A.; Faria, C.; Braga, A.C.; Sousa, N.; Rocha, L.; Carneiro, P.; Costa, N.; Arezes, P. Towards an Ergonomic Assessment Framework for Industrial Assembly Workstations—A Case Study. Appl. Sci. 2020, 10, 3048.

9. Huang, C.; Kim, W.; Zhang, Y.; Xiong, S. Development and Validation of a Wearable Inertial Sensors-Based Automated System for Assessing Work-Related Musculoskeletal Disorders in the Workspace. Int. J. Environ. Res. Public Health 2020, 17, 6050.

10. Norasi, H.; Tetteh, E.; Money, S.R.; Davila, V.J.; Meltzer, A.J.; Morrow, M.M.; Fortune, E.; Mendes, B.C.; Hallbeck, M.S. Intraoperative posture and workload assessment in vascular surgery. Appl. Ergon. 2020, 92, 103344.

11. Abobakr, A.; Nahavandi, D.; Hossny, M.; Iskander, J.; Attia, M.; Nahavandi, S.; Smets, M. RGB-D ergonomic assessment system of adopted working postures. Appl. Ergon. 2019, 80, 75–88.

12. Chatzis, T.; Konstantinidis, D.; Dimitropoulos, K. Automatic Ergonomic Risk Assessment Using a Variational Deep Network Architecture. Sensors 2022, 22, 6051.

13. Plantard, P.; Shum, H.P.; Le Pierres, A.-S.; Multon, F. Validation of an ergonomic assessment method using Kinect data in real workplace conditions. Appl. Ergon. 2017, 65, 562–569.

14. Ito, H.; Nakamura, S. Rapid prototyping for series of tasks in atypical environment: Robotic system with reliable program-based and flexible learning-based approaches. ROBOMECH J. 2022, 9, 1–14.

15. Li, L.; Martin, T.; Xu, X. A novel vision-based real-time method for evaluating postural risk factors associated with musculoskeletal disorders. Appl. Ergon. 2020, 87, 103138.

16. Massiris Fernández, M.; Fernández, J.; Bajo, J.M.; Delrieux, C.A. Ergonomic risk assessment based on computer vision and machine learning. Comput. Ind. Eng. 2020, 149, 106816.

17. Bao, T.; Zaidi, S.A.R.; Xie, S.; Yang, P.; Zhang, Z.-Q. A CNN-LSTM Hybrid Model for Wrist Kinematics Estimation Using Surface Electromyography. IEEE Trans. Instrum. Meas. 2020, 70, 1–9.

18. Bao, T.; Zaidi, S.A.R.; Xie, S.; Yang, P.; Zhang, Z.-Q. Inter-Subject Domain Adaptation for CNN-Based Wrist Kinematics Estimation Using sEMG. IEEE Trans. Neural Syst. Rehabil. Eng. 2021, 29, 1068–1078.

19. Senjaya, W.F.; Prathama, F.; Setiawan, F.; Prabono, A.G.; Yahya, B.N.; Lee, S.L. Automated RULA for a sequence of activities based on sensor data. In Proceedings of the 2020 Fall Conference of the Korean Society of Industrial Engineering, Seoul, Republic of Korea, 23–25 November 2020.

20. Xie, B.; Liu, H.; Alghofaili, R.; Zhang, Y.; Jiang, Y.; Lobo, F.D.; Li, C.; Li, W.; Huang, H.; Akdere, M.; et al. A Review on Virtual Reality Skill Training Applications. Front. Virtual Real. 2021, 2, 645153.

21. Charles, S.K. It’s All in the Wrist: A Quantitative Characterization of Human Wrist Control; Massachusetts Institute of Technology: Cambridge, MA, USA, 2008.

22. Nweke, H.F.; Teh, Y.W.; Mujtaba, G.; Alo, U.R.; Al-Garadi, M.A. Multi-sensor fusion based on multiple classifier systems for human activity identification. Human-Centric Comput. Inf. Sci. 2019, 9, 1–44.

23. Das, B. Improved work organization to increase the productivity in manual brick manufacturing unit of West Bengal, India. Int. J. Ind. Ergon. 2021, 81, 103040.

24. Bortolini, M.; Gamberi, M.; Pilati, F.; Regattieri, A. Automatic assessment of the ergonomic risk for manual manufacturing and assembly activities through optical motion capture technology. Procedia CIRP 2018, 72, 81–86.

25. Nahavandi, D.; Hossny, M. Skeleton-free RULA ergonomic assessment using Kinect sensors. Intell. Decis. Technol. 2017, 11, 275–284.

26. Tamantini, C.; Cordella, F.; Lauretti, C.; Zollo, L. The WGD—A Dataset of Assembly Line Working Gestures for Ergonomic Analysis and Work-Related Injuries Prevention. Sensors 2021, 21, 7600.

27. Slyper, R.; Hodgins, J.K. Action Capture with Accelerometers. In Proceedings of the 2008 ACM SIGGRAPH/Eurographics Symposium on Computer Animation, Dublin, Ireland, 7–9 July 2008; pp. 193–199.

28. Mousas, C. Full-Body Locomotion Reconstruction of Virtual Characters Using a Single Inertial Measurement Unit. Sensors 2017, 17, 2589.

29. Lawrence, N.D. Gaussian Process Latent Variable Models for Visualisation of High Dimensional Data. In Proceedings of the 16th International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–13 December 2003.

30. Eom, H.; Choi, B.; Noh, J. Data-Driven Reconstruction of Human Locomotion Using a Single Smartphone. Comput. Graph. Forum 2014, 33, 11–19.

31. Brigante, C.M.N.; Abbate, N.; Basile, A.; Faulisi, A.C.; Sessa, S. Towards Miniaturization of a MEMS-Based Wearable Motion Capture System. IEEE Trans. Ind. Electron. 2011, 58, 3234–3241.

32. Doniyorbek, K.; Jung, K. Development of a Semi-Automatic Rapid Entire Body Assessment System using the Open Pose and a Single Working Image. In 2019 Fall Conference of the Korean Society of Industrial Engineering; Springer: New York, NY, USA, 2020; pp. 1503–1517.

33. Manghisi, V.M.; Uva, A.E.; Fiorentino, M.; Bevilacqua, V.; Trotta, G.F.; Monno, G. Real time RULA assessment using Kinect v2 sensor. Appl. Ergon. 2017, 65, 481–491.

34. Mehrizi, R.; Peng, X.; Xu, X.; Zhang, S.; Metaxas, D.; Li, K. A computer vision based method for 3D posture estimation of symmetrical lifting. J. Biomech. 2018, 69, 40–46.

35. Li, X.; Han, S.; Gül, M.; Al-Hussein, M. Automated post-3D visualization ergonomic analysis system for rapid workplace design in modular construction. Autom. Constr. 2018, 98, 160–174.

36. Bartnicka, J.; Zietkiewicz, A.A.; Kowalski, G.J. An ergonomics study on wrist posture when using laparoscopic tools in four techniques in minimally invasive surgery. Int. J. Occup. Saf. Ergon. 2018, 24, 438–449.

37. Sánchez-Margallo, J.A.; González, A.G.; Moruno, L.G.; Gómez-Blanco, J.C.; Pagador, J.B.; Sánchez-Margallo, F.M. Comparative Study of the Use of Different Sizes of an Ergonomic Instrument Handle for Laparoscopic Surgery. Appl. Sci. 2020, 10, 1526.

38. Onyebeke, L.C.; Young, J.G.; Trudeau, M.B.; Dennerlein, J.T. Effects of forearm and palm supports on the upper extremity during computer mouse use. Appl. Ergon. 2014, 45, 564–570.

39. Ma, C.; Weng, X.; Shan, Z. Early Classification of Multivariate Time Series Based on Piecewise Aggregate Approximation. Lect. Notes Comput. Sci. 2017, 10594, 81–88.

40. Middlesworth, M. A Step-by-Step Guide Rapid Upper Limb Assessment (RULA). Ergonomics Plus. 2012. Available online: www.ergo-plus.com (accessed on 19 July 2021).

41. Gellert, W.; Hellwich, M.; Kästner, H.; Küstner, H. (Eds.) VNR Concise Encyclopedia of Mathematics, 2nd ed.; Springer Science & Business Media: New York, NY, USA, 2012.

42. Cassisi, C.; Montalto, P.; Aliotta, M.; Cannata, A.; Pulvirenti, A. Similarity Measures and Dimensionality Reduction Techniques for Time Series Data Mining. In Advances in Data Mining Knowledge Discovery and Applications; IntechOpen: London, UK, 2012; pp. 71–96.

43. Ferguson, D. Therbligs: The Keys to Simplifying Work. The Gilbreth Network. 2000. Available online: http://web.mit.edu/allanmc/www/Therblgs.pdf (accessed on 28 December 2020).

44. Oyekan, J.; Hutabarat, W.; Turner, C.; Arnoult, C.; Tiwari, A. Using Therbligs to embed intelligence in workpieces for digital assistive assembly. J. Ambient Intell. Humaniz. Comput. 2019, 11, 2489–2503.

| Ref. | Data | Body Joints | Finger Joints | Assessment Tools | Wrist Score |
|---|---|---|---|---|---|
| [15] | Image | 17 | - | RULA | Set manually |
| [16] | Image and video | 25 | - | RULA | Set manually |
| [3] | Image | 17 | - | RULA | Set manually |
| [11] | Image | - | - | RULA | Not available |
| [33] | Skeleton | 25 | - | RULA | Set manually |
| [13] | Skeleton | 12 | - | RULA | Set manually |
| [34] | Image | 26 | - | RULA | Not available |
| [32] | Image | 17 | - | REBA | Set manually |
| [35] | 3D Model | 22 | - | RULA and REBA | Not available |
| [10] | Survey and wearable sensors | 4 | - | RULA | Not available |
| [24] | Skeleton | 25 | - | EAWS | Not available |
| [9] | Wearable inertial sensors | 17 | - | RULA and REBA | Not available |
| Ours | Body-tracking sensor and hand-tracking sensor | 16 | 50 | RULA | Calculated by system |

| Score | Risk Level | Action to Be Taken |
|---|---|---|
| 1–2 | Negligible | Acceptable posture if it is not maintained or repeated for long periods |
| 3–4 | Low | Further investigation may be needed, and changes may be required in the future |
| 5–6 | Medium | Investigation and changes are required soon |
| 7 | High | Investigation and changes are required immediately |
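The risk-level table amounts to a lookup on the RULA grand score. The minimal sketch below illustrates that lookup. The function name `rula_risk_level` is hypothetical. Rejecting scores outside 1–7, rather than clamping them, is a design choice made here and is not taken from the source.

```python
def rula_risk_level(grand_score: int) -> str:
    """Map a RULA grand score (1-7) to the risk level in the table above."""
    if not 1 <= grand_score <= 7:
        raise ValueError(f"RULA grand score must be in 1..7, got {grand_score}")
    if grand_score <= 2:
        return "Negligible"
    if grand_score <= 4:
        return "Low"
    if grand_score <= 6:
        return "Medium"
    return "High"


for score in (1, 3, 5, 7):
    print(score, rula_risk_level(score))
```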

| Wrist Posture | | Wrist Kinematics | Side | Formula | Score |
|---|---|---|---|---|---|
| Wrist | Wrist position | Flexion–extension | Right | R13, R14 | +1 (0°); +2 (15° up, 15° down); +3 (>15° up, >15° down) |
| | | | Left | L13, L14 | |
| | Wrist is bent from midline | Radial–ulnar deviation | Right | R05, R04, R24 | +1 (15° left, 15° right) |
| | | | Left | L05, L04, L24 | |
| Wrist Twist | Wrist is twisted in mid-range | Pronation–supination | Right | , | +1 (75°, 105°) |
| | | | Left | , | |
| | Wrist is at or near the end of the range | Range of pronation–supination | Right | , | +2 (105°, 165°) |
| | | | Left | , | |
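The wrist table turns angles into RULA increments. The sketch below reads it literally and assumes the angles have already been measured. Three points are interpretive assumptions: "+1 (15° left, 15° right)" is taken to mean a deviation beyond 15°; twist angles outside the mid-range interval, including those below 75°, count as end of range; and `neutral_tol_deg` is a hypothetical tolerance for "0°". The function names are also hypothetical.

```python
def wrist_position_score(flexion_deg: float, deviation_deg: float,
                         neutral_tol_deg: float = 0.0) -> int:
    """Wrist position score following the wrist table.

    flexion_deg: flexion (+) or extension (-) angle in degrees.
    deviation_deg: radial-ulnar deviation in degrees; only its magnitude is used.
    neutral_tol_deg: hypothetical tolerance for treating the wrist as neutral (0 deg).
    """
    magnitude = abs(flexion_deg)
    if magnitude <= neutral_tol_deg:
        score = 1  # neutral wrist: 0 degrees
    elif magnitude <= 15.0:
        score = 2  # up to 15 degrees up or down
    else:
        score = 3  # more than 15 degrees up or down
    # Assumption: "+1 (15 deg left, 15 deg right)" means deviation beyond 15 degrees.
    if abs(deviation_deg) > 15.0:
        score += 1  # wrist is bent from midline
    return score


def wrist_twist_score(pronation_supination_deg: float) -> int:
    """Wrist twist score: +1 for mid-range (75, 105], otherwise +2."""
    # Assumption: any angle outside the mid-range interval, including angles
    # below 75 degrees, is treated as at or near the end of the range.
    if 75.0 < pronation_supination_deg <= 105.0:
        return 1
    return 2


print(wrist_position_score(10.0, 20.0), wrist_twist_score(120.0))
```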

| Body Region | | Side | Formula | Score |
|---|---|---|---|---|
| Upper Arm | Upper arm position | Right | 08, 07, 05 | +1 (−20°, 20°); +2 (−∞, −20°); +2 (20°, 45°); +3 (45°, 90°); +4 (90°, ∞) |
| | | Left | 11, 10, 06 | |
| | Shoulder is raised | Right | 04, 02, 07 | +1 (90°, ∞) |
| | | Left | 04, 19, 02 | |
| | Upper arm is abducted | Right | 02, 07, 08 | +1 (20°, ∞) |
| | | Left | 02, 10, 11 | |
| Lower Arm | Lower arm position | Right | 09, 08, 07 | +1 (60°, 100°); +2 (0°, 60°); +2 (100°, ∞) |
| | | Left | 12, 11, 10 | |
| | Arm is working across the midline | Right | 07, 03, 09 | +1 (90°, ∞) |
| | | Left | 10, 03, 12 | |
| | Arm is out to the side of the body | Right | 08, 07, 05 | +1 (30°, ∞) |
| | | Left | 11, 10, 06 | |
| Neck | Neck position | | 01, 02, 04 | +1 (0°, 10°); +2 (10°, 20°); +3 (20°, ∞); +4 (−∞, 0°) |
| | Neck is side bending | | 90 - (10, 02, 01) | +1 (20°, ∞) |
| Trunk | Trunk position | | 180 - (01, 04, [0, 0, 1]) | +1 (0°); +2 (0°, 20°); +3 (20°, 60°); +4 (60°, ∞) |
| | Trunk is twisted | | 04, 06, NV(02, 05, 06) | +1 (20°, ∞) to left and right |
| | Trunk is side bending | Right | 02, 04, 05 | +1 (20°, ∞) |
| | | Left | 02, 04, 06 | |
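The body-region table pairs angle intervals with RULA increments in the same way. The sketch below turns the upper-arm and neck rows into scores. It assumes the angles have already been computed from the listed joints and treats each interval's upper bound as inclusive, since the table does not specify how boundaries are handled. It further assumes that side bending to either side counts, so only the magnitude is used. The function names are hypothetical. RULA adjustments that do not appear in the table, such as the deduction for a supported arm, are omitted.

```python
def upper_arm_score(flexion_deg: float, shoulder_raise_deg: float,
                    abduction_deg: float) -> int:
    """Upper-arm score from the body-region table (angles in degrees)."""
    if -20.0 <= flexion_deg <= 20.0:
        score = 1
    elif flexion_deg < -20.0:
        score = 2  # extension beyond 20 degrees
    elif flexion_deg <= 45.0:
        score = 2
    elif flexion_deg <= 90.0:
        score = 3
    else:
        score = 4
    if shoulder_raise_deg > 90.0:
        score += 1  # shoulder is raised
    if abduction_deg > 20.0:
        score += 1  # upper arm is abducted
    return score


def neck_score(flexion_deg: float, side_bend_deg: float) -> int:
    """Neck score from the body-region table (angles in degrees)."""
    if flexion_deg < 0.0:
        score = 4  # neck in extension
    elif flexion_deg <= 10.0:
        score = 1
    elif flexion_deg <= 20.0:
        score = 2
    else:
        score = 3
    # Assumption: side bending to either side counts, so use the magnitude.
    if abs(side_bend_deg) > 20.0:
        score += 1  # neck is side bending
    return score


print(upper_arm_score(60.0, 95.0, 10.0), neck_score(15.0, 25.0))
```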

| High-Level Activity | Upper Arm (Right) | Upper Arm (Left) | Lower Arm (Right) | Lower Arm (Left) | Wrist Position (Right) | Wrist Position (Left) | Wrist Twist (Right) | Wrist Twist (Left) | AVG |
|---|---|---|---|---|---|---|---|---|---|
| Assemble side panel | 0.891 | 0.902 | 0.914 | 0.953 | 0.94 | 0.947 | 0.846 | 0.916 | 0.914 |
| Assemble main panel | 0.87 | 0.873 | 0.853 | 0.861 | 0.941 | 0.942 | 0.971 | 0.837 | 0.894 |
| Prepare the workspace | 0.882 | 0.914 | 0.917 | 0.882 | 0.934 | 0.939 | 0.837 | 0.89 | 0.899 |
| Integrate panel | 0.831 | 0.873 | 0.839 | 0.822 | 0.954 | 0.947 | 0.821 | 0.895 | 0.873 |
| Slide the mid-panel | 0.865 | 0.909 | 0.876 | 0.856 | 0.944 | 0.951 | 0.833 | 0.907 | 0.893 |
| AVG | 0.87 | 0.89 | 0.88 | 0.87 | 0.94 | 0.95 | 0.86 | 0.89 | |

| High-Level Activity | Upper Arm | Lower Arm | Wrist Position | Wrist Twist | AVG |
|---|---|---|---|---|---|
| Assemble side panel | 0.926 | 0.865 | 0.979 | 0.961 | 0.933 |
| Assemble main panel | 0.897 | 0.845 | 0.971 | 0.968 | 0.920 |
| Prepare the workspace | 0.881 | 0.832 | 0.963 | 0.949 | 0.906 |
| Integrate panel | 0.899 | 0.824 | 0.981 | 0.966 | 0.918 |
| Slide the mid-panel | 0.861 | 0.849 | 0.979 | 0.968 | 0.914 |
| AVG | 0.893 | 0.843 | 0.975 | 0.962 | |

| High-Level Activity | Wrist Position (Previous Study [19]) | Wrist Twist (Previous Study [19]) | Wrist Position (This Study) | Wrist Twist (This Study) |
|---|---|---|---|---|
| Assemble side panel | 0.979 | 0.958 | 0.979 | 0.961 |
| Assemble main panel | 0.915 | 0.966 | 0.971 | 0.968 |
| Prepare the workspace | 0.869 | 0.948 | 0.963 | 0.949 |
| Integrate panel | 0.863 | 0.956 | 0.981 | 0.966 |
| Slide the mid-panel | 0.908 | 0.968 | 0.979 | 0.968 |
| AVG | 0.907 | 0.959 | 0.975 | 0.962 |

| High-Level Activity | Neck | Trunk | AVG |
|---|---|---|---|
| Assemble side panel | 0.839 | 0.828 | 0.834 |
| Assemble main panel | 0.819 | 0.805 | 0.812 |
| Prepare the workspace | 0.806 | 0.835 | 0.821 |
| Integrate panel | 0.81 | 0.808 | 0.809 |
| Slide the mid-panel | 0.836 | 0.83 | 0.833 |
| AVG | 0.82 | 0.82 | |

| High-Level Activity | Grand Score (Right) | Grand Score (Left) | Grand Score (General) |
|---|---|---|---|
| Assemble side panel | 0.88 | 0.884 | 0.899 |
| Assemble main panel | 0.878 | 0.881 | 0.898 |
| Prepare the workspace | 0.852 | 0.843 | 0.861 |
| Integrate panel | 0.89 | 0.882 | 0.908 |
| Slide the mid-panel | 0.869 | 0.858 | 0.882 |
| AVG | 0.87 | 0.87 | 0.89 |

| | S1 | S2 | S3 | S4 | S5 | S6 |
|---|---|---|---|---|---|---|
| S1 | 1.0 | 0.74 × 10⁻³ | 1.41 × 10⁻¹⁵ | 2.53 × 10⁻⁷ | 9.77 × 10⁻¹³ | 1.0 |
| S2 | 0.74 × 10⁻³ | 1.0 | 2.32 × 10⁻⁷ | 6.54 × 10⁻² | 0.18 × 10⁻³ | 0.74 × 10⁻³ |
| S3 | 1.41 × 10⁻¹⁵ | 2.32 × 10⁻⁷ | 1.0 | 0.27 × 10⁻³ | 2.48 × 10⁻² | 1.41 × 10⁻¹⁵ |
| S4 | 2.53 × 10⁻⁷ | 6.54 × 10⁻² | 0.27 × 10⁻³ | 1.0 | 6.32 × 10⁻² | 2.53 × 10⁻⁷ |
| S5 | 9.77 × 10⁻¹³ | 0.18 × 10⁻³ | 2.48 × 10⁻² | 6.32 × 10⁻² | 1.0 | 9.77 × 10⁻¹³ |
| S6 | 1.0 | 0.74 × 10⁻³ | 1.41 × 10⁻¹⁵ | 2.53 × 10⁻⁷ | 9.77 × 10⁻¹³ | 1.0 |
| S7 | 8.53 × 10⁻¹ | 0.22 × 10⁻³ | 1.04 × 10⁻¹⁶ | 2.76 × 10⁻⁸ | 4.06 × 10⁻¹⁴ | 8.53 × 10⁻¹ |
| S8 | 4.36 × 10⁻³ | 5.11 × 10⁻¹ | 5.05 × 10⁻⁹ | 0.99 × 10⁻² | 5.55 × 10⁻⁶ | 0.44 × 10⁻² |
| S9 | 6.30 × 10⁻⁸ | 4.57 × 10⁻² | 0.24 × 10⁻³ | 9.24 × 10⁻¹ | 6.87 × 10⁻² | 6.30 × 10⁻⁸ |
| S10 | 9.07 × 10⁻¹ | 0.32 × 10⁻³ | 7.12 × 10⁻¹⁸ | 2.59 × 10⁻⁸ | 1.25 × 10⁻¹⁴ | 9.07 × 10⁻¹ |
| S11 | 9.79 × 10⁻⁸ | 5.49 × 10⁻² | 0.19 × 10⁻³ | 9.48 × 10⁻¹ | 6.90 × 10⁻² | 9.79 × 10⁻⁸ |
| S12 | 1.01 × 10⁻¹ | 8.66 × 10⁻² | 8.12 × 10⁻¹¹ | 0.43 × 10⁻³ | 5.85 × 10⁻⁸ | 1.01 × 10⁻¹ |

| | S7 | S8 | S9 | S10 | S11 | S12 |
|---|---|---|---|---|---|---|
| S1 | 8.53 × 10⁻¹ | 0.44 × 10⁻² | 6.30 × 10⁻⁸ | 9.07 × 10⁻¹ | 9.79 × 10⁻⁸ | 1.01 × 10⁻¹ |
| S2 | 0.22 × 10⁻³ | 5.11 × 10⁻¹ | 0.46 × 10⁻¹ | 0.32 × 10⁻³ | 5.49 × 10⁻² | 0.87 × 10⁻¹ |
| S3 | 1.04 × 10⁻¹⁶ | 5.05 × 10⁻⁹ | 0.24 × 10⁻³ | 7.12 × 10⁻¹⁸ | 0.19 × 10⁻³ | 8.12 × 10⁻¹¹ |
| S4 | 2.76 × 10⁻⁸ | 0.99 × 10⁻² | 9.24 × 10⁻¹ | 2.59 × 10⁻⁸ | 9.48 × 10⁻¹ | 0.43 × 10⁻³ |
| S5 | 4.06 × 10⁻¹⁴ | 5.55 × 10⁻⁶ | 0.69 × 10⁻¹ | 1.25 × 10⁻¹⁴ | 0.69 × 10⁻¹ | 5.85 × 10⁻⁸ |
| S6 | 8.53 × 10⁻¹ | 0.436 × 10⁻² | 6.30 × 10⁻⁸ | 9.07 × 10⁻¹ | 9.79 × 10⁻⁸ | 1.01 × 10⁻¹ |
| S7 | 1.0 | 0.150 × 10⁻² | 5.49 × 10⁻⁹ | 7.45 × 10⁻¹ | 1.48 × 10⁻⁸ | 0.59 × 10⁻¹ |
| S8 | 0.15 × 10⁻² | 1.0 | 0.58 × 10⁻² | 0.25 × 10⁻² | 0.84 × 10⁻² | 2.56 × 10⁻¹ |
| S9 | 5.49 × 10⁻⁹ | 0.58 × 10⁻² | 1.0 | 5.06 × 10⁻⁹ | 9.78 × 10⁻¹ | 0.19 × 10⁻³ |
| S10 | 7.45 × 10⁻¹ | 0.25 × 10⁻² | 5.06 × 10⁻⁹ | 1.0 | 1.07 × 10⁻⁸ | 0.92 × 10⁻¹ |
| S11 | 1.48 × 10⁻⁸ | 0.84 × 10⁻² | 9.78 × 10⁻¹ | 1.06 × 10⁻⁸ | 1.0 | 0.31 × 10⁻³ |
| S12 | 5.87 × 10⁻² | 2.56 × 10⁻¹ | 0.19 × 10⁻³ | 0.91 × 10⁻¹ | 0.31 × 10⁻³ | 1.0 |

| High-Level Activity | Number of Samples |
|---|---|
| Assemble side panel | 458 |
| Assemble main panel | 1936 |
| Integrate panel | 464 |
| Prepare the workspace | 1257 |
| Slide the mid-panel | 614 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Senjaya, W.F.; Yahya, B.N.; Lee, S.-L. Sensor-Based Motion Tracking System Evaluation for RULA in Assembly Task. Sensors 2022, 22, 8898. https://doi.org/10.3390/s22228898


Chicago/Turabian style: Senjaya, Wenny Franciska, Bernardo Nugroho Yahya, and Seok-Lyong Lee. 2022. "Sensor-Based Motion Tracking System Evaluation for RULA in Assembly Task" Sensors 22, no. 22: 8898. https://doi.org/10.3390/s22228898
