A Novel Fuzzy Controller for Visible-Light Camera Using RBF-ANN: Enhanced Positioning and Autofocusing

Abstract

1. Introduction

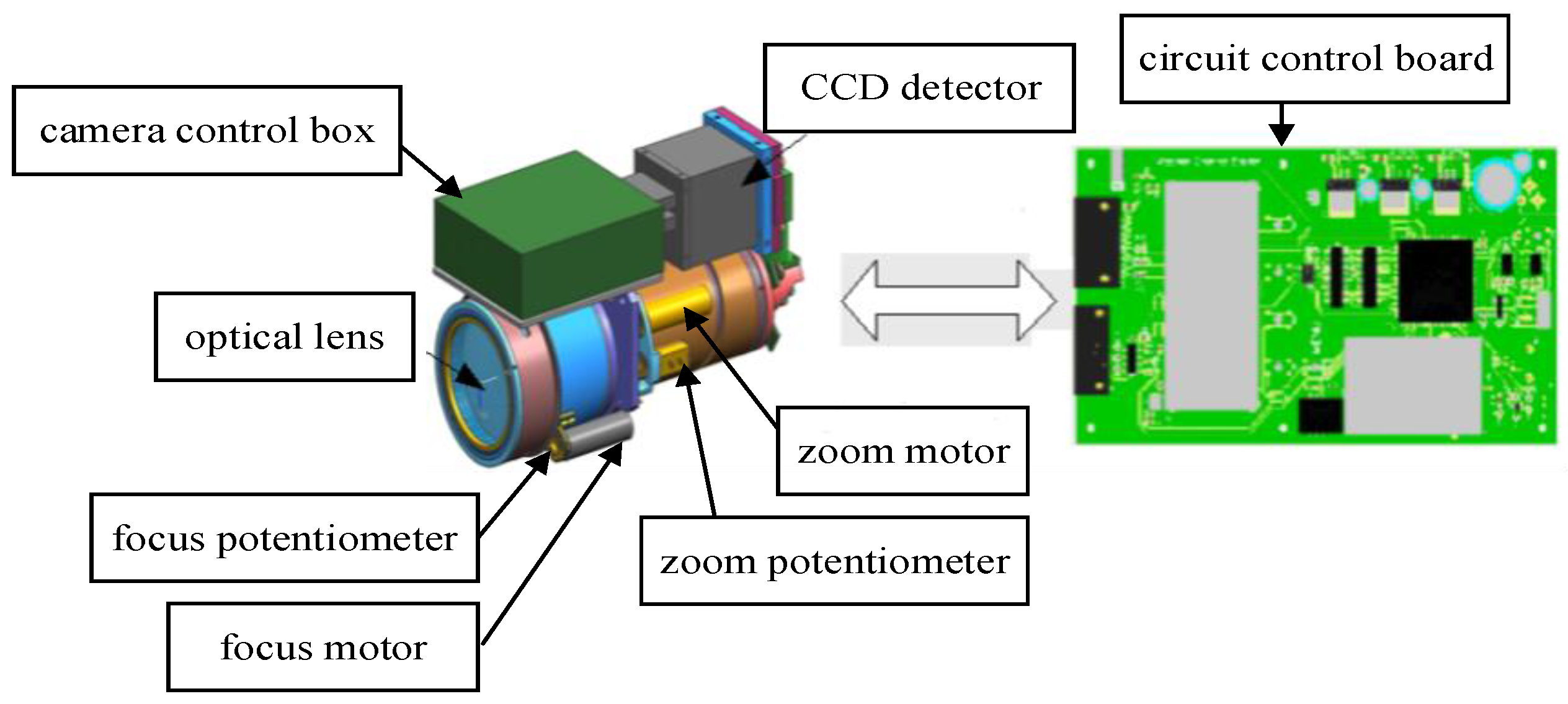

2. Visible-Light Camera System Hardware Structure

3. Visible-Light Camera Control Algorithm

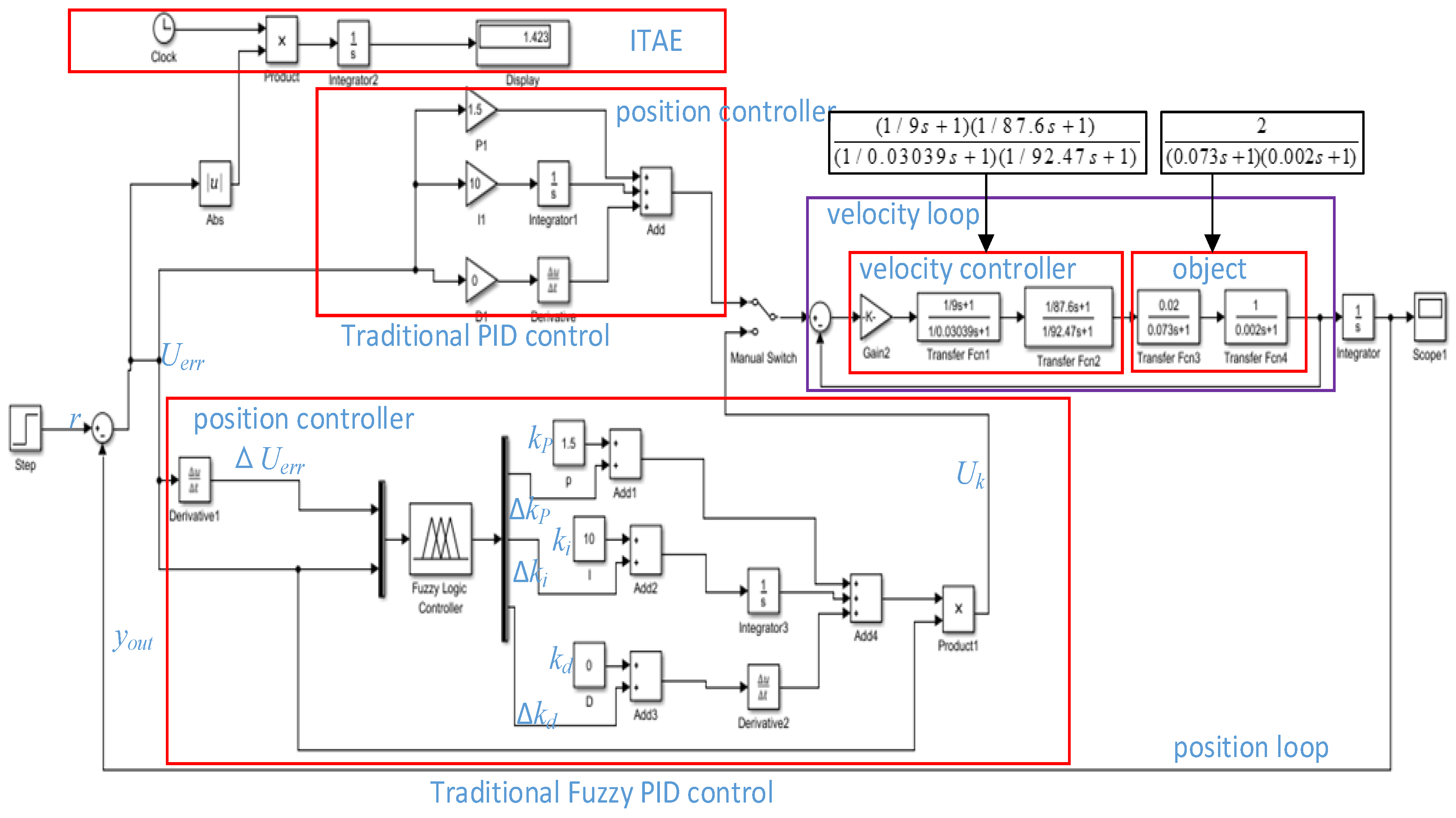

3.1. Traditional Fuzzy PID Control Algorithm

3.2. Improved RBF ANN Fuzzy PID Control Algorithm

3.3. The Focal Length Fitting Algorithm

3.3.1. Camera Focal Length Calibration

3.3.2. Relation of the Focal Length and Zoom Potentiometer Code Values

3.3.3. Levenberg–Marquardt Algorithm Identification Parameters

3.4. Autofocusing Control Algorithm

3.4.1. Improving the Area Search Algorithm

3.4.2. Improving the Autofocusing Algorithm Implementation Process

4. Experimental Results

4.1. Improved RBF ANN Fuzzy Control Experiment

4.2. Continuous Zoom Experiment

4.3. The Focal Length Fitting Experiment

4.4. Autofocusing Experiment

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Parameter | Value |
|---|---|
| Frame frequency | |
| Pixels | |
| Pixel size | |
| Focal length | |
| Aperture | |

| Serial Number | Given Focal Length (mm) | Traditional Fuzzy PID Control Average Error (mm) | Improved RBF ANN Fuzzy PID Control Average Error (mm) |
|---|---|---|---|
| 1 | 25 | 0.04 | 0.02 |
| 2 | 50 | 0.09 | 0.05 |
| 3 | 80 | 0.08 | 0.04 |
| 4 | 100 | 0.07 | 0.03 |
| 5 | 200 | 0.03 | 0.01 |
| 6 | 300 | 0.05 | 0.02 |
| 7 | 400 | 0.04 | 0.02 |
| 8 | 500 | 0.03 | 0.01 |
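The comparison in the table above can be summarized with a little arithmetic. The following is a minimal sketch, not part of the original paper: it transcribes the eight tabulated average errors and computes the mean error of each controller and the relative reduction. The variable names and the choice of an unweighted mean across the eight focal lengths are assumptions made for illustration.

```python
# Average positioning errors (mm), transcribed from the table above.
# Variable names are illustrative and do not come from the paper.
given_focal_mm = [25, 50, 80, 100, 200, 300, 400, 500]
traditional_err_mm = [0.04, 0.09, 0.08, 0.07, 0.03, 0.05, 0.04, 0.03]
improved_err_mm = [0.02, 0.05, 0.04, 0.03, 0.01, 0.02, 0.02, 0.01]


def mean(values):
    """Unweighted arithmetic mean (an assumed summary statistic)."""
    return sum(values) / len(values)


mean_traditional = mean(traditional_err_mm)
mean_improved = mean(improved_err_mm)

# Relative reduction of the mean error achieved by the improved controller.
relative_reduction = 1.0 - mean_improved / mean_traditional

# Per-row relative reduction, to check that every focal length improves.
per_row_reduction = [
    1.0 - improved / traditional
    for traditional, improved in zip(traditional_err_mm, improved_err_mm)
]

print(f"mean traditional error: {mean_traditional:.3f} mm")
print(f"mean improved error:    {mean_improved:.3f} mm")
print(f"relative reduction:     {relative_reduction:.1%}")
for focal, reduction in zip(given_focal_mm, per_row_reduction):
    print(f"  f = {focal:>3} mm: {reduction:.0%} lower error")
```

Under these assumptions, the mean error drops from about 0.054 mm to 0.025 mm, a reduction of roughly 53%, and the improved controller has the lower error at every tabulated focal length. These summary figures are derived from the table only and are not quoted from the paper.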

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhou, J.; Xue, L.; Li, Y.; Cao, L.; Chen, C. A Novel Fuzzy Controller for Visible-Light Camera Using RBF-ANN: Enhanced Positioning and Autofocusing. Sensors 2022, 22, 8657. https://doi.org/10.3390/s22228657
