Performance and Accuracy Comparisons of Classification Methods and Perspective Solutions for UAV-Based Near-Real-Time “Out of the Lab” Data Processing

Abstract

1. Introduction

2. Materials and Methods

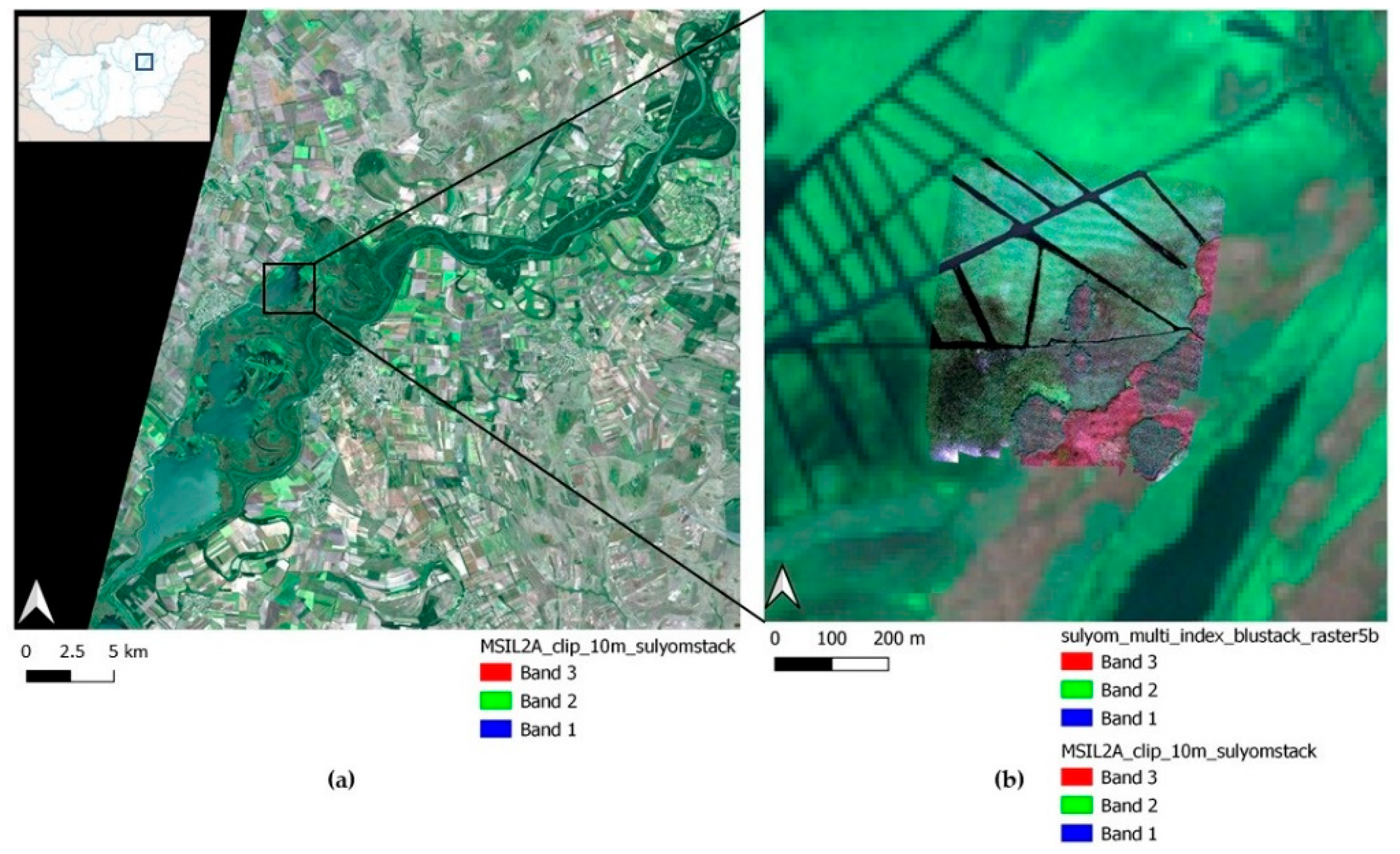

2.1. Study Area

2.2. Processing Workflow and the Examined Algorithms

3. Results

3.1. Running Time

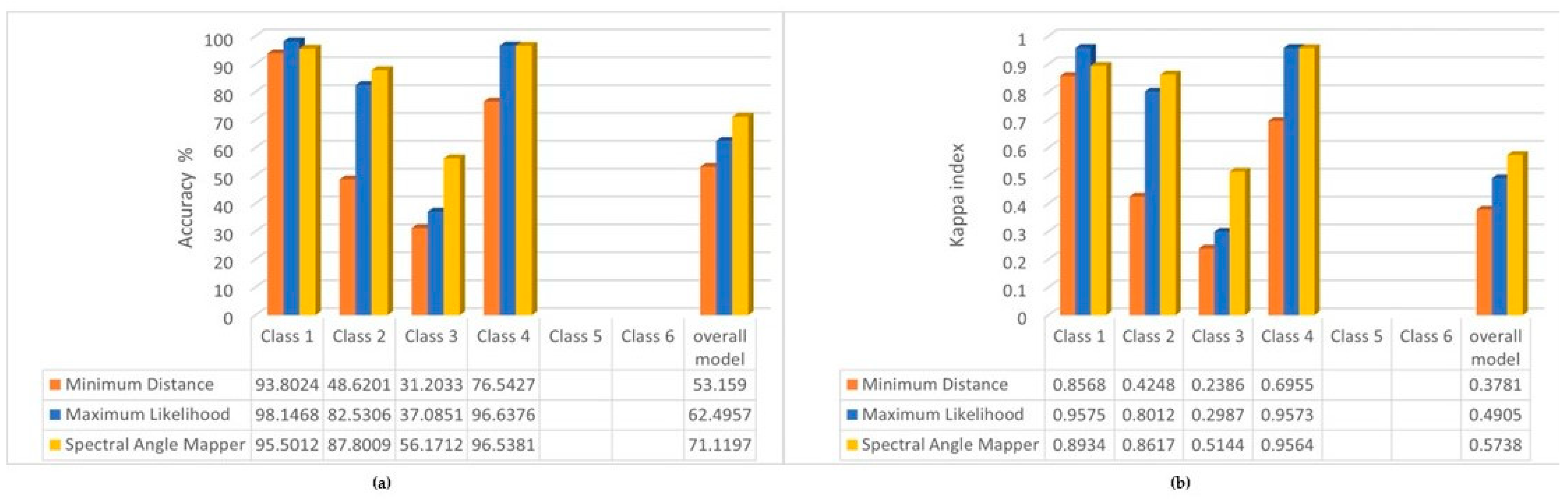

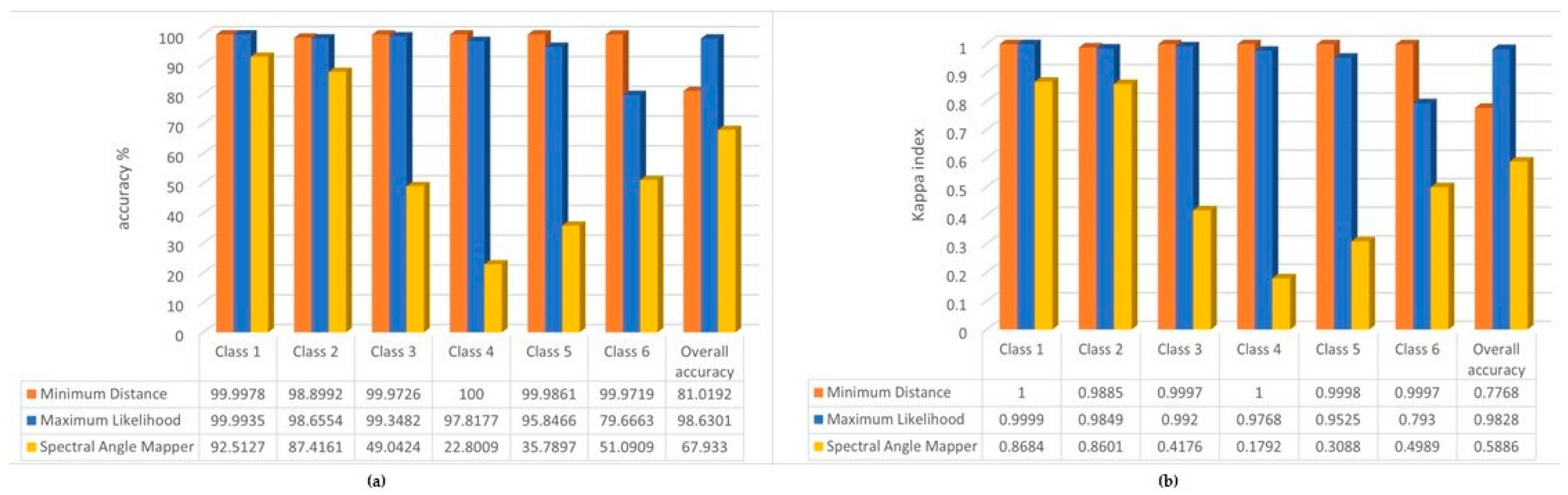

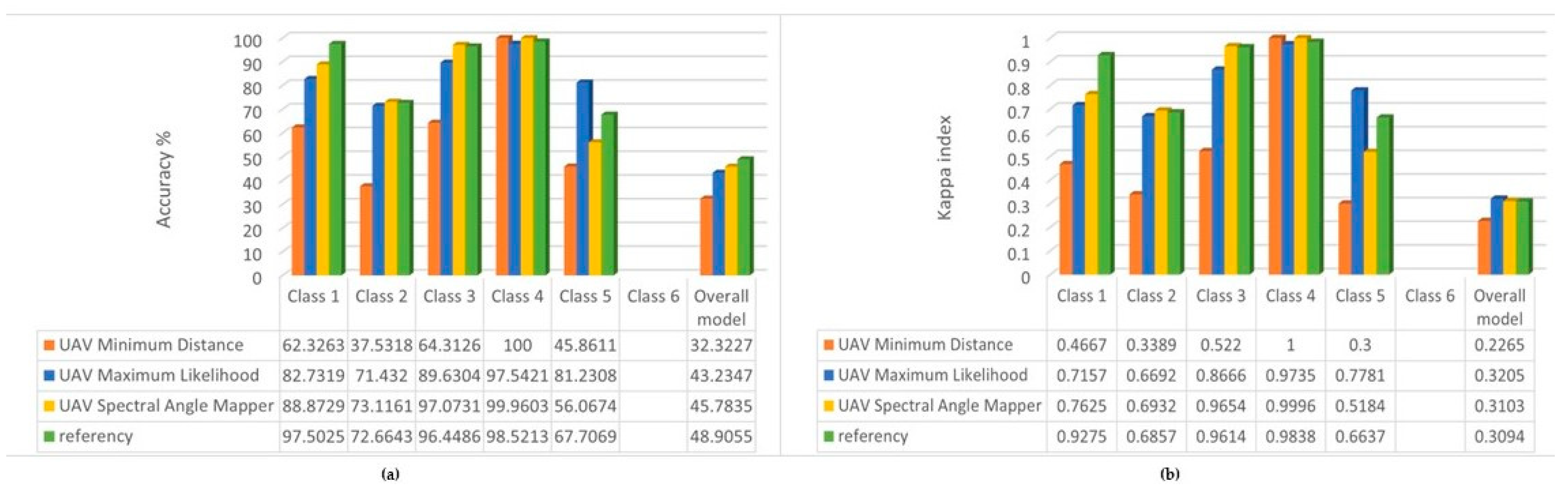

3.2. Accuracy Assessment Results of the Supervised Classification Algorithms

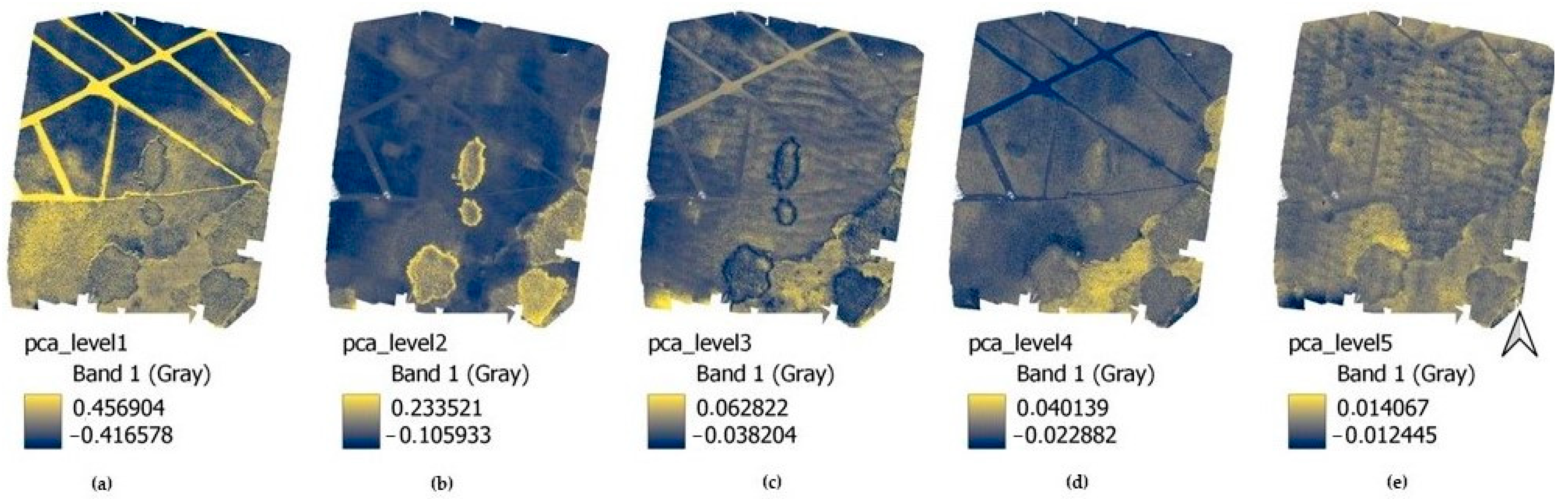

3.3. Results of Principal Component Analysis (PCA)

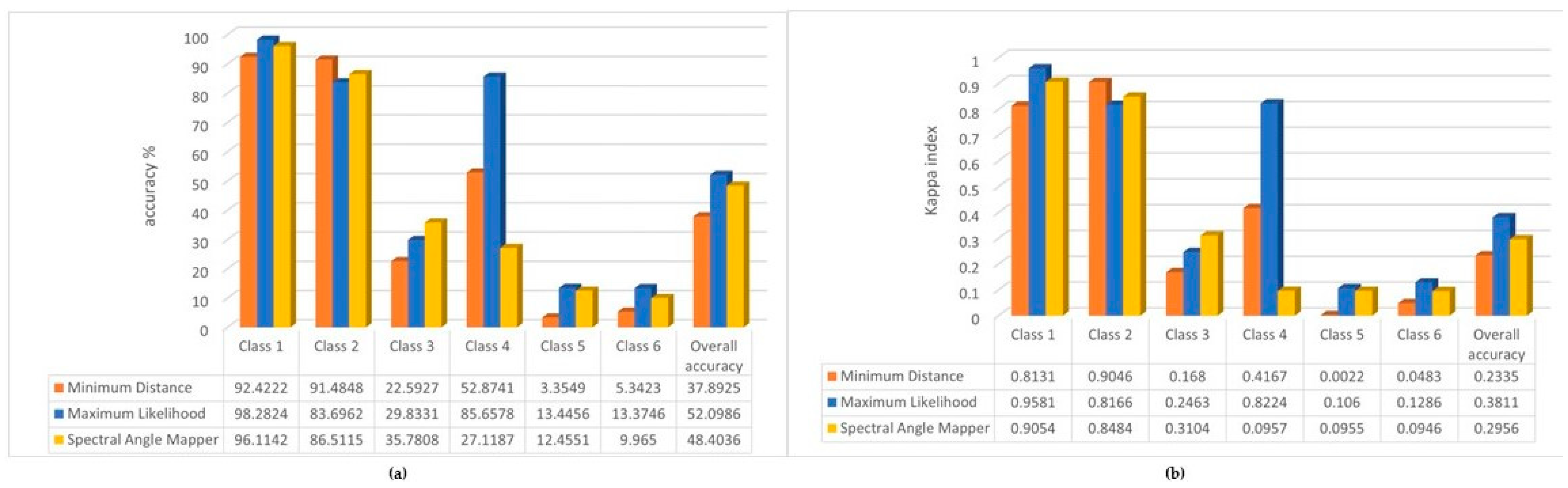

3.4. Results of the Decision Rules

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Category | Classification Method | Running time with 8 GB DRAM (h) | Running time with 4 GB DRAM (h) |
|---|---|---|---|
| Unsupervised classification | Clustering (ISODATA) | 9.7 | >24 |
| Deep learning | Convolutional Neural Networks (CNN) | >24 | - |
| | Object-based classification | >24 | - |
| Machine learning | Random Forest | 7.4 | >24 |
| Machine learning | Support Vector Machine (SVM) | 10.6 | >24 |
| Machine learning | Artificial Neural Networks (ANN) | 10.2 | >24 |
| Supervised classification | Minimum Distance | 1.2 | 6.5 |
| Supervised classification | Maximum Likelihood | 1.2 | 6.5 |
| Supervised classification | Spectral Angle Mapper | 1.2 | 6.5 |
| | PCA | 3.5 | 8.5 |
| | Decision rules | 0.5 | 2 |
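
One way to read the running times above is as a slowdown factor between the two memory configurations. The following is a minimal sketch, not part of the study: the dictionary `running_times_h` and the helper `slowdown_factors` are hypothetical names introduced here for illustration, and the values are copied from the table. Runs reported as ">24 h" or "-" are treated as missing rather than assigned a number, so only methods that finished on both configurations are compared.

```python
# Running times in hours, copied from the table above as (8 GB, 4 GB).
# None marks a run reported as ">24 h" or not attempted ("-").
# The names used here are illustrative assumptions, not from the paper.
running_times_h = {
    "Clustering (ISODATA)": (9.7, None),
    "Convolutional Neural Networks (CNN)": (None, None),
    "Object-based classification": (None, None),
    "Random Forest": (7.4, None),
    "Support Vector Machine (SVM)": (10.6, None),
    "Artificial Neural Networks (ANN)": (10.2, None),
    "Minimum Distance": (1.2, 6.5),
    "Maximum Likelihood": (1.2, 6.5),
    "Spectral Angle Mapper": (1.2, 6.5),
    "PCA": (3.5, 8.5),
    "Decision rules": (0.5, 2.0),
}


def slowdown_factors(times):
    """Return {method: t_4gb / t_8gb}, rounded to two decimals,
    for methods that have a measured time on both configurations."""
    return {
        name: round(t4 / t8, 2)
        for name, (t8, t4) in times.items()
        if t8 is not None and t4 is not None
    }


factors = slowdown_factors(running_times_h)

# List the comparable methods from least to most affected by the smaller memory.
for name, factor in sorted(factors.items(), key=lambda kv: kv[1]):
    print(f"{name}: {factor}x longer with 4 GB DRAM")
```

Leaving the ">24 h" entries out keeps the comparison limited to measured values; substituting 24 for them would only give a lower bound on the slowdown, not an estimate.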

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Varga, Z.; Vörös, F.; Pál, M.; Kovács, B.; Jung, A.; Elek, I. Performance and Accuracy Comparisons of Classification Methods and Perspective Solutions for UAV-Based Near-Real-Time “Out of the Lab” Data Processing. Sensors 2022, 22, 8629. https://doi.org/10.3390/s22228629
