Multi-Currency Integrated Serial Number Recognition Model of Images Acquired by Banknote Counters

Abstract

1. Introduction

- The proposed method is a 1-stage method that simultaneously performs character region detection and classification instead of only character recognition.

- The proposed method achieves state-of-the-art performance on both detection and recognition tasks because the 1-stage object detector is optimized for serial number recognition and the data are augmented according to the characteristics of banknote counters.

- It achieves the highest serial number recognition performance compared with previous methods despite the increase in classes and the additional detection task.

- The recognition model trained on the multi-currency dataset performed better than the recognition models trained on a single-currency dataset each, indicating that the multi-currency dataset improved the generalization performance of the recognition model.

2. Related Work

2.1. Handcrafted Feature Extraction Approach

2.2. Deep Learning-Based Approach

3. Materials and Methods

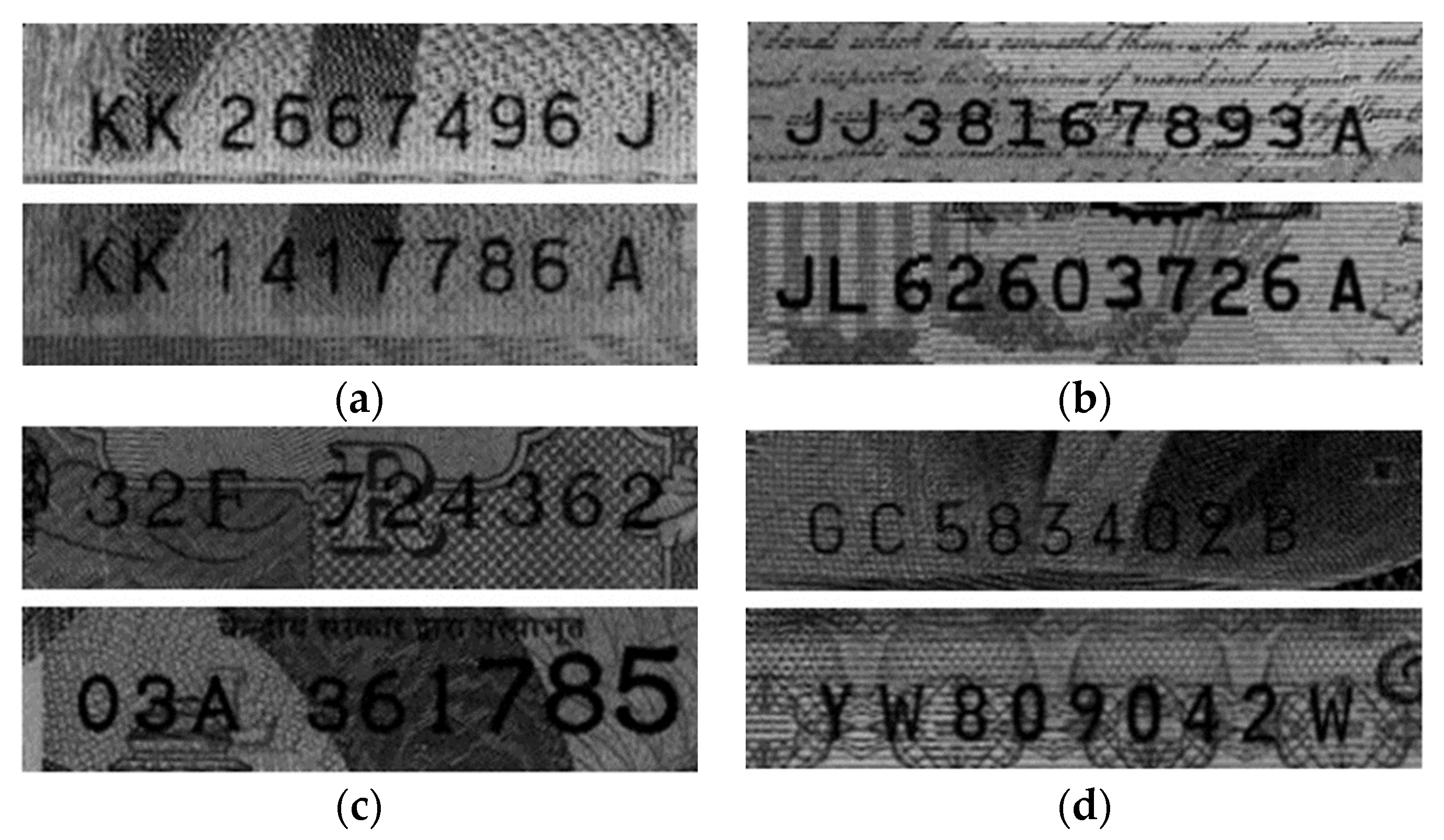

3.1. Data Acquisition and Preprocessing

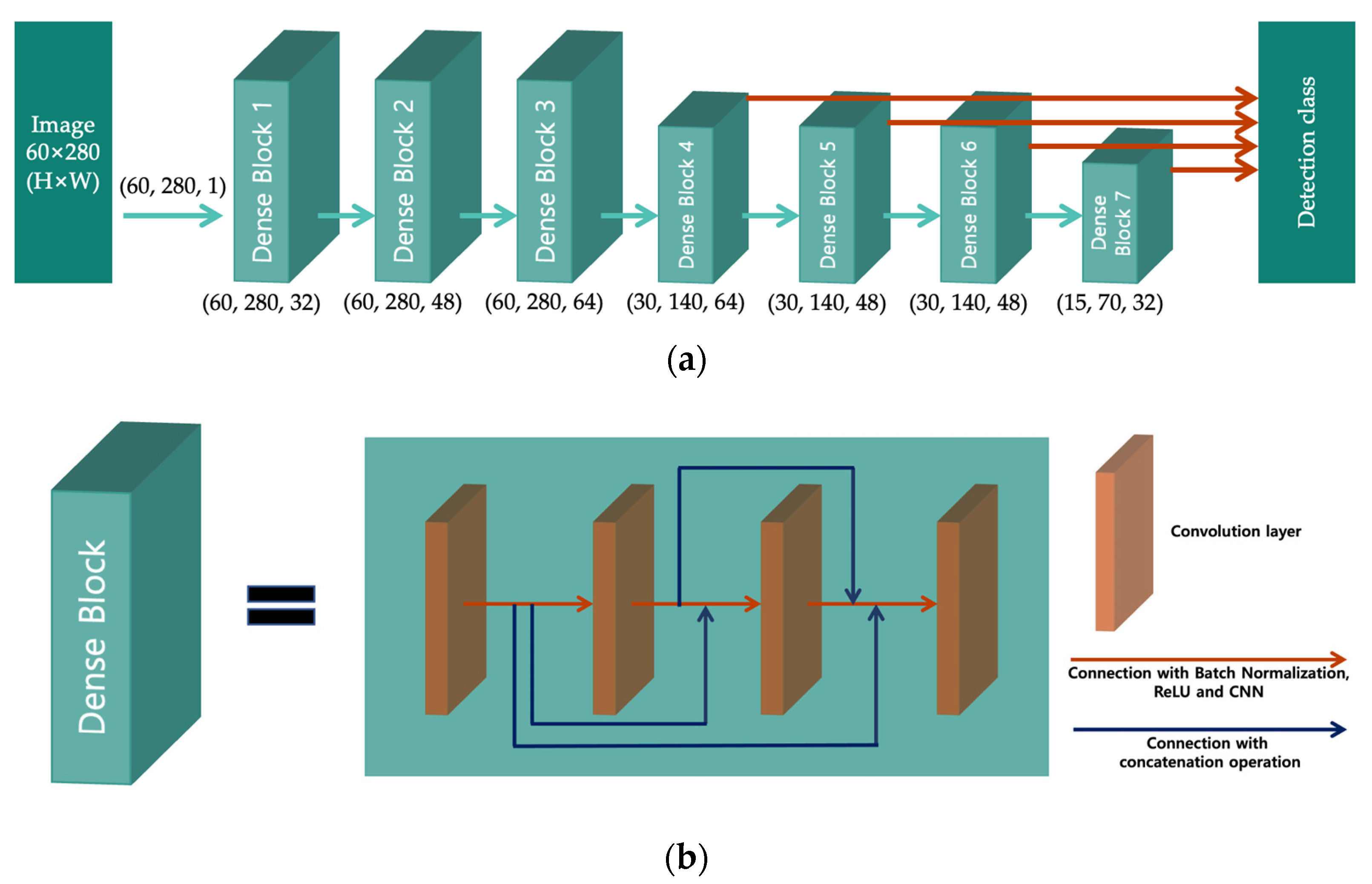

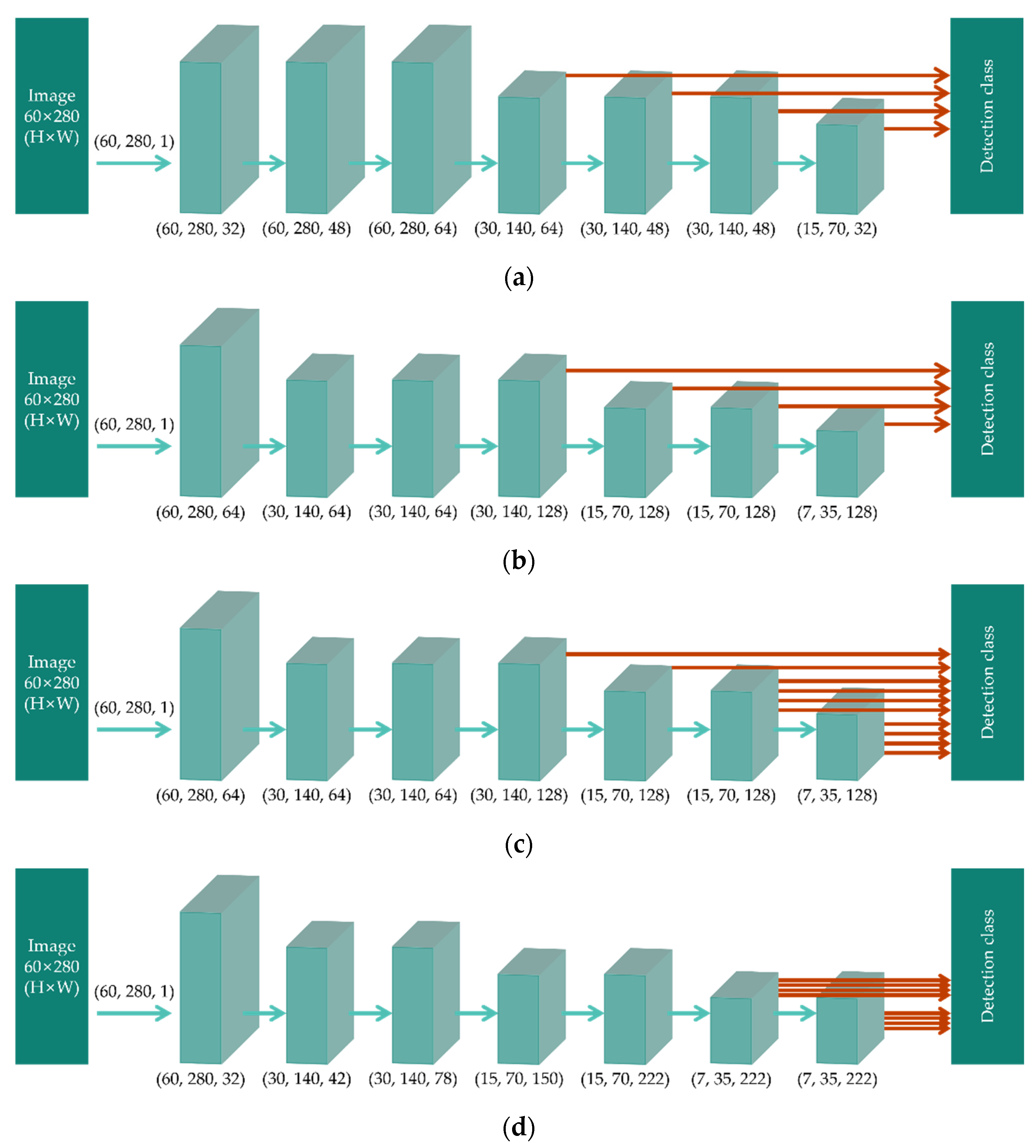

3.2. Model Architecture

4. Experimental Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Kim, E.; Turton, T. The next generation banknote project. Reserve Bank Aust. Bull. 2014, 1–11.

- Wang, H.; Wang, N.; Jiang, Y.; Yang, J. Design of banknote withdrawal system of supermarket automatic change machine. In Proceedings of the 2018 8th International Conference on Applied Science, Engineering and Technology, Qingdao, China, 25–26 March 2018; Atlantis Press: Paris, France, 2018; pp. 130–134.

- Kavale, A.; Shukla, S.; Bramhe, P. Coin counting and sorting machine. In Proceedings of the 2019 9th International Conference on Emerging Trends in Engineering and Technology-Signal and Information Processing, Nagpur, India, 1–2 November 2019; pp. 1–4.

- Cardaci, R.; Burgassi, S.; Golinelli, D.; Nante, N.; Battaglia, M.A.; Bezzini, D.; Messina, G. Automatic vending-machines contamination: A pilot study. Glob. J. Health Sci. 2016, 9, 63.

- Lee, J.W.; Hong, H.G.; Kim, K.W.; Park, K.R. A survey on banknote recognition methods by various sensors. Sensors 2017, 17, 313.

- Bruna, A.; Farinella, G.M.; Guarnera, G.C.; Battiato, S. Forgery detection and value identification of Euro banknotes. Sensors 2013, 13, 2515–2529.

- Panahi, R.; Gholampour, I. Accurate detection and recognition of dirty vehicle plate numbers for high-speed applications. IEEE Trans. Intell. Transp. Syst. 2016, 18, 767–779.

- Goodfellow, I.J.; Bulatov, Y.; Ibarz, J.; Arnoud, S.; Shet, V. Multi-digit number recognition from street view imagery using deep convolutional neural networks. arXiv 2013, arXiv:1312.6082.

- Tele, G.S.; Kathalkar, A.P.; Mahakalkar, S.; Sahoo, B.; Dhamane, V. Detection of fake Indian currency. Int. J. Adv. Res. Ideas Innov. Technol. 2019, 4, 170–176.

- Zhao, T.T.; Zhao, J.Y.; Zheng, R.R.; Zhang, L.L. Study on RMB number recognition based on genetic algorithm artificial neural network. In Proceedings of the 2010 3rd International Congress on Image and Signal Processing, Yantai, China, 16–18 October 2010; Volume 4, pp. 1951–1955.

- Ebrahimzadeh, R.; Jampour, M. Efficient handwritten digit recognition based on histogram of oriented gradients and SVM. Int. J. Comput. Appl. 2014, 104, 10–13.

- Feng, B.Y.; Ren, M.; Zhang, X.Y.; Suen, C.Y. Automatic recognition of serial numbers in bank notes. Pattern Recognit. 2014, 47, 2621–2634.

- Feng, B.Y.; Ren, M.; Zhang, X.Y.; Suen, C.Y. Part-based high accuracy recognition of serial numbers in bank notes. In IAPR Workshop on Artificial Neural Networks in Pattern Recognition; Springer: Berlin/Heidelberg, Germany, 2014; pp. 204–215.

- Tian, S.; Bhattacharya, U.; Lu, S.; Su, B.; Wang, Q.; Wei, X.; Lu, Y.; Tan, C.L. Multilingual scene character recognition with co-occurrence of histogram of oriented gradients. Pattern Recognit. 2016, 51, 125–134.

- Zhou, J.; Wang, F.; Xu, J.; Yan, Y.; Zhu, H. A novel character segmentation method for serial number on banknotes with complex background. J. Ambient Intell. Humaniz. Comput. 2019, 10, 2955–2969.

- Alwzwazy, H.A.; Albehadili, H.M.; Alwan, Y.S.; Islam, N.E. Handwritten digit recognition using convolutional neural networks. Int. J. Innov. Res. Comput. Commun. Eng. 2016, 4, 1101–1106.

- Boufenar, C.; Kerboua, A.; Batouche, M. Investigation on deep learning for off-line handwritten Arabic character recognition. Cogn. Syst. Res. 2018, 50, 180–195.

- OAHCDB-40 30000 Examples. Available online: http://www.mediafire.com/file/wks8xgfs0dmm4db/OAHCDB-40_30000_Exemples.rar/file (accessed on 23 September 2022).

- Zhao, N.; Zhang, Z.; Ouyang, X.; Lv, N.; Zang, Z. The recognition of RMB serial number based on CNN. In Proceedings of the 2018 Chinese Control and Decision Conference (CCDC), Shenyang, China, 9–11 June 2018; pp. 3303–3306.

- Wang, F.; Zhu, H.; Li, W.; Li, K. A hybrid convolution network for serial number recognition on banknotes. Inf. Sci. 2020, 512, 952–963.

- Jang, U.; Suh, K.H.; Lee, E.C. Low-quality banknote serial number recognition based on deep neural network. J. Inf. Process. Syst. 2020, 16, 224–237.

- Namysl, M.; Konya, I. Efficient, lexicon-free OCR using deep learning. In Proceedings of the 2019 International Conference on Document Analysis and Recognition (ICDAR), Sydney, Australia, 20–25 September 2019; pp. 295–301.

- Caldeira, T.; Ciarelli, P.M.; Neto, G.A. Industrial optical character recognition system in printing quality control of hot-rolled coils identification. J. Control Autom. Electr. Syst. 2020, 31, 108–118.

- Gang, S.; Fabrice, N.; Chung, D.; Lee, J. Character recognition of components mounted on printed circuit board using deep learning. Sensors 2021, 21, 2921.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Tan, M.; Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2016; pp. 21–37.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- KISAN ELECTRONICS. Available online: https://kisane.com/ (accessed on 24 September 2022).

- Deng, G.; Cahill, L.W. An adaptive Gaussian filter for noise reduction and edge detection. In Proceedings of the IEEE Conference Record Nuclear Science Symposium and Medical Imaging Conference, San Francisco, CA, USA, 31 October–6 November 1993.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1026–1034.

- Rothe, R.; Guillaumin, M.; Gool, L.V. Non-maximum suppression for object detection by passing messages between windows. In Asian Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2014; pp. 290–306.

| Currency | Training | Validation | Test | Total |
|---|---|---|---|---|
| KRW | 35,000 | 7500 | 7500 | 50,000 |
| USD | 37,724 | 8083 | 8094 | 53,901 |
| INR | 31,500 | 6750 | 6750 | 45,000 |
| JPY | 31,260 | 6695 | 6696 | 44,651 |
| Total | 135,484 | 29,028 | 29,040 | 193,552 |

| Version | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| Accuracy (%) | 97.89 | 99.33 | 99.42 | 99.88 |
| Number of detection errors | 50 | 32 | 30 | 0 |
| Number of classification errors | 108 | 12 | 13 | 9 |
| 1st accuracy (%) | 98.97 | 99.49 | 99.72 | 100 |
| 2nd accuracy (%) | 96.81 | 99.17 | 99.12 | 99.75 |
| Time (ms) | 44 | 42 | 44 | 30 |

| Case | Scale | Aspect Ratio Set |
|---|---|---|
| 1 | [0.41, 0.45, 0.61, 0.65] | [0.81, 0.85, 0.88] |
| 2 | [0.30, 0.39, 0.47, 0.57] | [0.8, 0.9] |
| 3 | [0.33, 0.41, 0.55, 0.62] | [0.5, 0.65, 0.88, 1.0, 1.1, 1.25, 1.4] |
| 4 | [0.28, 0.36, 0.44, 0.52] | [0.5, 0.65, 0.88, 1.0, 1.1, 1.25, 1.4] |
| 5 | [0.28, 0.36, 0.44, 0.52] | [0.5, 0.65, 0.88, 1.0, 1.1, 1.25, 1.4] |
| 6 | [0.28, 0.36, 0.44, 0.52] | [0.5, 0.65, 0.88, 1.0, 1.1, 1.25, 1.4] |
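
The scale and aspect-ratio sets in the table above are the kind of settings a one-stage detector uses to lay out its default (anchor) boxes. As a minimal sketch, assuming the SSD convention of Liu et al. (width = scale × √ratio, height = scale ÷ √ratio, with the ratio read as width over height), the Case 6 values can be expanded into box sizes as follows. The function name `default_box_sizes` is hypothetical, and pairing every scale with every ratio is an assumption made for illustration; the source does not state how the scales are assigned to feature maps.

```python
import math
from itertools import product


def default_box_sizes(scales, aspect_ratios):
    """Return (width, height) pairs, as fractions of the image size,
    for every scale/aspect-ratio combination (SSD-style convention)."""
    sizes = []
    for scale, ratio in product(scales, aspect_ratios):
        root = math.sqrt(ratio)
        # Width grows and height shrinks with the ratio; the area stays scale**2.
        sizes.append((scale * root, scale / root))
    return sizes


# Case 6 settings copied from the table above.
case_6_scales = [0.28, 0.36, 0.44, 0.52]
case_6_ratios = [0.5, 0.65, 0.88, 1.0, 1.1, 1.25, 1.4]

boxes = default_box_sizes(case_6_scales, case_6_ratios)
for width, height in boxes[:7]:  # the seven boxes of the smallest scale
    print(f"w={width:.3f}  h={height:.3f}")
```

Under this convention, ratios below 1.0 give boxes that are taller than they are wide, which would suit upright characters, while the area of each box is fixed by its scale alone.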

| Case | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| Accuracy (%) | 99.76 | 99.81 | 99.86 | 99.95 | 99.96 | 99.97 |
| Number of detection errors | 49 | 38 | 32 | 8 | 6 | 0 |
| Number of classification errors | 19 | 16 | 6 | 6 | 4 | 6 |
| 1st accuracy (%) | 99.89 | 99.92 | 99.93 | 99.95 | 99.95 | 99.97 |
| 2nd accuracy (%) | 99.63 | 99.69 | 99.80 | 99.95 | 99.95 | 99.97 |
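
The accuracy metric is not defined in this excerpt. One reading that is consistent with the six cases above is that an image counts as wrong if it has either a detection error or a classification error, that the test set is the sum of the per-currency test counts in the dataset table (7500 + 8094 + 6750 + 6696 = 29,040), and that the percentage is truncated to two decimals. The sketch below checks that reading. The names `TEST_IMAGES`, `CASE_ERRORS`, and `accuracy_percent` are hypothetical, and it also assumes that no image is counted under both error types.

```python
# Sum of the per-currency test-set sizes (KRW, USD, INR, JPY); assumed evaluation set.
TEST_IMAGES = 7500 + 8094 + 6750 + 6696  # 29,040

# (detection errors, classification errors) for cases 1-6, copied from the table above.
CASE_ERRORS = [(49, 19), (38, 16), (32, 6), (8, 6), (6, 4), (0, 6)]


def accuracy_percent(detection_errors, classification_errors, total=TEST_IMAGES):
    """Assumed metric: percentage of error-free images, truncated to two decimals."""
    correct = total - detection_errors - classification_errors
    # Integer arithmetic avoids floating-point surprises when truncating.
    hundredths_of_percent = correct * 10_000 // total
    return hundredths_of_percent / 100


accuracies = [accuracy_percent(d, c) for d, c in CASE_ERRORS]
print(accuracies)  # [99.76, 99.81, 99.86, 99.95, 99.96, 99.97]
```

Under these assumptions the computed values match the reported accuracy row for all six cases, which suggests, but does not prove, that this is how the figures were derived.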

| Approach | Method | Task | Number of Classes | Detection Accuracy (%) | Classification Accuracy (%) |
|---|---|---|---|---|---|
| Handcrafted feature extraction | Zhao et al. [10] | Classification | 17 | - | 95 |
| Handcrafted feature extraction | Ebrahimzadeh et al. [11] | Classification | 17 | - | 97.25 |
| Handcrafted feature extraction | Feng et al. [13] | Classification | 17 | - | 99.33 |
| Deep learning-based | Alwzwazy et al. [16] | Classification | 17 | - | 95.7 |
| Deep learning-based | Boufenar et al. [17] | Classification | 17 | - | 100 |
| Deep learning-based | Zhao et al. [19] | Classification | 17 | - | 99.99 |
| Deep learning-based | Wang et al. [21] | Classification | 17 | - | 99.89 |
| Deep learning-based | Jang et al. [22] | Classification | 17 | - | 99.92 |
| Deep learning-based | Our method | Detection + Classification | 37 | 100 | 99.97 |

| National Currency | Metrics | Single-Currency Model | Multi-Currency Integrated Model |
|---|---|---|---|
| KRW | Accuracy (%) | 99.88 | 99.94 |
| KRW | Number of detection errors | 0 | 0 |
| KRW | Number of classification errors | 9 | 4 |
| KRW | 1st accuracy (%) | 100 | 99.94 |
| KRW | 2nd accuracy (%) | 99.75 | 99.94 |
| USD | Accuracy (%) | 99.94 | 99.97 |
| USD | Number of detection errors | 5 | 0 |
| USD | Number of classification errors | 0 | 2 |
| USD | 1st accuracy (%) | 99.95 | 99.97 |
| USD | 2nd accuracy (%) | 99.92 | 99.97 |
| INR | Accuracy (%) | 99.88 | 100 |
| INR | Number of detection errors | 5 | 0 |
| INR | Number of classification errors | 3 | 0 |
| INR | 1st accuracy (%) | 99.93 | 100 |
| INR | 2nd accuracy (%) | 99.83 | 100 |
| JPY | Accuracy (%) | 99.80 | 100 |
| JPY | Number of detection errors | 0 | 0 |
| JPY | Number of classification errors | 13 | 0 |
| JPY | 1st accuracy (%) | 99.98 | 100 |
| JPY | 2nd accuracy (%) | 99.62 | 100 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jang, W.; Lee, C.; Jeong, D.S.; Lee, K.; Lee, E.C. Multi-Currency Integrated Serial Number Recognition Model of Images Acquired by Banknote Counters. Sensors 2022, 22, 8612. https://doi.org/10.3390/s22228612
