1. Introduction

Sensors are used in a wide range of fields, such as autonomous driving, robotics, the Internet of Things, medicine, satellites, the military, and surveillance. Advances in sensor technology have led to miniaturization and increased performance. Image and video sensors are primarily used to capture visual information. Although image and video sensors were developed to operate with low latency and low complexity, they are often deployed in environments with low network bandwidth, which limits the quality of the input images and videos. Therefore, various image and video processing methods, such as super-resolution (SR) [1,2,3,4,5,6,7,8], deblurring [9,10,11,12,13], and denoising [14,15,16,17], are used for restoration.

SR aims to generate high-resolution (HR) data from low-resolution (LR) data. Early SR methods were based on pixel-wise interpolation algorithms, such as bicubic, bilinear, and nearest-neighbor interpolation. Although these methods are straightforward and intuitive, they have difficulty reconstructing high-frequency textures in the interpolated HR output.
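To make this limitation concrete, the following is a minimal pure-Python sketch of bilinear upscaling for a grayscale image stored as a list of rows. The function name `bilinear_upscale` and the half-pixel-center coordinate mapping are illustrative choices, not taken from this paper. Every output pixel is a weighted average of at most four input pixels, so no new high-frequency detail can appear.

```python
def bilinear_upscale(img, scale):
    """Upscale a grayscale image (list of rows) by an integer factor
    using bilinear interpolation with half-pixel-center sampling."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h * scale):
        # Map the output pixel center back to input coordinates, clamped to the image.
        sy = min(max((y + 0.5) / scale - 0.5, 0.0), h - 1)
        y0 = int(sy)
        y1 = min(y0 + 1, h - 1)
        fy = sy - y0
        row = []
        for x in range(w * scale):
            sx = min(max((x + 0.5) / scale - 0.5, 0.0), w - 1)
            x0 = int(sx)
            x1 = min(x0 + 1, w - 1)
            fx = sx - x0
            # Interpolate horizontally on two rows, then vertically between them.
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out
```

Upscaling the sharp edge `[[0, 10], [0, 10]]` by 2 yields rows of `[0.0, 2.5, 7.5, 10.0]`: the step becomes a ramp, which is the kind of smoothed texture that learned SR methods aim to avoid.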

With the development of deep learning technologies, image and video SR methods are currently investigated using convolutional neural networks (CNNs) [18] and recurrent neural networks (RNNs) [19]. Although deep learning-based SR methods [20,21,22,23,24,25,26,27,28,29,30,31,32,33] achieve superior performance, their parameter sizes and memory requirements have grown as the networks have evolved. Thus, methods for reducing network complexity have been proposed for sensors in devices with limited memory and computing resources, such as smartphones.

In this paper, we propose a deformable convolution-based alignment network (DCAN) with a lightweight structure, which achieves higher reconstruction quality than previous methods in terms of peak signal-to-noise ratio (PSNR) [34] and structural similarity index measure (SSIM) [35]. Through a variety of ablation studies, we also investigate the trade-off between network complexity and video super-resolution (VSR) performance in optimizing the proposed network. The contributions of this study are summarized as follows:
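PSNR, the main fidelity metric reported here, can be sketched as follows for 8-bit images (peak value 255). The function name `psnr` and the list-of-rows image layout are assumptions for illustration; the color space and border cropping used in this paper's evaluation are not specified in this section, and SSIM is not reproduced.

```python
import math


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio (dB) between two equally sized
    grayscale images given as lists of rows."""
    squared_errors = [
        (r - e) ** 2
        for ref_row, est_row in zip(reference, estimate)
        for r, e in zip(ref_row, est_row)
    ]
    mse = sum(squared_errors) / len(squared_errors)
    if mse == 0:
        return math.inf  # identical images: PSNR is unbounded
    # PSNR = 10 * log10(MAX^2 / MSE)
    return 10.0 * math.log10(max_value ** 2 / mse)
```

Because PSNR is logarithmic in the MSE, a gain of 0.28 dB corresponds to an MSE ratio of 10^(-0.028), or about 0.94, that is, roughly a 6% reduction in mean squared error.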

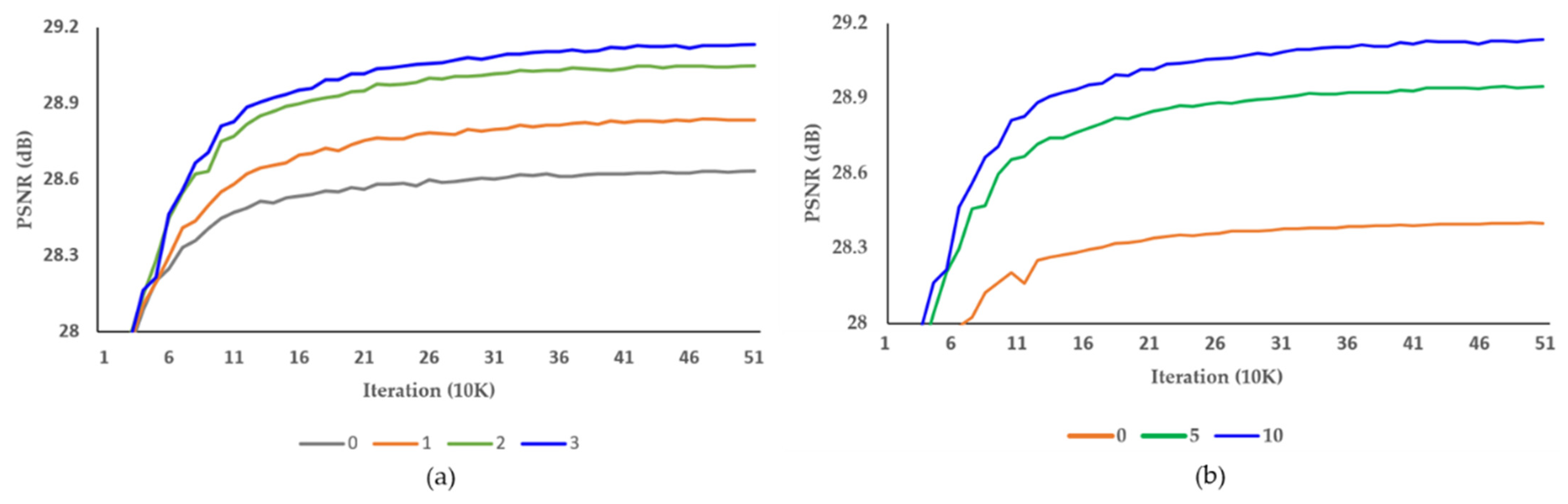

To improve VSR performance, we propose two alignment blocks that combine deformable convolution with attention and with dilation, respectively, namely the attention-based alignment block (AAB) and the dilation-based alignment block (DAB), which align the neighboring input frames in the proposed VSR model. First, AAB extracts features similar to those of the current frame by using spatial and channel attention weights obtained through max and average pooling. Second, DAB learns a wide receptive field over the feature maps by applying dilated convolution.

To optimize our model, we conducted tool-off tests on AAB and DAB, on the number of Resblocks in the alignment block and the up-sampling block, and on the pixel-shuffle layer. First, AAB and DAB increased SR performance by 0.64 dB. Second, the optimal number of Resblocks in the alignment block and the up-sampling block improved SR performance by 0.5 and 0.73 dB, respectively. Third, the model using two pixel-shuffle layers outperformed the model using one layer by 0.01 dB.

Finally, we verified that the proposed network improves PSNR and SSIM by 0.28 dB and 0.015 on average, respectively, compared to the latest method. The proposed method also reduces the number of parameters, total memory size, and inference time by 14.35%, 3.29%, and 8.87%, respectively.

The remainder of this paper is organized as follows: In Section 2, we review the previous CNN-based VSR methods, including the essential network components. In Section 3, we describe the framework of the proposed DCAN. Finally, experimental results and conclusions are presented in Section 4 and Section 5, respectively.

2. Related Works

Although pixel-wise interpolation methods were conventionally used in early SR, it was difficult for them to represent complex textures with high quality in the interpolated SR output. As CNN-based approaches have recently produced convincing results in image and video restoration, CNN-based SR methods can also achieve higher SR accuracy than conventional SR methods.

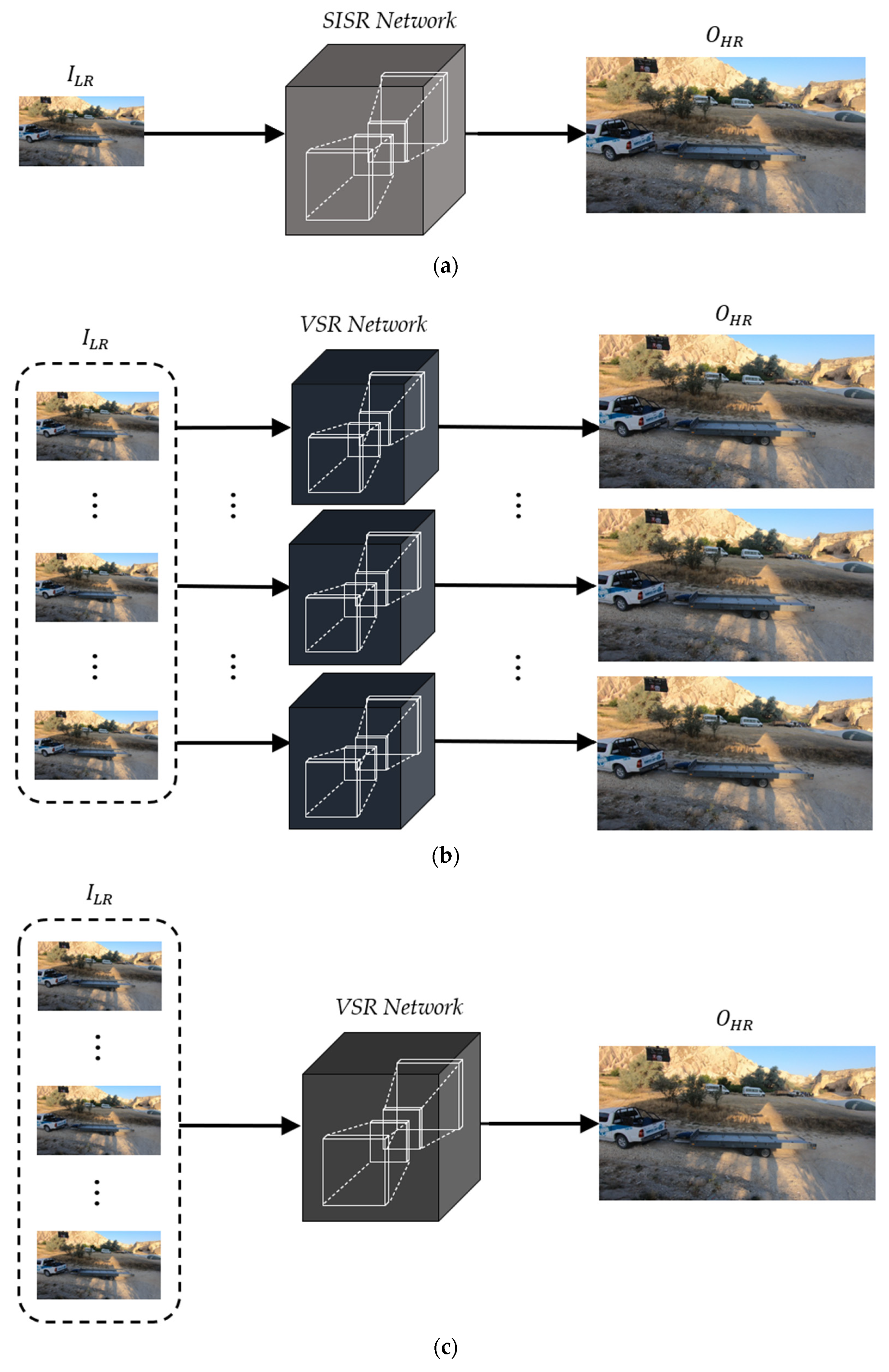

Figure 1 shows CNN-based image and video super-resolution schemes. Figure 1a shows the general architecture of single-image super-resolution (SISR), which generates an HR image from an LR image. On the other hand, most video super-resolution (VSR) methods generate multiple HR frames from the corresponding LR frames, as shown in Figure 1b. Although these approaches can be implemented with simple and intuitive network architectures, they tend to degrade VSR performance because they do not exploit the temporal correlations between consecutive LR frames.

To overcome the limitations of the previous VSR schemes, recent VSR methods have been designed to generate a single HR frame from multiple LR frames, as shown in Figure 1c. Note that the generated HR frame corresponds to the current LR frame. To improve VSR performance in this approach, the neighboring LR frames should be aligned to contain as much context of the current LR frame as possible before CNN operations are conducted at the input feature extraction stage. As one alignment method, optical flow can be applied to each neighboring LR frame to perform pixel-level prediction through two-dimensional (2D) pixel displacement.
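Pixel-level alignment by optical flow is commonly implemented as backward warping: each output pixel samples the neighboring frame at its own position plus the flow vector, with bilinear interpolation for fractional positions. The sketch below assumes a dense flow field given as two per-pixel maps, `flow_x` and `flow_y` (hypothetical names), and clamps out-of-range samples to the border; flow estimation itself is not shown, and this is not presented as the alignment used by any specific method cited here.

```python
def warp_with_flow(frame, flow_x, flow_y):
    """Backward-warp a neighboring frame toward the current frame.

    Output pixel (y, x) samples `frame` at (y + flow_y[y][x], x + flow_x[y][x])
    with bilinear interpolation; positions outside the frame are clamped.
    """
    h, w = len(frame), len(frame[0])

    def sample(sy, sx):
        # Clamp to the valid range, then blend the four surrounding pixels.
        sy = min(max(sy, 0.0), h - 1)
        sx = min(max(sx, 0.0), w - 1)
        y0, x0 = int(sy), int(sx)
        y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
        fy, fx = sy - y0, sx - x0
        top = frame[y0][x0] * (1 - fx) + frame[y0][x1] * fx
        bottom = frame[y1][x0] * (1 - fx) + frame[y1][x1] * fx
        return top * (1 - fy) + bottom * fy

    return [
        [sample(y + flow_y[y][x], x + flow_x[y][x]) for x in range(w)]
        for y in range(h)
    ]
```

For a neighboring frame `[[0, 10, 20, 30]]` whose content has moved one pixel to the left in the current frame, a constant horizontal flow of 1.0 gives `[[10.0, 20.0, 30.0, 30.0]]`; a flow of 0.5 samples halfway between pixels and gives `[[5.0, 15.0, 25.0, 30.0]]`.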

Although this scheme can provide better VSR performance than the conventional VSR schemes shown in Figure 1b, all input LR frames, including the aligned neighboring frames, are generally used with the same weights. This means that the VSR network generates a single HR frame without considering the priorities among them. In addition, the alignment processes generally make VSR networks more complicated, increasing the total memory size and the number of parameters.

The rapid increase in GPU performance has enabled the development of more sophisticated networks with deeper and denser CNN architectures. To design such elaborate networks, several principal techniques are used to extract more accurate feature maps during convolution operations, such as spatial attention [36], channel attention [37], dilated convolution [38], and deformable convolution [39].

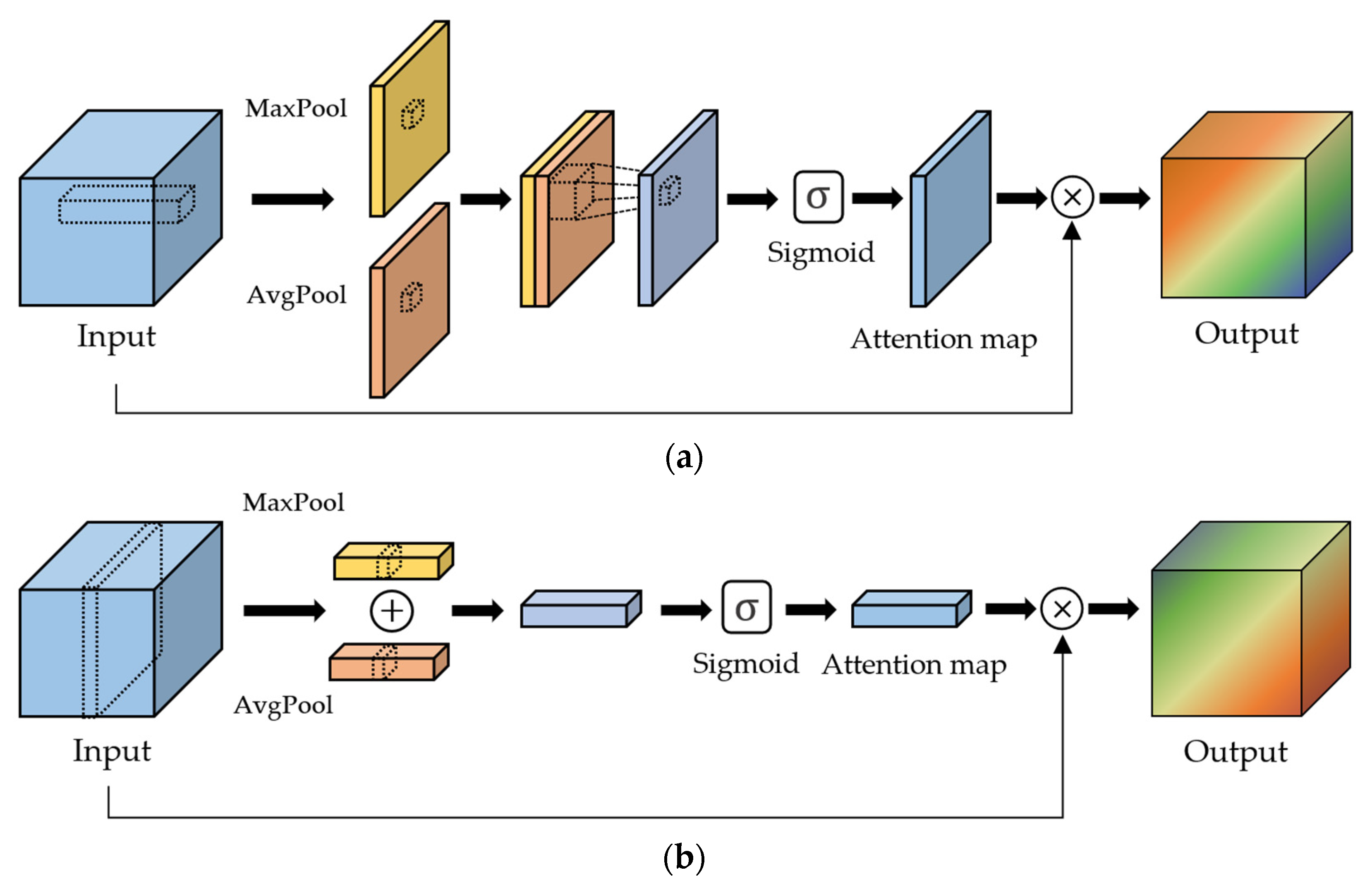

Spatial attention: Spatial attention improves the accuracy of the feature maps. As shown in Figure 2a, it generates a spatial attention map by combining the intermediate feature maps obtained from max and average pooling along the channel axis. Note that the spatial attention map consists of weight values between 0 and 1 produced by the sigmoid function. Then, all features at the same location across the channels of the intermediate feature maps are multiplied by the corresponding weight of the spatial attention map.
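The spatial attention steps can be sketched in pure Python for feature maps stored as a list of channels, each an H × W list of rows. In CBAM [36] the two pooled maps are fused by a learned 7 × 7 convolution; for brevity this sketch assumes a hypothetical 1 × 1 fusion with scalar weights `w_avg`, `w_max`, and `bias`, which a real network would learn.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def spatial_attention(features, w_avg=1.0, w_max=1.0, bias=0.0):
    """CBAM-style spatial attention sketch.

    features: list of C channels, each an H x W list of rows.
    Returns (reweighted features, H x W attention map with values in (0, 1)).
    """
    num_ch = len(features)
    h, w = len(features[0]), len(features[0][0])
    attn = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            column = [features[k][y][x] for k in range(num_ch)]
            avg_pooled = sum(column) / num_ch  # average pooling along channels
            max_pooled = max(column)           # max pooling along channels
            # Hypothetical 1x1 fusion of the two pooled maps (CBAM learns a 7x7 conv).
            attn[y][x] = sigmoid(w_avg * avg_pooled + w_max * max_pooled + bias)
    # All channels at location (y, x) share the same spatial weight.
    out = [
        [[features[k][y][x] * attn[y][x] for x in range(w)] for y in range(h)]
        for k in range(num_ch)
    ]
    return out, attn
```

With two channels `[[0, 2]]` and `[[0, 4]]`, the weight at the first location is sigmoid(0) = 0.5, while the second location, where both channels respond strongly, receives a weight close to 1.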

Channel attention: The aim of channel attention is to assign different priorities to the channels of the feature maps generated by convolution operations. Channel attention was initially proposed by Hu et al. [37] in the squeeze-and-excitation network (SENet). Similar to spatial attention, Woo et al. [36] proposed generating a channel attention map using max and average pooling for each channel, as shown in Figure 2b. Then, each channel of the feature maps is multiplied by the corresponding weight of the channel attention map.
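Channel attention in the CBAM style can be sketched for feature maps stored as a list of channels, each an H × W list of rows. The average- and max-pooled descriptors pass through one shared two-layer MLP with a ReLU, their outputs are summed, and a sigmoid produces one weight per channel. The weight matrices `w1` and `w2` are hypothetical inputs here; in SENet and CBAM they are learned, and the hidden layer is usually narrower than the channel count by a reduction ratio.

```python
import math


def channel_attention(features, w1, w2):
    """CBAM-style channel attention sketch.

    features: list of C channels, each an H x W list of rows.
    w1: hidden x C and w2: C x hidden weights of a shared MLP (hypothetical
    inputs here; learned in SENet/CBAM).
    Returns (reweighted features, list of C channel weights in (0, 1)).
    """

    def shared_mlp(v):
        hidden = [max(0.0, sum(a * b for a, b in zip(row, v))) for row in w1]  # ReLU
        return [sum(a * b for a, b in zip(row, hidden)) for row in w2]

    # Global average and max pooling give one descriptor value per channel.
    avg_desc = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in features]
    max_desc = [max(map(max, ch)) for ch in features]
    # Sum the MLP outputs of both descriptors, then squash with a sigmoid.
    logits = [a + m for a, m in zip(shared_mlp(avg_desc), shared_mlp(max_desc))]
    weights = [1.0 / (1.0 + math.exp(-z)) for z in logits]
    # Every element of channel k is scaled by weights[k].
    out = [[[v * wk for v in row] for row in ch] for ch, wk in zip(features, weights)]
    return out, weights
```

Passing identity matrices for `w1` and `w2` gives a weight of 0.5 to an all-zero channel and about 0.99 to a strongly activated one.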

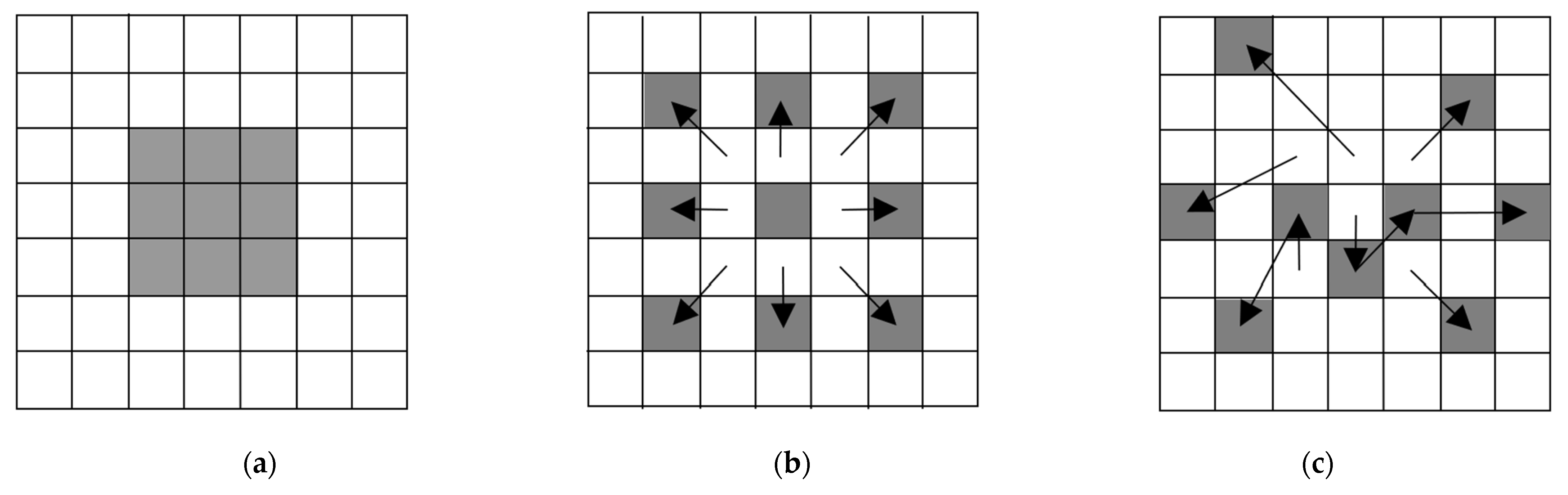

Dilated convolution: Although convolution operations with multiple kernels of different sizes can generally extract better output feature maps, they impose an extra burden, such as an increased number of kernel parameters. Dilated convolution aims to achieve a similar effect to multiple kernels of different sizes while reducing the number of kernel parameters. As shown in Figure 3a, a dilation factor of 1 is equivalent to conventional convolution. On the other hand, with a larger dilation factor, the kernel taps are spaced apart so that the convolution covers a 5 × 5 input feature area, as shown in Figure 3b.
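The effect of a dilation factor d on a k × k kernel can be made concrete: the taps are spaced d pixels apart, so the kernel spans d(k - 1) + 1 pixels per side while still using k² weights. For k = 3 and d = 2 this gives a 5 × 5 span with 9 weights instead of the 25 that an ordinary 5 × 5 kernel would need. The following minimal sketch assumes a single channel, stride 1, and no padding; the function name is illustrative.

```python
def dilated_conv2d(image, kernel, dilation=1):
    """Valid (no padding) 2D cross-correlation with a dilated kernel.

    image, kernel: lists of rows. dilation=1 is ordinary convolution.
    """
    kh, kw = len(kernel), len(kernel[0])
    # Span of the kernel on the input: dilation * (k - 1) + 1 per side.
    span_h = dilation * (kh - 1) + 1
    span_w = dilation * (kw - 1) + 1
    out_h = len(image) - span_h + 1
    out_w = len(image[0]) - span_w + 1
    out = []
    for y in range(out_h):
        row = []
        for x in range(out_w):
            acc = 0
            for i in range(kh):
                for j in range(kw):
                    # Kernel taps are spaced `dilation` pixels apart on the input.
                    acc += kernel[i][j] * image[y + i * dilation][x + j * dilation]
            row.append(acc)
        out.append(row)
    return out
```

On a 5 × 5 input, a 3 × 3 kernel of ones with `dilation=1` produces a 3 × 3 output, whereas `dilation=2` produces a single value that sums the nine pixels on rows and columns 0, 2, and 4.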

Deformable convolution: In neural network-based tasks, motion is adaptively handled through deformable convolution [39], optical flow [40], and motion-attentive [41] methods. To obtain better output features, deformable convolution helps find the input feature that best matches each kernel parameter. Unlike conventional convolution, it generates two feature maps, which indicate the X- and Y-axis offsets that shift the sampling position of each kernel parameter for geometric transformations, as shown in Figure 3c. Although deformable convolution using multiple offsets [42] has recently improved SR performance, the operation tends to be more complicated, with large parameter sizes and high memory consumption.
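The offset mechanism can be sketched for a single output location: each kernel tap samples the input at its regular grid position plus a fractional offset, using bilinear interpolation. In this sketch the offsets are passed in directly as one (dy, dx) pair per tap; in a real layer they are read from the offset maps predicted by a separate convolution. Out-of-image samples count as zero, and the function names are hypothetical.

```python
import math


def bilinear_sample(img, y, x):
    """Bilinearly interpolate img (list of rows) at fractional (y, x);
    neighbors outside the image contribute zero."""
    h, w = len(img), len(img[0])
    y0, x0 = math.floor(y), math.floor(x)
    value = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                weight = (1 - abs(y - yy)) * (1 - abs(x - xx))
                value += weight * img[yy][xx]
    return value


def deformable_conv_at(img, kernel, cy, cx, offsets):
    """Output of a k x k deformable convolution (k odd) centered at (cy, cx).

    offsets[i][j] = (dy, dx) is the offset for kernel tap (i, j); in a real
    layer these come from offset maps predicted by another convolution.
    """
    k = len(kernel)
    r = k // 2
    acc = 0.0
    for i in range(k):
        for j in range(k):
            dy, dx = offsets[i][j]
            # Regular grid position of tap (i, j), shifted by its offset.
            sy = cy + (i - r) + dy
            sx = cx + (j - r) + dx
            acc += kernel[i][j] * bilinear_sample(img, sy, sx)
    return acc
```

With all offsets set to zero, the result equals an ordinary 3 × 3 convolution at that location; shifting only the center tap by (0.5, 0) makes it sample halfway between two rows.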

With these techniques, various VSR networks have been designed to achieve better VSR performance. As the first CNN-based VSR method, Liao et al. [43] proposed the deep draft-ensemble learning (Deep-DE) architecture, which was composed of three convolution layers and a single deconvolution layer. Following Deep-DE, Kappeler et al. [44] proposed a more complicated VSR network (VSRnet), which consists of motion estimation and compensation modules to align the neighboring LR frames and three convolution layers, with the rectified linear unit (ReLU) [45] as the activation function. Caballero et al. [46] developed the video efficient sub-pixel convolution network (VESPCN) to effectively exploit the temporal correlations between the input LR frames. It also adopted a spatial motion compensation transformer module to perform motion estimation and compensation. After feature maps are extracted from the motion-compensated input frames, an output HR frame is generated from them using a sub-pixel convolution layer. Jo et al. [47] proposed dynamic up-sampling filters (DUF), which replace explicit motion estimation with 3D convolutions that generate dynamic filters, combined with residual learning.
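The sub-pixel convolution layer used by VESPCN, like the pixel-shuffle layers evaluated in the tool-off tests, ends with a pure rearrangement: a convolution produces C·r² channels at LR size, which are then interleaved into C channels at r times the spatial size. A minimal sketch of that rearrangement follows; the channel ordering matches PyTorch's `nn.PixelShuffle`, the preceding convolution is omitted, and the function name is illustrative.

```python
def pixel_shuffle(features, r):
    """Rearrange C*r*r channels of size H x W into C channels of size rH x rW.

    Channel c*r*r + i*r + j fills sub-pixel (i, j) of every r x r output block,
    the same ordering as PyTorch's nn.PixelShuffle.
    """
    n = len(features)
    assert n % (r * r) == 0, "channel count must be divisible by r^2"
    c_out = n // (r * r)
    h, w = len(features[0]), len(features[0][0])
    out = [[[0] * (w * r) for _ in range(h * r)] for _ in range(c_out)]
    for c in range(c_out):
        for i in range(r):
            for j in range(r):
                src = features[c * r * r + i * r + j]
                for y in range(h):
                    for x in range(w):
                        # LR pixel (y, x) of this channel lands at HR pixel (y*r+i, x*r+j).
                        out[c][y * r + i][x * r + j] = src[y][x]
    return out
```

Four 1 × 1 channels holding 0, 1, 2, and 3 become a single 2 × 2 block `[[0, 1], [2, 3]]` for r = 2; no parameters are involved, so the up-sampling cost lies entirely in the preceding convolution.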

Isobe et al. [48] developed the temporal group attention (TGA) structure to fuse spatio-temporal information hierarchically through frame-rate-aware groups. It introduced a fast spatial alignment method to handle input LR videos with large motion. Additionally, TGA adopted 3D and 2D dense layers to improve SR accuracy. Because the feature maps generated by previous convolution operations are concatenated with the current feature maps, it demands a large parameter size and memory. The super-resolve optical flows (SOF) network for video super-resolution [49] was composed of an optical flow reconstruction network, a motion compensation module, and an SR network to exploit temporal dependency. Although SOF improved VSR performance by recovering temporal details, this type of approach caused blurring due to excessive motion compensation. In addition, it used down-sampling and up-sampling at each level, which caused a loss of feature map information. Tian et al. [50] proposed a temporally deformable alignment network (TDAN), which was designed with multiple residual blocks and a deformable convolution layer. Because it lacked preprocessing before the deformable convolution operation, it was limited in improving the SR accuracy of the generated HR frame. Wen et al. [51] proposed a spatio-temporal alignment network (STAN), which consists of a filter-adaptive alignment network and an HR image reconstruction network. After the iterative spatio-temporal learning scheme of the filter-adaptive alignment network extracts intermediate feature maps from the input LR frames, a final HR frame is generated by the HR image reconstruction network, which consists of twenty residual channel attention blocks and two up-sampling layers. Although STAN achieved higher VSR performance than previous methods, it is limited in that it repeatedly aligns the features of the current frame using the aligned feature maps of the previous frame. Moreover, because the HR image reconstruction network uses hundreds of convolutions, the number of parameters, memory size, and complexity are significantly increased.

In this study, we designed the proposed method to address the limitations of the previous methods. By learning the aligned current frame together with the neighboring frames, as shown in Figure 4, the proposed method provides superior SR performance and is lightweight compared to the previous methods.

5. Conclusions

With recent advances in sensor technology, image and video sensors have been widely used to capture visual information, and there is growing demand for high-quality, high-resolution images and videos. In this study, we proposed DCAN, which achieves spatio-temporal learning through deformable convolution-based feature map alignment to generate HR video frames from LR video frames. DCAN is composed of FEB, alignment blocks, and an up-sampling block. We evaluated the performance of DCAN by training and testing on the REDS and Vimeo-90K datasets. We performed ablation studies to determine the optimal network architecture with respect to AAB, DAB, and the number of Resblocks. DCAN improved the average PSNR by 0.28, 0.79, 0.92, and 0.81 dB compared to STAN, TDAN, SOF, and TGA, respectively. Compared to STAN, it also reduced the number of parameters, total memory, and inference time by 14.35%, 3.29%, and 8.87%, respectively.

To facilitate the use of sensors in devices with limited memory and computing resources, such as smartphones, methods to reduce network complexity are required. In future work, we aim to develop lightweight networks that can perform VSR in real time.