Abstract

In order to improve the initialization robustness of visual inertial SLAM, the complementarity of the optical flow method and the feature-based method can be used in vision data processing. The parallel initialization method is proposed, where the optical flow inertial initialization and the monocular feature-based initialization are carried out at the same time. After the initializations, the state estimation results are jointly optimized by bundle adjustment. The proposed method retains more mapping information, and correspondingly is more adaptable to the initialization scene. It is found that the initialization map constructed by the proposed method features a comparable accuracy to the one constructed by ORB-SLAM3 in monocular inertial mode. Since the online extrinsic parameter estimation can be realized by the proposed method, it is considered better than ORB-SLAM3 in the aspect of portability. By the experiments performed on the benchmark dataset EuRoC, the effectiveness and robustness of the proposed method are validated.

1. Introduction

In the research field of multi-sensor fusion simultaneous localization and mapping (SLAM), the fusion of vision and inertia has always been a hot research topic in recent years [1,2,3,4,5,6,7,8]. The rich environmental information captured by image can be used for building maps and estimating the up-to-scale trajectory of the camera, which is attached to the mobile platform. The measurements of gyroscope and accelerometer contained in the inertial measurement unit (IMU) sensor can be integrated to estimate accurate short-term rigid body motion. In addition, both the camera and the IMU have matured miniaturized products. These properties make the combination of the monocular vision camera and the IMU economical, effective, and low energy for localization and mapping in small mobile robot or other platforms with limited load.

The state-of-the-art visual-inertial SLAM methods [1,2,3,4,5,6,7,8] presented in the literature widely use the IMU preintegration [9] and the keyframe-based nonlinear optimization methods [6]. However, the performance of these techniques heavily depends on prior precise extrinsic calibration of the six-degree-of-freedom (6-DOF) transformation between the camera and the IMU. As the coordinate conversion parameters between the camera and the IMU, the extrinsic parameters play important roles in accurate data fusion between the camera and the IMU. Incorrect extrinsic calibration leads to system deviation and degrades the overall navigation performance. For the acquisition of extrinsic parameters, most of the visual-inertial SLAM methods [1,2,3,4,5,6,7] use Kalibr to calibrate the extrinsic parameters, which a state-of-the-art marker-based off-line calibration method [10]. Thereafter, the calibrated extrinsic parameters by Kalibr are considered accurate and constant during the running of the whole program. However, this process is complex and time consuming. Additionally, it is usually required to repeat this process when the sensors are repositioned. Therefore, in order to improve the portability and operability of the program, an automatic extrinsic parameter estimation is necessary. At present, the automatic extrinsic parameter calibration [11] has been implemented in a monocular visual-inertial system constructed by one camera and a low-cost IMU (VINS-Mono) [8]. Weibo Huang et al. [12] implemented self-calibration of the camera and the IMU extrinsic parameters in the monocular ORB feature-based SLAM system (ORB-SLAM) framework [1,2]; ORB are binary features invariant to rotation and scale (in a certain range), resulting in a very fast recognizer with good invariance to viewpoint, although this code is not open source.

For visual measurement processing, it can be divided into the optical flow methods [13] and the feature-based methods [14,15,16] according to the matching strategy.

The feature-based methods represented by ORB-SLAM series [1,2,3,4] extract the ORB feature points [14] from the image, then calculate the descriptor of the corresponding feature points, and finally obtain the feature point pairs in two frames through the descriptor comparison. This kind of method features high positioning accuracy, but it is time-consuming and easy to lose tracking in a weak texture environment or due to blurred image in fast motion [17].

The same as feature-based methods, the optical flow methods represented by VINS-Mono also extract feature points from the image. However, the latter ones use image pixel values to match feature points, and eliminate the descriptor calculation of feature points, which is the most time-consuming process in feature-based methods. This characteristic makes the optical flow methods suitable for real-time and resource-constrained occasions, while the accuracy is also guaranteed to a certain extent. Moreover, the robustness to pixel noise of the optical flow methods is better than feature-based methods.

It must be noted that, due to the assumption of photometric invariance [14], the optical flow methods are easily affected by external illumination, which means the feature-based methods feature a better robustness to external illumination than optical flow methods. Hence, a mixed method can be built based on the optical flow and feature-based methods, in order to achieve their robustness complementarity. The optical flow methods complement the feature point method when the image descriptor cannot be accurately calculated due to motion blur. The feature point methods provide better inter-frame pose results to suppress the motion drift caused by the wrong feature matching of the optical flow method.

Although the robustness complementarity has been achieved in the mixed method, the risk of tracking loss in weak illumination and low texture environment cannot be eliminated. Thus, the participation of IMU is very necessary.

The involvement of IMU data needs to go through an initialization process [18,19], which mainly completes the work of finding the gravity direction and correcting the bias of gyroscopes and accelerometers [20]. The initialization of IMU data has been implemented by many algorithms [1,2,3,4,5,6,7,8,9]. Nowadays, most of the state-of-the-art methods use the visual initialization information as a reference to correct the gyroscope and accelerometer bias of IMU [4,5,6,7,8] at the start. Taking VINS-Mono as an example [19], the initialization of the IMU is realized by aligning the IMU preintegration results with the vision-only structure from motion (SfM) results, which can obtain a rough estimation of IMU state vector. Then, the related parameters of the IMU are continuously optimized in the subsequent tracking process. This leads to the fact that the accuracy of VINS-Mono may not be ideal when the map is just initialized. For the monocular mode of ORB-SLAM2 [21], only two keyframes with sufficient matching feature points are needed for initialization, and the combination with IMU is not considered, so the initialization process is faster than that of VINS-Mono, but the map is constructed up-to-scale. Although the open-source code of ORB-SLAM3 [22] adds the mode of monocular camera with IMU, it also includes multi-map management and feature-based relocation and loop closing. The implementation of these functions relies on the continuous increase of feature saving and management with the expansion of the map range, which consumes a large amount of computer memory, making ORB-SLAM3 unable to run in real time on resource-constrained platforms. In addition, the self-calibration of extrinsic parameters is not considered in ORB-SLAM3.

Based on the above analysis, this paper proposes a robust parallel initialization method for monocular visual inertial SLAM, which integrates the characteristics of VINS-Mono and monocular mode of ORB-SLAM2 in the initialization phase. Based on the ORB-SLAM2 initialization algorithm framework and the VINS-Mono initialization algorithm framework, the research work of this paper first executes the monocular initialization thread in ORB-SLAM2 and the monocular inertial initialization process in VINS-Mono in parallel, and then uses the Umeyama algorithm [23] and bundle adjustment (BA) [24] optimization to integrate their initialization data. The result of this method is more robust, and with higher accuracy than the original VINS-Mono initialization. Compared with the original monocular initialization trajectory of ORB-SLAM2, the trajectory obtained by the initialization in this paper is in metric unit instead of up-to-scale, which better describes the real external environment. It also retains the online extrinsic parameter calibration function in VINS-Mono initialization, which improves the portability of the initialization algorithm. In the subsequent experimental comparison with the state-of-the-art ORB-SLAM3 initialization results, it can be found that the accuracy of the map obtained by the two initializations is comparable. The contributions can be summarized as follows:

- The design of two-thread parallel initialization makes SLAM system have better portability and initialization robustness.

- The designed initialization mode improves the initialization accuracy of the original VINS-Mono, which can provide a more accurate initial value of state estimation to the visual inertial SLAM system.

- The initialization state estimated by the proposed method makes the trajectory accurate like the one estimated by ORB-SLAM3 in monocular-inertial mode.

The remainder of this article is arranged as follows: In Section 2, the measurement preprocessing of the IMU and the camera information are described. In Section 3, the detail of the initialization process in the proposed method is introduced. Section 4 presents the experimental results of the improved initialization of monocular visual-inertial SLAM. Finally, the conclusions of this study are presented in Section 5.

2. Measurement Preprocessing

To implement the initialization method proposed in this paper, monocular camera and IMU measurements need to be preprocessed. For monocular visual measurements, we use the combination of the optical flow method and the feature-based method to track features between consecutive frames and detect new features in the latest frame. For IMU measurements, the outputs of IMU are pre-integrated between consecutive frames.

2.1. Vision Preprocessing

The optical flow tracking algorithm and ORB feature-based method are carried out in parallel for each new image. In both of them, keyframes are selected and saved according to the tracking effect. The optical flow method judges keyframes according to the average disparity between the current frame and the previous keyframe. The feature-based method is judged by the number of tracked feature points.

2.1.1. Optical Flow Tracking Algorithm

The main function of the optical flow tracking algorithm in this paper is to match the feature points between successive image frames based on the optical flow method for the feature points extracted from image data, and to get the feature matching relationship between image frames.

Specifically, for each new frame, new corner feature points were detected after existing feature points were tracked by Kanade–Lucas–Tomasi (KLT) sparse optical flow algorithm [25], and this operation is to ensure the number of feature points (100–300) detected in each frame are sufficient. Then, outlier rejection is performed using RANSAC [26] with a fundamental matrix model.

KLT corner tracking algorithm is also known as LK tracking algorithm. It is a classic corner tracking algorithm. The algorithm assumes that the target is in the video stream, only a small consistent displacement is generated, and the gray level of the target does not change much. The detailed description of the LK tracking algorithm is attached in Appendix A.

2.1.2. ORB Feature-Based Method

The feature-based method includes detecting and matching steps of feature points. The form of feature points adopts ORB feature points [14], which include FAST corner extraction and BRIEF descriptor calculation.

The main role of the method based on ORB feature in this paper is to obtain the motion relationship between two frames as well. The method is to calculate the matching relationship of ORB feature points extracted between two frames, and then to establish the initialized map according to the motion relationship and the position information of feature points obtained by the principle of epipolar geometry. The matching relationship is achieved by calculating the Hamming distance of the ORB descriptor of the relevant feature points in the image.

For instance, when the similarity between the descriptor of feature point A in image frame 1 and that of feature point B in image frame 2 is greater than 90%, A and B are considered to be the same feature point, that is, the two points are successfully matched. Since the motion relationship between image frame 1 and image frame 2 is unknown during the initialization process, we usually match all feature points within a certain range of pixel coordinates of feature point A of image 2 to obtain feature point B that matches feature point A in image frame 1.

2.2. IMU Preprocessing

IMU senses the information of acceleration and angular velocity of motion and provides relative positioning information. The combination of IMU and monocular camera can provide an absolute scale reference of the real environment for the SLAM system. The derivation process of preintegration is consistent with subsection B of section IV in VINS-Mono [27].

2.2.1. Model of Output

An IMU measures the rotation rate and the acceleration of the sensors with respect to an inertial frame. The measurements, namely and , are affected by additive white noise and a slowly varying sensor bias . Considering accelerometer bias , gyroscope bias and additive noise, the raw measurements of accelerometer and gyroscope, and , are separately modeled as follows:

The first items on the right side of (1) are the true value of accelerometer and gyroscope, which are all three-axis measurement information, as shown in (3), the second term is the bias, and the last term is the measurement noise. When the accelerometer is stationary, it is affected by the gravity acceleration value in the world coordinate system .

The additive noise in acceleration and gyroscope measurements are assumed as Gaussian white noise, , . Acceleration bias and gyroscope bias are modeled as random walk, , , whose derivatives are Gaussian white noise, , . The t subscript in the corresponding parameter denotes the value of the parameter at time t. represents the rotation matrix from the world coordinate system to the current inertial coordinate system.

2.2.2. IMU Preintegration

For two consecutive frames and , there exists several inertial measurements in time interval . Given the bias estimation, we integrate them in local frame as:

where is the quaternion representation of angular velocity increment, among

According to the output of accelerometer and gyro in Equation (1), , , and are the velocity increment pre-integrated measurements, the position increment pre-integrated measurements, and the rotation amount pre-integrated measurements of the motion between consecutive frames and , respectively. It can be seen that the preintegration term (2) can be solely with IMU measurements by taking as the reference frame given bias. is the rotation matrix from the inertial frame at time t to the inertial frame of .

2.2.3. Bias Correction

Equation (2) is derived basing on the assumption that the bias of gyro and additive table is constant in the integration interval, while in fact the bias changes slowly in the output process of IMU. When the bias changes, if the measurement is still calculated according to Equation (2), the pre-integral measurements need to be recomputed, which is computationally expensive. To solve this problem, if the estimation of bias changes minorly, we adjust , , and by their first-order approximations with respect to the bias as:

where denotes the Jacobian matrix of y versus x; refer to VINS-Mono for specific definitions. At the beginning, and are 0, and is identity quaternion. Moreover, is a quaternion multiplication operator.

3. The Initialization Method Integrates Feature-Based Pose Information, Optical Flow Pose Information and Inertial Information

If we process the results of the optical flow method, the feature-based method, and IMU preintegration at the same time, the amount of data to be optimized is considerable, which requires high computer performance; therefore, we use parallel threads to initialize and a two-step optimization. In order to make full use of the existing open-source programs and reduce the amount of calculation of the programs at the same time, we first initialize the optical flow data and feature-based data separately, and then fuse the two initialization results to complete the combination of visual information as well as the inertial information.

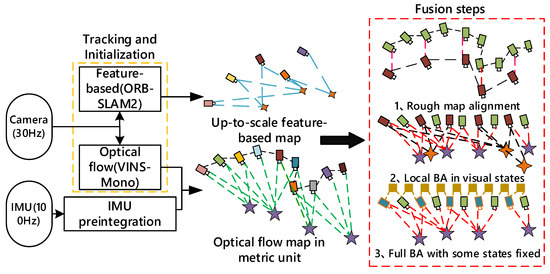

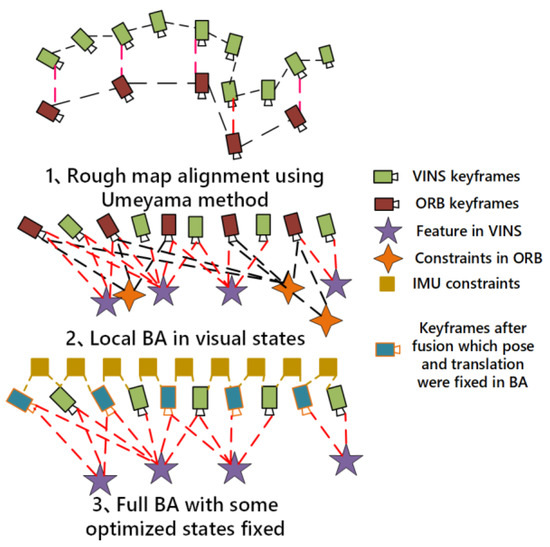

The specific implementation process is shown in Figure 1. Firstly, the initialization threads based on feature points and optical flow method are carried out in parallel. After the completion of the initialization of the two threads, the coarse alignment of the initial map is realized by using the Umeyama algorithm. Then, the local BA fusion of visual items is performed. Finally, the visual and inertial items in the two threads are fused to obtain the optimized initialization results.

Figure 1.

The framework of the robust parallel initialization methods.

3.1. Initialization Framework for Monocular VIO in the Optical Flow Method

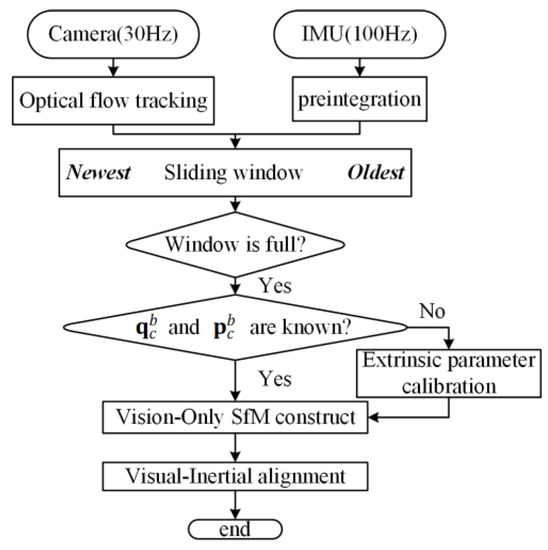

The optical flow monocular inertial initialization method adopted by VINS-Mono is advanced and widely used. It also includes the extrinsic parameters estimation [11] between the camera and the IMU, and the alignment of the world coordinate with the gravity direction. The procedure of initialization framework for VINS is shown in Figure 2.

Figure 2.

The initialization framework of VINS-Mono, including extrinsic calibration.

Visual tracking based on optical flow method requires continuous frames and small movement distance. However, in order to obtain the initial map points, the matching feature points need to be triangulated, and the premise of triangulation is that there is a large enough displacement between the two image frames where the feature points are located. Therefore, the initialization based on the optical flow method tends to establish a sliding window of size 10 as a data buffer to save the image frame, and the saving unit is one image frame. The initialized operation will find two frames with appropriate disparity in the sliding window for triangulation and propagate the triangulation results to other frames in the sliding window based on the results of optical flow tracking. Note that only frames that are successfully tracked and have a certain difference from the previous frame will be added to the sliding window. In VINS-Mono, section VI, Part D, has specific operations on marginalization of state items in the sliding window.

3.1.1. Vision-Only SfM in Sliding Window

As can be seen in Figure 2, the initialization method based on the optical flow method starts on the premise that the sliding window, which serves as the image frame data buffer, has been filled with the image frames output by the camera. When the sliding window is full of frames, we check feature correspondences between the latest frame and all previous frames. If we can find stable feature tracking (more than 30 tracked features) and sufficient parallax (more than 20 pixels) between the latest frame and any other frames in the sliding window, we recover the relative rotation and up-to-scale translation between these two frames using the five-point algorithm [28]. Then, the feature points observed in the two frames are triangulated. Based on these triangulated features, a PnP method [29] is performed to estimate poses of all other frames in the window. Finally, a global full BA is carried on to minimize the total reprojection error of all feature observations. In this case, the first image frame in the sliding window is the reference coordinate system.

3.1.2. Visual-Inertial Alignment

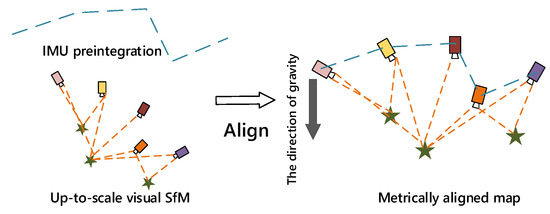

In VINS-Mono, to scale the visual map to metric scale, the IMU preintegration is aligned with the SfM pose estimation results from the previous step, as show in Figure 3 [8]. The basic idea is to match the up-to-scale visual structure with IMU preintegration.

Figure 3.

Illustration of the visual-inertial alignment process for initialization.

According to the spatial transformation relationship, we can easily know the coordinate transformation relationship between the camera frame and IMU frame as follows:

where

are matrix representation for left and right quaternion multiplication, is the skew-symmetric matrix from the first three elements of a quaternion, is the real part of the quaternion. Moreover, denotes the rotation quaternion from the y coordinate system to the x coordinate system, where and at positions of x and y represent the camera coordinate system and inertial coordinate system corresponding to the i-th frame image, respectively.

The relation of Equation (5) holds between all adjacent frames, and we can construct the overdetermined equation according to the equality relationship between multiple frames as:

where N is the index of the latest frame which keeps growing until the end of rotation calibration and .is weight derived from the robust norm for a better outlier processing. Then, use singular value decomposition (SVD) to obtain the rotation relationship between IMU and camera, . The extrinsic parameter results obtained by this method are not completely accurate because IMU error is not considered, and the value needs to be optimized by back-end optimization in the future. If is known exactly, skip this step. See Formula (4)–(9) in [11] for the detailed calculation process.

- Gyroscope Bias Calibration

Combining the results of SfM, , and IMU preintegration in Equations (2) and (4), we can construct an equation between two consecutive frames, and :

The cost equation is constructed accordingly, while the linear equation for IMU preintegration with respect to the gyro deviation is as follows to minimize the cost function:

where indexes all frames in the window. In such a way, we get an initial calibration of the gyroscope bias . Then, we repropagate all IMU preintegration terms , and using the new gyroscope bias.

- Velocity, Gravity Vector, and Metric Scale Initialization

We set the first camera frame as the reference frame for SfM. All frame poses (, ) and feature positions are represented with respect to . Moreover, (, ) are extrinsic parameters between the camera and the IMU. After the gyroscope bias is initialized, the gravity vector and metric scale in the world coordinate system can be initialized by establishing the relation equation between the IMU preintegration of two consecutive frames and the camera pose information obtained by SfM as:

among

According to the above equation, and the velocity , gravity vector and scale are taken as the state quantities to be estimated as:

Combine Equations (10) and (11) into the following linear measurement model:

where

, , and are obtained from the up-to-scale monocular visual SfM, and is the time interval between two consecutive frames. By solving this linear least-square problem:

the body frame velocities for every frame in the window, the gravity vector in the visual reference frame , as well as the scale parameter can be obtained.

- Gravity Refinement

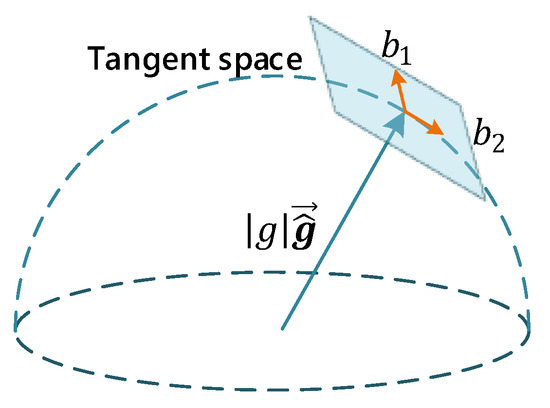

Then, the gravity is perturbed with two variables on its tangent space, which preserves 2-DOF as in Equation (16). The aim is to optimize the direction of the gravity vector obtained in the initialization of the previous step:

where is the known magnitude of the gravity, and is the unit vector representing the direction of gravity. Moreover, and are two orthogonal bases spanning the tangent plane, as shown in Figure 4 [8], and and are 2-D perturbation toward and , respectively. Lastly, and can be set arbitrarily as long as they are not parallel.

Figure 4.

Illustration of 2-DOF perturbation of gravity. The magnitude of gravity is known.

Then, the in Equation (10) is replaced by , and solve for 2-D together with other state variables. This process iterates several times until converges.

- Completing Initialization

The rotation between the world frame and the camera frame can be obtained by rotating the refined gravity vector to the z-axis. Then, all variables from the reference frame are rotated to the world frame . The body frame velocities will also be rotated to the world frame. Translational components from the visual SfM will be scaled to metric units. At this point, the initialization procedure is finished.

3.2. Initialization Framework for Monocular VO in ORB Feature-Based Method

After the detection and description of feature points in an image frame is finished, feature matching is carried out.

In this paper, the ORB initialization framework only carries out the pure vision initialization process as in ORB-SLAM2.

The monocular initialization process of ORB-SLAM2 can be divided into the following three stages.

3.2.1. Select two Frames as Initial Reference Frames

The first reference frame selected during initialization requires a sufficient number of feature points detected (>100); there is also a requirement on the number of feature point detection for the following image frames. If it does not meet the requirement on number of feature points in the two consecutive frames, the first reference frame should be selected again, and the above process should be repeated.

3.2.2. Calculation of Relative Pose Based on the Matching Features and Triangulation

When the number of feature points in two consecutive frames is sufficient, DBOW2 bag-of-word is used to accelerate the matching process of feature points. After word bag matching, multi-thread parallel processing is used to calculate the relative pose using basic matrix model and homography matrix model, respectively, and the pose transformation results of the two frames are obtained. The reprojection error of features is used to score the two pose results. The pose results obtained by the model with better score are selected to complete the triangulation of matching points. Then, the PnP algorithm is used to calculate the relative poses of the new image.

3.2.3. Global BA

Global BA is performed on the previously recovered feature points and poses to optimize the initialized map.

So far, we can obtain an initial up-to-scale map based on the ORB feature-based method that saves the relative poses and 3D coordinates of the corresponding feature points between image frames.

From the above description, we can see that the initialization process of the visual map based on ORB feature points is simple and fast, but the established map is not in metric units and does not align with the inertial coordinate system. On the premise that the two initialization processes are carried out synchronously, the map initialization based on ORB feature points should be completed first.

3.3. The Initialization Framework That Fuses the Initial Results of the Optical Flow Method and Feature Point Methods

The data fusion operation proposed in this paper will be performed after the initialization of both the optical flow method and the feature-based method. Since the initialization of the feature-based method only depends on the image of the monocular camera, the visual map established by feature-based method needs to be multiplied by a fixed scale to be in metric units. This map is referred to as the feature-based map in the following. So, the map established by optical flow method is referred to as the optical flow map.

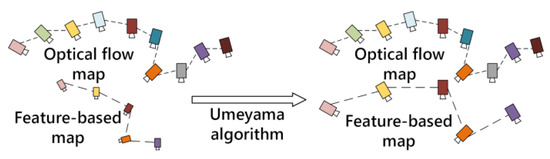

The fusion process can be divided into three steps, as shown in Figure 5. First, the similarity matrix transformation from feature-based map is obtained by the Umeyama algorithm. The Umeyama algorithm is designed to compute the positional relationship between two sets of data. When aligning two trajectories and , the goal is to compute a set of s, and such that the objective function (17) is optimal:

where is the scaling factor, is the rotation matrix, and represents the translation.

Figure 5.

The illustration of data fusion process between the feature-based map and the optical map.

Then, the transfer matrix between two co-view frames in the feature-based map is substituted into the corresponding relationship in optical flow method for optimization. Finally, the pose and rotation information of the state vector optimized in the previous step is fixed, and the remaining states in the state vector are further optimized.

3.3.1. Rough Map Alignment Using Umeyama Algorithm

In order to better perform the data fusion operation between the two maps, we use the Umeyama algorithm to solve the similarity transformation matrix, which is solved by the inputs of position information from the common key frame in the two maps. The basic idea of this step is to restore the feature-based map to metric unit and align with the world coordinate system in optical flow map through scaling, rotation, and translation operations.

Through the Umeyama algorithm, we can get the similarity transformation matrix and make the following equation between the two maps approximately true:

The feature-based map can be approximate aligned with the optical flow map after the similarity transformation above, and the alignment process is shown in Figure 6.

Figure 6.

Illustration of the alignment process between optical flow map and feature-based map by using Umeyama algorithm.

3.3.2. Local BA in Visual States

The feature-based map mainly saves the relative rotation and translation information between frames. Due to the higher accuracy of the feature matching algorithm used in the initialization of feature-based method, the optimization of the states in feature-based map is ignored. The relevant information provided by the feature-based map is added to the optimization as measurement.

Thus, the state vector in the local BA is defined as:

where is the position state of IMU states at the time the kth image is captured. It contains position and orientation of the IMU in the world frame. Moreover, is scale value in the similarity matrix which calculated by Umeyama algorithm and needs to be optimized in BA, n is the total number of keyframes, and m is the total number of features tracked by optical flow method in the sliding window. Lastly, is the inverse distance of the lth features from its first observation.

The visual BA formulation can be optimized by minimizing the sum of the Mahalanobis norm of all measurement residuals to obtain a maximum posteriori estimation as:

where the Huber norm is defined as:

where , and are residuals for scale estimation in feature-based map, rotation estimation between two common-view frames in feature-based map, and visual measurements in optical flow map, respectively. The detailed definition of residual term will be presented next.

- Residuals for Rotation Estimation between Two Common-view Frames in feature-based Map

The position parameters of the image frames in the optical flow map are mainly optimized based on the relative rotation between frames solved by the feature-based map. According to the inter-frame conversion matrix of the feature-based map, combined with Equation (18), the constrained equation holds as follow:

where , and are the quaternion form of , and , respectively. is calculated in Equation (18). The ith image is co-viewed with the jth image. Lastly, is the set of keyframe pairs in the feature-based map with co-view feature points.

- Residuals for Scale Estimation in ORB Map

Since the accuracy of the feature-based map on inter-frame rotation matrix is considerable, the translation requires a scale factor to restore it.

According to the feature-based map and the similarity matrix provided by Umeyama algorithm in the first step, the translation between two co-view frames and scale factor s are added to the residual formulation. Combined with Equation (18), the residuals corresponding to s are defined as:

where is the transformation matrix from the inertial coordinate system of the jth image frame to the world coordinate system of the optical flow map. The reason for considering the inertial coordinate system is to further optimize the extrinsic parameters between the camera and the IMU. Lastly, denotes the norm of the vector.

- Visual Measurement Residual for features tracked by Optical flow

Consider the lth feature that is first observed in the ith image, the residual for the feature observation in the jth image is defined as:

where is the first observation of the lth feature that happens in the ith image, is the observation of the same feature in the jth image, is the back projection function, which turns a pixel location into a unit vector using camera intrinsic parameters, is the unit vector for the observation of the lth feature in the jth frame, and is predicted feature measurement on the unit sphere by transforming its first observation in the ith frame to the jth frame.

3.3.3. Full BA with Some Optimized States Fixed

In the previous step, the inter-frame constraint in the feature-based map is introduced to optimize the state of image frame and extrinsic parameters in optical flow map. The optimized states will be fixed, and then the Full BA operation of visual states in optical flow map and inertial states is performed:

where is the IMU state at the time that the kth image is captured. It contains position, velocity, and orientation of the IMU in the world frame, and the acceleration bias and gyroscope bias in the IMU body frame.

The visual measurement model here is same as in Local BA, as Equation (24). The only difference is that the position information () of the frame that exits in both the optical flow map and the feature-based map is treated as a constant. To this end, the nonlinear cost function here is formulated as:

4. Experiments and Discussion

To evaluate the effectiveness and practicability of the improved initialization of monocular visual-inertial SLAM, this paper conducts experiments on the visual-inertial datasets (EuRoC) collected on-board a micro aerial vehicle (MAV). The datasets contain stereo images, synchronized IMU measurements, and accurate motion and structure ground-truth [30]. The main experiments are carried out in three indoor sequences of EuRoC dataset which were recorded with a micro aerial vehicle equipped with two global-shutter, monochrome cameras, and an IMU. The two cameras and the IMU were hardware time-synchronized and were logged at a rate of 20 Hz and 200 Hz, respectively.

The sequences we chose contain the following three cases in the initialization phase: (1) MH_01_easy has a fast translational motion in the initialization phase; (2) MH_04_difficult starts from static in the initialization stage, and the weak texture part accounts for a large proportion in the starting screen; (3) The initialization phase of V1_03_difficult starts with a stationary phase followed by a fast, large rotation motion. The above three scenarios are enough to cover most application scenarios. All the experiments are carried out with an Intel CPU i7-8565U (8 cores @1.8GHz) laptop computer with 16GB RAM.

4.1. Implementation Details

The proposed initialization method is implemented in the combination of VINS-Mono and ORB-SLAM2. The VINS-Mono is deeply combined with the monocular mode of ORB-SLAM2 to realize the synchronous input and processing of data. Then, the initialization process based on the optical flow method in VINS-Mono and the feature based method in ORB-SLAM2 can be carried out synchronously.

The specific fusion optimization algorithm is shown at Section 3. Firstly, the Umeyama algorithm is used to estimate the scale of ORB-SLAM2 based on the matching trajectories obtained by the two initialization threads. Then, the constraints provided by the trajectory which is obtained from the feature-based method after scaling to metric unit are added to the modified back-end optimization of VINS-Mono. Finally, the optimized state estimates are obtained after several iterations.

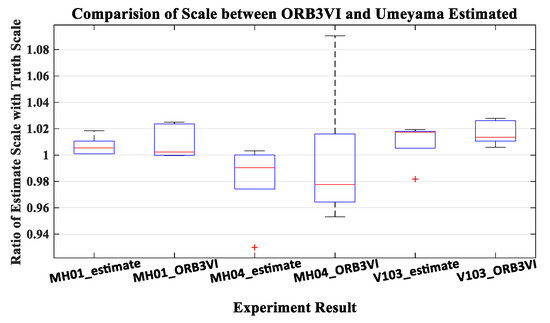

4.2. Validation of Umeyama Algorithm

In order to verify the effectiveness of the Umeyama algorithm, five tries were performed in the MH_01_easy, MH_04_difficult, and V1_03_difficult sequences, respectively. The comparison between the Umeyama estimated scale values and the actual scale values compared with trajectory truth are shown in Table 1. The scale is determined by the choice of two initialization reference frames, which is different in each time of initialization phase in ORB-SLAM2. The scale to scaling the initialization trajectory of ORB-SLAM2 to metric unit is not the same for each experiment. The ratio in Table 1 represents the scale error between the trajectory of ORB-SLAM2 scaled by the value estimated by Umeyama and the trajectory truth in percentage. It is calculated as Equation (28).

Table 1.

Scale Comparision of Umeyama Estimated against the Truth.

From the value of ratio, we can see that the scale estimated by Umeyama is relatively accurate compared with the actual value. To further verify, we compare the ratio value in Table 1 with the ratio value of the ORB-SLAM3 monocular inertial mode trajectory. The ratio value of ORB-SLAM3 is obtained by EVO (Python package for the evaluation of odometry and SLAM) [31]. As shown in Figure 7, the accuracy of the estimated scale is similar to ORB-SLAM3 or even better than ORB-SLAM3. ORB-SLAM3 as the state-of-the-art SLAM system is enough to prove the effect of Umeyama algorithm.

Figure 7.

The distribution of ratio value in five times experiments. The experiments are carried out in MH_01_easy, MH_04_difficult, and V1_03_difficult sequences. The XX_estimate represents the ratio estimated by Umeyama algorithm, and the XX_ORB3VI represents the ratio obtained by ORB-SLAM3 in monocular-inertial mode.

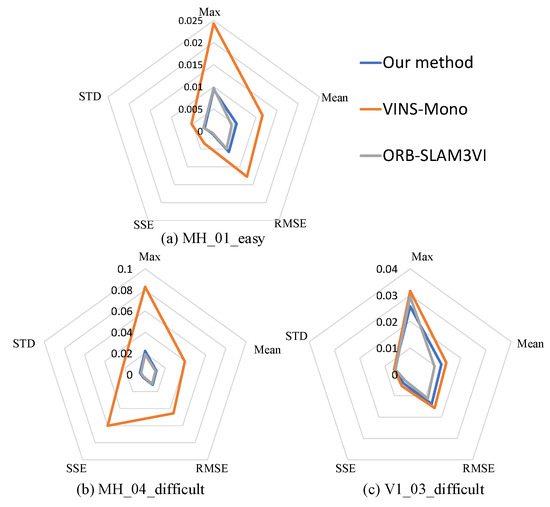

4.3. Evaluation on Optimization Results

The evaluation method is carried out by EVO_APE of EVO, which is mainly used to evaluate the global consistency of the whole trajectory. APE stands for absolute pose error. By default, it executes ATE (absolute trajectory error). To evaluate this error results, we mainly compare from five aspects: max (maximum error), mean (mean value of error), RMSE (root mean square error), SSE (sum of squares for error), and STD (the standard deviation). Each index uses the mean of the five experiments, and the comparison results are shown in Figure 8; (a), (b), and (c) are the comparison results in sequence MH_01_easy, MH_04_difficult, and V1_03_difficult, respectively. It can be found that the optimized trajectory obtained by our proposed method has comparable accuracy with the trajectory obtained by ORB-SLAM3 initialization, while the trajectory obtained by VINS-Mono initialization has poor accuracy. Because VINS-Mono uses the method that the initialization phase completes the coarse estimation of the IMU bias and extrinsic parameters, and then continues to modify these states in the subsequent back-end optimization process, this method cannot guarantee a good positioning accuracy in the initialization phase. In addition, due to the design of parallel threads, an advantage of our proposed method is that even when the extrinsic parameters between the camera and the IMU are unknown, the map construction and tracking process can be started by ORB-SLAM2 in monocular mode, and the scale scaling operation will be realized after the data fusion optimization with the states estimate by initialization process of VINS-Mono.

Figure 8.

The comparison of trajectories between our method, VINS-Mono, and ORB-SLAM3 with the trajectory truth in absolute trajectory error, respectively.

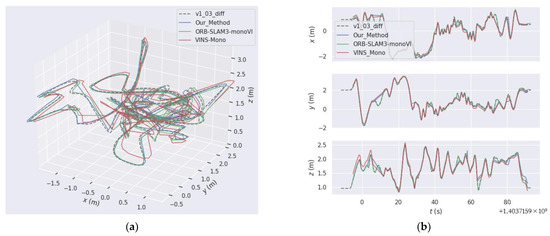

In order to show the trajectory comparison of the proposed method more intuitively, we take V1_03_difficult Sequence as an example. The trajectory comparison diagram is shown in Figure 9; (a) is the display of the trajectory in 3D space, and (b) is the error comparison in the XYZ axis. As can be seen from Figure 9, our proposed method is comparable to the monocular-inertial mode of ORB-SLAM3. There are some discontinuities in the trajectory because we only use inertial data in the initialization stage, and the combination algorithm with optical flow method and inertia has not been implemented in the subsequent tracking stage. Therefore, the subsequent trajectory is actually the trajectory after ORB-SLAM2 was scaled to metric unit, and its tracking process will be lost due to insufficient number of feature points, but it can continue to locate after relocation. The discontinuity of VINS-Mono is because the saved frame pose data is saved together with the key frames in ORB-SLAM2, so the discontinuity also occurs.

Figure 9.

The comparison of trajectories between our method, VINS-Mono and ORB-SLAM3 with the trajectory truth, v1_03_diff. (a) trajectory absolute errors shown in 3D space; (b) trajectory absolute errors shown in x, y and z axes, respectively.

5. Conclusions

In this paper, an improved initialization method for monocular visual-inertial SLAM is proposed. It uses two visual processing methods to initialize in parallel, which improves the robustness of system initialization and the utilization of image information. The extrinsic parameter estimation algorithm in VINS-Mono is used to improve the portability of SLAM. The initialization accuracy of the proposed method is comparable to that of ORB-SLAM3. The experimental results show that the proposed method features the same scale estimation accuracy and positioning performance as ORB-SLAM3 in monocular inertia model. In addition, the extrinsic parameter estimation of the camera and the IMU is added, which enhances the portability of the proposed method. It is planned to further integrate the optical flow information, feature point information, and inertia information in the subsequent tracking stage to achieve robust and lightweight monocular visual inertia SLAM.

Author Contributions

Conceptualization, M.Z. and H.W.; methodology, M.Z.; software, M.Z., H.W. and Y.Y.; validation, M.Z. and X.X; formal analysis, M.Z.; writing—original draft preparation, M.Z.; writing—review and editing, M.Z.; supervision, X.X. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded in part by the National Natural Science Foundation of China under Grants 62073080, 62003085 and 61921004, in part by the Zhishan Youth Scholar Program of Southeast University under Grant 2242021R41185, in part by the Fundamental Research Funds for the Central Universities under Grant 2242022K30017 and Grant 2242022K30018.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors would like to thank the anonymous reviewers for their valuable comments on the paper and the builders of the datasets.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

LK Optical Flow Algorithm

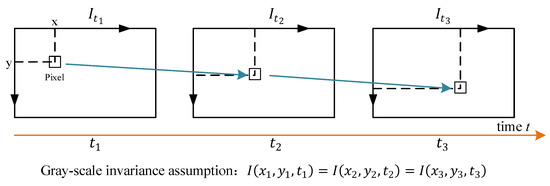

Based on the gray-scale invariance assumption and equality relation in Figure A1, for a pixel located at (x, y) at time t, we suppose that it moves to () at time . Due to the gray-scale invariance assumption, there is:

The Taylor expansion of the left-hand side is:

and because of the gray invariant assumption,

divide both sides by and get:

is the velocity of the pixel in the x axis, and is the velocity in the y axis, denoted as . Moreover, is the gradient of the image gray scale in the x direction at this point, and is the gradient in the y direction, denoted as and . The change of the image gray level with time is denoted as . Written in matrix form, we have .

Figure A1.

LK optical flow algorithm schematic diagram. denote the image data at time , x and y are the pixel coordinates of the corresponding feature point, and represents the gray value of the image frame at pixel coordinates at time .

The above equation is a first-order equation with two variables, and cannot be solved by the constraint of only one pixel. Therefore, in LK optical flow, assuming that the pixels in a certain window of size have the same motion, there is:

This is an overdetermined equation with respect to . The traditional solution is to find the least squares solution, which gives the velocity of the pixel motion between image frames. In SLAM, LK optical flow is commonly used to track the motion of feature points.

References

- Mur-Artal, R.; Montiel, J.M.M.; Tardós, J.D. ORB-SLAM: A versatile and accurate monocular SLAM system. IEEE Trans. Robot. 2015, 31, 1147–1163. [Google Scholar] [CrossRef]

- Mur-Artal, R.; Tardós, J.D. ORB-SLAM2: An open-source SLAM system for monocular stereo and RGB-D cameras. IEEE Trans. Robot. 2017, 33, 1255–1262. [Google Scholar] [CrossRef]

- Mur-Artal, R.; Tardós, J.D. Visual-inertial monocular SLAM with map reuse. IEEE Robot. Autom. Lett. 2017, 2, 796–803. [Google Scholar] [CrossRef]

- Campos, C.; Elvira, R.; Rodríguez, J.J.G.; Montiel, J.M.M.; Tardós, J.D. ORB-SLAM3: An Accurate Open-Source Library for Visual, Visual–Inertial, and Multimap SLAM. IEEE Trans. Robot. 2021, 37, 1874–1890. [Google Scholar] [CrossRef]

- Leutenegger, S.; Furgale, P.; Rabaud, V.; Chli, M.; Konolige, K.; Siegwart, R. Keyframe-based visual-inertial SLAM using nonlinear optimization. Proc. Robot. Sci. Syst. 2013. [Google Scholar] [CrossRef]

- Leutenegger, S.; Lynen, S.; Bosse, M.; Siegwart, R.; Furgale, P. Keyframe-based visual-inertial odometry using nonlinear optimization. Int. J. Robot. Res. 2015, 34, 314–334. [Google Scholar] [CrossRef]

- Stumberg, L.v.; Cremers, D. DM-VIO: Delayed Marginalization Visual-Inertial Odometry. IEEE Robot. Autom. Lett. 2022, 7, 1408–1415. [Google Scholar] [CrossRef]

- Qin, T.; Li, P.; Shen, S. VINS-Mono: A Robust and Versatile Monocular Visual-Inertial State Estimator. IEEE Trans. Robot. 2018, 34, 1004–1020. [Google Scholar] [CrossRef]

- Lupton, T.; Sukkarieh, S. Visual-inertial-aided navigation for high-dynamic motion in built environments without initial conditions. IEEE Trans. Robot. 2012, 28, 61–76. [Google Scholar] [CrossRef]

- Furgale, P.; Rehder, J.; Siegwart, R. Unified temporal and spatial calibration for multi-sensor systems. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 1280–1286. [Google Scholar]

- Yang, Z.; Shen, S. Monocular visual–inertial state estimation with online initialization and camera–IMU extrinsic calibration. IEEE Trans. Autom. Sci. Eng. 2017, 14, 39–51. [Google Scholar] [CrossRef]

- Huang, W.; Liu, H. Online Initialization and Automatic Camera-IMU Extrinsic Calibration for Monocular Visual-Inertial SLAM. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 5182–5189. [Google Scholar]

- Negahdaripour, S. Revised definition of optical flow: Integration of radiometric and geometric cues for dynamic scene analysis. IEEE Trans. Pattern Anal. Mach. Intell. 1998, 20, 961–979. [Google Scholar] [CrossRef]

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571. [Google Scholar]

- Calonder, M.; Lepetit, V.; Strecha, C.; Fua, P. BRIEF: Binary robust independent elementary features. In Computer Vision—ECCV 2010; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2010; Volume 6314, pp. 778–792. [Google Scholar]

- Bay, H.; Tuytelaars, T.; Van Gool, L. SURF: Speeded up robust features. In Computer Vision—ECCV 2006; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2006; Volume 3951, pp. 404–417. [Google Scholar]

- Moreno, F.-A.; Zuñiga-Noël, D.; Scaramuzza, D.; Gonzalez-Jimenez, J. PL-SLAM: A stereo SLAM system through the combination of points and line segments. IEEE Trans. Robot. 2019, 35, 734–746. [Google Scholar]

- Li, M.; Mourikis, A.I. High-precision consistent EKF-based visual-inertial odometry. Int. J. Robot. Res. 2013, 32, 690–711. [Google Scholar] [CrossRef]

- Qin, T.; Shen, S. Robust initialization of monocular visual-inertial estimation on aerial robots. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 4225–4232. [Google Scholar]

- Forster, C.; Carlone, L.; Dellaert, F.; Scaramuzza, D. IMU preintegration on manifold for efficient visual-inertial maximum-a-posteriori estimation. Proc. Robot. Sci. Syst. 2015. Available online: http://hdl.handle.net/1853/55417 (accessed on 1 June 2022).

- Mur-Artal, R.; Tardós, J.D.; Montiel, J.M.M.; Gálvez-López, D. ORB-SLAM2. 2016. Available online: https://github.com/raulmur/ORB-SLAM2 (accessed on 1 June 2021).

- Campos, C.; Elvira, R.; Rodríguez, J.J.G.; Montiel, J.M.M.; Tardós, J.D. ORB-SLAM3. 2021. Available online: https://github.com/UZ-SLAMLab/ORB-SLAM3 (accessed on 1 June 2021).

- Umeyama, S. Least-squares estimation of transformation parameters between two point patterns. IEEE Trans. Pattern Anal. Mach. Intell. 1991, 13, 376–380. [Google Scholar] [CrossRef]

- Triggs, B.; McLauchlan, P.F.; Hartley, R.I.; Fitzgibbon, A.W. Bundle adjustment: A modern synthesis. In Vision Algorithms: Theory and Practice; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 1999; Volume 6314, pp. 298–372. [Google Scholar]

- Lucas, B.D.; Kanade, T. An iterative image registration technique with an application to stereo vision. In Proceedings of the 7th international joint conference on Artificial intelligence, San Francisco, CA, USA, 24–28 August 1981. [Google Scholar]

- Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision; Cambridge University Press: Cambridge, UK, 2003. [Google Scholar]

- Shen, S.; Michael, N.; Kumar, V. Tightly-coupled monocular visual-inertial fusion for autonomous flight of rotorcraft MAVs. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 5303–5310. [Google Scholar]

- Nistér, D. An efficient solution to the five-point relative pose problem. IEEE Trans. Pattern Anal. Mach. Intell. 2004, 26, 756–770. [Google Scholar] [CrossRef] [PubMed]

- Lepetit, V.; Moreno-Noguer, F.; Fua, P. EPnP: An accurate O(n) solution to the PnP problem. Int. J. Comput. Vis. 2009, 81, 155–166. [Google Scholar] [CrossRef]

- European Robotics Challenge. Available online: http://http://www.euroc-project.eu/ (accessed on 1 June 2021).

- Evo. Available online: https://github.com/MichaelGrupp/evo (accessed on 1 June 2021).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).