1. Introduction

Computational systems now support many areas of knowledge, including the humanities and the exact and biological sciences. Consequently, the generation, consumption, and transmission of data on the global network are increasing at an accelerated pace. According to a study by the Statista Research Department [1], the total amount of data created, captured, and consumed in the world in 2018 was 33 zettabytes (ZB), equivalent to 33 trillion gigabytes. By 2020 it had grown to 59 ZB, and it is expected to reach 175 ZB by 2025.

In the Internet of Things (IoT) context, devices such as virtual assistants are connected to the Internet and generate large amounts of data. Web 2.0 platforms, e.g., social networks, micro-blogs, and similar websites, also make massive amounts of textual information available online, and the data generated by these devices and websites are growing ever faster. For many entrepreneurs and public agents, the information contained in this user-generated text is vital for maintaining their business, because it provides constant and continuous feedback on a particular subject or product. Given the ever-increasing volume of online text data, text classification (automatically assigning categories to textual documents) is more necessary than ever.

Automatic text classification can be described as the task of automatically assigning documents to one or more predefined classes according to their topics. Thereby, the primary objective of text classification is to extract information from textual resources, and the task is a basic module for many natural language processing (NLP) applications. However, this requires efficient and flexible methods to access, organize, and extract useful information from different data sources. These methods include text classification [2,3,4], information retrieval [5,6], summarization [7,8], text clustering [9,10], and others, collectively named text mining [2,4,6].

Many works in the literature address text classification using various neural network models. Typical examples include convolutional neural network (CNN) models [11,12], attentional models [13,14], adversarial models [3], and recurrent neural network (RNN) models [13], which notably outperform many statistics-based models. These works represent text at the word level, i.e., word vectors pre-trained over a large-scale corpus are usually used as the sequence features. Such vectors are usually trained with the word2vec tool [15] or the GloVe algorithm [16,17], based on the presumption that similar words tend to appear in similar contexts.

In recent years, to avoid task-specific structures and significantly decrease the number of parameters to be learned from scratch, as is required by the models presented above, some researchers have taken another direction: pre-training models for general language and fine-tuning them for downstream tasks. Another problem with traditional NLP approaches worth mentioning is multilingualism [18]. To deal with these problems, the OpenAI group (https://openai.com/, accessed on 13 July 2022) proposed GPT (Generative Pre-trained Transformer), which uses a left-to-right multi-layer Transformer architecture to learn general language representations from a large-scale corpus [19]. Later, Google, inspired by GPT, presented a new language representation model called BERT (Bidirectional Encoder Representations from Transformers) [20]. BERT is a state-of-the-art language representation model designed to pre-train deep bidirectional representations from unlabeled text; it is then fine-tuned using labeled text for different NLP tasks [20]. A smaller, faster, and lighter version of the BERT architecture, known as DistilBERT, was implemented by the HuggingFace team (https://github.com/huggingface/transformers, accessed on 13 July 2022).

This work examines an extensive set of datasets from different contexts and languages, specifically English and Brazilian Portuguese, to analyze the performance of the two models (BERT and DistilBERT). To do this, we first fine-tuned BERT and DistilBERT, used the aggregating layer as the text embedding, and then compared the two models on several selected datasets. As a general result, DistilBERT is nearly 40% smaller and around 45% faster than its larger counterpart, yet it preserves around 96% of the language comprehension skills for both English and Brazilian Portuguese on balanced datasets.

The main contributions of the paper are as follows:

We compare BERT and DistilBERT, demonstrating how the Light Transformer model can be very close in effectiveness to its larger counterpart for different languages;

We compare the Transformer model (BERT) and the Light Transformer model (DistilBERT) for both English and Brazilian Portuguese.

The rest of the document is organized as follows: Section 2 presents a short summary of the concepts necessary to understand this work, while Section 3 presents the method and hyperparameter configuration for automatic text classification. The case study of this work is presented in Section 4, and the results are presented in Section 5. Thereafter, in Section 6, we discuss the performance of the two models (BERT and DistilBERT) on the different datasets used. Finally, Section 7 concludes with a discussion and recommendations for future work.

3. Method and Hyperparameter Configuration

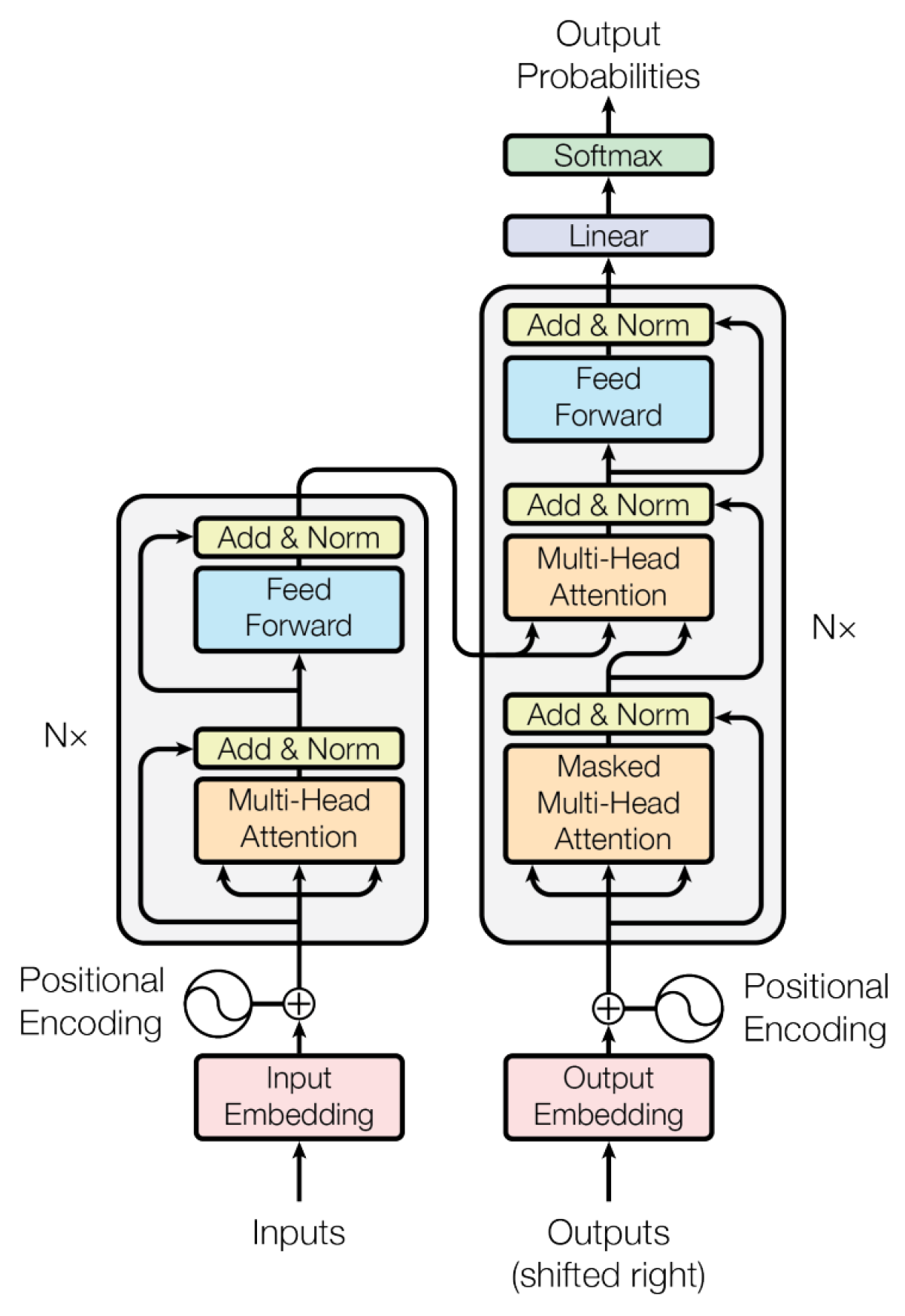

This section presents the details of our proposed method for automatic text classification in different languages. The approaches we designed were mainly inspired by the works of Vaswani et al. [19] and Devlin et al. [20], in which attention mechanisms made it possible to track the relations between words across very long text sequences in both forward and reverse directions. Building on these works, we explore an extensive set of datasets from different contexts and languages, specifically English and Brazilian Portuguese, to analyze the performance of the two state-of-the-art models (BERT and DistilBERT).

Our implementation follows the fine-tuning model released in the BERT project [20]. For the multi-class purpose, we use the sigmoid cross-entropy with logits function to replace the original softmax function, which is appropriate for one-hot classification only. We first fine-tuned BERT and DistilBERT, used the aggregating layer as the text embedding, and compared the two models on several selected datasets.
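The difference between the two loss functions mentioned above can be illustrated with a minimal pure-Python sketch. The function names and the toy logits are illustrative assumptions and are not taken from the BERT code base: the softmax variant expects exactly one correct class, whereas the sigmoid variant scores each class independently.

```python
import math


def softmax_cross_entropy(logits, target_index):
    """Cross-entropy over a softmax: assumes exactly one correct class."""
    max_logit = max(logits)  # subtract the max for numerical stability
    log_sum_exp = max_logit + math.log(sum(math.exp(z - max_logit) for z in logits))
    return log_sum_exp - logits[target_index]


def sigmoid_cross_entropy_with_logits(logits, targets):
    """Independent binary cross-entropy per class, averaged over classes.

    Uses the numerically stable form max(z, 0) - z * y + log(1 + exp(-|z|)).
    """
    losses = [
        max(z, 0.0) - z * y + math.log1p(math.exp(-abs(z)))
        for z, y in zip(logits, targets)
    ]
    return sum(losses) / len(losses)


# Toy logits for three classes (made-up values).
logits = [2.0, -1.0, 0.5]
print(softmax_cross_entropy(logits, target_index=0))
print(sigmoid_cross_entropy_with_logits(logits, targets=[1.0, 0.0, 1.0]))
```

In the second call, two classes are marked as correct at the same time, which the softmax formulation cannot express.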

The methodological details are organized into two subsections: Section 3.1 presents the details of the hyperparameter configuration for the fine-tuning process, while Section 3.2 presents the environment where the experiments were performed.

3.1. Hyperparameter Optimization for Fine-Tuning

In this section, we present the hyperparameter configuration used for fine-tuning. All the fine-tuning and evaluation steps performed on each model in this article used the Simple Transformers Library (https://simpletransformers.ai/docs/usage/, accessed on 1 August 2022).

Table 1 reports the details of each hyperparameter configuration for the fine-tuning process.

The batch size is a hyperparameter that controls the number of samples from the training dataset used in each training step. In each step, the predictions are compared with the expected results, an error is calculated, and the internal parameters of the model are updated [31].

The second parameter of Table 1, the number of epochs, controls the number of times the training dataset passes through the model during the training process. An epoch consists of one or more batches [31]. A high number of epochs can make the model overfit, so that it fails to generalize: when the model receives unseen data, it will not make reliable predictions [32].
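The relation between dataset size, batch size, and epochs can be made concrete with a small sketch. It assumes that the last, possibly smaller, batch of each epoch is kept and that no gradient accumulation is used; the numbers are hypothetical and are not the values of Table 1.

```python
import math


def training_steps(num_samples, batch_size, num_epochs):
    """Return (steps per epoch, total steps) for a given configuration."""
    steps_per_epoch = math.ceil(num_samples / batch_size)  # last batch may be smaller
    return steps_per_epoch, steps_per_epoch * num_epochs


# Hypothetical values, chosen only for illustration.
print(training_steps(num_samples=1000, batch_size=32, num_epochs=3))
```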

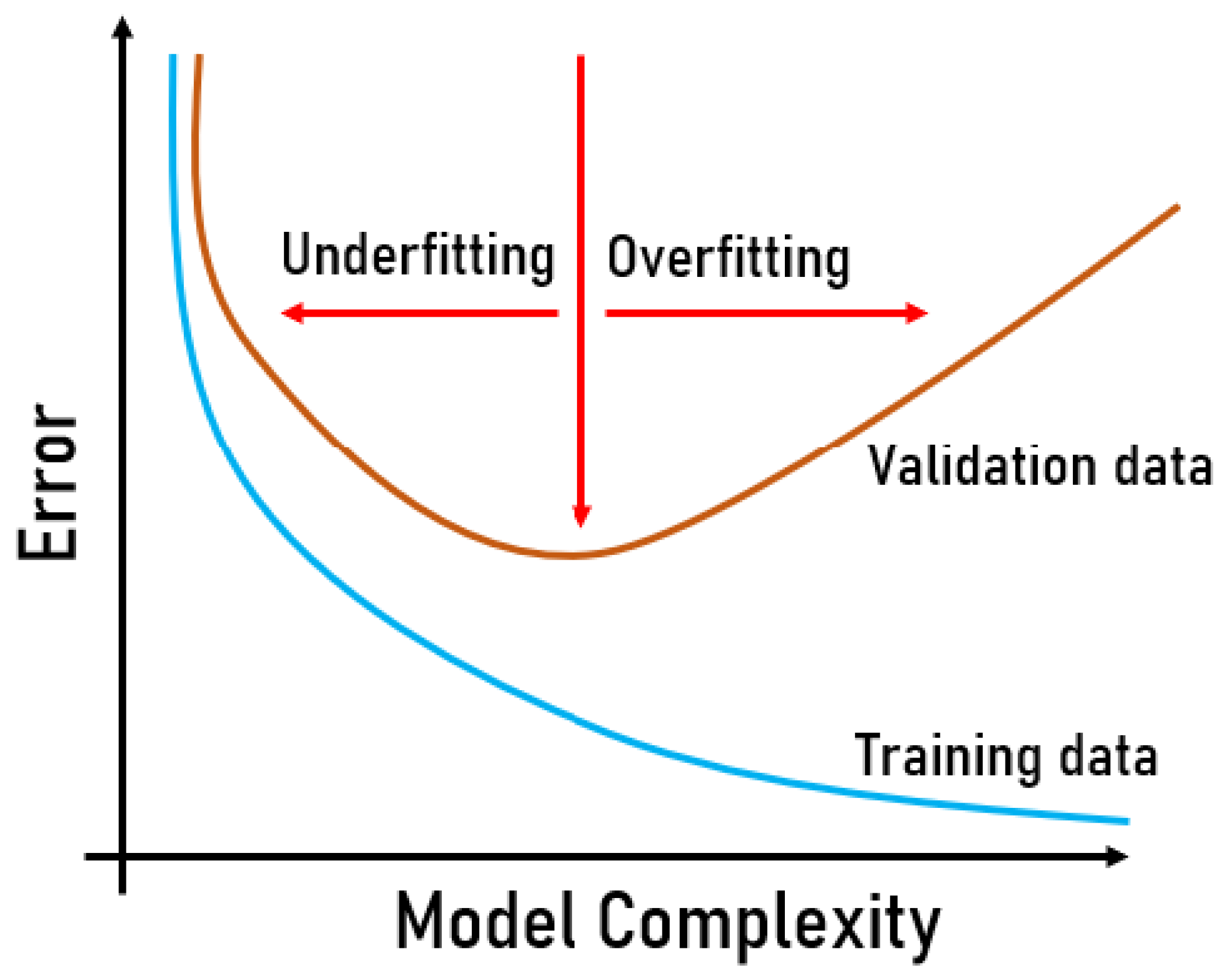

Overfitting can be detected in the evaluation step by analyzing the error of the predictions, as in Figure 3. A low number of epochs can also cause underfitting, which means that the model still needs more training to learn from the training dataset.
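One simple way to read loss curves such as these is to look for the first epoch at which the evaluation loss rises while the training loss keeps falling. The sketch below is only a heuristic under that assumption, with made-up loss values; it is not the procedure used in this work.

```python
def first_overfitting_epoch(train_losses, eval_losses):
    """Return the 1-based epoch at which evaluation loss rises while
    training loss still falls, or None if that never happens."""
    for epoch in range(1, min(len(train_losses), len(eval_losses))):
        train_improves = train_losses[epoch] < train_losses[epoch - 1]
        eval_worsens = eval_losses[epoch] > eval_losses[epoch - 1]
        if train_improves and eval_worsens:
            return epoch + 1
    return None


# Made-up loss curves, for illustration only.
train = [0.90, 0.60, 0.40, 0.25, 0.15]
evaluation = [0.95, 0.70, 0.55, 0.60, 0.72]
print(first_overfitting_epoch(train, evaluation))
```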

Furthermore, the learning rate is also related to underfitting or overfitting. This parameter controls how fast the model learns according to the errors obtained. Increasing the learning rate can bring the model from underfitting to overfitting [33].

The optimizer determines how the weights and the learning rate should be changed in order to reduce the losses of the models. AdamW is a variant of the Adam optimizer [34]. The next hyperparameter is a parameter used by the Adam optimizer.
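For readers unfamiliar with AdamW, a single update step for one scalar parameter can be sketched as follows. The default values of `lr`, `beta1`, `beta2`, `eps`, and `weight_decay` are common choices used here as assumptions, not the values of Table 1, and the function name is hypothetical.

```python
import math


def adamw_step(theta, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999,
               eps=1e-8, weight_decay=0.01):
    """One AdamW update for a single scalar parameter.

    Returns the updated (theta, m, v). `t` is the 1-based step counter.
    """
    m = beta1 * m + (1.0 - beta1) * grad          # first-moment estimate
    v = beta2 * v + (1.0 - beta2) * grad * grad   # second-moment estimate
    m_hat = m / (1.0 - beta1 ** t)                # bias correction
    v_hat = v / (1.0 - beta2 ** t)
    # Weight decay is applied directly to the parameter (decoupled),
    # instead of being added to the gradient as in Adam with L2 regularization.
    theta = theta - lr * (m_hat / (math.sqrt(v_hat) + eps) + weight_decay * theta)
    return theta, m, v


theta, m, v = adamw_step(theta=1.0, grad=1.0, m=0.0, v=0.0, t=1, lr=0.1)
print(theta)
```

The small constant `eps` only prevents division by zero when the second-moment estimate is close to zero.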

The model class refers to the class from the Simple Transformers Library that was used to fine-tune the models. The maximum sequence length parameter refers to the maximum size of the sequence of tokens that can be input into the model.
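A fine-tuning run with the hyperparameters described above could look roughly like the following sketch, which uses the `ClassificationModel` class of the Simple Transformers Library. The hyperparameter values, the checkpoint names, and the helper functions `build_args` and `fine_tune` are illustrative assumptions, not the configuration reported in Table 1 and Table 2.

```python
def build_args(num_train_epochs, train_batch_size, learning_rate, max_seq_length):
    """Collect fine-tuning hyperparameters in the dict form accepted by
    Simple Transformers' ClassificationModel (argument names from its docs)."""
    return {
        "num_train_epochs": num_train_epochs,
        "train_batch_size": train_batch_size,
        "learning_rate": learning_rate,
        "max_seq_length": max_seq_length,
        "overwrite_output_dir": True,
    }


def fine_tune(model_type, model_name, train_df, eval_df, num_labels, args):
    """Fine-tune and evaluate one model; requires `pip install simpletransformers`.

    `train_df` and `eval_df` are pandas DataFrames with "text" and "labels" columns.
    """
    from simpletransformers.classification import ClassificationModel  # lazy import

    model = ClassificationModel(model_type, model_name, num_labels=num_labels, args=args)
    model.train_model(train_df)
    result, model_outputs, wrong_predictions = model.eval_model(eval_df)
    return result


# Placeholder values, NOT the ones reported in Table 1.
args = build_args(num_train_epochs=3, train_batch_size=8,
                  learning_rate=4e-5, max_seq_length=128)
# Assumed checkpoint names, shown for illustration only:
# fine_tune("bert", "bert-base-uncased", train_df, eval_df, num_labels=5, args=args)
# fine_tune("distilbert", "distilbert-base-uncased", train_df, eval_df, num_labels=5, args=args)
```

Keeping the same `args` dictionary for both calls mirrors the idea of comparing the original and the distilled model under identical hyperparameters.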

Table 2 presents the hyperparameters of the pre-trained models used in this article for the performance evaluation. The distilled versions of the models have six hidden layers, fewer than the original BERT and BERTimbau models, which shows how much smaller the distilled models are. Additionally, the DistilBERT model has 50 million fewer parameters than BERT. The author of the DistilBERTimbau model does not provide its number of parameters.

3.2. Implementation

A cloud GPU environment (Google Colab Pro, https://colab.research.google.com, accessed on 8 April 2022) was chosen to conduct the fine-tuning process on the models using the selected datasets, and the metrics used to evaluate the models were defined. During the fine-tuning process, the Weights and Biases tool (https://wandb.ai/site, accessed on 8 April 2022) was used to monitor each training step and the models’ learning process, in order to detect overfitting or anything else that would lead to poor learning performance.

We trained our models on Google Colab Pro using the hyperparameters described in Table 1 and Table 2. The results were computed and compared between the models to extract information about their performance, and plots were built to better visualize and compare the results.

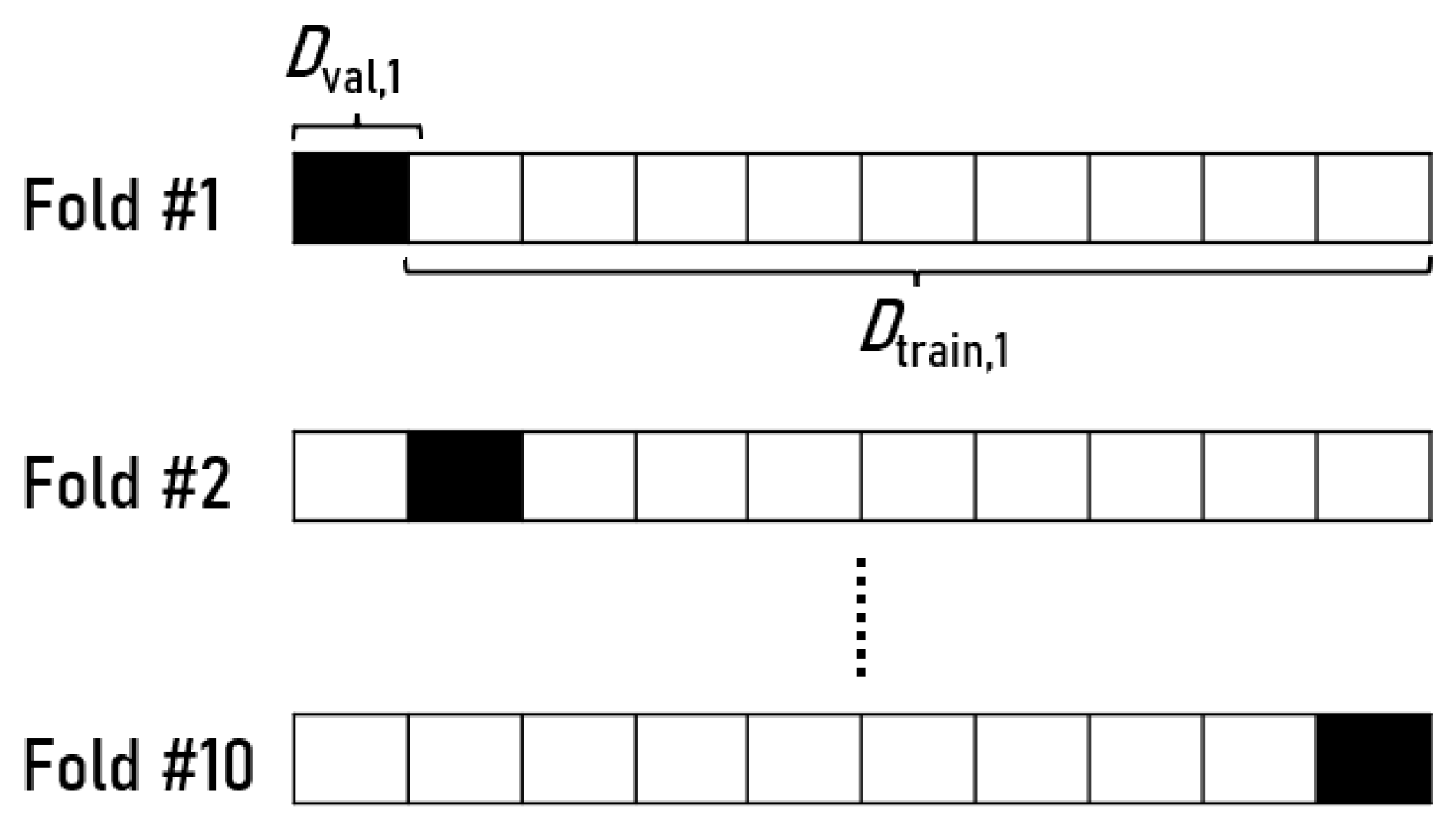

Furthermore, the K-fold cross-validation method was used, which consists of splitting the dataset into K folds so that every validation set is different from the others. K refers to the number of disjoint subsets of approximately equal size, and each of these subsets is called a fold. This splitting step is done by randomly sampling cases from the dataset without replacement [35].

Figure 4 represents an example of 10-fold cross-validation. Ten subsets were generated, and each subset is divided into ten parts, where nine of them are used to train the model and the remaining one is used to evaluate it. The evaluation part differs between the subsets. The model is trained, evaluated, and then discarded for each subset or fold, so every part of the dataset is used for both training and evaluation. This allows us to see the potential of the model’s generalization and prevent overfitting [35,36].

To evaluate the models, a 5-fold cross-validation was used. Five subsets were therefore created, and each one was divided into five parts, where four of them (80%) are used for the fine-tuning process and the remaining one (20%) is used for evaluation.
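The 5-fold procedure can be sketched in a few lines of pure Python. This is a minimal illustration under the assumption of a simple shuffled split; the function name `k_fold_splits` and the fixed seed are hypothetical and do not describe the exact splitting code used in the experiments.

```python
import random


def k_fold_splits(num_samples, k, seed=0):
    """Yield (train_indices, eval_indices) for each of the k folds."""
    indices = list(range(num_samples))
    random.Random(seed).shuffle(indices)  # random assignment, without replacement
    folds = [indices[i::k] for i in range(k)]  # k disjoint, near-equal folds
    for i in range(k):
        eval_idx = folds[i]
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train_idx, eval_idx


for train_idx, eval_idx in k_fold_splits(num_samples=10, k=5):
    print(len(train_idx), len(eval_idx))  # 8 training and 2 evaluation samples per fold
```

Each sample index appears in exactly one evaluation part, so every sample is used for evaluation once and for training in the other four iterations.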

5. Results

This section shows the performance assessment of the BERT, DistilBERT, BERTimbau, and DistilBERTimbau models. For a better presentation, this section is divided into two subsections. The first presents the results for the English language (Section 5.1), and the second presents the results for the Brazilian Portuguese language (Section 5.2).

It is worth mentioning that, after each K-fold iteration, an evaluation is made using the evaluation part of the dataset to measure the score of the fine-tuned model.

5.1. English Language

The Brexit Blog Corpus was the first dataset evaluated. The BERT model’s results are presented in Table 8 and the DistilBERT model’s results are in Table 9.

The Brexit Blog Corpus dataset obtained relatively low scores for all metrics evaluated (see Table 9). This behavior is expected since the dataset is unbalanced: it has many classes and few samples for each class, and some classes have significantly more or fewer samples than others.

Additionally, the score results obtained by the distilled model of BERT are similar to those of its original model BERT. Still, the distilled model took around 47.7% less time on the fine-tuning process than BERT since DistilBERT is a more lightweight model than BERT.

The second English dataset evaluated was the BBC Text. The evaluation score results are presented in Table 10 for the BERT model and in Table 11 for DistilBERT.

Unlike the Brexit Blog Corpus dataset, the BBC Text achieved outstanding score results. It is known that this dataset is balanced, having a good and uniform number of samples for each class. Comparing the two models, the evaluation results are very similar, but the fine-tuning time is around 37.3% lower for DistilBERT compared to BERT.

The last English dataset evaluated was the Amazon Alexa Reviews dataset. The BERT model’s score results are presented in Table 12 and the DistilBERT model’s in Table 13.

The Amazon Alexa Reviews dataset reached good results. Analyzing Table 12 and Table 13, it is possible to note that the precision, recall, and F1-score are a little lower than the accuracy score. These results may occur because the dataset has few examples of the negative class and a very high number of samples of the positive class.
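The effect of class imbalance on these metrics can be reproduced with a toy example. The sketch below assumes macro-averaged precision, recall, and F1 (the averaging scheme is an assumption made here for illustration) and uses made-up predictions, not data from the Amazon Alexa Reviews dataset.

```python
def macro_scores(y_true, y_pred):
    """Return accuracy and macro-averaged precision, recall, and F1."""
    labels = sorted(set(y_true) | set(y_pred))
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    precisions, recalls, f1s = [], [], []
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1s.append(f1)
    n = len(labels)
    return accuracy, sum(precisions) / n, sum(recalls) / n, sum(f1s) / n


# Made-up predictions: 90 positive (1) and 10 negative (0) reviews.
y_true = [1] * 90 + [0] * 10
y_pred = [1] * 86 + [0] * 4 + [0] * 5 + [1] * 5
accuracy, precision, recall, f1 = macro_scores(y_true, y_pred)
print(f"accuracy={accuracy:.3f} precision={precision:.3f} recall={recall:.3f} f1={f1:.3f}")
```

Because the rare negative class contributes as much to the macro averages as the frequent positive class, its weaker scores pull precision, recall, and F1 below the accuracy.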

The BERT and DistilBERT score results were also very similar when compared. The DistilBERT model took around 52.1% less time to fine-tune when compared to its larger counterpart.

5.2. Brazilian Portuguese Language

In order to evaluate the Portuguese model BERTimbau and the distilled version DistilBERTimbau, the first Portuguese dataset selected was the Textual Complexity Corpus for School Internships in the Brazilian Educational System Dataset (TCIE). The BERTimbau score results are presented in Table 14 and the DistilBERTimbau results in Table 15.

The TCIE dataset achieved good results. Looking over Table 14 and Table 15, it is possible to note that the distilled model had an evaluation score slightly lower than the BERTimbau model on every metric, but the fine-tuning process took around 21.5% longer on BERTimbau than on the distilled version.

The second Portuguese dataset used was the PorSimples Corpus. For this dataset, the hyperparameters used on the other datasets, presented in Table 1, caused overfitting. To correct this issue, a lower learning rate than the one used for the other datasets was applied. This reduces the model’s learning speed, solving the overfitting issue. The BERTimbau results are presented in Table 16 and the DistilBERTimbau evaluation score results are presented in Table 17.

The evaluation on this dataset did not achieve very high scores with either the BERTimbau or the DistilBERTimbau model. These low results may be explained by the fact that, in the PorSimples Corpus dataset, some sentences remain similar to others after passing through the simplification process, so similar sentences are present in each dataset class. Hence, the model has more difficulty learning the class differences. Additionally, the BERTimbau model took around 49.2% more time than the distilled model in the fine-tuning process. Furthermore, the high time results presented in Table 16 and Table 17 were expected since this dataset has 11,944 samples, many more than the other datasets.
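The timing comparisons in this section are phrased in two ways: as the time the distilled model saves ("X% less time") and as the extra time the larger model needs ("Y% more time" or "Y% longer"). The two figures are not directly comparable. Assuming that the extra time is measured relative to the distilled model's fine-tuning time, the following sketch converts between them; the function names are hypothetical.

```python
def reduction_from_extra_time(extra_fraction):
    """If the larger model takes `extra_fraction` more time than the smaller
    one, return the fraction of time the smaller model saves."""
    return 1.0 - 1.0 / (1.0 + extra_fraction)


def extra_time_from_reduction(reduction_fraction):
    """Inverse conversion: time saved by the smaller model -> extra time
    needed by the larger model, relative to the smaller one."""
    return 1.0 / (1.0 - reduction_fraction) - 1.0


# Under the stated assumption, "49.2% more time" for the larger model
# corresponds to roughly a third less time for the distilled model.
print(round(reduction_from_extra_time(0.492), 3))
```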

Table 18 contains the size of the models generated after the fine-tuning process for each dataset. Analyzing the results, it is possible to identify that the distilled models produced models around 40% smaller than their larger counterparts.

An important observation is that, in every evaluation, the scores reached in the individual k-fold iterations were very similar, which shows the model’s generalization capability.

The bar plot presented in Figure 5 contains the arithmetic mean of each scoring metric over the k-fold iterations. In this figure, the red bars refer to the BERT/BERTimbau models, and the blue ones to the DistilBERT/DistilBERTimbau models.

As we can see, the scores recorded by the distilled models are very similar to the ones scored by the original models. This shows the power of deep learning model compression, which produces smaller models that require fewer computational resources and have almost the same power as the original models.

6. Discussion

Analyzing the results presented in Section 5 and Figure 5, the scores recorded by the distilled models are very similar to the ones scored by the original models. In our experiments, the distilled models were around 45% faster in the fine-tuning process and about 40% smaller, while preserving about 96% of the language comprehension skills of BERT and BERTimbau. It is worth noting that these results are similar to the results presented in [25], where the DistilBERT models were 40% smaller, 60% faster, and retained 97% of BERT’s comprehension capability.

The work presented in [42] compared BERT, DistilBERT, and other pre-trained models for emotion recognition and also achieved similar scores with BERT and DistilBERT. Furthermore, the DistilBERT model was the fastest one. The results presented in that work and elsewhere in the literature show the power of deep learning model compression, which produces smaller models that require fewer computational resources and have almost the same power as the original models.

Another critical point we can highlight in Figure 5 is the importance of the quality of the datasets for producing a good predictive model. On the two unbalanced datasets, Brexit Blog Corpus and PorSimples Corpus, the accuracy was low compared with the balanced datasets. The Amazon Alexa Reviews dataset achieves good accuracy but lower precision, recall, and F1 score, since this dataset has a low number of negative samples.

Other pre-trained models have been widely developed for other languages, such as BERTino [43], an Italian DistilBERT, and CamemBERT [44] for the French language, based on the RoBERTa [45] model, a variation of the BERT model. The main goal of pre-trained models is to remove the necessity of building a specific model for each task. To also remove the necessity of developing a pre-trained model for each language, bigger models that understand multiple languages have been developed, such as BERT Multilingual [30] and GPT-3 [46]. Still, those models are trained with more data than BERT models for specific languages, especially GPT-3, and should require more computational resources.

7. Conclusions

Inspired by a state-of-the-art language representation model, this paper analyzed two state-of-the-art models, BERT and DistilBERT, for text classification tasks in both English and Brazilian Portuguese. These models have been compared on several selected datasets. The experimental results showed that the neural network compression responsible for generating DistilBERT and DistilBERTimbau produces models that are around 40% smaller and take around 45% less time in the fine-tuning process (in our experiments, the reduction ranged from 21.5% to 66.9%). In other words, the compressed models require fewer computational resources without a significant impact on performance. Thus, the lightweight models can be executed with low computational resources while achieving performance close to that of their larger counterparts. In addition, the distilled models preserve about 96% of the language comprehension skills for balanced datasets.

Some extensions for future work can be highlighted:

(i) other robust models are being widely studied and developed, such as in [47] and GPT-3 [46], which can be evaluated and compared with the models mentioned in this work; and

(ii) performing the classification task for non-Western languages (e.g., Japanese, Chinese, and Korean).

In closing, the experimental results show how robust the Transformer architecture is and the possibility of using it for languages other than English, such as the Brazilian Portuguese models studied in this work.