Combining Photogrammetry and Photometric Stereo to Achieve Precise and Complete 3D Reconstruction

Abstract

1. Introduction

- The surface of the object should be an ideal diffuse (Lambertian) reflector, with no shadows or specular highlights.

- Light rays arriving at the surface should be parallel to each other.

- The camera uses an orthographic projection.
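Under these three assumptions, classic photometric stereo (Woodham [26]) reduces to a linear least-squares problem per pixel: each intensity equals the albedo times the dot product of the unit normal and a single, pixel-independent light direction. The sketch below illustrates only that textbook model; the function name and array layout are illustrative assumptions, not the authors' implementation.

```python
import numpy as np


def lambertian_photometric_stereo(intensities, light_dirs):
    """Textbook photometric stereo under the three assumptions listed above.

    intensities: (k, n) array, k images by n pixels, linear sensor response assumed.
    light_dirs:  (k, 3) array of unit light directions, identical for every pixel
                 (distant, parallel lighting).
    Returns unit normals (n, 3) and albedo (n,).
    """
    L = np.asarray(light_dirs, dtype=float)
    I = np.asarray(intensities, dtype=float)
    # Solve L @ g = I for all pixels at once; g = albedo * normal, shape (3, n).
    g, *_ = np.linalg.lstsq(L, I, rcond=None)
    albedo = np.linalg.norm(g, axis=0)
    # Normalise, guarding against zero-albedo pixels.
    normals = (g / np.where(albedo > 0, albedo, 1.0)).T
    return normals, albedo
```

At least three non-coplanar light directions are needed; with more, the least-squares fit averages out noise but does not by itself handle shadows or highlights.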

- A semi-automatic image acquisition system based on a near-field photometric stereo lighting system and suitable for integrating photogrammetric measurements and photometric stereo;

- An algorithm for removing specular reflections and shadows, and for determining the lighting direction and illumination attenuation at each surface point, using the accurate geometry of the lighting system and the object’s sparse 3D shape;

- Three approaches that exploit photogrammetric 3D measurements to correct the global shape deviation of the photometric stereo depth caused by violations of assumptions such as orthographic projection and perfect diffuse reflection, or by unknown error sources.
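With near-field lights, the parallel-light assumption no longer holds, so the light direction must be computed separately for every surface point from the calibrated light positions and the object's (sparse) 3D shape. A minimal sketch, assuming ideal point sources whose 3D positions are expressed in the same frame as the surface points; the function name is hypothetical.

```python
import numpy as np


def point_light_directions(points, light_positions):
    """Per-point unit light vectors and distances for near-field point sources.

    points:          (n, 3) surface points, e.g. photogrammetric tie points.
    light_positions: (k, 3) calibrated positions of the k light sources.
    Returns dirs (k, n, 3), unit vectors from each point towards each light,
    and dists (k, n), the point-to-light distances.
    """
    P = np.asarray(points, float)[None, :, :]            # (1, n, 3)
    S = np.asarray(light_positions, float)[:, None, :]   # (k, 1, 3)
    v = S - P                                            # point -> light vectors
    d = np.linalg.norm(v, axis=-1)                       # (k, n)
    return v / d[..., None], d
```

The distances returned here are also what an intensity-attenuation model needs, which is why both are computed together.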

2. State of the Art

2.1. Photogrammetric Methods

2.2. Photometric Stereo

2.3. Combined Methods

3. Methodology

- Method A: corrects the shape deviation by applying a global polynomial adjustment to the whole object;

- Method B: segments the object based on surface normals and curvature, then applies the shape correction procedure to each segment separately;

- Method C: splits the object into small patches, then applies the shape correction procedure to each patch separately.
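The global (Method A) and piecewise (Method C) corrections can be illustrated with one building block: fit a bivariate polynomial to the depth deviation observed at photogrammetric control points and add it to the photometric stereo depth. The sketch below assumes the deviation is modelled as a function of planimetric coordinates (x, y) and that patches are square tiles; the polynomial form, degrees, tiling, and all function names are illustrative assumptions rather than the paper's exact formulation.

```python
import numpy as np


def poly_design(x, y, degree):
    """Design matrix with every monomial x**i * y**j where i + j <= degree."""
    cols = [x**i * y**j for i in range(degree + 1) for j in range(degree + 1 - i)]
    return np.stack(cols, axis=1)


def fit_depth_correction(x, y, dz, degree=2):
    """Least-squares fit of dz = z_photogrammetry - z_photometric_stereo at control points."""
    A = poly_design(np.asarray(x, float), np.asarray(y, float), degree)
    coeffs, *_ = np.linalg.lstsq(A, np.asarray(dz, float), rcond=None)
    return coeffs


def apply_depth_correction(x, y, z_ps, coeffs, degree=2):
    """Add the fitted deviation surface to photometric stereo depths (Method A style)."""
    A = poly_design(np.asarray(x, float), np.asarray(y, float), degree)
    return np.asarray(z_ps, float) + A @ coeffs


def piecewise_correction(x, y, z_ps, ctrl_x, ctrl_y, ctrl_dz, patch_size, degree=1):
    """Method C style: fit and apply a separate polynomial in each square patch.

    Patches with fewer control points than polynomial terms are left uncorrected.
    """
    x, y, z = (np.asarray(a, float) for a in (x, y, z_ps))
    cx, cy, cdz = (np.asarray(a, float) for a in (ctrl_x, ctrl_y, ctrl_dz))
    out = z.copy()
    n_terms = (degree + 1) * (degree + 2) // 2
    pix_ids = np.floor(np.stack([x, y], axis=1) / patch_size).astype(int)
    ctl_ids = np.floor(np.stack([cx, cy], axis=1) / patch_size).astype(int)
    for pid in np.unique(pix_ids, axis=0):
        in_patch = np.all(pix_ids == pid, axis=1)
        ctl = np.all(ctl_ids == pid, axis=1)
        if ctl.sum() < n_terms:
            continue
        c = fit_depth_correction(cx[ctl], cy[ctl], cdz[ctl], degree)
        out[in_patch] = apply_depth_correction(
            x[in_patch], y[in_patch], z[in_patch], c, degree)
    return out
```

A single low-degree polynomial can only remove smooth, object-wide bending; splitting into patches lets a lower degree follow more complex deviations, at the cost of needing enough control points in every patch.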

3.1. Basic Photometric Stereo

3.2. Light Direction per Pixel

3.3. Backprojection

- ;

- , , , and are radial distortion coefficients;

- , , , are tangential distortion coefficients.
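Backprojection maps 3D points into each image through the exterior orientation, a pinhole model, and Brown's lens distortion model [79]. The coefficient symbols above were lost in extraction, so the sketch below assumes the common Brown-Conrady parameter set (three radial coefficients K1 to K3 and two tangential coefficients P1, P2) in OpenCV's sign convention; photogrammetric software often uses a different image axis orientation and sign convention, and the function name is hypothetical.

```python
import numpy as np


def backproject(points_world, R, t, f, cx, cy, k=(0.0, 0.0, 0.0), p=(0.0, 0.0)):
    """Project world points into an image with Brown-Conrady distortion.

    R (3x3) and t (3,) map world to camera coordinates (Z > 0 in front).
    f, cx, cy are in pixels. k = (K1, K2, K3) radial, p = (P1, P2) tangential;
    this parameter set and sign convention are assumptions (OpenCV style).
    """
    Xc = np.asarray(points_world, float) @ np.asarray(R, float).T + np.asarray(t, float)
    x = Xc[:, 0] / Xc[:, 2]                 # normalised image coordinates
    y = Xc[:, 1] / Xc[:, 2]
    r2 = x**2 + y**2
    radial = 1 + k[0] * r2 + k[1] * r2**2 + k[2] * r2**3
    xd = x * radial + 2 * p[0] * x * y + p[1] * (r2 + 2 * x**2)
    yd = y * radial + p[0] * (r2 + 2 * y**2) + 2 * p[1] * x * y
    return np.stack([f * xd + cx, f * yd + cy], axis=1)
```

For example, the point (1, 2, 10) seen by an undistorted camera with f = 1000 px and principal point (500, 400) projects to pixel (600, 600).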

3.4. Intensity Attenuation
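Near-field light sources attenuate both with distance (radial attenuation) and with the angle away from the source's principal axis (angular attenuation). A minimal sketch, assuming an inverse-square radial falloff and a cos(theta)**mu angular falloff with a per-source exponent mu obtained by calibration; this model and the function names are assumptions used for illustration, not necessarily the paper's exact formulation.

```python
import numpy as np


def light_attenuation(points, light_pos, light_axis, mu=1.0):
    """Attenuation factor of one near-field source at each surface point.

    radial:  1 / d**2, with d the source-to-point distance
    angular: cos(theta)**mu, theta = angle between the source axis and the
             source-to-point ray (mu assumed to come from calibration)
    Points behind the source get a factor of 0.
    """
    v = np.asarray(points, float) - np.asarray(light_pos, float)   # source -> point
    d = np.linalg.norm(v, axis=1)
    axis = np.asarray(light_axis, float)
    axis = axis / np.linalg.norm(axis)
    cos_theta = np.clip((v @ axis) / d, 0.0, 1.0)
    return cos_theta**mu / d**2


def compensate(intensities, attenuation):
    """Divide observed intensities by the attenuation (factor must be non-zero)."""
    return np.asarray(intensities, float) / attenuation
```

After compensation, the corrected intensities can be fed to a Lambertian solver as if every point were lit by a source of uniform strength.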

3.4.1. Radial Intensity Attenuation

3.4.2. Angular Intensity Attenuation

3.5. Shadow and Specular Reflection Removal
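A common, simple way to keep shadows and specular highlights out of the per-pixel fit is to discard observations that are implausibly dark or bright and solve with the remaining ones. The sketch below uses fixed intensity thresholds on normalised intensities; the thresholds and function name are hypothetical, and the paper's algorithm, which additionally exploits the lighting geometry and sparse 3D shape, may differ.

```python
import numpy as np


def robust_pixel_normal(intensities, light_dirs, shadow_thr=0.05, highlight_thr=0.95):
    """Lambertian normal for one pixel, ignoring shadowed or specular observations.

    intensities: (k,) values normalised to [0, 1]; light_dirs: (k, 3) unit vectors.
    Observations <= shadow_thr (shadow) or >= highlight_thr (highlight) are dropped.
    Returns (unit_normal, albedo), or None if fewer than 3 observations remain.
    The remaining light directions must be non-coplanar for a unique solution.
    """
    I = np.asarray(intensities, float)
    L = np.asarray(light_dirs, float)
    valid = (I > shadow_thr) & (I < highlight_thr)
    if valid.sum() < 3:
        return None
    g, *_ = np.linalg.lstsq(L[valid], I[valid], rcond=None)
    rho = np.linalg.norm(g)
    return g / rho, rho
```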

3.6. Helmert Transformation
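A Helmert (7-parameter similarity) transformation relates two 3D coordinate frames by one scale, three rotations, and three translations, for instance to bring photometric stereo depth into the photogrammetric reference frame. The sketch below estimates it in closed form from corresponding points with Umeyama's SVD method; this particular estimator is a swapped-in choice for illustration, and the function name is hypothetical.

```python
import numpy as np


def helmert_7param(src, dst):
    """Estimate scale s, rotation R, translation t with dst ~ s * R @ src + t.

    src, dst: (n, 3) corresponding points, n >= 3 and not collinear.
    Closed-form solution via SVD of the cross-covariance (Umeyama's method).
    """
    src = np.asarray(src, float)
    dst = np.asarray(dst, float)
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    A, B = src - mu_s, dst - mu_d
    cov = B.T @ A / len(src)                 # cross-covariance matrix
    U, S, Vt = np.linalg.svd(cov)
    D = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        D[2, 2] = -1.0                       # force a proper rotation, not a reflection
    R = U @ D @ Vt
    var_s = (A**2).sum() / len(src)
    s = np.trace(np.diag(S) @ D) / var_s
    t = mu_d - s * R @ mu_s
    return s, R, t
```

With noisy correspondences the result is the least-squares similarity; an iterative adjustment would be needed if individual points carry different weights.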

3.7. Global Shape Correction with Polynomial Model (Method A)

3.8. 3D Surface Segmentation (Method B)

3.9. Piecewise Shape Correction (Method C)

4. Data Acquisition System

4.1. Imaging Setup

4.2. System Calibration

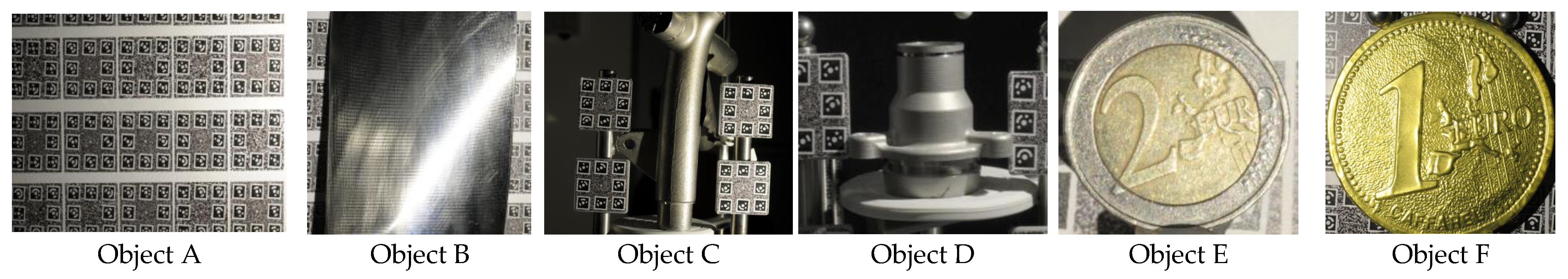

4.3. Testing Object

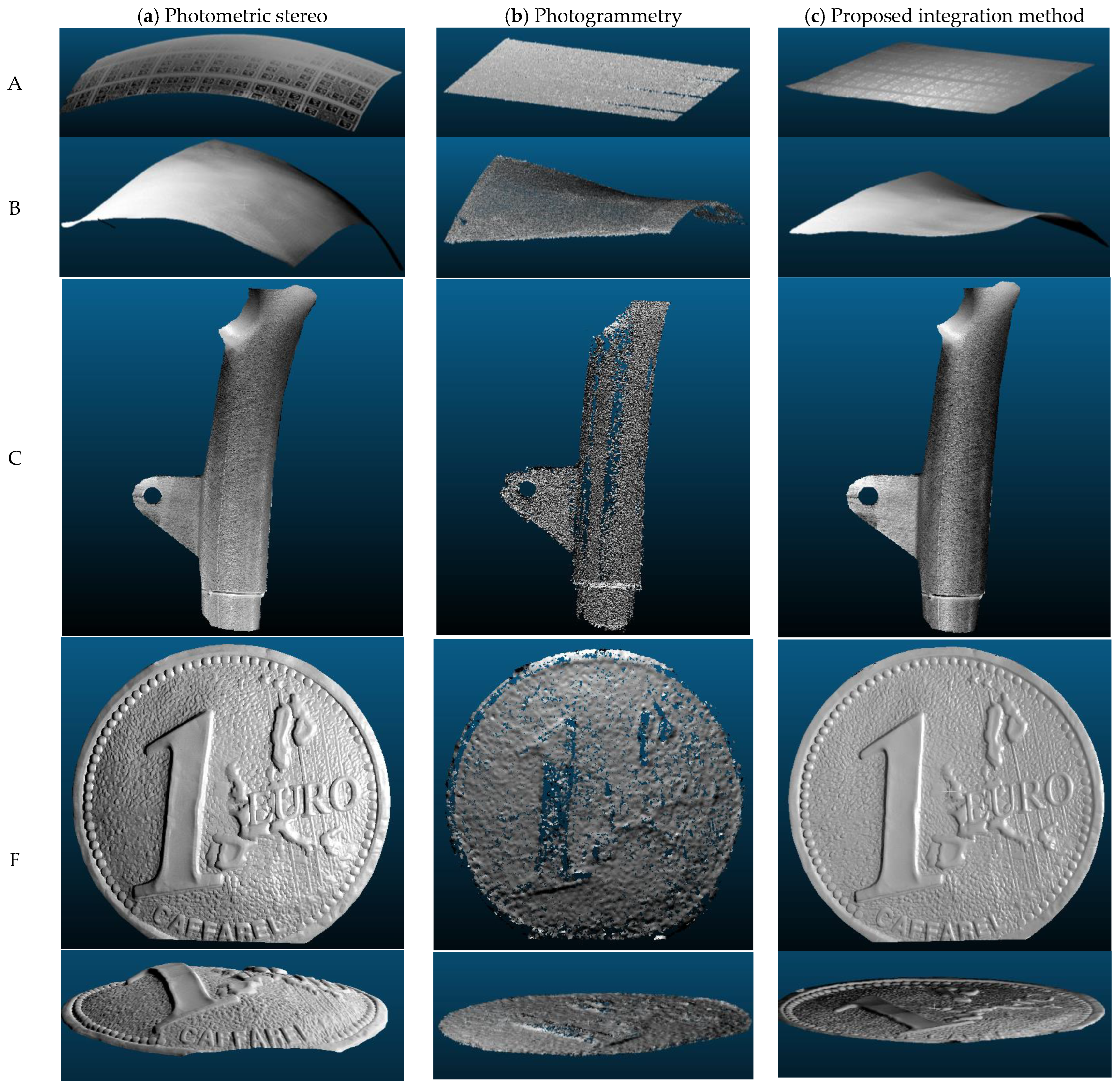

5. Experiments and Discussion

5.1. Low Frequency Evaluation

5.1.1. Cloud-to-Cloud Comparison

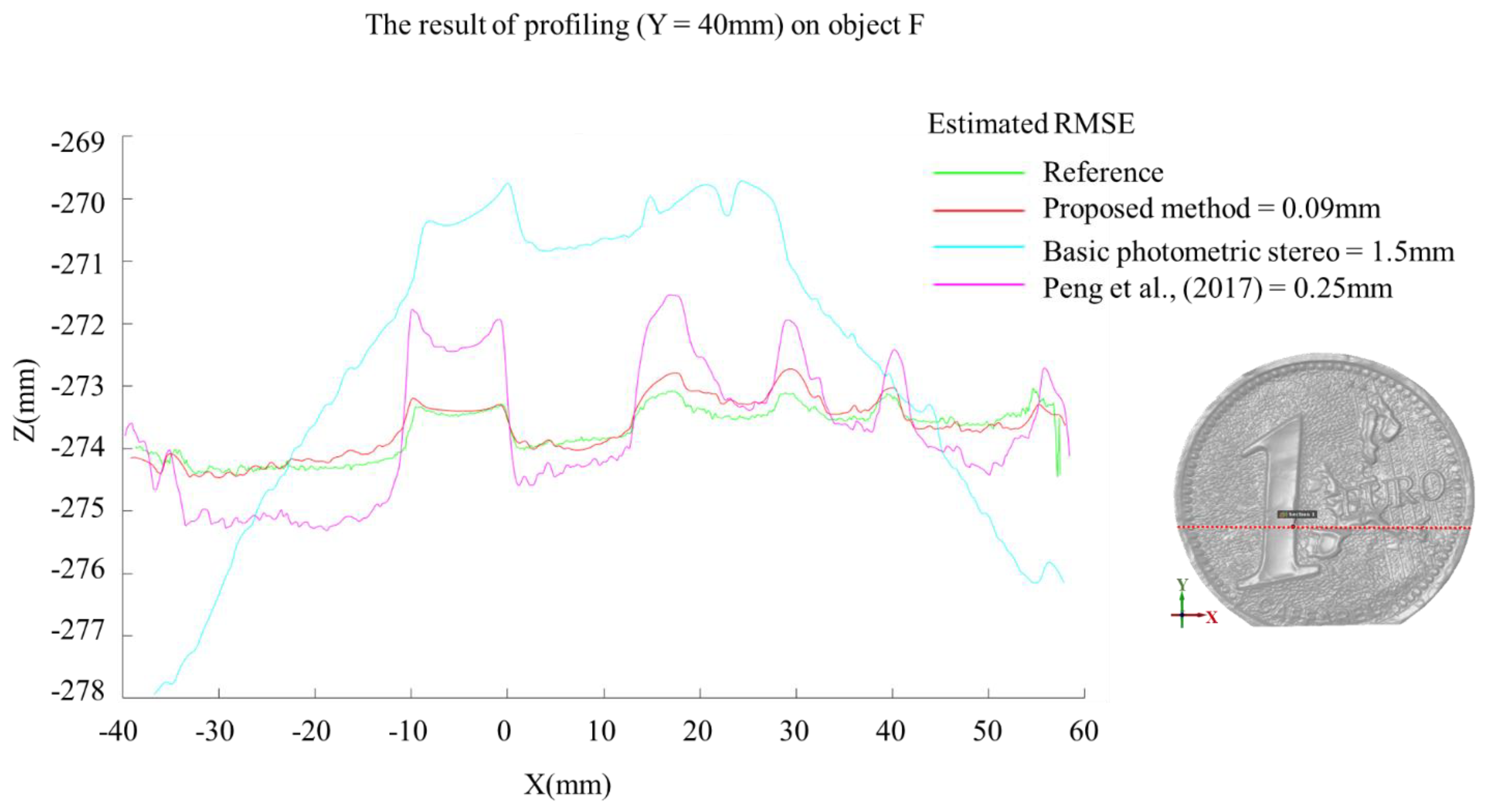
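A cloud-to-cloud comparison typically assigns each point of the evaluated cloud the distance to its nearest neighbour in the reference cloud, after the two clouds have been registered (e.g. with ICP [85]). A minimal brute-force sketch, assuming already registered clouds; the function name is hypothetical, and for large clouds a KD-tree such as `scipy.spatial.cKDTree` is the usual choice.

```python
import numpy as np


def cloud_to_cloud(compared, reference, chunk=1024):
    """Nearest-neighbour distance from each compared point to the reference cloud.

    Brute force, processed in chunks to bound memory use.
    """
    C = np.asarray(compared, float)
    R = np.asarray(reference, float)
    out = np.empty(len(C))
    for i in range(0, len(C), chunk):
        block = C[i:i + chunk]
        d2 = ((block[:, None, :] - R[None, :, :]) ** 2).sum(axis=-1)
        out[i:i + chunk] = np.sqrt(d2.min(axis=1))
    return out
```

Summary statistics such as the mean and standard deviation of these distances are then reported per object.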

5.1.2. Profiling

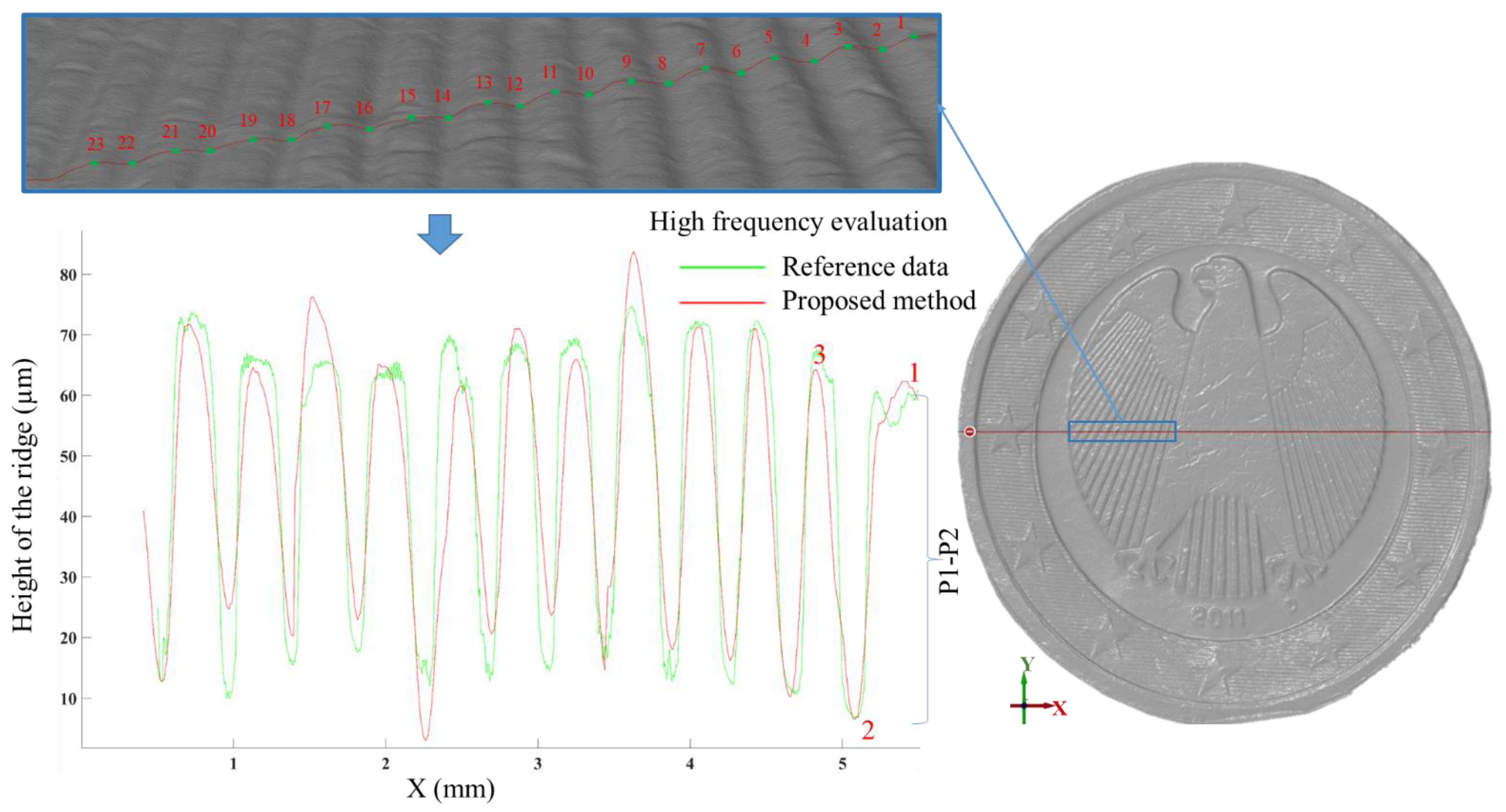

5.2. High Frequency Evaluation

5.3. Comparing against State-of-the-Art

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Hosseininaveh, A.; Yazdan, R.; Karami, A.; Moradi, M.; Ghorbani, F. A low-cost and portable system for 3D reconstruction of texture-less objects. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. ISPRS Arch. 2015, 40, 327.

2. Ahmadabadian, A.H.; Karami, A.; Yazdan, R. An automatic 3D reconstruction system for texture-less objects. Robot. Auton. Syst. 2019, 117, 29–39.

3. Menna, F.; Nocerino, E.; Morabito, D.; Farella, E.M.; Perini, M.; Remondino, F. An open source low-cost automatic system for image-based 3D digitization. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. ISPRS Arch. 2017, 42, 155.

4. Ren, J.; Jian, Z.; Wang, X.; Mingjun, R.; Zhu, L.; Jiang, X. Complex surface reconstruction based on fusion of surface normals and sparse depth measurement. IEEE Trans. Instrum. Meas. 2021, 70, 1–13.

5. Mohammadi, M.; Rashidi, M.; Mousavi, V.; Karami, A.; Yu, Y.; Samali, B. Quality evaluation of digital twins generated based on UAV photogrammetry and TLS: Bridge case study. Remote Sens. 2021, 13, 3499.

6. Barazzetti, L.; Gianinetto, M.; Scaioni, M. Automatic image-based 3D modeling for medical applications. In Proceedings of the 2012 5th International Conference on BioMedical Engineering and Informatics (ICEBEHI), Chongqing, China, 16–18 October 2012; pp. 228–232.

7. Belbachir, A.N.; Hofstätter, M.; Litzenberger, M.; Schön, P. High-speed embedded-object analysis using a dual-line timed-address-event temporal-contrast vision sensor. IEEE Trans. Ind. Electron. 2010, 58, 770–783.

8. Hu, Y.; Wang, S.; Cheng, X.; Xu, C.; Hao, Q. Dynamic deformation measurement of specular surface with deflectometry and speckle digital image correlation. Sensors 2020, 20, 1278.

9. Gao, W.; Haitjema, H.; Fang, F.; Leach, R.; Cheung, C.; Savio, E.; Linares, J. On-machine and in-process surface metrology for precision manufacturing. CIRP Ann. 2019, 68, 843–866.

10. Helle, R.H.; Lemu, H.G. A case study on use of 3D scanning for reverse engineering and quality control. Mater. Today Proc. 2021, 45, 5255–5262.

11. Karami, A.; Menna, F.; Remondino, F.; Varshosaz, M. Exploiting light directionality for image-based 3D reconstruction of non-collaborative surfaces. Photogramm. Rec. 2022, 37, 111–138.

12. Tang, Y.; Li, L.; Wang, C.; Chen, M.; Feng, W.; Zou, X.; Huang, K. Real-time detection of surface deformation and strain in recycled aggregate concrete-filled steel tubular columns via four-ocular vision. Robot. Comput. Integr. Manuf. 2019, 59, 36–46.

13. Rodríguez-Gonzálvez, P.; Rodríguez-Martín, M.; Ramos, L.; González-Aguilera, D. 3D reconstruction methods and quality assessment for visual inspection of welds. Autom. Constr. 2017, 79, 49–58.

14. Huang, S.; Xu, K.; Li, M.; Wu, M. Improved visual inspection through 3D image reconstruction of defects based on the photometric stereo technique. Sensors 2019, 19, 4970.

15. Menna, F.; Troisi, S. Low cost reverse engineering techniques for 3D modelling of propellers. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. ISPRS Arch. 2010, 38, 452–457.

16. Geng, Z.; Bidanda, B. Review of reverse engineering systems—Current state of the art. Virtual Phys. Prototyp. 2017, 12, 161–172.

17. Karami, A.; Battisti, R.; Menna, F.; Remondino, F. 3D digitization of transparent and glass surfaces: State of the art and analysis of some methods. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. ISPRS Arch. 2022, 43, 695–702.

18. Johnson, M.K.; Cole, F.; Raj, A.; Adelson, E.H. Microgeometry capture using an elastomeric sensor. ACM Trans. Graph. 2011, 30, 1–8.

19. Atsushi, K.; Sueyasu, H.; Funayama, Y.; Maekawa, T. System for reconstruction of three-dimensional micro objects from multiple photographic images. Comput. Des. 2011, 43, 1045–1055.

20. Lu, Z.; Cai, L. Accurate three-dimensional measurement for small objects based on the thin-lens model. Appl. Opt. 2020, 59, 6600–6611.

21. Luhmann, T.; Robson, S.; Kyle, S.; Boehm, J. Close-range photogrammetry and 3D imaging. In Close-Range Photogrammetry and 3D Imaging, 3rd ed.; De Gruyter: Berlin, Germany, 2020; p. 684.

22. Ahmadabadian, A.H.; Yazdan, R.; Karami, A.; Moradi, M.; Ghorbani, F. Clustering and selecting vantage images in a low-cost system for 3D reconstruction of texture-less objects. Measurement 2017, 99, 185–191.

23. Santoši, Ž.; Budak, I.; Stojaković, V.; Šokac, M.; Vukelić, Đ. Evaluation of synthetically generated patterns for image-based 3D reconstruction of texture-less objects. Measurement 2019, 147, 106883.

24. Hafeez, J.; Lee, J.; Kwon, S.; Ha, S.; Hur, G.; Lee, S. Evaluating feature extraction methods with synthetic noise patterns for image-based modelling of texture-less objects. Remote Sens. 2020, 12, 3886.

25. Karami, A.; Menna, F.; Remondino, F. Investigating 3D reconstruction of non-collaborative surfaces through photogrammetry and photometric stereo. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, XLIII-B2-2, 519–526.

26. Woodham, R.J. Photometric method for determining surface orientation from multiple images. Opt. Eng. 1980, 19, 191139.

27. Scherr, T. Gradient-Based Surface Reconstruction and the Application to Wind Waves. Master’s Thesis, Ruprecht-Karls-University Heidelberg, Heidelberg, Germany, 2017.

28. Antensteiner, D.; Štolc, S.; Pock, T. A review of depth and normal fusion algorithms. Sensors 2018, 18, 431.

29. Li, M.; Zhou, Z.; Wu, Z.; Shi, B.; Diao, C.; Tan, P. Multi-view photometric stereo: A robust solution and benchmark dataset for spatially varying isotropic materials. IEEE Trans. Image Process. 2020, 29, 4159–4173.

30. Jiddi, S.; Robert, P.; Marchand, E. Detecting specular reflections and cast shadows to estimate reflectance and illumination of dynamic indoor scenes. IEEE Trans. Vis. Comput. 2020, 28, 1249–1260.

31. Wei, M.; Song, Z.; Nie, Y.; Wu, J.; Ji, Z.; Guo, Y.; Xie, H.; Wang, J.; Wang, F.L. Normal-based bas-relief modelling via near-lighting photometric stereo. Comput. Graph. Forum 2020, 39, 204–219.

32. Shi, B.; Mo, Z.; Wu, Z.; Duan, D.; Yeung, S.K.; Tan, P. A benchmark dataset and evaluation for non-Lambertian and uncalibrated photometric stereo. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 271–284.

33. Abzal, A.; Saadatseresht, M.; Varshosaz, M. Development of a novel simplification mask for multi-shot optical scanners. ISPRS J. Photogramm. Remote Sens. 2018, 142, 12–20.

34. Mousavi, V.; Khosravi, M.; Ahmadi, M.; Noori, N.; Haghshenas, S.; Hosseininaveh, A.; Varshosaz, M. The performance evaluation of multi-image 3D reconstruction software with different sensors. Measurement 2018, 120, 1–10.

35. Nicolae, C.; Nocerino, E.; Menna, F.; Remondino, F. Photogrammetry applied to problematic artefacts. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, 40, 451.

36. Menna, F.; Nocerino, E.; Remondino, F.; Dellepiane, M.; Callieri, M.; Scopigno, R. 3D digitization of an heritage masterpiece—A critical analysis on quality assessment. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2016, 41, 675–683.

37. Wallis, R.H. An approach for the space variant restoration and enhancement of images. Proc. Symp. Cur. Math. Pro. Dep. Math. Image Sci. 1976, 6, 2.

38. Gaiani, M.; Remondino, F.; Apollonio, F.I.; Ballabeni, A. An advanced pre-processing pipeline to improve automated photogrammetric reconstructions of architectural scenes. Remote Sens. 2016, 8, 178.

39. Calantropio, A.; Chiabrando, F.; Seymour, B.; Kovacs, E.; Lo, E.; Rissolo, D. Image pre-processing strategies for enhancing photogrammetric 3D reconstruction of underwater shipwreck datasets. Proc. Symp. Cur. Math. Pro. Dep. Math. Image Sci. 2020, 43, 941–948.

40. Lin, H.; Gao, J.; Zhang, G.; Chen, X.; He, Y.; Liu, Y. Review and comparison of high-dynamic range three-dimensional shape measurement techniques. J. Sens. 2017, 2017, 1–11.

41. Palousek, D.; Omasta, M.; Koutny, D.; Bednar, J.; Koutecky, T.; Dokoupil, F. Effect of matte coating on 3D optical measurement accuracy. Opt. Mater. 2015, 40, 1–9.

42. Pereira, J.R.M.; Penz, I.D.L.E.S.; Da Silva, F.P. Effects of different coating materials on three-dimensional optical scanning accuracy. Adv. Mech. Eng. 2019, 11, 1687814019842416.

43. Rostami, M.; Michailovich, O.; Wang, Z. Gradient-based surface reconstruction using compressed sensing. In Proceedings of the 2012 19th IEEE International Conference on Image Processing (ICIP), Orlando, FL, USA, 30 September–3 October 2012; pp. 913–916.

44. Solomon, F.; Ikeuchi, K. Extracting the shape and roughness of specular lobe objects using four light photometric stereo. IEEE Trans. Pattern Anal. Mach. Intell. 1996, 18, 449–454.

45. Barsky, S.; Petrou, M. The 4-source photometric stereo technique for three-dimensional surfaces in the presence of highlights and shadows. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 1239–1252.

46. Verbiest, F.; Van Gool, L. Photometric stereo with coherent outlier handling and confidence estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 24–26 June 2008; pp. 1–8.

47. Sunkavalli, K.; Zickler, T.; Pfister, H. Visibility subspaces: Uncalibrated photometric stereo with shadows. In Proceedings of the European Conference on Computer Vision, Heraklion, Greece, 5–11 September 2010; Springer: Berlin/Heidelberg, Germany, 2010; Volume 6312, pp. 251–264.

48. MacDonald, L.W. Colour and directionality in surface reflectance. Proc. Conf. Artif. Intell. Simul. Behav. AISB. 2014, 50, 1175–1251.

49. MacDonald, L.; Toschi, I.; Nocerino, E.; Hess, M.; Remondino, F.; Robson, S. Accuracy of 3D reconstruction in an illumination dome. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. ISPRS Arch. 2016, 41, 69–76.

50. Quéau, Y.; Wu, T.; Lauze, F.; Durou, J.-D.; Cremers, D. A non-convex variational approach to photometric stereo under inaccurate lighting. Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit. 2017, 350–359.

51. Peng, S.; Haefner, B.; Quéau, Y.; Cremers, D. Depth super-resolution meets uncalibrated photometric stereo. In Proceedings of the 2017 IEEE International Conference on Computer Vision Workshop (ICCVW), Venice, Italy, 22–29 October 2017; pp. 2961–2968.

52. Cho, D.; Matsushita, Y.; Tai, Y.-W.; Kweon, I.S. Semi-calibrated photometric stereo. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 232–245.

53. Chandraker, M.; Agarwal, S.; Kriegman, D. Shadowcuts: Photometric stereo with shadows. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 18–23 June 2007; pp. 1–8.

54. Chen, G.; Han, K.; Wong, K.Y.K. PS-FCN: A flexible learning framework for photometric stereo. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–18.

55. Chung, H.-S.; Jia, J. Efficient photometric stereo on glossy surfaces with wide specular lobes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

56. Georghiades, A.S. Incorporating the Torrance and Sparrow model of reflectance in uncalibrated photometric stereo. In Proceedings of the IEEE International Conference on Computer Vision, Nice, France, 13–16 October 2003; pp. 816–823.

57. Nam, G.; Lee, J.H.; Gutierrez, D.; Kim, M.H. Practical SVBRDF acquisition of 3D objects with unstructured flash photography. ACM Trans. Graph. 2018, 37, 1–12.

58. Yeung, S.-K.; Wu, T.-P.; Tang, C.-K.; Chan, T.F.; Osher, S.J. Normal estimation of a transparent object using a video. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 37, 890–897.

59. Shi, B.; Tan, P.; Matsushita, Y.; Ikeuchi, K. Bi-polynomial modeling of low-frequency reflectances. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 1078–1091.

60. Otani, H.; Komuro, T.; Yamamoto, S.; Tsumura, N. Bivariate BRDF estimation based on compressed sensing. In Proceedings of the Computer Graphics International Conference, Calgary, Canada, 17–20 June 2019; Springer: Cham, Switzerland; pp. 483–489.

61. Lu, F.; Chen, X.; Sato, I.; Sato, Y. SymPS: BRDF symmetry guided photometric stereo for shape and light source estimation. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 221–234.

62. Boss, M.; Jampani, V.; Kim, K.; Lensch, H.P.; Kautz, J. Two-shot spatially-varying BRDF and shape estimation. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 3981–3990.

63. Zheng, Q.; Kumar, A.; Shi, B.; Pan, G. Numerical reflectance compensation for non-Lambertian photometric stereo. IEEE Trans. Image Process. 2019, 28, 3177–3191.

64. Wang, X.; Jian, Z.; Ren, M. Non-Lambertian photometric stereo network based on inverse reflectance model with collocated light. IEEE Trans. Image Process. 2020, 29, 6032–6042.

65. Wen, S.; Zheng, Y.; Lu, F. Polarization guided specular reflection separation. IEEE Trans. Image Process. 2021, 30, 7280–7291.

66. Fan, H.; Qi, L.; Wang, N.; Dong, J.; Chen, Y.; Yu, H. Deviation correction method for close-range photometric stereo with nonuniform illumination. Opt. Eng. 2017, 56, 103102.

67. Smithwick, Q.Y.J.; Seibel, E.J. Depth enhancement using a scanning fiber optical endoscope. Opt. Biopsy IV 2002, 4613, 222–234.

68. Nehab, D.; Rusinkiewicz, S.; Davis, J.; Ramamoorthi, R. Efficiently combining positions and normals for precise 3D geometry. ACM Trans. Graph. 2005, 24, 536–543.

69. Esteban, C.H.; Vogiatzis, G.; Cipolla, R. Multiview photometric stereo. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 548–554.

70. Kaya, B.; Kumar, S.; Oliveira, C.; Ferrari, V.; Van Gool, L. Uncertainty-aware deep multi-view photometric stereo. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 12591–12601.

71. Bylow, E.; Maier, R.; Kahl, F.; Olsson, C. Combining depth fusion and photometric stereo for fine-detailed 3D models. In Scandinavian Conference on Image Analysis (SCIA); Springer: Cham, Switzerland, 2019; pp. 261–274.

72. Zollhöfer, M.; Stotko, P.; Görlitz, A.; Theobalt, C.; Nießner, M.; Klein, R.; Kolb, A. State of the art on 3D reconstruction with RGB-D cameras. Comput. Graph. Forum 2018, 37, 625–652.

73. Park, J.; Sinha, S.N.; Matsushita, Y.; Tai, Y.W.; So Kweon, I. Multiview photometric stereo using planar mesh parameterization. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Sydney, Australia, 1–8 December 2013; pp. 1161–1168.

74. Park, J.; Sinha, S.N.; Matsushita, Y.; Tai, Y.W.; Kweon, I.S. Robust multi-view photometric stereo using planar mesh parameterization. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1591–1604.

75. Logothetis, F.; Mecca, R.; Cipolla, R. A differential volumetric approach to multi-view photometric stereo. Proc. ICCV 2019, 1052–1061.

76. Ren, M.; Ren, J.; Wang, X.; Gao, F.; Zhu, L.; Yao, Z. Multi-scale measurement of high-reflective surfaces by integrating near-field photometric stereo with touch trigger probe. CIRP Ann. 2020, 69, 489–492.

77. Kaya, B.; Kumar, S.; Sarno, F.; Ferrari, V.; Van Gool, L. Neural radiance fields approach to deep multi-view photometric stereo. IEEE Winter Conf. Appl. Comput. Vis. 2022, 1965–1977.

78. MacDonald, L.W.; Ahmadabadian, A.H.; Robson, S. Determining the coordinates of lamps in an illumination dome. Videometrics Range Imaging Appl. 2015, 9528, 95280I.

79. Brown, D.C. Close-range camera calibration. Photogramm. Eng. 1971, 37, 855–866.

80. Mecca, R.; Wetzler, A.; Bruckstein, A.M.; Kimmel, R. Near field photometric stereo with point light sources. SIAM J. Imaging Sci. 2014, 7, 2732–2770.

81. Mikhail, E.M.; Bethel, J.S.; McGlone, J.C. Introduction to Modern Photogrammetry; John Wiley & Sons: Hoboken, NJ, USA, 2001; Volume 29, pp. 329–330.

82. Murtiyoso, A.; Grussenmeyer, P. Virtual disassembling of historical edifices: Experiments and assessments of an automatic approach for classifying multi-scalar point clouds into architectural elements. Sensors 2020, 20, 2161.

83. Xiong, Y.; Chakrabarti, A.; Basri, R.; Gortler, S.J.; Jacobs, D.W.; Zickler, T. From shading to local shape. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 37, 67–79.

84. Hexagon. AICON PrimeScan Scanner. Available online: https://www.hexagonmi.com (accessed on 30 August 2022).

85. Besl, P.J.; McKay, N.D. A method for registration of 3D shapes. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 239–256.

| Object | Size (mm) | Aperture | Exposure Time (s) | Focal Length (mm) | GSD (mm) |
|---|---|---|---|---|---|
| A | 240 × 150 | f/16 | 1/8 | 60 | 0.02 |
| B | 160 × 200 × 30 | f/16 | 1/8 | 60 | 0.02 |
| C | 140 × 50 × 40 | f/22 | 1/4 | 60 | 0.02 |
| D | 50 × 50 × 40 | f/22 | 1/8 | 60 | 0.02 |
| E | 25.75 × 25.75 × 2.2 | f/22 | 1/8 | 105 | 0.01 |
| F | 100 × 100 × 10 | f/22 | 1/30 | 60 | 0.02 |

| | P1-P2 | P2-P3 | P3-P4 | P4-P5 | P5-P6 | P6-P7 | P7-P8 | P8-P9 | P9-P10 | P10-P11 | P11-P12 | P12-P13 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Reference | 53.79 | 60 | 54.03 | 59.73 | 58.53 | 58.03 | 56.03 | 58.63 | 57.7 | 51.8 | 52.85 | 52.05 |
| Proposed | 55.683 | 57.523 | 53.52 | 60.4 | 54.46 | 54.63 | 53.11 | 62.07 | 65.59 | 51.35 | 41.55 | 46.61 |
| Residual | 1.893 | −2.477 | −0.51 | 0.67 | −4.07 | −3.4 | −2.92 | 3.44 | 7.89 | −0.45 | −11.3 | −5.44 |

| | P13-P14 | P14-P15 | P15-P16 | P16-P17 | P17-P18 | P18-P19 | P19-P20 | P20-P21 | P21-P22 | P22-P23 |
|---|---|---|---|---|---|---|---|---|---|---|
| Reference | 55.56 | 56.56 | 56.8 | 52.77 | 46.97 | 47.7 | 49.8 | 49.4 | 55 | 63.4 |
| Proposed | 50.44 | 41 | 58.95 | 61.57 | 41.24 | 52.39 | 55 | 44.68 | 41.99 | 48.72 |
| Residual | −5.12 | −15.36 | 2.15 | 8.8 | −5.73 | 4.69 | 5.2 | −4.72 | −13.01 | −14.68 |

| Mean of Residuals | Maximum Residual | RMSE | MAE |
|---|---|---|---|
| −2.46 | −15.36 | 1.5 | 5.48 |
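In the profile tables, each residual is the proposed value minus the reference value (e.g. 55.683 − 53.79 = 1.893 for P1-P2). The summary statistics can be computed from such paired values as sketched below; the formulas are the standard textbook definitions and are assumed, not confirmed, to match those used for the table, and the function name is hypothetical.

```python
import numpy as np


def residual_summary(reference, measured):
    """Residuals (measured - reference) and standard summary statistics."""
    res = np.asarray(measured, float) - np.asarray(reference, float)
    return {
        "mean": res.mean(),
        "max_abs": res[np.argmax(np.abs(res))],   # signed residual with largest magnitude
        "rmse": np.sqrt(np.mean(res**2)),         # root-mean-square error
        "mae": np.mean(np.abs(res)),              # mean absolute error
    }
```

By construction, the RMSE computed this way is never smaller than the MAE of the same residuals.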

| Object | Proposed (Method A) | Xiong et al. [83] | Quéau et al. [50] | Peng et al. [51] |
|---|---|---|---|---|
| D | 0.54 | 1.24 | 1.3 | 1.1 |
| E | 0.025 | 0.22 | 0.14 | 0.13 |
| F | 0.16 | 2.6 | 1.7 | 0.71 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Karami, A.; Menna, F.; Remondino, F. Combining Photogrammetry and Photometric Stereo to Achieve Precise and Complete 3D Reconstruction. Sensors 2022, 22, 8172. https://doi.org/10.3390/s22218172
