Kids’ Emotion Recognition Using Various Deep-Learning Models with Explainable AI

Abstract

1. Introduction

1.1. Facial Emotions

1.2. Need for Facial Emotion Recognition

1.3. Use of FER in Online Teaching

2. Literature Review

3. Motivation

4. Contribution of the Paper

- This study improved the accuracy achieved on LIRIS and compared it with the accuracy achieved on the authors’ created dataset, a result that no other study in the field of FER has obtained.

- The literature review revealed a lack of emotion datasets of children aged 7 to 10; the authors therefore proposed their own dataset of children in this age group.

- The authors used seven different CNNs for classification and performed a comparative analysis on both the LIRIS and author-created datasets.

- Because children’s faces differ from those of adults, as reported in [17], the authors also classified emotions with the seven CNNs using 468 3D facial landmark points and compared the results on the LIRIS and authors’ proposed datasets.

- This study also examined how the seven CNNs recognize emotions on the datasets by applying the XAI techniques Grad-CAM, Grad-CAM++ and SoftGrad.
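Grad-CAM, the first of the XAI techniques listed above, weights each feature map of a convolutional layer by the spatially averaged gradient of the target emotion’s score and keeps only the positive part of the weighted sum. The following is a minimal NumPy sketch of that core step, assuming the activations of the chosen layer and the gradients of the class score with respect to them have already been extracted from the trained CNN (for example with the framework’s automatic differentiation); the function `grad_cam` and the toy arrays are hypothetical illustrations, not the authors’ implementation.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM heatmap for one convolutional layer (illustrative sketch).

    activations: feature maps A with shape (K, H, W) for K channels.
    gradients:   d(y_c)/dA for the target class score y_c, same shape.
    Returns an (H, W) map scaled to [0, 1].
    """
    if activations.shape != gradients.shape:
        raise ValueError("activations and gradients must have the same shape")
    # Channel weights: global-average-pool the gradients over the spatial axes.
    weights = gradients.mean(axis=(1, 2))                  # shape (K,)
    # Weighted sum of the feature maps; ReLU keeps only positive evidence.
    cam = np.maximum(np.tensordot(weights, activations, axes=1), 0.0)  # (H, W)
    # Normalise for display; an all-zero map is returned unchanged.
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Hypothetical toy example with two 3x3 feature maps.
A = np.zeros((2, 3, 3))
A[0, 0, 0] = 1.0   # channel 0 fires in the top-left corner
A[1, 2, 2] = 1.0   # channel 1 fires in the bottom-right corner
G = np.zeros((2, 3, 3))
G[0] += 1.0        # the class score increases with channel 0
G[1] -= 1.0        # and decreases with channel 1
heatmap = grad_cam(A, G)
print(heatmap)
```

In practice the map is upsampled to the input resolution and overlaid on the face crop; Grad-CAM++ differs mainly in how the channel weights are computed.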

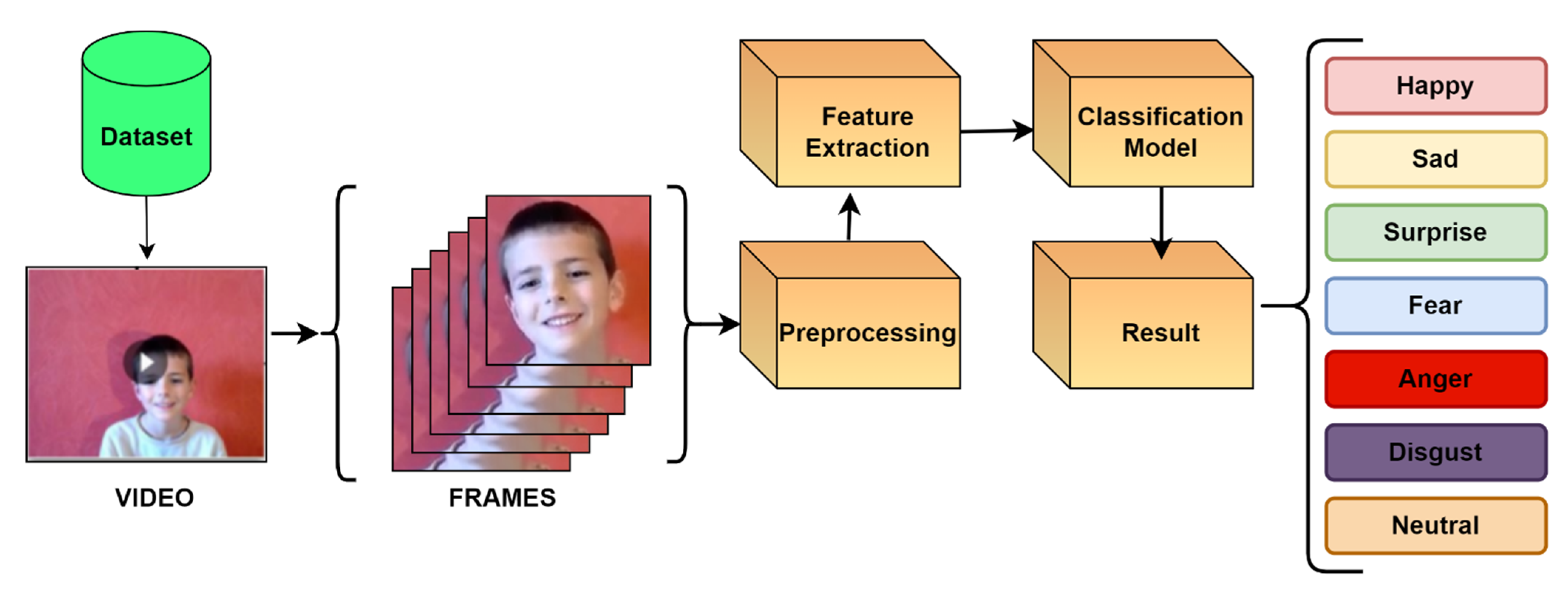

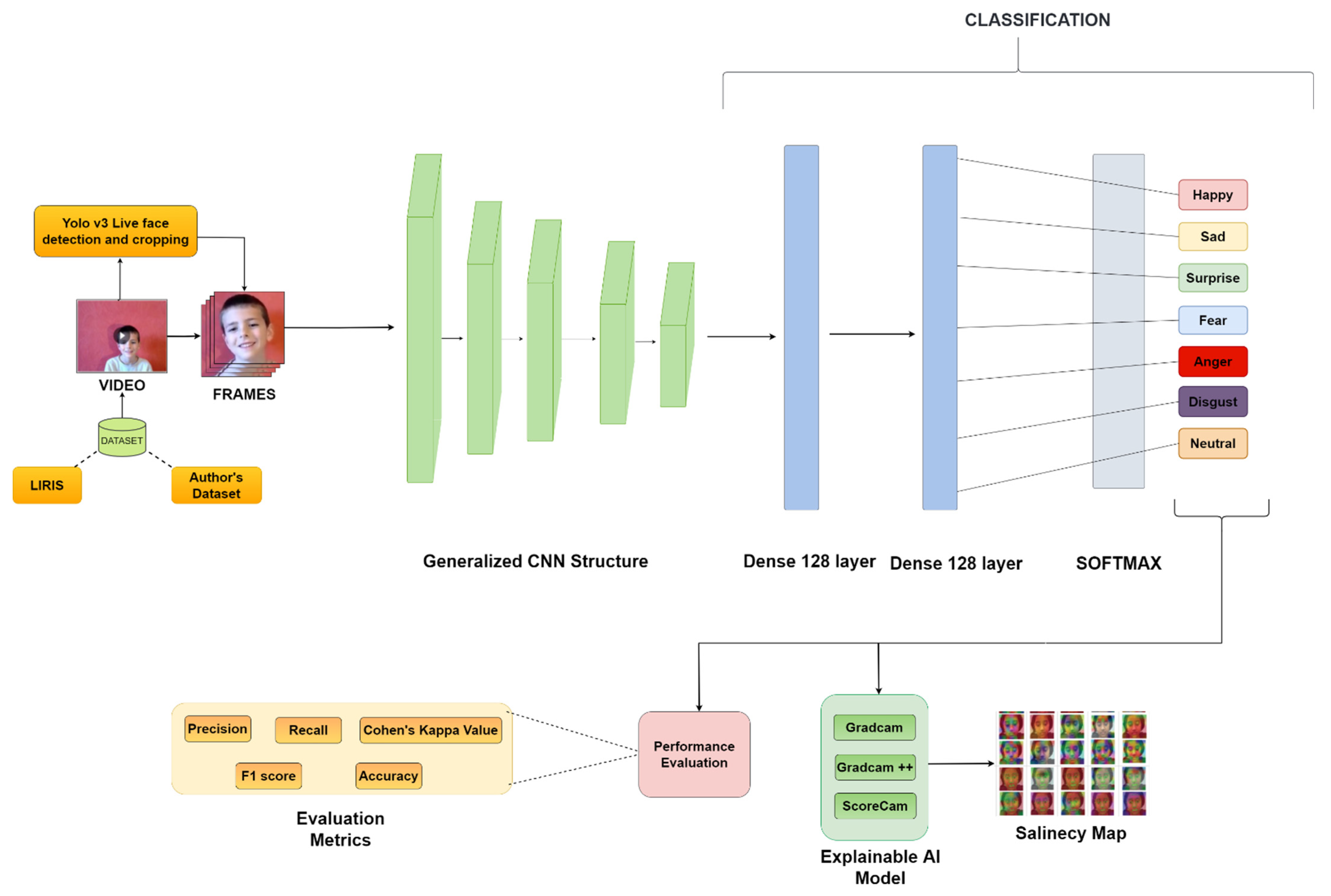

5. Proposed Model

6. Breakdown of Proposed Emotion Recognition in Brief

6.1. Dataset

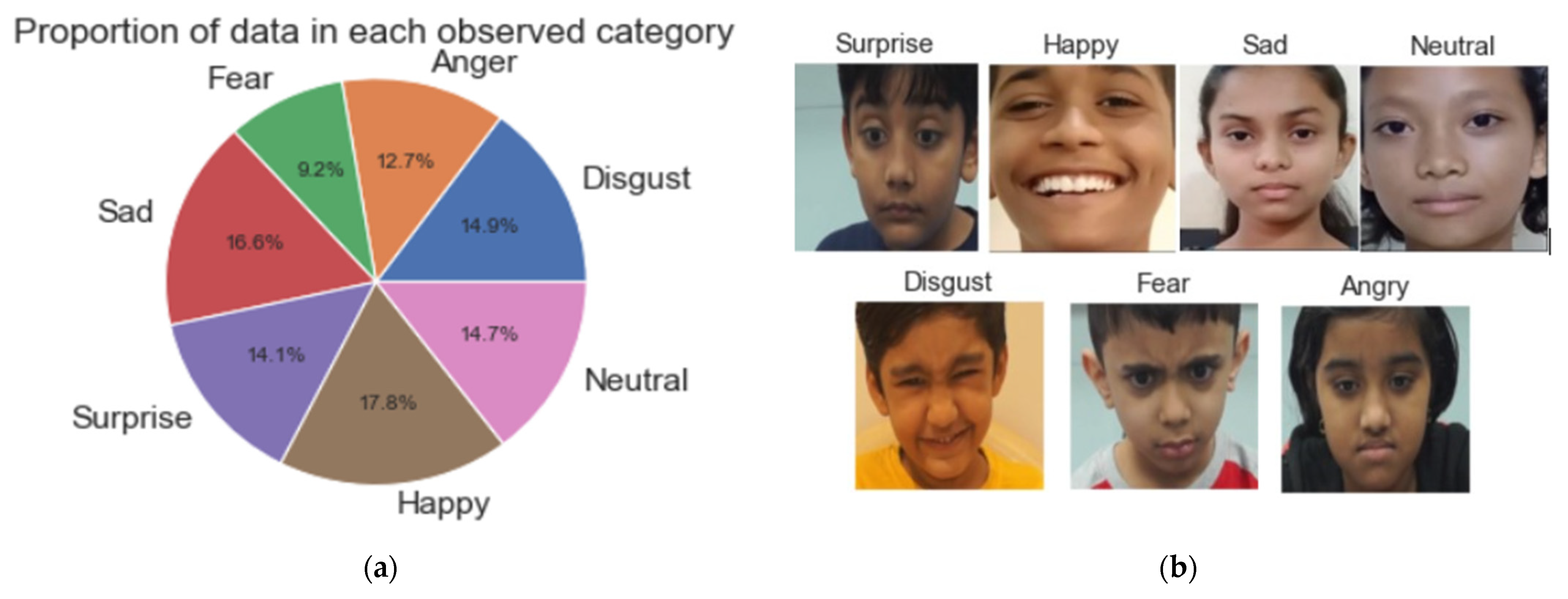

6.1.1. LIRIS Dataset

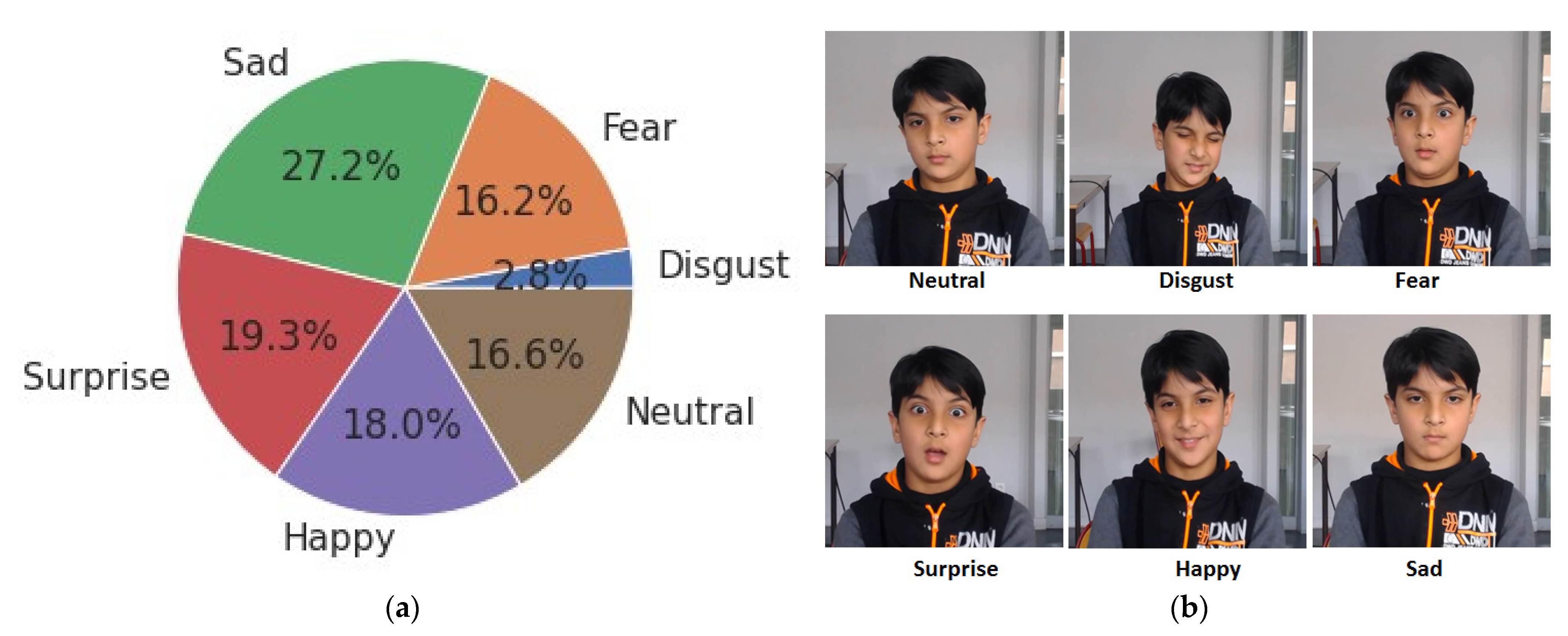

6.1.2. Author Dataset

6.2. Preprocessing for Visual Features

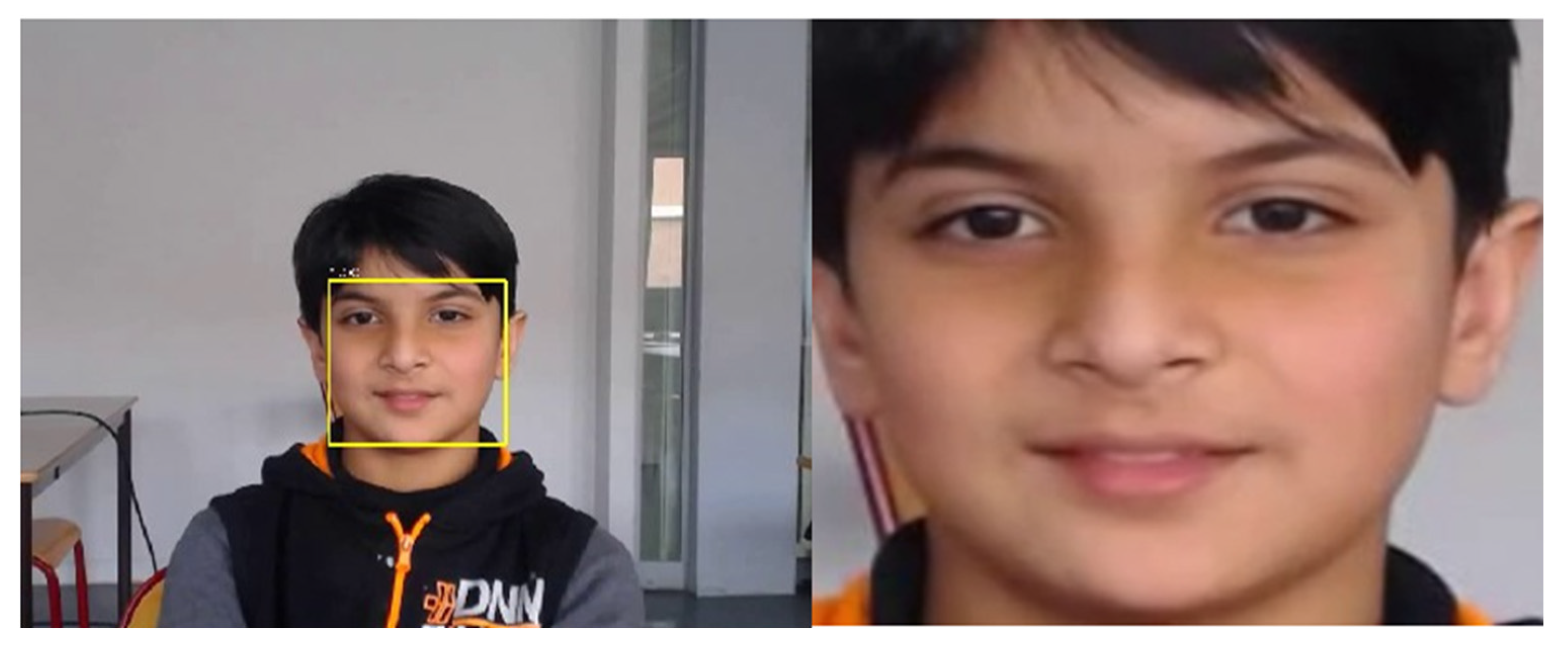

6.2.1. Face Detection

6.2.2. Face Cropping

6.2.3. Face 3D Landmark Generation

6.2.4. Data Augmentation

6.3. Deep Models Used

6.3.1. VGGNets

6.3.2. ResNets

6.3.3. DenseNets

6.3.4. InceptionV3

6.3.5. InceptionResNetV2

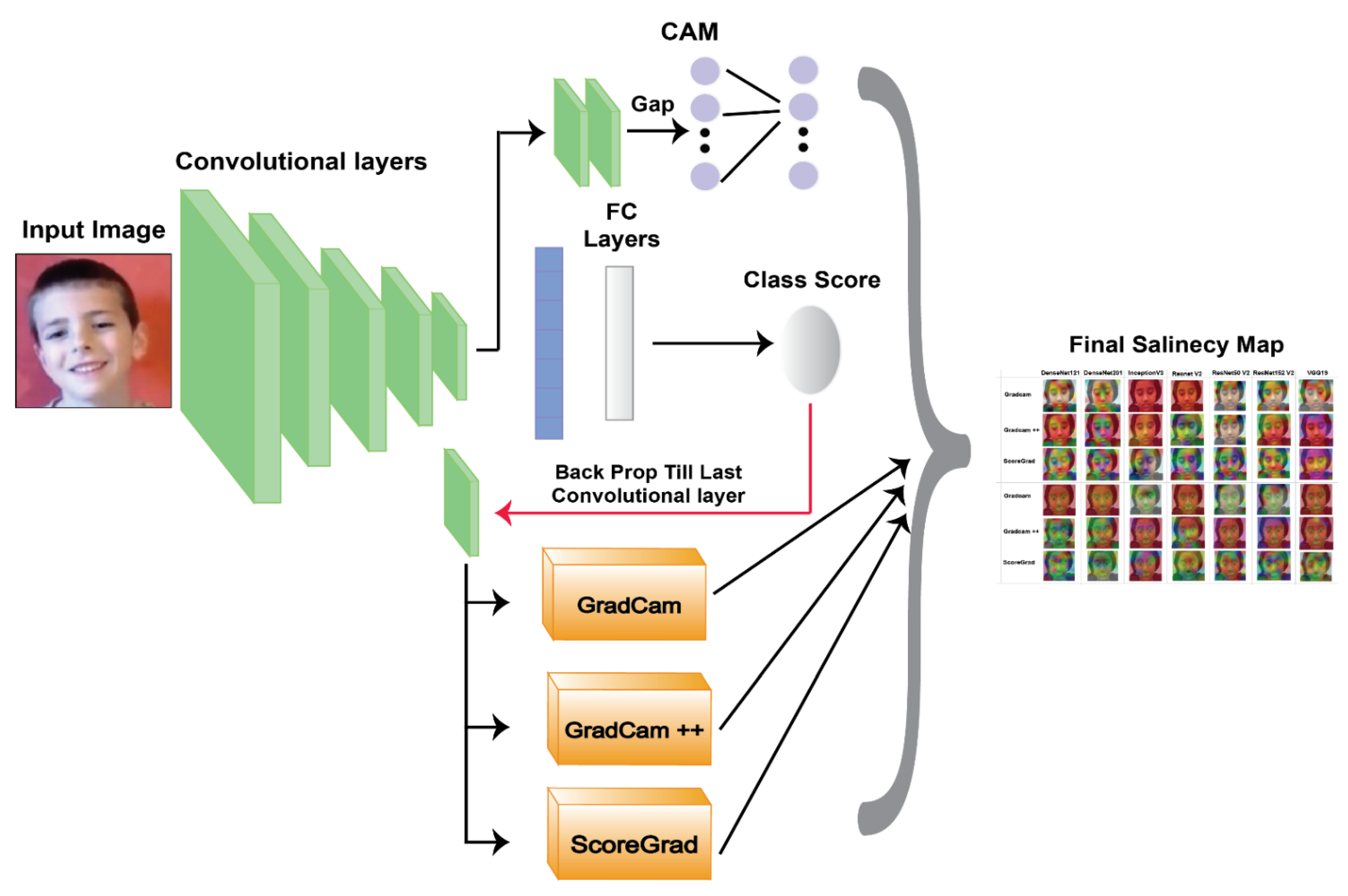

6.4. Explainable AI

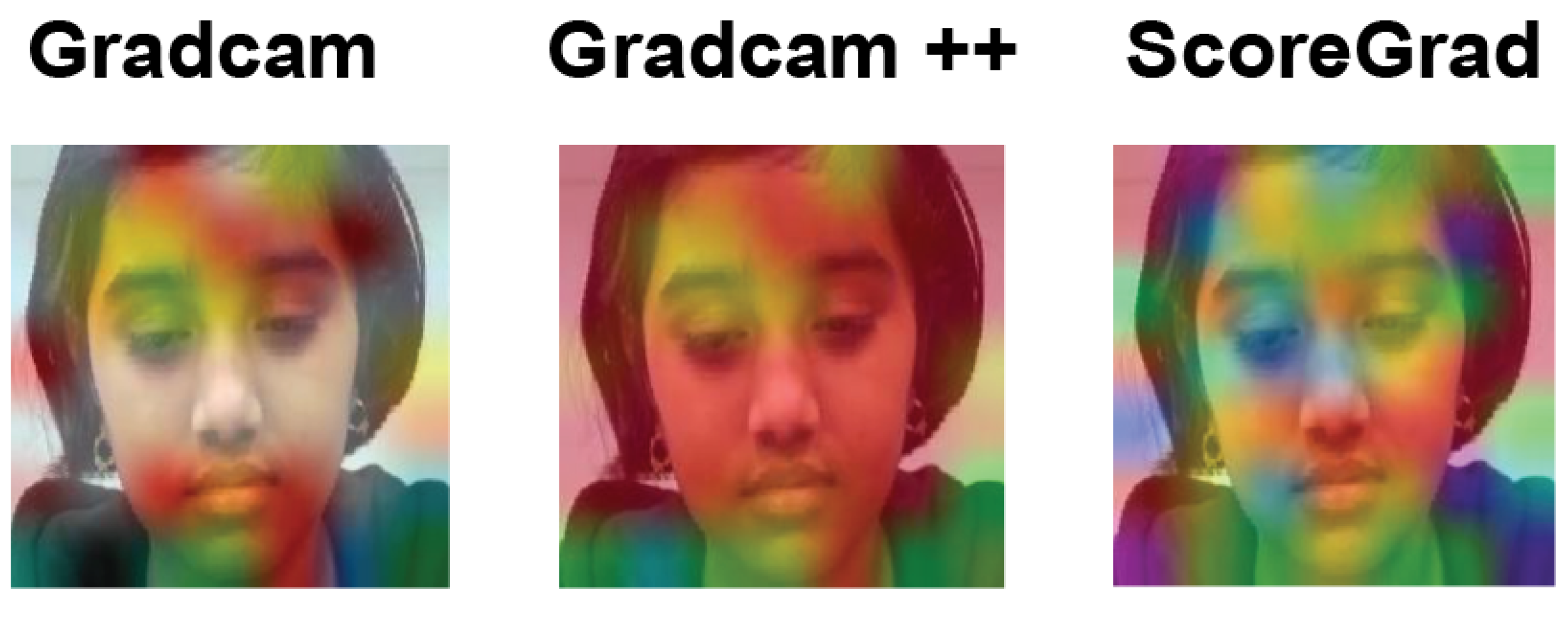

6.4.1. Grad-CAM

6.4.2. Grad-CAM++

6.4.3. ScoreGrad

7. Experimental Results

7.1. Results of Deep-Learning Models

7.2. Explaining Explainable AI Visualizations

8. Experimental Results

9. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Dalvi, C.; Rathod, M.; Patil, S.; Gite, S.; Kotecha, K. A Survey of AI-Based Facial Emotion Recognition: Features, ML DL Techniques, Age-Wise Datasets and Future Directions. IEEE Access 2021, 9, 165806–165840. [Google Scholar] [CrossRef]

- Mahendar, M.; Malik, A.; Batra, I. A Comparative Study of Deep Learning Techniques for Emotion Estimation Based on E-Learning Through Cognitive State Analysis; Springer: Berlin/Heidelberg, Germany, 2021; pp. 226–235. [Google Scholar] [CrossRef]

- Khosravi, H.; Shum, S.B.; Chen, G.; Conati, C.; Tsai, Y.S.; Kay, J.; Knight, S.; Martinez-Maldonado, R.; Sadiq, S.; Gašević. Student Engagement Detection Using Emotion Analysis, Eye Tracking and Head Movement with Machine Learning. September 2019. Available online: https://ui.adsabs.harvard.edu/abs/2019arXiv190912913S (accessed on 19 August 2022).

- Khosravi, H.; Shum, S.B.; Chen, G.; Conati, C.; Tsai, Y.-S.; Kay, J.; Knight, S.; Martinez-Maldonado, R.; Sadiq, S.; Gašević, D. Explainable Artificial Intelligence in education. Comput. Educ. Artif. Intell. 2022, 3, 100074. [Google Scholar] [CrossRef]

- Khalfallah, J.; Slama, J.B.H. Facial Expression Recognition for Intelligent Tutoring Systems in Remote Laboratories Platform. Procedia Comput. Sci. 2015, 73, 274–281. [Google Scholar] [CrossRef]

- L.B, K.; Gg, L.P. Student Emotion Recognition System (SERS) for e-learning Improvement Based on Learner Concentration Metric. Procedia Comput. Sci. 2016, 85, 767–776. [Google Scholar] [CrossRef]

- Bahreini, K.; Nadolski, R.; Westera, W. Towards real-time speech emotion recognition for affective e-learning. Educ. Inf. Technol. 2016, 21, 1367–1386. [Google Scholar] [CrossRef]

- Bahreini, K.; Nadolski, R.; Westera, W. Data Fusion for Real-time Multimodal Emotion Recognition through Webcams and Microphones in E-Learning. Int. J. Hum. -Comput. Interact. 2016, 32, 415–430. [Google Scholar] [CrossRef]

- Sun, A.; Li, Y.-J.; Huang, Y.-M.; Li, Q. Using Facial Expression to Detect Emotion in E-learning System: A Deep Learning Method. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); LNCS Springer: Cape Town, South Africa, 2017; Volume 10676, pp. 446–455. [Google Scholar] [CrossRef]

- Yang, D.; Alsadoon, A.; Prasad, P.; Singh, A.; Elchouemi, A. An Emotion Recognition Model Based on Facial Recognition in Virtual Learning Environment. Procedia Comput. Sci. 2018, 125, 2–10. [Google Scholar] [CrossRef]

- Hook, J.; Noroozi, F.; Toygar, O.; Anbarjafari, G. Automatic speech based emotion recognition using paralinguistics features. Bull. Pol. Acad. Sci. Tech. Sci. 2019, 67, 479–488. [Google Scholar] [CrossRef]

- Meuwissen, A.S.; Anderson, J.E.; Zelazo, P.D. The Creation and Validation of the Developmental Emotional Faces Stimulus Set. Behav. Res. Methods 2017, 49, 3960. [Google Scholar] [CrossRef] [PubMed]

- Egger, H.L.; Pine, D.S.; Nelson, E.; Leibenluft, E.; Ernst, M.; Towbin, K.E.; Angold, A. The NIMH Child Emotional Faces Picture Set (NIMH-ChEFS): A new set of children’s facial emotion stimuli. Int. J. Methods Psychiatr. Res. 2011, 20, 145–156. [Google Scholar] [CrossRef] [PubMed]

- LoBue, V.; Thrasher, C. The Child Affective Facial Expression (CAFE) set: Validity and reliability from untrained adults. Front. Psychol. 2014, 5, 1532. [Google Scholar] [CrossRef] [PubMed]

- Nojavanasghari, B.; Baltrušaitis, T.; Hughes, C.E.; Morency, L.-P. EmoReact: A multimodal approach and dataset for recognizing emotional responses in children. In Proceedings of the ICMI 2016—18th ACM International Conference on Multimodal Interaction, Tokyo, Japan, 12–16 November 2016; pp. 137–144. [Google Scholar] [CrossRef]

- Khan, R.A.; Crenn, A.; Meyer, A.; Bouakaz, S. A novel database of children’s spontaneous facial expressions (LIRIS-CSE). Image Vis. Comput. 2019, 83–84, 61–69. [Google Scholar] [CrossRef]

- Silvers, J.A.; McRae, K.; Gabrieli, J.D.E.; Gross, J.J.; Remy, K.A.; Ochsner, K.N. Age-Related Differences in Emotional Reactivity, Regulation, and Rejection Sensitivity in Adolescence. Emotion 2012, 12, 1235–1247. [Google Scholar] [CrossRef] [PubMed]

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. April 2018. Available online: https://doi.org/10.48550/arxiv.1804.02767 (accessed on 1 October 2022). [CrossRef]

- Siam, A.I.; Soliman, N.F.; Algarni, A.D.; El-Samie, F.E.A.; Sedik, A. Deploying Machine Learning Techniques for Human Emotion Detection. Comput. Intell. Neurosci. 2022, 2022, 8032673. [Google Scholar] [CrossRef] [PubMed]

- Ouanan, H.; Ouanan, M.; Aksasse, B. Facial landmark localization: Past, present and future. In Proceedings of the 2016 4th IEEE International Colloquium on Information Science and Technology (CiSt), Tangier, Morocco, 24–26 October 2016; pp. 487–493. [Google Scholar] [CrossRef]

- Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. arXiv 2020, arXiv:1905.11946. [Google Scholar] [CrossRef]

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. September 2014. Available online: https://ui.adsabs.harvard.edu/abs/2014arXiv1409.1556S (accessed on 19 August 2022).

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. arXiv 2015, arXiv:1512.03385. [Google Scholar] [CrossRef]

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269. [Google Scholar] [CrossRef]

- Adhinata, F.D.; Rakhmadani, D.P.; Wibowo, M.; Jayadi, A. A Deep Learning Using DenseNet201 to Detect Masked or Non-masked Face. JUITA J. Inform. 2021, 9, 115. [Google Scholar] [CrossRef]

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826. [Google Scholar] [CrossRef]

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning. In Proceedings of the 31st AAAI Conference on Artificial Intelligence, AAAI, San Francisco, CA, USA, 4–9 February 2017; pp. 4278–4284. [Google Scholar] [CrossRef]

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. Int. J. Comput. Vis. 2020, 128, 336–359. [Google Scholar] [CrossRef]

- Chattopadhay, A.; Sarkar, A.; Howlader, P.; Balasubramanian, V.N. Grad-CAM++: Improved Visual Explanations for Deep Convolutional Networks. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision, WACV 2018, Lake Tahoe, NV, USA, 12–15 March 2018; pp. 839–847. [Google Scholar] [CrossRef]

- Wang, H.; Wang, Z.; Du, M.; Yang, F.; Zhang, Z.; Ding, S.; Mardziel, P.; Hu, X. Score-CAM: Score-Weighted Visual Explanations for Convolutional Neural Networks. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, New Orleans, LA, USA, 19–20 June 2019; pp. 111–119. [Google Scholar] [CrossRef]

- Omeiza, D.; Speakman, S.; Cintas, C.; Weldemariam, K. Smooth Grad-CAM++: An Enhanced Inference Level Visualization Technique for Deep Convolutional Neural Network Models. arXiv 2019, arXiv:1908.01224. [Google Scholar] [CrossRef]

| Reference | Methodology | Modality Used | Issues | Accuracy |
|---|---|---|---|---|
| [5] | Used the JavaScript library clmtrackr to detect facial coordinates, then applied a machine-learning algorithm to identify emotions such as anger, happiness, sadness and surprise | Live video of facial expressions, used to detect emotions while students do lab work online | The approach identifies emotions, but further use of the identified emotions is not addressed | N/A |
| [6] | Developed a framework that detects eye and head movements to estimate concentration levels and sentiment during ongoing teaching sessions | Live video of eye and head movements during an ongoing lecture | The approach identifies emotions, but further use of the identified emotions is not addressed | 87% |
| [7] | Captured audio, applied sentence-level segmentation and used a WEKA classifier for emotion classification | Audio recordings, analyzed to determine learning sentiment | Classification accuracy could be improved further with other classifiers | 96% |
| [8] | Used webcam and microphone input and applied data-fusion techniques to determine learning emotions | Live audio and video, used to determine the learner’s mood during an ongoing class | The classification outcome is given to the user, not to the teacher | 98.6% |
| [9] | Used a CNN to detect learning emotions in online tutoring, trained and tested on the CK+, JAFFE and NVIE datasets | Image-based facial expression recognition to detect learning emotions | Conducted as a simulation study; no real-time testing was performed | N/A |
| [10] | Developed an emotion-detection framework that uses Haar cascades to analyze the eye and mouth regions of images | Image-based facial expression recognition to detect learning emotions | Conclusions are drawn from only two facial features, the eyes and the mouth | 78–95% |
| [11] | Used support vector machine and random forest classifiers to detect emotion from audio input | Live audio input, analyzed to determine learning emotions | The approach did not clearly discriminate between emotions such as anger and happiness | 80–93% |

| Dataset | Samples | Subjects | Recording Condition | Elicitation Method | Expressions |
|---|---|---|---|---|---|
| LIRIS | 208 video samples | 12 | Lab and home | Spontaneous | Disgust, happy, sad, surprise, neutral, fear |
| DEFSS | 404 images | 116 | Lab | Posed | Happy, angry, fear, sad, neutral |
| NIMH-ChEFS | 480 images | 59 | Lab | Posed | Fear, happy, sad, neutral |
| DDCF | 3200 images | 80 | Lab | Posed | Angry, sad, disgust, afraid, happy, surprised, contempt, neutral |
| CAFE | 1192 images | 90 female, 64 male | Lab | Posed (exception: surprise) | Sad, happy, surprise, anger, disgust, fear, neutral |
| EmoReact | 1102 videos | 32 female, 31 male | Web | Spontaneous | Happy, sad, surprise, fear, disgust, anger, neutral, valence, curiosity, uncertainty, excitement, attentiveness, exploration, confusion, anxiety, embarrassment, frustration |
| Authors’ dataset | 53 videos, 8 lectures | 4 male, 4 female | Lab and home | Spontaneous + posed | Angry, sad, disgust, fear, happy, surprised, neutral |

| Model | LIRIS Accuracy | Authors’ Dataset Accuracy | CK+/FER2013 Accuracy |
|---|---|---|---|
| InceptionV3 | 0.8406 | 0.8861 | 0.7404 |
| InceptionResNet V2 | 0.8405 | 0.8854 | 0.7404 |
| ResNet50 V2 | 0.8671 | 0.8998 | 0.7204 |
| ResNet152 V2 | 0.8931 | 0.9098 | 0.7600 |
| DenseNet121 | 0.8303 | 0.8907 | 0.5206/0.5144 |
| DenseNet201 | 0.8307 | 0.8887 | 0.6475 |
| VGG19 | 0.8100 | 0.8457 | 0.7270/0.8990 |

| Model on LIRIS | Accuracy | Precision | Recall | F1 Score | Cohen’s Kappa |
|---|---|---|---|---|---|
| InceptionV3 | 0.8406 | 0.8418 | 0.8468 | 0.8458 | 0.8221 |
| InceptionResNet V2 | 0.8405 | 0.8467 | 0.8448 | 0.8440 | 0.8211 |
| ResNet50 V2 | 0.8671 | 0.8611 | 0.8649 | 0.8615 | 0.8454 |
| ResNet152 V2 | 0.8931 | 0.8961 | 0.8994 | 0.8986 | 0.8665 |
| DenseNet121 | 0.8303 | 0.8314 | 0.8367 | 0.8342 | 0.8136 |
| DenseNet201 | 0.8307 | 0.8315 | 0.8368 | 0.8327 | 0.8137 |
| VGG19 | 0.8100 | 0.8055 | 0.8034 | 0.8066 | 0.7898 |

| Model on Authors’ Dataset | Accuracy | Precision | Recall | F1 Score | Cohen’s Kappa |
|---|---|---|---|---|---|
| InceptionV3 | 0.8861 | 0.8854 | 0.8860 | 0.8858 | 0.8864 |
| InceptionResNet V2 | 0.8854 | 0.8867 | 0.8848 | 0.8852 | 0.8842 |
| ResNet50 V2 | 0.8998 | 0.8997 | 0.8998 | 0.8997 | 0.8997 |
| ResNet152 V2 | 0.9098 | 0.9061 | 0.9094 | 0.9086 | 0.9065 |
| DenseNet121 | 0.8907 | 0.8905 | 0.8908 | 0.8909 | 0.8908 |
| DenseNet201 | 0.8887 | 0.8884 | 0.8886 | 0.8888 | 0.8885 |
| VGG19 | 0.8457 | 0.8455 | 0.8447 | 0.8455 | 0.8431 |
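All five columns in the two tables above can be derived from a confusion matrix of true versus predicted emotion classes. The sketch below shows one way to compute them; it assumes macro averaging for precision, recall and F1, since the tables do not state which averaging was used, and the function `classification_metrics` and the 2-class example matrix are hypothetical.

```python
from typing import Dict, List


def classification_metrics(cm: List[List[int]]) -> Dict[str, float]:
    """Accuracy, macro-averaged precision/recall/F1 and Cohen's kappa.

    cm[i][j] is the number of samples whose true class is i and predicted class is j.
    """
    k = len(cm)
    total = sum(sum(row) for row in cm)
    true_counts = [sum(cm[i]) for i in range(k)]                       # row sums
    pred_counts = [sum(cm[i][j] for i in range(k)) for j in range(k)]  # column sums
    correct = sum(cm[i][i] for i in range(k))

    precisions, recalls, f1s = [], [], []
    for c in range(k):
        tp = cm[c][c]
        p = tp / pred_counts[c] if pred_counts[c] else 0.0
        r = tp / true_counts[c] if true_counts[c] else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(2 * p * r / (p + r) if p + r else 0.0)

    # Cohen's kappa: observed agreement corrected for agreement expected by chance.
    p_o = correct / total
    p_e = sum(t * q for t, q in zip(true_counts, pred_counts)) / total ** 2
    kappa = (p_o - p_e) / (1 - p_e) if p_e < 1 else 1.0
    return {
        "accuracy": p_o,
        "precision": sum(precisions) / k,
        "recall": sum(recalls) / k,
        "f1": sum(f1s) / k,
        "kappa": kappa,
    }


# Hypothetical 2-class example: 8 + 9 of 20 samples are classified correctly.
metrics = classification_metrics([[8, 2], [1, 9]])
print(metrics)
```

Under this assumption, macro F1 is the mean of the per-class F1 scores, so it need not equal the harmonic mean of the macro precision and recall columns.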

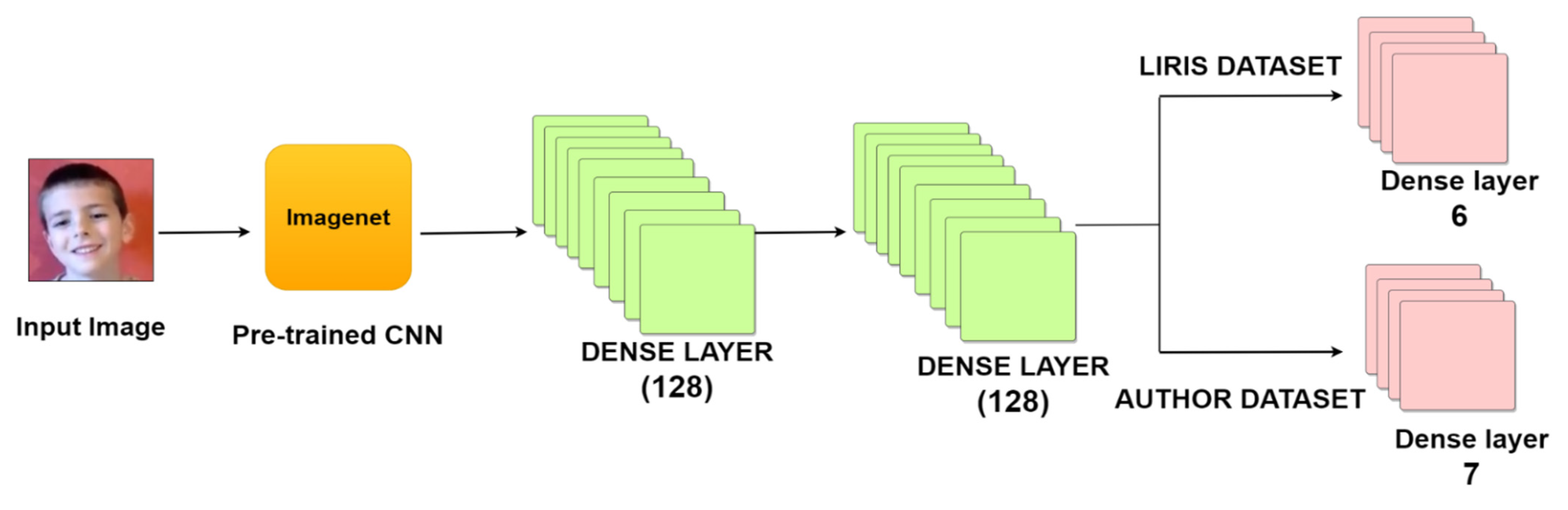

| Layer | Description | Filters | Units | Kernel Size | Stride | Activation |
|---|---|---|---|---|---|---|
| 1 | Input data | - | - | - | - | - |
| 2 | Pretrained ImageNet CNN | - | - | - | - | - |
| 3 | Dense_1 | - | 128 | - | - | ReLU |
| 4 | Dense_2 | - | 128 | - | - | ReLU |
| 5 | Dense_3 | - | 6 (LIRIS) / 7 (authors’ dataset) | - | - | Softmax |
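The table above describes a transfer-learning head: a pretrained ImageNet backbone followed by two 128-unit ReLU dense layers and a softmax output with one unit per emotion class. The NumPy sketch below traces only the shapes and activations of that head. It assumes the backbone output has already been pooled into a feature vector (2048 values, as for ResNet50 V2 with global average pooling) and uses randomly initialised weights, so the names `init_head` and `head_forward` and the He-style initialisation are illustrative assumptions, not the authors’ training code.

```python
import numpy as np


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)


def softmax(z: np.ndarray) -> np.ndarray:
    # Subtract the row maximum for numerical stability before exponentiating.
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def init_head(feature_dim: int, num_classes: int, rng: np.random.Generator):
    """Random (He-normal style) weights for Dense_1, Dense_2 and Dense_3."""
    sizes = [feature_dim, 128, 128, num_classes]
    return [
        (rng.normal(0.0, np.sqrt(2.0 / fan_in), size=(fan_in, fan_out)), np.zeros(fan_out))
        for fan_in, fan_out in zip(sizes[:-1], sizes[1:])
    ]


def head_forward(features: np.ndarray, params) -> np.ndarray:
    """Map pooled backbone features (batch, feature_dim) to class probabilities."""
    h = features
    for w, b in params[:-1]:   # Dense_1 and Dense_2 with ReLU
        h = relu(h @ w + b)
    w, b = params[-1]          # Dense_3 with softmax
    return softmax(h @ w + b)


rng = np.random.default_rng(0)
features = rng.normal(size=(4, 2048))          # a batch of 4 pooled feature vectors
probs_liris = head_forward(features, init_head(2048, 6, rng))
probs_authors = head_forward(features, init_head(2048, 7, rng))
print(probs_liris.shape, probs_authors.shape)
```

In a framework such as Keras the same head would be three `Dense` layers stacked on the backbone; only the width of the last layer changes between LIRIS (6 classes) and the authors’ dataset (7 classes).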

| CNN | Epochs (LIRIS) | Batch Size (LIRIS) | Epochs (Authors’ Dataset) | Batch Size (Authors’ Dataset) | Pretrained ImageNet Weights Used | Additional Weights Used |
|---|---|---|---|---|---|---|
| InceptionV3 | 30 | 32 | 6 | 16 | ✓ | ✓ |
| InceptionResNet V2 | 30 | 32 | 6 | 16 | ✓ | ✓ |
| ResNet50 V2 | 30 | 32 | 6 | 16 | ✓ | ✓ |
| ResNet152 V2 | 30 | 32 | 6 | 16 | ✓ | ✓ |
| DenseNet121 | 30 | 32 | 6 | 16 | ✓ | ✓ |
| DenseNet201 | 30 | 32 | 6 | 16 | ✓ | ✓ |
| VGG19 | 30 | 32 | 6 | 16 | ✓ | ✓ |

| Model | LIRIS (without face mesh) | Authors’ Dataset (without face mesh) | LIRIS (with face mesh) | Authors’ Dataset (with face mesh) |
|---|---|---|---|---|
| InceptionV3 | 0.8406 | 0.8861 | 0.8164 | 0.8661 |
| InceptionResNetV2 | 0.8405 | 0.8854 | 0.8182 | 0.8626 |
| ResNet50 V2 | 0.8671 | 0.8998 | 0.8356 | 0.8988 |
| ResNet152 V2 | 0.8931 | 0.9098 | 0.8386 | 0.9087 |
| DenseNet121 | 0.8303 | 0.8907 | 0.8157 | 0.8878 |
| DenseNet201 | 0.8307 | 0.8887 | 0.8122 | 0.8821 |
| VGG19 | 0.8100 | 0.8457 | 0.8002 | 0.8236 |
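Every row of the table above shows lower accuracy with the face mesh than without it, on both datasets. The short script below re-uses only the numbers in that table to express each difference in percentage points; the dictionary and function names are illustrative.

```python
# Accuracies copied from the face-mesh table: (LIRIS, authors' dataset).
WITHOUT_MESH = {
    "InceptionV3": (0.8406, 0.8861),
    "InceptionResNetV2": (0.8405, 0.8854),
    "ResNet50 V2": (0.8671, 0.8998),
    "ResNet152 V2": (0.8931, 0.9098),
    "DenseNet121": (0.8303, 0.8907),
    "DenseNet201": (0.8307, 0.8887),
    "VGG19": (0.8100, 0.8457),
}
WITH_MESH = {
    "InceptionV3": (0.8164, 0.8661),
    "InceptionResNetV2": (0.8182, 0.8626),
    "ResNet50 V2": (0.8356, 0.8988),
    "ResNet152 V2": (0.8386, 0.9087),
    "DenseNet121": (0.8157, 0.8878),
    "DenseNet201": (0.8122, 0.8821),
    "VGG19": (0.8002, 0.8236),
}


def accuracy_drop(without, with_mesh):
    """Percentage-point drop when the face mesh is used (positive means lower accuracy)."""
    return {
        model: tuple(round(100 * (a - b), 2) for a, b in zip(without[model], with_mesh[model]))
        for model in without
    }


drops = accuracy_drop(WITHOUT_MESH, WITH_MESH)
for model, (liris, authors) in drops.items():
    print(f"{model:18s} LIRIS -{liris:.2f} pp   authors' dataset -{authors:.2f} pp")
```

For example, ResNet152 V2 loses about 5.45 points on LIRIS but only about 0.11 points on the authors’ dataset.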

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Rathod, M.; Dalvi, C.; Kaur, K.; Patil, S.; Gite, S.; Kamat, P.; Kotecha, K.; Abraham, A.; Gabralla, L.A. Kids’ Emotion Recognition Using Various Deep-Learning Models with Explainable AI. Sensors 2022, 22, 8066. https://doi.org/10.3390/s22208066

Rathod M, Dalvi C, Kaur K, Patil S, Gite S, Kamat P, Kotecha K, Abraham A, Gabralla LA. Kids’ Emotion Recognition Using Various Deep-Learning Models with Explainable AI. Sensors. 2022; 22(20):8066. https://doi.org/10.3390/s22208066

Chicago/Turabian Style: Rathod, Manish, Chirag Dalvi, Kulveen Kaur, Shruti Patil, Shilpa Gite, Pooja Kamat, Ketan Kotecha, Ajith Abraham, and Lubna Abdelkareim Gabralla. 2022. "Kids’ Emotion Recognition Using Various Deep-Learning Models with Explainable AI" Sensors 22, no. 20: 8066. https://doi.org/10.3390/s22208066

APA Style: Rathod, M., Dalvi, C., Kaur, K., Patil, S., Gite, S., Kamat, P., Kotecha, K., Abraham, A., & Gabralla, L. A. (2022). Kids’ Emotion Recognition Using Various Deep-Learning Models with Explainable AI. Sensors, 22(20), 8066. https://doi.org/10.3390/s22208066