Improved Wearable Devices for Dietary Assessment Using a New Camera System

Abstract

1. Introduction

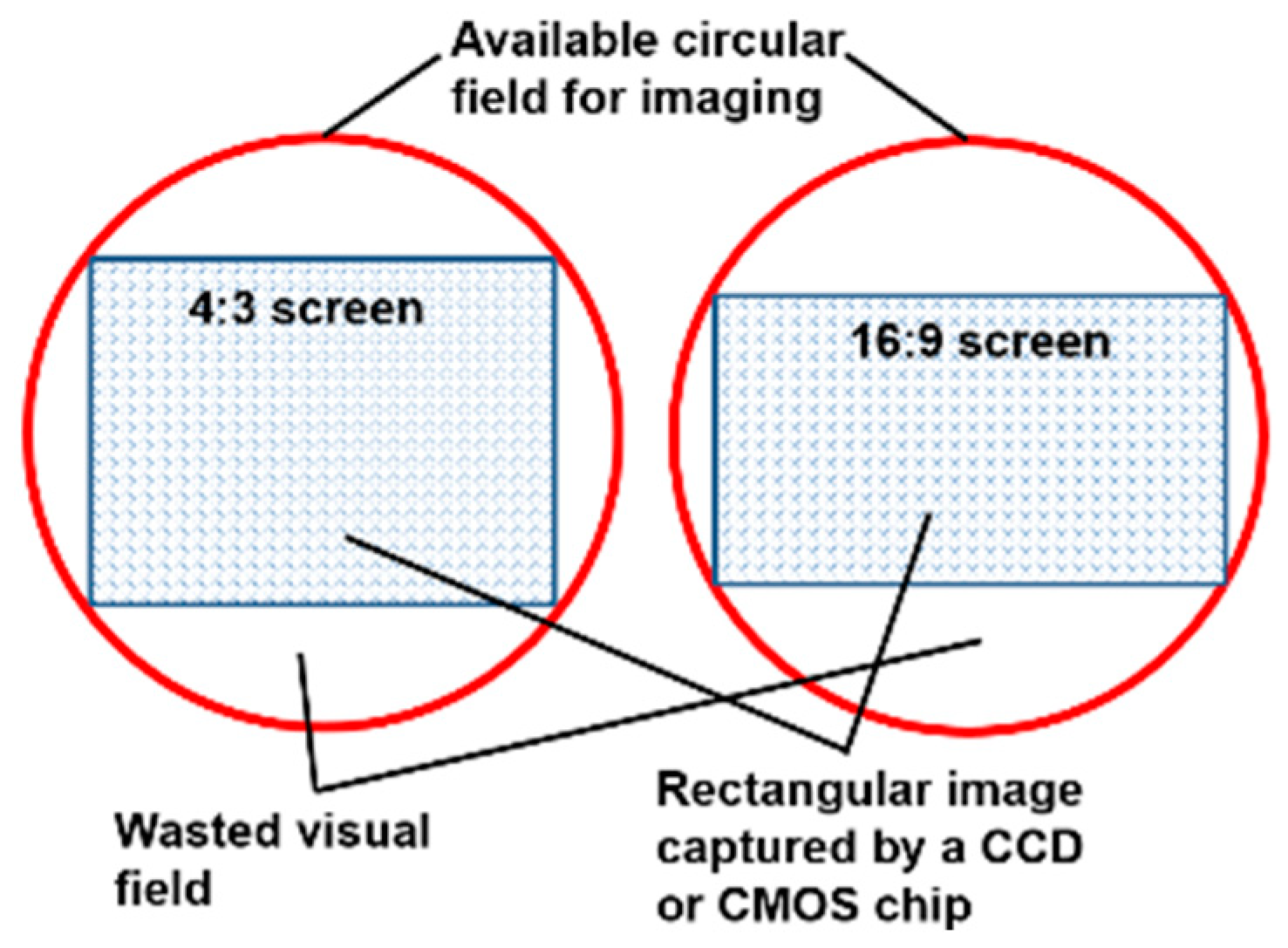

2. Circular vs. Rectangular Images

2.1. Loss of Image Content

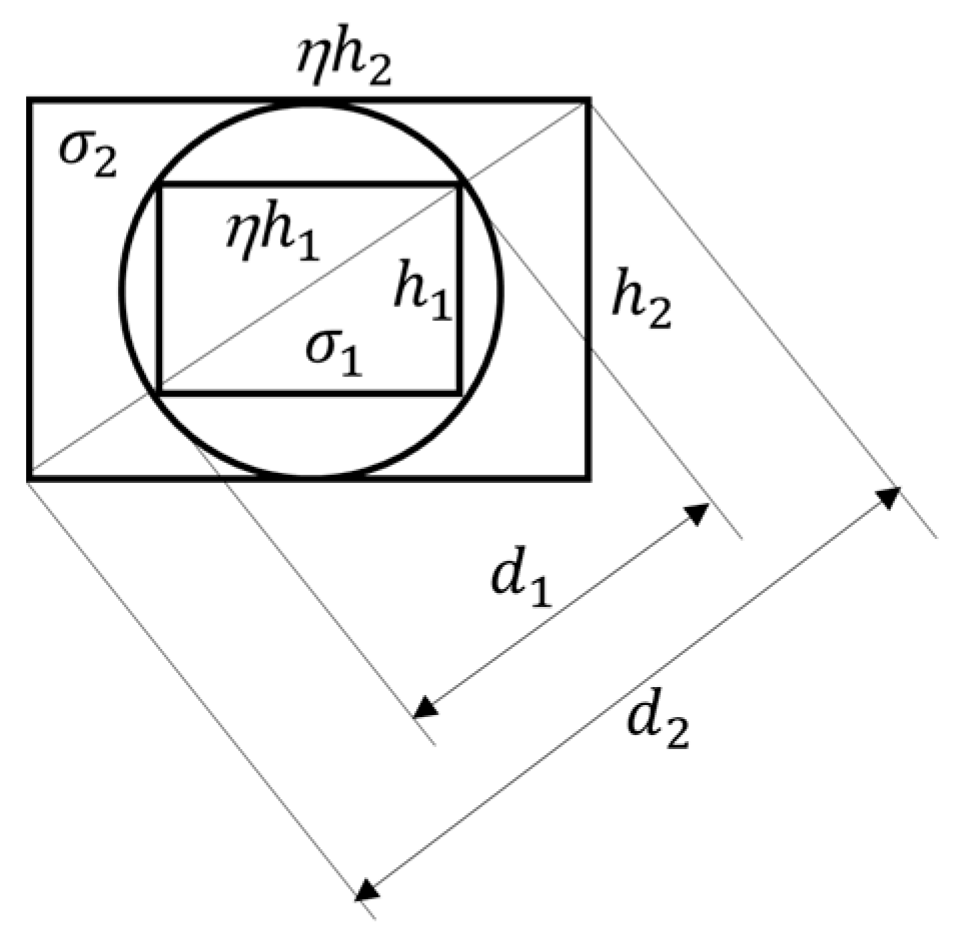

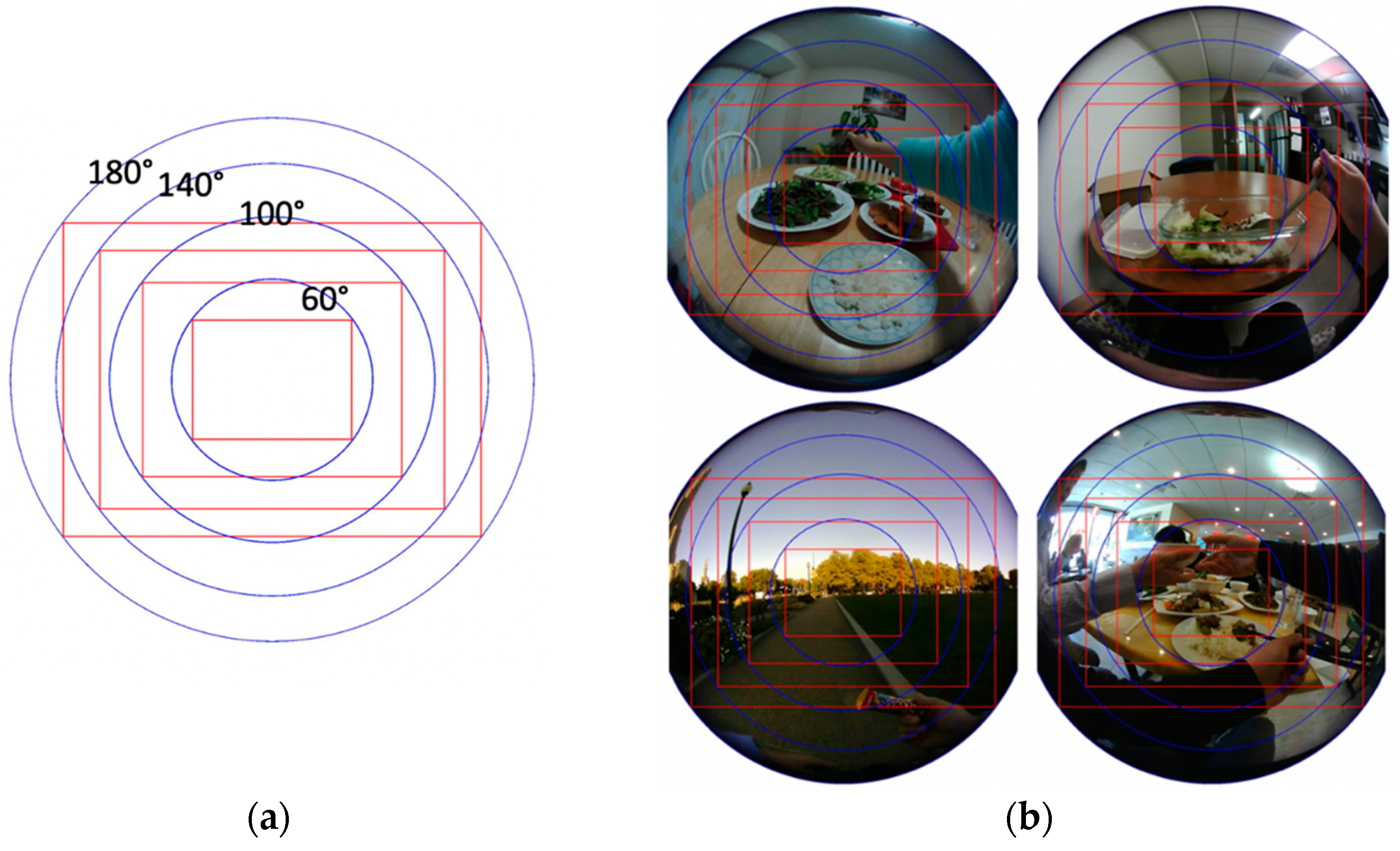

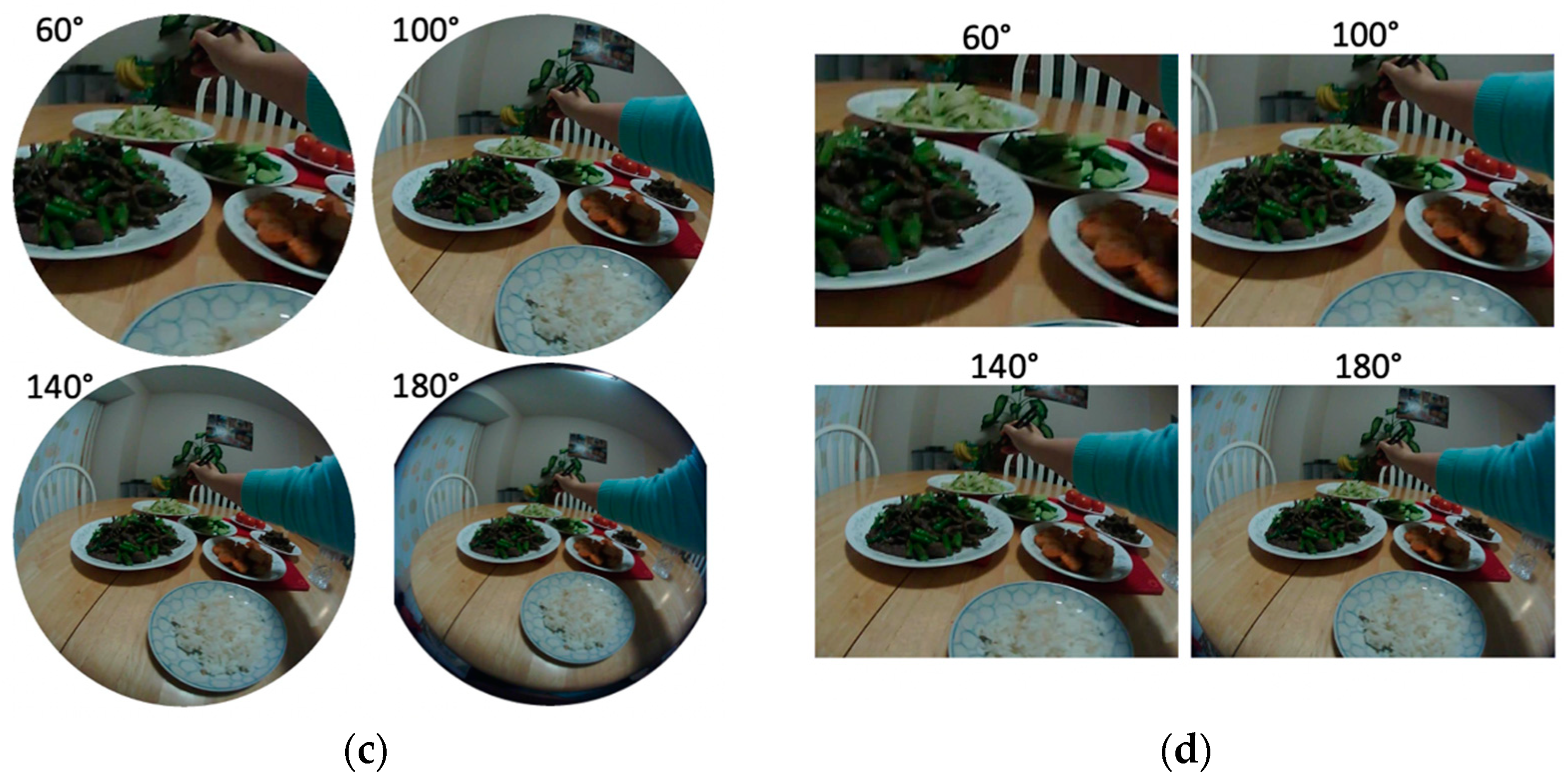

2.2. Variable Field of View

2.3. Effect of Image Distortion

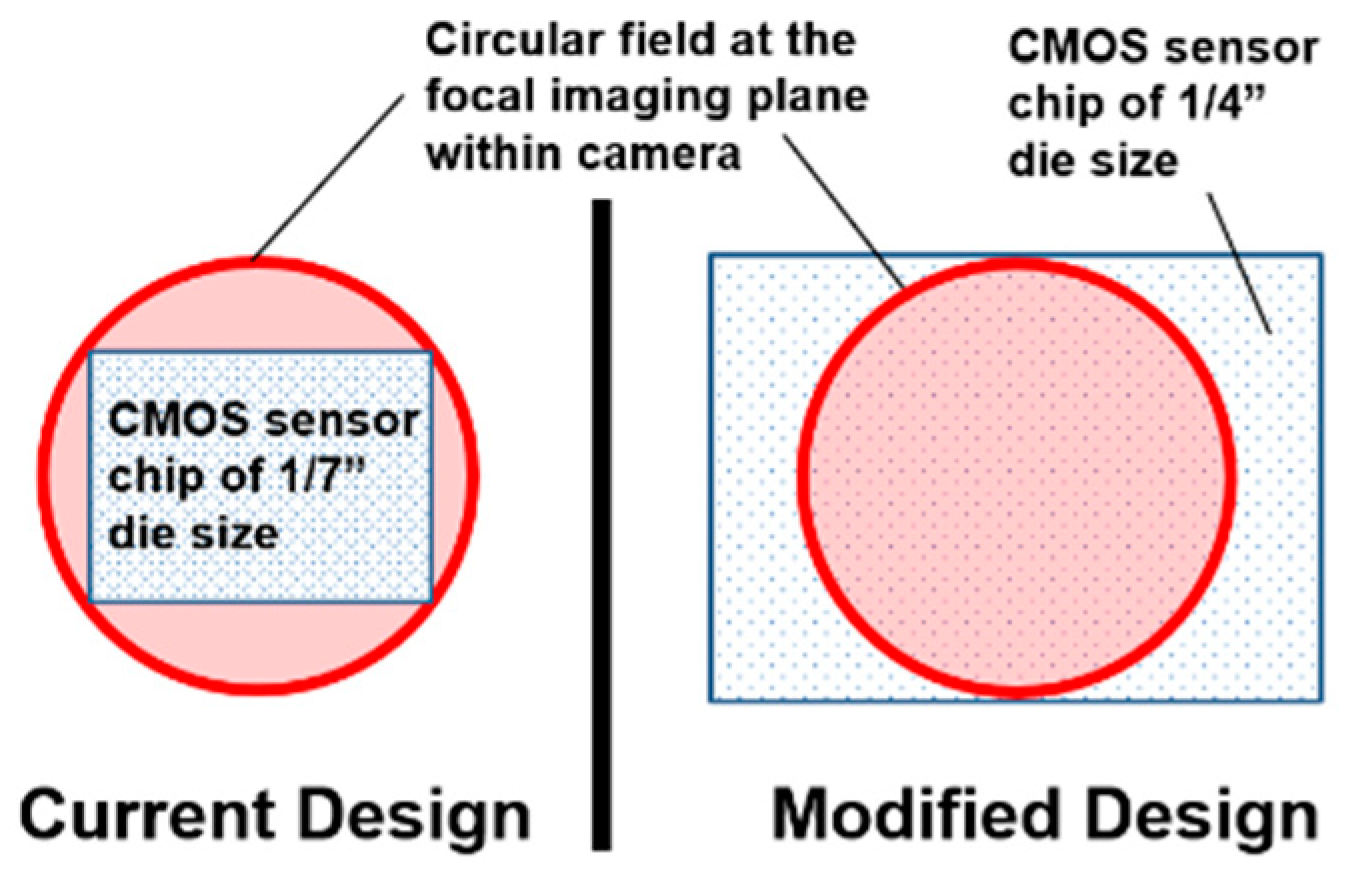

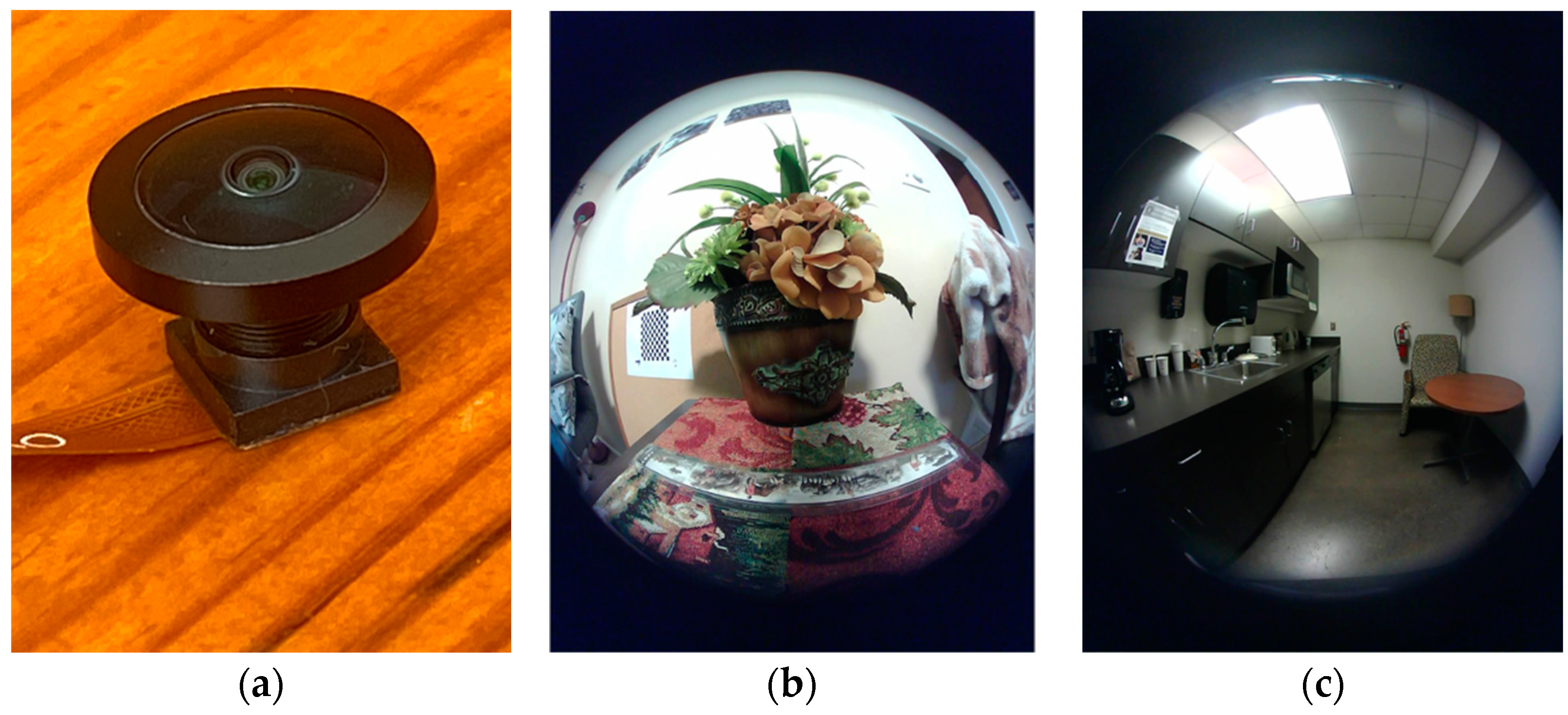

3. Circular Image Generation

3.1. Rematch between Sensor Chip and Lens

3.2. Utilizing a Fisheye Lens

3.3. Comparisons between Circular and Rectangular Images

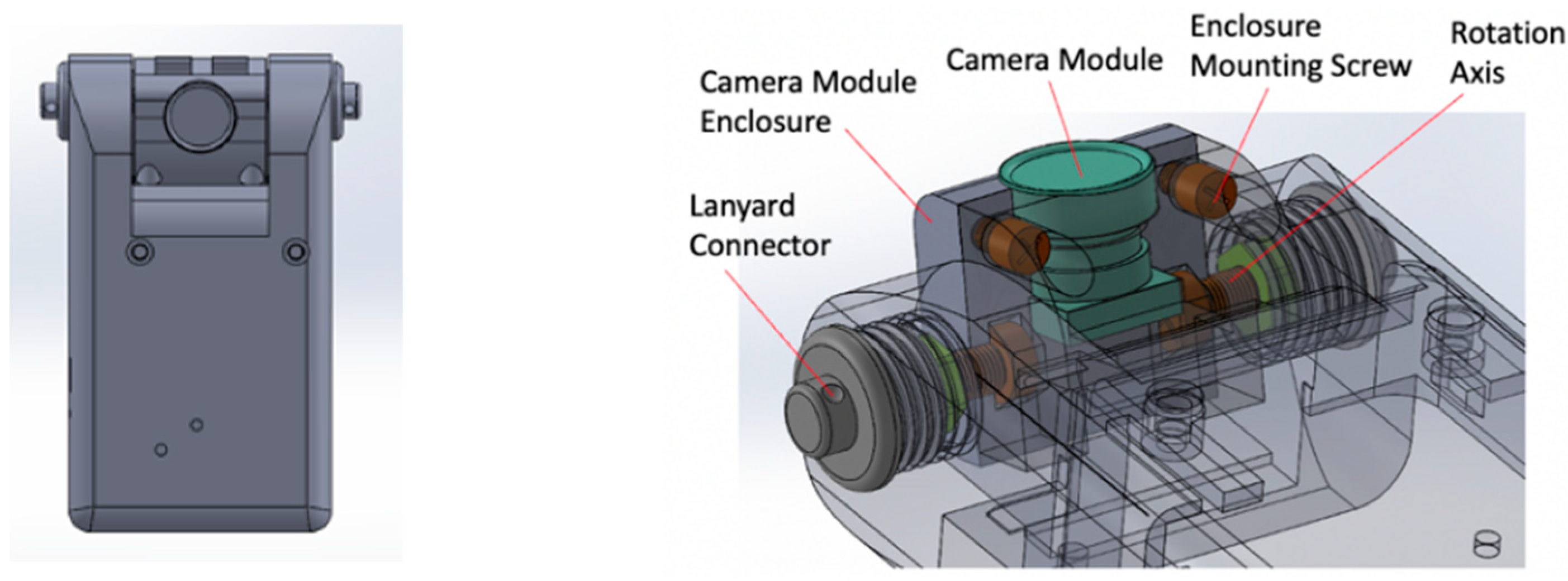

4. Lens Orientation Adjustment

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sun, M.; Jia, W.; Chen, G.; Hou, M.; Chen, J.; Mao, Z.-H. Improved Wearable Devices for Dietary Assessment Using a New Camera System. Sensors 2022, 22, 8006. https://doi.org/10.3390/s22208006
