1. Introduction

Owing to long-term exposure to outdoor environments, transmission line fittings are prone to defects such as aging, damage and rust, which pose a serious risk to transmission safety. It is therefore important to detect and treat rust on transmission line fittings in a timely manner. At present, unmanned aerial vehicle (UAV) inspection has replaced manual line patrol in many scenarios because of its merits: no terrain limitation, high speed, high efficiency, low labor cost and good safety. In the UAV inspection mode, however, UAVs generally only collect monitoring data that are then checked manually, which is inefficient. Machine vision with artificial intelligence techniques is becoming a promising tool for analyzing UAV monitoring data and has shown superior performance compared with manual checking. It is thus of great significance to develop a robust and accurate rust detection method for transmission line fittings.

From the theoretical perspective of machine vision, rust detection can be viewed as an object detection problem [1]. With the rapid development of convolutional neural networks (CNNs), deep learning techniques have become a promising tool in object detection [2]. These techniques can be divided into two strategies: one-stage detection and two-stage detection. One-stage algorithms, such as YOLO [3], SSD [4,5] and RetinaNet [6], use a unified deep neural network (e.g., a CNN) for feature extraction, target classification and bounding box regression, achieving end-to-end object detection. They offer faster detection speed but relatively lower detection accuracy. Two-stage algorithms, mainly the variants of R-CNN, i.e., R-CNN [7], Fast R-CNN [8], Faster R-CNN [9] and Mask R-CNN [10], adopt a classical sliding window mechanism to extract regions of interest and then carry out classification with the features of these regions. Among these algorithms, Faster R-CNN is on par with, or even outperforms, the others in terms of detection accuracy. Nevertheless, the classical Faster R-CNN still has limitations in detecting small-size objects, especially against complex backgrounds. Many studies have been devoted to overcoming these limitations. For instance, Cui et al. [11] adopted a feature pyramid network with an attention module in Faster R-CNN. By highlighting the saliency of the object's features, the detection accuracy can be improved. Lim et al. [12] introduced a residual attention mechanism to obtain rich information about small-size objects. Beyond feature representation, Xue et al. [13] introduced a coordinate attention mechanism into Faster R-CNN to incorporate location information, which is believed to complement the channel information. Hong et al. [14] designed a quartile attention mechanism that uses four branches to capture internal and cross-dimension interactions between channels and spatial locations, making better use of contextual information.

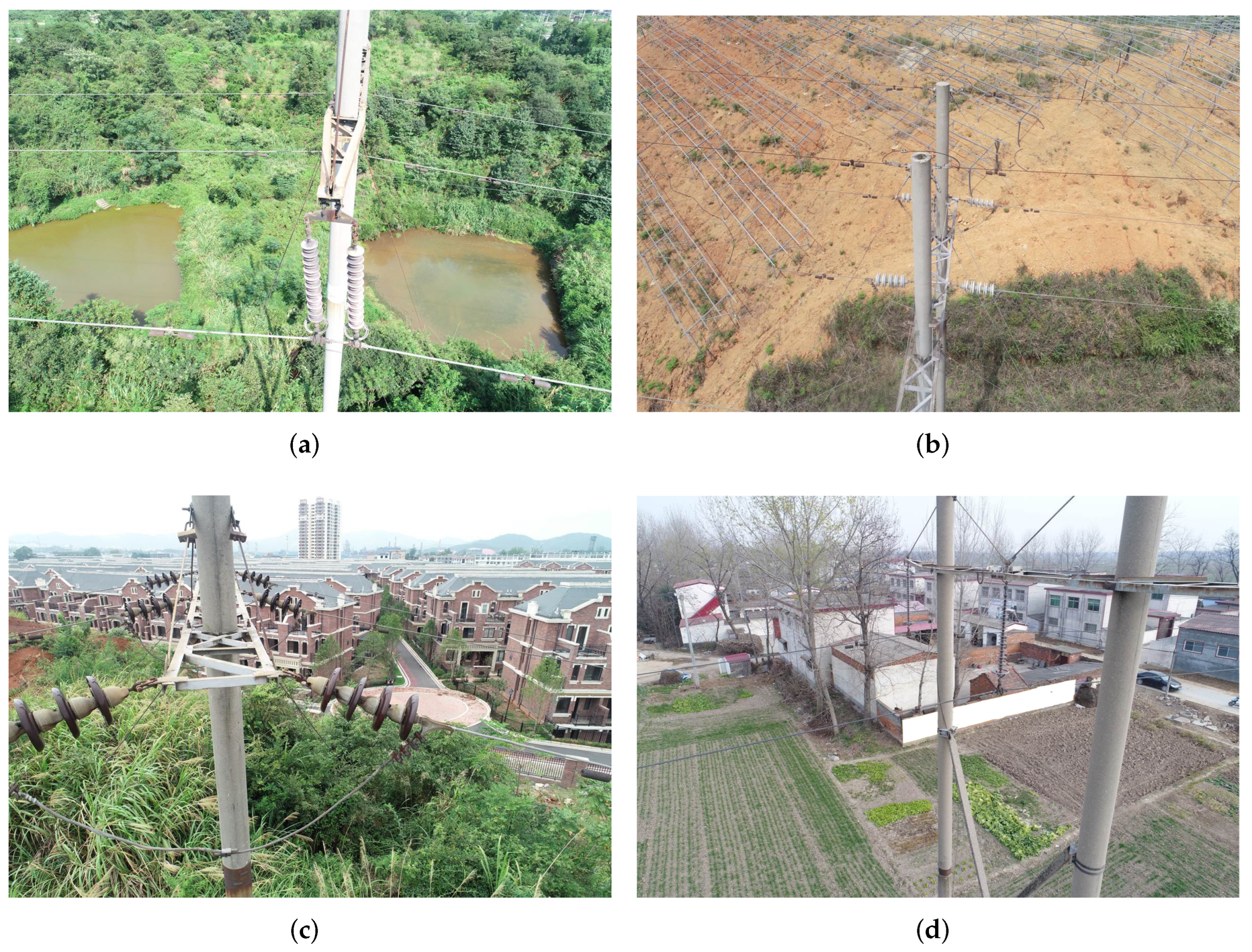

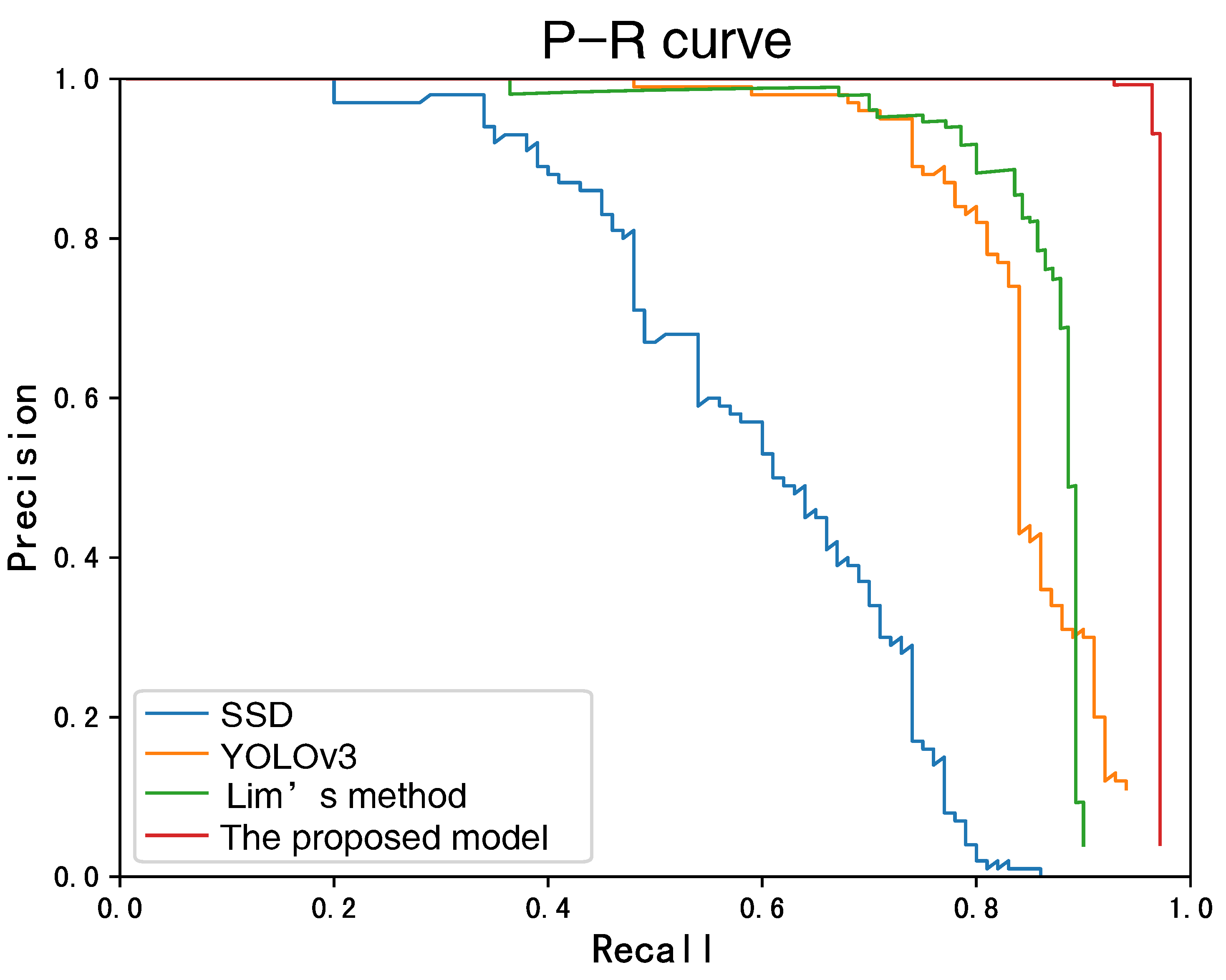

These studies can improve the detection robustness in challenging environments by extracting rich semantic information. According to our empirical study, however, these methods do not work well for rust detection on transmission line fittings, because this task poses some special challenges. In most practical applications, the transmission line is long and widely distributed, which leads to complex backgrounds. Many distractors, such as trees, cars, villages and houses, are present and cause false detections. Moreover, a UAV image usually contains several fittings, each of relatively small size, which causes missed detections.

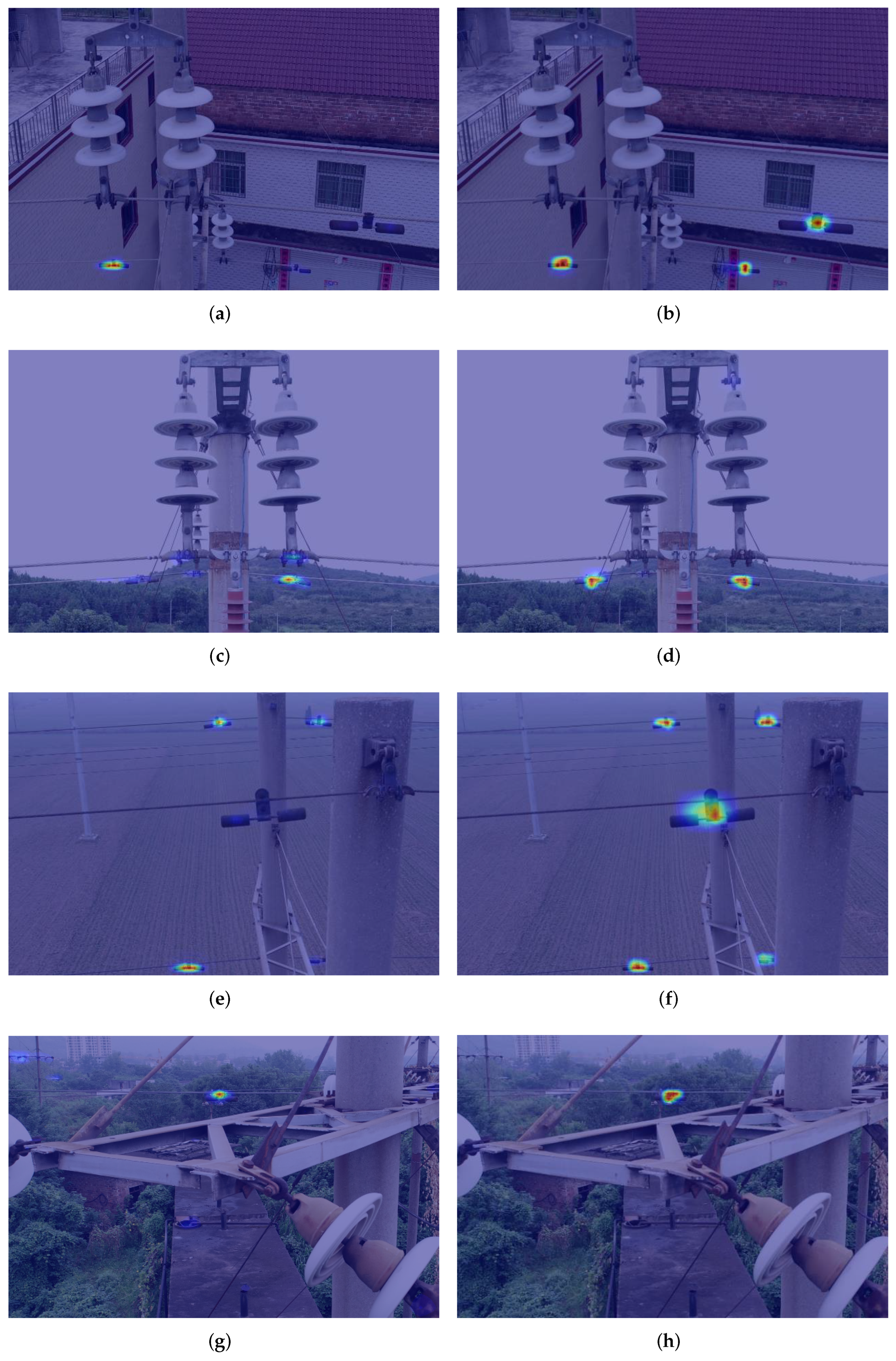

Figure 1 shows some real-world examples of each challenge. It is clear that the small size of the fittings, together with the various kinds of distractors, poses a serious obstacle to rust detection. The current methods all work to improve feature representation in the front end, e.g., using attention mechanisms and pyramid architectures. For rust detection, however, these front-end improvements can neither guarantee valid detection of small-size fittings nor effectively eliminate the disturbance from the background environment. According to our literature survey, some studies have addressed similar problems. For instance, Zhai et al. [15] proposed a new cascade reasoning graph network for multi-fitting detection on transmission lines. This network incorporates three kinds of domain knowledge, i.e., co-occurrence knowledge, semantic knowledge and spatial knowledge, to represent the correlation among different small-size fittings. With this knowledge reasoned over by a graph attention network, more discriminative features can be extracted from the original visual features to recognize and locate the fittings. However, this method still works in the front end and is devoted to feature enhancement before accurate proposals are generated. It aims to improve the detection accuracy and does not consider the disturbance of complex backgrounds, which reduces the detection robustness. As shown in Figure 2, missed detections and false detections occurred many times in our experiments when running the methods discussed above. In practical applications, missed and false detections should be avoided as far as possible, especially in online scenarios. For online tour inspection, UAVs equipped with the detection algorithms need to provide more reliable and robust detection results. It is therefore necessary to enhance the feature representation of fittings on top of the current front-end techniques to improve the detection accuracy and robustness.

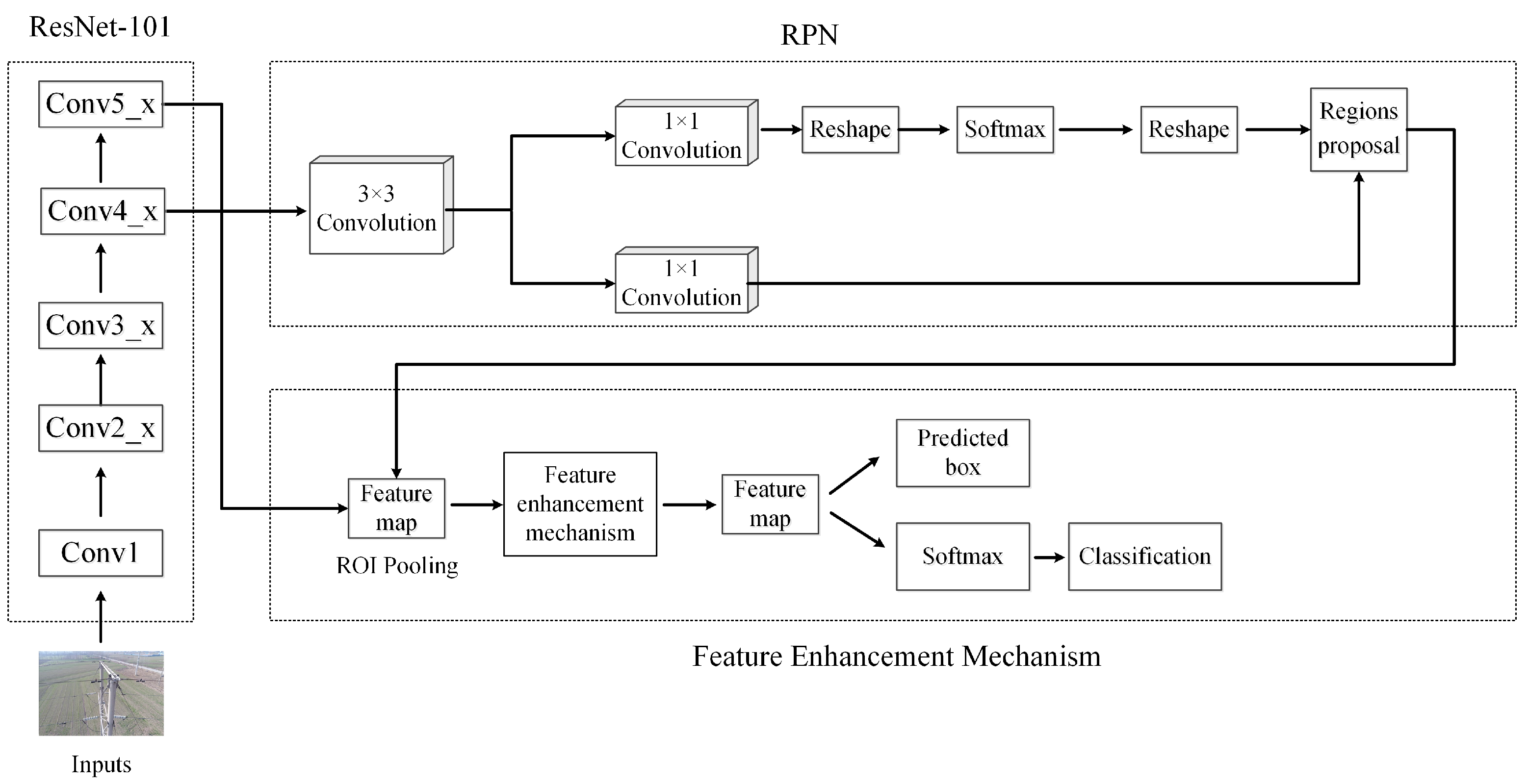

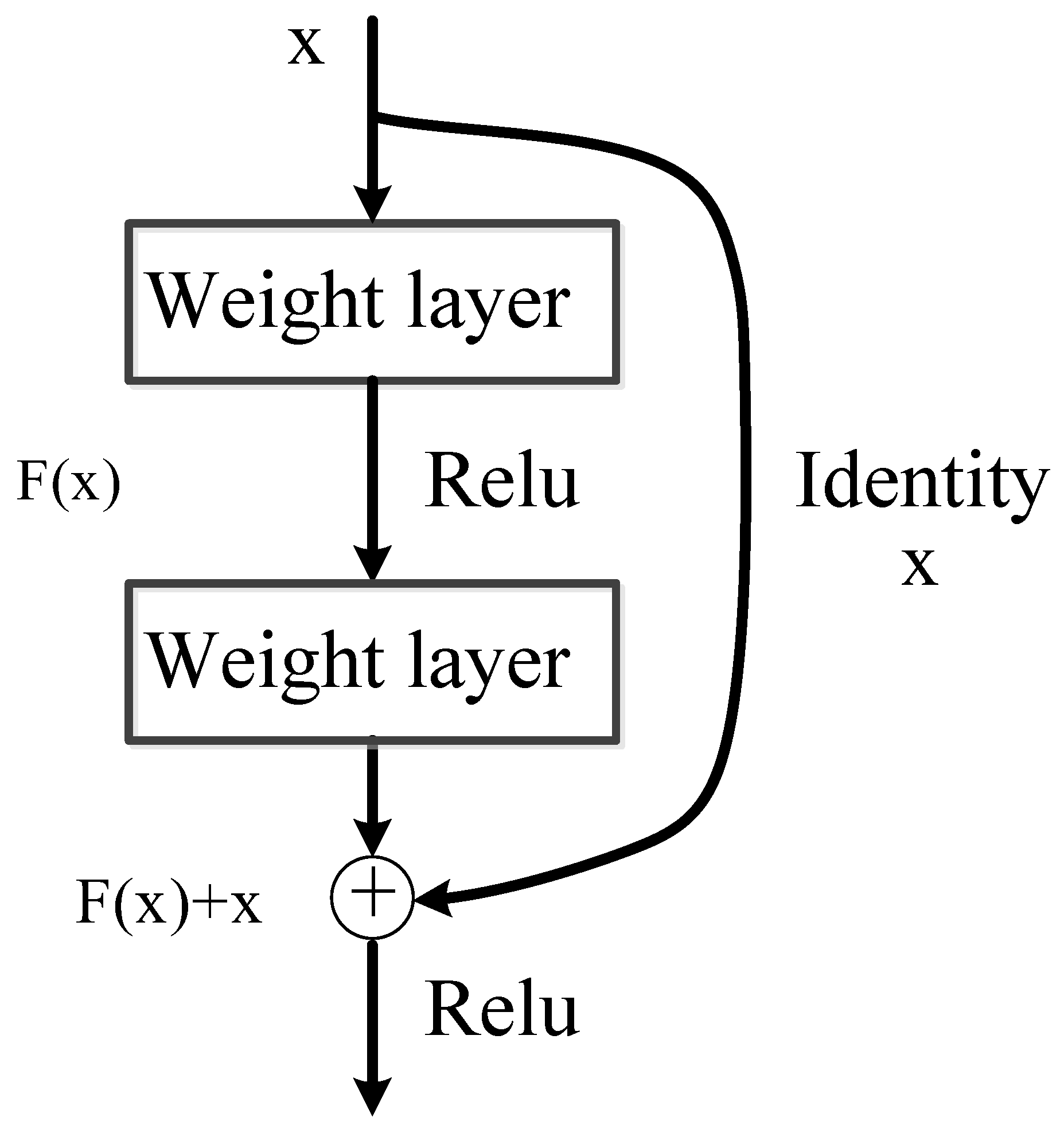

Based on the above analysis, the main challenge for rust detection on fittings in complex environments is to develop feature representations of the fittings that are robust to background disturbance. We observe an interesting phenomenon in our empirical evaluations. Despite many cases of missed and false detection, Faster R-CNN can still obtain regions of interest, most of which carry a certain degree of feature representation of the fittings. In other words, most of the region proposals in Faster R-CNN are actually related to the fitting object. This motivates a new idea: enhance the feature representation from these regions themselves. Following this idea, we build a new Faster R-CNN model for robust rust detection on transmission line fittings. The backbone network, VGG16, is replaced by the deeper ResNet-101 to extract richer information about the fitting object from UAV images. More importantly, a new feature enhancement mechanism is built after the region of interest (RoI) pooling layer to improve the feature representation of the regions that contain real fittings. The weight of the disturbance terms is thereby relatively reduced. Comparative results on real-world UAV monitoring images verify that the proposed model can significantly increase the detection accuracy and robustness.

The main contributions of this paper can be summarized as follows: (1) From the application perspective, this paper proposes a lightweight but effective solution for rust detection on transmission line fittings. The proposed method is simple, accurate and robust. To the best of our knowledge, the study of rust detection for transmission line fittings is still in its infancy. (2) From the theoretical perspective, this paper constructs a new feature enhancement mechanism in the back end of classical object detection algorithms. Different from most current methods, this mechanism further enhances the feature representation based on the features already generated. It can be applied to current two-stage detection methods without much modification of the algorithmic architecture. We believe this mechanism provides a different way to improve detection reliability and robustness.

The remainder of this paper is organized as follows. Section 2 is dedicated to the implementation of the classical Faster R-CNN. Section 3 describes the proposed model in detail. Section 4 carries out a set of comparative experiments, followed by a conclusion in Section 5.

2. Background of Faster R-CNN

Faster R-CNN was developed from R-CNN and Fast R-CNN. R-CNN is the first algorithm to apply a CNN to an object detection task. It uses a selective search algorithm to obtain region proposals, fine-tunes the CNN, and trains a support vector machine (SVM) classifier together with bounding-box regression. This method does not work end-to-end. Building on the spatial pyramid pooling network (SPP-Net [16]), Fast R-CNN feeds the whole image, instead of each candidate region, into the CNN for feature extraction, while the region proposals are still generated through selective search. The biggest improvement of Faster R-CNN is the use of a region proposal network (RPN) to generate regions of interest (ROIs), so that the selective search strategy is no longer needed. Another interesting point is that the whole training process can run on a GPU, which makes it computationally efficient.

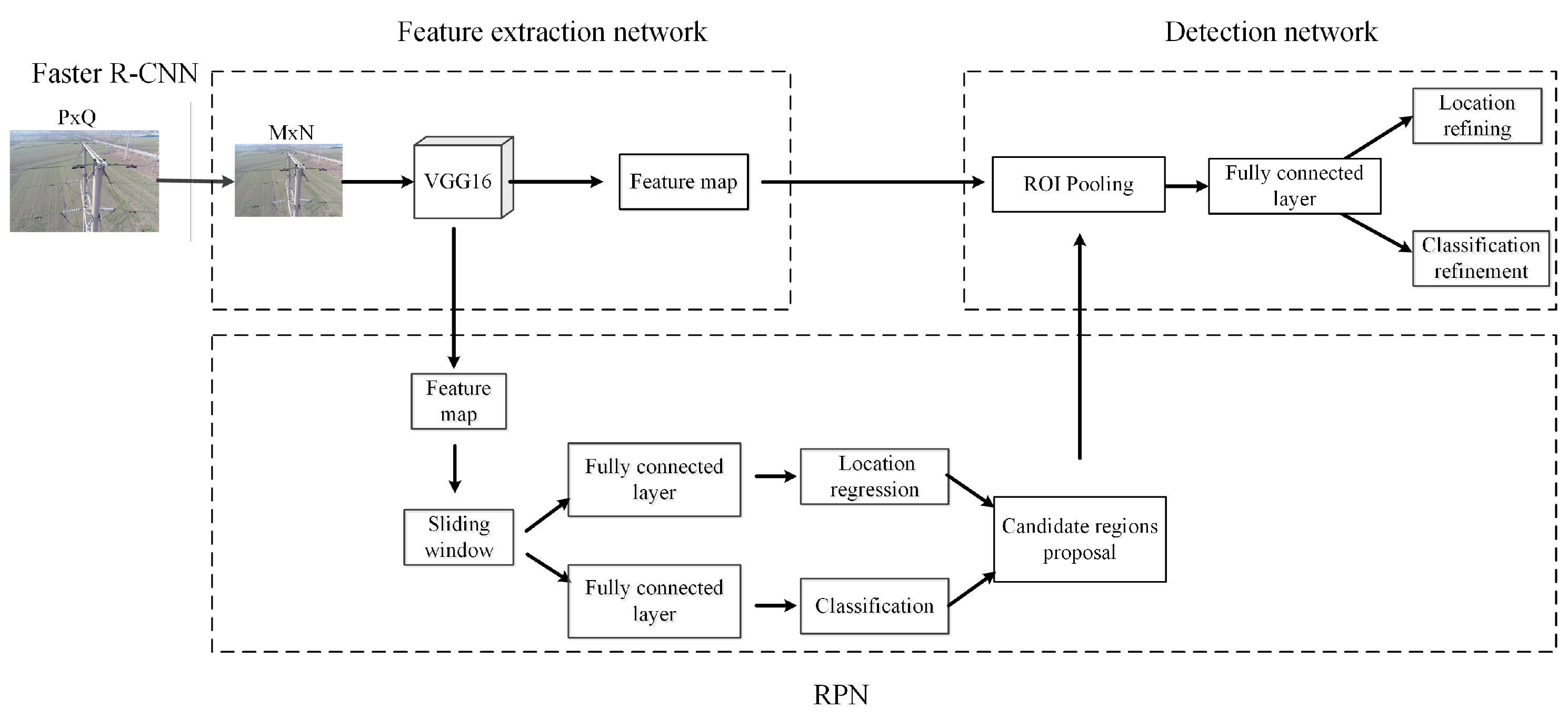

The classical Faster R-CNN algorithm is composed of an RPN and a Fast R-CNN network. The whole architecture includes four parts: the convolution layer, the RPN layer, the ROI pooling layer and the classification and regression layer, as shown in Figure 3. For readability, we take the rust detection problem as an example to describe the algorithmic details.

Faster R-CNN first rescales each UAV image to a fixed input size, then feeds the image to a CNN (e.g., the commonly used VGG16) to obtain a feature map. The feature map is then fed into the RPN, which generates region proposals on the feature map. The object category, as well as its position, in the region proposals is obtained through the classification and regression layer. Specifically, the RPN distinguishes between the foreground and background of region proposals, and outputs the region proposals in the foreground. The ROI pooling layer reshapes each foreground region proposal to a fixed size by combining the CNN features and the RPN information. The pooled region is then passed to a detection network that determines the object category and fine-tunes its position.
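To make the ROI pooling step concrete, the following is a minimal sketch in plain Python, not the implementation used in this paper. It assumes a single-channel feature map stored as a nested list and an ROI already expressed in feature-map cells; the function name `roi_max_pool` and the 2 x 2 output grid in the usage example are hypothetical choices made only for illustration. It shows that proposals of different sizes are all reduced to the same fixed grid by max pooling.

```python
from typing import List, Tuple

FeatureMap = List[List[float]]  # single-channel map, indexed [row][col]


def roi_max_pool(feature: FeatureMap,
                 roi: Tuple[int, int, int, int],
                 out_h: int, out_w: int) -> FeatureMap:
    """Max-pool the region roi = (r0, c0, r1, c1) into an out_h x out_w grid.

    The ROI is given in feature-map cells, end-exclusive, and must be
    at least one cell high and one cell wide (an assumption of this sketch).
    """
    r0, c0, r1, c1 = roi
    h, w = r1 - r0, c1 - c0
    pooled = []
    for i in range(out_h):
        # Bin i covers rows [rs, re): floor for the start, ceiling for the
        # end, so that every bin contains at least one cell.
        rs = r0 + (i * h) // out_h
        re = r0 + -(-((i + 1) * h) // out_h)
        row = []
        for j in range(out_w):
            cs = c0 + (j * w) // out_w
            ce = c0 + -(-((j + 1) * w) // out_w)
            row.append(max(feature[r][c]
                           for r in range(rs, re)
                           for c in range(cs, ce)))
        pooled.append(row)
    return pooled


# Toy 4 x 6 feature map whose value at (r, c) is r * 6 + c.
feature_map = [[float(r * 6 + c) for c in range(6)] for r in range(4)]
full = roi_max_pool(feature_map, (0, 0, 4, 6), 2, 2)
part = roi_max_pool(feature_map, (1, 1, 4, 6), 2, 2)
```

Both ROIs yield a 2 x 2 output even though they cover 4 x 6 and 3 x 5 cells, respectively. A real detector would apply the same pooling to every channel of a multi-channel tensor and would first map proposal coordinates from image space to feature-map space.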

In

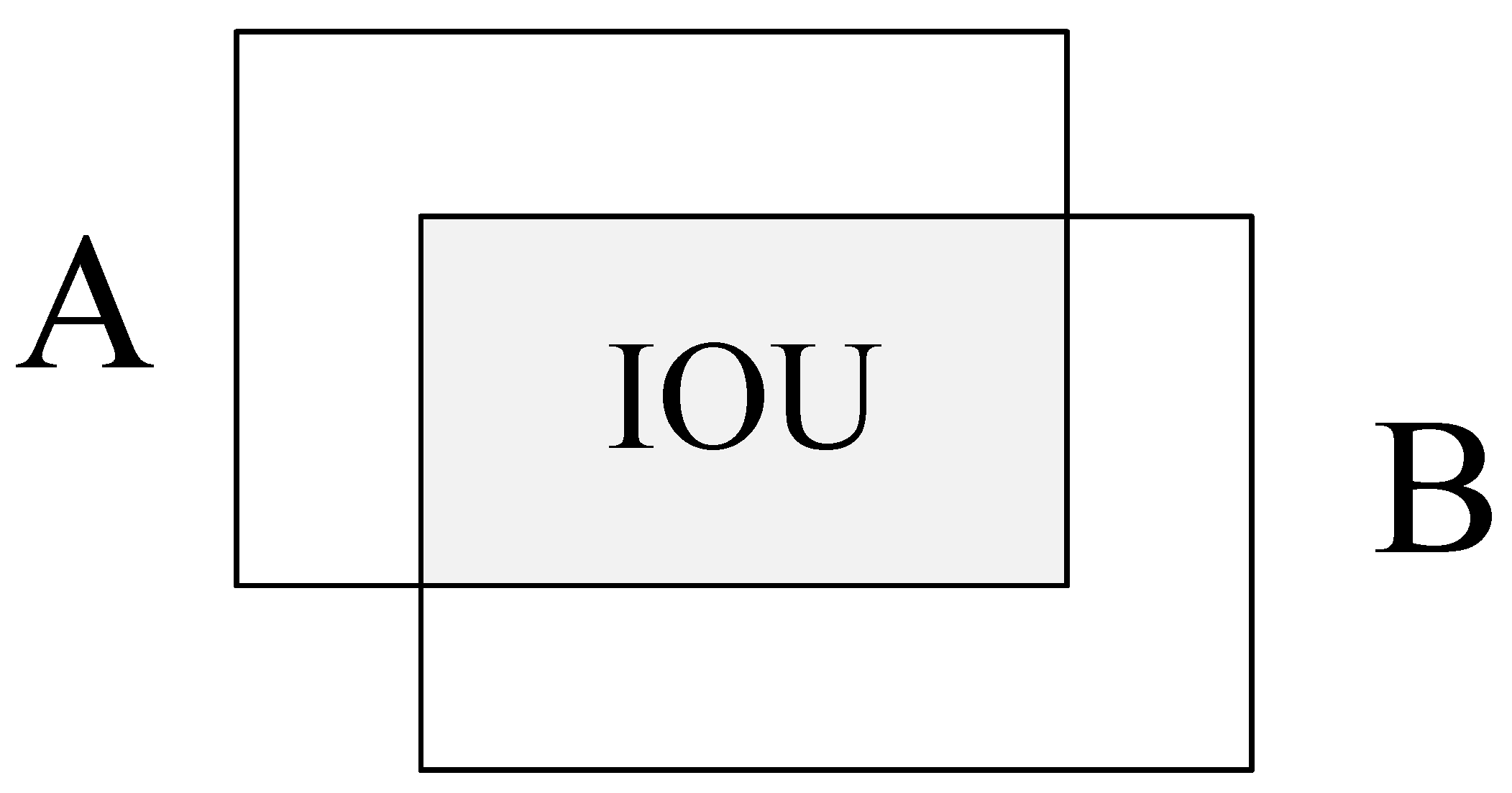

Figure 3, the foreground classification is the key. It requires to compare the region proposals with the ground-truth box manually annotated by experts, and further calculates the intersection ratio of the two boxes, defined as Intersection over Union (IoU):

, just as shown in

Figure 4. When the IoU of one region proposal is greater than 0.7, the region is set as positive sample, i.e., the foreground. If the IoU < 0.3, the region is set as negative sample, i.e., the background. The region proposal with IoU value of 0.3–0.7 is not involved in the training. The positive and negative samples are then used to train RPN.
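The labeling rule above can be sketched in a few lines of Python. This is an illustrative sketch rather than the authors' code: the box format `(x1, y1, x2, y2)`, the function names `iou` and `rpn_label`, and the choice of taking the maximum IoU over all ground-truth boxes in an image are assumptions; only the 0.7 and 0.3 thresholds come from the text.

```python
from typing import Optional, Sequence

Box = Sequence[float]  # (x1, y1, x2, y2) with x1 < x2 and y1 < y2 (assumed format)


def iou(box_a: Box, box_b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    # Corners of the intersection rectangle (may be empty).
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def rpn_label(proposal: Box,
              gt_boxes: Sequence[Box],
              pos_thr: float = 0.7,
              neg_thr: float = 0.3) -> Optional[int]:
    """Return 1 (foreground), 0 (background) or None (ignored in training)."""
    # Assumption: a proposal is scored by its best-matching ground-truth box.
    best = max((iou(proposal, gt) for gt in gt_boxes), default=0.0)
    if best > pos_thr:
        return 1
    if best < neg_thr:
        return 0
    return None


proposal = (0, 0, 10, 10)
label_fg = rpn_label(proposal, [(0, 0, 10, 9)])        # IoU = 0.9
label_bg = rpn_label(proposal, [(20, 20, 30, 30)])     # IoU = 0.0
label_ignored = rpn_label(proposal, [(5, 0, 15, 10)])  # IoU = 1/3
```

Proposals that fall between the two thresholds return `None`, so that they can simply be filtered out before the RPN loss is computed.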

5. Conclusions

In this paper, a new robust Faster R-CNN model is proposed for rust detection on transmission line fittings. The model aims to solve the two challenges of rust detection: the disturbance of complex environments and the small size of the fitting objects. It focuses on feature enhancement based on the obtained region proposals. With the proposed feature enhancement mechanism, the feature representation of the rusted fittings can be improved in a targeted manner. Moreover, the mechanism is broadly applicable, since it can work with different kinds of two-stage detection architectures. By self-learning rich information about the fitting object, the detection robustness and accuracy are improved, with much lower missed detection and false detection rates. The reliability of the detection results is thereby much improved. The proposed model is easy to implement and is well suited to deployment in real-world applications, especially in online scenarios.

In future work, we plan to exploit the structured information about fittings. Fittings always appear together with transmission lines, which indicates a form of structured information that is believed to be beneficial for rust detection. Moreover, in practical engineering, trustworthy decisions are preferred, so interpretability analysis will be applied to rust detection. How to understand the detection results is another interesting problem. Online rust detection also deserves more attention, since online tour inspection is a real demand in UAV applications. In our current engineering practice, the online detection task is performed by loading the offline-trained detection algorithm onto the UAV, which motivated the study in this paper, i.e., enhancing the robustness of Faster R-CNN. We think another feasible solution is to update the detection algorithm online with the sequentially collected images, i.e., in an incremental mode. For example, if the UAV tours special terrains such as forests, villages and rivers, the images with such terrain characteristics should bring more kinds of feature representation to the detection algorithm. The detection model then needs to be updated automatically and incrementally. How to incrementally update the online detection model is an interesting, though not easy, problem for online tour inspection. We will study this problem in future work.