Abstract

Affective, emotional, and physiological states (AFFECT) detection and recognition by capturing human signals is a fast-growing area, which has been applied across numerous domains. The research aim is to review publications on how techniques that use brain and biometric sensors can be used for AFFECT recognition, consolidate the findings, provide a rationale for the current methods, compare the effectiveness of existing methods, and quantify how likely they are to address the issues/challenges in the field. In efforts to better achieve the key goals of Society 5.0, Industry 5.0, and human-centered design, the recognition of emotional, affective, and physiological states is becoming increasingly important and promises substantial growth in knowledge and progress in these and other related fields. In this research, a review of brain and biometric sensors, methods, and applications for AFFECT recognition was performed, based on Plutchik’s wheel of emotions. Due to the immense variety of existing sensors and sensing systems, this study aimed to provide an analysis of the available sensors that can be used to define human AFFECT, and to classify them based on the type of sensing area and their efficiency in real implementations. Based on statistical and multiple criteria analysis across 169 nations, our outcomes introduce a connection between a nation’s success, its number of Web of Science articles published, and its frequency of citation on AFFECT recognition. The principal conclusions present how this research contributes to the big picture in the field under analysis and explore forthcoming study trends.

1. Introduction

Global research in the field of neuroscience and biometrics is shifting toward the widespread adoption of technology for the detection, processing, recognition, interpretation and imitation of human emotions and affective attitudes. Due to their ability to capture and analyze a wide range of human gestures, affective attitudes, emotions and physiological changes, these innovative research models could play a vital role in areas such as Industry 5.0, Society 5.0, the Internet of Things (IoT), and affective computing, among others.

For hundreds of years, researchers have been interested in human emotions. Reviews on the applications of affective neuroscience include numerous related topics, such as the mirror mechanism and its role in action and emotion [1], the neuroscience of understanding emotions [2], consumer neuroscience [3], the role of positive emotions in education [4], mapping the brain as the basis of feelings and emotions [5], the neuroscience of positive emotions and affect [6], the cognitive neuroscience of music perception [7], and social cognition in schizophrenia [8]. Applications in neuroscience also include the analysis of cognitive neuroscience [9,10,11] and brain sensors [12,13], and works in the literature also discuss the recognition of basic emotions using brain sensors [14].

Studies of the applications of affective biometrics can be found in the literature in the fields of brain biometric analysis [15], predictive biometrics [16], keystroke dynamics [17], applications in education [18], consumer neuroscience [19], adaptive biometric systems [20], emotion recognition from gait analyses [21], ECG databases [22], and others. Several works on affective states have integrated multiple biometric and neuroscience methods, but none have included an integrated review of the application of neuroscience and biometrics and an analysis of all of the emotions and affective attitudes in Plutchik’s wheel of emotions.

Scientists analyzed various brain and biometric sensors in the reviews [23,24,25,26]. Curtin et al. [23], for instance, state that both fNIRS and rTMS sensors have changed significantly over the past decade and have been improved (their hardware, neuronavigated targeting, sensors, and signal processing), thus clinicians and researchers now have more granular control over the stimulation systems they use. Krugliak and Clarke [26], da Silva [24], and Gramann et al. [27] analyzed the use of EEG and MEG sensors to measure functional and effective connectivity in the brain. Khushaba et al. [25] used brain and biometric sensors to integrate EEG and eye tracking for assessing the brain response. Other scientists [28,29,30,31,32,33] used the following biometric sensors in their studies: heart rate, pulse rate variability, odor, pupil dilation and contraction, skin temperature, face recognition, voice, signature, gestures, and others.

Indeed, the biometrics and neuroscience field has been the focus of studies by many researchers who have achieved significant results. A number of neuroscience studies have analyzed the detection and recognition of human arousal [34], valence [35,36], affective attitudes [36,37], emotional [38,39,40,41], and physiological [42] states (AFFECT) by capturing human signals.

Though most neuroimaging approaches disregard context, the hypothesis behind situated models of emotion is that emotions are honed for the current context [43]. According to the theory of constructed emotion, the construction of emotions should be holistic, as a complete phenomenon of brain and body in the context of the moment [44]. Barrett [45] argues that rather than being universal, emotions differ across cultures. Emotions are not triggered—they are created by the person who experiences them. The combination of the body’s physical characteristics, the brain (which is flexible enough to adapt to whatever environment it is in), and the culture and upbringing that create that environment, is what causes emotions to surface [45]. Recently, there have been attempts in the academic community to supply contextual (from cultural and other circumstances) analysis [46,47].

Various theories and approaches, including positive psychology [48,49,50], environmental psychology [51,52,53], ergonomics (human factors science) [54,55,56], environment–behavior studies, environmental design [57,58,59], ecological psychology [60,61], person–environment–behavior [62], behavioral geography [63], and social ecology research [64], also emphasize the context sensitivity of emotions.

The objective of this research is to provide an overview of the sensors and methods used in AFFECT (affective, emotional, and physiological states) recognition, in order to outline studies that discuss trends in brain and biometric sensors, and give an integrated review of AFFECT recognition analysis using Plutchik’s [65] wheel of emotions as the basis. Furthermore, the research aim is to review publications on how techniques that use brain and biometric sensors can be used for AFFECT recognition. In addition, this is a quantitative study to assess how the success of the 169 countries impacted the number of Web of Science articles on AFFECT recognition techniques that use brain and biometric sensors that were published in 2020 (or the latest figures available).

In this paper, we identify the critical changes in this field over the past 32 years by applying text analytics to 21,397 articles indexed by Web of Science from 1990 to 2022. For this review, we examined 634 publications in detail. We have analyzed the global gap in the area of neuroscience and affective biometric sensors and have aimed to update the current big picture. The aforementioned research findings are the result of this work.

When emotions as well as affective and physiological states are determined by recognition sensors and methods—and, later, when such studies are put to practice—a number of issues arise, and we have addressed these issues in this review. Moreover, our research has filled several research gaps and contributes to the big picture as outlined below:

- A fairly large number of studies around the world apply biometric and neuroscience methods to determine and analyze AFFECT. However, there has been no integrated review of these studies.

- Another missing piece is a review of AFFECT recognition, classification, and analysis based on Plutchik’s wheel of emotions theory. We have examined 30 emotions and affective states defined in the theory.

- Information on diversity attitudes, socioeconomic status, demographic and cultural background, and context is missing from many studies. We have therefore identified real-time context data and integrated them with AFFECT data. The correct assessment of AFFECT and predictions of imminent behavior are becoming very important in a highly competitive market.

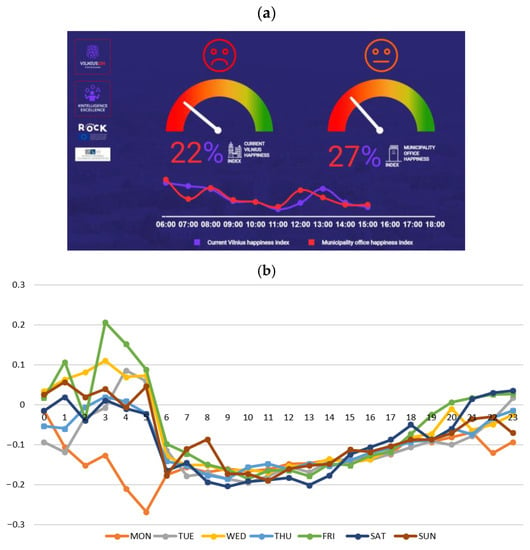

- To demonstrate a few of the aforementioned new research areas in practice, we have developed our own metric, the Real-time Vilnius Happiness Index (Section 4), among other tools. These studies have used integrated methods of biometrics and neuroscience, which are widely applied in various fields of human activity.

- In this research, we therefore examine a more complex problem than any prior studies.

The following sections present the results of this study, a discussion, the conclusions we can draw, and avenues for future research. The method is presented in Section 2. Section 3 summarizes the emotion models. In Section 4, we discuss brain and biometric AFFECT sensors, classifications of biometric and neuroscience methods and technologies, and emotions, and explore the use of traditional, non-invasive neuroscience methods as well as widely used and advanced physiological and behavioral biometrics. Section 4 also summarizes prior research and examines techniques for the recognition of arousal, valence, affective attitudes, and emotional and physiological states (AFFECT) in more detail. We summarize existing research on users’ demographic and cultural backgrounds, socioeconomic status, diversity attitudes, and the context in Section 5. We present our research results in Section 6, the evaluation of biometric systems in Section 7, and finally, a discussion and our conclusions in Section 8.

2. Method

The research method we used can be broken down as follows: (1) formulating the research problem; (2) examining the most popular emotion models, identifying the best option among them for our research (Section 3), and creating the Big Picture for the model; (3) carrying out a review of publications in the field (Section 4); (4) raising and confirming two hypotheses; (5) collecting data; (6) using the INVAR method for multiple criteria analysis of 169 countries; (7) determining correlations; (8) developing three maps to illustrate the way the success of the 169 countries impacts the number of Web of Science articles on AFFECT (emotional, affective, and physiological states) recognition and their citation rates; (9) developing three regression models; and (10) consolidating the findings, providing a rationale for the current methods, comparing the effectiveness of existing methods, and quantifying how likely they are to address the issues and challenges in the field. The following ten steps of the method describe the proposed algorithm and its experimental evaluation in detail.

In Step 1, the research problem was formulated. The research aim is to review publications on how techniques that use brain and biometric sensors can be used for AFFECT recognition, consolidate the findings, provide a rationale for the current methods, compare the effectiveness of existing methods, and quantify how likely they are to address the issues/challenges in the field. We have analyzed the global gap in the area of neuroscience and affective biometric sensors and have set the goal of updating the current big picture. The findings of the research above framed the problem.

Step 2 of the research was to examine the most popular emotion models (Section 3) and identify the best option among them for our research. We chose Plutchik’s wheel of emotions; one of the main reasons is that the model enables an integrated analysis of human emotional, affective, and physiological states.

Step 3 was to review sensors, methods, and applications that can be used in the recognition of emotional, affective, and physiological states (Section 4). We have identified the major changes in the field over the past 32 years through a text analysis of 21,397 articles indexed by Web of Science from 1990 to 2022. We searched for keywords in three databases (Web of Science, ScienceDirect, Google Scholar) to identify studies investigating the use of both neuroscience and affective biometric sensors. A total of 634 studies that used both neuroscience and affective biometric sensor techniques in the study methodology were included, and no restrictions were placed on the date of publication. Studies investigating any population group, of any age or gender, were considered in this work.

A set of keywords related to biometric and neuroscience sensors was used for the above search of three databases. Two main sets of keywords, “sensors + biometrics + emotions” and “sensors + neuroscience/brain + emotions”, were used in our main search. More specific search terms related to biometrics (e.g., eye tracking, blinking, iris, odor, heart rate), neuroscience/brain techniques (e.g., EEG, MEG, TMS, NIRS, SST), and their components (e.g., algorithms, functionality, performance) were also used to refine the search. For each candidate article, the full text was accessed and reviewed to determine its eligibility. The primary results and article conclusions were identified, and discrepancies were resolved by way of discussion. The studies differed significantly in terms of protocol design, signal processing, stimulation methods, the equipment used, the study population, and statistical methods.

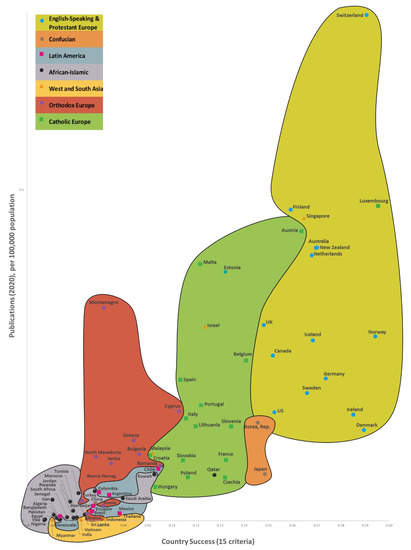

In Step 4, two central hypotheses were raised and confirmed:

Hypothesis 1.

There is an interconnection between a country’s success, its number of Web of Science articles published, and its citation frequency on AFFECT recognition. When there are changes in the country’s success, its number of Web of Science articles published, and its citation times on AFFECT recognition, the countries’ 7 cluster boundaries remain roughly the same (Section 6).

Hypothesis 2.

Increases in a country’s success usually go hand in hand with a jump in its number of Web of Science articles published and its citation times on AFFECT recognition.

Next, in Step 5, we collected data. The determination of the success of 169 countries and the results obtained are described in detail in a study by Kaklauskas et al. [66]. This study used data [66] from the framework of variables taken from a number of databases and websites, such as the World Bank, Eurostat-OECD, the World Health Organization, Global Data, Global Finance, Transparency International, Freedom House, Knoema, Socioeconomic Data and Applications Center, Heritage, the Global Footprint Network, Climate Change Knowledge Portal (World Bank Group, Washington, DC, USA), the Institute for Economics and Peace, and Our World in Data; global and national statistics and publications were also used. We based our research calculations on publicly available data from 2020 (or the latest available).

We used the INVAR method [67] to conduct a multi-criteria examination of the 169 nations; the outcomes can be found in Section 6 (Step 6). This method determines a combined indicator of a nation’s overall success. This combined indicator is in direct proportion to the corresponding impact of the values and significances of the specified indicators on a nation’s success. The INVAR method has been used to conduct multiple criteria analyses of different groups of countries, such as the former Soviet Union [68], Asian countries [69], and the global analyses of 169 [66] and 173 [70] countries.
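The idea of a combined indicator that grows in proportion to the values and significances of the underlying indicators can be illustrated with a small sketch. The code below is not the INVAR method [67]; it is plain simple additive weighting, swapped in only to make the notion of "values times significances" concrete. The function name, the min-max normalization, and the example numbers are all assumptions introduced for illustration.

```python
import numpy as np

def combined_indicator(values, weights, maximize):
    """Weighted-sum score per alternative (simple additive weighting, NOT INVAR).

    values:   (n_alternatives, n_criteria) raw indicator values
    weights:  (n_criteria,) significances; they need not sum to 1
    maximize: (n_criteria,) True where a larger value is better
    """
    values = np.asarray(values, dtype=float)
    weights = np.asarray(weights, dtype=float)
    maximize = np.asarray(maximize, dtype=bool)

    # Min-max normalize every criterion to [0, 1] (an assumed normalization).
    lo = values.min(axis=0)
    hi = values.max(axis=0)
    span = np.where(hi > lo, hi - lo, 1.0)  # guard against constant columns
    norm = (values - lo) / span

    # Flip criteria for which a smaller value is better.
    norm = np.where(maximize, norm, 1.0 - norm)

    # Normalize the significances and form the weighted sum.
    return norm @ (weights / weights.sum())

# Hypothetical data: three alternatives, one "larger is better" criterion
# and one "smaller is better" criterion, equally weighted.
scores = combined_indicator(
    values=[[10, 5], [20, 3], [30, 1]],
    weights=[0.5, 0.5],
    maximize=[True, False],
)
```

In this toy case the third alternative dominates on both criteria and receives the highest score. A faithful implementation would follow the normalization and aggregation steps given in [67].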

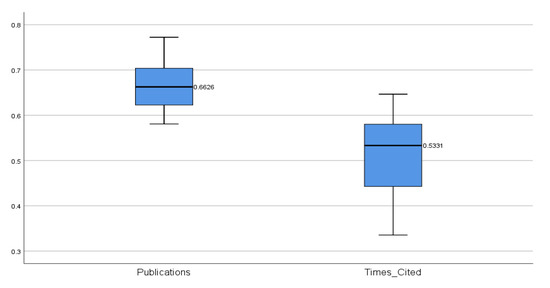

The study’s 7th step presents the median values of the correlations for 169 countries, its publications, and citations (Section 6). It was found that the median correlation of the dependent variable of the Publications—Country Success model with the independent variables (0.6626) is higher than in the Times Cited—Country Success model (0.5331). Therefore, it can be concluded that the independent variables in the Publications—Country Success model are more closely related to the dependent variable than in the Times Cited—Country Success model.
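A minimal sketch of how such a median correlation could be computed is given below. It assumes that the statistic is the median of the Pearson coefficients between the dependent variable and each independent variable; whether signed or absolute coefficients were used in the study is not stated here, so the signed version is shown. The function name and the toy data are hypothetical.

```python
import numpy as np

def median_correlation(X, y):
    """Return (median r, all r) for Pearson r between y and each column of X."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    # np.corrcoef returns a 2x2 matrix; entry [0, 1] is r between the two inputs.
    r = np.array([np.corrcoef(X[:, j], y)[0, 1] for j in range(X.shape[1])])
    return float(np.median(r)), r

# Toy data (not from the study): one perfectly correlated, one perfectly
# anti-correlated, and one uncorrelated independent variable.
y = np.array([1.0, 2.0, 3.0, 4.0])
X = np.column_stack([y, -y, [1.0, -1.0, -1.0, 1.0]])
med, r = median_correlation(X, y)
```

Comparing this median across two models, as done above for Publications and Times Cited, gives a single-number summary of how closely the independent variables track each dependent variable.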

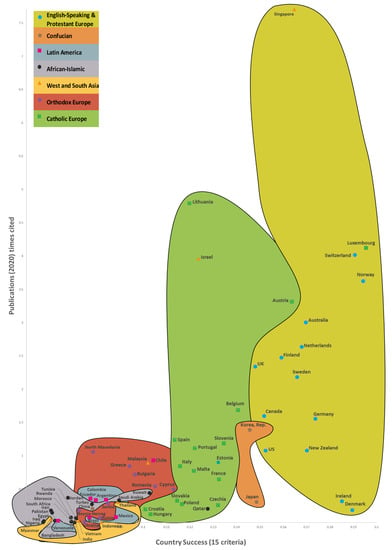

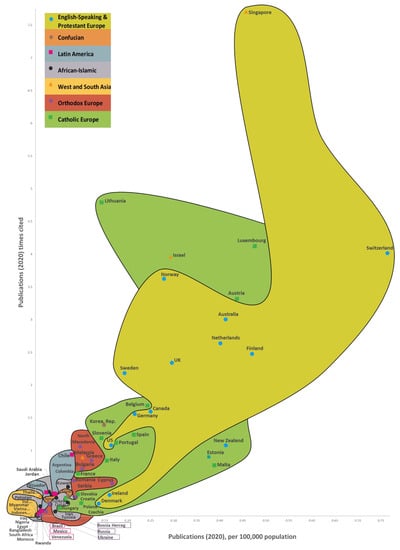

In Step 8, we developed three maps that illustrate the way the success of the 169 countries impacts the number of Web of Science articles on AFFECT (emotional, affective, and physiological states) recognition and their citation rates. The Country’s Success and AFFECT Recognition Publications (CSP) Maps of the World are a convenient way to illustrate how the three predominant CSP dimensions (a country’s success, the numbers of publications, and the frequency of articles being cited) are interconnected for the 169 countries, while the CSP models allow for these connections to be statistically analyzed from various perspectives. It also allows for CSP dimensions to be forecast based on the country’s success criteria. In other words, the CSP models give us a more detailed analysis of the CSP dimensions through statistical and multi-criteria analysis, while the CSP maps (Section 6) are more of a way to present the results in a visual manner. The amount of data available is gradually increasing, as is the knowledge gained from research conducted around the world. As a result, the CSP models are becoming better and better, and providing a clearer reflection of the actual picture. This means that they can effectively facilitate research and innovation policy decisions.

In Step 9, we used IBM SPSS V.26 to build two multiple linear regression models on 15 indicators of country success [66] and the three predominant CSP dimensions (Section 6). Two dependent variables (the number of publications and their citation rates) and 15 independent variables were analyzed to construct these models, and the effect size indicators describing them were calculated. The process was as follows:

- Construction of regression models for the numbers of publications and their citations.

- Calculation of statistical (Pearson correlation coefficient (r), standardized beta coefficient (β), coefficient of determination (R²), standard deviation, p-values) and non-statistical (research context, practical benefit, indicators with low values) effect size indicators describing these regression models.

It was found that changes in the values of the Country Success variable explain 89.5% of the variance of the Publications variable and 54.0% of the variance of the Times Cited variable. Additionally, when the value of the Country Success variable increases by 1%, the number of Web of Science articles published increases by 1.962% and their citations by 2.101%. A reliability analysis of the compiled regression models allows us to conclude that the models are suitable for analysis (p < 0.05). The 15 country success indicators explained 69.4% and 51.18% of the number of Web of Science articles published and their citations, respectively.
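A percent-per-percent reading such as "a 1% increase in country success goes with a 1.962% increase in publications" is what the slope of a log-log regression expresses. The sketch below illustrates that interpretation only; it is an assumption that a log-log specification underlies the reported figures, and the study itself used SPSS with 15 independent variables rather than the single predictor shown here. The data are synthetic and the function name is hypothetical.

```python
import numpy as np

def fit_loglog(x, y):
    """OLS fit of ln(y) = a + b * ln(x); returns (a, b, r_squared).

    The slope b is an elasticity: a 1% change in x goes with roughly a b% change in y.
    """
    lx = np.log(np.asarray(x, dtype=float))
    ly = np.log(np.asarray(y, dtype=float))
    b, a = np.polyfit(lx, ly, 1)  # highest-degree coefficient comes first
    residuals = ly - (a + b * lx)
    ss_res = float(np.sum(residuals ** 2))
    ss_tot = float(np.sum((ly - ly.mean()) ** 2))
    return float(a), float(b), 1.0 - ss_res / ss_tot

# Synthetic, noise-free data constructed with an elasticity of exactly 2.
success = np.array([1.0, 2.0, 4.0, 8.0, 16.0])
publications = 3.0 * success ** 2.0
a, b, r2 = fit_loglog(success, publications)
```

Here the fitted slope recovers the elasticity built into the synthetic data, and the coefficient of determination is essentially 1 because no noise was added. With real country data, both the slope and R² would of course differ.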

Step 10 was to assess the biometric systems under analysis: the rationale behind the available biometric and brain approaches was outlined, the efficacy of existing methods compared, and their ability to address issues and challenges present in the field determined (Section 7).

3. Emotion Models

First, this section discusses emotion models in more detail. Then, we choose the best option for our research and look at the Big Picture, i.e., the links between the selected emotion model and biometric and brain sensors, and the trends.

Emotional responses are natural to humans, and evidence shows they influence thoughts, behavior, and actions. Emotions fall into different groups related to various affects, corresponding to the current situation that is being experienced [71]. People encounter complex interactions in real life, and respond to them with complex emotions that often can be blends [72]. Emotional responses are the way for our brain and body to deal with our environment, and that is why they are fluid and depend on the context around us [73].

Two fundamental viewpoints form the basis in approaches to the classification of emotions: (a) emotions are discrete constructs and they have fundamental differences, and (b) emotions can be grouped and characterized on a dimensional basis [74]. These classifications (emotions as discrete categories and dimensional models of emotion) are briefly analyzed next.

In word recognition, alternative models have so far received little interest, and one example is the discrete emotion theory [75]. This theory posits that there is a limited set of universal basic emotions hardwired through evolution, and that each of the wide variety of affective experiences can essentially be categorized into this limited set [76,77]. The discrete emotion theory states that many emotions can be distinguished on the basis of expressive, behavioral, physiological, and neural features [78]. The definition of emotions provided by Fox [79] states that they are consistent and discrete response processes that can include verbal, physiological, behavioral, and neural mechanisms. They are triggered and changed by external or internal stimuli or events and respond to the environment. Russell and Barrett [80] argue that, unlike the discrete emotion theory, their alternative models can account for the rich context-sensitivity and diversity of emotions. Emotion blends have been classified into three kinds: (a) positive-blended emotions are blends of only positive emotions; (b) negative-blended emotions are blends of only negative emotions; and (c) mixed emotions are blends of both positive and negative emotions, as well as neutral ones. The way teachers have described blended emotions reflects that mathematics teaching involves many complex tasks, in which the teacher has to keep gauging the level of progress continuously [81].

Emotional dimensions represent the classes of emotion. Categorized emotions can be characterized in a dimensional form, with each emotion located in a different location in space, for example in 2D (the circumplex model, “consensual” model of emotion, and vector model) or 3D (the Lövheim cube, the pleasure–arousal–dominance (PAD) emotional state model, and Plutchik’s model) [82].

The circumplex model [83] proposes that emotions arise from two independent neurophysiological systems: one related to valence (a pleasure–displeasure continuum) and the other to arousal (activation–deactivation) [84]. Each emotion can be understood as a linear combination of these two dimensions, or as varying degrees of both valence and arousal [83,85]. We have already applied Russell’s circumplex model of emotions to perform a review of sensors and methods for human emotion recognition [85].

The vector model comprises two vectors. The model holds that there is an underlying dimension of arousal, with a binary choice of valence that determines direction. This results in two vectors that both start at zero arousal and neutral valence and proceed as straight lines, one in the direction of positive valence and the other in the direction of negative valence. Typically, the vector model uses direct scaling of the dimensions of each individual stimulus [86,87].

The positive activation–negative activation (PANA) or “consensual” model of emotion assumes that there are two separate systems: positive affect and negative affect. In the PANA model, the vertical axis represents low to high positive affect, and the horizontal axis represents low to high negative affect [88]. Positive Affect (PA) represents the extent (from low to high) to which a person shows enthusiasm for life, while Negative Affect (NA) represents the extent to which a person is feeling upset or unpleasantly aroused. Positive Affect and Negative Affect are independent and uncorrelated dimensions [89].

The Pleasure–Arousal–Dominance (PAD) Emotional-State Model offers a general three-dimensional approach to measuring emotions [90]. This 3D model captures emotional response, and includes the three dimensions of pleasure–displeasure (P), arousal–nonarousal (A), and dominance–submissiveness (D) as basic factors of emotional response [91]. The initials PAD stand for pleasure, arousal, and dominance, which span different emotions. For instance, pleasure can be happy/unhappy, hopeful/despairing, satisfied/unsatisfied, pleased/annoyed, content/melancholic, and relaxed/bored. Arousal can be excited/calm, stimulated/relaxed, wide-awake/sleepy, jittery/dull, frenzied/sluggish, and aroused/unaroused. Dominance can be important/awed, dominant/submissive, influential/influenced, controlling/controlled, in control/cared-for, and autonomous/guided [92]. The PAD emotional state model, the inverted U-curve theory, neuro-decision making, and neuro-decision and neuro-correlation tables are used to evaluate the impact of digital twin smart spaces (such as indoor air quality, lighting intensity and colors, learning materials, images, smells, music, pollution, and others) on users, to track their response dynamics in real time, and then to react to this response [93].

As noted above, the PAD is composed of three subscales reflecting pleasure, arousal, and dominance, each of which spans the pairs of opposing states listed above [92]. The affective space model makes it possible to visualize the distribution of emotions along the two axes of valence (V) and arousal (A). Using this model, different emotions can be identified, such as happiness, calmness, fear, and sadness [94].
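The two-axis affective space can be sketched as a simple quadrant lookup. The assignment of the four named emotions to quadrants below (happiness: positive valence and high arousal; calmness: positive valence and low arousal; fear: negative valence and high arousal; sadness: negative valence and low arousal) follows common usage and is an assumption, since the text does not spell it out. The function name, the [-1, 1] scaling, and the treatment of zero as non-negative are likewise illustrative choices.

```python
def affective_quadrant(valence: float, arousal: float) -> str:
    """Map a (valence, arousal) pair, each assumed to lie in [-1, 1], to a quadrant label."""
    if valence >= 0 and arousal >= 0:
        return "happiness"  # pleasant, activated
    if valence >= 0:
        return "calmness"   # pleasant, deactivated
    if arousal >= 0:
        return "fear"       # unpleasant, activated
    return "sadness"        # unpleasant, deactivated

# Hypothetical sensor-derived estimates.
labels = [affective_quadrant(v, a) for v, a in [(0.7, 0.6), (0.5, -0.4), (-0.8, 0.9), (-0.3, -0.7)]]
```

A recognition system would normally estimate valence and arousal from sensor features first and only then apply a mapping of this kind; finer-grained models replace the four quadrants with regions or continuous coordinates.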

The Swedish neurophysiologist Lövheim proposed a cube of emotion that directly relates specific combinations of the levels of three signal substances (serotonin, noradrenaline, and dopamine) to eight basic emotions [95]. In this three-dimensional model, the Lövheim cube of emotion, the signal substances form the axes of a coordinate system, and the eight basic emotions are placed in the eight corners of the resulting 3D space. In this model, anger is produced by the combination of high noradrenaline, high dopamine, and low serotonin [96].
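The cube can be represented as a lookup from the three substance levels to a corner emotion. Only the anger corner is stated in the text above; the remaining seven assignments are reproduced from Lövheim's published table as commonly cited and should be treated as an assumption to be verified against [95]. The names `LOVHEIM_CUBE` and `emotion_at` are hypothetical.

```python
from itertools import product

LEVELS = ("low", "high")

# Keys are (serotonin, dopamine, noradrenaline) levels.
# Only the anger entry is given in the text; the rest are assumed from [95].
LOVHEIM_CUBE = {
    ("low", "low", "low"): "shame/humiliation",
    ("low", "low", "high"): "distress/anguish",
    ("low", "high", "low"): "fear/terror",
    ("low", "high", "high"): "anger/rage",
    ("high", "low", "low"): "contempt/disgust",
    ("high", "low", "high"): "surprise/startle",
    ("high", "high", "low"): "enjoyment/joy",
    ("high", "high", "high"): "interest/excitement",
}

def emotion_at(serotonin: str, dopamine: str, noradrenaline: str) -> str:
    """Return the basic emotion at the cube corner with the given levels."""
    return LOVHEIM_CUBE[(serotonin, dopamine, noradrenaline)]

# Every corner of the 2 x 2 x 2 cube holds exactly one emotion.
all_corners = set(product(LEVELS, repeat=3))
```

The lookup makes the structure of the model explicit: three binary axes yield eight corners, one per basic emotion.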

The eight main categories of emotions defined by Robert Plutchik in the 1980s comprise two equal, opposing groups: half are positive emotions and the other half are negative ones [97]. To visualize the eight primary emotion dimensions (fear, trust, surprise, anticipation, anger, joy, disgust, and sadness), eight sectors have been isolated [98]. The Emotion Wheel shows each of the eight basic emotions highlighted with a recognizable color [99]. When we add another dimension, the Wheel of Emotions becomes a cone with its vertical dimension representing intensity. Moving from the outside towards the wheel’s center, emotions intensify, and this is highlighted by the indicator color. The intensity of emotions decreases towards the outer edge and the color, correspondingly, becomes less intense [98,99]. When feelings intensify, one feeling can turn into another: annoyance into rage, serenity into ecstasy, interest into vigilance, apprehension into terror, acceptance into admiration, pensiveness into grief, distraction into amazement, and, if left unchecked, boredom into loathing [98]. Some emotions have no color marking; they are a mix of two primary emotions [98,99]. Joy and anticipation, for instance, combine to become optimism. When anticipation combines with anger it becomes aggressiveness. The combination of trust and fear is submission, joy and trust combine to become love, surprise and fear become awe, the pair of disgust and anger becomes contempt, sadness and disgust combine to become remorse, and surprise and sadness become disapproval [100].
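The structure described here (eight primaries, an intensity gradient, and blends of neighboring primaries) maps naturally onto a small data structure. In the sketch below, the blends and the mild and intense variants are taken from the paragraph above; the circular ordering of the primaries and the pairing of each mild/intense variant with its primary follow the standard layout of Plutchik's wheel and are assumptions not listed explicitly in the text. All identifiers are hypothetical.

```python
# Primary emotions in their assumed circular order on the wheel.
WHEEL = ["joy", "trust", "fear", "surprise", "sadness", "disgust", "anger", "anticipation"]

# primary -> (mild variant at the outer edge, intense variant at the center)
INTENSITY = {
    "joy": ("serenity", "ecstasy"),
    "trust": ("acceptance", "admiration"),
    "fear": ("apprehension", "terror"),
    "surprise": ("distraction", "amazement"),
    "sadness": ("pensiveness", "grief"),
    "disgust": ("boredom", "loathing"),
    "anger": ("annoyance", "rage"),
    "anticipation": ("interest", "vigilance"),
}

# Blends of two primaries, as listed in the text.
DYADS = {
    frozenset({"joy", "anticipation"}): "optimism",
    frozenset({"anticipation", "anger"}): "aggressiveness",
    frozenset({"trust", "fear"}): "submission",
    frozenset({"joy", "trust"}): "love",
    frozenset({"surprise", "fear"}): "awe",
    frozenset({"disgust", "anger"}): "contempt",
    frozenset({"sadness", "disgust"}): "remorse",
    frozenset({"surprise", "sadness"}): "disapproval",
}

def opposite(emotion: str) -> str:
    """Return the primary emotion sitting directly across the wheel."""
    i = WHEEL.index(emotion)
    return WHEEL[(i + len(WHEEL) // 2) % len(WHEEL)]

def blend(a: str, b: str):
    """Return the named blend of two primaries, or None if the text lists none."""
    return DYADS.get(frozenset({a, b}))
```

With this ordering, every blend listed in the text joins two neighbors on the wheel, and `blend` is insensitive to argument order because the keys are unordered sets.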

After analyzing these emotion models, we decided to choose Plutchik’s wheel of emotions for our research. The ability to analyze human emotional, affective, and physiological states in an integrated manner offered by this model is one of the main reasons for our choice. The wheel is briefly discussed below.

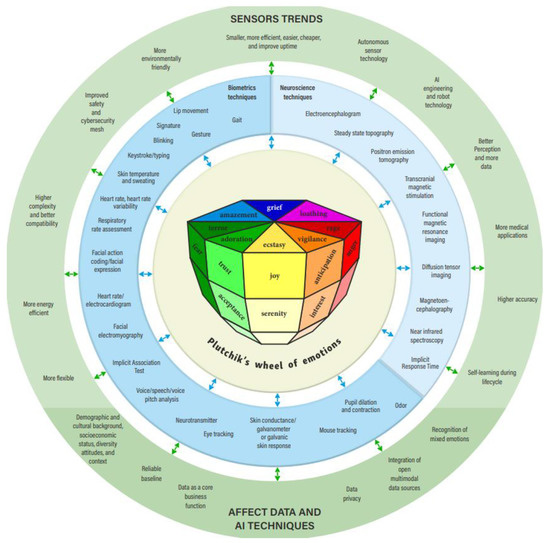

Several ways to classify emotions have been proposed in the field of psychology. For that purpose, the basic emotions are first identified and then they allow clustering with any other more complex emotion [101]. Plutchik [65] proposed a classification scheme based on eight basic emotions arranged in a wheel of emotions, similar to a color wheel. Just like complementary colors, this setup allows the conceptualization of primary emotions by placing similar emotions next to each other and opposites 180 degrees apart. Plutchik’s wheel of emotions classifies these eight basic emotions according to their physiological aim [102]. Emotions are coordinated with the body’s physiological responses. For example, when you are scared, your heart rate typically increases and your palms become sweaty. There is ample empirical evidence that suggests that physiological responses accompany emotion [103]. Another parallel with colors is the fact that some emotions are primary emotions and other emotions are derived by combining these primary emotions. The two models share important similarities, and such modelling can also serve as an analytical tool to understand personality. In this case, a third dimension has been added to the circumplex model to represent the intensity of emotions. The structural model of emotions is, therefore, shaped like a cone [104].

Figure 1 demonstrates Plutchik’s wheel of emotions, biometrics and brain sensors, and trends and interdependence in this Big Picture stage. At the center of the circles is Plutchik’s wheel of emotions. Plutchik’s wheel of emotions also includes affective attitudes (interest, boredom). Plutchik [65] notes that the same instinctual source of energy is discharged as part of the emotion felt and the underlying peripheral physiological process. Emotions can be of various levels of arousal or degrees of intensity [105]. Looking at the intensity of Plutchik’s eight basic emotions, Kušen et al. [106] identified variations in emotional valence. The first circle, therefore, analyzes, directly or indirectly, human arousal, valence, affective attitudes, and emotional and physiological states (AFFECT). Human AFFECT can be measured by means of neuroscience and biometric techniques. The market and global trends are a constant force affecting neuroscience and biometric technologies and their improvement. Based on the analysis of global sources [107,108,109,110] and our experience, Figure 1 presents brain and biometric sensors, as well as technique trends. Sensors will be able to integrate more and more new technologies and collect a greater variety of data, as they will become more accurate, more flexible, cheaper, smaller, greener, and more energy-efficient [108,109,110]. Network neuroscience, a new explicitly integrative approach towards brain structure and function, seeks new ways to record, map, model, and analyze what constitutes neurobiological systems and what interactions happen inside them. The computational tools and theoretical framework of modern network science, as well as the availability of new empirical tools to map extensively and record the way shifting patterns link molecules, neurons, brain areas and social systems, are two trends enabling and driving this approach [107].

Figure 1.

Plutchik’s wheel of emotions, biometrics and neuroscience sensors, and trends.

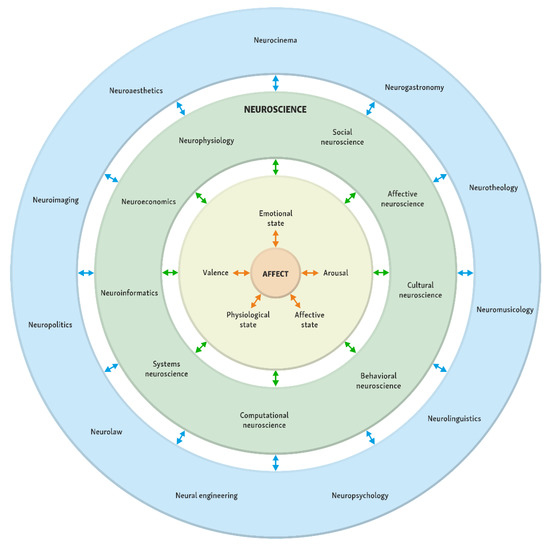
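The geometry of the wheel at the center of Figure 1 can be sketched in a few lines of code. The sketch below is a minimal illustration, assuming that the eight basic emotions are spaced evenly at 45-degree steps in Plutchik’s usual order. The function names `angle_of`, `opposite`, and `primary_dyad` are hypothetical, and the dyad labels are the commonly cited ones rather than values taken from this review.

```python
from typing import Optional

# Eight basic emotions in Plutchik's usual order (an assumption of this sketch),
# placed 45 degrees apart so that opposites sit 180 degrees apart.
WHEEL = ["joy", "trust", "fear", "surprise",
         "sadness", "disgust", "anger", "anticipation"]

# Commonly cited primary dyads: blends of two adjacent basic emotions.
DYADS = {
    frozenset(("joy", "trust")): "love",
    frozenset(("trust", "fear")): "submission",
    frozenset(("fear", "surprise")): "awe",
    frozenset(("surprise", "sadness")): "disapproval",
    frozenset(("sadness", "disgust")): "remorse",
    frozenset(("disgust", "anger")): "contempt",
    frozenset(("anger", "anticipation")): "aggressiveness",
    frozenset(("anticipation", "joy")): "optimism",
}


def angle_of(emotion: str) -> float:
    """Angular position of a basic emotion on the wheel, in degrees."""
    return WHEEL.index(emotion) * 360.0 / len(WHEEL)


def opposite(emotion: str) -> str:
    """Emotion located 180 degrees away on the wheel."""
    return WHEEL[(WHEEL.index(emotion) + len(WHEEL) // 2) % len(WHEEL)]


def primary_dyad(a: str, b: str) -> Optional[str]:
    """Blend of two adjacent basic emotions, or None if they are not adjacent."""
    return DYADS.get(frozenset((a, b)))
```

For example, `opposite("fear")` looks up the emotion half a turn away, and `primary_dyad("joy", "trust")` returns the derived emotion for that adjacent pair. The intensity dimension of the cone-shaped model is left out of this sketch.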

Figure 2 shows the numerous sciences and areas in which neuroscience and biometrics analyze AFFECT. According to Sebastian [111], neuroeconomics is the study of the effect of anticipating money decisions on our brain. It has become established as an academic, unifying field that seeks to describe the mechanisms of the decision-making process and to relate economic behavior and decision-making to economic disposition. Neuroeconomics integrates behavioral experiments and brain imaging in order to understand more clearly the mechanisms behind individual and collective decision-making [112]. Serra [113] reported that neuroeconomics researchers use neuroimaging and brain stimulation techniques such as functional magnetic resonance imaging (fMRI), magnetic resonance imaging (MRI), repetitive transcranial magnetic stimulation (rTMS), transcranial direct-current stimulation (tDCS), positron emission tomography (PET), and electroencephalography (EEG). Most of the challenges probed by neuroeconomics researchers are similar to the problems that a marketing researcher would recognize as part of their own functional domain [114]. Kenning and Plassmann [115] have also defined neuroeconomics as the application of neuroscientific methods to the evaluation and understanding of economically significant behavior.

Figure 2.

Neuroscience and biometric branches analyzing AFFECT in various sciences and fields.

According to Wirdayanti and Ghoni [116], neuromanagement draws on psychology and human biology to study decision-making in the management sciences. As stated by Teacu Parincu et al. [117], neuromanagement investigates the activity of the human brain and mental processes when people are confronted with management challenges, using cognitive neuroscience, in addition to other scientific disciplines and technologies, to evaluate economic and managerial problems. It focuses on the neurological activities related to decision-making and on developing personal as well as organizational intelligence (team intelligence). It also addresses the planning and management of people (for example, selection, training, group interaction, and leadership) [118].

Neuro-Information Science can be defined as the science that observes neurophysiological reactions associated with the peripheral nervous system, which are in turn connected to conventional cognitive activities. Michalczyk et al. [119] stated that neuro-information-systems research has developed into an established approach in the information systems (IS) discipline for evaluating and understanding user behavior. Riedl et al. [120] and Michalczyk et al. [119] concluded that neuro-information-systems research comprises studies based on all types of neurophysiological techniques, such as functional magnetic resonance imaging (fMRI), electroencephalography (EEG), functional near-infrared spectroscopy (fNIRS), electromyography (EMG), hormone studies, skin conductance and heart rate measurements, magnetoencephalography (MEG), and eye tracking (ET).

Neuro-Industrial Engineering (NeuroIE), which arose from the synergy between neuroscience and industrial engineering, offers solutions centered on the physiological status of people. Ma et al. [121] reported that NeuroIE obtains objective, real data by measuring human brain and physiological indexes with advanced brain AFFECT devices and biofeedback technology. It then evaluates the data, treats neural activity and physiological status as new components of operations management, and finally achieves better human–machine integration by adapting the work environment and production system to people’s reactions to the system, thereby preventing mishaps and enhancing efficiency and quality. According to Ma et al. [121], Neuro-Industrial Engineering is human-centered and draws on human physiological status data (e.g., EEG, EMG, GSR, and temperature). Zev Rymer [122] also stated that Neuro-Industrial Engineering is multidisciplinary in that it spans the neurological sciences (particularly neurology and neurobiology) as well as engineering disciplines such as simulation, systems modeling, robotics, signal processing, material sciences, and computer sciences. The area encompasses a range of topics and applications, for example, neurorobotics, neuroinformatics, neuroimaging, neural tissue engineering, and brain–computer interfaces.

When a user contacts an insurer, a bank, or any other call center, a version of Cogito’s software known as Dialog could be running in the background, helping the client service agent to deal with the client. Should the user become upset or angry, the client service agent can take the actions needed to satisfy the client. Cogito calls this service “digital intuition”. It is particularly useful in call centers because it can give feedback on conversations in real time. The software can also analyze the callers’ speaking rate and the dynamic range of their voices. For example, significant variations in pitch and stress in a caller’s tone could signify excitement or anger. Less variation, that is, a flat, monotonous tone, could imply a lack of interest or unconcern. Some companies use the software to help their employees engage new patients in healthcare programs that help to manage health challenges such as obesity or asthma. Cogito is among the recent for-profit research companies whose focus is on evaluating the signals that people give off subconsciously and that reveal their mindset. The evaluation of such social signals is beneficial beyond call centers and meeting rooms. According to Hodson [123], keeping track of conversations during surgeries or in plane cockpits could help surgeons and pilots to know whether their colleagues are really attentive to their directives, possibly saving lives.
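The pitch-based cues described in this paragraph can be illustrated with a toy heuristic. This is a sketch only and not Cogito’s method, which the source does not describe. The function name, the 10% and 25% thresholds, and the input format (a list of per-frame pitch estimates in Hz) are all assumptions made for illustration.

```python
from statistics import mean, pstdev


def describe_pitch_dynamics(pitch_hz, flat_cv=0.10, lively_cv=0.25):
    """Label a voice segment by its pitch variability.

    pitch_hz  : per-frame fundamental-frequency estimates in Hz (voiced frames only)
    flat_cv   : hypothetical threshold below which the tone is treated as monotonous
    lively_cv : hypothetical threshold above which the tone is treated as highly dynamic
    """
    if len(pitch_hz) < 2:
        raise ValueError("need at least two pitch estimates")
    # Coefficient of variation: spread of pitch relative to its average level.
    cv = pstdev(pitch_hz) / mean(pitch_hz)
    if cv < flat_cv:
        return "flat (possible lack of interest)"
    if cv > lively_cv:
        return "highly variable (possible excitement or anger)"
    return "moderate"
```

A real system would need a pitch tracker, speaker normalization, and validated thresholds; the labels here only mirror the qualitative interpretation given in the text.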

Areas in which the technology of recognizing emotions from speech can be applied include human–computer interaction and call centers [124].

4. Brain and Biometric AFFECT Sensors

4.1. Classifications

Globally, several classifications of biometric and neuroscience methods and technologies are used. Our research focuses on neuroscience methods that are non-invasive. The use of non-invasive brain stimulation is widespread in studies of neuroscience [125]. The non-invasive neuroscience methods are: transcranial magnetic stimulation (TMS), electroencephalography (EEG), magnetoencephalography (MEG), positron emission tomography (PET), functional magnetic resonance imaging (fMRI), near infrared spectroscopy (NIRS), diffusion tensor imaging (DTI), steady-state topography (SST), and others [126,127,128,129,130,131,132,133,134]. These non-invasive neuroscience methods are described in detail in Section 3. In the future, the authors of this article plan to analyze invasive neuroscience methods, too.

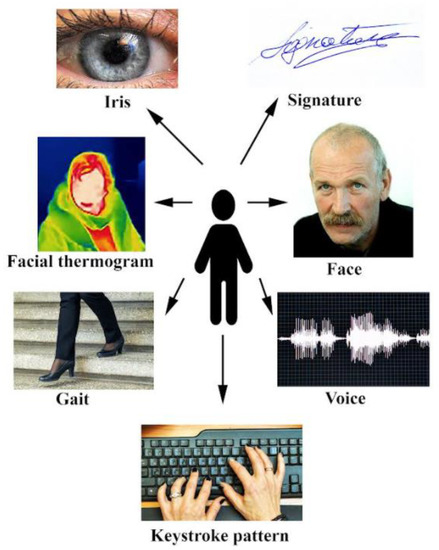

Biometrics can be physical or behavioral. In the first case, emotions can be identified from a person’s physical features, including the face, and in the second case from their behavioral characteristics, including gait, voice, signature, and typing patterns [135]. Various sensors can measure physiological signals, known as biometrics, capturing the response of bodily systems to things experienced through our senses, as well as things imagined, by tracking sleep architecture, heart rate variability (HRV), respiratory rate (RR), and resting heart rate (RHR) [136].

The scientific literature classifies biometrics into several types. Stephen and Reddy [137] and Banirostam et al. [138], for instance, classify biometrics into three categories: physiological, behavioral, and chemical/biological. Yang et al. [139] distinguish physiological and behavioral traits. Kodituwakku [140] holds that biometric technology can be classified into two general categories: physiological biometric techniques and behavioral biometric techniques. Jain et al. [141] and Choudhary and Naik [142] also classify biometrics into two categories: physiological and behavioral. In the literature, not only signature, voice, and gait are considered behavioral biometric features, but also ECG, EMG, and EEG [143], while other authors distinguish cognitive biometrics [144,145], including electroencephalography (EEG), electrocardiography (ECG), electrodermal response (EDR), blood volume pulse (BVP), near-infrared spectroscopy (NIRS), electromyography (EMG), eye trackers (pupillometry), hemoencephalography (HEG), and related technologies [145]. Some sources claim that eye tracking is a behavioral biometric [146], while others claim that it is a measurement in physiological computing [147]. Physiological biometrics measure physiological signals to determine identity, as well as to authenticate users and analyze their emotions. Respiration, perspiration, heartbeat, eye reactions to light, brain activity, emotions, and even body odor can be measured for numerous purposes, including physical and logical access control, payments, health monitoring, liveness detection, and neuromarketing [136].

Scientists identify the following AFFECT biometric types [139,140,141,142,148,149,150]:

- Physiological features: facial patterns, odor, pupil dilation and contraction, skin conductance, heart rate, respiratory rate, temperature, blood volume pulse, and others.

- Behavioral features: gait, keystroke, mouse tracking, signature, handwriting, speech/voice, and others.

The authors of this article have used the classification of biometrics proposed by the abovementioned authors (physiological and behavioral features).

Biometric technologies are usually divided into those of the first and second generation [151]. First-generation biometrics can confirm a person’s identity quickly and reliably, or authenticate them in different contexts, and law enforcement is one of the areas where such solutions are employed in practice [152]. The primary purpose of first-generation biometrics is identity verification, for example through facial recognition, and the technology is built around simple sensors that capture physical features and store them for later use [153]. Second-generation biometrics can also be used to detect emotions, with electro-physiological and behavioral biometrics (e.g., based on ECG, EEG, and EMG) as examples of such technologies [154]. Second-generation biometrics measure individual patterns of learned behavior or physiological processes, rather than physical traits, and are also known as behavioral biometrics [155]. They can analyze and evaluate emotions and detect intentions [156]. They also enable wireless collection of data about the body, which can then be used to infer an individual’s intent and emotions, as well as to track emotions across spaces [151,157]. We examine only physiological effects related to emotional reactions (i.e., second-generation biometrics); the use of biometric patterns for the identification of individuals is not discussed in this study.

A diverse range of AI algorithms has been applied to AFFECT recognition, for example machine learning, artificial neural networks, search algorithms, expert systems, evolutionary computing, natural language processing, metaheuristics, fuzzy logic, genetic algorithms, and others. Some of the most important supervised (classification, regression), unsupervised (clustering), and reinforcement learning algorithms are commonly used in biometrics and neuroscience research to detect emotions and affective attitudes. They are listed below:

- Among classification algorithms the most common choices are: naïve Bayes [158,159,160], Decision Tree [161,162,163], Random Forest [164,165,166], Support Vector Machines [167,168,169], and K Nearest Neighbors [170,171,172].

- Among regression algorithms the usual choices are: linear regression [173,174,175], Lasso Regression [176,177], Logistic Regression [178,179,180], Multivariate Regression [181,182], and Multiple Regression Algorithm [183,184].

- Among clustering algorithms the most common choices in biometrics or neuroscience research are: K-Means Clustering [185,186,187], Fuzzy C-means Algorithm [188,189], Expectation-Maximization (EM) Algorithm [190], and Hierarchical Clustering Algorithm [188,191,192].

- Among reinforcement learning algorithms the most common choices are: deep reinforcement learning [193,194,195] and inverse reinforcement learning [196].
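As an illustration of the classification family listed above, the sketch below implements a k-nearest-neighbors classifier in plain Python. The feature vectors (heart rate in beats per minute and skin conductance in microsiemens), the labels, and the numbers are invented toy data, not values from the cited studies.

```python
from collections import Counter
from math import dist


def knn_predict(train_x, train_y, query, k=3):
    """Return the majority label among the k training points closest to query."""
    # Sort training indices by Euclidean distance to the query point.
    order = sorted(range(len(train_x)), key=lambda i: dist(train_x[i], query))
    votes = Counter(train_y[i] for i in order[:k])
    return votes.most_common(1)[0][0]


# Toy samples: (heart rate in bpm, skin conductance in microsiemens).
TRAIN_X = [(62, 2.1), (65, 2.4), (60, 1.9), (95, 7.8), (102, 8.5), (98, 7.1)]
TRAIN_Y = ["calm", "calm", "calm", "aroused", "aroused", "aroused"]
```

Calling `knn_predict(TRAIN_X, TRAIN_Y, (64, 2.0))` classifies a new sample by its three nearest neighbors. In practice the features would be standardized first, because the raw distance here is dominated by whichever feature has the largest numeric range.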

4.2. Brain AFFECT Devices and Sensors

Neuroscience is associated with multiple fields of science, for example chemistry, computation, psychology, philosophy, and linguistics. Its research areas cover the behavioral, molecular, operative, evolutionary, cellular, and therapeutic aspects of the nervous system. The neuroscience market encompasses technologies (electrophysiology, neuro-microscopy, whole-brain imaging, neuroproteomics analysis, animal behavior analysis, neuro-functional study, etc.), components (services, instruments, and software), and end-users (healthcare centers, academic and research institutions, diagnostic laboratories, etc.) [197]. Global Industry Analysts Inc. (San Jose, CA, USA) [197] has grouped the global neuroscience market by component into instruments, software, and services.

Neuroscience provides valuable insights into the structure of the brain and into neurological, physical, and psychological activity. It helps neurologists to understand the various components of the brain, which can assist in the development of medications and techniques to treat and prevent many neurological disorders. The rising death rate from neurological disorders such as Parkinson’s disease, Alzheimer’s disease, schizophrenia, and other brain-related health challenges is the main factor driving the growth of the neuroscience market [198]. According to Neuroscience Market [198], the increasing demand for neuroimaging devices and ongoing brain mapping research and evaluation projects are other crucial growth-inducing factors.

Neuroscience covers a whole range of branches, such as neuroevolution, neuroanatomy, developmental neuroscience, neuroimmunology, cellular neuroscience, neuropharmacology, clinical neuroscience, cognitive neuroscience, nanoneuroscience, molecular neuroscience, neurogenetics, neuroethology, neurochemistry, neurophysics, paleoneurobiology, neurology, and neuro-ophthalmology.

Other branches of neuroscience analyze AFFECT in various related sciences and fields, such as affective neuroscience [199,200], neuroinformatics [201,202], neuroimaging [203,204], systems neuroscience [205,206], computational neuroscience [207,208], neurophysiology [51,209], behavioral neuroscience [210,211], neural engineering [212,213], neuroeconomics [214,215], neurolinguistics [216,217], neuropsychology [218,219,220], neurophilosophy [221,222,223], neuroaesthetics [224,225,226], neurotheology [227,228,229], neuropolitics [230,231,232], neurolaw [233,234,235], social neuroscience [236,237], cultural neuroscience [238,239], neuroliterature [240,241,242], neurocinema [243,244,245], neuromusicology [246,247,248], and neurogastronomy [249,250].

For example, Lim [251] identifies the following neuroscientific techniques for neuromarketing:

- Electromagnetic methods, including magnetoencephalography (MEG), electroencephalography (EEG), and steady-state topography (SST). MEG measures the magnetic fields produced by the brain’s natural electrical currents and is used to track the changes that occur when participants see or interact with various promotional outputs. EEG measures the ways in which brainwaves change and is used to detect changes when participants see or interact with various promotional outputs (an electrode band or helmet is used for this purpose). SST measures a steady-state visually evoked potential and is used to determine how brain activity changes depending on the task;

- Metabolic methods, including positron emission tomography (PET) and functional magnetic resonance imaging (fMRI). PET is used to examine the metabolism of glucose within the brain with great accuracy by tracing radiation pulses, while fMRI is used to measure blood flow in the brain to determine changes in brain activity;

- Electrocardiography (ECG), which uses external skin electrodes to measure electrical changes related to cardiac cycles;

- Facial electromyography (fEMG), which amplifies tiny electrical impulses to record the physiological properties of the facial muscles;

- Transcranial magnetic stimulation (TMS), which is used to observe the effects of promotional output on behavior by temporarily disrupting specific brain activities. TMS is a non-invasive, safe brain stimulation method. By means of a strong electromagnet, this technique momentarily generates a short-lived virtual lesion, i.e., it disrupts information processing in one of the brain regions. If stimulation interferes with performing a certain task, the affected brain region is, then, necessary for normal performance of the task [252].

Table 1 demonstrates traditional non-invasive neuroscience methods.

Table 1.

Traditional non-invasive neuroscience methods.

For clarity, several descriptions of traditional neuroscience methods are presented below.

Wearable healthcare devices store a lot of sensitive personal information, which makes the security of these devices essential. Sun et al. [272] proposed an acceleration-based gait recognition method to improve gait-based recognition of elderly people. Gait is also a good indicator in health assessment; Majumder et al. [273] created a simple wearable gait analyzer for the elderly to support healthcare needs.

Lim [251] states that neuroscientific methods and tools include those that track, chart, and record the activity of a person’s neural system and brain in relation to a certain behavior, and neurological representations of this activity can then be generated to shed light on how an individual’s brain and nervous system respond when the person is exposed to a stimulus. In this way, neuroscientists can observe the neural processes as they happen in real time. There are three main types of neuroscientific method: those that track what is happening inside the brain (metabolic and electromagnetic activity); those that track what is happening at the neural level outside the brain; and those that can influence neural activity (Table 1, Figure 1).

Technical details of non-invasive neuroscience methods, including the origin of the measured signal and the engineering and physical principles of the sensors, are provided in the research literature for EEG [274,275,276], MEG [277,278,279], TMS [280,281,282], etc.

Gannouni et al. [283] have proposed a new approach to emotion recognition from EEG signals. To achieve better emotion recognition using brain signals, they applied a novel adaptive channel selection method. The method is based on the recognition that different persons have unique brain activity that also differs from one emotional state to another. Gannouni et al. [283] argue that emotion recognition using EEG signals needs a multi-disciplinary approach, encompassing areas such as psychology, engineering, neuroscience, and computer science. With the aim of improving the reproducibility of EEG-based emotion measurement, Apicella et al. [35] have proposed an EEG-based method for detecting emotional valence, and their experiments achieved an accuracy of 80.2% in cross-subject analysis and 96.1% in within-subject analysis. Dixson et al. [284] have pointed out that facial hair may interfere with the detection of emotional expressions in a visual search. In the face-in-the-crowd paradigm, facial hair may also interfere with the detection of happy expressions, rather than facilitating an anger superiority effect as a potential system for threat detection.
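The difference between cross-subject and within-subject analysis mentioned above lies in how the data are split for evaluation. The sketch below shows the two splitting schemes on hypothetical records of the form (subject_id, trial_id). The function names and data layout are assumptions, and no classifier or EEG processing is included.

```python
def cross_subject_splits(records):
    """Leave-one-subject-out: test on one subject, train on all the others."""
    subjects = sorted({subject for subject, _ in records})
    for held_out in subjects:
        train = [r for r in records if r[0] != held_out]
        test = [r for r in records if r[0] == held_out]
        yield train, test


def within_subject_splits(records, test_every=3):
    """Per subject: hold out every test_every-th trial, train on that subject's rest."""
    subjects = sorted({subject for subject, _ in records})
    for subject in subjects:
        own = [r for r in records if r[0] == subject]
        test = own[::test_every]
        train = [r for r in own if r not in test]
        yield train, test


# Hypothetical dataset: 3 subjects with 6 trials each.
RECORDS = [(s, t) for s in ("S1", "S2", "S3") for t in range(6)]
```

In the cross-subject scheme the model never sees data from the test subject, which is why cross-subject accuracy is typically the harder figure to achieve.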

Wang et al. [285] introduced an EEG-based emotion recognition system to classify four emotional states (joy, sadness, fear, and relaxation). Their experiments used movie elicitation to acquire EEG signals from their subjects [285]. The way in which meditation influences emotional response was investigated via the EEG functional connectivity of selected brain regions as the subjects experienced happiness, anger, or sadness, or were relaxed, before and after meditation.

Neurometrics is a quantitative EEG method. Applied to individual records, it provides a reproducible, precise estimate of deviations from normal. Meaningful and reliable results require a sufficient amount of good-quality raw data that have been transformed to Gaussian distributions, correlated with age, and corrected for intercorrelations among measures [286]. Businesses, government agencies, and individuals use neurometric information when they need to make timely and profitable decisions. The techniques involved are based on biometric information, eye tracking, facial action coding, and implicit response testing, and are used to understand and record human sentiments and other related feedback [161].
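The idea of expressing an individual EEG measure as a deviation from normal can be sketched as a z-score against age-matched norms. The sketch below assumes a log transform to approximate a Gaussian distribution and a linear dependence of the normative mean on age. The function name, the norm parameters, and the numbers are hypothetical and are not taken from [286]; the correction for intercorrelations among measures is omitted.

```python
from math import log


def neurometric_z(power_uv2, age_years, intercept, slope, sd):
    """Z-score of log-transformed band power relative to an age-dependent norm.

    intercept, slope : hypothetical normative regression of log power on age
    sd               : hypothetical normative standard deviation of log power
    """
    if power_uv2 <= 0 or sd <= 0:
        raise ValueError("power and sd must be positive")
    observed = log(power_uv2)                 # log transform toward Gaussianity
    expected = intercept + slope * age_years  # age-matched normative mean
    return (observed - expected) / sd
```

A value near zero means the measure matches the age-matched norm, while a large absolute value flags a deviation from normal.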

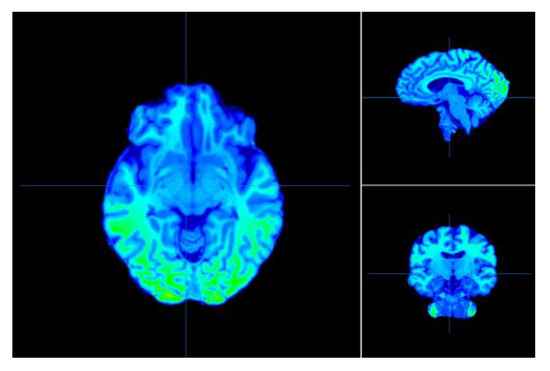

The fronto-striatal network is involved in a range of cognitive, emotional, and motor processes, such as decision-making, working memory, emotion regulation, and spatial attention. Practice shows that intermittent theta burst transcranial magnetic stimulation (iTBS) modulates the functional connectivity of brain networks. Treatments of mood disorders with transcranial magnetic stimulation (TMS) therapy (Figure 3) usually involve high stimulation intensities and long stimulation intervals [287].

Figure 3.

Resting state TMS brain scan image [287].

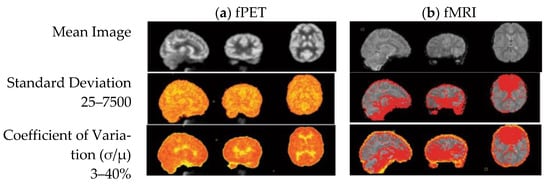

One imaging technique is FDG-PET/fMRI (simultaneous [18F]-fluorodeoxyglucose positron emission tomography and functional magnetic resonance imaging). This technique makes it possible to image the cerebrovascular hemodynamic response and cerebral glucose uptake. These two measures of energy dynamics in the brain can provide useful information. Resting-state fMRI connectivity has been another very useful technique for characterizing interactions between distributed brain regions in humans, while metabolic connectivity can be a complementary measure for investigating the dynamics of the brain network. Functional PET (fPET), a new approach with high temporal resolution, can be used to measure fluoro-d-glucose (FDG) uptake and looks like a promising method for assessing the dynamics of neural metabolism [288]. Figure 4 shows raw images of signal intensity variation across the brain for one individual subject.

Figure 4.

Raw images of fPET and fMRI scans [288].

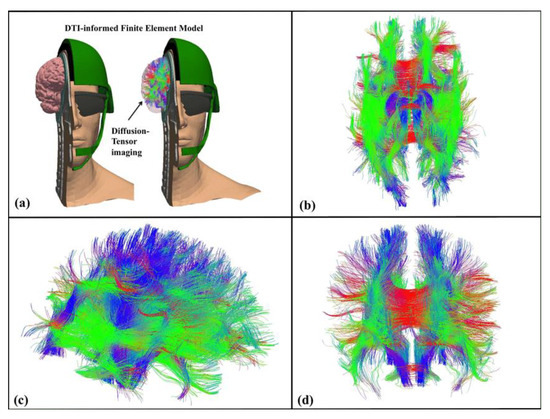

Many biological tissues composed of fibers, which are groups of cells aligned in a uniform direction, have anisotropic properties. In the white matter regions of the human brain, for instance, axons usually form complex fiber tracts that enable anatomical communication and connectivity. Non-invasive tools can visualize these groups of axonal fibers. One of them is diffusion tensor imaging (DTI), a particular application of the broader diffusion-weighted imaging (DWI). The basic principle behind this technique is that water diffuses more slowly perpendicular to the preferred direction, whereas diffusion is more rapid in the direction aligned with the internal structure. The DTI outputs can be further used to compute diffusion anisotropy measures such as the fractional anisotropy (FA). The principal direction of the diffusion tensor can also be used to obtain estimates of the white matter connectivity in the brain. Figure 5 shows an example of DTI tractography, or visualization of the white matter connectivity [289].

Figure 5.

DTI can be used to construct a transversely isotropic model by overlaying axonal fiber tractography on a finite element mesh: (a) DTI-informed Finite Element Model; tractography shows complex fibers from (b) the dorsal view, (c) the right lateral side view, and (d) the posterior view. Cartography of the tracts’ position, direction by color: red for right-left, blue for foot-head, green for anterior-posterior [289].
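The fractional anisotropy (FA) measure that can be derived from DTI outputs is computed from the three eigenvalues of the diffusion tensor. The sketch below uses the standard FA formula; the function name and any example eigenvalues are illustrative and not taken from [289].

```python
from math import sqrt


def fractional_anisotropy(l1, l2, l3):
    """FA from the diffusion tensor eigenvalues: 0 = isotropic, 1 = fully anisotropic."""
    mean_diffusivity = (l1 + l2 + l3) / 3.0
    numerator = ((l1 - mean_diffusivity) ** 2
                 + (l2 - mean_diffusivity) ** 2
                 + (l3 - mean_diffusivity) ** 2)
    denominator = l1 ** 2 + l2 ** 2 + l3 ** 2
    if denominator == 0:
        return 0.0  # no diffusion measured; treated as isotropic in this sketch
    return sqrt(1.5 * numerator / denominator)
```

Equal eigenvalues, as in freely diffusing water, give an FA of zero, while diffusion confined to a single direction, as along a tightly packed fiber bundle, gives an FA close to one.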

4.3. Physiological and Behavioral Biometrics

Physiological biometrics (as opposed to behavioral biometrics) is a category of approaches that refers to physical measurements of the human body, including face, pupil constriction and dilation [290]. When a recognition system is based on physiological characteristics it can ensure a comparatively high accuracy [291]. The ubiquity of electronics such as cell phones and computers, and evolving sensor technology offer human beings new possibilities to track their behavioral and physiological features and evaluate the associated biometric results. Advances in mobile devices mean they now have many efficient and complex sensors. Biometric technology often contributes to mobile application growth, including online transaction efficiency, mobile banking, and voting. The global market for biometric systems is wide and comprises many different segments such as healthcare, transportation and logistics, security, military and defense, government, consumer electronics, and banking and finance [292].

Table 2 presents widely used physiological and behavioral biometrics.

Table 2.

Physiological and behavioral biometrics.

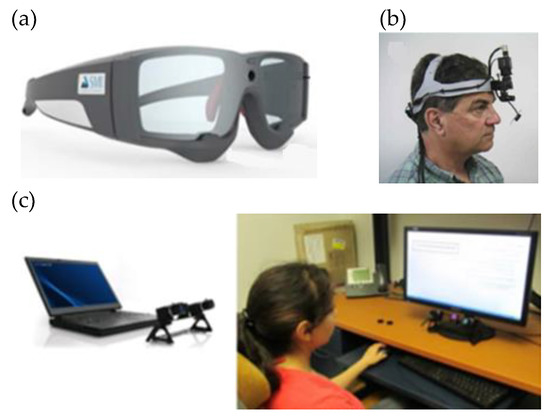

Most of today’s eye tracking systems are video-based, with an eye video camera and infrared illumination. Eye tracking systems can be categorized as tower-mounted, mobile, or remote based on how they interface with the environment and the user (Figure 6), and different video-based eye tracking systems are required depending on the experiment, the environment, and the type of activity to be studied [313]. Researchers have used eye tracking for behavioral research.

Figure 6.

Sample of various kinds of eye-tracking tools: (a) eye-tracking glasses [314]; (b) helmet-mounted [315]; (c) remote or table [316].

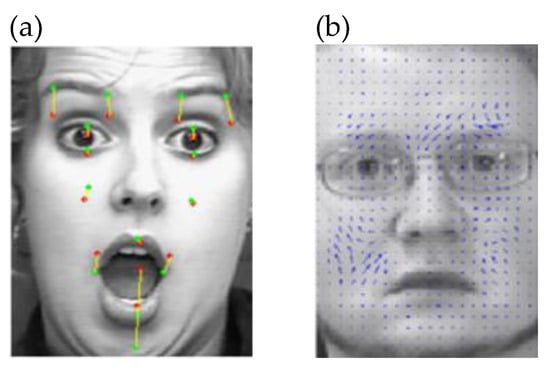

The left image in Figure 7 shows the last frame of an expression of surprise on a sample face from the Cohn–Kanade database and highlights the trajectories (the bright lines that change from darker to brighter from start to end) followed by each tracked feature point. The left image is the result of applying feature-point optical flow to a subset of 15 points, and the right image shows the application of the dense flow method [317].

Figure 7.

Facial expression recognition: (a) feature point tracking; (b) dense flow tracking [317].

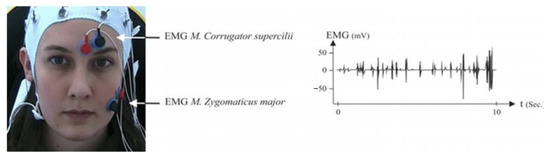

A group of participants was tested to record facial EMG (fEMG) activity. Following the guidelines for fEMG placement recommended by Fridlund and Cacioppo, two 4-mm bipolar miniature silver/silver chloride (Ag/AgCl) skin electrodes were placed on their left corrugator supercilii and zygomaticus major muscle regions (Figure 8) [318]. To avoid bad signals or other unwanted influences, the BioTrace software (on NeXus-32) was used to visualize and, if necessary, correct the biosignals before each recording. Figure 8 shows the arrangement of fEMG electrodes on the M. zygomaticus major and M. corrugator supercilii. An example of a filtered electromyography (EMG) signal is shown on the right side [319].

Figure 8.

Placement of fEMG electrodes and a sample of a filtered EMG signal [319].

Humans have a range of biometric traits that can be a basis for various biometric recognition systems (Figure 9). Other biometric traits include the iris, face thermogram, gait, keystroke pattern, voice, face, and signature. They can have different significance. For example, an iris scan has high accuracy, medium long-term stability, and a medium security level, while voice recognition has low accuracy, low long-term stability, and a low security level [320]. The choice of biometric traits, however, invariably depends on the availability of dataset samples, the application, the accepted tolerance, and the level of complexity [150].

Figure 9.

Other examples of biometric traits.

Biometric sensors are transducers that convert the biometric traits of a person, such as face, voice, and other characteristics, into an electrical signal. These sensors read or measure speed, temperature, electrical capacity, light, and other types of energy. Different technologies are available, using digital cameras, sensor networks, and complex combinations. Every biometric device requires at least one type of sensor, and biometric sensors are a key feature of emotion recognition technology. A biometric sensor can be, for example, a microphone for voice capture or a high-definition camera for facial recognition [321].

Jain et al. [141] state that enrolment and emotion recognition are the two main phases in biometric emotion recognition systems. In the enrolment phase, an individual’s biometric data are acquired and stored in the database along with the emotion recognition details. In the recognition phase, the stored data are compared with re-acquired biometric data of the same individual to determine emotions. A biometric system is, therefore, a pattern recognition system consisting of a database, sensors, a feature extractor, and a matcher.
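The two phases and the four components named above (database, sensor, feature extractor, and matcher) can be sketched as a small class. Everything in it is a hypothetical stand-in: the sensor is simply a list of numbers passed in by the caller, the feature extractor computes a mean and a range, and the matcher is a nearest-template lookup.

```python
from math import dist


class AffectRecognizer:
    """Toy pattern-recognition system: database + feature extractor + matcher."""

    def __init__(self):
        self.database = {}  # (person, emotion label) -> stored feature template

    @staticmethod
    def extract_features(samples):
        """Feature extractor: reduce a raw signal to (mean, range)."""
        return (sum(samples) / len(samples), max(samples) - min(samples))

    def enrol(self, person, emotion, samples):
        """Enrolment phase: store a template together with its emotion label."""
        self.database[(person, emotion)] = self.extract_features(samples)

    def recognize(self, person, samples):
        """Recognition phase: match re-acquired data against stored templates."""
        features = self.extract_features(samples)
        candidates = {emotion: template
                      for (who, emotion), template in self.database.items()
                      if who == person}
        if not candidates:
            raise KeyError(f"no templates enrolled for {person!r}")
        return min(candidates, key=lambda e: dist(candidates[e], features))
```

After enrolling labeled recordings for a person with `enrol`, a later call to `recognize` with a fresh recording returns the label of the closest stored template.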

Loaiza [322] states that overall physiological effects related to emotional reactions depend on three types of autonomic variables: (1) the cardiac system, including blood pressure, cardiac cycles, and heart rate variability; (2) respiration, including amplitude, respiration period, and respiratory cycles; and (3) electrodermal activity, including resistance, responses, and skin conductance levels. Ekman [77] reports that different emotions can have very different autonomic variables. For instance, in contrast to someone in a happy state, an angry person has a higher heart rate and temperature. Furthermore, the feeling of fear is also accompanied by a higher heart rate. Pace-Schott et al. [323] argue that the ability to regulate physiological state and the regulation of emotion are two inseparable features. Physiological feelings contribute to emotion regulation, reproduction, and survival.
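Heart rate variability, one of the cardiac variables listed above, is often summarized with the time-domain statistics SDNN and RMSSD. The sketch below computes both from a list of RR intervals in milliseconds. The function name and the choice of these two statistics are illustrative, and artifact removal is omitted.

```python
from math import sqrt
from statistics import mean, stdev


def hrv_time_domain(rr_ms):
    """Return mean heart rate (bpm), SDNN (ms), and RMSSD (ms) from RR intervals."""
    if len(rr_ms) < 3:
        raise ValueError("need at least three RR intervals")
    # Differences between successive RR intervals.
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    return {
        "mean_hr_bpm": 60000.0 / mean(rr_ms),            # 60,000 ms per minute
        "sdnn_ms": stdev(rr_ms),                         # overall variability
        "rmssd_ms": sqrt(mean([d * d for d in diffs])),  # beat-to-beat variability
    }
```

The function takes the intervals between successive heartbeats, for example as detected from an ECG, and returns the three summary values in a dictionary.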

Many works have focused on emotion detection using different techniques [35,283,284,324,325,326,327]. Shared tasks (e.g., WASSA-2017, SemEval) have also included emotion detection tasks that cover four categories of emotions (anger, fear, sadness, and joy) [320]. According to Saganowski et al. [326], the most common approach to the use of physiological signals in emotion recognition is to (1) collect and clean data; (2) preprocess, synchronize, and integrate signals; (3) extract and select features; and (4) train and validate machine learning models.
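The four steps attributed to Saganowski et al. above can be sketched as a chain of small functions. This is a structural outline only: the cleaning rule, the moving-average preprocessing, the two features, the nearest-centroid model, and all names and numbers are placeholders chosen for illustration, not the methods used in [326]. Synchronization and integration of multiple signals in step 2 are left out.

```python
from math import dist
from statistics import mean, pstdev


def clean(signal, low, high):
    """Step 1: drop samples outside a plausible physiological range."""
    return [x for x in signal if low <= x <= high]


def preprocess(signal, window=3):
    """Step 2 (simplified): smooth a single signal with a moving average."""
    return [mean(signal[i:i + window]) for i in range(len(signal) - window + 1)]


def extract_features(signal):
    """Step 3: summarize a signal as (mean level, spread)."""
    return (mean(signal), pstdev(signal))


def train(features, labels):
    """Step 4a: fit a nearest-centroid model (one mean vector per label)."""
    centroids = {}
    for label in set(labels):
        rows = [f for f, y in zip(features, labels) if y == label]
        centroids[label] = tuple(mean(col) for col in zip(*rows))
    return centroids


def validate(centroids, features, labels):
    """Step 4b: accuracy of nearest-centroid predictions on held-out data."""
    hits = sum(min(centroids, key=lambda c: dist(centroids[c], f)) == y
               for f, y in zip(features, labels))
    return hits / len(labels)
```

Each recording passes through `clean`, `preprocess`, and `extract_features` in turn; the resulting feature tuples and their labels are then given to `train`, and `validate` reports accuracy on recordings that were not used for training.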

Physiological signals are a natural expression of the human body and can be used with great success in the classification of emotional states. EEGs, temperature measurements, and electrocardiograms (ECGs) are examples of such signals. They can help us to classify emotional states such as anger, sadness, or happiness, and can be captured by different sensors to identify individual differences. The goal of all of these physiological methods is to evaluate consumer attention and to get a particular message noticed, and their performance in this area is commendable. The advantages of these techniques include their creative and versatile placement, the stimulation of interest through novel means that capture attention, the ability to directly target and personalize messages, and lower implementation costs [328]. To study marketing trends, Singh et al. [328] recommend avoiding costly research methods such as fMRI and EEG, and instead using smaller and cheaper galvanic readings and eye tracking (ET) to investigate brain responses. These authors also propose a fuzzy rule-based algorithm to anticipate consumer behavior by detecting six facial expressions from still images.

Various organizations are contributing to the progress of biometric standards, such as international standards organizations (International Electrotechnical Commission, ISO-JTC1/SC37, London, UK), national standards bodies (American National Standards Institute, New York, NY, USA), standards-developing organizations (International Committee for Information Technology Standards, American National Institute of Standards and Technology, Information Technology Laboratory), and other related organizations (International Biometrics and Identification Association, International Biometric Group, Biometric Consortium, Biometric Center of Excellence) [329]. De Angel et al. [330] offer numerous recommendations for improving the generalizability of the research and generating a more standardized approach to sensing in depression:

- Sample recommendations include reporting on recruitment strategies, sampling frames and participation rates; increasing the diversity of the study population by enrolling participants of different ages and ethnicities; reporting basic demographic data such as age, gender, ethnicity, and comorbidities; and measuring and reporting participant engagement and acceptability in terms of attrition rates, missing data, and/or qualitative data.

- Recommendations for machine learning models include describing the model selection strategy, the performance metrics, and the parameter estimates in the model, with confidence intervals or nonparametric equivalents.

- Recommendations for data collection and analysis include using established and validated scales for depression assessment; presenting any available evidence on the validity and reliability of the sensor or device used; describing data processing and feature construction in sufficient detail to enable replication; and providing a definition and description of how missing data are handled.

- Recommendations for data sharing include making the code used for feature extraction available within an open science framework and sharing anonymized datasets in data repositories.

- The key recommendation is to recognize the need for consistent reporting in this area, given that many studies, especially in the field of computer science, fail to report basic demographic information. A common framework should be developed that has standardized assessment and analysis tools, reliable feature extraction, and missing data descriptions, and that has been tested in more representative populations.
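As an illustration of the recommendation to report performance metrics with confidence intervals or nonparametric equivalents, the following minimal sketch computes a percentile bootstrap interval for classification accuracy. The function name, the number of resamples, and the 95% level are assumptions chosen for the example; they are not prescribed by De Angel et al. [330].

```python
import random


def bootstrap_accuracy_ci(y_true, y_pred, n_resamples=2000, alpha=0.05, seed=0):
    """Return (accuracy, lower, upper) using a percentile bootstrap."""
    rng = random.Random(seed)
    # 1 where the prediction matches the true label, 0 otherwise.
    correct = [int(t == p) for t, p in zip(y_true, y_pred)]
    n = len(correct)
    stats = []
    for _ in range(n_resamples):
        # Resample participants with replacement and recompute accuracy.
        sample = [correct[rng.randrange(n)] for _ in range(n)]
        stats.append(sum(sample) / n)
    stats.sort()
    # Approximate percentile bounds of the bootstrap distribution.
    lower = stats[int((alpha / 2) * n_resamples)]
    upper = stats[int((1 - alpha / 2) * n_resamples) - 1]
    return sum(correct) / n, lower, upper
```

For example, `bootstrap_accuracy_ci(labels, predictions)` returns the point estimate together with an approximate 95% interval, which can be reported alongside the model selection strategy.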

Studies in neuromarketing, neuroeconomics, neuromanagement, neuro-information systems, neuro-industrial engineering, products, services, and call centers use various instruments and techniques to measure users' psychological states. Some of these tools are more complex than others, and the results that are produced can vary widely [331]. They fall into three major categories: the first two contain tools used for neuroimaging (medical devices offering in vivo information on the nervous system), which measure neuronal metabolism and brain electrical activity, respectively, while the third contains tools used to evaluate neurophysiological indicators of the mental states of an individual. Leading neuroimaging tools such as fMRI and PET fall into the first category; EEG, MEG, and other less invasive and cheaper neuroimaging devices that measure electrical activity in the brain [332] fall into the second category; and tools that track and record individual signals of broader physiological reaction and response (e.g., electrodermal activity and ET) fall into the third category.

Next, we review the literature and examine the various types of arousal, valence, affective attitudes, and emotional and physiological states (AFFECT) recognition methods in more detail. A summary of the outcomes is provided in Table 3.

Table 3.

An overview of studies on arousal, valence, affective attitudes, and emotional and physiological states (AFFECT) recognition.

The combination of several different approaches to the recognition and classification of emotional states (also known as multimodal emotion recognition) is currently a research area of great interest, especially since the use of different physiological signals can provide huge amounts of data and each physiological signal can have a significant impact on the ability to classify emotions [333]. Table 3 presents an overview of studies related to the recognition of valence, arousal, emotional states, physiological states, and affective attitudes (AFFECT). A brief overview of some of these studies follows.

Many scientists and practitioners have earned acclaim and honor for their research in areas such as diagnostics, large-scale screening, analysis, monitoring, and categorization of people by COVID-19 symptoms. Their work relied on early warning systems, wearable technologies, the Internet of Medical Things, IoT-based systems, biometric monitoring technologies, and other tools that can assist during the COVID-19 pandemic. Javaid et al. [438] review how different Industry 4.0 technologies (e.g., AI, IoT, big data, and virtual reality) can help reduce the spread of disease. Kalhori et al. [439] and Rahman et al. [440] discuss digital health tools to fight COVID-19. Various sensors and mobile devices to detect the disease, reduce its spread, and measure different symptoms are also widely discussed. Rajeesh Kumar et al. [441] propose a system to identify asymptomatic patients using IoT-based sensors that measure blood oxygen level, body temperature, blood pressure, and heartbeat. Stojanović et al. [442] propose a phone headset to collect information about respiratory rate and cough, and Xian et al. [443] present a portable biosensor to test saliva. Chamberlain et al. [444] present distributed networks of smart thermometers that track COVID-19 transmission epicenters in real time.

Neurotransmitters (NT) are billions of molecules constantly needed to keep human brains functioning. They are chemical messengers that carry, balance, and boost signals travelling between nerve cells (neurons) and other cells in the body. Many different psychological and physical functions can be affected by these chemical messengers, including fear, appetite, mood, sleep, heart rate, breathing rate, concentration and learning [445]. Lim [251] has also outlined new ways of exploiting neuromarketing research to achieve a better understanding of the brain and neural activity and hence advance marketing science. Lim [251] highlighted three main aspects: (i) antecedents (such as the product, physical evidence, the price of the product, the place where everything is happening, promotion, the process involved, people); (ii) the process; and (iii) the consequences for the target market (behavioral outcomes before, during and after the act of buying) and the marketing organization (visits, sales, awareness, equity). Agarwal and Xavier [253] described the most popular neuromarketing tools, including event-related potential (ERP) (P300), EEG, and fMRI, and explained how these tools could be applied in marketing. A business and marketing article [256] lists the three categories of neuroscientific techniques that are applied in business and advertising research (Table 1 and Table 2, Figure 1) as follows:

- Methods that monitor what is happening in the brain (i.e., the physiological activity of the CNS);

- Methods that record what is happening elsewhere in the body (i.e., the physiological activity of the PNS);

- Other techniques for tracking behavior and conduct.

Ganapathy [260] groups neuromarketing tools into three categories (Table 1 and Table 2). Farnsworth [258] gives information that can be essential when deciding on the best neuromarketing method or technique to help stakeholders understand research methods relating to human behavior at a glance, while Saltini [264] gives a short list of neuromarketing tools (Table 1 and Table 2). A system developed by CoolTool [257] allows several neuromarketing tools to be used separately or combined.

Although individual neuroscientific tools for neuromarketing, neuroeconomics, neuromanagement, neuro-information systems, neuro-industrial engineering, products, services, and call centers have been developed by many researchers (for example, [111,251,253,254,255,256,257,258,259,260,261,262,263,264,265,266,267,268,269,270,293,298,299,300,303,309,311,312,328,446,447,448]), a review and analysis of the complete range of tools used in research on neuromarketing, neuroeconomics, neuromanagement, neuro-information systems, neuro-industrial engineering, products, services, and call centers has not yet been carried out. Thorough examinations of the range of research tool alternatives that are available for neuroscience are also often missing from research in this area. We have therefore compiled a complete list of neuroscience techniques for neuromarketing, neuroeconomics, neuromanagement, neuro-information systems, neuro-industrial engineering, products, services, and call centers. Humans experience emotions and their associated feelings (e.g., gratitude, curiosity, fear, sadness, disgust, happiness, and pride) on a daily basis. Yet, in the case of affective disorders such as depression and anxiety, emotions can become destructive. The focus on understanding emotional responsiveness in neuroscience and psychological science is therefore not surprising [449]. Neuroscience techniques analyze emotional, affective, and physiological states by tracking neural/electrical activity [335,336,337,338,339,340,450,451] or neural/metabolic activity [341,342,343,344,349,447,452,453] within the brain. This is also presented in Table 3.

For example, neuromarketing techniques can complement business decisions and make them more profitable, using the automated mining of opinions, attitudes, emotions, and expressions from speech, text, emotions, neuron activity, and other database-fed sources. Advertisements that are adjusted based on such information can engage the target audience more effectively and have a greater impact, and this may translate into better sales and higher margins. In an attempt to enhance corporate branding and advertising routines, various factors have been studied, such as emotional appeal and sensory branding, to ensure that companies deliver the right message and that customers perceive it as intended [171].

Affect recognition is widely used in gaming to create affect-aware video games and other software. Alhargan et al. [454] present affect recognition in an interactive gaming environment using eye tracking. Szwoch and Szwoch [455] review automatic multimodal affect recognition of facial expressions and emotions. Krol et al. [456] combined eye tracking and a brain–computer interface (BCI) to create a completely hands-free Tetris clone in which traditional actions (i.e., block manipulation) are performed using gaze control. Elor et al. [457] measure heart rate and galvanic skin response (GSR) with Immersive Virtual Reality (iVR) Head-Mounted Display (HMD) systems paired with exercise games to show how exercise games can positively affect physical rehabilitation.

Stress is a relevant health problem among students, so Tiwari and Agarwal [458] present a stress analysis system that detects stressful conditions in students by measuring GSR and electrocardiogram (ECG) data. Nakayama et al. [459] suggest measuring heart rate variability as a method to evaluate nursing students' stress during simulation and thus provide a better way to learn.
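Heart rate variability is commonly summarized with time-domain indices computed from the intervals between successive heartbeats (RR intervals). The minimal sketch below computes two widely used indices, SDNN and RMSSD. It is an illustration only: the cited studies are not stated here to use these particular indices, and the function names and sample values are assumptions.

```python
import math
import statistics


def sdnn(rr_ms):
    """Standard deviation of RR intervals in milliseconds (overall variability)."""
    return statistics.stdev(rr_ms)


def rmssd(rr_ms):
    """Root mean square of successive RR-interval differences in milliseconds."""
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


# Hypothetical RR intervals (ms) from a short ECG segment.
rr_example = [800, 810, 790, 805, 795]
```

Calling `sdnn(rr_example)` and `rmssd(rr_example)` yields one number each; in stress studies such values are usually compared between conditions, for example a baseline period and a simulation exercise.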

A literature review can reveal the most popular types of traditional and non-traditional neuromarketing methods. According to Sebastian [111], focus groups are one of the more traditional marketing methods, while various neuroscience techniques have also been applied to record the metabolic activity of the body and the electrical activity of the brain (transcranial magnetic stimulation (TMS), electroencephalography (EEG), functional magnetic resonance imaging (fMRI), magnetoencephalography (MEG), and positron-emission tomography (PET)).

Electronic platforms are not the only possibility for non-traditional marketing, and Tautchin and Dussome [460] believe that traditional media can also be reimagined in new forms, such as guerrilla marketing, local displays, vehicle wraps, scaffolding, and even bubble cloud ads or aerial banners. In addition to giving high-quality feedback data, non-traditional techniques can also help in the evaluation of business decisions and conclusions [328].

Based on factors such as skin texture, gender, and skin conductance (SC), wearable biometric GSR sensors could be used to identify whether a person is in a sad, neutral, or happy emotional state. To understand marketing strategies better and to improve ads, other biometric sensors such as pulse oximeters and health bands could be used in the future to make automated predictions of emotions [461]. The galvanic skin response (GSR) method has an important limitation: it does not provide information on valence. The usual way to address this issue is to combine GSR with other emotion recognition methods, which provide additional details and thus enable a more detailed analysis. Table 3 lists studies where GSR is used to measure emotions.

Eye tracking (ET) is used to record the frequencies of choices; sensor features are extracted and matched with certain preference labels to determine mutual dependences and to discover which brain regions are active when a certain choice task is performed. High values for alpha, beta, and theta waves have been reported in the occipital and frontal brain regions, with a high degree of synchronization. A hidden Markov model is a popular tool for time-series data modeling, and researchers have successfully used this approach to build brain–computer interface tools with EEG signals, including mental task classification, medical applications, and eye movement tracking [462].
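To make the role of a hidden Markov model in time-series modeling more concrete, the following minimal sketch decodes the most likely sequence of hidden states from a sequence of discrete observations using the Viterbi algorithm. The two states ("rest" and "task"), the two observation symbols ("low" and "high", standing for a discretized signal feature), and all probabilities are hypothetical values chosen for illustration; they do not come from the cited work [462].

```python
def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return the most likely hidden-state path for a discrete HMM."""
    # best[s] = (probability of the best path ending in state s, that path)
    best = {s: (start_p[s] * emit_p[s][observations[0]], [s]) for s in states}
    for obs in observations[1:]:
        step = {}
        for s in states:
            # Pick the predecessor that maximizes the path probability into s.
            prob, path = max(
                ((best[prev][0] * trans_p[prev][s], best[prev][1])
                 for prev in states),
                key=lambda item: item[0],
            )
            step[s] = (prob * emit_p[s][obs], path + [s])
        best = step
    return max(best.values(), key=lambda item: item[0])[1]


# Hypothetical model parameters (illustrative only).
STATES = ["rest", "task"]
START_P = {"rest": 0.6, "task": 0.4}
TRANS_P = {
    "rest": {"rest": 0.8, "task": 0.2},
    "task": {"rest": 0.2, "task": 0.8},
}
EMIT_P = {
    "rest": {"low": 0.9, "high": 0.1},
    "task": {"low": 0.2, "high": 0.8},
}

decoded = viterbi(["low", "low", "high", "high"], STATES, START_P, TRANS_P, EMIT_P)
```

With these parameters, `decoded` is `["rest", "rest", "task", "task"]`. For long recordings, the products of probabilities should be replaced by sums of log-probabilities to avoid numerical underflow.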

A classification model based on SVM architecture, developed by Lakhan et al. [463], can predict the level of arousal and valence in recorded EEG data. Its core is a feature extraction algorithm based on power spectral density (PSD).
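Feature extraction based on power spectral density can be sketched as follows. This is not the algorithm of Lakhan et al. [463]; it is a minimal illustration that estimates the spectrum with a direct discrete Fourier transform (a simple periodogram) and sums the power in three frequency bands. The band limits, the function names, and the synthetic test signal are assumptions made for the example. The resulting band powers are the kind of feature vector that could then be passed to a classifier such as an SVM.

```python
import cmath
import math


def periodogram(signal, fs):
    """Estimate the power spectrum with a direct DFT (O(n^2), short windows only)."""
    n = len(signal)
    mean = sum(signal) / n
    centered = [x - mean for x in signal]  # remove the DC offset
    freqs, power = [], []
    for k in range(n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(centered))
        freqs.append(k * fs / n)
        power.append(abs(coeff) ** 2 / (fs * n))
    return freqs, power


def band_power(freqs, power, low, high):
    """Sum the spectral power over the band [low, high) in Hz."""
    return sum(p for f, p in zip(freqs, power) if low <= f < high)


# Assumed band limits in Hz; conventions vary between studies.
BANDS = {"theta": (4, 8), "alpha": (8, 13), "beta": (13, 30)}


def psd_features(signal, fs):
    """Return one band-power feature per band for a single-channel window."""
    freqs, power = periodogram(signal, fs)
    return {name: band_power(freqs, power, lo, hi)
            for name, (lo, hi) in BANDS.items()}


# Synthetic 1-second window: a 10 Hz sine sampled at 128 Hz (illustrative).
FS = 128
example_window = [math.sin(2 * math.pi * 10 * t / FS) for t in range(FS)]
example_features = psd_features(example_window, FS)
```

For the synthetic window, the alpha band carries almost all of the power, as expected for a 10 Hz oscillation. In practice, an averaged estimate such as Welch's method is usually preferred over a single periodogram because it reduces the variance of the spectrum.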