Spatio-Temporal Neural Dynamics of Observing Non-Tool Manipulable Objects and Interactions

Abstract

1. Introduction

2. Materials and Methods

2.1. Experiments

2.2. Data Analysis

1. For two independent sample sets, and , where , the observed test statistic was calculated as follows:

2. and were pooled into a single group. The elements of this group were then randomly divided into two subgroups, and , which had the same size. The test statistic for this random split was calculated as follows:

3. Step 2 was repeated 10,000 times to obtain ;

4. The values were sorted in ascending order, and the index of the first value greater than was identified as “ ”. The p-value of the test was calculated as follows:
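The four-step permutation procedure above can be sketched in Python with NumPy. This is a minimal, hypothetical sketch. The section does not state which test statistic was used or give the exact p-value formula, so the sketch assumes the difference of means as the statistic and takes the p-value as the fraction of permuted statistics that exceed the observed one (a one-sided test). The function name and the `seed` parameter are illustrative.

```python
import numpy as np


def permutation_test_independent(x, y, n_perm=10_000, seed=0):
    """One-sided permutation test for two independent samples.

    Assumption: the statistic is mean(x) - mean(y); the source section does
    not specify the statistic or the exact p-value formula.
    """
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)

    # Step 1: observed statistic on the original two sets.
    observed = x.mean() - y.mean()

    # Step 2: pool both sets; each permutation re-splits the pool at len(x).
    pooled = np.concatenate([x, y])
    n_x = x.size

    # Step 3: repeat the random split n_perm times.
    perm_stats = np.empty(n_perm)
    for i in range(n_perm):
        shuffled = rng.permutation(pooled)
        perm_stats[i] = shuffled[:n_x].mean() - shuffled[n_x:].mean()

    # Step 4: sort ascending; idx is the 0-based index of the first value
    # strictly greater than the observed statistic.
    perm_stats.sort()
    idx = np.searchsorted(perm_stats, observed, side="right")

    # Assumed p-value: fraction of permuted statistics exceeding the observed one.
    return (n_perm - idx) / n_perm
```

Calling `np.searchsorted` with `side="right"` returns exactly the "first value greater than" index from step 4, so no explicit scan of the sorted values is needed.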

1. For two paired sample sets, and , where , a paired sample set, , was constructed as follows:

2. Resampling with replacement was performed from the paired sample set to generate a new sample set, , and its mean value was calculated as follows:

3. The previous step was repeated to obtain , which were then sorted in ascending order; the index of the first value greater than zero was identified as . The p-value of this test was calculated as follows:
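The paired bootstrap test above can be sketched as follows. This is a hypothetical illustration under stated assumptions: the paired sample set is taken to be the element-wise difference `x - y`, the number of repetitions defaults to 10,000 (the section does not give it for this test), and the p-value is taken as the fraction of bootstrap means that are not greater than zero. The function name is illustrative.

```python
import numpy as np


def paired_bootstrap_test(x, y, n_boot=10_000, seed=0):
    """One-sided bootstrap test on paired samples.

    Assumptions: the paired set is d = x - y, n_boot = 10,000, and the
    p-value is the fraction of bootstrap means that are <= 0.
    """
    rng = np.random.default_rng(seed)
    # Step 1: construct the paired (difference) sample set.
    d = np.asarray(x, dtype=float) - np.asarray(y, dtype=float)
    n = d.size

    # Step 2: resample d with replacement and record the mean;
    # step 3: repeat n_boot times.
    boot_means = np.empty(n_boot)
    for i in range(n_boot):
        sample = rng.choice(d, size=n, replace=True)
        boot_means[i] = sample.mean()

    # Sort ascending; idx is the 0-based index of the first mean greater
    # than zero, which equals the count of bootstrap means <= 0.
    boot_means.sort()
    idx = np.searchsorted(boot_means, 0.0, side="right")
    return idx / n_boot
```

If every paired difference is positive, no bootstrap mean can be at or below zero and the sketch returns 0; if every difference is negative, it returns 1.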

1. Values during the baseline period were extracted and put into a baseline vector;

2. Resampling with replacement was performed from the baseline vector to obtain a new vector of the same size;

3. The mean across time was calculated;

4. Steps 2–3 were repeated 10,000 times, and the grand mean of the results of step 3 was obtained.
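The baseline resampling procedure above can be sketched in a few lines of NumPy. This is a minimal sketch, assuming a single one-dimensional time series with a matching time axis; the function name and the `window` parameter (a hypothetical `(start, end)` pair marking the baseline period) are illustrative and do not come from the original analysis code.

```python
import numpy as np


def bootstrap_baseline_mean(values, times, window, n_boot=10_000, seed=0):
    """Grand mean of bootstrap-resampled baseline means.

    `window` is a hypothetical (start, end) pair; samples with
    start <= t < end are treated as the baseline period.
    """
    rng = np.random.default_rng(seed)
    values = np.asarray(values, dtype=float)
    times = np.asarray(times, dtype=float)

    # Step 1: extract the baseline-period values into a baseline vector.
    start, end = window
    baseline = values[(times >= start) & (times < end)]

    # Steps 2-4: resample with replacement (same size), take the mean
    # across time, repeat n_boot times.
    means = np.empty(n_boot)
    for i in range(n_boot):
        resampled = rng.choice(baseline, size=baseline.size, replace=True)
        means[i] = resampled.mean()

    # Grand mean over all repetitions.
    return means.mean()
```

Because each resample is drawn from the baseline vector itself, the grand mean converges to the ordinary baseline mean as the number of repetitions grows.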

3. Results

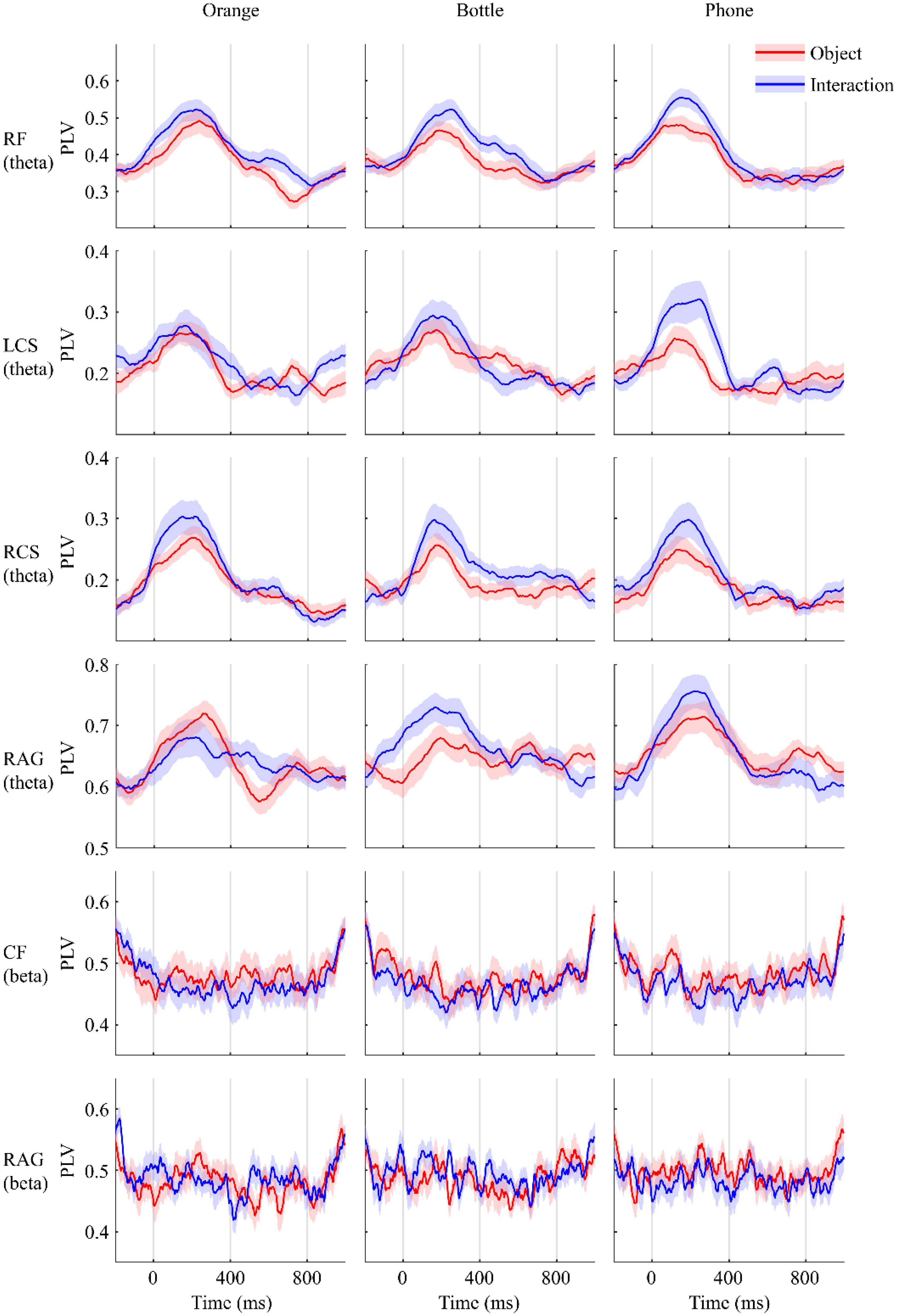

3.1. Functional Connectivity

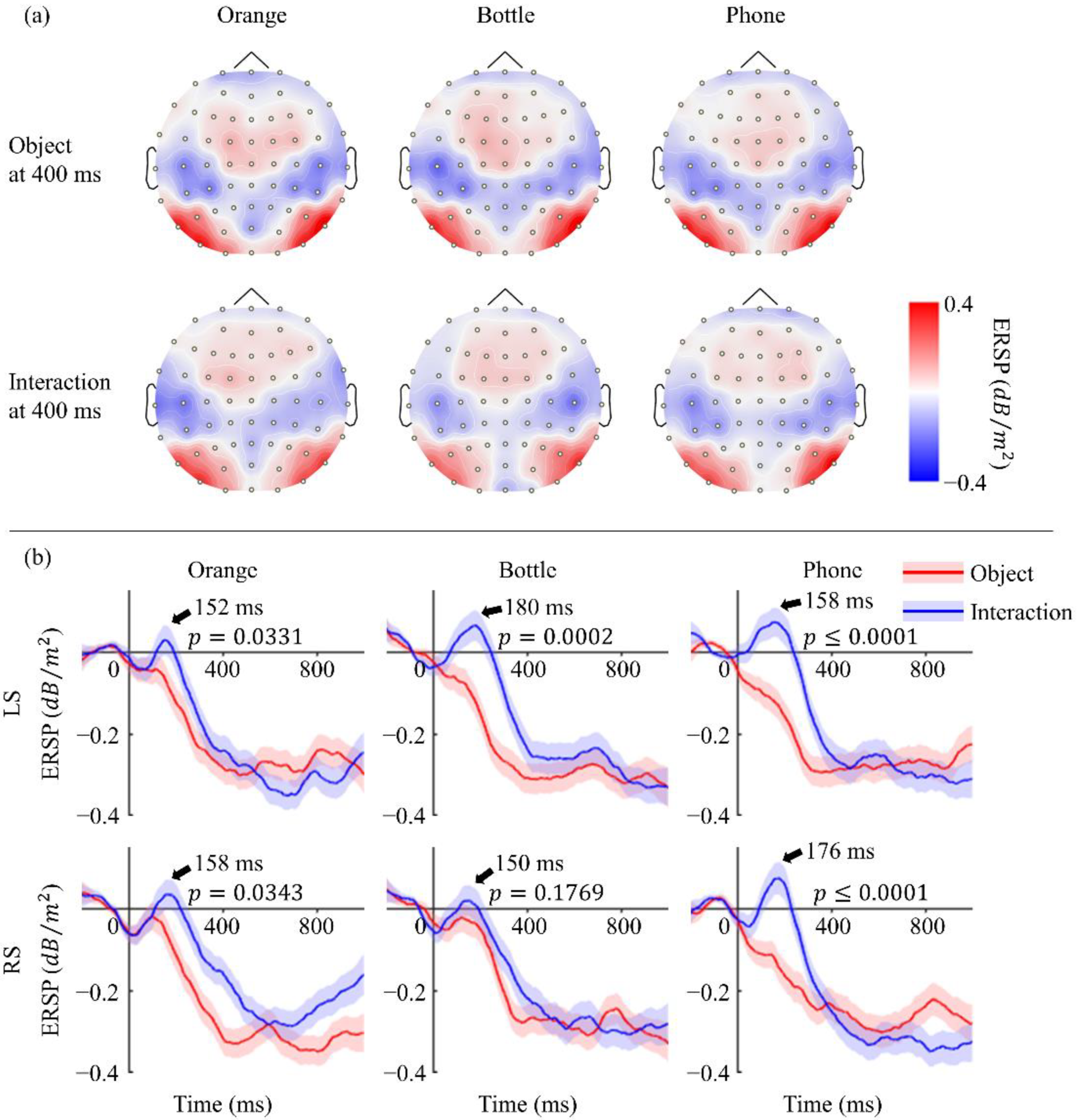

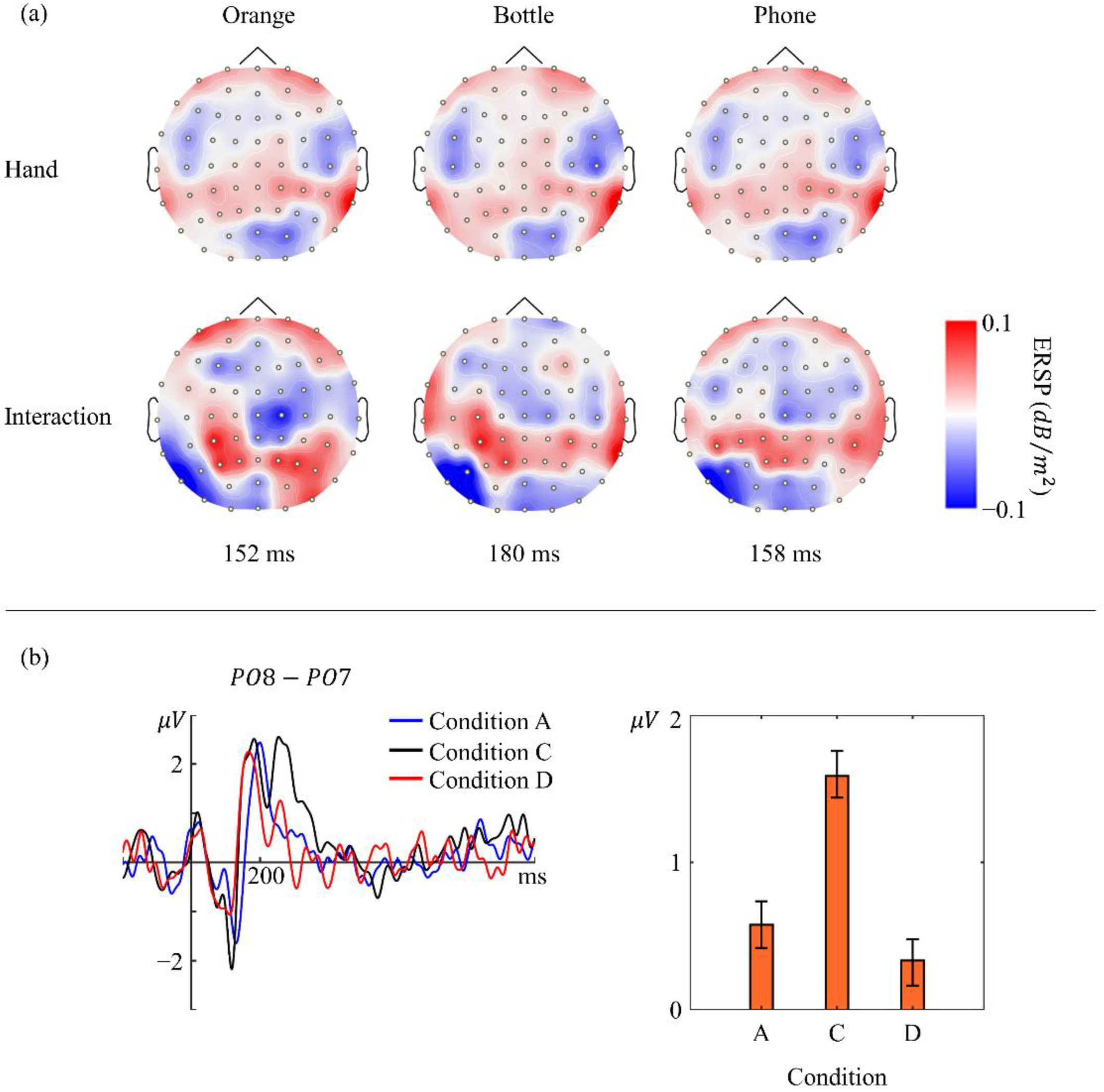

3.2. Power Variations

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, Z.; Iramina, K. Spatio-Temporal Neural Dynamics of Observing Non-Tool Manipulable Objects and Interactions. Sensors 2022, 22, 7771. https://doi.org/10.3390/s22207771
