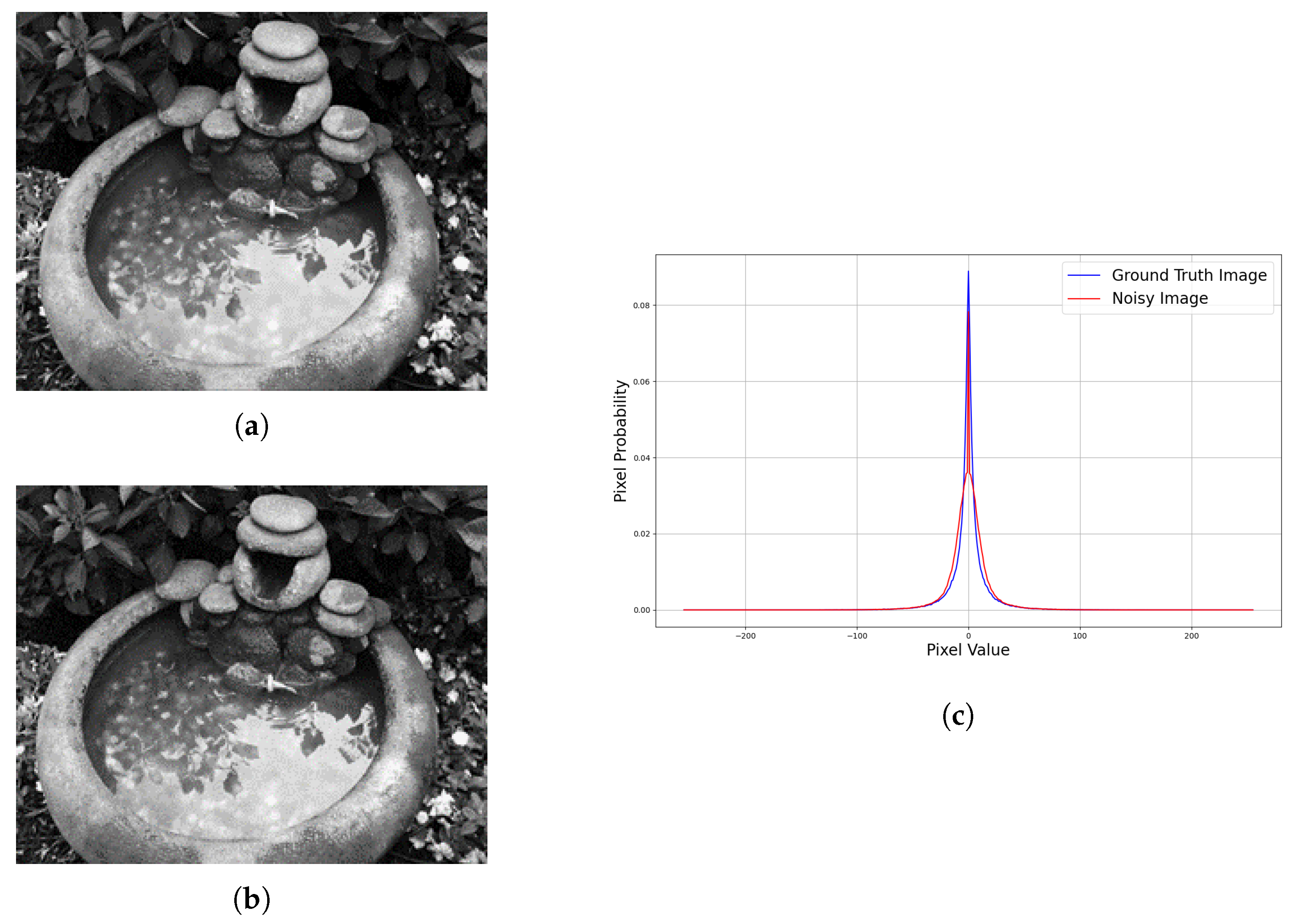

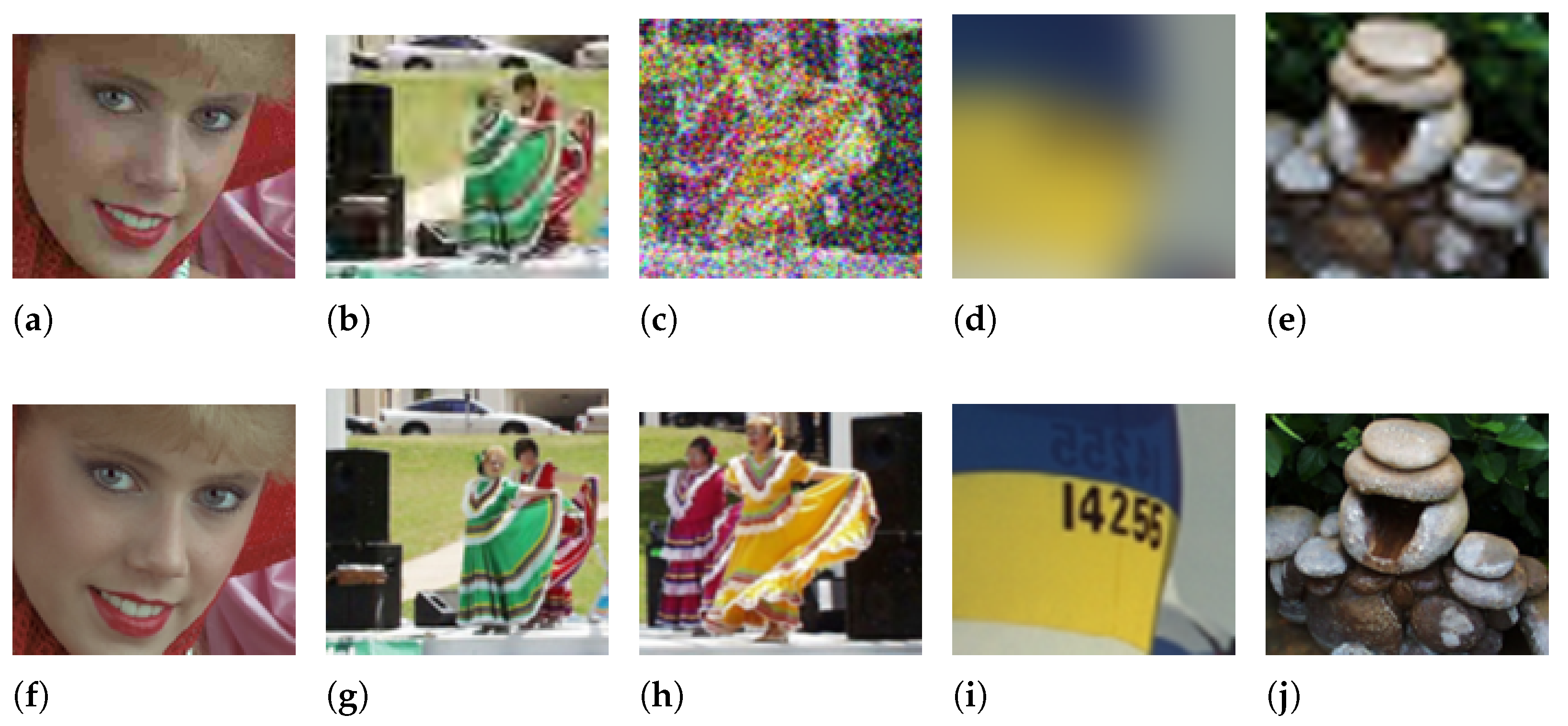

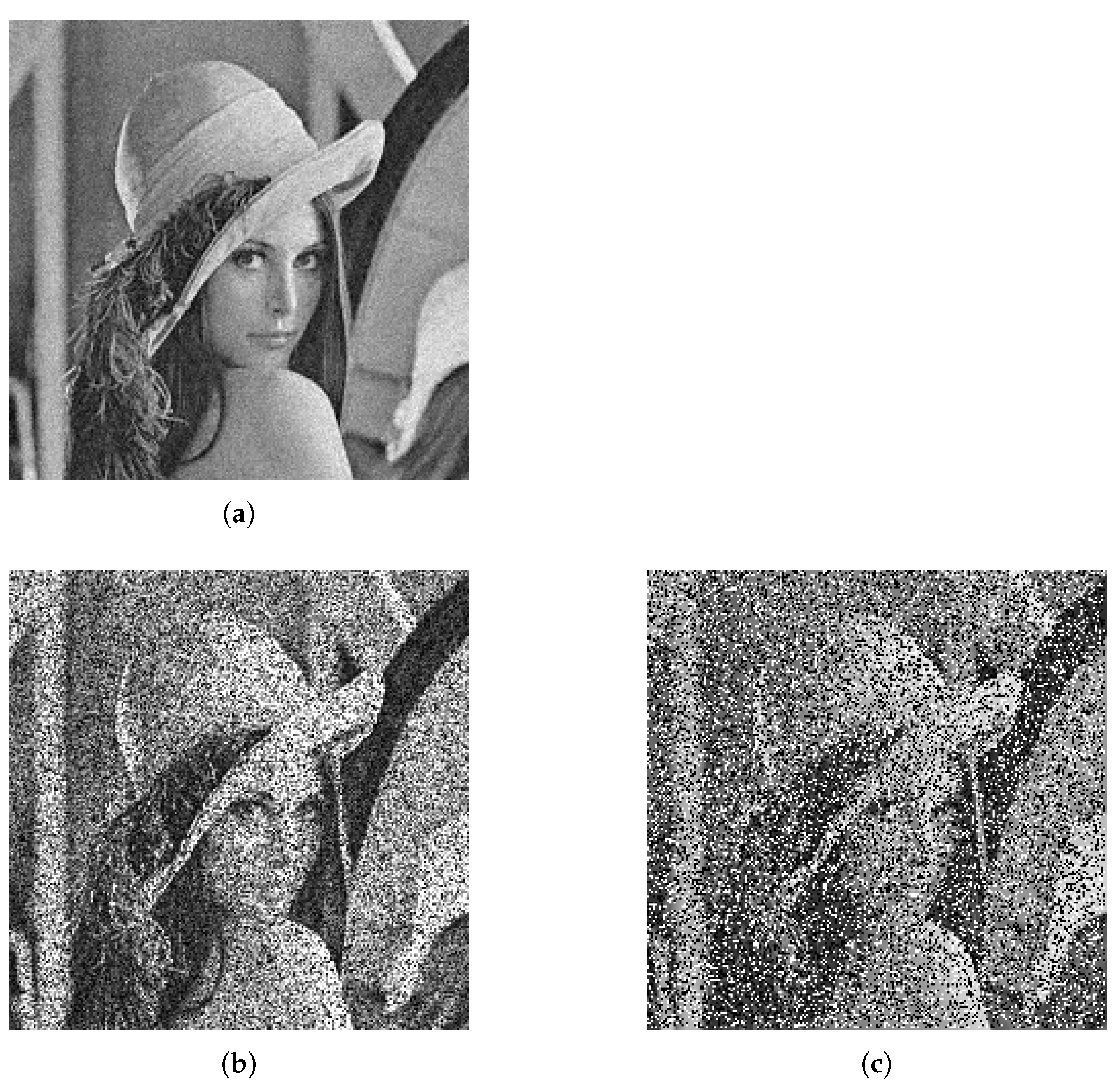

Noise is a major source of corruption in images and can cause information loss. Noise can be classified into two major classes: additive noise and multiplicative noise. Additive noise, such as Gaussian noise, is added to the image, whereas multiplicative noise, such as speckle noise, is multiplied with the ground truth image [14]. Other distortions, such as blurring, are modeled by convolving the point spread function with the ground truth image [15]. The point spread function is a two-dimensional array describing the radiation intensity distribution in the image of a point source [15].
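
The three corruption models above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions: the speckle term uses the unit-mean form y = x(1 + n), and the PSF is a hypothetical normalized Gaussian chosen only for the example; real PSFs are measured or derived from the optics.

```python
import numpy as np

def add_gaussian_noise(img, sigma, rng):
    """Additive model: y = x + n, with n ~ N(0, sigma^2) independent of x."""
    return img + rng.normal(0.0, sigma, img.shape)

def add_speckle_noise(img, sigma, rng):
    """Multiplicative model (assumed unit-mean form): y = x * (1 + n), n ~ N(0, sigma^2)."""
    return img * (1.0 + rng.normal(0.0, sigma, img.shape))

def gaussian_psf(size, sigma):
    """Hypothetical example PSF: a normalized 2-D Gaussian (odd size, sums to 1)."""
    ax = np.arange(size) - (size - 1) / 2.0
    xx, yy = np.meshgrid(ax, ax)
    psf = np.exp(-(xx**2 + yy**2) / (2.0 * sigma**2))
    return psf / psf.sum()

def convolve_psf(img, psf):
    """Distortion model: y = h * x, a 2-D convolution with edge padding."""
    k = psf.shape[0] // 2
    padded = np.pad(img.astype(float), k, mode="edge")
    h, w = img.shape
    flipped = psf[::-1, ::-1]  # flip so this is a convolution, not a correlation
    out = np.zeros((h, w))
    for dy in range(psf.shape[0]):
        for dx in range(psf.shape[1]):
            out += flipped[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out

rng = np.random.default_rng(0)
clean = np.full((64, 64), 100.0)
clean[:, 32:] = 200.0  # a vertical step edge
noisy_add = add_gaussian_noise(clean, 10.0, rng)
noisy_mul = add_speckle_noise(clean, 0.1, rng)
blurred = convolve_psf(clean, gaussian_psf(7, 1.5))
```

The additive noise has the same spread on both sides of the edge, while the multiplicative noise is roughly twice as strong on the brighter side; the blur leaves flat regions unchanged and smears only the edge.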

In this paper, we refer to these two kinds of corruption as noise and distortion, respectively. Reference [14] discussed noise models such as Gaussian noise, salt-and-pepper noise, and grain noise. Reference [16] provided an updated, brief review of the noise models introduced over the years. Reference [17] proposed a Monte Carlo simulation approach for rendering film grain noise using a Poisson distribution and other algorithms.

X-rays are commonly used in medical imaging and in Advanced X-ray Imaging (AXI) for silicon defect detection. X-ray doses should be kept as low as possible to reduce harm to the subject, but as the dose decreases, the X-ray noise increases. This noise is known as quantum noise and is often modeled as Poisson noise [18]. Reference [19] described electronic noise as false bit-flips caused by thermal heating in electronic circuits. Salt-and-pepper noise is used to model these false bit-flips [20]. Reference [20] also presented a way to implement a median filter at the circuit level to remove such noise from an image.
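
A minimal simulation sketch of both effects, assuming a flat test image. `photons_per_unit` is a hypothetical dose parameter introduced only for illustration, and the median filter here is a plain software 3×3 filter; it does not model the circuit-level implementation of [20].

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def quantum_noise(img, photons_per_unit, rng):
    """Poisson (quantum) noise: detected counts ~ Poisson(dose * intensity).

    Higher `photons_per_unit` (a hypothetical dose knob) means more photons
    per unit intensity and therefore relatively less noise.
    """
    counts = rng.poisson(img * photons_per_unit)
    return counts / photons_per_unit  # back to the original intensity scale

def salt_and_pepper(img, amount, rng, low=0.0, high=255.0):
    """Model false bit-flips: a fraction `amount` of pixels set to low or high."""
    out = img.astype(float).copy()
    u = rng.random(img.shape)
    out[u < amount / 2] = low                      # "pepper" pixels
    out[(u >= amount / 2) & (u < amount)] = high   # "salt" pixels
    return out

def median_filter3(img):
    """Software 3x3 median filter with edge padding."""
    windows = sliding_window_view(np.pad(img, 1, mode="edge"), (3, 3))
    return np.median(windows, axis=(-2, -1))

rng = np.random.default_rng(0)
flat = np.full((128, 128), 100.0)
low_dose = quantum_noise(flat, 0.1, rng)    # about 10 photons per pixel
high_dose = quantum_noise(flat, 10.0, rng)  # about 1000 photons per pixel
corrupted = salt_and_pepper(flat, 0.05, rng)
restored = median_filter3(corrupted)
print(low_dose.std(), high_dose.std())
print(np.mean(corrupted != 100.0), np.mean(restored != 100.0))
```

Raising the dose a hundredfold shrinks the relative Poisson noise by about a factor of ten, and the median filter removes isolated bit-flips because a 3×3 window needs at least five corrupted pixels before its median changes.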

2.1. Noise Parameter Estimation

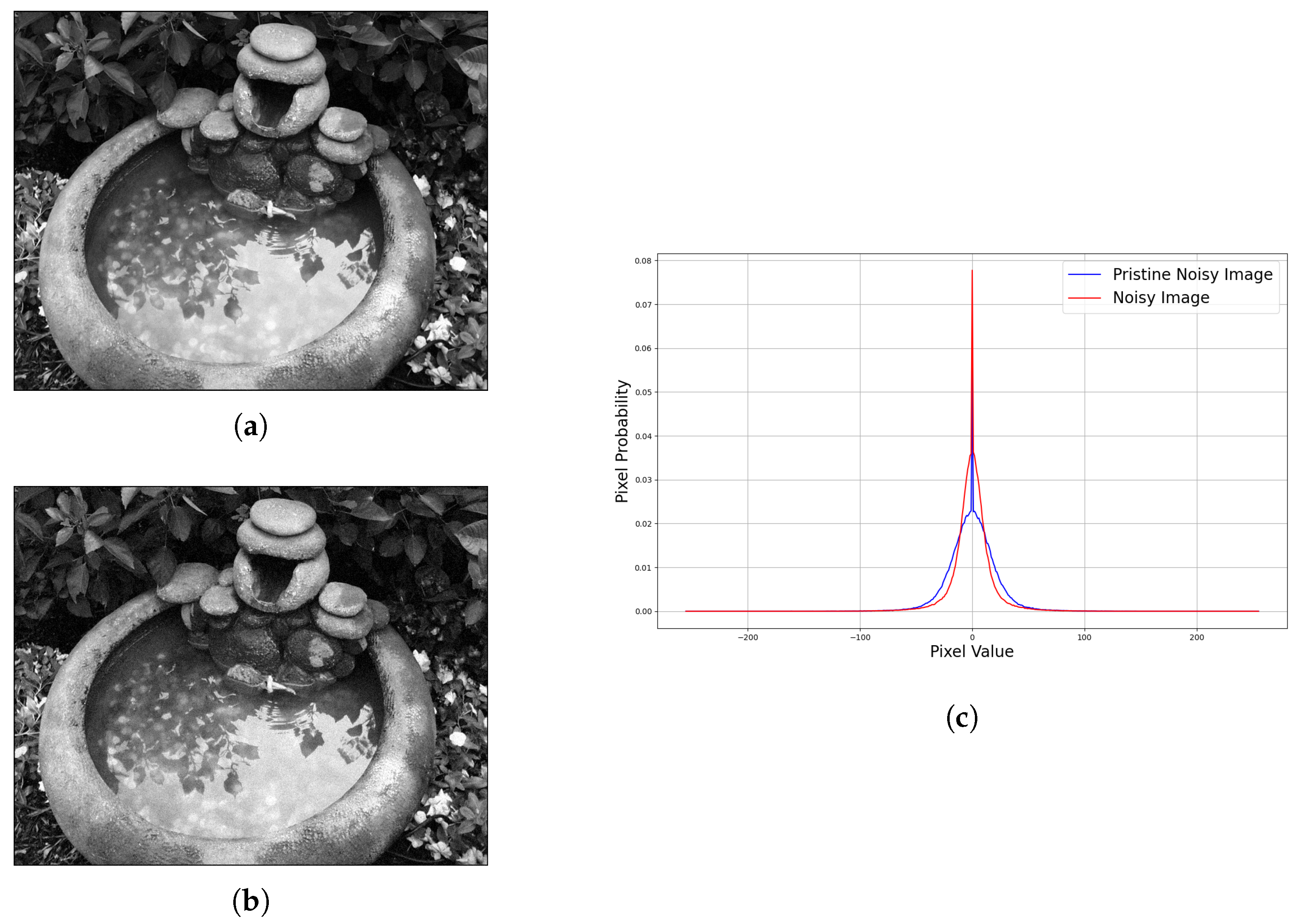

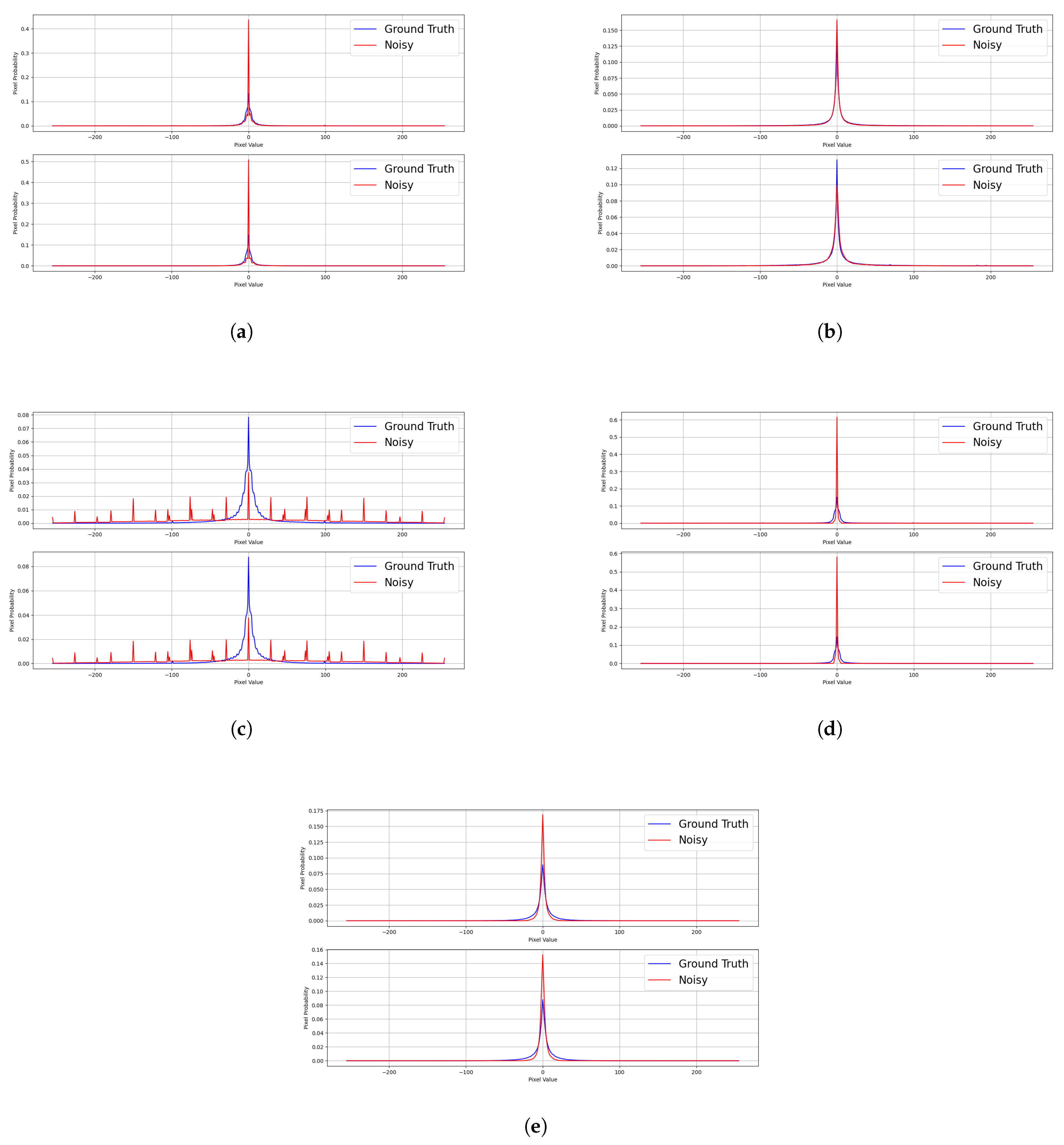

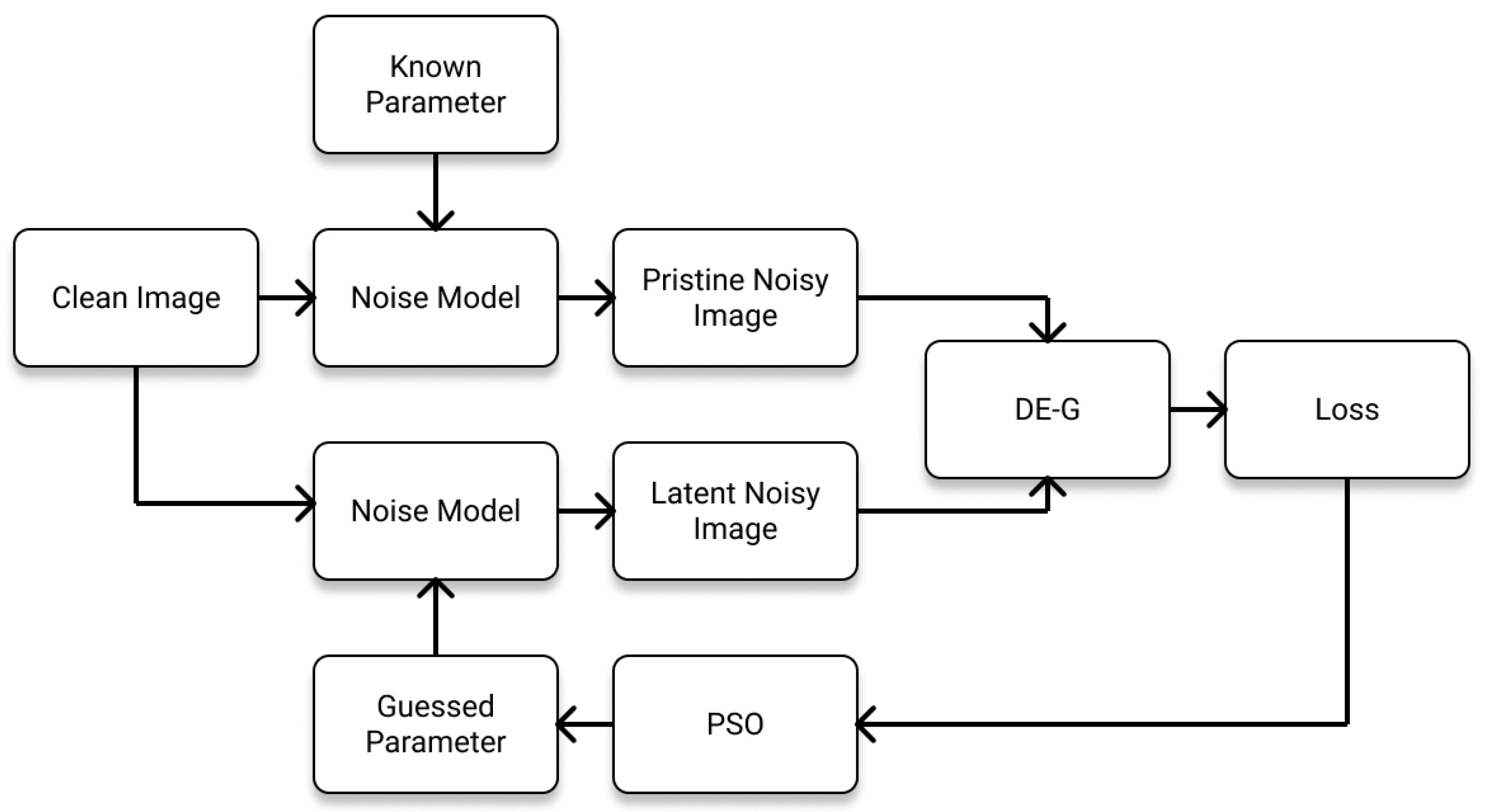

Noise can be modeled mathematically, and its strength depends on the model parameters. For example, random noise is often modeled with a Gaussian distribution, and as the standard deviation of the Gaussian noise model increases, the image becomes more heavily corrupted. Noise parameter estimation aims to recover these parameters accurately and with high repeatability. It is important for correct noise modeling, so that the modeled noisy image stays close to reality rather than being overly noisy.

Reference [21] proposed a noise estimation method based on Bayesian maximum a posteriori (MAP) inference on a distorted image and reviewed noise modeling in CCD cameras. The authors argued that most of the noise seen in images originates from the propagation stage. This noise can be irradiance-dependent or irradiance-independent; both kinds are additive and pass through the Camera Response Function (CRF). The authors neglected the additive noise introduced after the CRF, claiming that in modern cameras its effect on the image is very small. One of the most widely used noise estimators is the Mean Absolute Deviation (MAD); however, it is typically applied within local, smooth windows. Reference [22] decomposed the degraded image into wavelet components, used these components as features, and fitted them to a final output value using a training set of 13 images. However, the model can only predict noise parameters that appear in its training dataset and is less versatile than other approaches.
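
A local mean-absolute-deviation estimator can be sketched as follows, assuming zero-mean Gaussian noise, for which E|n| = σ√(2/π). Treating each 8×8 block as locally smooth and taking the median over per-block estimates are our own illustrative choices, not a specific published method.

```python
import numpy as np

# For zero-mean Gaussian noise, E|n| = sigma * sqrt(2 / pi).
MEAN_AD_TO_SIGMA = np.sqrt(np.pi / 2.0)

def estimate_sigma_mean_ad(img, block=8):
    """Estimate Gaussian noise sigma from local mean absolute deviations.

    Each non-overlapping block x block window is treated as locally smooth,
    so deviations from the block mean are attributed to noise. The median
    over blocks reduces the influence of windows that contain edges.
    """
    h, w = img.shape
    h, w = h - h % block, w - w % block  # crop to a whole number of blocks
    blocks = img[:h, :w].reshape(h // block, block, w // block, block).swapaxes(1, 2)
    dev = np.abs(blocks - blocks.mean(axis=(-2, -1), keepdims=True))
    per_block = MEAN_AD_TO_SIGMA * dev.mean(axis=(-2, -1))
    return float(np.median(per_block))

rng = np.random.default_rng(1)
yy, xx = np.mgrid[0:256, 0:256]
clean = 0.3 * xx + 0.2 * yy  # smooth, slowly varying background
noisy = clean + rng.normal(0.0, 10.0, clean.shape)
est = estimate_sigma_mean_ad(noisy)
print(est)
```

On a smooth background the estimate lands close to the true σ = 10; in textured or edge-rich images the per-block estimates inflate, which is the weakness the median-based estimators below address.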

The median absolute deviation offers a robust alternative to the mean absolute deviation for estimating noise [23]. It is less affected by outliers than the mean absolute deviation, so edges in non-smooth regions have less influence on the overall estimate. Reference [24] challenged the median absolute deviation method and proposed the Residual Autocorrelation Power (RAP), which estimates the standard deviation of additive noise more accurately than the median absolute deviation. Reference [25] noted that noise estimation differs between color and grayscale images: color images have multiple channels to consider, whereas grayscale images, which are common in the medical field, have a single channel. Reference [26] used a multivariate Gaussian noise model to visualize the pixel spread of each channel and estimated the noise covariance iteratively by comparing the channels. Reference [27] used the mean deviation within a Region Of Interest (ROI) to estimate the standard deviation of the Gaussian noise added to the image. The estimates were consistent and accurate at a low noise level ($\sigma = 5$) but started to deviate at higher noise levels ($\sigma = 10$ and $\sigma = 15$). Reference [28] performed a blind quality assessment using the method of moments to measure the signal-to-noise ratio of a one-dimensional cosine waveform. Reference [29] used a statistics-based approach based on the skewness of the image pair to estimate the strength of Additive White Gaussian Noise (AWGN). Reference [30] estimated the noise in mammograms using a Rician noise model and used the estimated value to denoise the images with a nonlocal means filter.
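
The robustness argument for the median absolute deviation can be illustrated with the widely used wavelet-domain median estimator, σ ≈ median(|HH|)/0.6745, where HH holds the finest diagonal Haar detail coefficients and 0.6745 is the median of |N(0, 1)|. This is a standard technique shown for illustration, not the specific method of [23] or [24]; the test image (one strong diagonal edge) is an assumption chosen to create a few outlier coefficients.

```python
import numpy as np

def haar_hh(img):
    """Finest-scale diagonal (HH) Haar wavelet coefficients.

    For each 2x2 block [[a, b], [c, d]], HH = (a - b - c + d) / 2.
    With i.i.d. Gaussian noise of std sigma, HH also has std sigma,
    while locally linear (smooth) regions give HH = 0.
    """
    h, w = img.shape
    x = img[: h - h % 2, : w - w % 2].astype(float)
    a, b = x[0::2, 0::2], x[0::2, 1::2]
    c, d = x[1::2, 0::2], x[1::2, 1::2]
    return (a - b - c + d) / 2.0

def sigma_median_ad(img):
    """Robust estimate: median(|HH|) / 0.6745."""
    return float(np.median(np.abs(haar_hh(img))) / 0.6745)

def sigma_mean_ad(img):
    """Non-robust counterpart: mean(|HH|) * sqrt(pi / 2)."""
    return float(np.mean(np.abs(haar_hh(img))) * np.sqrt(np.pi / 2.0))

rng = np.random.default_rng(3)
i, j = np.indices((256, 256))
clean = np.where(i > j, 200.0, 0.0)  # one strong diagonal step edge
noisy = clean + rng.normal(0.0, 5.0, clean.shape)
est_median = sigma_median_ad(noisy)
est_mean = sigma_mean_ad(noisy)
print(est_median, est_mean)
```

Only the 2×2 blocks straddling the edge produce large HH values, yet they noticeably bias the mean-based estimate upward, while the median-based estimate stays near the true σ = 5.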

Noise analysis and noise strength estimation have received much attention because they help researchers understand the environment that produces the noise and how tuning the underlying physical parameters affects image quality. Much work on noise estimation has targeted either specific noises, such as X-ray noise described by various distributions, or general noise modeled as Gaussian noise. The ability of these methods to estimate other forms of noise has not been formally discussed, and their capability to estimate combined noise has not been explored.

2.2. Usage of Parameter Estimation

An accurate prediction of the noise level from the image reduces the human intervention needed in image processing and in studying the physics of the imaging environment. Classical denoising algorithms are widely used for image recovery, and they typically require some prior information from the user. The Gaussian low-pass filter is one of the best-known filters for removing high-frequency noise from an image, but it sacrifices the structural integrity of the image when the denoising strength is set too high. The bilateral filter improves on the Gaussian filter by taking both a spatial standard deviation and a range standard deviation. It is a nonlinear, edge-preserving denoising algorithm, but its strength must be set correctly; otherwise (for example, with a very large range standard deviation) it approximates a Gaussian blur [31]. The adaptive Wiener filter and the Modified Median Wiener Filter (MMWF) take the noise variance of the image as an input [32,33]. BM3D requires the user to provide a standard deviation that directly reflects the noise level of the image [12]. These denoising algorithms are widely used in the medical imaging chain, and their input parameters are highly correlated with the noise model parameters. Image segmentation is another task that requires noise information. Reference [34] used fuzzy C-means clustering to segment images corrupted by impulsive noise; the method could be further improved by supplying the algorithm with noise information. Hence, noise information is crucial for many image processing algorithms.
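
To show why such filters depend on a noise estimate, here is a minimal sketch of a pixel-wise adaptive Wiener filter in the common local-statistics form (the same form used by, e.g., MATLAB's `wiener2`): out = μ + max(v − ν, 0)/max(v, ν)·(x − μ), where μ and v are the local mean and variance and ν is the supplied noise variance. It is illustrative only and is not the MMWF of [33].

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def adaptive_wiener(img, noise_var, size=5):
    """Pixel-wise adaptive Wiener filter driven by a known noise variance."""
    if noise_var <= 0:
        raise ValueError("noise_var must be positive")
    k = size // 2
    padded = np.pad(np.asarray(img, dtype=float), k, mode="reflect")
    windows = sliding_window_view(padded, (size, size))
    mu = windows.mean(axis=(-2, -1))   # local mean
    var = windows.var(axis=(-2, -1))   # local variance (signal + noise)
    # Shrink towards the local mean where the local variance is explained by noise.
    gain = np.maximum(var - noise_var, 0.0) / np.maximum(var, noise_var)
    return mu + gain * (img - mu)

def mse(a, ref):
    return float(np.mean((a - ref) ** 2))

rng = np.random.default_rng(2)
clean = np.tile(np.linspace(0.0, 255.0, 64), (64, 1))
noisy = clean + rng.normal(0.0, 10.0, clean.shape)
good = adaptive_wiener(noisy, 100.0)     # correct noise variance (sigma = 10)
too_low = adaptive_wiener(noisy, 1e-6)   # severely underestimated noise variance
print(mse(noisy, clean), mse(good, clean), mse(too_low, clean))
```

With the correct variance the filter removes most of the noise; with an underestimated variance the gain stays close to 1 and the output is practically the noisy input, which is why an accurate noise estimate matters.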

Noise can originate from the physics of the surrounding environment or from the conversion of photons to pixel values in the camera. Brighter pixels correspond to more photons received by the camera sensor during the exposure. Camera settings can significantly affect image quality; for example, increasing the shutter speed reduces the exposure time, so fewer photons are collected and the noise becomes more apparent relative to the signal. The overall Signal-to-Noise Ratio (SNR) [35] therefore decreases as the exposure time decreases. Electromagnetic radiation interacts with matter differently at different frequencies: high-frequency radiation such as X-rays can penetrate objects that are opaque to visible light, allowing us to see inside them. A well-known real-world application of this property is X-ray defect detection in AXI. X-ray projection noise is caused by the scattering of X-ray photons as they pass through an object, and the scattering angle depends on the X-ray energy (frequency) and on the material used as the X-ray source [36]. Hence, studying the noise model parameters can help determine the noise level of an X-ray projection image and select an optimal standard deviation for the denoising algorithm without human intervention.
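
As a rough worked example of the exposure argument, assume photon shot noise is the only noise source, so the count $N$ collected by a pixel is Poisson-distributed with mean $\Phi t$, where $\Phi$ (photon flux reaching the pixel) and $t$ (exposure time) are symbols introduced here for illustration:

$$
\mathrm{SNR} = \frac{\mathbb{E}[N]}{\sqrt{\operatorname{Var}[N]}} = \frac{\Phi t}{\sqrt{\Phi t}} = \sqrt{\Phi t}.
$$

For instance, $\Phi t = 100$ photons gives $\mathrm{SNR} = 10$, while halving the exposure ($\Phi t = 50$) gives $\mathrm{SNR} = \sqrt{50} \approx 7.07$, a drop by a factor of $\sqrt{2}$. Other sources such as read noise, which this sketch ignores, would lower the SNR further.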

2.3. Image Quality Assessment

The image gradient, computed with filters such as the Sobel, Prewitt, and Laplacian filters, reveals the edges and hence the physical structure of an image. This suggests that the image gradient can be used to measure image quality; for example, blurred images contain less edge information than sharp images. Reference [37] proposed image quality assessment using gradient similarity, which uses the image gradient to compute an overall score from luminance, contrast, and structure comparisons. Reference [38] proposed the Gradient Magnitude Similarity Deviation (GMSD), which computes the standard deviation of the gradient magnitude similarity map between the reference and distorted images. The Structural Similarity Index Measure (SSIM) compares the ground truth image with the restored image in terms of luminance, contrast, and structure and combines the three comparisons into one overall score [39]. Multi-Scale SSIM (MS-SSIM) extends SSIM by evaluating it at multiple scales and modifying the final pooling formula to obtain a more accurate grading [40]. Information-Weighted SSIM (IW-SSIM) also extends SSIM, adding information-content-weighted pooling [41]. These metrics correlate highly with human mean opinion scores; however, they do not explicitly estimate the type and strength of the distortion.
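
The SSIM combination of luminance, contrast, and structure can be sketched with a simplified single-window version. Assumptions: statistics are computed over the whole image rather than the local Gaussian-weighted windows of standard SSIM, the usual constants $C_1 = (0.01L)^2$ and $C_2 = (0.03L)^2$ are used with $L$ = 255, and contrast and structure are merged into one term (the common choice $C_3 = C_2/2$).

```python
import numpy as np

def global_ssim(x, y, data_range=255.0):
    """Single-window SSIM over the whole image (a simplification of SSIM)."""
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = np.mean((x - mx) * (y - my))
    luminance = (2 * mx * my + c1) / (mx**2 + my**2 + c1)
    contrast_structure = (2 * cov + c2) / (vx + vy + c2)
    return float(luminance * contrast_structure)

rng = np.random.default_rng(4)
ref = np.full((64, 64), 50.0)
ref[16:48, 16:48] = 200.0
light = ref + rng.normal(0.0, 5.0, ref.shape)
heavy = ref + rng.normal(0.0, 30.0, ref.shape)
print(global_ssim(ref, ref), global_ssim(ref, light), global_ssim(ref, heavy))
```

Identical images score 1, and the score falls as noise inflates the distorted image's variance without increasing its covariance with the reference. Note that the score says the image is degraded but not which distortion caused it or how strong it is.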

Methods based on the Laplacian of Gaussian (LoG) and the Difference of Gaussians (DoG) pass the images through a Gaussian blur, which suppresses noise in the image, and compare the structural difference between the reference image and the filtered image [42]. Recent advances include grading an image in the CIELAB color space using visual saliency, chrominance, and gradient magnitude [43]. Reference [44] proposed the SuperPixel-based SIMilarity Index (SPSIM), which groups image pixels with similar visual characteristics into superpixels and grades each superpixel by its luminance similarity, chrominance similarity, and gradient similarity.
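
A DoG response can be sketched with a separable Gaussian blur. The σ ratio of about 1.6 is a common choice for approximating the LoG; the comparison function `dog_difference` (mean absolute difference of the two DoG responses) is a hypothetical illustration and not the metric of [42].

```python
import numpy as np

def gaussian_kernel1d(sigma):
    """Normalized 1-D Gaussian kernel truncated at 3 sigma."""
    radius = int(np.ceil(3 * sigma))
    t = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-t**2 / (2 * sigma**2))
    return k / k.sum()

def gaussian_blur(img, sigma):
    """Separable Gaussian blur with reflect padding (same output size)."""
    k = gaussian_kernel1d(sigma)
    r = len(k) // 2
    out = np.asarray(img, dtype=float)
    for axis in (0, 1):
        pad = [(r, r) if a == axis else (0, 0) for a in range(2)]
        padded = np.pad(out, pad, mode="reflect")
        # A 'valid' 1-D convolution along `axis` restores the original length.
        out = np.apply_along_axis(np.convolve, axis, padded, k, mode="valid")
    return out

def dog(img, sigma1=1.0, sigma2=1.6):
    """Difference of Gaussians: a band-pass response (sigma2 > sigma1)."""
    return gaussian_blur(img, sigma1) - gaussian_blur(img, sigma2)

def dog_difference(ref, dist, sigma1=1.0, sigma2=1.6):
    """Hypothetical structural comparison: mean |DoG(ref) - DoG(dist)|."""
    return float(np.mean(np.abs(dog(ref, sigma1, sigma2) - dog(dist, sigma1, sigma2))))

rng = np.random.default_rng(5)
ref = np.full((64, 64), 50.0)
ref[16:48, 16:48] = 200.0
d_light = dog_difference(ref, ref + rng.normal(0.0, 5.0, ref.shape))
d_heavy = dog_difference(ref, ref + rng.normal(0.0, 20.0, ref.shape))
print(d_light, d_heavy)
```

Because the DoG is linear and has zero response on flat regions, the difference depends only on the noise that survives the band-pass, so it grows with the noise level.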

The Human Visual System (HVS) perceives information from the real world and transmits what we see in our daily lives to the brain for processing through extremely complex mechanisms. Image quality assessment metrics are evaluated by comparing the subjective scores given by human subjects (participants in the experiment) with the scores produced by the metric. According to the free-energy principle, a person viewing a corrupted image forms a mental picture of how the clean image should look [45]. By comparing this mental picture with the corrupted image, the person can assign a score to it. The Mean Opinion Score (MOS) and the Difference Mean Opinion Score (DMOS) are two common metrics computed from the results of subjective human experiments [46]. To mimic the HVS, visual saliency is used to place more emphasis on the foreground of the image than on the background. Reference [47] proposed using deep neural networks for both no-reference and full-reference image quality assessment, in which a fully connected network estimates a weight for each patch before the pooling layer computes the final score. Reference [48] proposed using an attention residual network for full-reference image quality prediction, which maps saliency for the final grading.
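
The subjective scores and weighted pooling can be illustrated with simplified definitions. Assumptions: MOS is the per-image mean rating, DMOS is the mean per-subject difference between the reference and distorted ratings (without the z-score normalization and rescaling used by some databases), and `weighted_pool` is a hypothetical helper for saliency- or attention-weighted pooling of per-patch scores. All ratings are made-up toy numbers.

```python
import numpy as np

def mos(ratings):
    """Mean Opinion Score per image; ratings has shape (n_subjects, n_images)."""
    return np.asarray(ratings, dtype=float).mean(axis=0)

def dmos(ref_ratings, dist_ratings):
    """Simplified DMOS: mean over subjects of (reference rating - distorted rating)."""
    diff = np.asarray(ref_ratings, dtype=float) - np.asarray(dist_ratings, dtype=float)
    return diff.mean(axis=0)

def weighted_pool(patch_scores, patch_weights):
    """Pool per-patch quality scores with (e.g., saliency) weights."""
    s = np.asarray(patch_scores, dtype=float)
    w = np.asarray(patch_weights, dtype=float)
    return float((w * s).sum() / w.sum())

# Toy data: 3 subjects rating 2 distorted images and their references (1-5 scale).
dist = [[4, 2], [5, 3], [3, 1]]
ref = [[5, 5], [5, 5], [4, 4]]
print(mos(dist))                          # [4. 2.]
print(dmos(ref, dist))                    # mean rating drop per image
print(weighted_pool([0.9, 0.5], [3, 1]))  # salient patch counts three times as much
```

A higher DMOS indicates a larger perceived degradation relative to the reference, while weighted pooling lets a salient foreground patch dominate the final score.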

We classify the distortions addressed by these image quality assessments into two types: (i) noise and (ii) structural distortion. Noise includes all types of noise, such as Gaussian noise and Poisson noise, while structural distortion includes Gaussian blur, compression distortion, and similar degradations. Image quality assessment gives an overall score for the noisy image, obtained by combining measures such as the information retained and feature similarity. Image quality assessment can also guide the design of a good feature extractor. Assessments based on the image gradient, such as GMSD, respond well to noise: the noisier the image, the greater the GMSD score. Hence, we used the image gradient for feature extraction when estimating the noise parameters.
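
The claim that a gradient-based score such as GMSD grows with noise can be checked with a minimal sketch. It follows the general recipe (Prewitt gradient magnitudes, a gradient magnitude similarity map with a stabilizing constant c, and the standard deviation of that map as the score) but omits the preliminary downsampling used in the published index; c = 170 assumes 8-bit intensities.

```python
import numpy as np

PREWITT_X = np.array([[1, 0, -1], [1, 0, -1], [1, 0, -1]], dtype=float) / 3.0
PREWITT_Y = PREWITT_X.T

def filter3(img, kernel):
    """3x3 correlation with edge padding (same output size)."""
    p = np.pad(np.asarray(img, dtype=float), 1, mode="edge")
    h, w = img.shape
    return sum(kernel[i, j] * p[i:i + h, j:j + w] for i in range(3) for j in range(3))

def gradient_magnitude(img):
    return np.hypot(filter3(img, PREWITT_X), filter3(img, PREWITT_Y))

def gmsd(ref, dist, c=170.0):
    """Standard deviation of the gradient magnitude similarity (GMS) map."""
    mr, md = gradient_magnitude(ref), gradient_magnitude(dist)
    gms = (2 * mr * md + c) / (mr**2 + md**2 + c)
    return float(gms.std())

rng = np.random.default_rng(6)
ref = np.full((64, 64), 50.0)
ref[16:48, 16:48] = 200.0
scores = [gmsd(ref, ref + rng.normal(0.0, s, ref.shape)) for s in (5.0, 25.0)]
print(gmsd(ref, ref), scores)
```

An undistorted image gives a perfectly uniform similarity map and a score of 0, and stronger noise spreads the similarity values further apart, raising the score; this monotonic response is what makes gradient information attractive as a noise feature.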