Point Cloud Completion Network Applied to Vehicle Data

Abstract

1. Introduction

- (1)

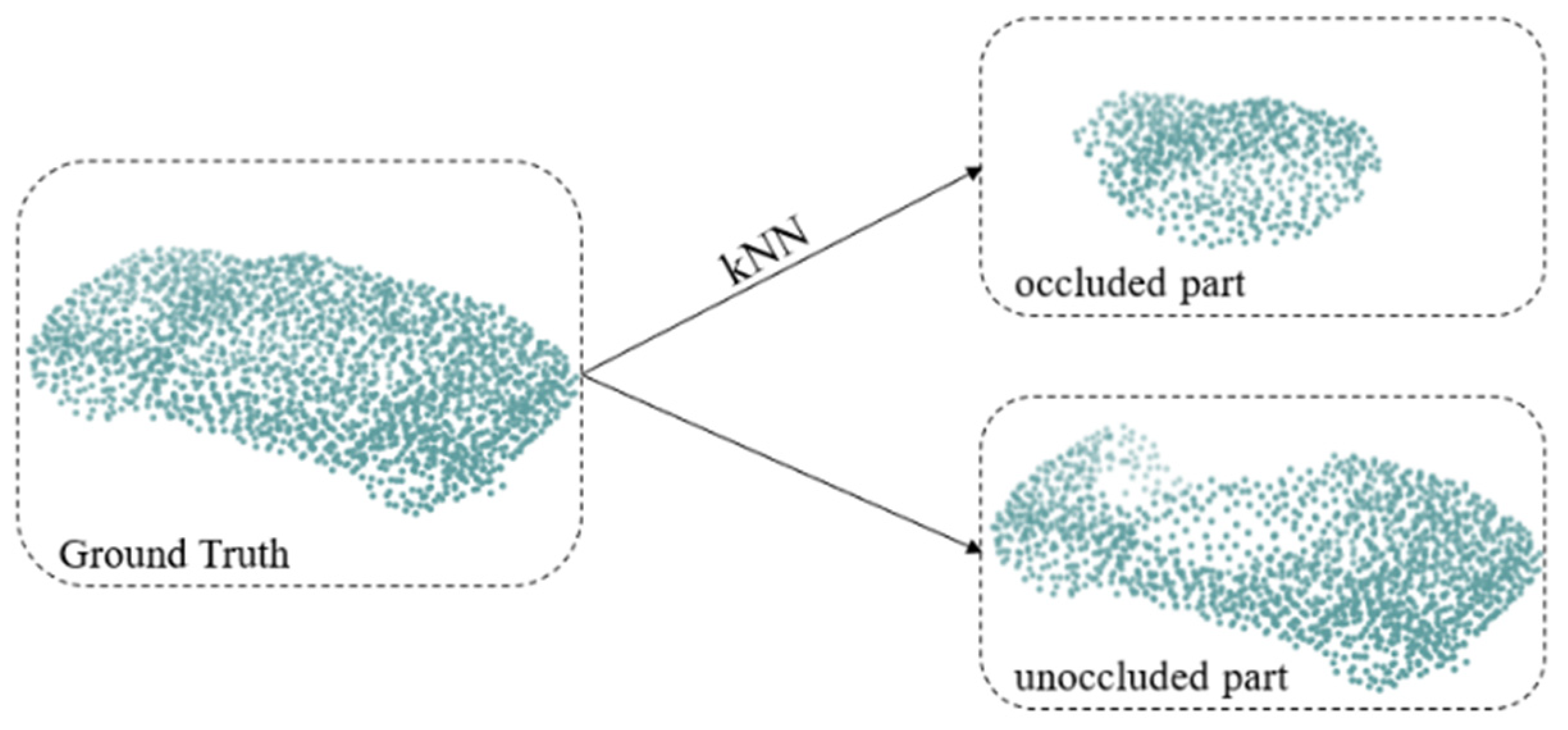

- We think that the unoccluded part of the point cloud does not need to be generated by the network; hence, our network only predicts the occluded part and then stitches the output of the network with the unoccluded part into a complete point cloud of the shape.

- (2)

- We replaced the 2D lattice in the FoldingNet decoder with a 3D lattice and directly deformed the three-dimensional point cloud into a point cloud of the occluded part. This can simplify network training and improve network performance.

- (3)

- The feature extraction capability of the MLP encoder is limited, and to improve it, we used a transformer module as the encoder of our completion network.
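The stitching step described in contribution (1) can be illustrated with a short sketch. It is not the authors' implementation: the function name `stitch_completion`, the point counts, and the random arrays standing in for the observed scan and the network output are all assumptions made for illustration.

```python
import numpy as np


def stitch_completion(observed: np.ndarray, predicted_occluded: np.ndarray) -> np.ndarray:
    """Join the observed (N, 3) points and the predicted (M, 3) points into one cloud.

    Hypothetical helper: the observed points are passed through unchanged,
    so only the occluded region depends on the network.
    """
    for name, cloud in (("observed", observed), ("predicted_occluded", predicted_occluded)):
        if cloud.ndim != 2 or cloud.shape[1] != 3:
            raise ValueError(f"{name} must have shape (num_points, 3)")
    return np.concatenate([observed, predicted_occluded], axis=0)


# Toy data: random points stand in for a partial scan and for a network prediction.
rng = np.random.default_rng(0)
observed = rng.uniform(-1.0, 1.0, size=(1536, 3))   # assumed size of the visible part
predicted = rng.uniform(-1.0, 1.0, size=(512, 3))   # assumed size of the predicted part

complete = stitch_completion(observed, predicted)
print(complete.shape)
```

Because the observed points are copied rather than regenerated, any reconstruction error in the stitched cloud can only come from the predicted part.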

2. Related Work

2.1. Traditional Completion Methods

2.2. Learning-Based Methods

3. Methods

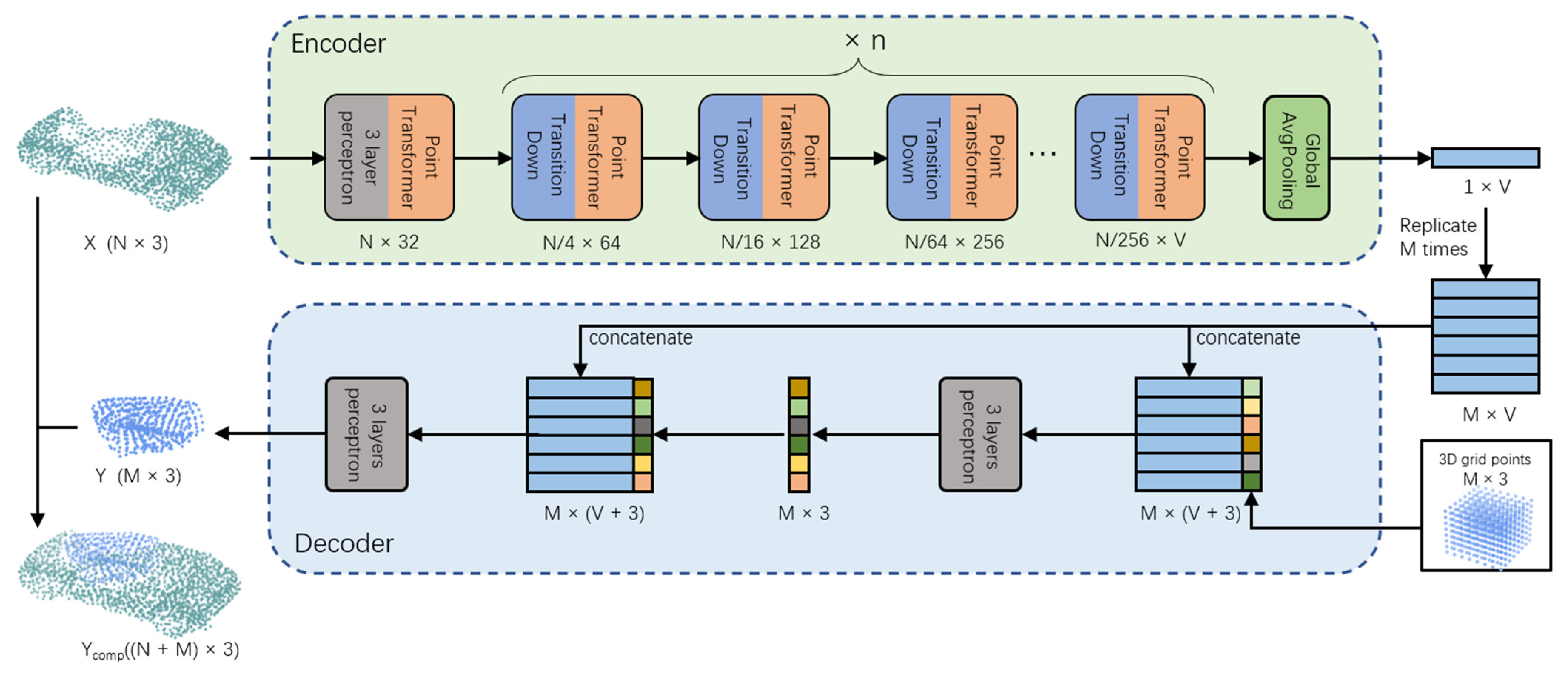

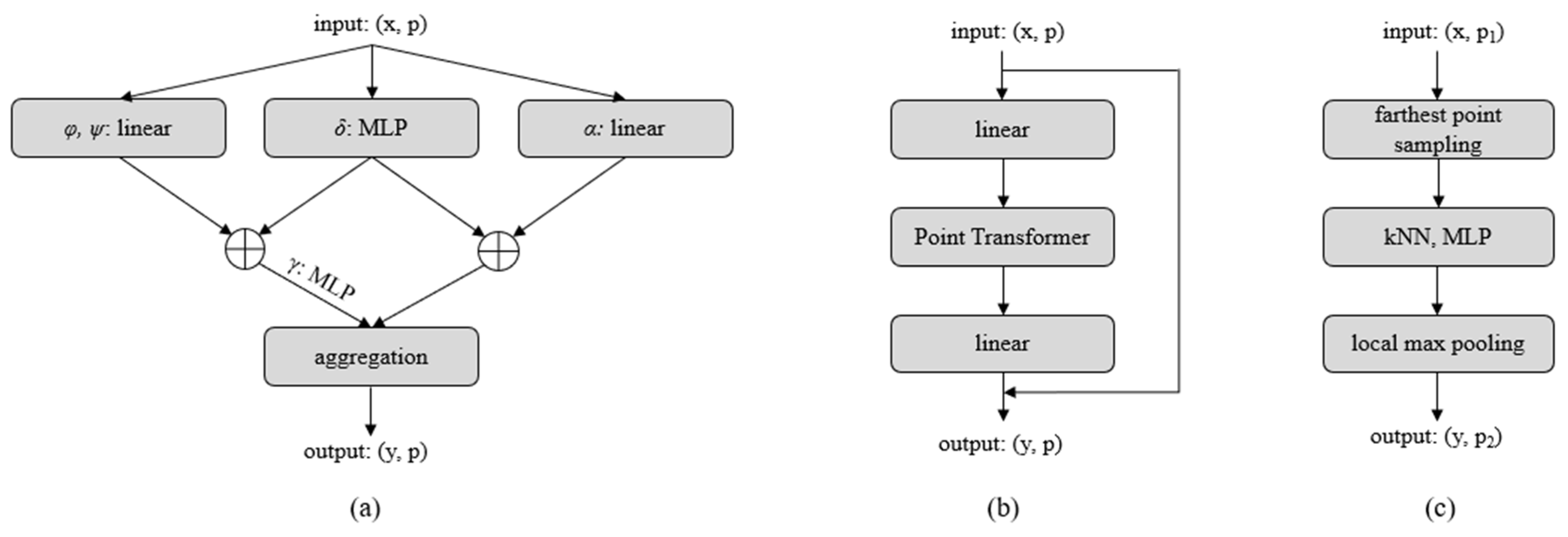

3.1. Encoder
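Contribution (3) states that a transformer module serves as the encoder. The sketch below is only a minimal illustration of the underlying idea, single-head scaled dot-product self-attention over per-point features followed by max pooling into a global codeword. The layer sizes, the random weights, the residual connection, and the names `self_attention` and `encode` are assumptions, not the architecture used in the paper.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along the given axis."""
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


def self_attention(features, wq, wk, wv):
    """Single-head scaled dot-product self-attention over N per-point features."""
    q, k, v = features @ wq, features @ wk, features @ wv
    scores = q @ k.T / np.sqrt(k.shape[1])   # (N, N) pairwise similarities
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ v, weights


def encode(points, w_embed, wq, wk, wv):
    """Embed points, apply attention with a residual, then max-pool to one codeword."""
    features = points @ w_embed                        # (N, d_model)
    attended, _ = self_attention(features, wq, wk, wv)
    return (features + attended).max(axis=0)           # (d_model,)


# Toy setup with random weights (assumed sizes, no training involved).
rng = np.random.default_rng(0)
d_model = 32
points = rng.normal(size=(128, 3))
w_embed = rng.normal(size=(3, d_model)) * 0.1
wq, wk, wv = (rng.normal(size=(d_model, d_model)) * 0.1 for _ in range(3))

codeword = encode(points, w_embed, wq, wk, wv)
_, attn_weights = self_attention(points @ w_embed, wq, wk, wv)

# Shuffling the input points should leave the pooled codeword unchanged.
shuffled = points[rng.permutation(len(points))]
codeword_shuffled = encode(shuffled, w_embed, wq, wk, wv)
```

Without positional encodings, self-attention is permutation-equivariant, so the max-pooled codeword does not depend on the order of the input points, which suits unordered point clouds.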

3.2. Decoder
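Contribution (2) replaces the 2D lattice of the FoldingNet decoder with a 3D lattice. The following sketch shows how the two lattices differ and how a single folding step might consume a lattice together with a codeword. The lattice resolution, the codeword size, the two-layer MLP with random weights, and the helper names `make_lattice` and `fold` are illustrative assumptions, not the network from the paper.

```python
import numpy as np


def make_lattice(points_per_axis: int, dims: int) -> np.ndarray:
    """Regular grid in [-1, 1]^dims, returned as (points_per_axis**dims, dims)."""
    axis = np.linspace(-1.0, 1.0, points_per_axis)
    grids = np.meshgrid(*([axis] * dims), indexing="ij")
    return np.stack(grids, axis=-1).reshape(-1, dims)


def fold(lattice, codeword, w1, b1, w2, b2):
    """One folding step: append the codeword to every lattice point and apply an MLP."""
    tiled = np.broadcast_to(codeword, (lattice.shape[0], codeword.shape[0]))
    x = np.concatenate([lattice, tiled], axis=1)   # (num_points, dims + code_dim)
    hidden = np.maximum(x @ w1 + b1, 0.0)          # ReLU
    return hidden @ w2 + b2                        # (num_points, 3)


lattice_2d = make_lattice(8, dims=2)   # 64 points on a plane, as in a 2D-lattice decoder
lattice_3d = make_lattice(4, dims=3)   # 64 points filling a cube

# Random weights stand in for trained parameters (assumed sizes).
rng = np.random.default_rng(0)
code_dim, hidden_dim = 16, 32
codeword = rng.normal(size=code_dim)
w1 = rng.normal(size=(3 + code_dim, hidden_dim)) * 0.1
b1 = np.zeros(hidden_dim)
w2 = rng.normal(size=(hidden_dim, 3)) * 0.1
b2 = np.zeros(3)

folded = fold(lattice_3d, codeword, w1, b1, w2, b2)
print(lattice_2d.shape, lattice_3d.shape, folded.shape)
```

With a 3D lattice the input and output of the folding step live in the same space, so the MLP deforms one three-dimensional point set into another instead of lifting a plane into 3D.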

3.3. Loss Function

4. Experiments and Results

4.1. Environment and Network Parameters

4.1.1. System Environment

4.1.2. Network Specific Parameters

4.1.3. Model Training Parameters

4.2. Data Generation and Implementation Detail

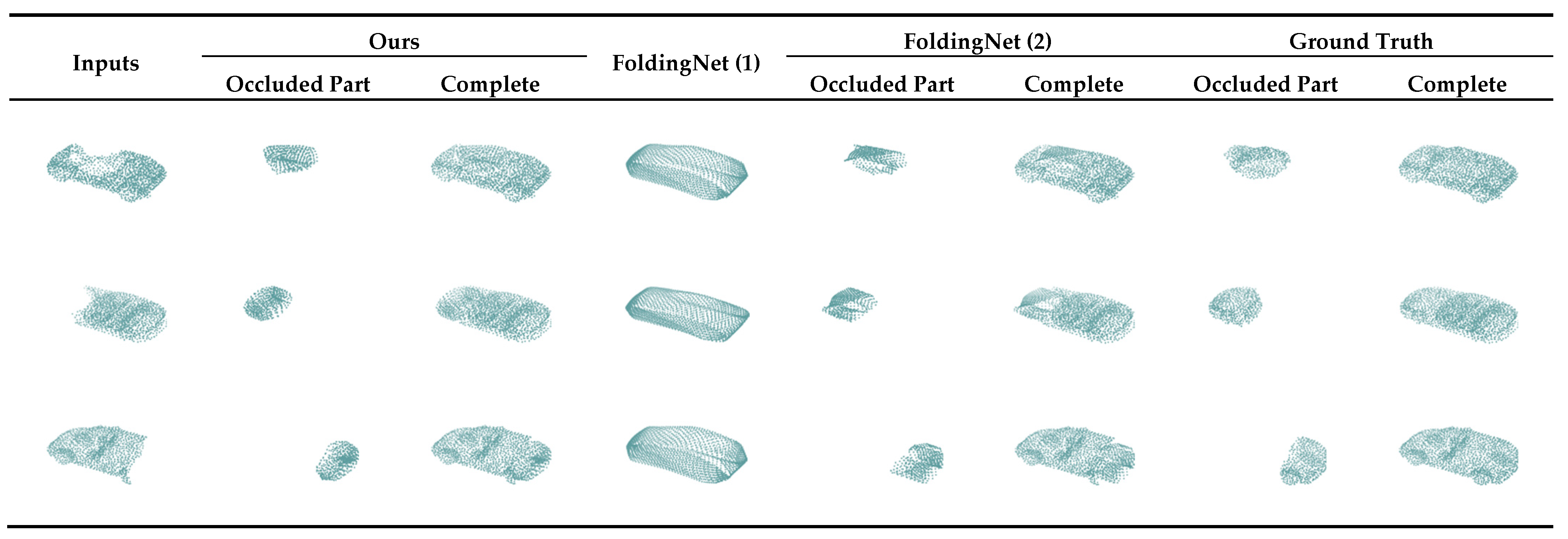

4.3. Results

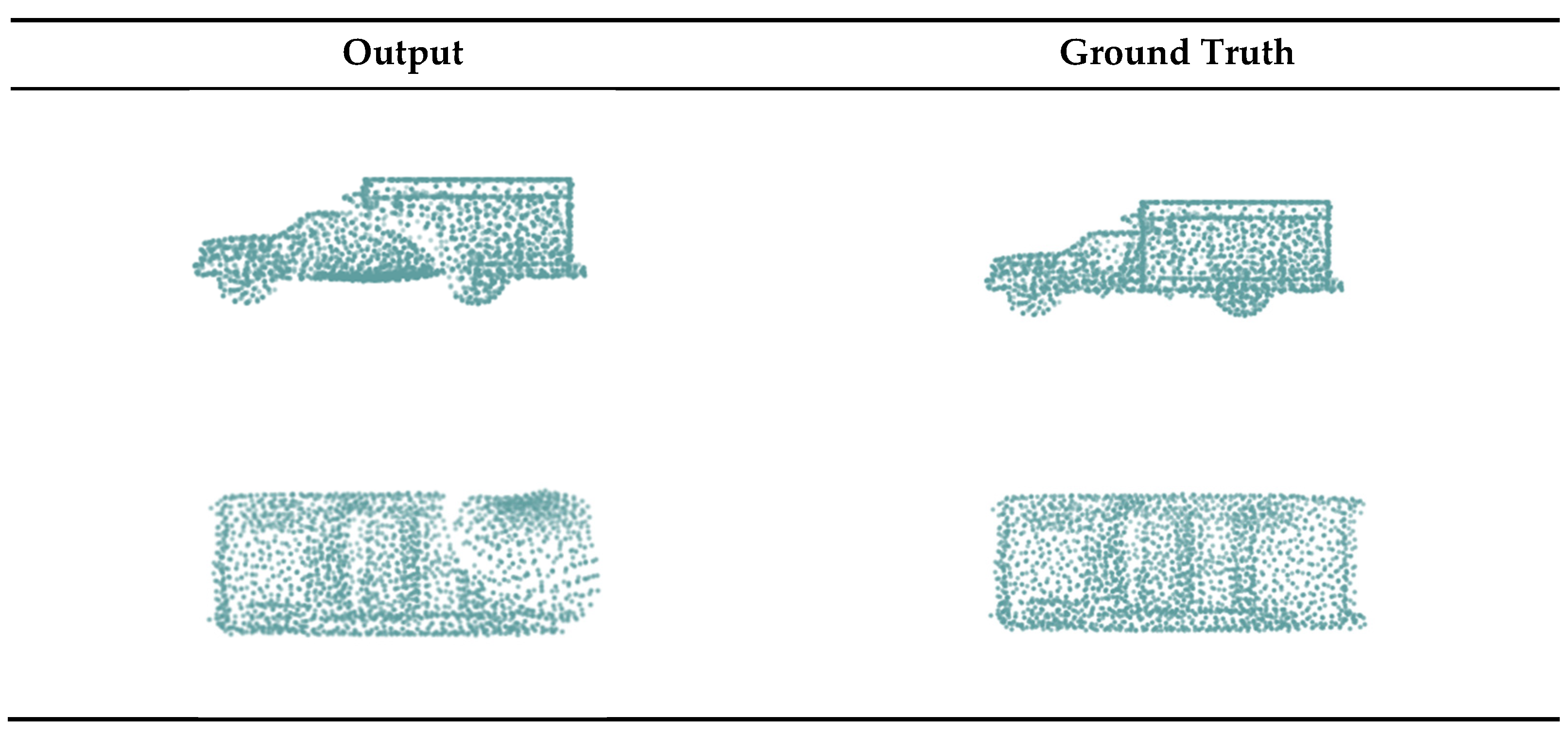

4.4. Ablation Study

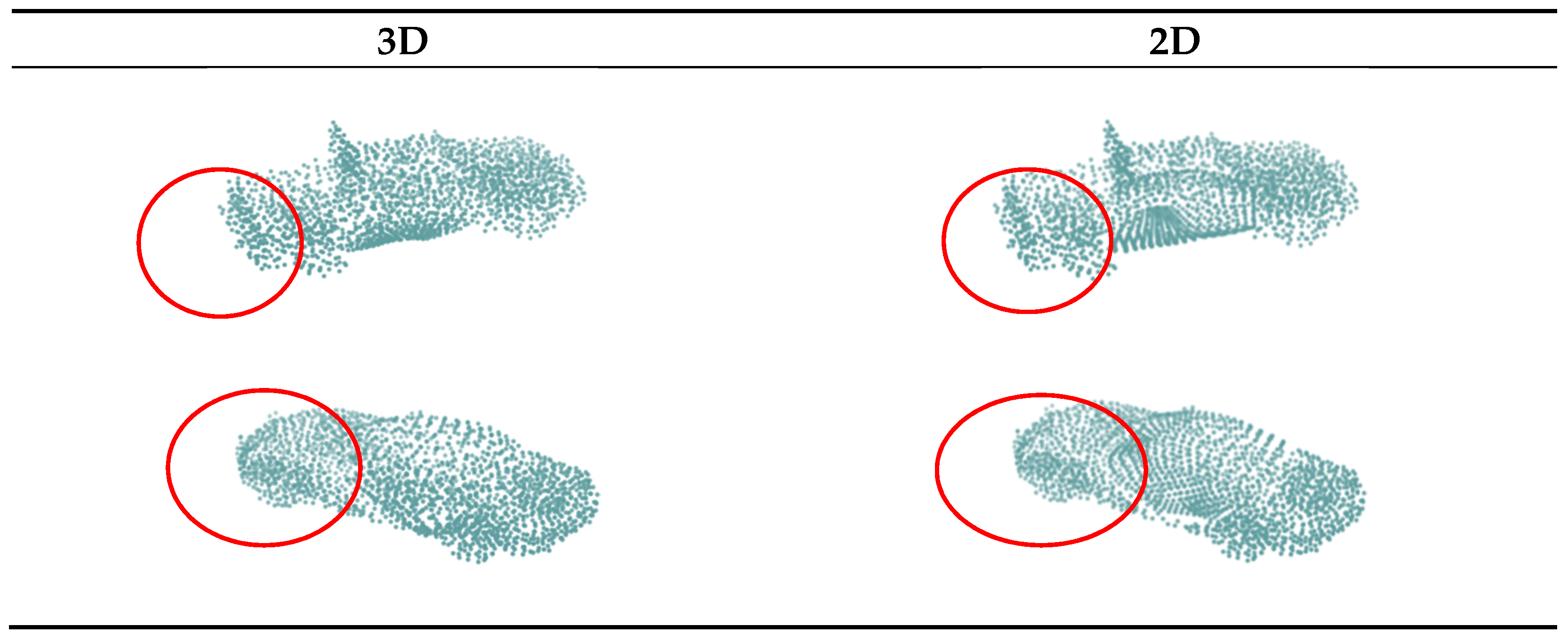

4.4.1. Transformer Encoder

4.4.2. 3D Lattice

5. Discussion

5.1. Some Poor Completions

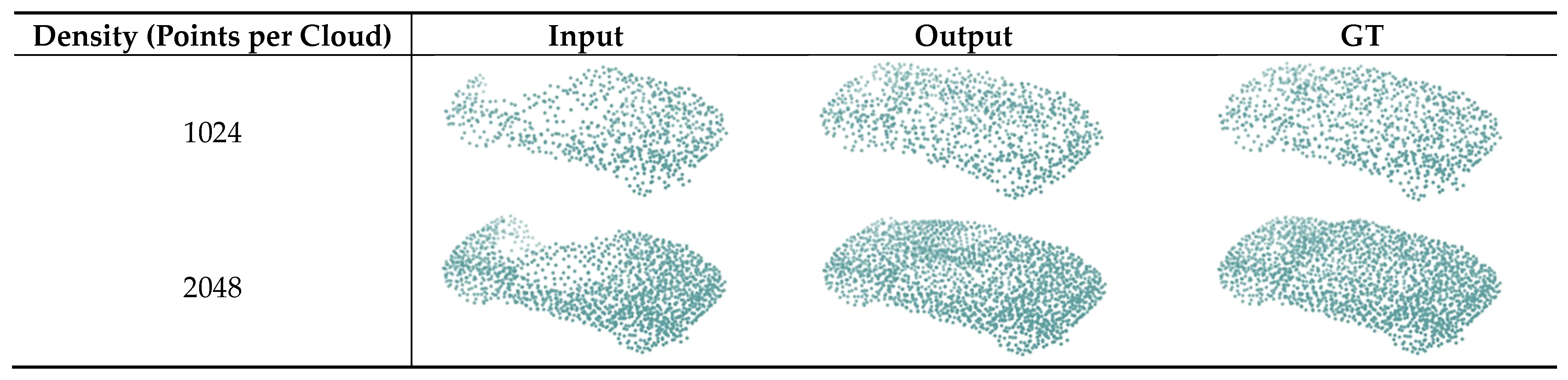

5.2. The Effect of Density

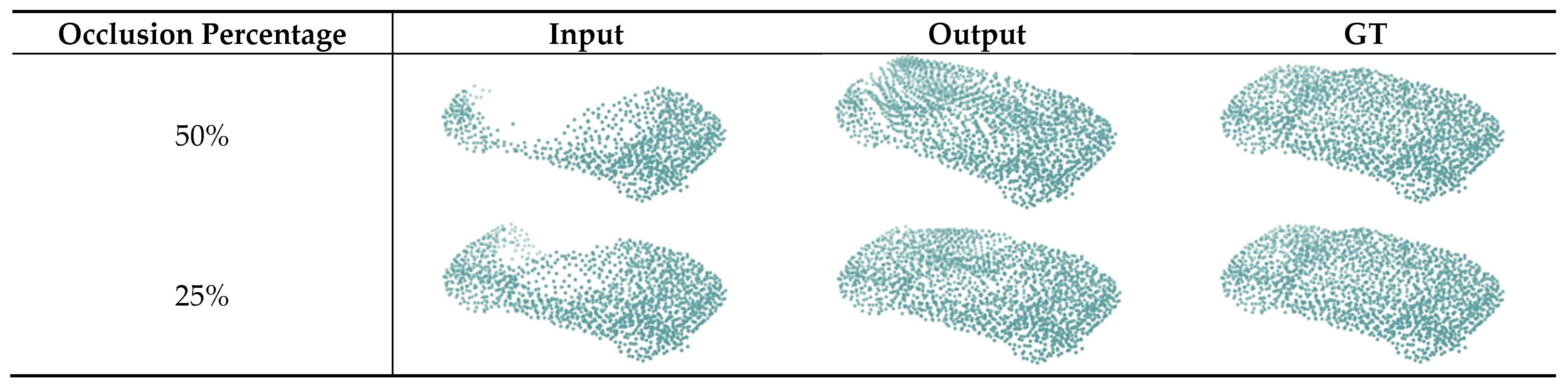

5.3. The Effect of the Scale of Occlusion

5.4. The Behavior of the Network on Other Categories

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Methods | | |
|---|---|---|
| FoldingNet (1) | — | 4.403 |
| FoldingNet (2) | 4.461 | 1.034 |
| Ours | 4.104 | 0.965 |

| Methods | | |
|---|---|---|
| Ours | 4.104 | 0.964 |
| Without Transformer | 4.228 | 0.984 |
| Without 3D | 4.747 | 1.088 |

| Category | |
|---|---|
| Airplane | 2.720 |
| Cabinet | 6.099 |
| Chair | 5.965 |
| Lamp | 5.925 |
| Sofa | 5.374 |
| Table | 5.563 |
| Watercraft | 4.411 |
| Car | 4.104 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ma, X.; Li, X.; Song, J. Point Cloud Completion Network Applied to Vehicle Data. Sensors 2022, 22, 7346. https://doi.org/10.3390/s22197346
