1. Introduction

The Internet of things (IoT), an emerging computing paradigm, integrates various sensors over a wireless network. Traditional IoT systems transfer the data collected by sensors to the cloud. However, as the number of IoT devices increases, it becomes difficult to process the collected data centrally in the cloud for a variety of reasons, such as the massive workload on the IoT network, latency, and privacy concerns [1]. Edge computing moves the data processing from the cloud to the edge nodes close to the sensors. The data collected by sensors can be processed locally or transferred to the cloud after local preprocessing. Artificial neural networks (ANNs) have been deployed on IoT devices to perform special tasks such as voice recognition and verification [2]. However, the intensive memory and processing requirements of conventional ANNs have made it difficult to deploy deep networks on resource-constrained and power-constrained IoT devices.

The spiking neural network (SNN), known as the third generation of neural networks, has been introduced into many application fields, including electrocardiogram heartbeat classification [3], object recognition [4], waveform analysis [5], odor data classification [6], and image classification [7]. SNNs have the potential to process spatial-temporal information effectively. Compared with ANNs, SNNs have lower power consumption and a smaller computation load. Neurons in an SNN communicate with each other by sending spikes across synapses. A spiking neuron accepts spikes from its presynaptic neurons and integrates the corresponding weights to update its membrane potential. A neuron fires a spike when its membrane potential reaches the firing threshold, and the membrane potential is then reset.

Neuromorphic hardware, the new generation of brain-inspired non-von Neumann computing systems, has the potential to perform complex computations faster, with a smaller memory footprint and higher energy efficiency than conventional architectures. Neuromorphic hardware implements spiking neurons and synapses, which makes it suitable for executing SNN-based applications. Recently, many neuromorphic processors have been developed, such as TrueNorth [8], Loihi [9], SpiNNaker [10], Unicorn [11], and DYNAPs [12]. Zhang et al. [13] propose a scalable, cost-efficient, and high-speed VLSI architecture to accelerate deep spiking convolutional neural networks (SCNNs). Neuromorphic hardware typically consists of multiple cores, and each core can only accommodate a limited number of neurons. For example, TrueNorth includes 4096 neurosynaptic cores, and a single core has 256 axons, a 256 × 256 synapse crossbar, and 256 neurons. To support inter-core communication, the Network-on-Chip (NoC) [14] is adopted as the interconnecting mechanism in neuromorphic hardware.

Before an SNN is executed on neuromorphic hardware, the neurons of the SNN must be assigned to the target neuromorphic hardware. This step is typically divided into two substeps. First, a large-scale SNN is partitioned into multiple clusters so that the number of neurons per cluster does not exceed the capacity of a single neuromorphic core. Second, appropriate cores are selected to execute the clusters of the partitioned SNN-based application.

Recently, numerous methods [15,16,17,18,19,20] have been proposed to map SNN-based applications to neuromorphic hardware. PACMAN [15] is proposed to map SNNs onto SpiNNaker. Corelet [16] is a proprietary tool to map SNNs to TrueNorth. Some general-purpose mapping approaches [17,18,19,20] employ heuristic algorithms [21,22,23] to partition an SNN into multiple clusters, with the objective of minimizing the spike communication between partitioned clusters. After the partition, they use meta-heuristic algorithms [21,24] to search for the best mapping scheme.

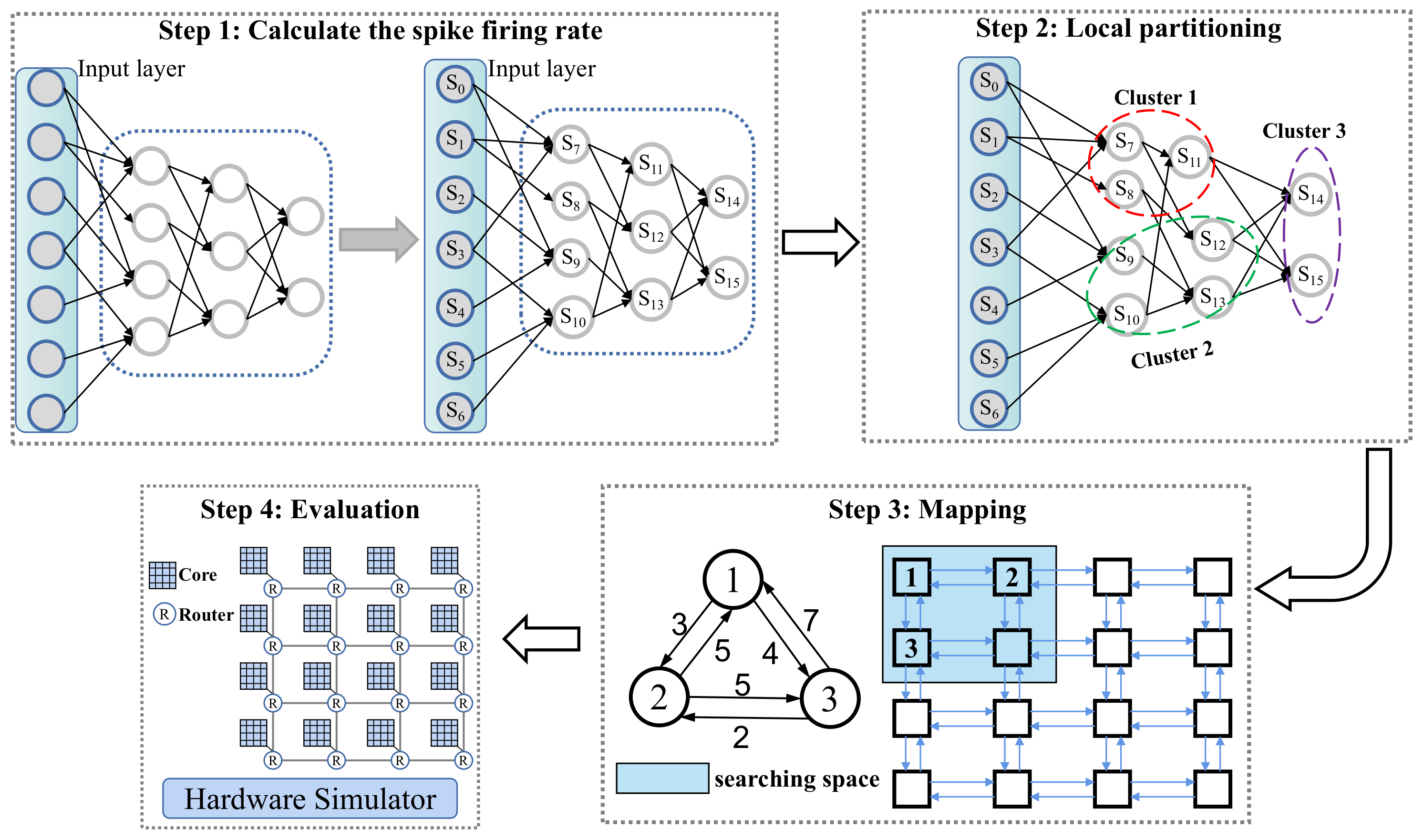

Figure 1 shows a high-level overview of some existing SNN mapping approaches. Before the partitioning stage, those methods need to simulate an SNN, using SNN software simulators such as Brian2 [25] and CARLsim [26], to statistically obtain communication patterns (i.e., the spike times of all neurons). Before the simulation, researchers must build the given SNN using the application programming interfaces (APIs) of the specific simulator, which may be challenging for researchers who are unfamiliar with the simulator. In addition, simulating a large-scale SNN on a software simulator takes a long time. The simulation process is also included in PSOPART and NEUTAMS. This reliance on simulation is the first limitation of prior works.

The second limitation of prior works is that they treat an SNN as a graph and partition the entire graph into multiple clusters directly, ignoring the characteristics of synapses. Exploiting these characteristics can further reduce the spike communication between clusters. The third limitation is that they always search for the best mapping scheme across the entire neuromorphic hardware, which makes them prone to being trapped in a local optimum.

In this paper, we propose an efficient toolchain for mapping SNN-based applications to neuromorphic hardware, called NeuMap (Optimal Mapping of Spiking Neural Network to Neuromorphic Hardware for Edge-AI). NeuMap focuses on SNN-based applications with a feed-forward topology. NeuMap first obtains the communication patterns of an SNN by calculation instead of simulation. Based on the calculated spike firing rates, NeuMap then partitions the SNN into multiple clusters using a greedy algorithm, minimizing the communication traffic between clusters. Finally, NeuMap narrows the search space and employs a meta-heuristic algorithm to seek the best mapping scheme. The main contributions of this paper can be summarized as follows:

- (1) We study the impact of different parameters of an SNN on the spike firing rate and obtain the communication patterns of an SNN by calculation instead of simulation. The calculation simplifies the end-to-end mapping process and removes the challenges that arise from simulation in specific simulators.

- (2) We exploit the characteristics of synapses and propose a strategy that first divides the entire network into several sub-networks and then partitions each sub-network into multiple clusters. The strategy further reduces the spike communication between neuromorphic cores.

- (3) Instead of searching for the best mapping scheme across all neuromorphic cores, we reduce the search space in advance and employ a meta-heuristic algorithm with two optimization objectives to seek the best mapping scheme. The reduction in the search space helps to avoid being trapped in a local optimum.

We evaluate NeuMap with six SNN-based applications. The experimental results show that, compared to SpiNeMap and SNEAP, NeuMap reduces the average energy consumption by 84% and 17% and has 55% and 12% lower spike latency, respectively.

The remainder of this paper is organized as follows: Section 2 introduces the background and related works. Section 3 details the proposed toolchain. The experimental setup and experimental results are discussed in Section 4 and Section 5, respectively. Finally, Section 6 concludes the paper.

3. Methods

Figure 4 shows the high-level overview of our proposed approach which is composed of four steps, including obtaining the spike firing rates of all neurons, partitioning the SNNs, mapping the clusters to the target neuromorphic hardware, and evaluating.

For an incoming SNN-based application, NeuMap first extracts the connections and synaptic weights between neurons. NeuMap counts the spike firing times of the input neurons and then calculates the spike firing rates of other neurons. NeuMap uses a heuristic algorithm to partition an SNN into multiple clusters, minimizing the inter-cluster spike communication. By reducing the inter-cluster communication, NeuMap reduces the energy consumption and latency on NoC. Next, NeuMap uses a link congestion-aware algorithm to map the clusters to the selected cores, minimizing the hop distance that each spike message traverses before reaching its destination.

3.1. Calculating the Spike Firing Rates

As shown in Section 2.1, a spiking neuron accepts spikes from its presynaptic neurons, integrates the corresponding weights to update its membrane potential, fires a spike when the potential reaches the firing threshold, and then resets the membrane potential. Therefore, three factors affect the firing of a neuron: the synaptic weights, the external input (i.e., spikes from presynaptic neurons), and the difference between the threshold potential and the resting potential. Increasing the synaptic weights or the spike firing rates of presynaptic neurons stimulates the postsynaptic neuron to fire more frequently. In contrast, increasing the difference between the threshold potential and the resting potential reduces the number of spikes fired, because after firing a spike and resetting the membrane potential, the neuron needs to receive more spikes to reach the threshold potential again. Therefore, the spike firing rate of a neuron is positively correlated with the synaptic weights and the spike firing rates of its presynaptic neurons, and negatively correlated with the difference between the threshold potential and the resting potential.
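The three factors can be illustrated with a minimal integrate-and-fire sketch. This is not NeuMap code: the function name `simulate_if_neuron`, the non-leaky reset-to-rest rule, and the regular input pattern are assumptions chosen so that the behaviour is easy to check by hand.

```python
def simulate_if_neuron(weights, input_spikes, v_thresh=1.0, v_rest=0.0):
    """Count output spikes of one non-leaky integrate-and-fire neuron.

    weights      -- one synaptic weight per presynaptic neuron
    input_spikes -- list of time steps; each step holds one 0/1 flag
                    per presynaptic neuron
    """
    v = v_rest
    fired = 0
    for step in input_spikes:
        # Integrate the weights of all synapses that received a spike.
        v += sum(w for w, s in zip(weights, step) if s)
        if v >= v_thresh:
            fired += 1
            v = v_rest  # reset the membrane potential after firing
    return fired


# Two presynaptic neurons that both spike at each of 12 time steps.
steps = [[1, 1]] * 12

base = simulate_if_neuron([0.25, 0.25], steps)
stronger = simulate_if_neuron([0.5, 0.5], steps)                    # larger weights
higher_gap = simulate_if_neuron([0.25, 0.25], steps, v_thresh=2.0)  # larger gap
print(base, stronger, higher_gap)  # prints: 6 12 3
```

Doubling the weights doubles the output spike count, while doubling the gap between threshold and resting potential halves it, which matches the qualitative relationship stated above.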

For an SNN-based application with $N$ neurons, including $m$ input neurons, we first extract the connections and synaptic weights in the network. NeuMap builds an $N \times N$ adjacency matrix, where nodes are spiking neurons and edge weights between nodes are synaptic weights. To hold the spike firing rates of all neurons, NeuMap constructs a spike firing rate vector $S = (S_1, S_2, \ldots, S_N)$, where $S_i$ is the spike firing rate of the $i$th neuron.

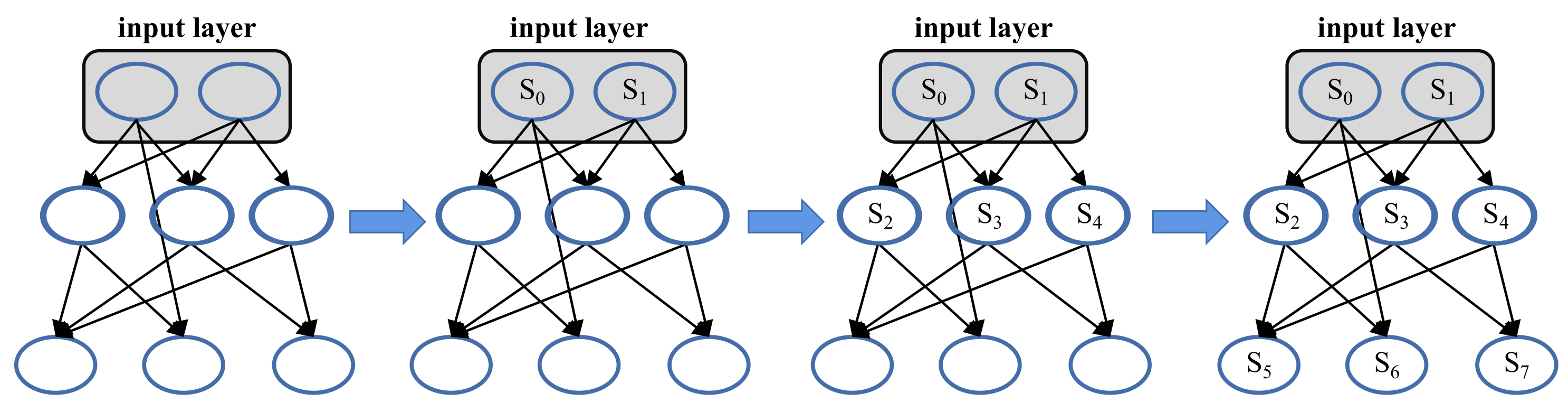

As shown in Figure 5, NeuMap transforms the network without recurrent connections into a tree structure. The root nodes are the neurons of the input layer. Before the spike firing rate of a neuron is calculated, the spike firing rates of all its presynaptic neurons must already be known. Therefore, the spike firing rates are calculated top-down, starting from the input layer.

In the beginning, NeuMap counts the spikes fired by the input neurons. Representative samples from the training or validation dataset are transformed into Poisson-distributed spike trains, with firing rates proportional to the input intensity. For every input neuron, NeuMap adds up the spikes fired over all representative samples. The spike firing rate of each input neuron is this total divided by $K \cdot T$, where $K$ is the number of input samples and $T$ is the number of time steps for a single sample.
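The counting step can be sketched as follows. The sketch assumes intensities already scaled to [0, 1] and approximates the Poisson encoding with one Bernoulli draw per time step; the function name `input_firing_rates` and the sample values are hypothetical.

```python
import random


def input_firing_rates(samples, num_steps, seed=0):
    """Estimate the per-time-step firing rate of every input neuron.

    samples   -- K samples, each a list of m intensities in [0, 1]
    num_steps -- T, the number of time steps per sample
    """
    rng = random.Random(seed)
    num_inputs = len(samples[0])
    counts = [0] * num_inputs
    for sample in samples:
        for _ in range(num_steps):
            for i, intensity in enumerate(sample):
                # Bernoulli approximation of a Poisson spike train: the
                # neuron spikes with probability equal to its intensity.
                if rng.random() < intensity:
                    counts[i] += 1
    # Total spike count divided by K * T gives a rate in [0, 1].
    total_steps = len(samples) * num_steps
    return [count / total_steps for count in counts]


samples = [[0.0, 0.5, 1.0],
           [0.0, 0.3, 1.0]]
rates = input_firing_rates(samples, num_steps=500)
print(rates)
```

An input that is always dark never fires (rate 0), an input at full intensity fires at every step (rate 1), and the middle input settles near the mean of its intensities.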

After calculating the firing rates of the input neurons, NeuMap calculates the spike firing rates of the other spiking neurons top-down. Following the above analysis, the spike firing rate of the $i$th neuron is computed from the spike firing rates of its presynaptic neurons, the corresponding synaptic weights, and the difference between the threshold potential and the resting potential. Here, the $j$th neuron is one of the presynaptic neurons of the $i$th neuron and $w_{ji}$ is the synaptic weight between the $j$th and the $i$th neuron. It should be noted that a spike firing rate cannot be greater than 1 or less than 0. Therefore, when the computed firing rate exceeds 1, it is set to 1, and when it is less than 0, it is set to 0. We compare the calculated spike firing rates with the actual spike counts in Section 5.1.
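A minimal sketch of the top-down calculation is given below. It assumes that the rate of a neuron is the weighted sum of its presynaptic rates divided by the gap between threshold and resting potential, i.e. $S_i = \sum_j w_{ji} S_j / (V_{th} - V_{rest})$ clipped to [0, 1]. That closed form is an assumption consistent with the qualitative analysis, not necessarily the exact expression used by NeuMap, and `propagate_firing_rates` is a hypothetical name.

```python
def propagate_firing_rates(num_neurons, input_rates, synapses,
                           v_thresh=1.0, v_rest=0.0):
    """Compute firing rates in top-down (topological) order.

    input_rates -- dict {input neuron index: firing rate}
    synapses    -- list of (pre, post, weight) of a feed-forward network
    """
    presyn = {i: [] for i in range(num_neurons)}
    postsyn = {i: [] for i in range(num_neurons)}
    for pre, post, weight in synapses:
        presyn[post].append((pre, weight))
        postsyn[pre].append(post)

    rates = dict(input_rates)
    # A neuron becomes computable once all presynaptic rates are known.
    pending = {i: len(presyn[i]) for i in range(num_neurons)}
    ready = list(input_rates)
    while ready:
        j = ready.pop()
        for i in postsyn[j]:
            pending[i] -= 1
            if pending[i] == 0:
                drive = sum(w * rates[p] for p, w in presyn[i])
                rate = drive / (v_thresh - v_rest)   # assumed closed form
                rates[i] = min(1.0, max(0.0, rate))  # clip to [0, 1]
                ready.append(i)
    return [rates.get(i, 0.0) for i in range(num_neurons)]


synapses = [(0, 2, 0.5), (1, 2, 1.0), (2, 3, 3.0), (2, 4, -1.0)]
rates = propagate_firing_rates(5, {0: 0.5, 1: 0.2}, synapses)
print(rates)
```

In this toy network, neuron 3 is driven above 1 and neuron 4 below 0, so both clipping rules are exercised.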

After calculating the spike firing rates of all neurons, NeuMap replaces the synaptic weights with the computed spike firing rates. For the synapse from the $i$th neuron to the $j$th neuron, the weight is replaced by $S_i$, the spike firing rate of the presynaptic neuron. After the transformation, the given SNN is represented as a graph $G(N, S)$, where $N$ is the set of nodes and $S$ is the set of synapses.

3.2. Local Partitioning

Let the partitioned SNN be a graph with a set of clusters as nodes and a set $E$ of edges between clusters. The SNN partitioning problem is then the transformation of $G(N, S)$ into this cluster graph, which is a classical graph partitioning problem. The graph partitioning problem has been proven to be NP-complete.

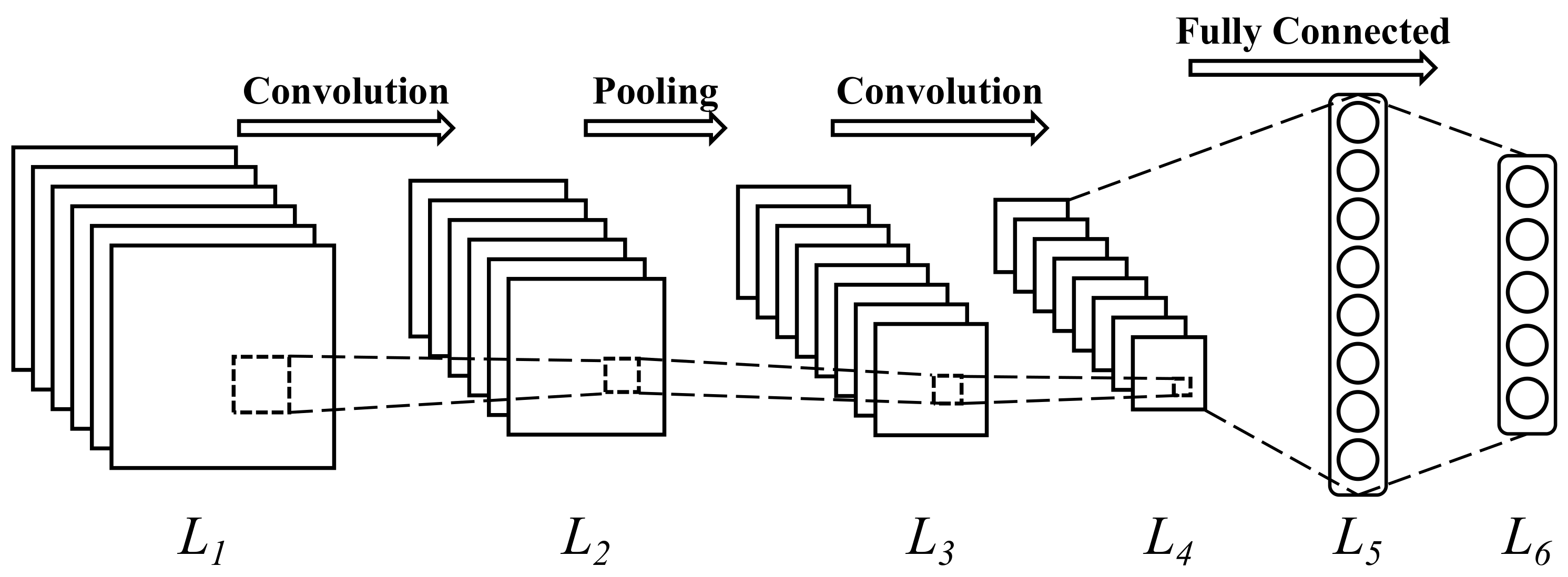

The connections in SNNs are localized. Take the spiking convolutional neural network shown in Figure 6 as an example. The spiking neurons in the first layer only connect to the second layer. For the neurons in the second layer, the presynaptic neurons are located in the first layer and the postsynaptic neurons are located in the third layer. Therefore, the synapses are confined to neighboring layers.

Prior works directly partition an entire SNN, ignoring the localized connections. They traverse all neurons contained in the SNN and put neurons with high-frequency communication in the same cluster. The global searching strategy ignores the local property and often puts the neurons from multiple layers in the same cluster, which may scatter the neurons from the adjacent layers in multiple clusters.

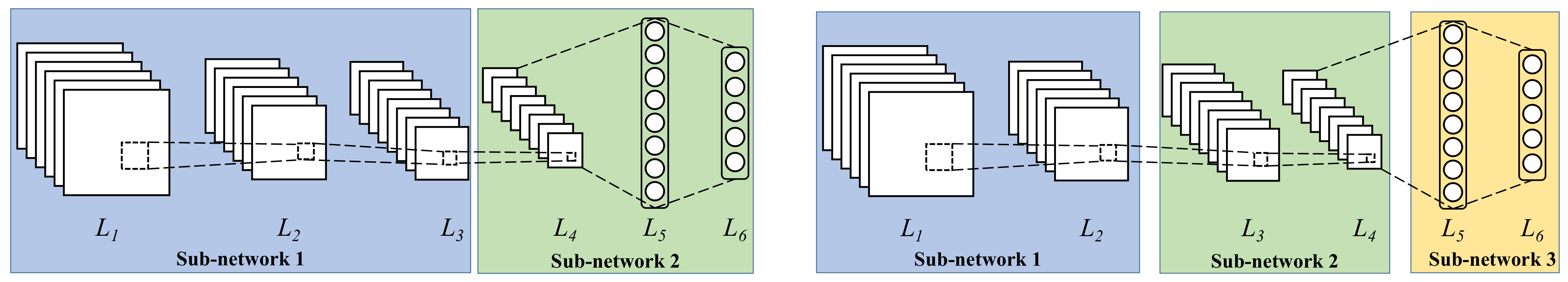

Based on this observation, instead of directly partitioning the entire network into multiple clusters, we first divide the network into several sub-networks, as shown in Figure 7. A sub-network comprises multiple adjacent layers. For an SNN with $L$ layers, there is at least one sub-network after the division (i.e., the entire network) and at most $L$ sub-networks (i.e., every layer is treated as a sub-network). Therefore, the number of divided sub-networks ranges from 1 to $L$.

We formalize the entire partitioning process in Algorithm 1. The algorithm increases the size of the sub-networks from 1 to $L$ in turn and, for each size, divides the entire SNN into $\lceil L / \mathit{size} \rceil$ sub-networks (line 2). The variable $\mathit{size}$ in the algorithm is the number of layers contained in each sub-network. After the division, only the connections between neurons belonging to the same sub-network are preserved in that sub-network. Then, we employ the multi-level graph partitioning algorithm to partition each sub-network into multiple clusters while satisfying the hardware resource constraints (lines 3–5). After all sub-networks have been partitioned, the algorithm calculates and records the sum of spike firing rates between clusters (line 7). Finally, the algorithm selects the partitioning scheme with the minimum spike communication between clusters (lines 9–10).

| Algorithm 1 Partitioning algorithm |
| --- |
| 1: for size = 1 to L do |
| 2: divide the SNN into ⌈L / size⌉ sub-networks |
| 3: for each sub-network in the divided sub-networks do |
| 4: partition the sub-network with the multi-level graph partitioning algorithm |
| 5: end for |
| 6: record the partitioning result |
| 7: calculate and record the sum of spike firing rates between clusters |
| 8: end for |
| 9: select the partitioning scheme with the minimum sum |
| 10: return the selected partitioning scheme |
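The loop structure of Algorithm 1 can be sketched in a few lines. In this sketch, `greedy_partition` is a simple stand-in for the multi-level graph partitioning algorithm and is not the partitioner used by NeuMap. The core capacity, the layer layout, and the traffic values are hypothetical.

```python
import math


def greedy_partition(neurons, traffic, capacity):
    """Stand-in partitioner: grow a cluster by repeatedly adding the
    neuron that exchanges the most traffic with it."""
    unassigned = list(neurons)
    clusters = []
    while unassigned:
        cluster = [unassigned.pop(0)]
        while unassigned and len(cluster) < capacity:
            def gain(n):
                return sum(traffic.get((a, n), 0.0) + traffic.get((n, a), 0.0)
                           for a in cluster)
            best = max(unassigned, key=gain)
            unassigned.remove(best)
            cluster.append(best)
        clusters.append(cluster)
    return clusters


def cut_traffic(clusters, traffic):
    """Sum of spike firing rates on synapses that cross cluster borders."""
    owner = {n: c for c, members in enumerate(clusters) for n in members}
    return sum(rate for (pre, post), rate in traffic.items()
               if owner[pre] != owner[post])


def local_partition(layers, traffic, capacity):
    """Try every sub-network size and keep the scheme with the least cut."""
    best_clusters, best_cut = None, math.inf
    num_layers = len(layers)
    for size in range(1, num_layers + 1):
        clusters = []
        for start in range(0, num_layers, size):  # ceil(L / size) groups
            sub_neurons = [n for layer in layers[start:start + size]
                           for n in layer]
            members = set(sub_neurons)
            # Only synapses inside the sub-network guide its partitioning.
            sub_traffic = {e: r for e, r in traffic.items()
                           if e[0] in members and e[1] in members}
            clusters += greedy_partition(sub_neurons, sub_traffic, capacity)
        cut = cut_traffic(clusters, traffic)
        if cut < best_cut:
            best_clusters, best_cut = clusters, cut
    return best_clusters, best_cut


layers = [[0, 1], [2, 3], [4, 5]]
traffic = {(0, 2): 0.9, (0, 3): 0.1, (1, 2): 0.1,
           (1, 3): 0.8, (2, 4): 0.5, (3, 5): 0.4}
clusters, cut = local_partition(layers, traffic, capacity=3)
print(clusters, cut)
```

Treating every layer as its own sub-network cuts all synapses in this toy network, whereas grouping all three layers lets neurons that communicate heavily share a cluster, so the loop keeps the latter scheme.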

After selecting the partitioning scheme, we calculate the spike communication frequency between all pairs of clusters and assign it to the corresponding edge of the partitioned graph.
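As a sketch of this aggregation, the neuron-level firing rates can be summed per ordered pair of clusters. The function name `cluster_edges` and the example values are hypothetical.

```python
from collections import defaultdict


def cluster_edges(clusters, traffic):
    """Aggregate neuron-to-neuron firing rates into cluster-to-cluster edges.

    clusters -- list of clusters, each a list of neuron indices
    traffic  -- dict {(pre, post): spike firing rate of the synapse}
    """
    owner = {n: c for c, members in enumerate(clusters) for n in members}
    edges = defaultdict(float)
    for (pre, post), rate in traffic.items():
        src, dst = owner[pre], owner[post]
        if src != dst:  # synapses inside one cluster never reach the NoC
            edges[(src, dst)] += rate
    return dict(edges)


clusters = [[0, 2, 4], [1, 3, 5]]
traffic = {(0, 2): 0.9, (0, 3): 0.1, (1, 2): 0.1,
           (1, 3): 0.8, (2, 4): 0.5, (3, 5): 0.4}
edges = cluster_edges(clusters, traffic)
print(edges)
```

Only the two synapses that cross the cluster border contribute, one in each direction.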

3.3. Mapping

After an SNN is divided into multiple clusters, the next step is to map all the clusters to the multicore neuromorphic hardware. The NoC-based neuromorphic hardware can be represented as a graph in which $C$ is the set of neuromorphic cores and $I$ is the set of physical links between those cores. Mapping an SNN onto the neuromorphic hardware is defined as a one-to-one function from the set of partitioned clusters to the set of cores, so that each cluster is assigned to a distinct core.

Different mapping schemes lead to different utilizations of interconnect segments, which impacts both energy consumption and spike latency.

Figure 8 shows an SNN that has been partitioned into three clusters and neuromorphic hardware with nine cores arranged in a $3 \times 3$ mesh topology. This case uses the X–Y routing algorithm, a deterministic dimension-ordered routing algorithm. The number attached to each edge is the number of spike messages passing through the physical link. There are three candidate mapping schemes for the partitioned SNN on the right-hand side of Figure 8. One of the three schemes has a higher maximum link workload than the other two, which share the same maximum link workload. Of these two, one maps the clusters to distant cores, which leads to higher spike latency and energy consumption on the NoC. Therefore, the remaining scheme, which combines the lower maximum link workload with closely placed clusters, is the best mapping scheme among the three candidates.
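The link workloads of a candidate mapping can be computed with a short sketch of X–Y routing on a mesh. The traffic values and the two mappings below are hypothetical and are not the ones drawn in Figure 8. Cores are addressed by assumed `(x, y)` coordinates.

```python
from collections import defaultdict


def xy_route(src, dst):
    """Directed links visited by X-Y routing: along X first, then along Y."""
    (x, y), (dx, dy) = src, dst
    links = []
    while x != dx:
        nx = x + (1 if dx > x else -1)
        links.append(((x, y), (nx, y)))
        x = nx
    while y != dy:
        ny = y + (1 if dy > y else -1)
        links.append(((x, y), (x, ny)))
        y = ny
    return links


def link_loads(mapping, cluster_traffic):
    """Number of spike messages carried by every physical link."""
    loads = defaultdict(float)
    for (a, b), spikes in cluster_traffic.items():
        for link in xy_route(mapping[a], mapping[b]):
            loads[link] += spikes
    return dict(loads)


cluster_traffic = {(0, 1): 10, (0, 2): 6, (1, 2): 4}
compact = {0: (0, 0), 1: (1, 0), 2: (0, 1)}   # clusters in one corner
in_a_row = {0: (0, 0), 1: (1, 0), 2: (2, 0)}  # clusters along one row

max_compact = max(link_loads(compact, cluster_traffic).values())
max_row = max(link_loads(in_a_row, cluster_traffic).values())
print(max_compact, max_row)  # prints: 10.0 16.0
```

Placing the three clusters in a row forces two flows onto the same link, so its maximum link load is higher than that of the compact placement.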

Communication latency and energy consumption are two main concerns of the on-chip domain. Therefore, the spike latency and energy consumption are the most direct and effective optimization goals. Unfortunately, the evaluation of spike latency and energy consumption is time-consuming because those metrics should be obtained by simulation in the software simulator or the real hardware.

Placing communicating clusters in close proximity will decrease the energy consumption and the congestion probability on NoC. Furthermore, compared with spike latency and energy consumption, the calculation of the average hop of all spike messages takes less time. Therefore, in this paper, the average hop is adopted as one of the optimization objectives.

On the other hand, an unbalanced link load distribution may cause severe local congestion on individual links of the NoC. Hence, balancing the link load is selected as another optimization goal in this paper. Instead of balancing the link loads directly, we minimize the maximum link load. Once the partitioning scheme is determined, the total number of spike messages on the NoC is constant. The maximum possible number of spike messages on a single link is this total, and the minimum is 0. Minimizing the maximum link load narrows the gap between the maximum and minimum link loads, which balances the link load indirectly.

The two optimization objectives can therefore be stated as follows. The first objective is to minimize the average hop, i.e., to find, among the candidate mapping schemes, the $i$th scheme whose average hop is the smallest. The second objective is to minimize the maximum link load, i.e., to find the $i$th mapping scheme whose maximum link load is the smallest.
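The average hop objective is cheap to evaluate, as the following sketch shows. It assumes X–Y routing on a mesh, so that the hop count between two cores equals their Manhattan distance (counting links rather than routers), and it weights each cluster pair by its number of spike messages. The function name and the example values are hypothetical.

```python
def average_hop(mapping, cluster_traffic):
    """Spike-weighted average hop count of a mapping on a mesh.

    mapping         -- dict {cluster: (x, y) core coordinate}
    cluster_traffic -- dict {(src cluster, dst cluster): spike messages}
    """
    total_spikes = sum(cluster_traffic.values())
    if total_spikes == 0:
        return 0.0
    weighted = 0.0
    for (a, b), spikes in cluster_traffic.items():
        (ax, ay), (bx, by) = mapping[a], mapping[b]
        # Under X-Y routing the path length is the Manhattan distance.
        weighted += spikes * (abs(ax - bx) + abs(ay - by))
    return weighted / total_spikes


cluster_traffic = {(0, 1): 10, (0, 2): 6, (1, 2): 4}
compact = {0: (0, 0), 1: (1, 0), 2: (0, 1)}
in_a_row = {0: (0, 0), 1: (1, 0), 2: (2, 0)}
print(average_hop(compact, cluster_traffic))   # 24 / 20 = 1.2
print(average_hop(in_a_row, cluster_traffic))  # 26 / 20 = 1.3
```

No simulation is required: the objective depends only on the cluster-level traffic and the core coordinates, which is why it is much cheaper to evaluate than latency or energy.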

In this paper, we employ a meta-heuristic algorithm, the Tabu Search (TS) algorithm [32], to search for the best mapping scheme. For a given partitioned SNN and target neuromorphic hardware, the number of candidate mapping schemes grows rapidly with the number of clusters and cores. Iterating over all mapping schemes is time-consuming, especially when the SNN and the neuromorphic hardware are large. Furthermore, when there are more alternative mapping schemes, the search algorithm is more likely to fall into a local optimum.
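Because the mapping is one-to-one, the number of schemes for $n$ clusters on $|C|$ cores is $|C|!/(|C|-n)!$. The short sketch below evaluates this count; the core and cluster numbers are illustrative assumptions, apart from the 4096 cores of TrueNorth mentioned in the introduction.

```python
import math


def count_mappings(num_cores, num_clusters):
    """Number of one-to-one assignments of clusters to distinct cores."""
    return math.perm(num_cores, num_clusters)


full_mesh = count_mappings(9, 3)  # 3 clusters on a 3 x 3 mesh
reduced = count_mappings(4, 3)    # the same clusters in a 2 x 2 region
print(full_mesh, reduced)         # prints: 504 24

# With 4096 cores and only 16 clusters the count has 58 digits.
print(len(str(count_mappings(4096, 16))))
```

Restricting even this tiny example to a 2 × 2 region shrinks the number of candidates by a factor of 21.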

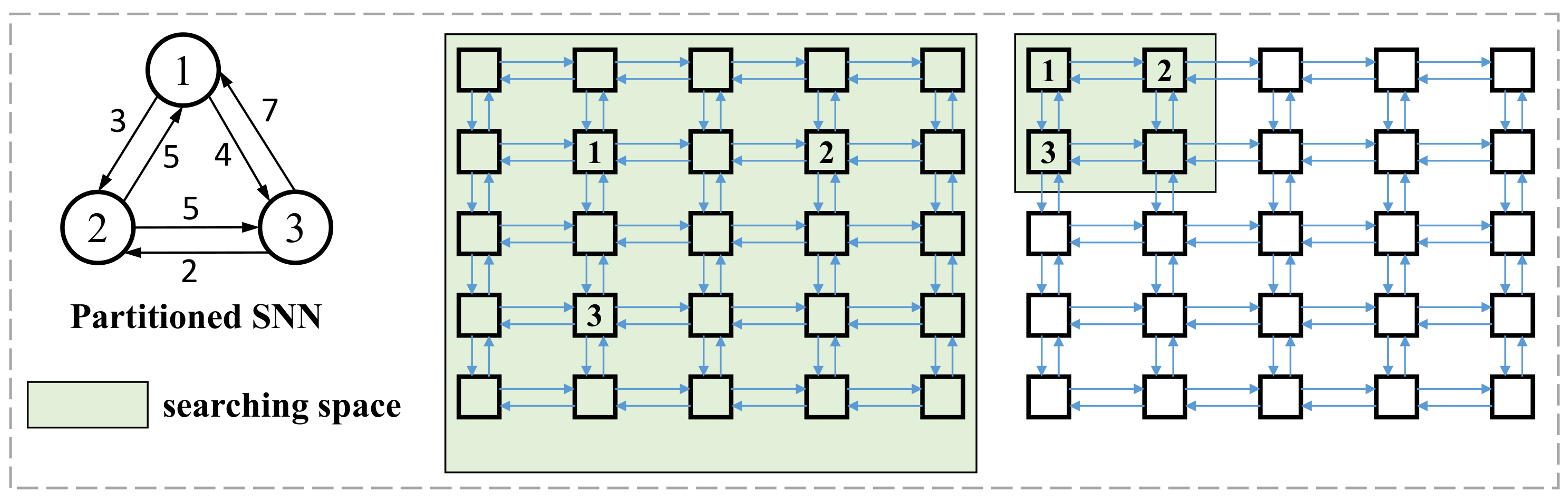

To avoid being trapped in a local optimum, we first reduce the search space. Figure 9 shows two search spaces in the mapping stage. If the search space is set as the entire neuromorphic hardware (shown in the middle subfigure), the mapping algorithm is easily trapped in a local optimum. In the right subfigure of Figure 9, the search space is set as a square region in the upper-left corner, which leads to a better mapping scheme. The reduction in the search space not only helps to find a better mapping scheme but also decreases the time consumed in the mapping stage. In this paper, the search space is set as such a square region of cores. After setting the search space, we use the TS algorithm, with the average hop and the maximum link load as its two fitness functions, to search for the best mapping scheme.
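A compact tabu search over a reduced square region might look like the sketch below. Several simplifications are assumptions rather than NeuMap's implementation: the region side is taken as the ceiling of the square root of the number of clusters, the two fitness functions are replaced by a single spike-weighted hop count, and a move swaps the cores of two slots. All names and parameters are hypothetical.

```python
import itertools
import math
import random


def weighted_hops(slots, traffic):
    """Spike-weighted Manhattan distance; slots[i] is the core of cluster i."""
    return sum(s * (abs(slots[a][0] - slots[b][0]) +
                    abs(slots[a][1] - slots[b][1]))
               for (a, b), s in traffic.items())


def tabu_search(num_clusters, traffic, iterations=100, tenure=3, seed=0):
    rng = random.Random(seed)
    side = math.ceil(math.sqrt(num_clusters))  # assumed side of the region
    # slots[i] is the core of cluster i; slots beyond num_clusters are free.
    slots = [(x, y) for y in range(side) for x in range(side)]
    rng.shuffle(slots)
    best, best_cost = slots[:], weighted_hops(slots, traffic)
    tabu_until = {}                            # move -> last tabu iteration
    moves = [(i, j) for i, j in itertools.combinations(range(len(slots)), 2)
             if i < num_clusters]              # skip swaps of two free cores
    for it in range(iterations):
        candidates = []
        for i, j in moves:
            neighbour = slots[:]
            neighbour[i], neighbour[j] = neighbour[j], neighbour[i]
            c = weighted_hops(neighbour, traffic)
            # Aspiration: a tabu move is allowed if it beats the best so far.
            if tabu_until.get((i, j), -1) < it or c < best_cost:
                candidates.append((c, (i, j), neighbour))
        if not candidates:
            continue
        # Take the best admissible neighbour, even if it is worse.
        c, move, slots = min(candidates, key=lambda t: t[0])
        tabu_until[move] = it + tenure
        if c < best_cost:
            best, best_cost = slots[:], c
    return {k: best[k] for k in range(num_clusters)}, best_cost


traffic = {(0, 1): 10, (0, 2): 6, (1, 2): 4}
mapping, total_hops = tabu_search(3, traffic)
print(mapping, total_hops)
```

For three clusters the region is 2 × 2, so exactly one cluster pair must sit on a diagonal. The search places the pair with the least traffic there, which gives a weighted hop count of 10 + 6 + 2 · 4 = 24.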

5. Results and Discussion

In this section, we show all experimental results, including the accuracy of the calculated spike firing rates, the number of spike messages on NoC, the average hop of spike messages, the spike latency on NoC, and the energy consumption on NoC.

5.1. Accuracy of the Calculated Spike Firing Rates

We count the spikes fired at different layers of different applications when executing the applications. Figure 10 and Figure 11 show the calculated spike firing rates and the actual spike counts of two different layers from two applications, CNN-Fashion-MNIST and LeNet-5-CIFAR10. It can be seen that the calculated spike firing rates are nearly consistent with the actual spike counts. An accurate calculation of a neuron's spike firing rate requires that the spike firing rates of its presynaptic neurons are computed accurately. Therefore, NeuMap first counts the actual spikes of the input neurons, which ensures the accuracy of the input layer. After that, NeuMap calculates the spike firing rates of the other neurons top-down.

Prior works, such as SNEAP, SpiNeMap, and PSOPART, obtain the communication patterns of an SNN by simulating the SNN in a software simulator. Researchers need to be familiar with the APIs of the specific simulator and reproduce the SNN before the simulation. We can get rid of the simulating process and obtain the communication patterns by calculating the spike firing rates using representative data.

5.2. Partitioning Performance

In the partitioning stage, all evaluated methods try to minimize the spike communication between the partitioned clusters while meeting the hardware resource constraints.

We illustrate the total number of spike messages in Figure 12. Compared with SpiNeMap, SNEAP has an average 63% lower spike count. This improvement is due to the ML algorithm outperforming the KL algorithm. The KL algorithm arbitrarily distributes neurons to $K$ clusters on initialization. Next, three random exchange strategies are applied to fine-tune the clusters to minimize the number of spikes between clusters. The ML algorithm iteratively folds two adjacent nodes with high-frequency communication into a new node. Compared with the KL algorithm, the ML algorithm eliminates more spike messages.

Compared with SNEAP, NeuMap has an average 7% lower spike count. Both SNEAP and NeuMap partition the SNNs using the multi-level graph partitioning algorithm. Unlike SNEAP, NeuMap exploits the local property of connections and divides the entire network into several sub-networks. The partition is applied to each sub-network, which avoids the dispersion of neurons from adjacent layers. It should be noted that in the MLP-MNIST and MLP-Fashion-MNIST applications, NeuMap achieves a 9% and 37% reduction in spike messages, respectively. This is because the MLP is a synapse-intensive network: the neurons of the same layer are easily distributed to many clusters when the entire network is partitioned directly.

5.3. Mapping Performance

The hop of one spike message is the number of routers from the source core to the destination core. We illustrate the average hop of all spike messages in Figure 13. NeuMap significantly reduces the average hop for all evaluated applications. The three evaluated methods employ different meta-heuristic algorithms to search for the best mapping scheme: SpiNeMap, SNEAP, and NeuMap employ the particle swarm optimization, simulated annealing, and tabu search algorithms, respectively. All three are neighborhood search algorithms that aim to obtain the global optimum from the solution space. Unfortunately, as the solution space grows, the probability of those algorithms falling into a local optimum increases.

Both SpiNeMap and SNEAP treat all neuromorphic cores as the search space, which makes them easily trapped in a local optimum. Furthermore, the probability of being trapped in a local optimum is greater when the size of the neuromorphic hardware far exceeds the size of the SNN, as is the case for MLP-MNIST and MLP-Fashion-MNIST. An effective way to avoid being trapped in a local optimum is to narrow the solution space. NeuMap sets the search space as a square region that is guaranteed to accommodate all partitioned clusters. The search space therefore depends only on the size of the SNN. As a result, even if the neuromorphic cores greatly outnumber the partitioned clusters, NeuMap can find a better mapping solution than SpiNeMap and SNEAP. The reduction in average hop improves both the spike latency and the energy consumption on the NoC.

5.4. Spike Latency on NoC

Figure 14 reports the spike latency of the six applications for the three evaluated approaches normalized to SpiNeMap. We make the following two observations.

First, the average spike latency of SNEAP is 42% lower than that of SpiNeMap. The main reason is that SNEAP eliminates more spike messages than SpiNeMap, which alleviates the NoC congestion and, consequently, decreases the time to transmit the spike packets from the source core to the destination core. Second, NeuMap has the lowest average spike latency among all the evaluated methods (12% lower than SNEAP, 55% lower than SpiNeMap). Three reasons account for this improvement. Firstly, NeuMap eliminates the most spike messages among the three methods by using a local partitioning strategy. Secondly, in the mapping stage, NeuMap narrows the search space, which avoids being trapped in a local optimum. Thirdly, both the maximum link load and the average hop are adopted as optimization objectives in the search process. Reducing the maximum link load relieves local congestion and indirectly balances the load of all physical links. Decreasing the average hop shortens the route path from the source core to the destination core. Furthermore, a short route path covers fewer physical links, which decreases the congestion probability of the entire NoC.

5.5. Energy Consumption on NoC

The energy consumption on the NoC is the total energy consumed in transmitting the spike messages from the source core to the destination core. Figure 15 illustrates the energy consumption of all the evaluated applications for the three evaluated methods, normalized to SpiNeMap. We make the following two observations.

First, SNEAP has an average 67% lower energy consumption than SpiNeMap. This reduction is because SNEAP eliminates more spike messages than SpiNeMap. Second, NeuMap has the lowest energy consumption of all the evaluated approaches (on average, 84% lower than SpiNeMap and 17% lower than SNEAP). Two reasons are responsible for this improvement. Firstly, NeuMap exploits the localized connections and eliminates the most spike messages among the three mapping methods. Secondly, NeuMap narrows the search space before seeking the best mapping scheme. The reduction in the search space helps NeuMap to avoid falling into a local optimum and increases the probability of obtaining a better mapping scheme. As shown in Section 5.3, NeuMap significantly reduces the average route path of spike messages, which is the main reason for the reduction in power dissipation on the NoC.

5.6. Performance Comparison on Recurrent SNN

The liquid state machine (LSM) was first proposed by Maass [37] and is mainly composed of the input, liquid, and readout layers. The synapses within the liquid layer are randomly generated and remain unchanged, leading to many recurrent connections in the liquid layer.

We use Brian2 to create two LSM networks (800 excitatory and 200 inhibitory neurons in the liquid layer) with the LIF model. NMNIST [38] and FSDD (https://github.com/Jakobovski/free-spoken-digit-dataset, accessed on 26 July 2022), two spike-based datasets, are fed into the two LSM networks, respectively.

Table 2 shows the comparison between the three mapping methods. We compare the number of spike messages on the NoC under four partitioning methods: SpiNeMap, SNEAP, NeuMap, and random partition. As shown in Table 2, the number of spike messages is the same for the four partitioning methods. This is because each neuron in the LSM has a large number of connections to other neurons and there are many recurrent connections. After the partition, the postsynaptic neurons of each neuron are distributed across all clusters.

In terms of the spike latency and energy consumption on NoC, NeuMap is superior to SpiNeMap and SNEAP. This is because NeuMap narrows the solution space and searches for a better mapping scheme than SpiNeMap and SNEAP.

6. Conclusions and Future Work

In this work, we introduce NeuMap, a toolchain to map SNN-based applications to neuromorphic hardware. NeuMap calculates the spike firing rates of all neurons to obtain communication patterns, which simplifies the mapping process. NeuMap then makes use of the local nature of connections and aggregates adjacent layers into a sub-network. The partition is only applied to each sub-network, which reduces the dispersion of neurons from adjacent layers. Finally, NeuMap employs a meta-heuristic algorithm to search for the best mapping scheme in the narrowed search space. We evaluated NeuMap using six SNN-based applications. We showed that, compared to SpiNeMap and SNEAP, NeuMap reduces average energy consumption by 84% and 17% and has 55% and 12% lower spike latency, respectively. In this paper, the calculation of the spike firing rate is only applied to SNNs with a feed-forward topology, such as spiking convolutional neural networks.

In the future, we will explore other topologies, such as the recurrent topology. In addition, other meta-heuristic algorithms, such as the hybrid harmony search algorithm [39], can be used to find the best mapping scheme.