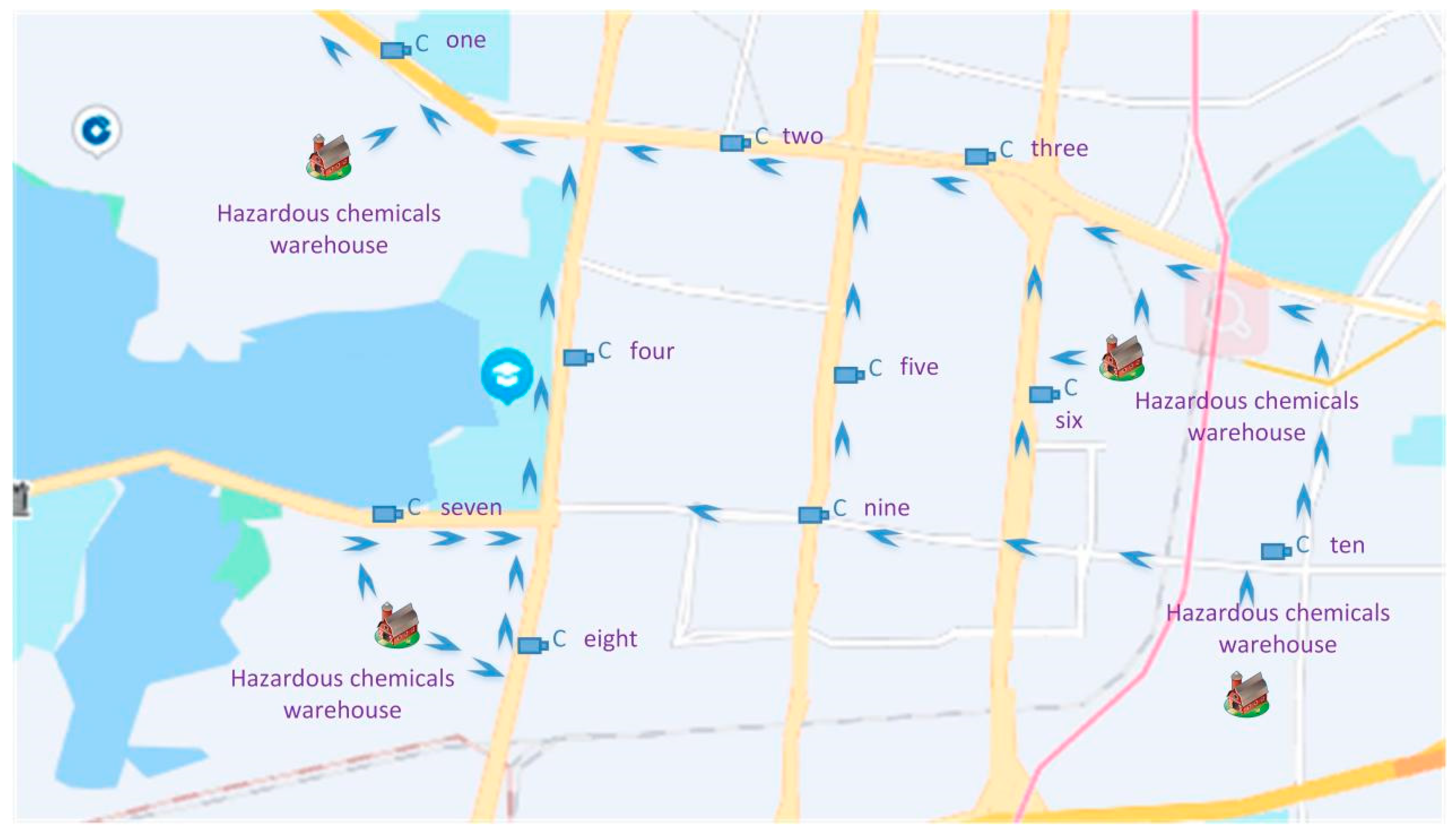

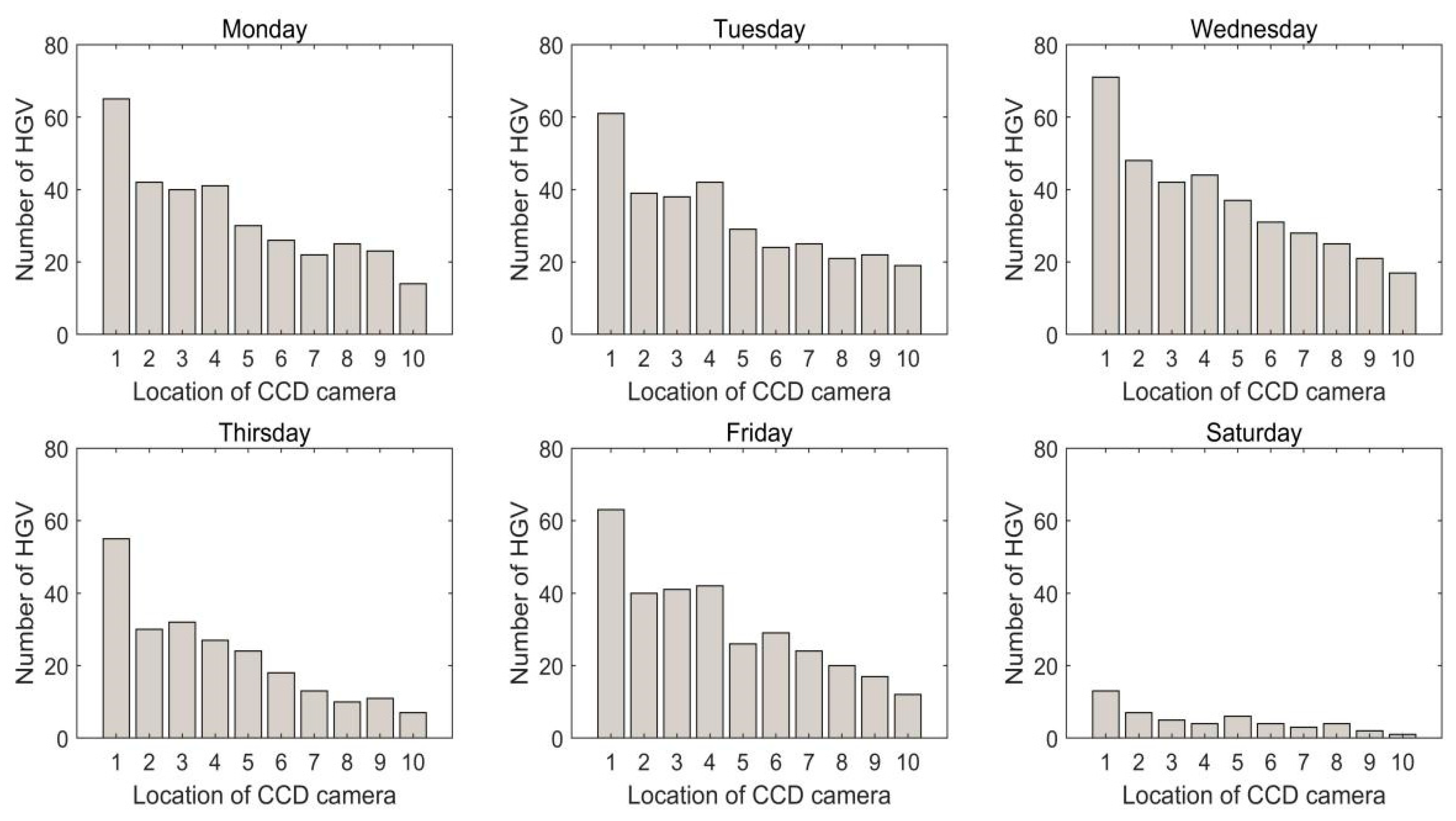

The safe road transportation of hazardous goods bears on the safety of people's lives and property, as well as on national economic development and social stability. Hazardous goods are at risk throughout the journey, from leaving the warehouse to arriving at the destination by vehicle. It is therefore particularly important to supervise the transportation process of hazardous goods vehicles. This supervision covers vehicle travel dynamics, the frequency with which vehicles pass through a given place, and accidents. Dynamic supervision of vehicle travel is realized mainly through GPS positioning of the hazardous goods vehicle. The frequency of passing through a given place and accident conditions can be obtained from the number of times, and the continuous length of time, that the vehicle is detected by a camera. Environmental factors such as lighting conditions, partial occlusion, and cluttered backgrounds greatly affect the accuracy of hazardous goods vehicle detection. To improve the reliability and accuracy of hazardous goods vehicle detection, researchers have carried out a great deal of work.
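To make the camera-based supervision idea concrete, the following is a minimal sketch of how pass frequency and continuous detection time could be derived from a list of detection timestamps at one camera. It is an illustration only, not the method used in this paper: the function name `summarize_detections`, the `max_gap` parameter, and its default of 2 seconds are assumptions introduced here.

```python
from typing import List, Tuple


def summarize_detections(
    timestamps: List[float],
    max_gap: float = 2.0,  # assumed threshold (seconds); not from the paper
) -> Tuple[int, float]:
    """Group detection timestamps (in seconds) into separate passes.

    Consecutive detections at most `max_gap` seconds apart are treated as one
    continuous sighting. Returns (number of passes, longest continuous
    detection time in seconds).
    """
    if not timestamps:
        return 0, 0.0

    times = sorted(timestamps)
    passes = 1
    start = prev = times[0]
    longest = 0.0

    for t in times[1:]:
        if t - prev > max_gap:
            # The previous sighting has ended; record its duration.
            longest = max(longest, prev - start)
            passes += 1
            start = t
        prev = t

    # Close the final sighting.
    longest = max(longest, prev - start)
    return passes, longest
```

Under these assumptions, the pass count approximates how often a vehicle goes by a given camera, while an unusually long continuous sighting could be flagged for review as a possible stopped vehicle or accident. Any such alert threshold would need to be chosen for the specific road and camera.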

Vehicle detection methods mainly include image-based detection methods and deep learning-based detection methods. The image-based detection method mainly detects vehicle targets through vehicle image features and directional gradient histogram features. For example, Arthi R et al. [

1] used the feature transformation of the image for vehicle classification and detection. Matos F et al. [

2] achieved vehicle detection by analyzing the edge features of vehicle images and combining principal component analysis. Although the vehicle detection method based on image features has low computational complexity and can detect vehicles quickly, it is difficult to detect it in the area where the vehicle has partial occlusion or illumination change. In view of this, Iqbal U et al. [

3] enhanced vehicle image features by fusing Sobel and SIFT features to realize vehicle detection. M.T. Pei et al. [

4] used Sobel edge detection to detect vehicles in parking spaces as well as realized the detection scores of different types of vehicles. S. Ghaffarian [

5] used a classifier based on fuzzy c-means clustering and super parameter optimization to detect vehicles and completed the location of vehicles in a parking space based on the vehicle detection results. Because the image of the vehicle itself has unique texture features, vehicle detection can be realized according to the vehicle texture features [

6]. At present, the main disadvantage of vehicle detection methods based on vehicle image texture features and vehicle edge features is that they are greatly affected by illumination and vehicle integrity. With the continuous development of deep learning [

7,

8,

9,

10], more and more scholars have begun to study vehicle detection based on the deep learning method. X.J. Shen et al. [

11] used a convolutional neural network to train vehicle images and trained models for vehicle detection. X. Xiang et al. [

12] proposed a vehicle detection method based on the Haar–Adaboosting algorithm and convolutional neural network. Tang T et al. [

13] proposed a super region candidate network, which can detect small vehicles photographed by distant cameras. In the process of vehicle detection, the vehicle is easily obscured by other objects. In order to solve the problem that the vehicle can be detected in the case of occlusion, Wang X et al. [

14] introduced countermeasure learning into the process of RCNN target detection, which improves the accuracy of vehicle detection. The advantage of the RCNN algorithm is high detection accuracy, but its detection speed is slow, so it is difficult to implement real-time detection of vehicles. In order to improve the detection efficiency, Lu J et al. [

15] introduced Yolo series algorithms to realize vehicle detection. The detection network is mainly based on an SSD network, which can detect vehicles quickly, but its accuracy is not particularly high. In order to improve the detection accuracy, Cao G et al. [

16] integrated cascade modules and element modules to improve the SSD network and realize high-precision vehicle detection. However, due to the integration of more modules, the detection speed decreases. At present, deep learning [

17,

18,

19] has received extensive attention in the field of vehicle detection. In the process of vehicle detection, a two-time-scale discrete-time system with multiple agents was used to optimize multi-vehicle detection [

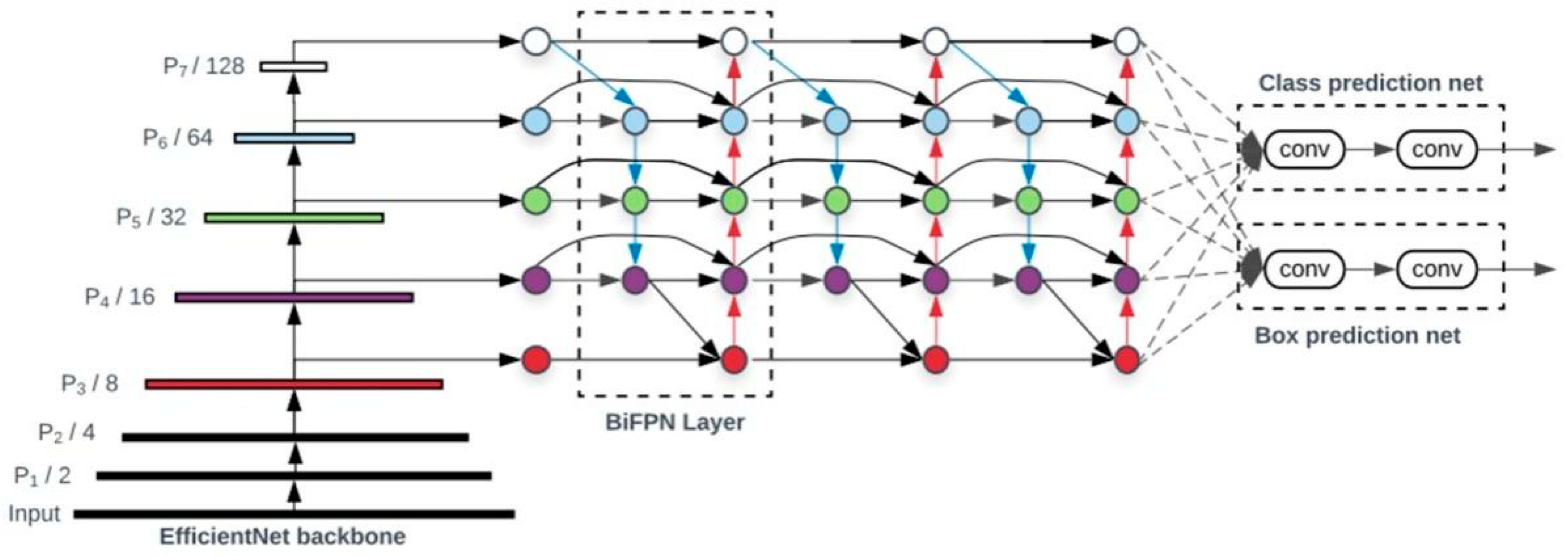

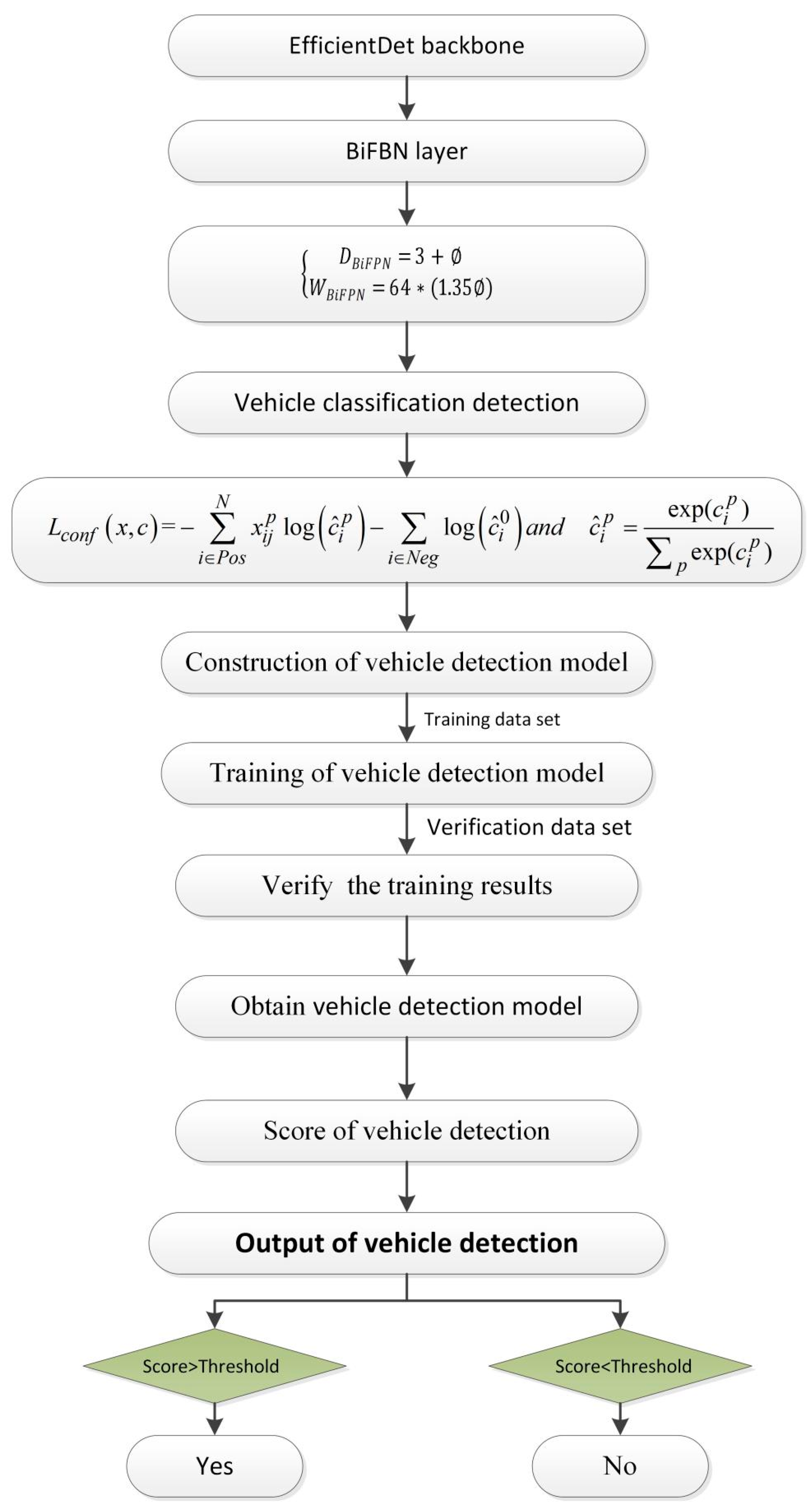

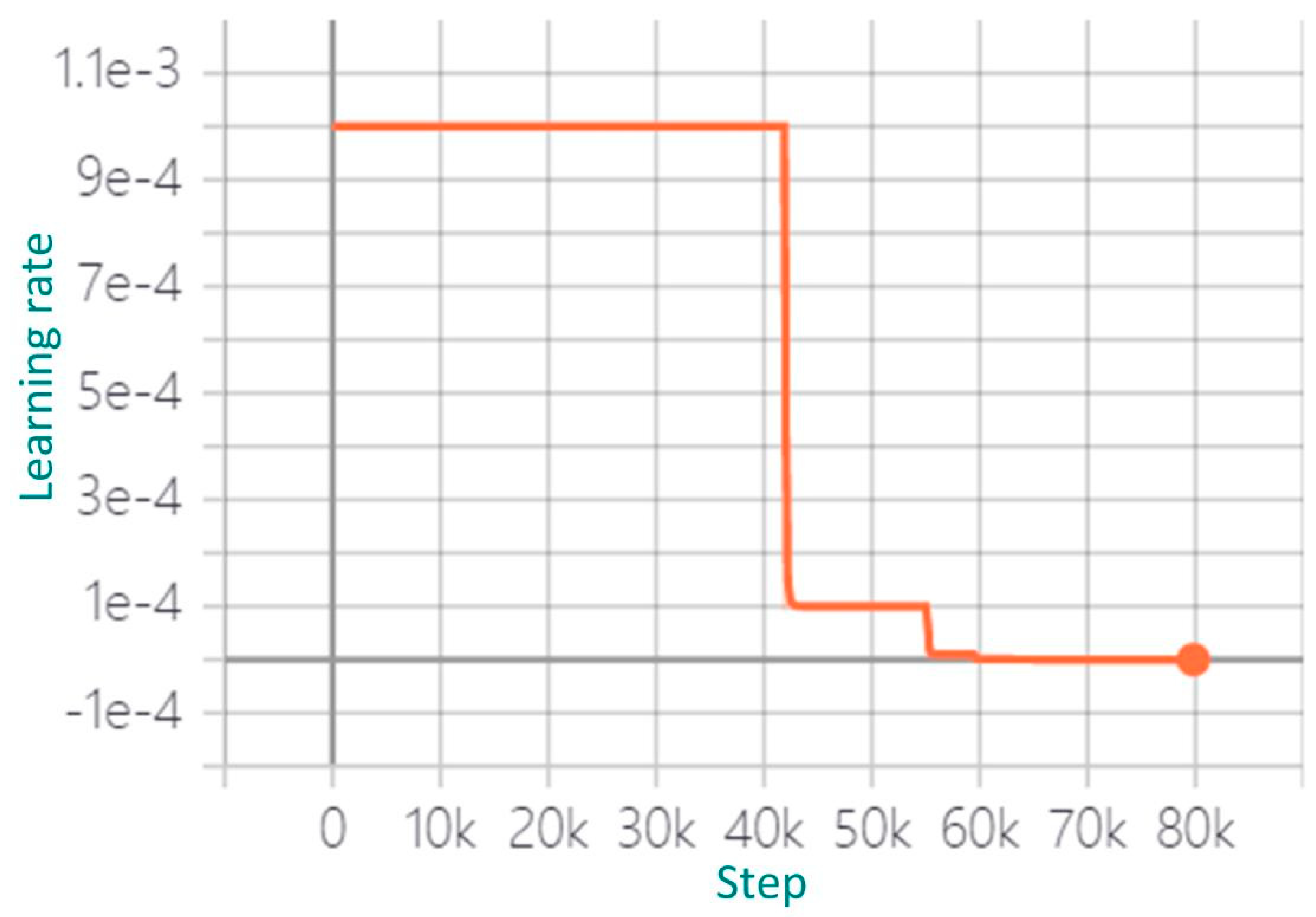

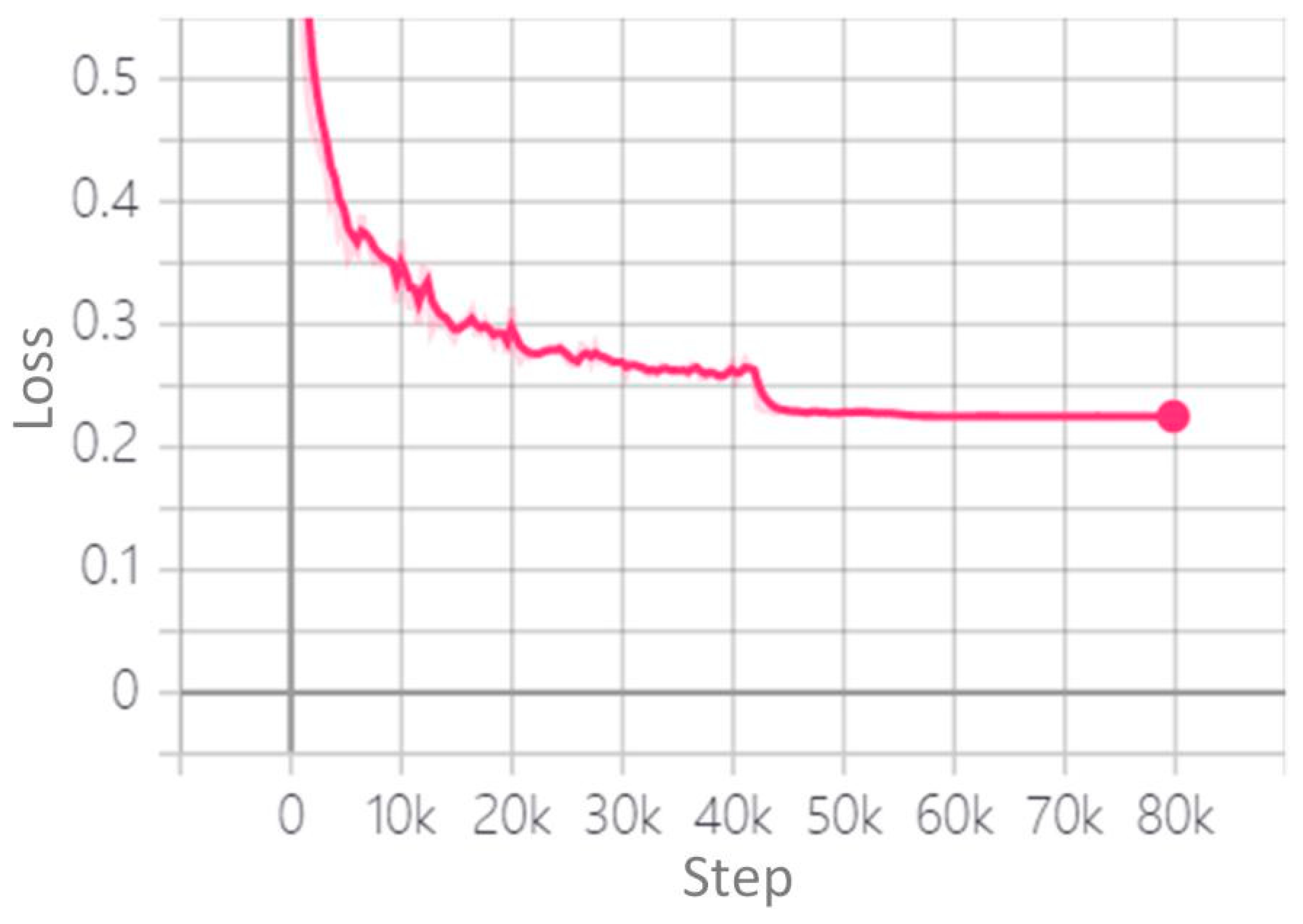

20]. Therefore, this paper will also use the deep learning method to implement vehicle detection. Specifically, we optimize the training stage of the deep learning EfficientDet model and build a phased training model to realize fast and accurate vehicle detection.