Abstract

This paper proposes a cooperative search method for multiple UAVs, formulated as a cooperative game with incomplete information, to address the low efficiency of multi-UAV task execution. To improve search efficiency, the Consensus-Based Bundle Algorithm (CBBA) is applied to assign task areas to each UAV. Independent Deep Reinforcement Learning (IDRL) is then used to solve for the Nash equilibrium and improve cooperation among UAVs. The proposed reward function guides UAVs along paths with higher reward values while avoiding collisions between UAVs during flight. Finally, extensive experiments compare the proposed method with other algorithms. Simulation results show that the proposed method obtains more rewards than the other algorithms in the same period of time.

1. Introduction

Unmanned aerial vehicles (UAVs), or drones, have developed rapidly and are now widely used [1]. Equipped with radar, cameras and other equipment, UAVs can be used in military applications [2] such as tracking, positioning and battlefield detection. However, because of its limited fuel load, a single UAV can hardly search a large area. Compared with a single UAV, multiple UAVs can perform more complex tasks, and sharing information and searching cooperatively improves the efficiency of task execution. In such a search task, the path planning of the multi-UAV system is a crucial problem [3].

Many multi-UAV path planning algorithms have been proposed for this problem. For instance, hierarchical decomposition is one effective approach: a clustering algorithm is first used for multi-UAV task assignment, and path planning is then performed based on the Voronoi diagram [4] or a genetic algorithm [5]. However, these path planning algorithms require prior knowledge of the environment, and centralized task assignment algorithms require a control center to communicate with the UAVs, which makes them unsuitable for dynamic scenarios. Multi-agent reinforcement learning (MARL), on the other hand, is an effective way to solve the above problem. The essence of MARL is a stochastic game. MARL combines the Nash strategies of each state into a strategy for an agent while constantly interacting with the environment to update the Q-value function in each state of the game. The Nash equilibrium solution in MARL can replace the optimal solution to obtain an effective strategy [6].

In this paper, we exploit Independent Deep Reinforcement Learning (IDRL) to solve the problem of low efficiency when multiple UAVs perform tasks simultaneously. The Consensus-Based Bundle Algorithm (CBBA) [7] is first used to assign tasks to multiple UAVs under constraints of time and fuel consumption. Each UAV then chooses the best strategy to complete its tasks based on the states and actions of other UAVs. A new reward function is developed to guide each UAV toward paths with high value and to penalize collisions between UAVs.

The main contributions of this paper are summarized as follows:

- (1) Unlike centralized path planning algorithms, which require a central controller for task allocation, the algorithm designed in this paper is distributed. UAVs communicate with each other for task allocation and path planning in a more flexible way.

- (2) A cooperative search method is proposed. Before selecting its next action, each UAV adopts the corresponding cooperative method according to the incomplete information it has obtained, which improves the efficiency of task execution.

- (3) A new reward function is proposed to avoid collisions between UAVs while guiding UAVs to target points.

2. Related Work

In this section, we review the literature on multi-UAV target assignment and path planning (MUTAPP), identify the strengths and weaknesses of existing approaches, and clarify the remaining gaps and challenges for further investigation.

The MUTAPP problem is NP-hard in essence, which implies that no polynomial-time exact algorithm is known for it. However, small- and medium-sized instances can still be solved. Hierarchical decomposition is one of the effective methods to solve MUTAPP [8]; it decomposes the MUTAPP problem into task assignment and path planning.

At present, MUTAPP is mainly divided into the traditional multiple traveling salesman problem (MTSP) and objective function optimization problems. For the traditional MTSP, Wang et al. [9] use genetic algorithms for task assignment and cubic spline interpolation for path planning. In [10], Liu et al. use the Overall Partition Algorithm (OPA) for task assignment and cycle transitions to generate shortest paths. Simulation results show that the proposed algorithm outperforms traditional GA-based algorithms for solving MTSP problems. In [11], Dubins curves are used to model the UAV kinematics so that the generated path is more realistic, and an improved particle swarm optimization algorithm based on heuristic information is proposed to solve the MTSP. The results show that the proposed algorithm can generate paths in a small number of iterations. However, in practical applications, a multi-UAV system needs to consider not only the total flight distance but also the efficiency of the task, which is usually evaluated by an objective function.

With a single UAV and no altitude effects, the standard coverage path planning (CPP) problem has been studied extensively in the literature [12,13]. The objective function of CPP is defined as the area of the covered region. Miles et al. [14] propose a rectangle partition relaxation (RPR) algorithm to divide the UAV flight area. In [15], building on the single-UAV algorithm, a density-based sub-region of UAV coverage with a unique role is proposed to optimize the coverage area. Xie et al. [16] provide a mixed-integer programming formulation for CPP and develop two algorithms based on it to solve the TSP-CPP problem. Building on this research, Xie extends the algorithm in [17] and proposes a branch-and-bound-based algorithm to find the optimal route. Although these algorithms continuously improve the UAV coverage area, it is difficult to evaluate the efficiency of UAV execution with a single constraint.

In [18], the objective function of the multi-UAV system is to minimize energy loss. K-means is used to assign tasks to multiple UAVs, and a genetic algorithm is then used to generate specific paths. In [19], a simulated annealing algorithm is used to increase the coverage area of the UAV. Reference [18] uses the more advanced k-means++; the experimental results show that the generated path is shorter than that obtained with k-means. In [20], the Minimum Spanning Tree (MST) is used to generate trajectories, and simulation results show that, compared with other algorithms, the generated trajectories obtain more rewards during task execution. Other algorithms [21,22,23,24,25] also use clustering to solve MUTAPP-related problems. However, clustering algorithms are sensitive to noise points: if a task point is far from the cluster centers, it is assigned to a UAV on its own, which is unrealistic.

Wang et al. [26] use MST to decompose the MTSP into multiple TSPs and then use the ant colony algorithm to solve each TSP. In [27], a fuzzy approach with linear complexity is used to convert the MTSP into several TSPs, and Simulated Annealing (SA) is then used to solve each problem. Similarly, Cheng et al. [28] decouple the MTSP into TSPs and solve the subproblems through sequential convex programming. Reference [29] proposes a task allocation algorithm based on the maximum entropy principle (MEP). Simulation results show that the proposed MEP algorithm outperforms the SA algorithm. Cao et al. [30] introduce the Voronoi diagram method into the ant colony algorithm and obtain a cooperative task scheduling strategy for unmanned aerial vehicles that includes both task allocation and path planning. Compared with clustering algorithms, these algorithms are more flexible in task allocation, and reasonable task allocation reduces the number of tasks performed by each agent, which increases execution efficiency. However, these algorithms do not consider cooperation among agents, which limits their efficacy.

MARL provides a new solution for MUTAPP problems; it models the decision-making process in the multi-agent environment as a stochastic game in which each agent needs to make decisions according to the strategies of other agents. MARL has become a prevalent method for solving multi-agent cooperation problems.

In [31], DRL is used to generate paths for data collection by multiple UAVs without prior knowledge. Reference [32] uses MADDPG for the cooperative control of four agents; the experimental results show that MADDPG performs well in complex environments and successfully learns a multi-agent collaboration strategy. However, because the environment becomes less stable as the number of agents increases, the algorithm has difficulties with the joint action space. In [33], MADDPG is used to control the formation of multiple agents during transportation in order to prevent an agent from colliding with other agents on the way to the target point. Chen et al. [34] use MARL for the collaborative welding of multiple robots, where cooperation between robots likewise serves to prevent collisions between agents.

Han et al. [35] use MADDPG for both task assignment and path planning, and a reward value function is designed to guide the UAV to the target point and avoid collisions between UAVs. In fact, the cooperative approach of avoiding conflict can improve the success rate of task execution but does not directly affect the efficiency of task execution. Also, the proposed algorithm only works in environments where each agent performs one task and cannot be used to solve the multiple traveling salesman problem. Moreover, the performance of value-based reinforcement learning is better than that of policy-based reinforcement learning in the task environment with few actions.

3. Overview of CBBA

In this section, we will review the CBBA algorithm, which is generally divided into two parts: bundle construction and conflict resolution.

3.1. Bundle Construction

In the process, each CBBA agent creates only one bundle and updates it during the allocation process. In the first phase of the algorithm, each agent keeps adding tasks to its bundle set until no other tasks can be added.

During the task assignment process, each agent $i$ needs to store and update the following four information vectors: a bundle $b_i$, the corresponding path $p_i$, the winning agent list $z_i$ and the winning score list $y_i$.

The tasks in the bundle are arranged in the order in which they were added, and the tasks in the path are arranged in the order in which they are best executed. Note that the sizes of $b_i$ and $p_i$ cannot be greater than the maximum assigned task number $L_t$. $S_i^{p_i}$ is defined as the total reward score obtained by agent $i$ performing the tasks along the path $p_i$. In CBBA, adding task $j$ to bundle $b_i$ results in a marginal score increase:

$$c_{ij}[b_i] = \begin{cases} 0, & j \in b_i \\ \max_{n \le |p_i|} S_i^{p_i \oplus_n \{j\}} - S_i^{p_i}, & \text{otherwise} \end{cases}$$

where $|\cdot|$ represents the size of the list and $\oplus_n$ represents the insertion of a new element after the $n$-th element of the vector (in the later part of this article, $\oplus_{end}$ is also used to indicate the addition of a new element at the end of the vector). CBBA's bundle scoring scheme inserts a new task at the position that yields the highest score increase, which becomes the marginal score associated with the task in a given path. Therefore, if the task is already included in the path, it yields no extra score.

The score function is initialized as $S_i^{\emptyset} = 0$, and the path and bundle are recursively updated to

$$b_i = b_i \oplus_{end} \{J_i\}, \qquad p_i = p_i \oplus_{n_{i,J_i}} \{J_i\}$$

where $J_i = \arg\max_j \left(c_{ij}[b_i] \cdot h_{ij}\right)$, $n_{i,J_i} = \arg\max_n S_i^{p_i \oplus_n \{J_i\}}$, $h_{ij} = \mathbb{I}(c_{ij} > y_{ij})$, and $\mathbb{I}(\cdot)$ is an indicator function that has a value of 1 when its argument is true and 0 when it is false. The bundle construction loop continues until $|b_i| = L_t$ or $h_i = 0$.
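The bundle construction phase can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation used in the experiments: the names `best_insertion`, `build_bundle` and `toy_score` are hypothetical, and the toy score (tasks on a line, reward discounted by arrival time) only stands in for the real path score.

```python
from typing import Callable, List, Tuple

Path = List[int]
ScoreFn = Callable[[Path], float]


def best_insertion(path: Path, task: int, score_fn: ScoreFn) -> Tuple[float, int]:
    """Return the largest marginal score gain and the insertion index achieving it."""
    base = score_fn(path)
    best_gain, best_pos = float("-inf"), 0
    for n in range(len(path) + 1):
        gain = score_fn(path[:n] + [task] + path[n:]) - base
        if gain > best_gain:
            best_gain, best_pos = gain, n
    return best_gain, best_pos


def build_bundle(agent_id, tasks, score_fn, winning_bids, winning_agents, max_tasks):
    """Greedy bundle construction for one agent; updates the two winning lists in place."""
    bundle: Path = []
    path: Path = []
    while len(bundle) < max_tasks:
        best = None  # (gain, task, insertion index)
        for j in tasks:
            if j in bundle:
                continue  # a task already in the bundle adds no extra score
            gain, pos = best_insertion(path, j, score_fn)
            # Bid only where this agent beats the current winning score.
            if gain > winning_bids[j] and (best is None or gain > best[0]):
                best = (gain, j, pos)
        if best is None:
            break  # no further task can be added
        gain, j, pos = best
        bundle.append(j)     # bundle keeps the order in which tasks were added
        path.insert(pos, j)  # path keeps the order in which tasks are executed
        winning_bids[j] = gain
        winning_agents[j] = agent_id
    return bundle, path


# Hypothetical toy score: tasks lie on a line, reward decays with arrival time.
TASK_POS = {0: 1.0, 1: 2.0, 2: 5.0}


def toy_score(path: Path) -> float:
    t, pos, total = 0.0, 0.0, 0.0
    for j in path:
        t += abs(TASK_POS[j] - pos)
        pos = TASK_POS[j]
        total += 10.0 * 0.9 ** t
    return total


bids = {j: 0.0 for j in TASK_POS}
winners = {j: None for j in TASK_POS}
bundle, path = build_bundle(1, list(TASK_POS), toy_score, bids, winners, max_tasks=2)
```

With a task limit of 2, the agent first takes the nearest task and then inserts the second-nearest one after it, so the bundle order and the path order coincide in this toy case.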

3.2. Conflict Resolution

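In the conflict resolution phase, three vectors need to be communicated to reach a consensus. The two vectors that have already been introduced are the winning score list $y_i$ and the winning agent list $z_i$. The third vector $s_i$ represents the timestamp of the last information update from each of the other agents. The time vector is updated by:

$$s_{ik} = \begin{cases} \tau_r, & g_{ik} = 1 \\ \max_{m:\, g_{im} = 1} s_{mk}, & \text{otherwise} \end{cases}$$

where $\tau_r$ is the message reception time and $g_{ik} = 1$ indicates that agents $i$ and $k$ are directly connected.

When agent $i$ receives a message from agent $k$, $z_i$ and $s_i$ are used to determine which agent's information about each task is the most up to date. For task $j$, agent $i$ has three possible actions:

- Update: $y_{ij} = y_{kj}$, $z_{ij} = z_{kj}$

- Reset: $y_{ij} = 0$, $z_{ij} = \emptyset$

- Leave: $y_{ij} = y_{ij}$, $z_{ij} = z_{ij}$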

Table 1 in [7] outlines the decision rules for information interaction between agents.

Table 1.

Task area coordinates and ROR of Map (a).

If the elements in the winning score list change due to communication, each agent checks whether the updated or reset tasks were in its bundle. If such a task is in the bundle, then this task and all tasks added to the bundle after it are released:

$$y_{i, b_{in}} = 0, \quad z_{i, b_{in}} = \emptyset, \quad \forall n > \bar{n}_i; \qquad b_{in} = \emptyset, \quad \forall n \ge \bar{n}_i$$

where $b_{in}$ represents the $n$-th element of the bundle, and $\bar{n}_i = \min\{n : z_{i, b_{in}} \ne i\}$. Note that the winning agent and winning score entries of the tasks added after $b_{i, \bar{n}_i}$ are reset, because removing $b_{i, \bar{n}_i}$ can change the scores of all tasks that follow it. After completing the second phase of conflict resolution, the algorithm returns to the first phase and adds new tasks.
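The release step of the conflict resolution phase can be sketched as follows. This is an illustrative sketch only: the function name `release_outbid_tasks` and the use of dictionaries for the winning agent and winning score lists are assumptions made here for readability.

```python
def release_outbid_tasks(agent_id, bundle, path, winning_agents, winning_bids):
    """Release the first task this agent no longer wins and every task added after it."""
    # Index of the first bundle entry whose recorded winner is not this agent.
    first_lost = next(
        (n for n, task in enumerate(bundle) if winning_agents[task] != agent_id),
        None,
    )
    if first_lost is None:
        return list(bundle), list(path)  # nothing was outbid

    kept = bundle[:first_lost]
    # Tasks added later were scored assuming the lost task was in the path,
    # so their winning entries are reset before they are released.
    for task in bundle[first_lost + 1:]:
        winning_bids[task] = 0.0
        winning_agents[task] = None
    new_path = [task for task in path if task in kept]
    return kept, new_path


# Agent 1 holds tasks 3, 5, 7 (added in that order) but has been outbid on task 5.
agents = {3: 1, 5: 2, 7: 1}
scores = {3: 4.0, 5: 6.0, 7: 2.0}
new_bundle, new_path = release_outbid_tasks(1, [3, 5, 7], [5, 3, 7], agents, scores)
```

The entry for the outbid task keeps the information of its new winner, while the later task returns to the unassigned state so that any agent can bid on it again in the next bundle construction round.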

4. IDRL Based Path Planning Algorithm

Independent Reinforcement Learning (IRL) is widely and successfully applied in the field of multi-agent autonomous decision-making. This paper uses IDRL to solve Nash equilibrium in a cooperative game with incomplete information, and each UAV chooses the optimal strategy according to the states and actions of other UAVs to maximize the total rewards.

4.1. System Model

In this paper, we establish a model based on IDRL to enhance the efficiency of task execution through multi-UAV cooperation. We make the following assumptions:

- (1) Any two UAVs with intersecting flight paths can communicate with each other to learn each other's states and actions when the distance between them is less than a threshold. The game between UAVs is therefore a game with incomplete information.

- (2) Each UAV can choose the optimal strategy according to the states and actions of other UAVs, so the game between UAVs is a cooperative game.

- (3) The UAVs do not choose actions at the same time, so the game between UAVs is a dynamic game.

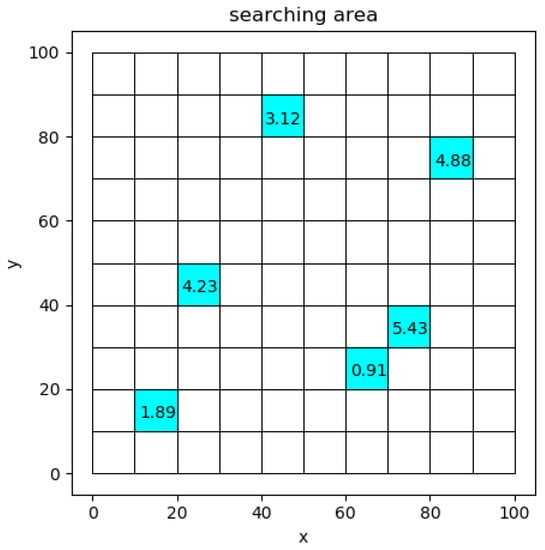

The task environment of multiple UAVs is briefly divided into two-dimensional grids, as shown in Figure 1. The blue part represents the task area to be executed.

Figure 1.

Grid partition of a searching area.

In the cooperative game with incomplete information, the objective function of UAVs is to maximize the search efficiency. ROR and revenue defined in [36] are used to evaluate the search efficiency of UAVs.

The detection function $b(z)$ is used to estimate the detection ability in a probable target grid with time consumption $z$. A common exponential form of the regular detection function is given as:

$$b(z) = 1 - e^{-\varepsilon z}$$

where $\varepsilon$ is a parameter related to the UAV equipment and $z$ represents time consumption.

When a UAV is searching in grid $j$, the revenue function is defined as

$$e(j, z) = p(j)\, b(z) = p(j)\left(1 - e^{-\varepsilon z}\right)$$

where $p(j)$ represents the target probability in grid $j$.

The efficiency of a multi-UAV system performing a search task is assessed by the amount of reward earned per unit of time by the UAVs. Therefore, the ROR of grid $j$ is introduced with the definition:

$$ROR(j, z) = \frac{\partial e(j, z)}{\partial z} = \varepsilon\, p(j)\, e^{-\varepsilon z}$$

where the factor $e^{-\varepsilon z}$ indicates that the ROR value decreases as the search time $z$ increases. In other words, a lower ROR indicates that the area has been searched more thoroughly.
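The three quantities above can be evaluated numerically. The sketch below assumes the exponential detection function $1 - e^{-\varepsilon z}$, with the revenue obtained by weighting it with the target probability and the ROR taken as the time derivative of the revenue; the function names and the sample values are illustrative, not taken from the experiments.

```python
import math


def detection(z: float, eps: float) -> float:
    """Exponential detection function: probability of detection after search time z."""
    return 1.0 - math.exp(-eps * z)


def revenue(p_j: float, z: float, eps: float) -> float:
    """Revenue of searching a grid with target probability p_j for time z."""
    return p_j * detection(z, eps)


def rate_of_return(p_j: float, z: float, eps: float) -> float:
    """ROR: time derivative of the revenue, eps * p_j * exp(-eps * z)."""
    return eps * p_j * math.exp(-eps * z)


# Illustrative values (assumed): target probability 0.8, search capability 0.5.
ror_values = [rate_of_return(0.8, z, 0.5) for z in (0.0, 1.0, 2.0, 4.0)]
```

The list `ror_values` is strictly decreasing, which matches the statement that a grid becomes less rewarding the longer it has been searched.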

We assume that each UAV knows the ROR value of all grids, as shown in Figure 1. The problem is then decomposed into task assignment with CBBA and path planning with DRL.

4.2. Nash Equilibrium in MARL

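In MARL, $V_i(\pi_1, \dots, \pi_n)$ represents the expected reward of the $i$-th agent under the joint strategy $(\pi_1, \dots, \pi_n)$. In a matrix game, if the joint strategy $(\pi_1^*, \dots, \pi_n^*)$ satisfies Equation (9), then the strategy is a Nash equilibrium.

$$V_i(\pi_1^*, \dots, \pi_i^*, \dots, \pi_n^*) \ge V_i(\pi_1^*, \dots, \pi_i, \dots, \pi_n^*), \quad \forall \pi_i \in \Pi_i,\ i = 1, \dots, n \tag{9}$$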
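For intuition, the Nash condition can be checked by enumeration in a small two-player matrix game. The sketch below is restricted to pure strategies (the definition also covers mixed strategies), and the function name and payoff values are hypothetical.

```python
from typing import List, Tuple


def pure_nash_equilibria(
    payoff_1: List[List[float]], payoff_2: List[List[float]]
) -> List[Tuple[int, int]]:
    """Return all pure-strategy profiles (i, j) from which neither player gains by deviating alone."""
    rows, cols = len(payoff_1), len(payoff_1[0])
    equilibria = []
    for i in range(rows):
        for j in range(cols):
            # Player 1 varies its row while player 2's column stays fixed.
            best_for_1 = all(payoff_1[i][j] >= payoff_1[k][j] for k in range(rows))
            # Player 2 varies its column while player 1's row stays fixed.
            best_for_2 = all(payoff_2[i][j] >= payoff_2[i][k] for k in range(cols))
            if best_for_1 and best_for_2:
                equilibria.append((i, j))
    return equilibria


# Team game: both agents receive the same payoff (values are illustrative).
team_payoff = [[2, 0], [0, 1]]
equilibria = pure_nash_equilibria(team_payoff, team_payoff)
```

This team game has two pure equilibria, (0, 0) and (1, 1), of which only the first maximizes the shared payoff; this is why a learning procedure is needed to steer agents toward the better equilibrium.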

The essence of MARL is a stochastic game. MARL combines the Nash strategies of each state into a strategy for an agent and constantly interacts with the environment to update the Q value function in each state of the game.

The stochastic game is defined by a tuple $(n, S, A_1, \dots, A_n, P, R_1, \dots, R_n, \gamma)$, where $n$ represents the number of agents, $S$ is the state space of the environment, $A_i$ is the action space of agent $i$, $P$ is the probability matrix of state transition, $R_i$ is the reward function of agent $i$, and $\gamma$ is the discount factor. For multi-agent reinforcement learning, the goal is to solve the Nash equilibrium strategy in each stage game and combine these strategies.

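The optimal strategy of multi-agent reinforcement learning can be written as $(\pi_1^*, \dots, \pi_n^*)$, and for $\forall s \in S$ it has to satisfy Equation (10).

$$V_i(s, \pi_1^*, \dots, \pi_i^*, \dots, \pi_n^*) \ge V_i(s, \pi_1^*, \dots, \pi_i, \dots, \pi_n^*), \quad \forall \pi_i \in \Pi_i \tag{10}$$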

$Q_i(s, a_1, \dots, a_n)$ represents the action value function. In each stage game of state $s$, the Nash equilibrium strategy is solved by using $Q_i$ as the reward of the game. According to the Bellman equation in reinforcement learning, MARL's Nash strategy can be rewritten as Equation (11).

In a stochastic game, if all agents share the same reward function, the game is called a fully cooperative game or team game. To solve the stochastic game, the stage game at each state needs to be solved, and the reward obtained by taking an action is $Q_i(s, a_1, \dots, a_n)$.

4.3. Path Planning Algorithm Based on IDRL

4.3.1. Environment States

In the cooperative game, UAVs need to choose the optimal strategy according to the state and action of other UAVs. Thus, at timestep k, the state vector of the j-UAV is represented by:

where and represent the abscissa and ordinate of the j-UAV, respectively. represent the coordinates of the nearest UAV, represents the action of the nearest UAV at timestep k. represent the current task coordinates of j-UAV. The value of is 0 or 1, indicating whether the area surrounding the UAV has been searched by other UAVs.
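One way to hold this state in code is a small immutable record that is flattened into a vector before being passed to the network. The field names below are hypothetical labels for the quantities listed above, not the paper's notation.

```python
from dataclasses import astuple, dataclass
from typing import Tuple


@dataclass(frozen=True)
class UAVState:
    """State of one UAV at a timestep (field names are illustrative)."""
    x: int               # abscissa of this UAV
    y: int               # ordinate of this UAV
    nearest_x: int       # abscissa of the nearest UAV
    nearest_y: int       # ordinate of the nearest UAV
    nearest_action: int  # action taken by the nearest UAV at this timestep
    task_x: int          # abscissa of the current task
    task_y: int          # ordinate of the current task
    searched: int        # 0 or 1 flag for whether the surrounding area was searched by others

    def to_vector(self) -> Tuple[float, ...]:
        """Flatten the state into the ordered vector fed to the value network."""
        return tuple(float(v) for v in astuple(self))


state = UAVState(x=3, y=4, nearest_x=5, nearest_y=4, nearest_action=2,
                 task_x=10, task_y=12, searched=0)
vector = state.to_vector()
```

Keeping the record frozen avoids accidental in-place changes when the same state is stored in a replay buffer.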

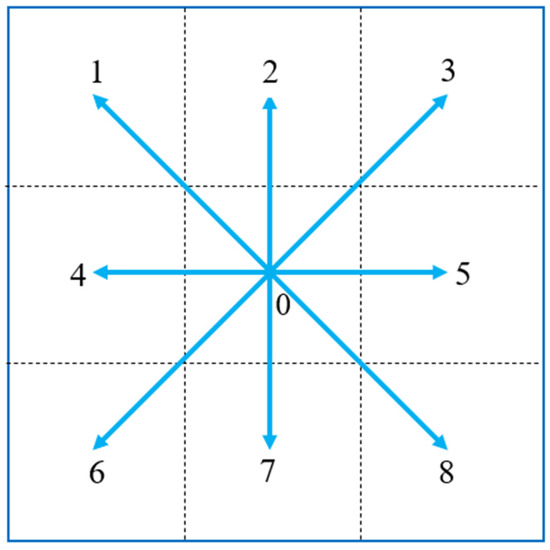

4.3.2. Discrete Action Set

Since the length of the grid in the task environment is much larger than the turning radius of the UAV, it can be assumed that the UAV moves in a straight line in the grid. As shown in Figure 2, when the UAV is in the grid 0, it can perform eight actions to go to the corresponding grid. The numbers in the grids represent eight actions, including: left up, up, right up, left, right, left down, down, and right down.

Figure 2.

The action set of the UAV. The numbers represent the numbers of the 9 grids respectively.
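The eight moves can be encoded as grid offsets. The numbering below simply follows the order in which the actions are listed in the text (1 = left up through 8 = right down), which is an assumption about Figure 2; clamping at the map border is likewise an assumption made for this sketch.

```python
from typing import Dict, Tuple

# Action number -> (dx, dy), with y increasing upwards. Grid 0 is the current cell.
ACTIONS: Dict[int, Tuple[int, int]] = {
    1: (-1, 1),   # left up
    2: (0, 1),    # up
    3: (1, 1),    # right up
    4: (-1, 0),   # left
    5: (1, 0),    # right
    6: (-1, -1),  # left down
    7: (0, -1),   # down
    8: (1, -1),   # right down
}


def step(position: Tuple[int, int], action: int, width: int, height: int) -> Tuple[int, int]:
    """Apply one action and clamp the result so the UAV stays inside the grid."""
    dx, dy = ACTIONS[action]
    x = min(max(position[0] + dx, 0), width - 1)
    y = min(max(position[1] + dy, 0), height - 1)
    return (x, y)


moved = step((3, 3), 1, width=8, height=8)
at_corner = step((0, 0), 6, width=8, height=8)
```

Here `moved` is the cell diagonally up and to the left of (3, 3), and `at_corner` stays at (0, 0) because the move would leave the map.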

4.3.3. Reward Function

The reward function is used to evaluate the quality of the action. In fact, there are many factors that could affect the action selection of UAV, but within the scope of research, the following three factors are mainly considered:

- Choosing the shortest path to the destination.

- Encouraging actions passing high ROR areas.

- Preventing collisions between UAVs.

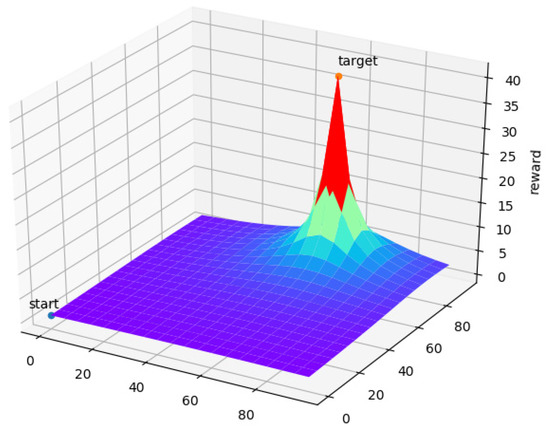

Choosing the shortest path to the target area is not always optimal for path planning, but it still has a very high priority in the process. To prevent the reward from being too sparse and to speed up the convergence of the IDRL algorithm, a continuous reward function is proposed for the discrete environment. The reward for taking the shortest path is formulated as follows:

In which, is the integer that increases with the distance of UAV from the target point, is the current Euclidean distance from the UAV to the target point. We set the coordinates of -UAV as (), and the coordinates of the target point as (), then

During learning, if the reward values of adjacent states are too close, the algorithm may become trapped in a local optimum because of insufficient training samples. Therefore, the discovery (exploration) rate ε is initially set to 0.4 to encourage exploration at the beginning of the search for the optimal path. As the algorithm converges, the discovery rate is reduced so that it approaches 0.
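The decaying discovery rate can be sketched as an ε-greedy rule. The text only fixes the starting value 0.4 and the limit 0; the linear schedule and the function names below are assumptions chosen for simplicity.

```python
import random
from typing import Sequence


def epsilon_at(step: int, total_steps: int, start: float = 0.4, end: float = 0.0) -> float:
    """Linearly anneal the discovery rate from `start` to `end` over training."""
    frac = min(max(step / total_steps, 0.0), 1.0)
    return start + (end - start) * frac


def select_action(q_values: Sequence[float], epsilon: float, rng: random.Random) -> int:
    """With probability epsilon explore uniformly, otherwise take the greedy action."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=q_values.__getitem__)


rng = random.Random(0)
greedy_choice = select_action([0.1, 0.9, 0.3], epsilon_at(20000, 20000), rng)
```

At the end of training the rate is 0, so `greedy_choice` is always the index of the largest Q value.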

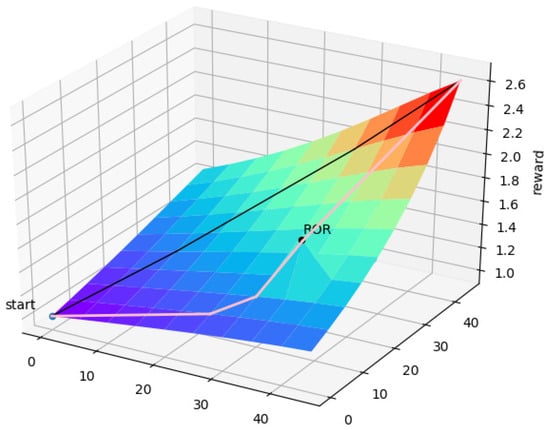

The reward function $R_1$ is shown in Figure 3. By using the reward function $R_1$, the UAV can choose the shortest path to the target point according to the reward value obtained.

Figure 3.

Plot of reward function $R_1$.

When UAVs perform search tasks in the same area without a preset mode of cooperation, different UAVs may collect duplicate information, which wastes time. In addition, performing search tasks in the same area can easily lead to UAV collisions.

To prevent collisions between UAVs, we add a small penalty when the distance between two UAVs is less than () in length.

where is the minimum distance between the -th UAV and the nearest UAV. is the length of the grid in the task model.
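A distance-triggered penalty of this kind can be sketched as follows. The threshold and the penalty magnitude are placeholders, since their values are not recoverable from the text; `safe_distance` and `penalty` are hypothetical parameters.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def collision_penalty(
    own_pos: Point,
    other_positions: Sequence[Point],
    safe_distance: float,
    penalty: float = -1.0,
) -> float:
    """Return a small negative reward when the nearest UAV is closer than safe_distance."""
    if not other_positions:
        return 0.0
    d_min = min(math.dist(own_pos, p) for p in other_positions)
    return penalty if d_min < safe_distance else 0.0


near = collision_penalty((0.0, 0.0), [(1.0, 0.0), (5.0, 5.0)], safe_distance=2.0)
far = collision_penalty((0.0, 0.0), [(3.0, 4.0)], safe_distance=2.0)
```

Only the nearest UAV matters for the penalty, which is consistent with the state containing the coordinates of the nearest UAV alone.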

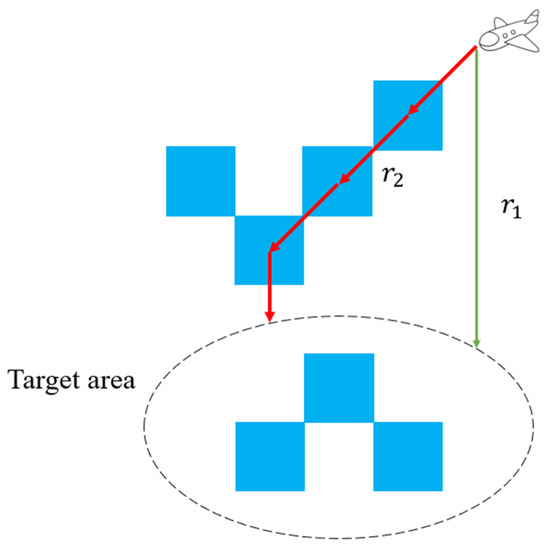

When a UAV flies to the assigned target area, it needs to choose a reasonable path. Specifically, UAVs need to make decisions before moving to target areas. Figure 4 shows two candidate paths, one of which is the shortest path for the UAV to the target area. If the UAV always takes the shortest route, it will sometimes miss target grids with high ROR values, so the detour path through those grids is more reasonable than the shortest one. To improve the efficiency of UAVs performing search tasks, the reward function needs to guide the UAV to the target point while passing through high-ROR areas on the way. Thus, the reward function $R_3$ is related to the ROR value of each grid. The combination of reward functions $R_1$ and $R_3$ is shown in Figure 5.

Figure 4.

Path planning.

Figure 5.

The combination of reward functions $R_1$ and $R_3$.

When the reward function $R_1$ alone is used to train the UAV, the UAV chooses the straight path. When $R_1$ is combined with $R_3$, the UAV chooses the detour path and does not fall into the local optimum.

However, when a task area has already been searched by another UAV, searching this area again wastes time, so it is more reasonable to choose the straight path. Therefore, the UAV needs to decide which path to choose according to the following formula:

Therefore, the final reward value function is the sum of the reward values of all parts, each part of the reward value multiplied by an appropriate coefficient.

where is the coefficient for rewarding of each reward.
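The weighted combination can be sketched as below. The three component rewards are passed in as numbers because their closed forms are not reproduced here; treating the 0/1 flag as a switch that turns the ROR term off once the area has been searched is an assumption about how the switch operates, and all names and default weights are hypothetical.

```python
from typing import Tuple


def total_reward(
    r_path: float,
    r_collision: float,
    r_ror: float,
    already_searched: bool,
    weights: Tuple[float, float, float] = (1.0, 1.0, 1.0),
) -> float:
    """Weighted sum of the shortest-path, collision and ROR reward terms."""
    w_path, w_collision, w_ror = weights
    # Assumed switch: the ROR term counts only if no other UAV has searched the area.
    rho = 0 if already_searched else 1
    return w_path * r_path + w_collision * r_collision + w_ror * rho * r_ror


fresh_area = total_reward(1.0, -0.5, 2.0, already_searched=False)
searched_area = total_reward(1.0, -0.5, 2.0, already_searched=True)
```

With the ROR term switched off, the remaining terms favour the shortest collision-free path, which matches the behaviour described for areas that have already been searched.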

These coefficients represent the relative importance of each reward and can differ between UAVs. Varying these coefficients alters the resulting paths. For example, a UAV that should collect more rewards can use a high value for the coefficient of the ROR-related reward, while collision avoidance can use a low value for the coefficient of the collision-related reward.

5. Experiments and Discussions

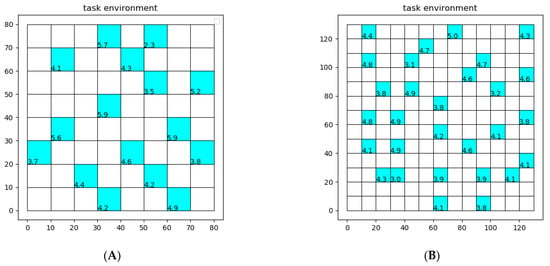

In this section, we build two simulated search task environments, with 16 points and 29 points, respectively. Experiments are carried out using the above method as well as other algorithms.

5.1. Simulation Environment

Map (a) in Figure 6A is 80 × 80 () while Map (b) in Figure 6B is 130 × 130 (). The coordinate and ROR of each task area in two maps are shown in Table 1 and Table 2.

Figure 6.

Task environment of two maps. (A) task environment of Map (a). (B) task environment of Map (b).

Table 2.

Task area coordinates and ROR of Map (b).

5.2. Parameters Setting

The parameter settings of the experiment are shown in Table 3. In the experiment, the parameters of CBBA and IDRL need to be set separately. For CBBA, the maximum number of tasks each UAV can carry out is 9. There is no termination time for each task; a UAV stops searching an area when the ROR of the current task area falls below 0.15 times the initial ROR of that area.
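As a rough check of what this stopping rule implies, assume that the ROR of a grid decays exponentially with search time, $ROR(j, z) = \varepsilon\, p(j)\, e^{-\varepsilon z}$, which follows from the exponential detection function of Section 4.1 (the closed form is an assumption here, not a quoted equation). The search time $z^{*}$ at which the ROR reaches 0.15 of its initial value is then independent of the target probability $p(j)$:

```latex
\frac{ROR(j, z^{*})}{ROR(j, 0)} = e^{-\varepsilon z^{*}} = 0.15
\quad\Longrightarrow\quad
z^{*} = \frac{\ln(1/0.15)}{\varepsilon} \approx \frac{1.897}{\varepsilon}
```

Under this assumption every task area requires the same dwell time for a given UAV, so differences in completion time between UAVs come from travel and from the number of assigned areas.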

Table 3.

Experimental parameter setting.

For IDRL, the number of training iterations for each UAV is 20,000. When a UAV completes its tasks, it stops moving and communicating.

5.3. Results and Discussions

We first compare our proposed algorithm with k-means algorithm and minimum spanning tree algorithm in the same simulation environment.

In terms of task allocation, the results of using the clustering algorithm on two maps are shown in Figure 7.

Figure 7.

Task assignment using clustering algorithm on two maps. (A) Map (a). (B) Map (b).

Compared with CBBA, the advantage of using a clustering algorithm for task allocation is that the task areas of each UAV are concentrated, so a UAV will not collide with other UAVs during flight. However, because the task areas of different UAVs are separated from one another, it is difficult for multiple UAVs to cooperate.

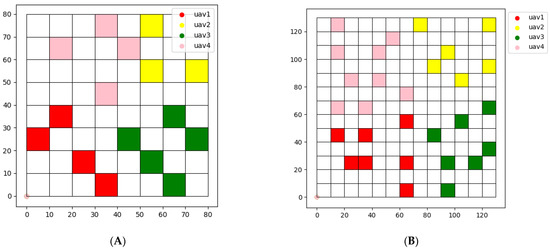

The result of using CBBA for task allocation is shown in Figure 8. Since CBBA is essentially an auction algorithm, each UAV chooses tasks with the goal of maximizing rewards. Compared with the clustering algorithm, the task areas assigned by CBBA are more dispersed. At the same time, because time constraints are taken into account, the UAVs complete their tasks at around the same time. The task completion time of each UAV using CBBA is shown in Table 4.

Figure 8.

Task assignment using CBBA on two maps. (A) Map (a). (B) Map (b).

Table 4.

CBBA task assignment results.

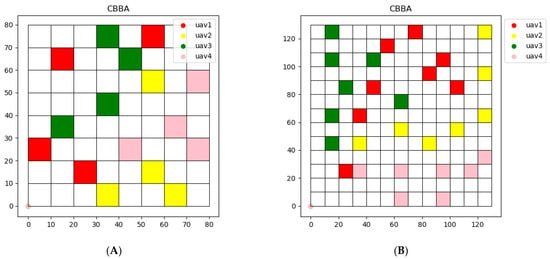

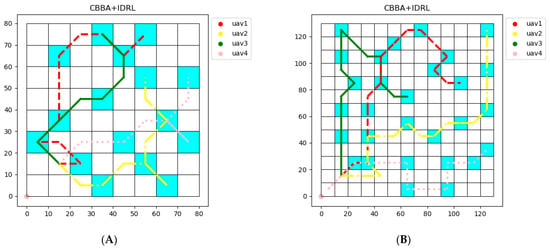

However, the disadvantage of using CBBA for path planning is that UAVs are prone to collision and crash, and the cooperation between UAVs is not considered in CBBA. IDRL overcomes the above shortcomings. As shown in Figure 9, collisions between UAVs can be avoided by using IDRL and UAVs can choose the path with higher rewards.

Figure 9.

Paths generated using CBBA and IDRL. (A) Map (a). (B) Map (b).

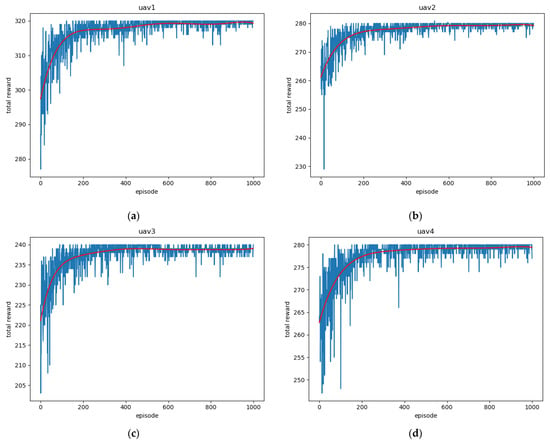

The reward value curves of the UAVs during training are shown in Figure 10. These curves indicate the convergence of the algorithm. For Map (b), which has a more complex task model, as shown in Figure 9, the four agents undergo a lot of trial and error at the beginning of training. When the proposed algorithm is applied to Map (b), convergence is achieved within 1000 iterations, and a collision-free path is eventually formed.

Figure 10.

Reward value curves of four UAVs during training: (a) UAV1; (b) UAV2; (c) UAV3; (d) UAV4.

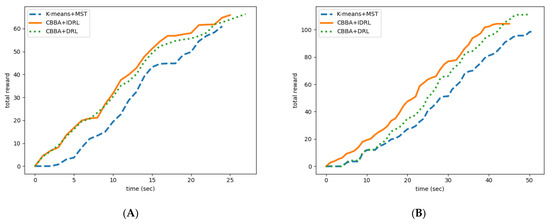

The variation of total revenue for the different algorithms is shown in Figure 11. In both experimental environments, our proposed algorithm obtains more rewards in the same time period than the K-means+MST algorithm, which indicates that it has higher search efficiency. In addition, to show that the proposed algorithm improves the efficiency of search task execution through cooperation, we compared it with the classical DRL algorithm, with CBBA task assignment adopted in both cases. The results show that although DRL obtains a higher reward value after completing the task, it takes longer than IDRL to complete the task, and the reward value obtained by DRL is lower than that of IDRL within the same time. This indicates that IDRL improves the efficiency of task execution through cooperation.

Figure 11.

Total revenue variation in different algorithms. (A) Map (a). (B) Map (b).

The residual ROR after the simulations is shown in Table 5. For CBBA and IDRL, the ROR of most target grids is reduced to a low level owing to reasonable path optimization. Although some grids are also fully searched by the other algorithm, it leaves more target grids with high ROR. The results show that our proposed algorithm searches the area more thoroughly.

Table 5.

Task area coordinates and ROR.

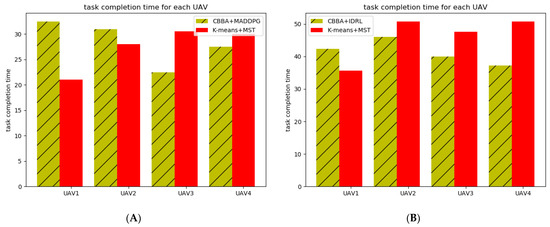

For a multi-UAV system, the task completion times of the UAVs should be as short and as close to one another as possible. We compare the time for each UAV to complete its tasks under the two algorithms. As shown in Figure 12A, in the small-scale scenario, the proposed algorithm is close to the MST method in terms of the mean and variance of the completion time. In the large-scale scenario, however, our proposed algorithm shows obvious advantages over the k-means and MST algorithms: both the average completion time with IDRL and the variance of the completion times of the four UAVs are smaller.

Figure 12.

Task completion time for each UAV. (A) Map (a). (B) Map (b).

6. Conclusions

This paper first summarizes the existing path planning algorithms and points out their shortcomings. Then the search task model is introduced. On this basis, a cooperative search method for multiple UAVs is proposed. For task points with different reward values, CBBA is first used for task assignment. We then use IDRL for UAV path planning and propose a new reward function. The proposed reward function consists of three parts, which are used, respectively, to guide the UAV to the target point, to avoid collisions between UAVs and to encourage the UAV to choose the path with higher rewards. Experimental results show that, compared with the other methods, our proposed method obtains more rewards in the same time and is feasible and effective for multi-UAV path planning. In future work, we will focus on the constraints of the UAV kinematics by integrating the Dubins curve model, which would make the proposed framework more practical.

Author Contributions

Methodology, M.G.; writing—original draft preparation, X.Z.; writing—review and editing, X.Z. and M.G.; visualization, X.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Nomenclature

| Parameter | Definition |
|---|---|
| s | state of UAV |
| a | action of UAV |
| R | reward function |
| d | Euclidean distance |
| ρ | switch for R3 |
| k | discount factor |
| π | strategy of the agent |
| p | action choice probability |
| yi | winning score list |
| zi | winning agent list |
| V | state value function |
| Q | action value function |
| P | state transition matrix |
| e | revenue function of the searched area |
| z | time |
| ε | search capability of UAV |
| α | learning rate |
| bi | bundle of agent |
| cij[bi] | score function |
| Lt | maximum assigned task number |

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| CBBA | Consensus-based bundle algorithm |
| UAV | Unmanned aerial vehicle |
| IDRL | Independent deep reinforcement learning |
| MARL | Multi-agent reinforcement learning |
| MUTAPP | Multi-UAV target assignment and path planning |
| TSP | Traveling salesman problem |
| MTSP | Multiple traveling salesman problem |
| GA | Genetic algorithm |
| OPA | Overall partition algorithm |
| MST | Minimum spanning tree |
| MEP | Maximum entropy principle |
| SA | Simulated annealing |
| MADDPG | Multi-agent deep deterministic policy gradient |
| ROR | Rate of return |
| CPP | Coverage path planning |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).