Real-Time Prediction of Growth Characteristics for Individual Fruits Using Deep Learning

Abstract

1. Introduction

2. Material and Methods

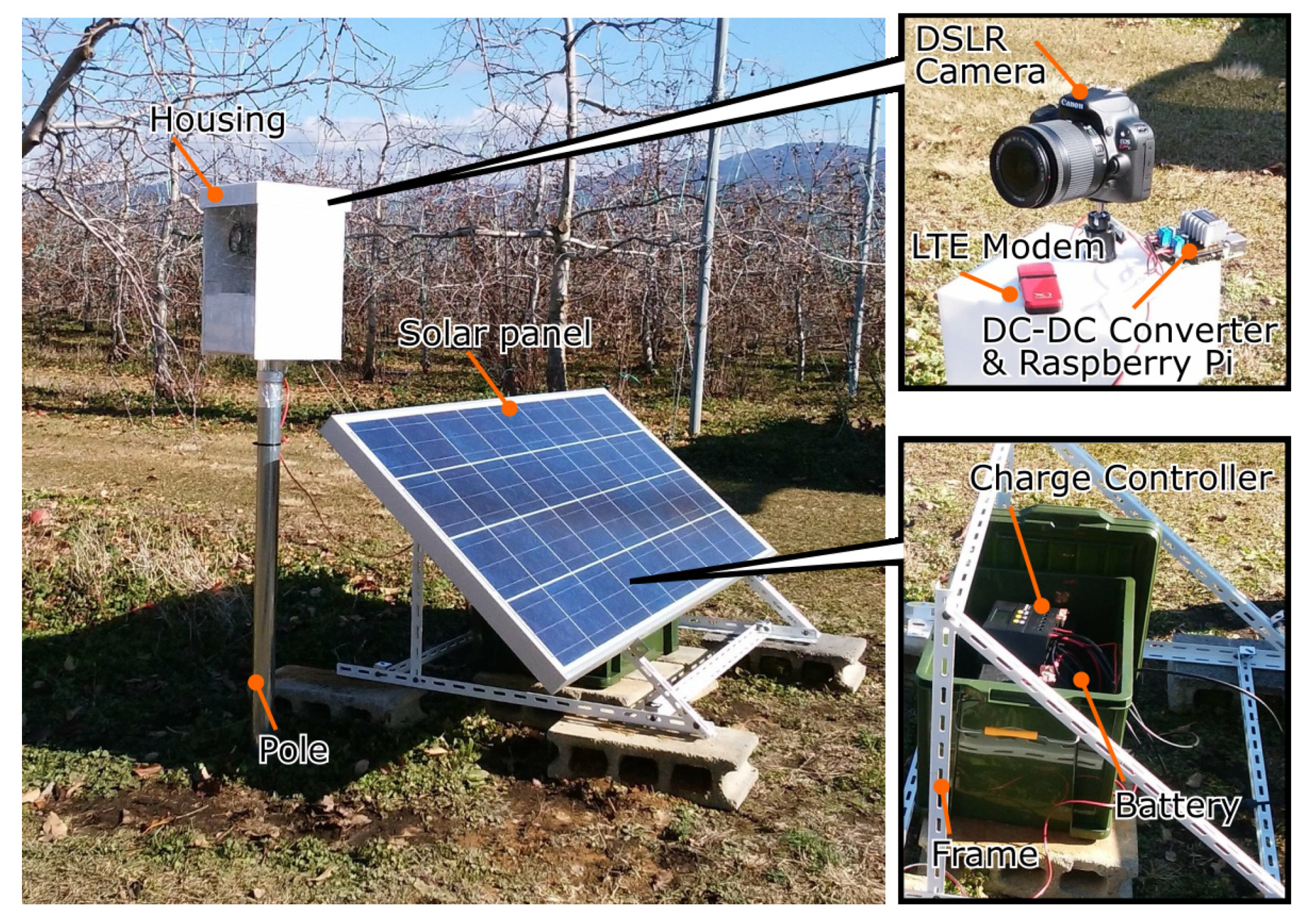

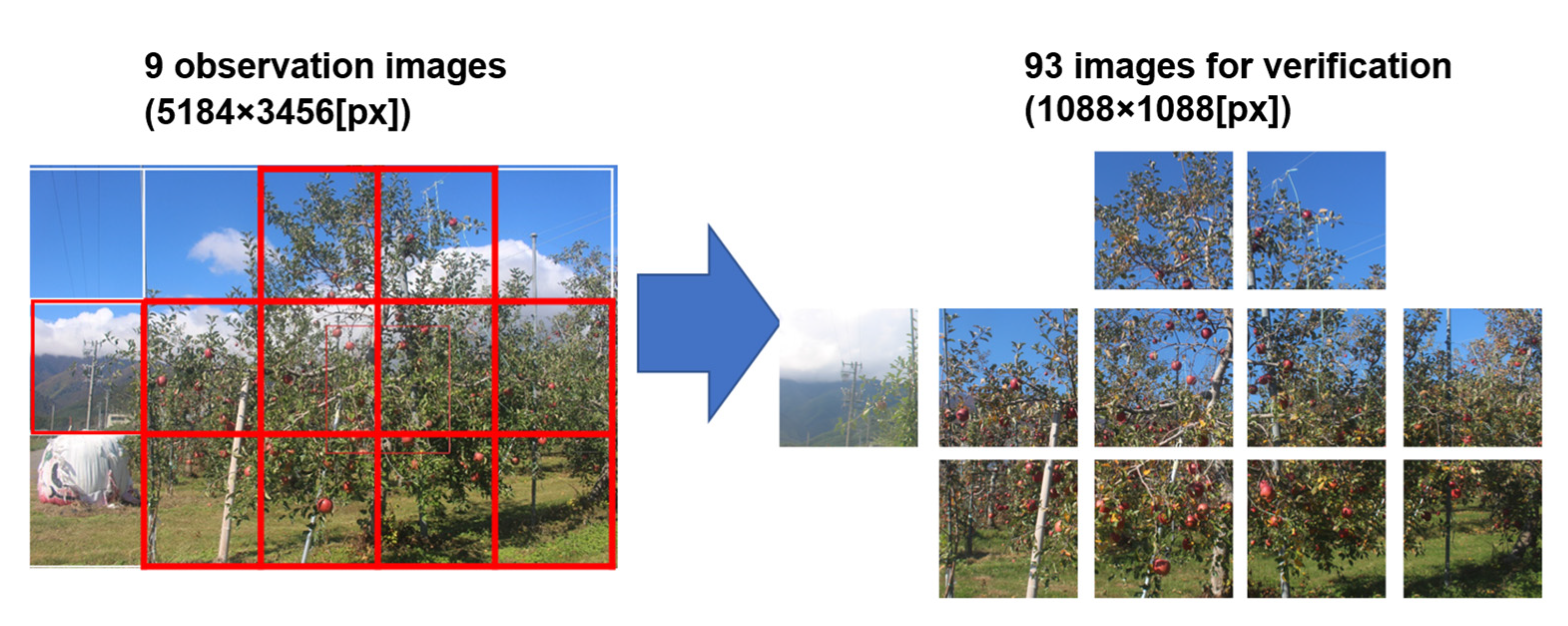

2.1. Monitoring System and Data Set

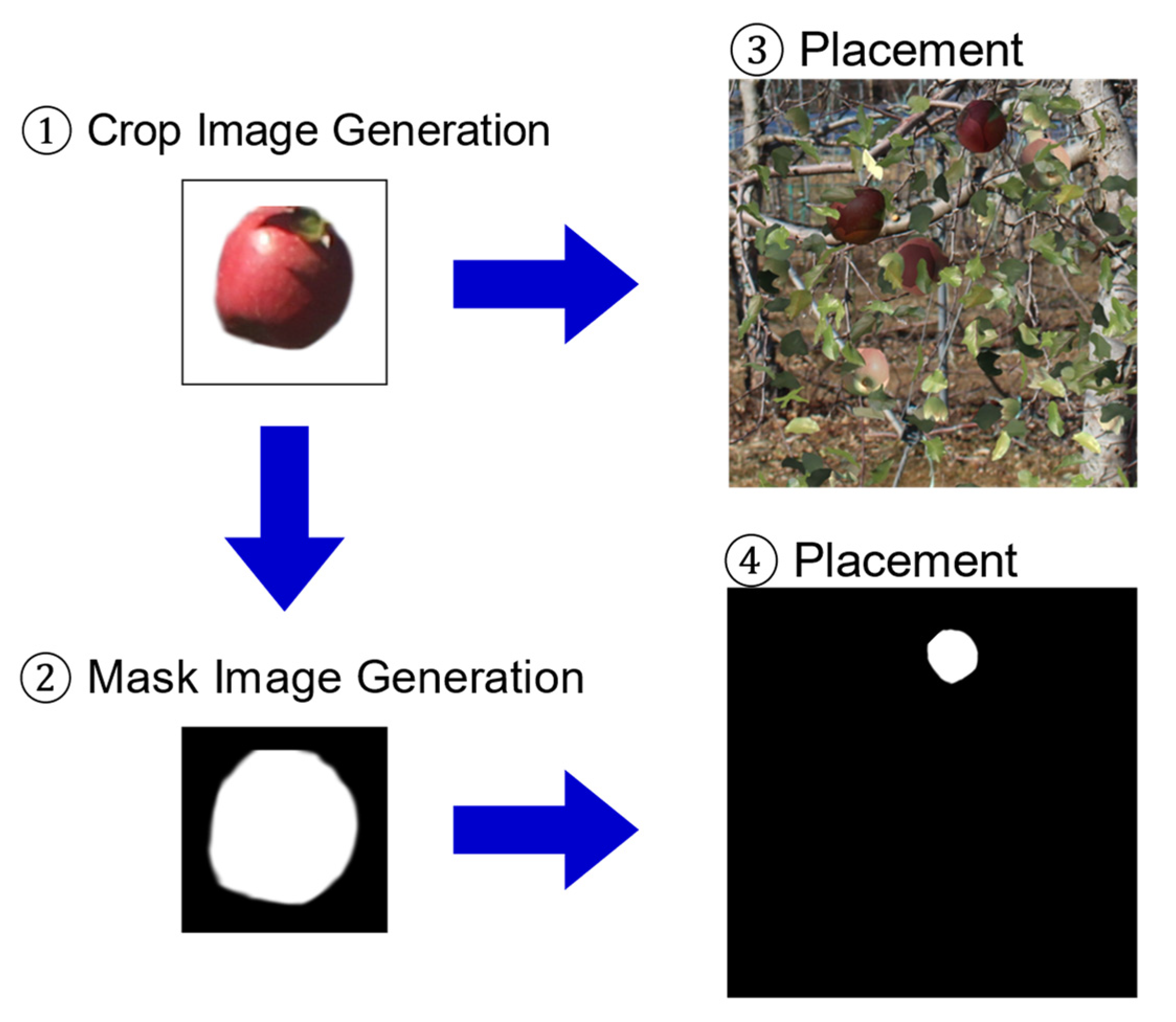

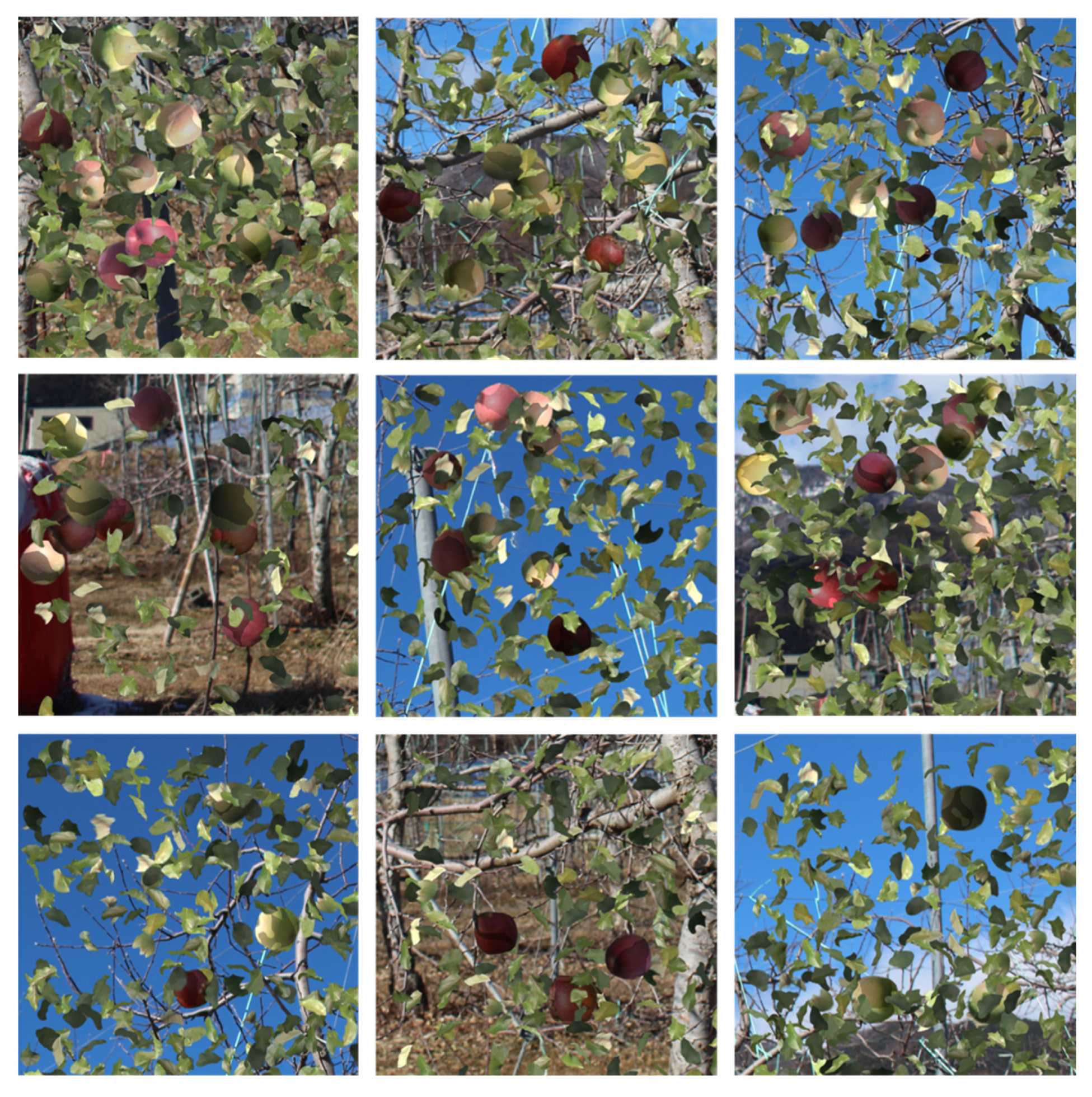

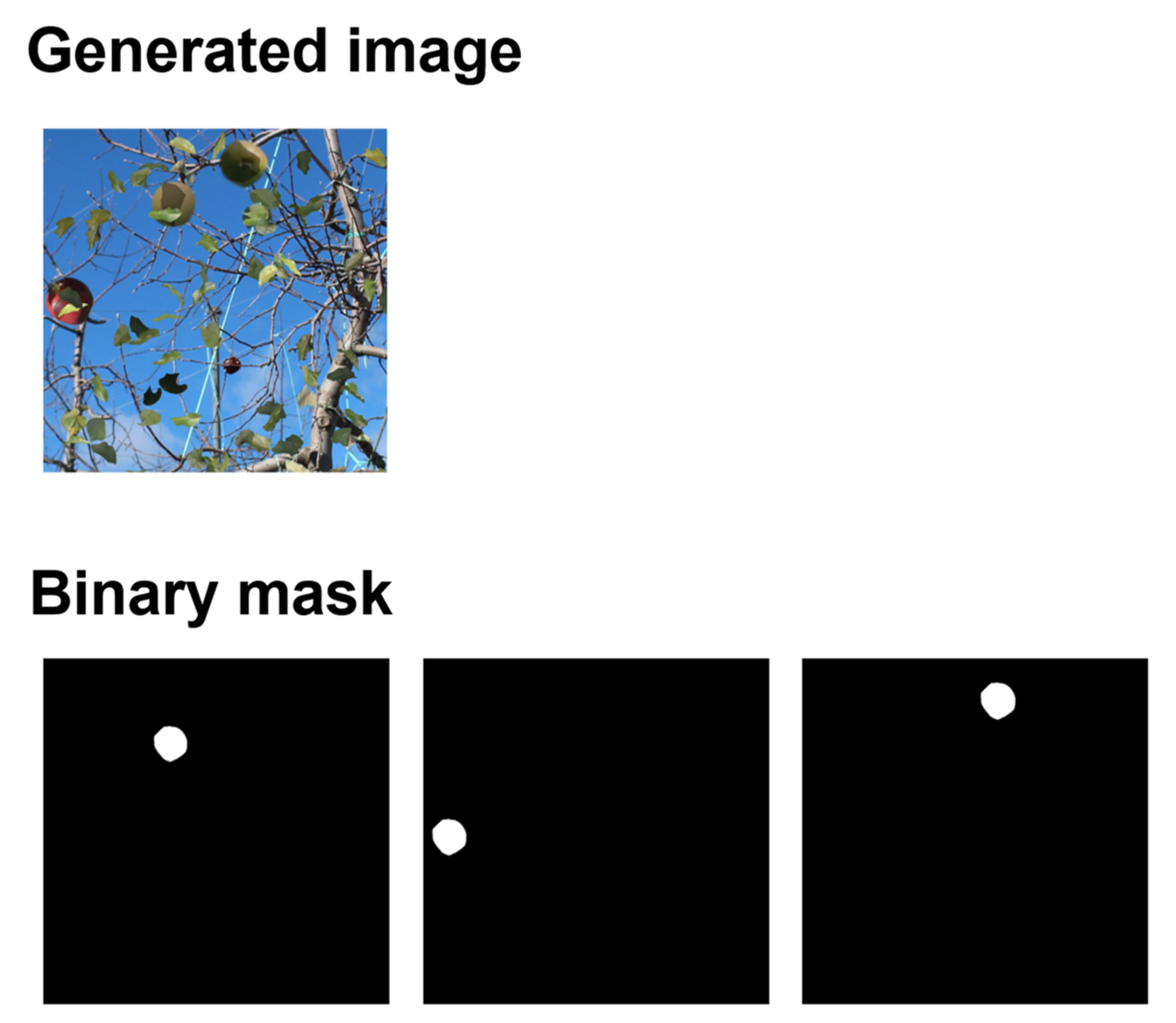

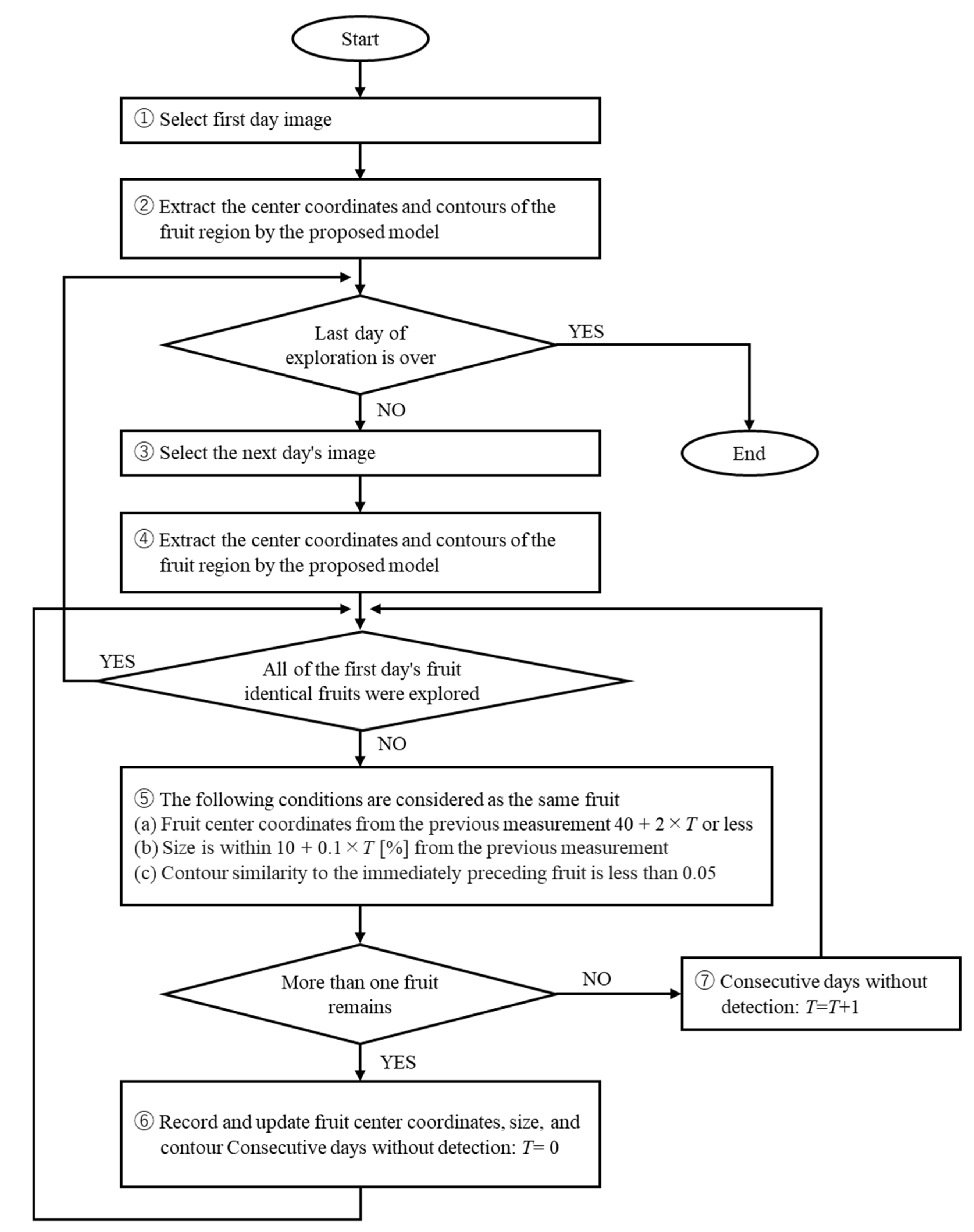

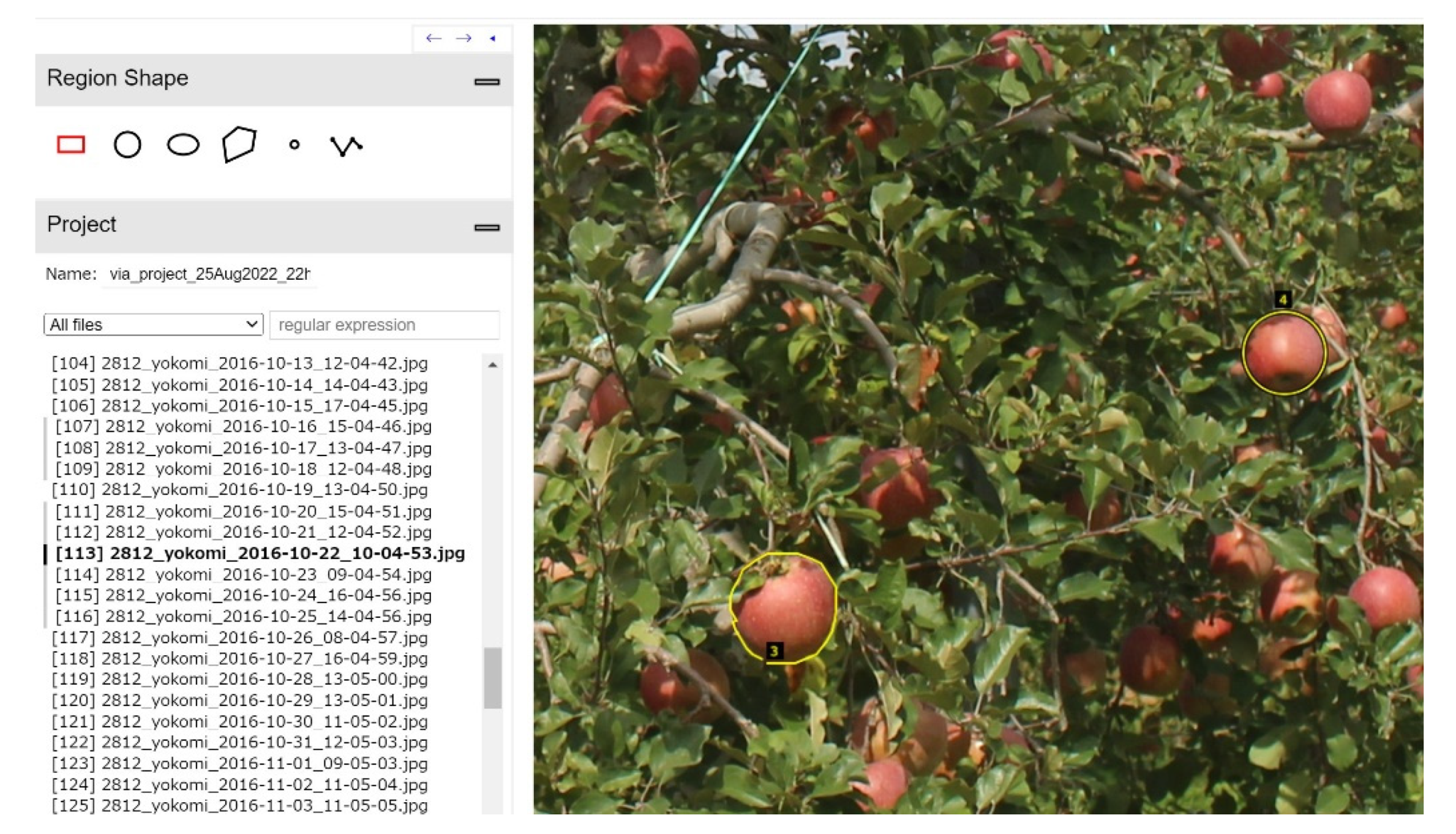

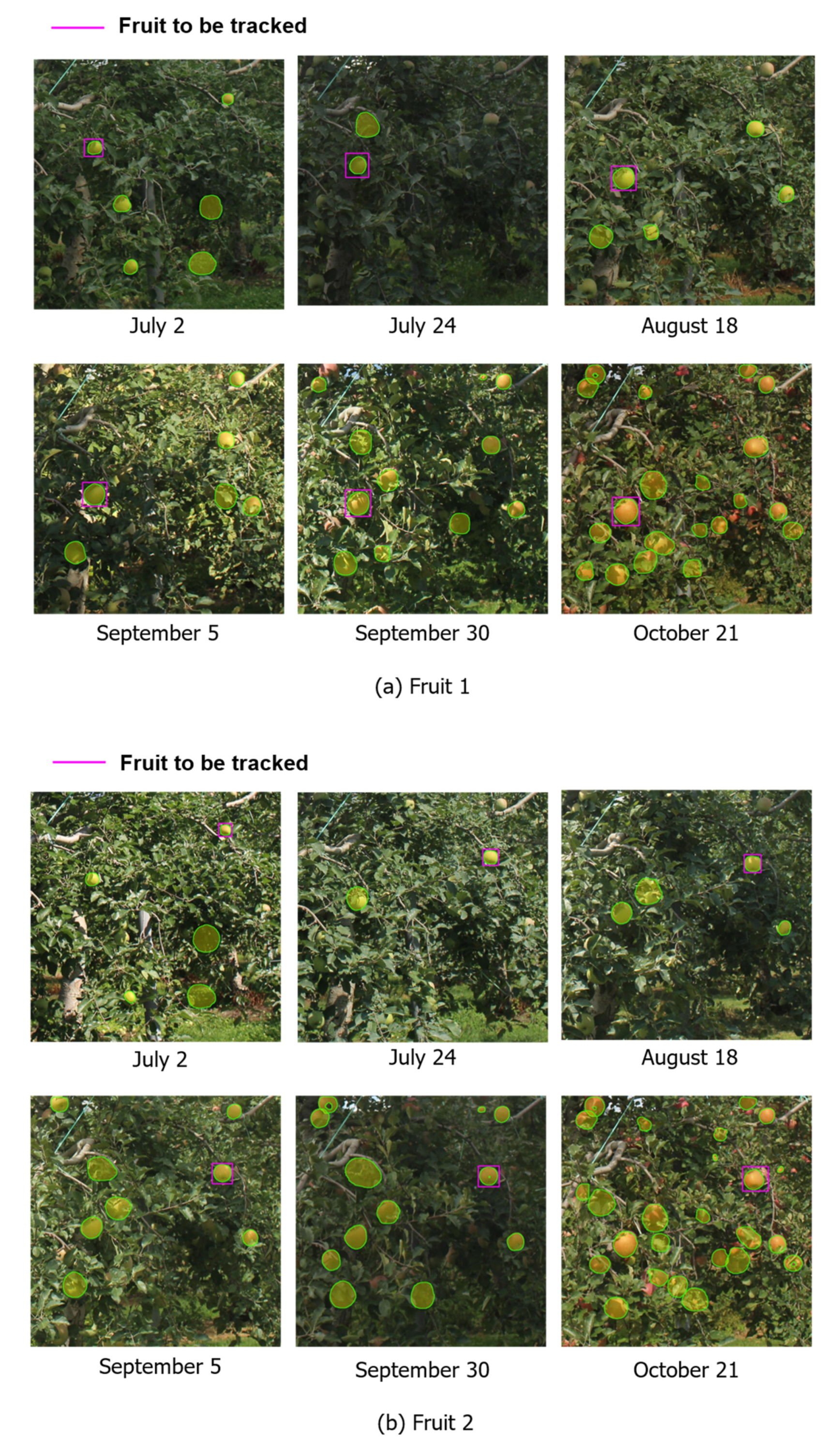

2.2. Training Data Generation and Individual Fruit Identification System

2.3. Verification of the Accuracy of Fruit Detection

2.4. Verification of the Accuracy of Growth Curve Predictions

3. Results and Discussion

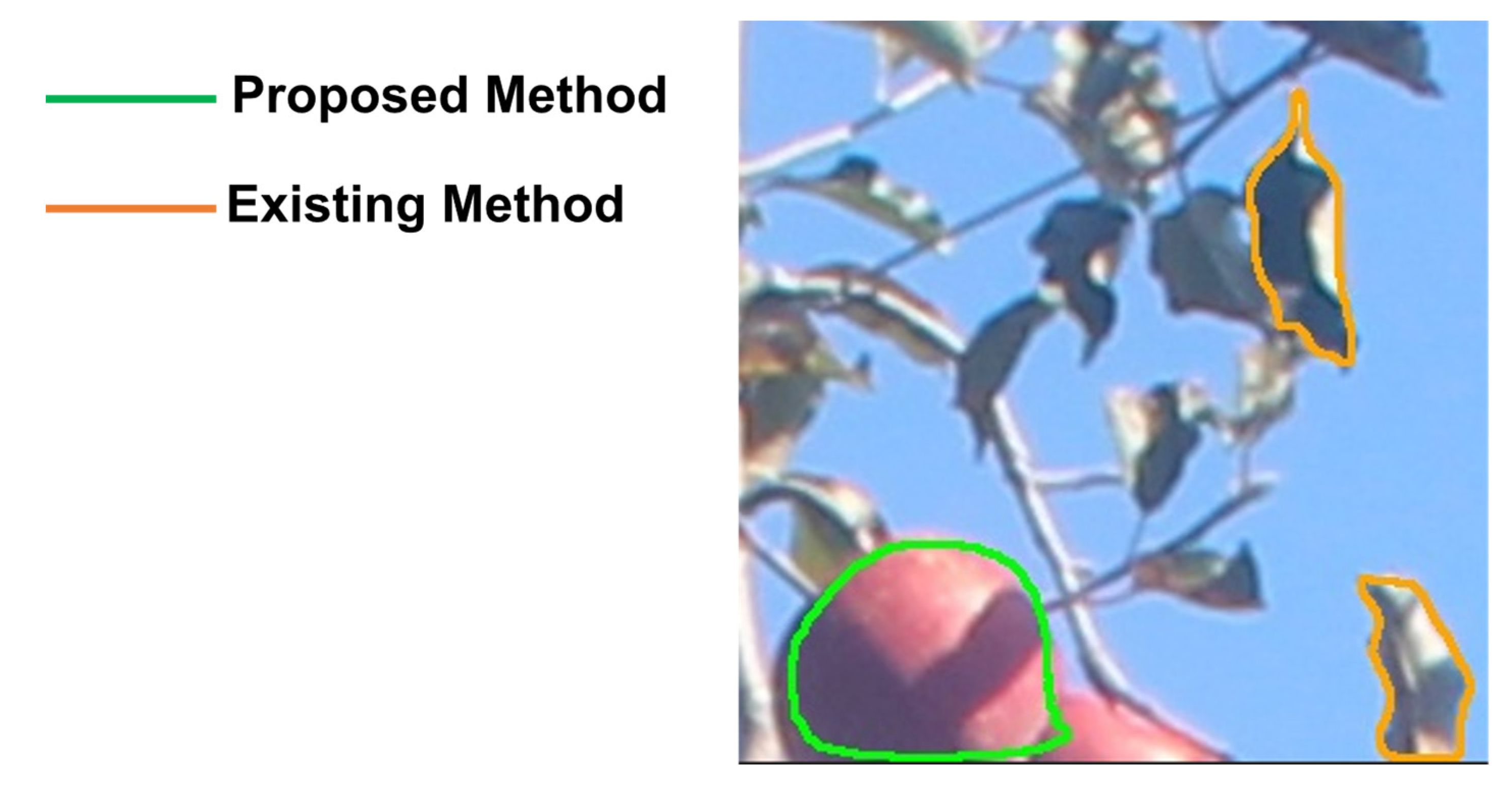

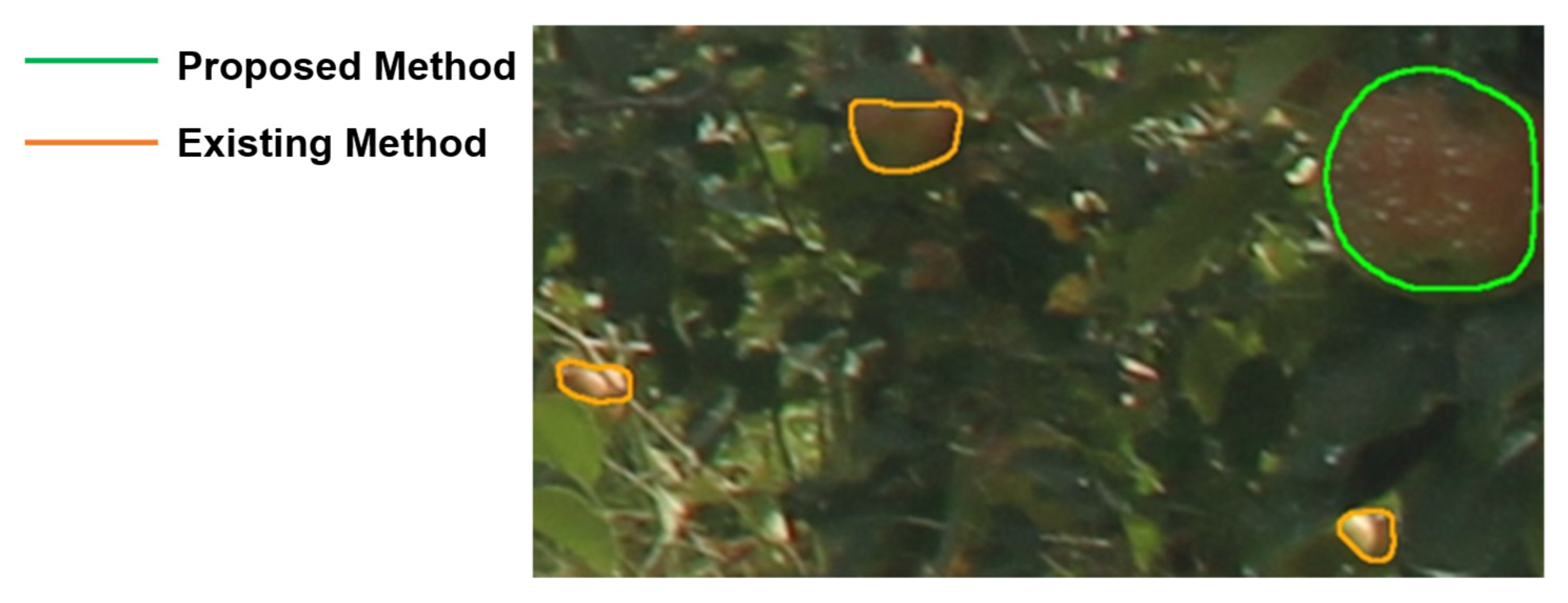

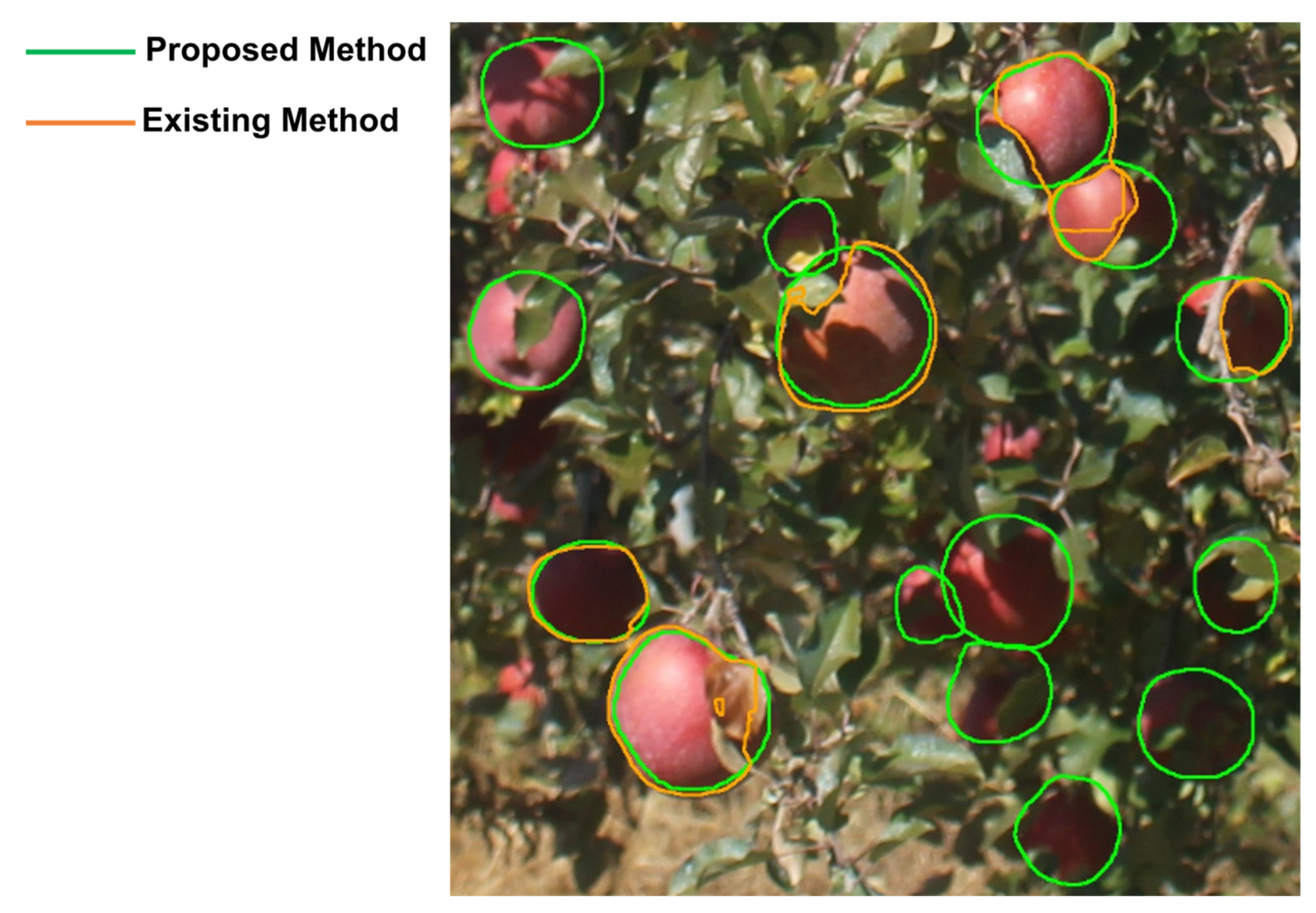

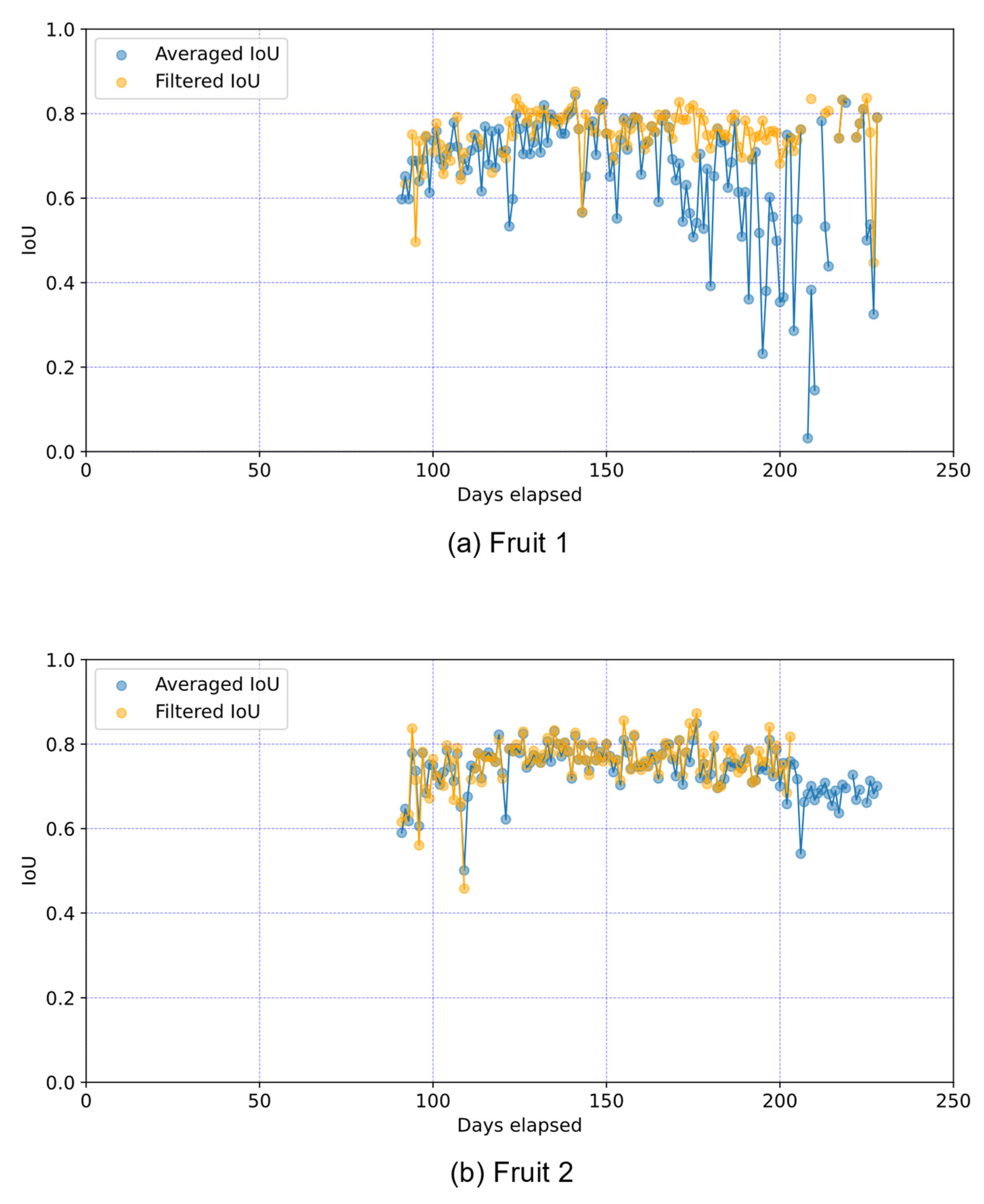

3.1. Verifying the Accuracy of Fruit Detection

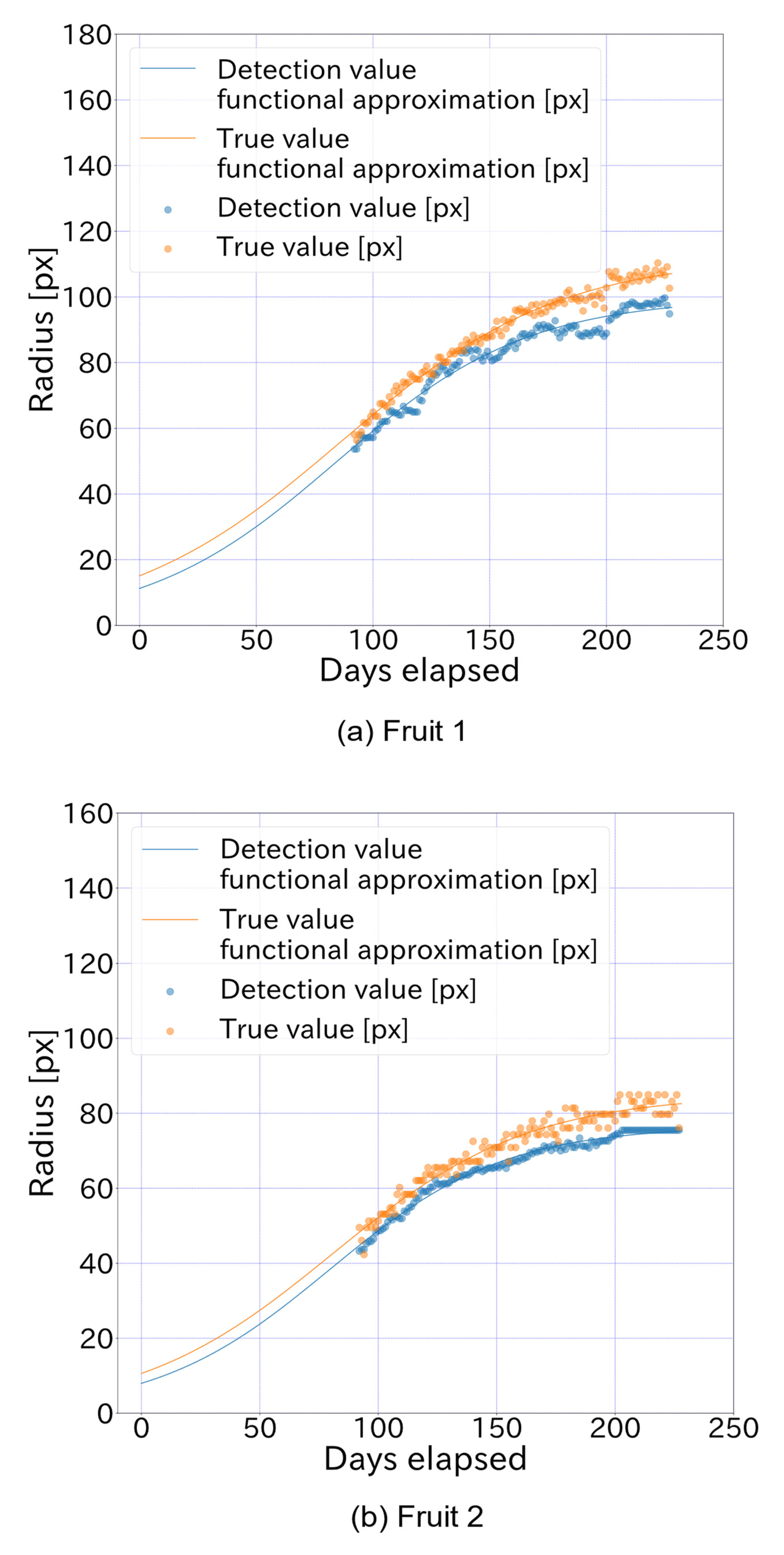

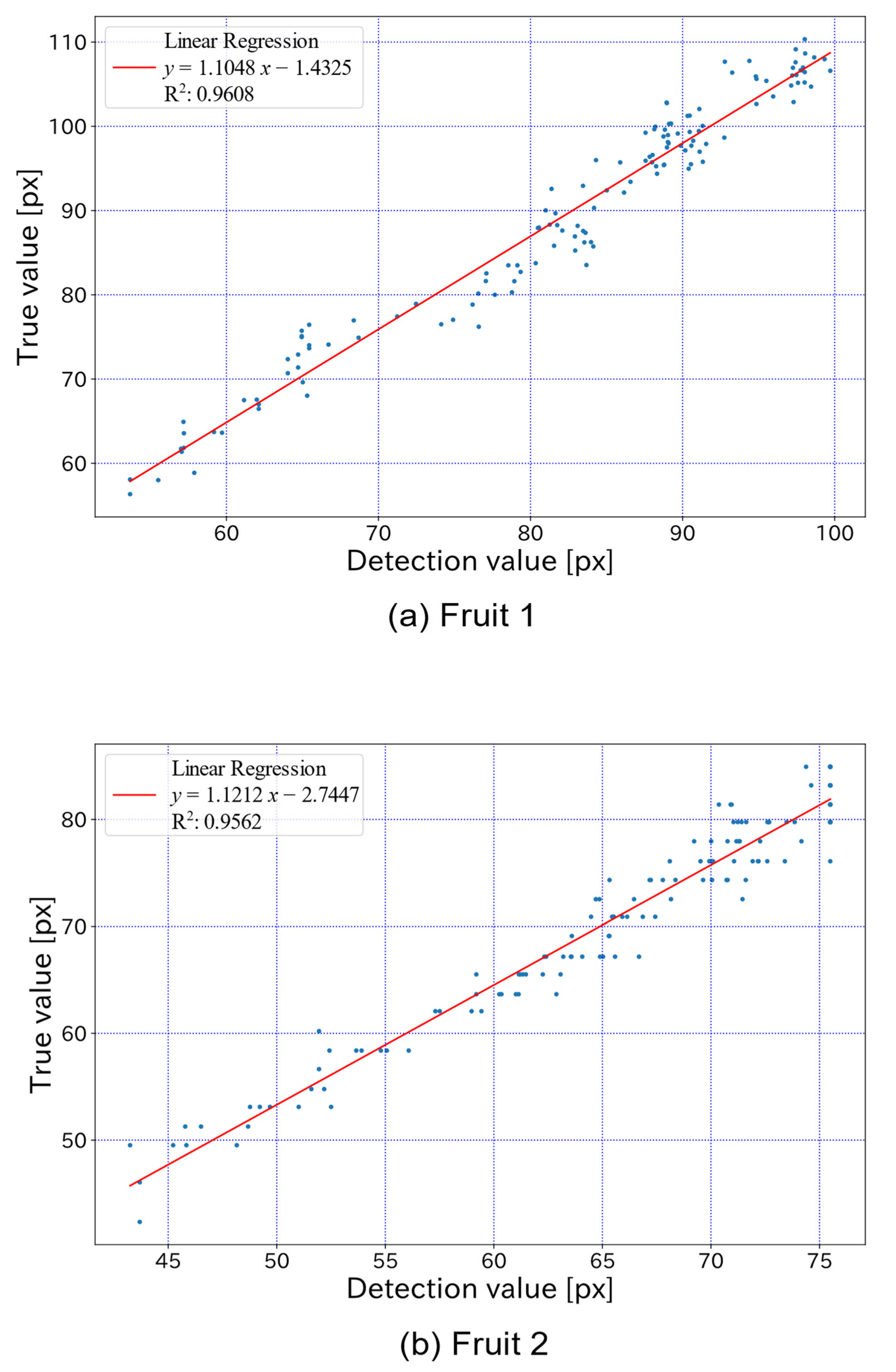

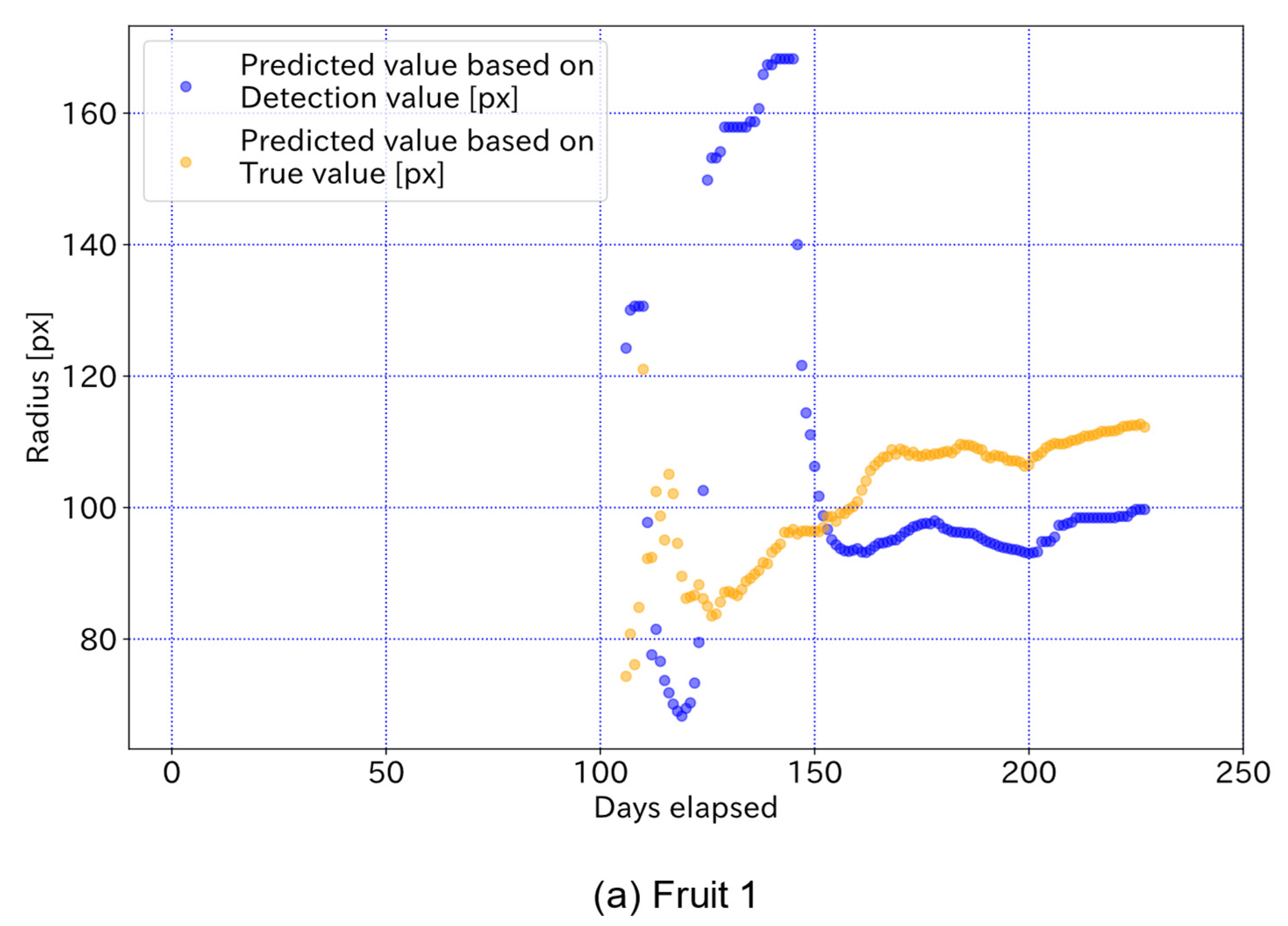

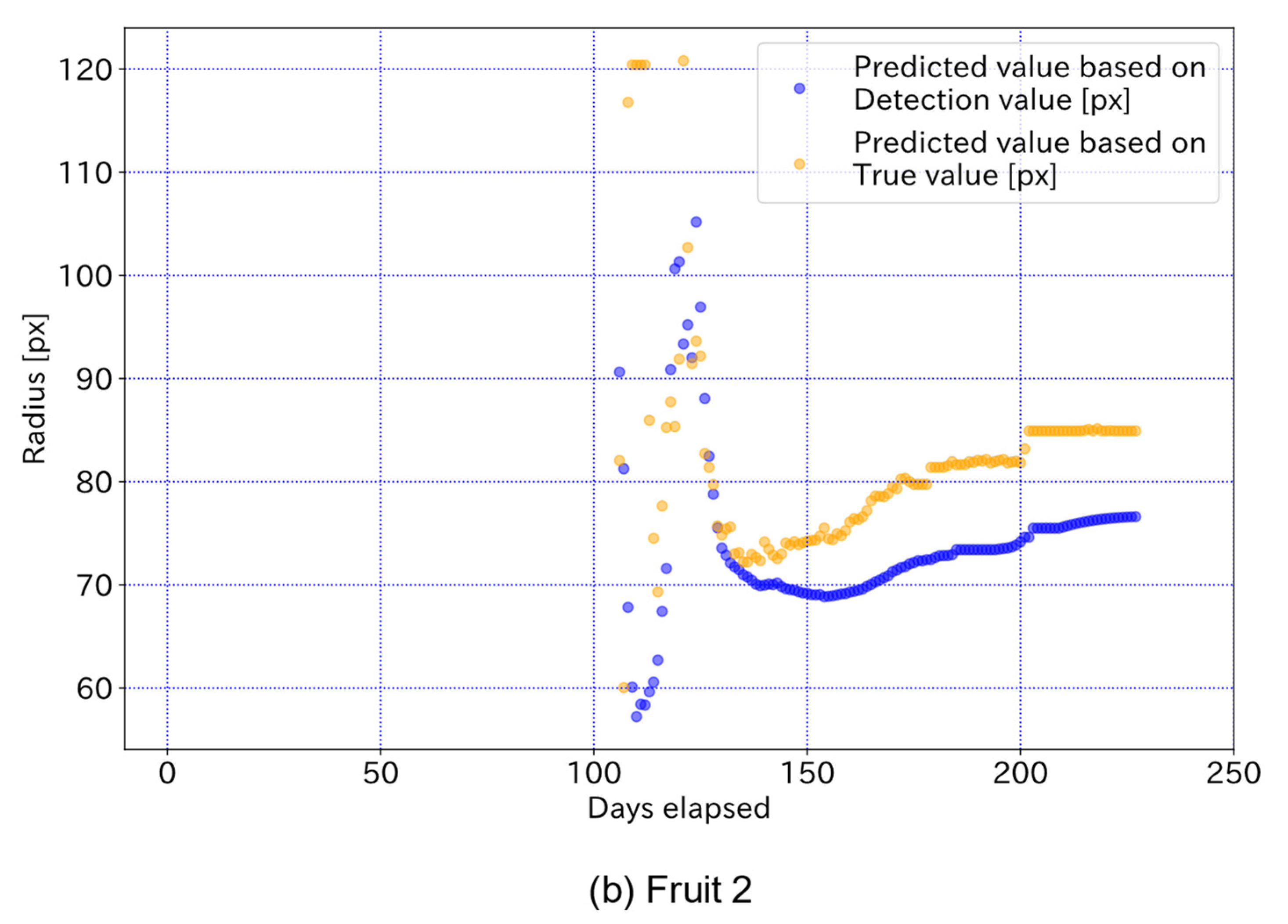

3.2. Verification of the Accuracy of Growth Curve Predictions

4. Conclusions

- The proposed model was capable of detecting hidden fruit with high accuracy, measuring fruit size in real time, and predicting the radius at harvest based on fruit size time series data.

- The model trained on automatically generated training data achieved higher precision and IoU than the existing COCO model; its accuracy was affected by the parameters used to generate the synthetic farm images.

- In individual fruit identification, the same fruit could be identified across different dates and times by applying filters to the change in fruit position, the change in fruit size, and contour similarity.

- In real-time fruit radius measurement, the MAPE between the true and detected values was less than 0.079, and the coefficient of determination of the linear regression exceeded 0.95 for both Fruit 1 and Fruit 2, indicating that the fruit radius can be measured with high accuracy.

- In the real-time prediction of the radius at harvest, each prediction captured a relative growth curve close to the true curve once approximately 150 days had elapsed.

- The proposed fruit detection algorithm enabled the tracking of even partially hidden fruit with sufficient prediction accuracy.
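The identification rule in the third conclusion can be illustrated with a short Python sketch. It assumes a hypothetical per-detection record (centre, radius, and a radial contour profile sampled at equal angles) and hypothetical threshold values; the paper's actual thresholds, contour-similarity measure, and matching order are not stated here. A detection is linked to an existing fruit track only if it passes all three filters (position change, size change, contour similarity) and appears within a maximum number of days since the track's last sighting, loosely analogous to T in the nomenclature.

```python
import math
from dataclasses import dataclass


@dataclass
class Detection:
    """One detected fruit on one date (hypothetical record layout)."""
    day: int        # elapsed days since monitoring started
    cx: float       # centre x [px]
    cy: float       # centre y [px]
    radius: float   # apparent radius [px]
    profile: list   # radial distances from the centre at equal angles [px]


# Hypothetical thresholds, not the values used in the paper.
MAX_SHIFT_PX = 30.0     # allowed change in centre position
MAX_SIZE_CHANGE = 0.2   # allowed relative change in radius
MIN_CONTOUR_SIM = 0.9   # required contour similarity (1.0 = identical shape)
MAX_GAP_DAYS = 7        # allowed days between consecutive sightings


def contour_similarity(p, q):
    """1 minus the mean absolute difference of scale-normalised radial profiles.

    Both profiles must be sampled at the same angles (same length)."""
    mp, mq = sum(p) / len(p), sum(q) / len(q)
    diff = sum(abs(a / mp - b / mq) for a, b in zip(p, q)) / len(p)
    return 1.0 - diff


def is_same_fruit(prev, cur):
    """Apply the time-gap, position, size and contour filters in turn."""
    if cur.day - prev.day > MAX_GAP_DAYS:
        return False
    if math.hypot(cur.cx - prev.cx, cur.cy - prev.cy) > MAX_SHIFT_PX:
        return False
    if abs(cur.radius - prev.radius) / prev.radius > MAX_SIZE_CHANGE:
        return False
    return contour_similarity(prev.profile, cur.profile) >= MIN_CONTOUR_SIM


def assign(tracks, detections):
    """Greedily append each same-day detection to the first free track whose
    latest detection passes every filter; otherwise start a new track."""
    claimed = set()
    for det in detections:
        for i, track in enumerate(tracks):
            if i not in claimed and is_same_fruit(track[-1], det):
                track.append(det)
                claimed.add(i)
                break
        else:
            tracks.append([det])
            claimed.add(len(tracks) - 1)
    return tracks
```

Greedy first-match assignment keeps the sketch short; a production tracker would more likely solve a global assignment, for example Hungarian matching on a combined cost built from the same three filters.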
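The two accuracy measures in the fourth conclusion can be computed as below. This is a minimal sketch assuming that MAPE is expressed as a fraction (so 0.079 corresponds to 7.9%) and that the coefficient of determination refers to an ordinary least-squares line fitted between the true and detected radii; the paper's exact regression setup is not given in this section.

```python
def mape(true_vals, detected_vals):
    """Mean absolute percentage error, returned as a fraction (0.079 = 7.9 %)."""
    if len(true_vals) != len(detected_vals) or not true_vals:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(abs(t - d) / abs(t) for t, d in zip(true_vals, detected_vals)) / len(true_vals)


def linear_r2(x, y):
    """Coefficient of determination of the least-squares line y = m * x + k."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    m = sxy / sxx
    k = my - m * mx
    ss_res = sum((yi - (m * xi + k)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - my) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot
```

For a simple linear regression this R² equals the squared Pearson correlation between the two series, so either form can be used to check the reported value.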

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Nomenclature

| Symbol | Description [unit] |
|---|---|
| T | The number of non-detect days [day] |
| TP | True Positives |
| FP | False Positives |
| FN | False Negatives |
| IoU | Intersection over Union |
| CA | Correct Area [m²] |
| PA | Prediction Area [m²] |
| X | The number of elapsed days [day] |
| Y | The radius of apple fruit [m] |
| a | Growth curve coefficient [-] |
| b | Growth curve coefficient [-] |
| c | Growth curve coefficient [-] |
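The nomenclature defines a growth curve relating elapsed days X to fruit radius Y through three coefficients a, b, and c, but this section does not state the curve's functional form. The sketch below assumes a logistic form, Y = a / (1 + b·exp(−c·X)), purely for illustration. Because Y is linear in a, the least-squares value of a has a closed form for any fixed (b, c), so a coarse grid search over b and c gives a fit using only the standard library. The function names, grid values, and synthetic series are hypothetical; a gradient-based fitter such as `scipy.optimize.curve_fit` could refine the result. Extrapolating the fitted curve to a harvest date gives a radius-at-harvest prediction that can be refitted as each new measurement arrives.

```python
import math


def logistic(x, a, b, c):
    """Assumed growth curve Y = a / (1 + b * exp(-c * X))."""
    return a / (1.0 + b * math.exp(-c * x))


def fit_growth_curve(days, radii, b_grid, c_grid):
    """Least-squares fit of the assumed logistic curve.

    For fixed (b, c) the model is a * f(X) with f(X) = 1 / (1 + b * exp(-c * X)),
    so the optimal a is sum(Y * f) / sum(f * f). The grid pair (b, c) with the
    smallest residual sum of squares is returned together with its a."""
    best = None
    for b in b_grid:
        for c in c_grid:
            f = [1.0 / (1.0 + b * math.exp(-c * x)) for x in days]
            a = sum(y * fi for y, fi in zip(radii, f)) / sum(fi * fi for fi in f)
            sse = sum((y - a * fi) ** 2 for y, fi in zip(radii, f))
            if best is None or sse < best[0]:
                best = (sse, a, b, c)
    _, a, b, c = best
    return a, b, c


# Synthetic radius series [m] generated from known coefficients to check the fit.
days = [30, 45, 60, 75, 90, 105]
radii = [logistic(x, 0.045, 20.0, 0.05) for x in days]
b_grid = [5.0 * k for k in range(1, 9)]          # 5, 10, ..., 40
c_grid = [k / 1000 for k in range(10, 101, 5)]   # 0.010, 0.015, ..., 0.100
a, b, c = fit_growth_curve(days, radii, b_grid, c_grid)
radius_at_harvest = logistic(150, a, b, c)       # hypothetical harvest on day 150
```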

| Subject | Height [px] | Width [px] | Brightness [%] | Angle [°] | Number of Fruits | Number of Leaves on the Fruit Foreground | Number of Leaves on the Fruit Background | Selection Probability of Red Apple [%] |
|---|---|---|---|---|---|---|---|---|
| Fruit | 140~190 | 140~190 | 60~120 | −90~90 | 3~8 | - | - | 55.3 |
| Leaf | 60~90 | 60~90 | 60~120 | −90~90 | - | 80 | 0, 100, 200, 400 | - |
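The parameter ranges in this table can drive a synthetic-scene sampler for training data generation. The sketch below assumes each parameter is drawn uniformly and independently from its listed range, that each fruit is rendered as a red apple with probability 0.553, and that leaf counts are supplied per generation condition. It only produces placement parameters, not the image compositing itself, and the function names and dictionary keys are hypothetical.

```python
import random

# Ranges from the table (uniform, independent sampling is an assumption).
FRUIT_SIZE_PX = (140, 190)
LEAF_SIZE_PX = (60, 90)
BRIGHTNESS_PCT = (60, 120)
ANGLE_DEG = (-90, 90)
FRUITS_PER_IMAGE = (3, 8)
P_RED_APPLE = 0.553


def sample_object(rng, size_range):
    """Draw size, brightness and rotation for one pasted fruit or leaf cut-out."""
    return {
        "height_px": rng.randint(*size_range),
        "width_px": rng.randint(*size_range),
        "brightness_pct": rng.uniform(*BRIGHTNESS_PCT),
        "angle_deg": rng.uniform(*ANGLE_DEG),
    }


def sample_scene(rng, n_foreground_leaves, n_background_leaves):
    """Return placement parameters for one synthetic image, listed from the
    back layer (background leaves) to the front layer (foreground leaves)."""
    background = [sample_object(rng, LEAF_SIZE_PX) for _ in range(n_background_leaves)]
    fruits = []
    for _ in range(rng.randint(*FRUITS_PER_IMAGE)):
        fruit = sample_object(rng, FRUIT_SIZE_PX)
        fruit["red"] = rng.random() < P_RED_APPLE  # otherwise a non-red apple image (assumption)
        fruits.append(fruit)
    foreground = [sample_object(rng, LEAF_SIZE_PX) for _ in range(n_foreground_leaves)]
    return {"background_leaves": background, "fruits": fruits, "foreground_leaves": foreground}
```

Passing a seeded `random.Random` instance makes each generated data set reproducible, which helps when comparing models trained under different leaf-count conditions.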

| Item | Precision | Recall | IoU Average | IoU Median | IoU Variance |
|---|---|---|---|---|---|
| COCO model | 0.864 | 0.338 | 0.554 | 0.596 | 0.065 |
| Proposed model | 0.955 | 0.317 | 0.653 | 0.720 | 0.046 |
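Precision, recall, and the IoU statistics in this table follow from TP, FP, FN, CA, and PA as defined in the nomenclature: precision = TP / (TP + FP), recall = TP / (TP + FN), and IoU = area(CA ∩ PA) / area(CA ∪ PA). The sketch below represents masks as sets of pixel coordinates and counts a prediction as a true positive when its best unmatched ground-truth mask reaches an IoU threshold. The 0.5 threshold, the greedy matching, and computing the IoU statistics over matched pairs only (with population variance) are assumptions, not details confirmed by the paper.

```python
import statistics


def box_mask(x0, y0, x1, y1):
    """Pixel set of an axis-aligned rectangle [x0, x1) x [y0, y1) (test helper)."""
    return {(x, y) for x in range(x0, x1) for y in range(y0, y1)}


def mask_iou(ca, pa):
    """IoU = |CA ∩ PA| / |CA ∪ PA| for masks stored as sets of pixel coordinates."""
    union = ca | pa
    return len(ca & pa) / len(union) if union else 0.0


def evaluate(gt_masks, pred_masks, iou_threshold=0.5):
    """Greedy one-to-one matching of predictions to ground truth.

    Returns (precision, recall, ious), where ious holds the IoU of each TP pair."""
    unmatched = list(range(len(gt_masks)))
    ious = []
    for pm in pred_masks:
        best_j, best_iou = None, 0.0
        for j in unmatched:
            v = mask_iou(gt_masks[j], pm)
            if v > best_iou:
                best_j, best_iou = j, v
        if best_j is not None and best_iou >= iou_threshold:
            unmatched.remove(best_j)
            ious.append(best_iou)
    tp = len(ious)
    fp = len(pred_masks) - tp   # predictions with no sufficiently overlapping fruit
    fn = len(gt_masks) - tp     # fruits that no prediction matched
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall, ious


def iou_summary(ious):
    """Average, median and population variance of the matched-pair IoUs."""
    return statistics.mean(ious), statistics.median(ious), statistics.pvariance(ious)
```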

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hondo, T.; Kobayashi, K.; Aoyagi, Y. Real-Time Prediction of Growth Characteristics for Individual Fruits Using Deep Learning. Sensors 2022, 22, 6473. https://doi.org/10.3390/s22176473

Chicago/Turabian Style: Hondo, Takaya, Kazuki Kobayashi, and Yuya Aoyagi. 2022. "Real-Time Prediction of Growth Characteristics for Individual Fruits Using Deep Learning" Sensors 22, no. 17: 6473. https://doi.org/10.3390/s22176473
