1. Introduction

Architectural heritage is a resource with multiple dimensions (cultural, social, territorial, and economic) that greatly enriches and protects the societies in which the sites are located [1]. These heritage assets, symbols of regions, cities, and towns, are linked to their social sentiment and cultural identity and are often the basis for economic activities such as tourism [2]. Monumental heritage reflects the history of a territory and can trace the passage of different civilizations. Its value is unquestionable, but its conservation and maintenance are not always feasible. These are very fragile elements that have suffered the effects of historical and natural events that have modified, altered, and, in the worst cases, even destroyed them [3]. The conservation of this built heritage is an issue that any advanced society must inevitably address. Today, apart from deterioration due to the passage of time, the impact of weathering agents, and the effects of climate change, these assets are exposed to other constant threats, such as: (a) abandonment (due to depopulation or lack of funds for maintenance) or loss of functionality; (b) deliberate destruction (vandalism and armed conflicts); (c) accidental destructive events (such as the fire at Notre Dame Cathedral in Paris); (d) other hazards that are difficult to predict, such as natural catastrophes and earthquakes; and (e) threats linked to human activities, such as poor planning, management, or maintenance of these assets (uncontrolled tourism, corrective repairs, etc.) [4]. All these factors make the protection, management, research, dissemination, improvement, conservation, and safeguarding of architectural heritage at a global level a highly complex task [4].

Given these circumstances, international organizations and institutions (such as UNESCO and ICOMOS), together with national and regional administrations, have promoted international charters [5,6], norms, and laws that attempt to address this problem. Almost all of them, besides stressing the values and importance of cultural heritage for today’s society and the need to preserve this historical legacy of past generations, promote research and knowledge transfer from science, engineering, and other disciplines in order to adapt or create new methodologies and analysis techniques that improve current methods of intervention, conservation, and management of cultural heritage.

Among this range of techniques and methodologies that improve studies and research on cultural heritage, geomatics plays a key role in capturing, processing, analyzing, interpreting, modeling, and disseminating digital geospatial information [3,7,8,9,10,11]. These sciences and technologies are particularly useful in the cultural heritage sector, as they provide information on the current state of heritage assets and make it possible to highlight and quantify the changes that these assets may undergo in space and time [7,8,9,10,11,12,13,14].

Currently, documentation, conservation, restoration, and dissemination actions in the cultural heritage field make use of 3D recording strategies that allow digital replicas of assets to be created accurately and quickly. These 3D recording strategies provide important metric information on the geometric, structural, dimensional, and figurative properties of cultural heritage [3,7,8,9,10,11,12,13,14]. As a result, graphic representation resources have improved, with more detailed plans and the option of generating a 3D model from which longitudinal and transverse profiles, virtual tours, surface and volume calculations, and orthophotographs can be obtained, and the object under study can be monitored to check its deterioration [3,7,8,9,10,11,12,13,14]. These products can support in-depth analysis of the architectural, engineering, and artistic features of the objects. In this context, 3D recording strategies have become indispensable tools when carrying out studies on assets, conservation or restoration work, or their dissemination [12,13,14].

Nowadays, numerous studies apply these techniques to architecture of cultural and historical relevance. In most cases, these heritage assets are located in cities or towns and their surroundings [7,8,9,10,11,12,13,14], where around 55% of the world’s population is concentrated [15]. However, projects dealing with the management and safeguarding of cultural heritage located in remote rural areas, such as mountain areas or formerly inhabited places that are now abandoned, are rare. As a general rule, in the 1960s the population left these territories (where services were scarce) and moved to the cities looking for new opportunities for their families, taking advantage of the restructuring of large industries [16]. This led to the disappearance of many historic mountain villages whose economy was based on artisanal activities, such as timber exploitation or the extraction of raw materials from the subsoil (mining) [17]. At present, many of these villages and the remains of their craft and industrial activities are in a deplorable state of preservation (some have disappeared), are invaded by vegetation, and have been absorbed by wooded areas, which makes their 3D documentation very complicated.

In recent years, the geomatics sector has undergone a significant transformation that has improved 3D digitizing methods, opening up the possibility of researching and progressing in the documentation of these complex scenarios. Firstly, the improvement of static scanning systems and the appearance of new dynamic scanners, known as mobile mapping systems (MMSs) [10,12,13,14], have made it possible to speed up the reconstruction of complex scenarios with a simple walk. This is achieved by combining an inertial measurement unit (IMU) with a LiDAR scanning system and by processing the data with SLAM (simultaneous localization and mapping) techniques and visual odometry. MMSs are mostly based on ROS (Robot Operating System), which is widely used in robotic navigation and autonomous driving. These systems perform dead-reckoning position estimation without the need for a GNSS receiver and implement novel algorithms for registering point clouds and extracting maps [10,12,13,14]. In terms of data quality, these devices usually offer centimeter-level accuracy, in contrast with last-generation terrestrial laser scanners (TLSs), which reach millimeter or sub-centimeter accuracy [10,12,13,14]. Moreover, point cloud resolution depends on the acquisition rate, the distance to the object at any given time, and the number of passes. Although these devices are more suitable for indoor use, where their productivity and efficiency are higher than outdoors, they have been successfully used to reconstruct outdoor scenarios such as archaeological sites, churches, and civil engineering structures, among others [10,12,13,14].

Secondly, the rise of commercial off-the-shelf (COTS) drones, together with substantial improvements in their performance and the miniaturization of electronic components, has made it possible to install airborne LiDAR sensors (ALSs) on flying platforms with greater flight autonomy, precise in-flight positioning (thanks to GNSS, global navigation satellite systems, and INS, inertial navigation systems), and lower cost than classic alternatives (such as satellite images or photogrammetric flights).

In relation to the above, it may seem strange not to consider photogrammetric methods, which have revolutionized 3D documentation in cultural heritage [7,18,19,20]. Indeed, new developments in photogrammetry (SfM, structure from motion, computer vision, etc. [21]) have made it possible for almost anyone with little knowledge in the field to obtain point clouds or 3D models of simple objects or scenarios [3,22,23] using an image capturing device (cell phone, tablet, camera, or commercial drone with camera), a simple data collection protocol, and commercial or free software [21]. However, it is well established that, to date, these image-based techniques cannot provide information beyond what appears in the images [21]. Since we are dealing with cultural properties covered by vegetation and absorbed by wooded areas, these methods would document the biological growth that covers and alters the heritage element rather than the element itself.

This research aims to compare the results obtained with several active mass data capture systems, namely a TLS, a wearable MMS (WMMS), and a drone-mounted ALS, which allow cultural heritage to be documented even when it is covered by biological invasions [24]. It is therefore necessary to analyze in depth the possibilities offered by these systems for documenting this type of scenario, as well as their limitations, and to evaluate their advantages and disadvantages as a possible step toward strengthening the management of endangered cultural heritage. The remainder of the paper is organized as follows: Section 2 introduces the case study, Section 3 defines the materials and methods used to digitize it, Section 4 describes the experimental results and includes the discussion, and, finally, Section 5 draws some conclusions from the present work.

2. Case Study

2.1. Monte Pietraborga, Trana, Provincia di Torino, Piemonte, Italy

Monte Pietraborga is a small, low-altitude mountain system that is part of the Western Italian Alps (Central Cozie Alps). It is composed of several ridges and peaks (the highest being about 926 m). It is located at the head of the Sangone Valley, within the municipalities of Trana (mostly), Sangano, and Piossasco in the Metropolitan City of Torino in the Piedmont region, a few kilometers southwest of Turin (Figure 1). From the plain where the city of Turin is located, the skyline of Monte Pietraborga and its neighbor Monte San Giorgio (837 m at its highest peak) can be seen. Its advanced position with respect to the plain makes it an important visual reference point for the surrounding area.

The rocks that make up the mountain belong to the Massiccio Ultrabasico di Lanzo, a geological formation of ancient origin. The predominant rocks, peridotites, are very rich in magnesium, which also influences the nature of the soils formed by their weathering. Thanks to these substrates, the vegetation on the mountain is quite lush, notably chestnut, oak, and hazel, among other species.

On Monte Pietraborga, several historical villages are almost or completely uninhabited, most notably the village of Pratovigero, located on the northwest slope and reached from Trana along a poorly preserved dirt road. Other villages are Prese de Piossasco and Prese di Sangano, made up of small groups of houses located at different points on the southern slope of Monte Pietraborga. They are very difficult to access because of the poor condition of the roads, some of which are nowadays blocked by obstacles such as fallen trees. Historical documents confirm that some of these settlements have been inhabited since the 10th century.

Apart from the aforementioned settlements, there are other heritage elements of historical importance on Monte Pietraborga, such as: (i) the Cappella Madonna della Neve, built in 1700 (in a poor state of preservation); (ii) several fountains and springs; and (iii) an area with Celtic remains (dolmens and menhirs arranged in a circle, known as Sito dei Celti, dating back to between 4000 and 2800 BC).

Most of the people who lived in this mountainous environment were engaged in primary sector activities: (i) they kept domestic livestock and cultivated the fields to feed the animals (rye, fodder, etc.) and for their own consumption (wheat, potatoes, turnips); (ii) they gathered the fruits provided by the mountain flora, such as hazelnuts, walnuts, and chestnuts, as well as cultivated fruits such as pears, apples, and cherries; or (iii) they exploited the resources and raw materials of the area (wood, coal, serpentinite).

Since the beginning of the twentieth century, due to the absence of basic services, the inhabitants of these territories abandoned them and moved to the city of Turin in search of new opportunities for their families, taking advantage of the restructuring of large industries [17,25,26]. This exodus worsened after the end of the Second World War.

Today, most of the heritage assets of this territory are abandoned and in a poor state of conservation, mostly invaded by vegetation or absorbed by wooded areas.

Study Area Selection

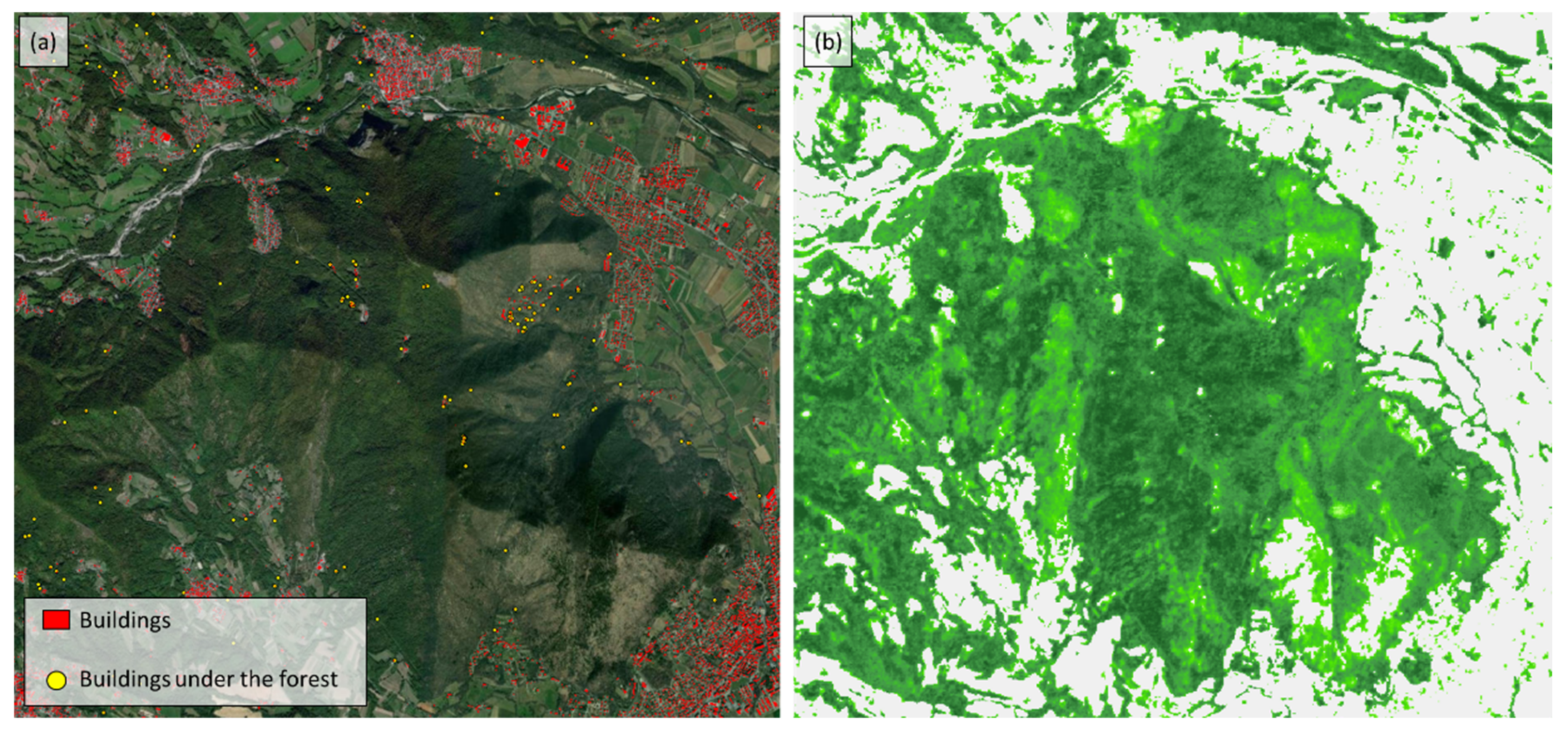

In order to select a suitable site for testing the different geomatic sensors, a study was carried out to locate all the buildings or construction elements covered by vegetation on Monte Pietraborga. The data used for this study are: (i) the vector layer of the buildings registered in the Cadastre of the Province of Turin (Figure 2a, elements in red); and (ii) the high-resolution raster layers of forest cover density provided by the COPERNICUS service of the European Union (https://land.copernicus.eu/, accessed on 22 July 2022) (Figure 2b). First, the centroids of the building polygons in the cadastral vector layer were computed. Subsequently, each centroid was assigned the value of the tree cover density raster at its location. Finally, only the points with a tree cover density greater than 70% were selected (points in yellow in Figure 2).
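The three-step selection described above (polygon centroids, raster sampling, threshold) can be sketched in plain Python. This is a minimal illustration under stated assumptions: the building footprints are held in a hypothetical dictionary of vertex lists, the tree cover density raster is a north-up grid described only by its top-left origin and square pixel size, and values are percentages. A GIS package would normally perform these steps; the function names here are illustrative, not the authors' tools.

```python
def polygon_centroid(coords):
    """Area-weighted centroid of a simple polygon [(x, y), ...] via the shoelace formula."""
    area2 = cx = cy = 0.0
    n = len(coords)
    for i in range(n):
        x0, y0 = coords[i]
        x1, y1 = coords[(i + 1) % n]
        cross = x0 * y1 - x1 * y0
        area2 += cross                 # twice the signed area
        cx += (x0 + x1) * cross
        cy += (y0 + y1) * cross
    return cx / (3.0 * area2), cy / (3.0 * area2)


def sample_raster(raster, origin_x, origin_y, pixel_size, x, y):
    """Value of the north-up raster cell containing (x, y); None if outside the grid.

    Assumes (origin_x, origin_y) is the top-left corner and square pixels.
    """
    col = int((x - origin_x) // pixel_size)
    row = int((origin_y - y) // pixel_size)
    if 0 <= row < len(raster) and 0 <= col < len(raster[0]):
        return raster[row][col]
    return None


def select_covered_buildings(buildings, raster, origin_x, origin_y, pixel_size,
                             threshold=70.0, nodata=None):
    """Return (id, cx, cy, density) for buildings whose centroid density exceeds threshold."""
    selected = []
    for bid, coords in buildings.items():
        cx, cy = polygon_centroid(coords)
        value = sample_raster(raster, origin_x, origin_y, pixel_size, cx, cy)
        if value is None or value == nodata:
            continue                   # outside the raster or flagged as no-data
        if value > threshold:
            selected.append((bid, cx, cy, value))
    return selected
```

For example, with a 2 × 2 raster of 10 m pixels whose top-left corner is at (0, 20), a 10 m square footprint centered at (5, 5) falls in the lower-left cell and is kept only if that cell exceeds 70%.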

Among those points, the site that best fits the scope of our research was selected after a field survey.

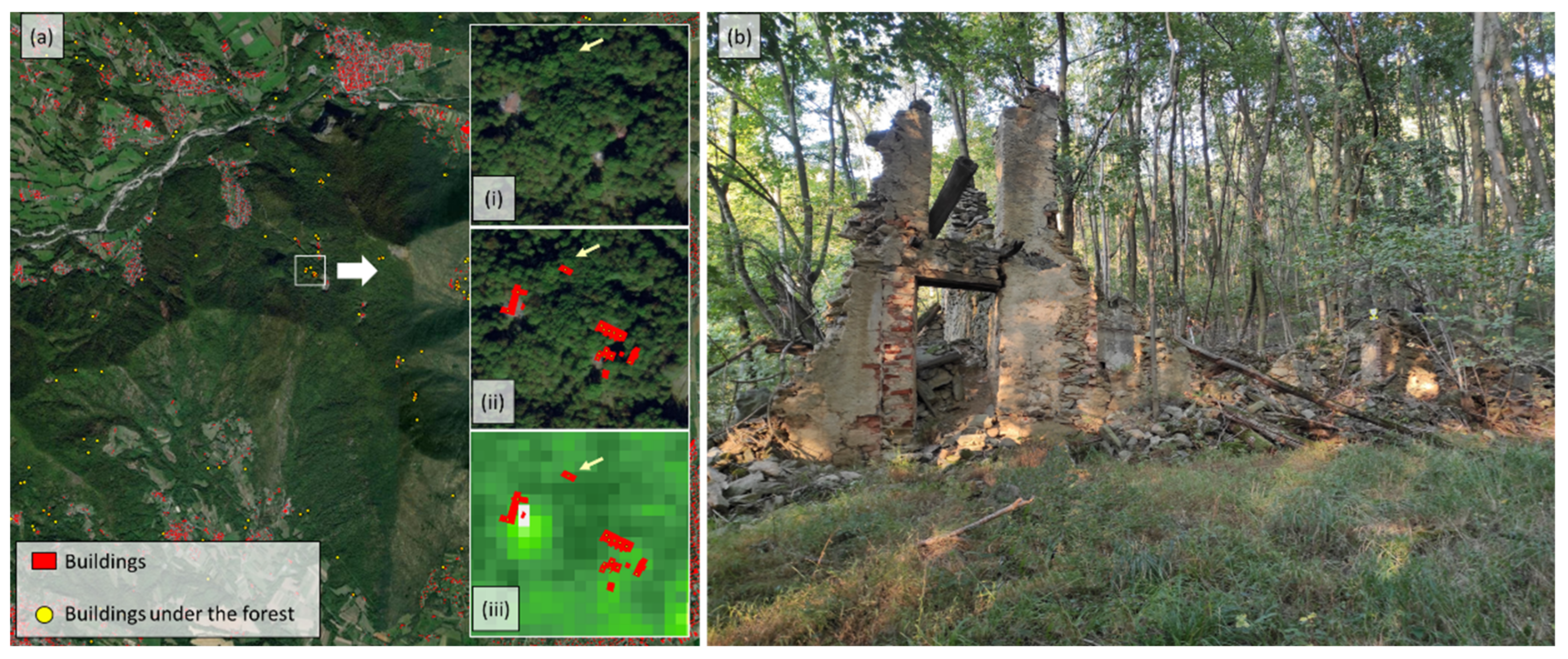

Figure 3 shows the selected heritage element, a historical building displaying the construction typical of the Monte Pietraborga area. It is located near the village of Pratovigero. At present, this building is abandoned and in ruins. As can be seen, the forest canopy covers it entirely, and part of it is also overgrown by the surrounding vegetation.

4. Results and Discussion

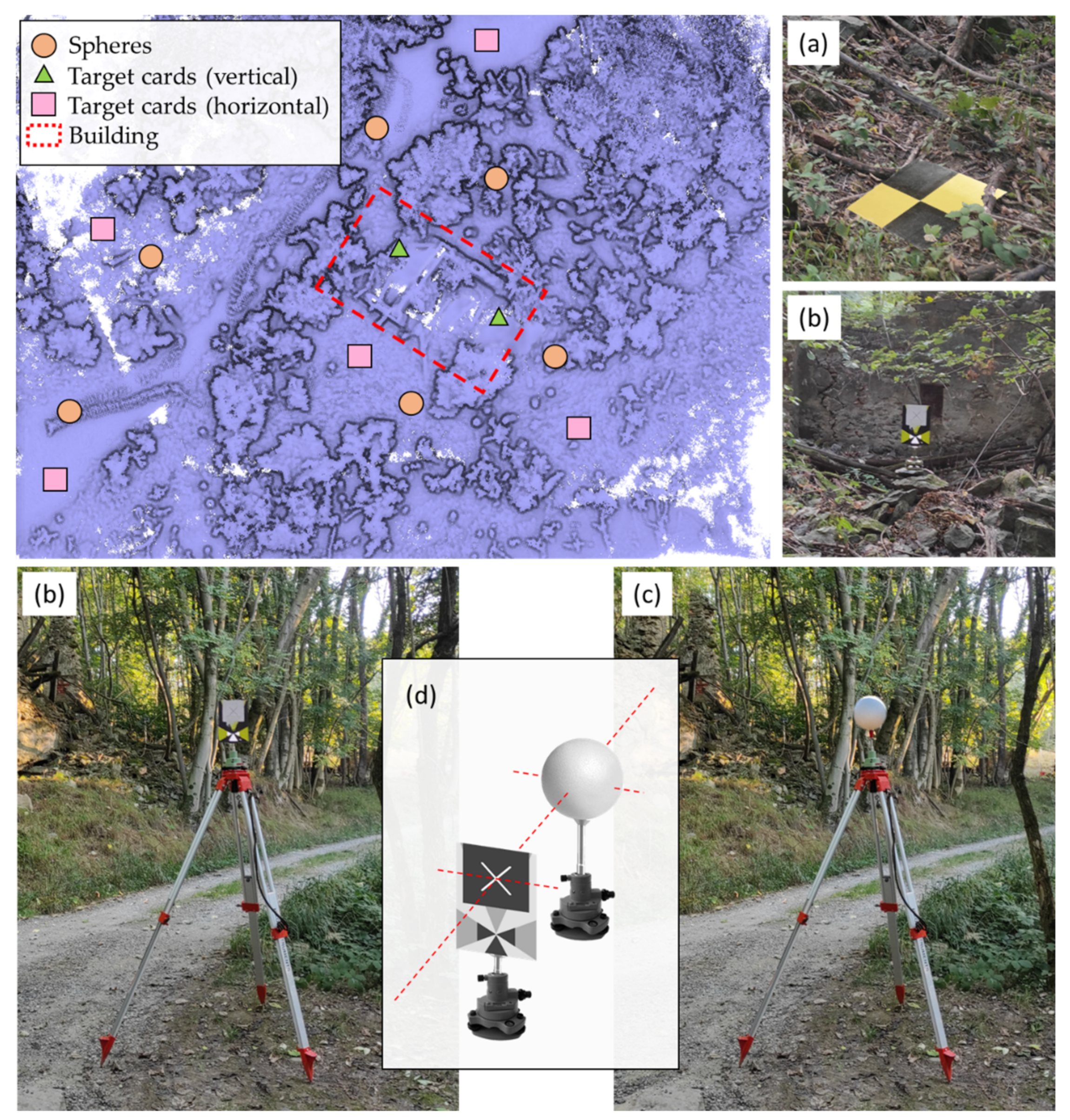

Prior to data collection with the different LiDAR sensors, a network of registration spheres and target cards (horizontal and vertical) was placed throughout the scene in order to align the different point clouds in a common global coordinate system (Figure 9). Five markers were placed horizontally on the ground in areas clear of trees (Figure 9a), and their coordinates were obtained with a GNSS network real-time kinematic (NRTK) survey using a multi-frequency, multi-constellation Leica GS18 geodetic receiver, which received differential corrections from the network of continuously operating reference stations (CORS) provided by the Piedmont region (SPIN reference). The marker location accuracy was 1.2 cm in planimetry and 2.5 cm in altimetry. Starting from these points, the global coordinates of the remaining vertical target cards were obtained by a total station survey (Leica MS50, Wetzlar, Germany) using the topographic radiation method (Figure 9b), with millimeter precision.
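The radiation (polar) method mentioned above computes each target from a known station using the observed azimuth, zenith angle, and slope distance. The sketch below is a simplified textbook version, assuming grid azimuths measured clockwise from north, short sight lines (refraction and Earth curvature ignored), and hypothetical instrument and target heights; it is not the processing performed by the Leica MS50 firmware.

```python
import math


def azimuth_deg(e_from, n_from, e_to, n_to):
    """Grid azimuth (degrees, clockwise from north) between two known points,
    e.g. station to a backsight marker used to orient the total station."""
    return math.degrees(math.atan2(e_to - e_from, n_to - n_from)) % 360.0


def radiate(station_e, station_n, station_h, azimuth, zenith, slope_dist,
            instrument_h=0.0, target_h=0.0):
    """Coordinates (E, N, H) of a target observed from a known station.

    azimuth and zenith are in degrees; slope_dist in meters.
    Refraction and Earth curvature are neglected (short distances assumed).
    """
    az = math.radians(azimuth)
    z = math.radians(zenith)
    horizontal = slope_dist * math.sin(z)
    e = station_e + horizontal * math.sin(az)
    n = station_n + horizontal * math.cos(az)
    h = station_h + instrument_h + slope_dist * math.cos(z) - target_h
    return e, n, h
```

In a real survey the azimuth to each target would be obtained by adding the measured horizontal angle to the backsight azimuth returned by `azimuth_deg`.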

All these control points were used for three purposes: (i) as ground control points, to check whether the global reference system of the point cloud obtained by the airborne LiDAR sensor on the RTK drone coincides with that of the ground data acquisition; (ii) as control points, to compare the TLS data with the MMS data; and (iii) to align the different TLS scans with the classical point-based co-registration procedure.

Regarding point (i), the estimated control points (markers, vertical targets, and spheres) make it possible to check whether the independent geo-referencing procedure of the RTK drone (cm-level accuracy) is consistent with the GNSS survey on the ground. The RTK positioning performed directly in flight by the DJI system is mandatory when no ground markers are visible from the drone's point of view due to the dense presence of trees and vegetation. This is especially relevant in this work, since the building is located in a wooded area where it is difficult to position ground control points.

Regarding the vertical targets, two were fixed to the building, while the others were placed on surveying tripods, on which the spheres were later mounted. The central point of each vertical target coincides with the center of the sphere (with a precision of ±1 mm) (Figure 9d). These devices were calibrated in the surveying laboratory of the Department of Environmental Engineering, Territory and Infrastructure (DIATI) of the Politecnico di Torino. In total, six registration spheres with a diameter of 200 mm were placed throughout the scene, guaranteeing their visibility from different positions (Figure 9c).

4.1. TLS, MMS, and ALS Survey

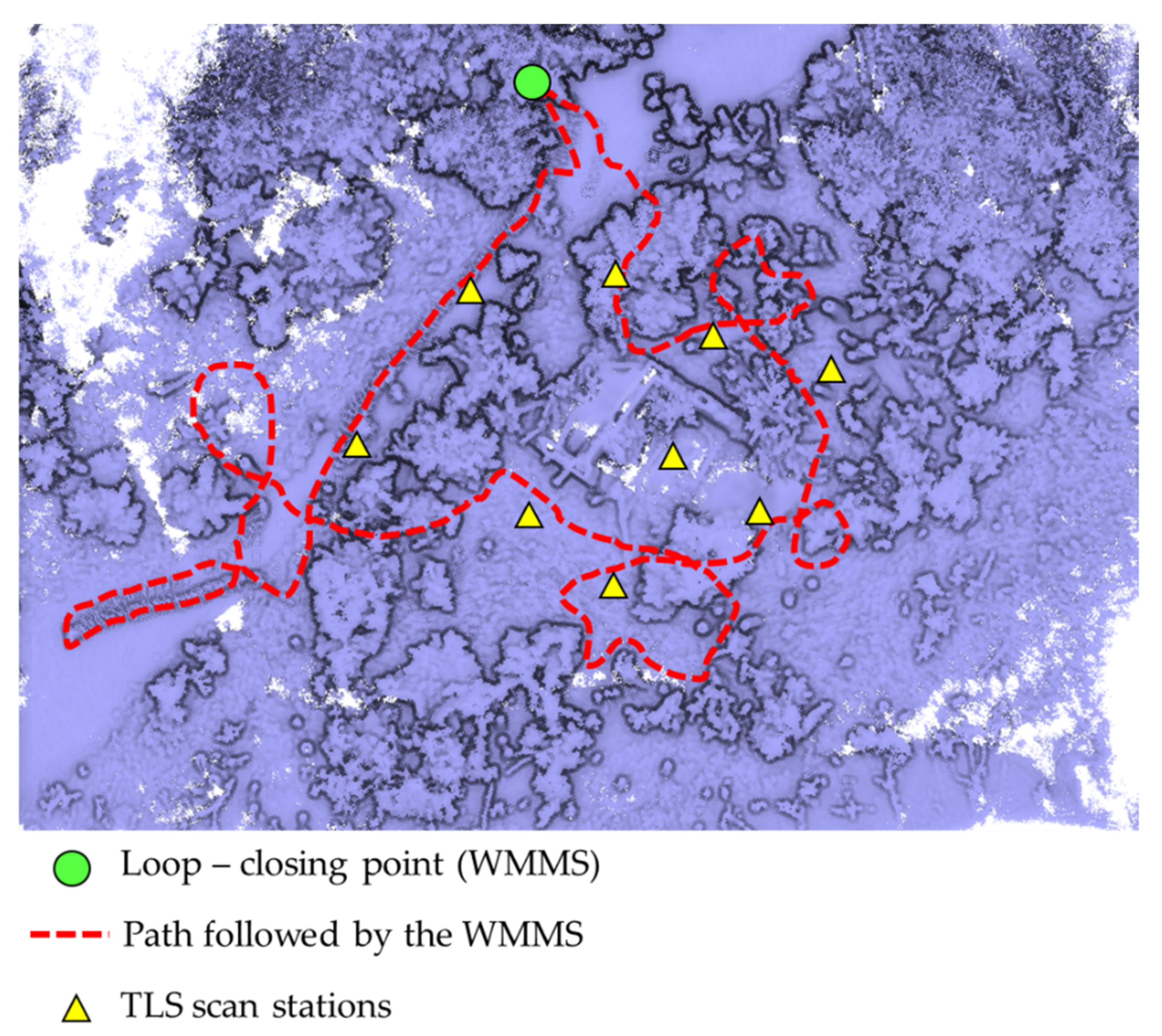

In order to digitize the entire building and its surroundings, nine scans were made around the object, as shown in Figure 10 (yellow triangles). This high number of scans was required because the object is difficult to observe directly in a wooded area. The scan stations were aligned using the target-based registration method, which aligns point clouds through geometric features of artificial targets, such as planar targets or registration spheres. In the present case study, the centroid of each registration sphere was used as a control point for the alignment between point clouds, and each centroid was extracted with the RANSAC shape detector algorithm [37]. As a result, all the point clouds were aligned with an accuracy of ±3 mm. The resulting point cloud consists of 47,368,018 points. The field acquisition of the nine scans took about 100 min (around 8 min per scan), and merging the different point clouds in post-processing took approximately 150 min.
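The sphere-center extraction step can be illustrated with a basic RANSAC loop around an algebraic least-squares sphere fit. This is a minimal NumPy sketch, not the RANSAC shape detector of [37]: it assumes the points of one sphere have already been cropped from the scan, that the nominal radius is known (0.1 m for the 200 mm spheres), and that the inlier tolerance, radius tolerance, and iteration count shown are illustrative values.

```python
import numpy as np


def fit_sphere(pts):
    """Algebraic least-squares sphere fit; returns (center, radius).

    Solves |p|^2 = 2 c.p + d with d = r^2 - |c|^2, after centering the points
    for numerical stability (important with large projected coordinates).
    """
    mu = pts.mean(axis=0)
    q = pts - mu
    A = np.column_stack([2.0 * q, np.ones(len(q))])
    f = (q ** 2).sum(axis=1)
    sol, *_ = np.linalg.lstsq(A, f, rcond=None)
    c = sol[:3]
    r2 = sol[3] + c @ c
    radius = float(np.sqrt(r2)) if r2 > 0 else float("nan")
    return c + mu, radius


def ransac_sphere(pts, expected_radius, tol=0.003, radius_tol=0.01, n_iter=300, seed=0):
    """RANSAC sphere detection: 4-point hypotheses, consensus by radial residual."""
    pts = np.asarray(pts, dtype=float)
    rng = np.random.default_rng(seed)
    best = None
    for _ in range(n_iter):
        sample = pts[rng.choice(len(pts), size=4, replace=False)]
        center, radius = fit_sphere(sample)
        # reject degenerate samples and radii far from the known target size
        if not np.isfinite(radius) or abs(radius - expected_radius) > radius_tol:
            continue
        inliers = np.abs(np.linalg.norm(pts - center, axis=1) - radius) < tol
        if best is None or inliers.sum() > best.sum():
            best = inliers
    if best is None:
        raise ValueError("no sphere hypothesis matched the expected radius")
    center, radius = fit_sphere(pts[best])   # refine on all inliers
    return center, radius, best
```

Constraining the hypothesis radius to the known target size is a design choice that helps reject spurious fits on nearby vegetation.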

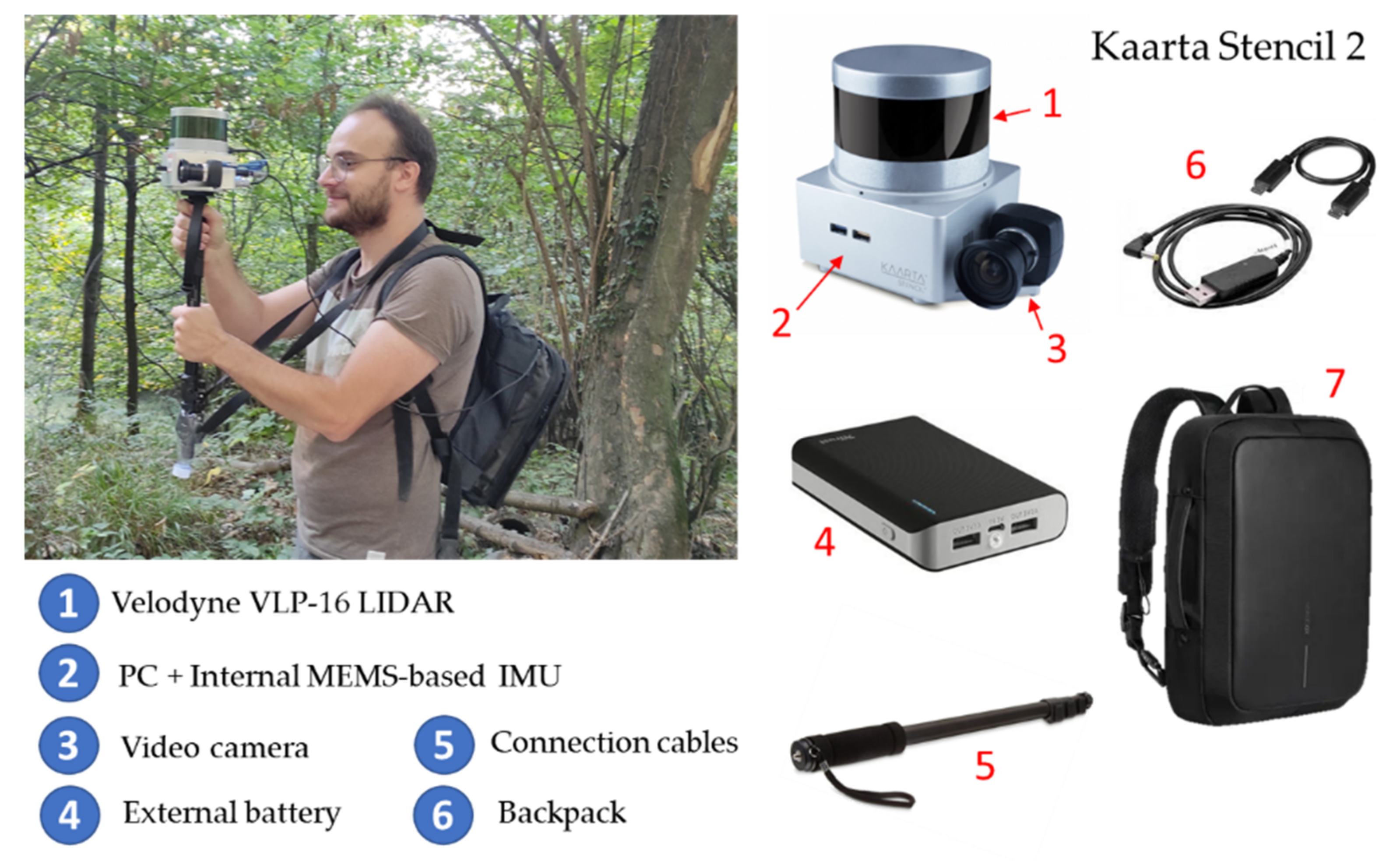

In addition, data were acquired with the Kaarta Stencil 2 mobile mapping system. Prior to the MMS acquisition, an on-site inspection was carried out to design the most appropriate data acquisition protocol, following the suggestions proposed by Di Filippo et al. [13], of which the following stand out: (i) ensuring accessibility to all areas, (ii) removing obstacles along the way, and (iii) planning a closed loop in order to compensate for error accumulation. Accordingly, a closed-loop path was followed during data acquisition (Figure 10). To ensure a homogeneous point cloud density, a constant walking speed was maintained, paying special attention to transition areas.
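Why a closed loop helps can be shown with a deliberately simplified example: when the path returns to its starting point, the misclosure between the estimated start and end positions measures the accumulated drift and can be redistributed along the trajectory. The sketch below uses a linear distribution proportional to traveled distance (the compass rule used in traverse adjustment). This is an assumption made for illustration; SLAM systems such as the Kaarta Stencil use their own loop closure optimization, which this does not reproduce.

```python
import numpy as np


def distribute_misclosure(traj):
    """Correct a closed-loop trajectory by spreading the start/end misclosure
    in proportion to the cumulative distance traveled (compass rule).

    traj: (N, d) array of estimated positions; the true path ends where it started.
    """
    traj = np.asarray(traj, dtype=float)
    step_lengths = np.linalg.norm(np.diff(traj, axis=0), axis=1)
    cumulative = np.concatenate([[0.0], np.cumsum(step_lengths)])
    misclosure = traj[-1] - traj[0]
    weights = cumulative / cumulative[-1]   # 0 at the start, 1 at the end
    return traj - weights[:, None] * misclosure
```

For example, a square walk of 40 unit steps with a constant drift of (0.01, 0.005) per step ends 0.4 m and 0.2 m away from its start; after correction the first and last positions coincide again.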

Under these conditions, a single loop was sufficient to digitize the building and its surroundings, requiring a total of 12 min.

Data acquisition with the Kaarta Stencil 2 first required initializing the onboard PC. Along with this power-up, the IMU, which establishes the reference coordinate system, the tracking camera, and the LiDAR sensor were started. At the end of each acquisition with the Kaarta Stencil 2, information about the configuration settings, the 3D point cloud characteristics, and the estimated trajectory is stored in a folder created automatically by the WMMS processor for every survey session. Subsequently, the field data were precisely post-processed in the laboratory, which took 40 min. The resulting point cloud consists of 63,256,457 points.

The UAS LiDAR-based survey was performed with a DJI Matrice 300 RTK drone piloted in manual mode to avoid collisions in such a harsh environment. Its real-time kinematic capability allows the drone to connect to any RTK server via the NTRIP protocol and therefore to apply differential corrections to the GNSS positioning in real time. In a highly vegetated environment, where GCPs are not visible from the UAS, this feature enables direct geo-referencing with a high level of accuracy (a few centimeters). This is the case in our study, where all the acquired data and derived products were geo-referenced directly, without using the GCPs under the canopy.

These data were acquired with the DJI Zenmuse L1, a commercial portable multi-sensor platform composed of a Livox LiDAR module, a CMOS RGB imaging sensor, and an IMU. Since the L1 sensor supports multiple-return LiDAR acquisition, it was set to record three returns (the maximum) at a scanning frequency of 240,000 pts/s to test its ability to penetrate vegetation. The raw data consist of 5473 × 3648 RGB JPEG images and LiDAR, inertial, and GNSS positioning data stored in a proprietary format. The raw LiDAR data are converted to the standard point cloud format (.las) with DJI Terra software, which also processes the RTK and IMU data together with the images to colorize the LiDAR point cloud. The resulting point cloud consists of 8,768,243 points with associated RGB and intensity channels, as well as scan angle, acquisition time, and return number. The UAS flight survey required about 15 min and post-processing approximately 60 min.

Figure 11 shows the resulting point cloud in RGB visualization and classified by signal return, which make evident the difficulty of penetrating the vegetation in such a challenging scenario, where the building lies beneath a dense forest and is covered by foliage. For this reason, the GCPs and markers used in the previous analysis were not used, and direct geo-referencing of the data was performed.

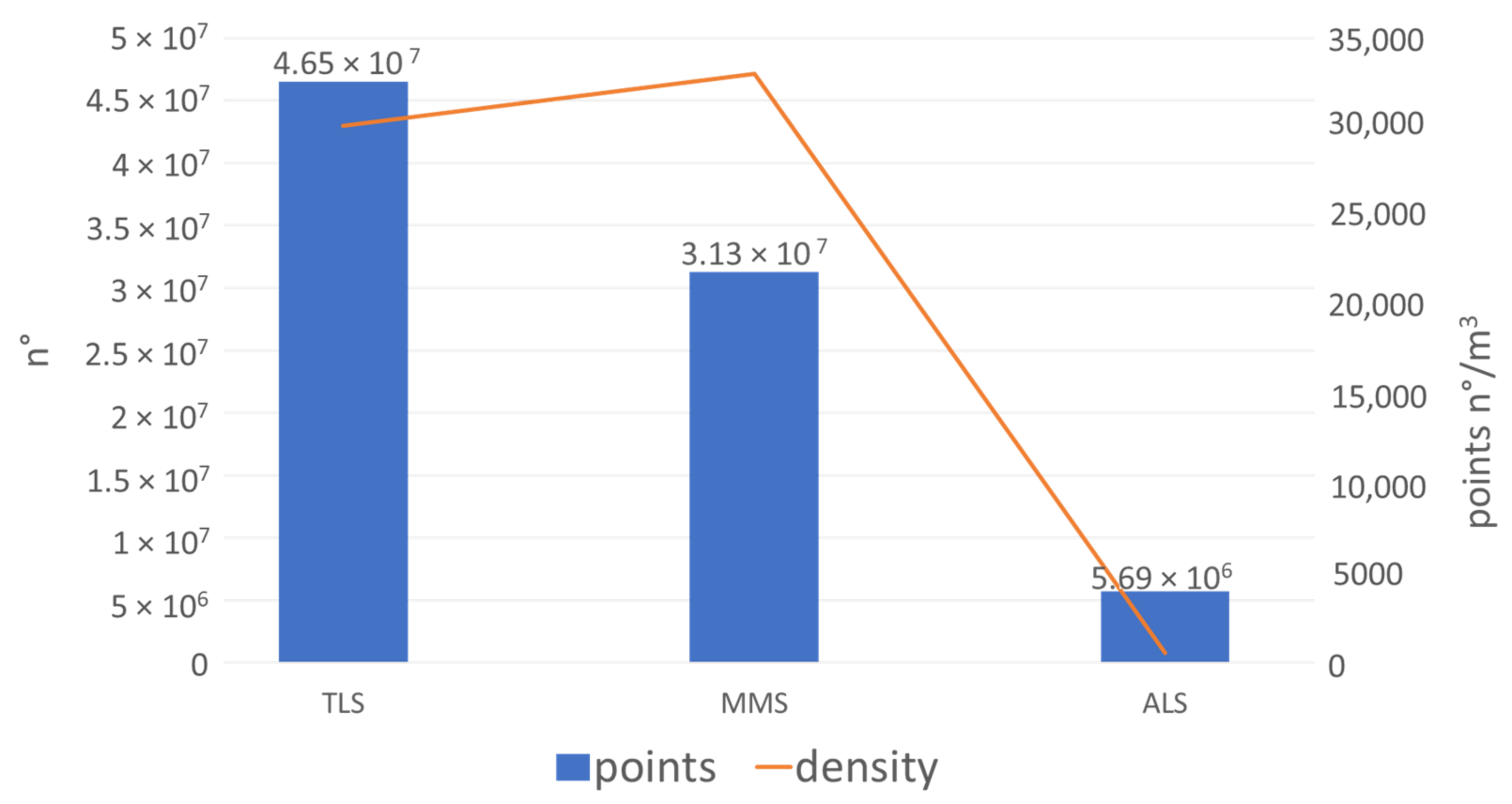

Analyzing the raw data acquired by each sensor, the Faro Focus TLS provides the highest number of points, as expected. The Faro LiDAR sensor acquires 976,000 pts/s with an angular resolution of 0.009° in each direction, the Velodyne VLP-16 integrated into the Kaarta Stencil acquires 300,000 pts/s with an angular resolution of 0.1° in azimuth and 0.4° in zenith, and, finally, the DJI L1 sensor acquires 240,000 pts/s. These characteristics are reflected in the number of points of each dataset, as described in Figure 12. Regarding density, computed as the number of neighbors in a volume of 1 m³, the Kaarta Stencil dataset is the densest. This is due to the acquisition methodology: while the TLS point cloud is obtained by co-registering a certain number of scans acquired from fixed positions, the MMS point cloud is the sum of the continuous acquisition performed during the movement. This means that data density can be increased simply by observing the same area more times along the walking path.
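A volume density of this kind can be sketched as the number of neighbors inside a search sphere divided by the sphere's volume; choosing the radius r = (3/(4π))^(1/3) ≈ 0.62 m makes the sphere volume exactly 1 m³, so the count is directly in points per m³. This is an assumption about the metric, since the paper's Equation (1) is not reproduced in this section. The same neighbor count with a 0.5 m radius corresponds to the NoN metric used later for the L1 data. Excluding the query point itself is also an assumption (some tools include it), and the brute-force search is only suitable for small cropped areas; a k-d tree would be used for full clouds.

```python
import numpy as np

# Radius of a sphere whose volume is exactly 1 m^3: r = (3 / (4*pi))^(1/3), about 0.62 m.
R_UNIT_VOLUME = (3.0 / (4.0 * np.pi)) ** (1.0 / 3.0)


def neighbor_counts(points, radius, chunk=1024):
    """Number of neighbors within `radius` of each point, excluding the point itself.

    Brute force in chunks (O(N^2) time); meant for small test areas only.
    """
    pts = np.asarray(points, dtype=float)
    r2 = radius * radius
    counts = np.empty(len(pts), dtype=int)
    for start in range(0, len(pts), chunk):
        block = pts[start:start + chunk]
        d2 = ((block[:, None, :] - pts[None, :, :]) ** 2).sum(axis=2)
        counts[start:start + chunk] = (d2 <= r2).sum(axis=1) - 1
    return counts


def volume_density(points, radius=R_UNIT_VOLUME):
    """Neighbors per cubic meter: neighbor count divided by the search-sphere volume."""
    return neighbor_counts(points, radius) / (4.0 / 3.0 * np.pi * radius ** 3)
```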

4.2. Data Comparison Analysis

4.2.1. TLS vs. MMS

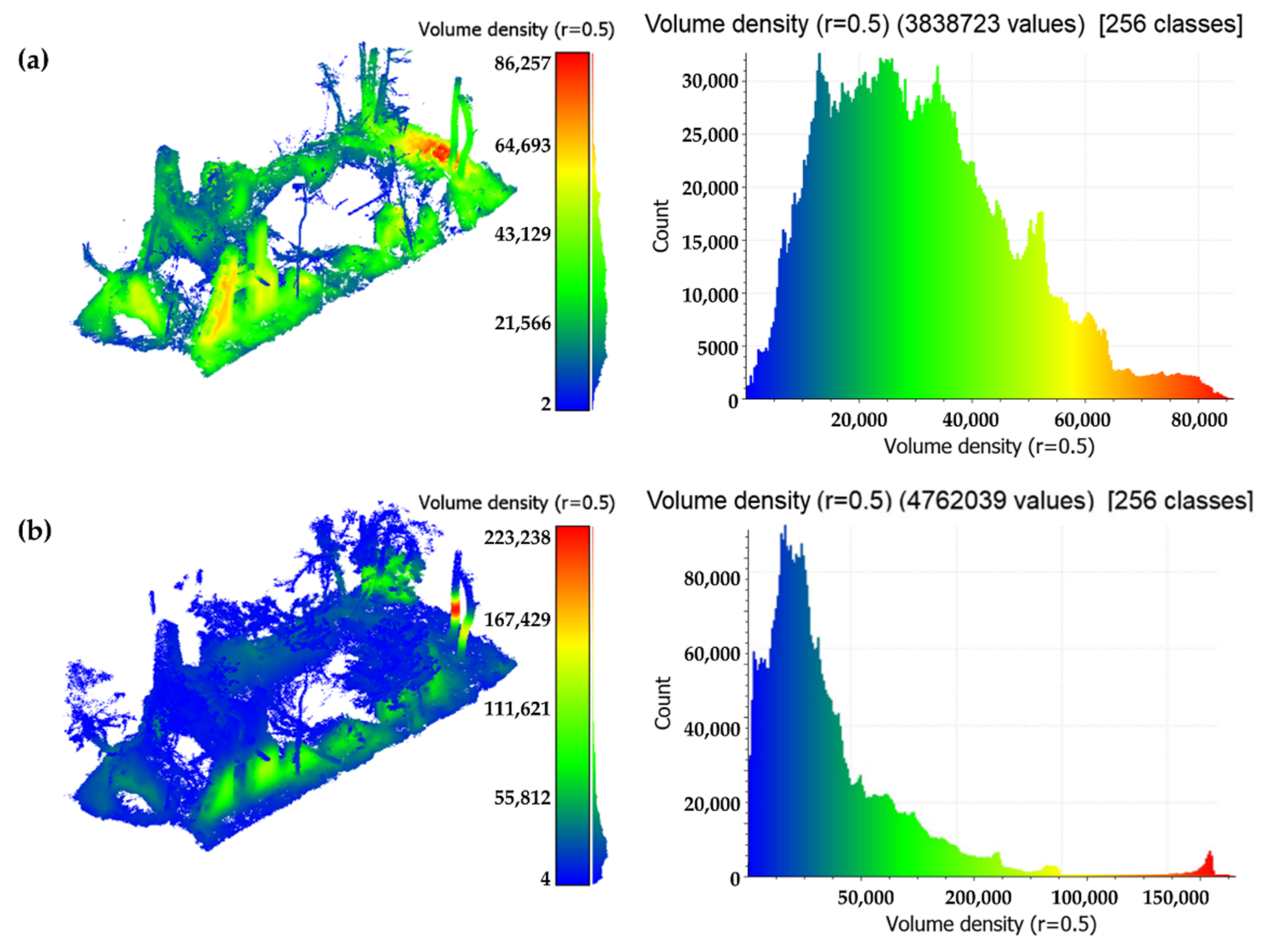

The data comparison analysis was performed on a selected portion of the LiDAR survey, namely the building covered by vegetation. The first analysis compared the volume density of the TLS cloud with that of the MMS cloud to investigate the performance of the two systems in the area of interest. The density, expressed in pts/m³, was computed using Equation (1), and the results are reported in Table 5. The density distributions reveal the difference between the two field acquisition methods (Figure 13). As expected, the TLS density values follow a long-tailed distribution, with denser data in the overlapping areas and sparse data where the object is observed from a single scan position. The MMS density values, on the other hand, follow a Gaussian mixture distribution, with areas of high, medium, and low density. Moreover, the mean density value is greater in the MMS cloud (Table 5). This is typical of a continuous LiDAR acquisition performed by a walking operator, whose speed cannot be kept perfectly constant and therefore produces variations in the density and number of acquired points.

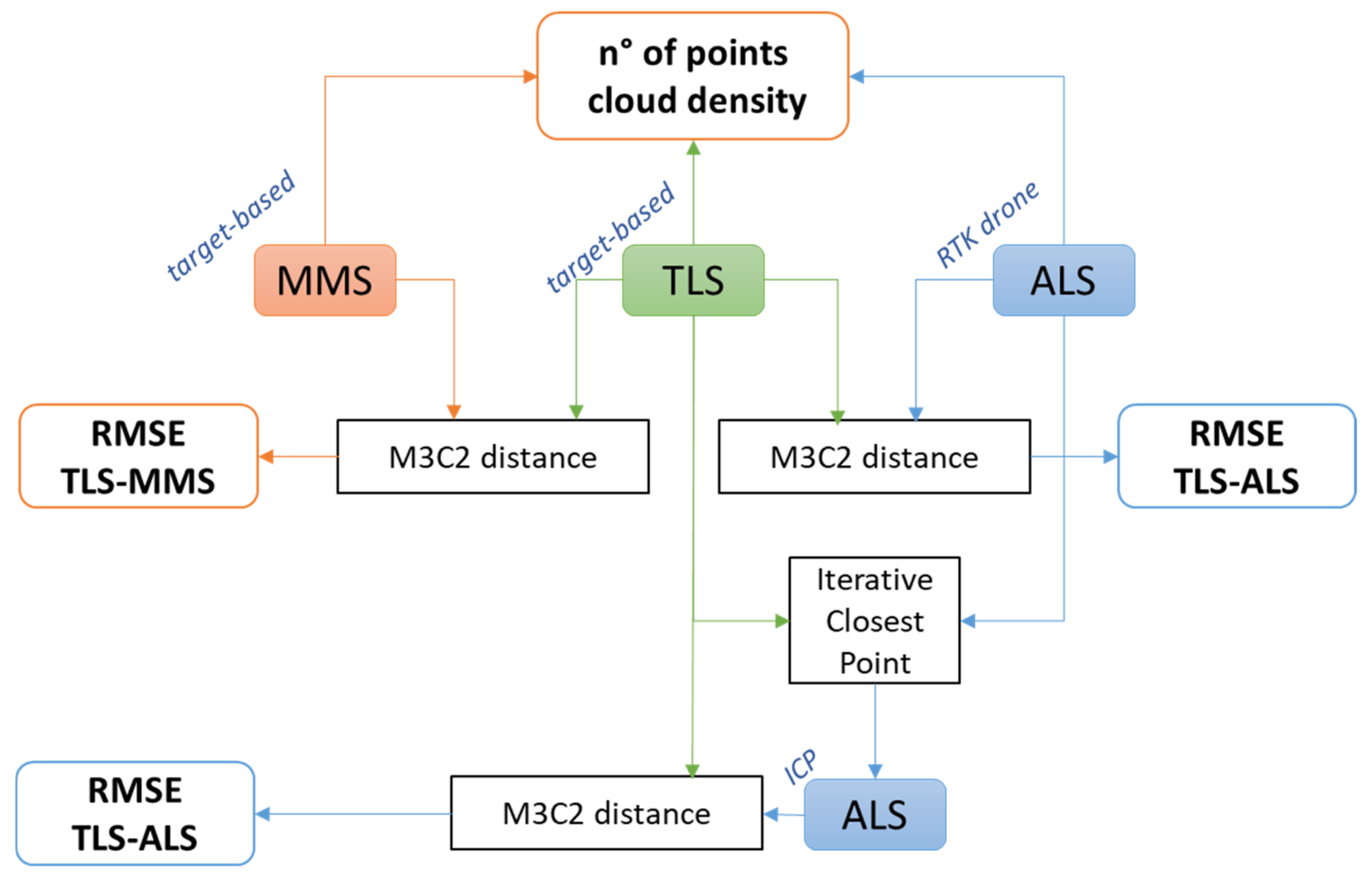

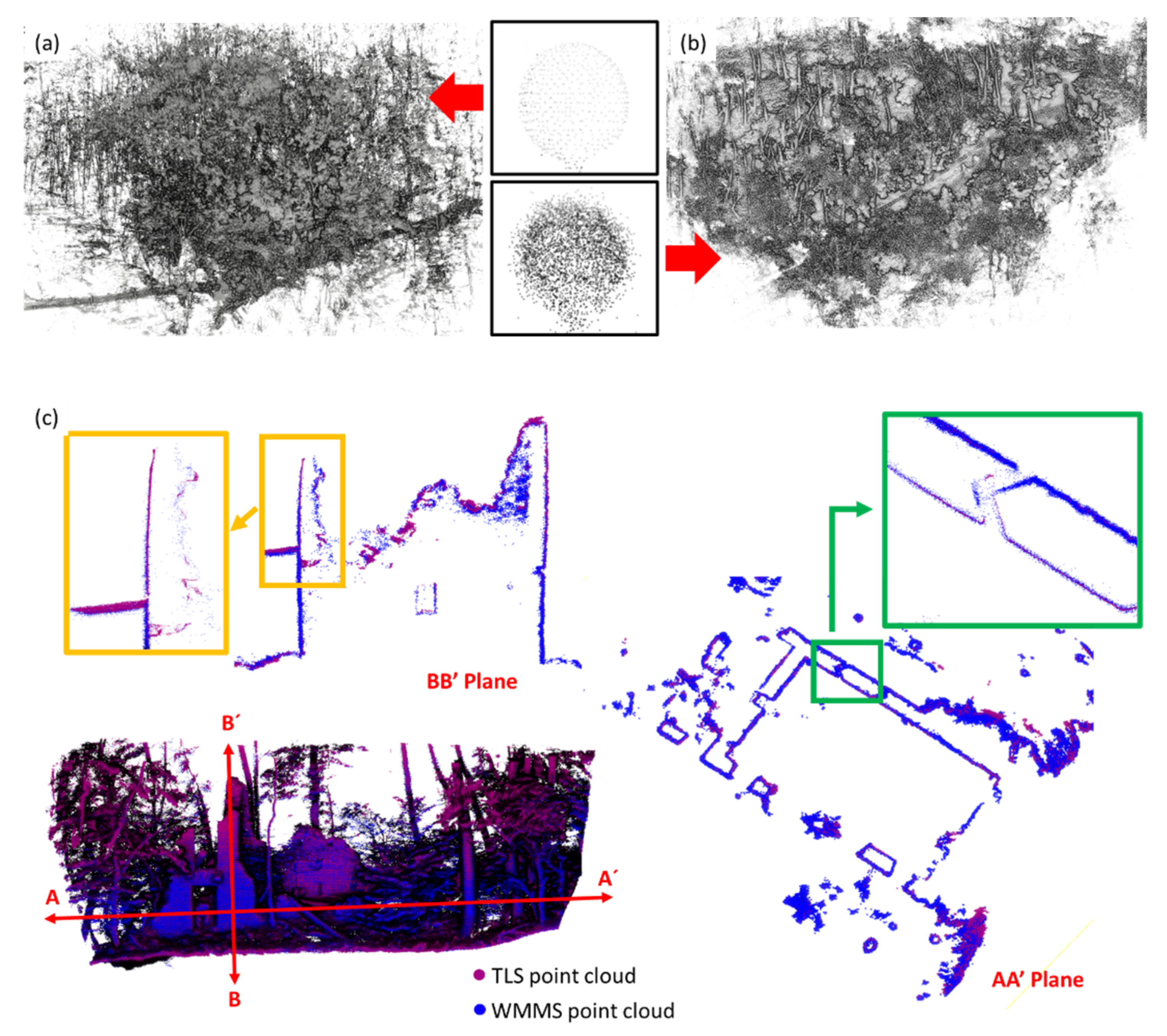

In order to align the point cloud obtained by the MMS with the TLS point clouds, another target-based registration was carried out using the spheres (Figure 9). The root mean square error (RMSE) of this registration was 0.01 m. As stated before, the registration spheres were mounted on surveying tripods with special platforms that allow the centroid of each sphere to be geo-referenced. Thanks to this, the resulting point cloud could be geo-referenced by means of a six-parameter Helmert transformation (three translations and three rotations), placing both LiDAR models in the same coordinate system.
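A six-parameter (rigid, no scale) transformation like the one described above can be estimated from corresponding sphere centers with the SVD-based Kabsch solution, and the registration RMSE follows from the residuals. This is a generic least-squares sketch, assuming matched center coordinates in both systems; it is not necessarily the solver implemented in the software used by the authors, and the RMSE here is defined as the root mean square of the 3D residual lengths.

```python
import numpy as np


def rigid_transform(src, dst):
    """Least-squares rotation R and translation t such that dst ~= src @ R.T + t.

    src, dst: (N, 3) arrays of corresponding points (N >= 3, not collinear).
    Three rotations + three translations, no scale (Kabsch / SVD solution).
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    cs, cd = src.mean(axis=0), dst.mean(axis=0)
    H = (src - cs).T @ (dst - cd)                 # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    # guard against a reflection (det = -1) in degenerate configurations
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    t = cd - R @ cs
    return R, t


def registration_rmse(src, dst, R, t):
    """RMS of the 3D residual lengths after applying (R, t) to src."""
    residuals = np.asarray(dst, float) - (np.asarray(src, float) @ R.T + t)
    return float(np.sqrt((residuals ** 2).sum(axis=1).mean()))
```

With six spheres there are 18 coordinate observations for 6 unknowns, so the RMSE also provides a redundancy check on the target centers.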

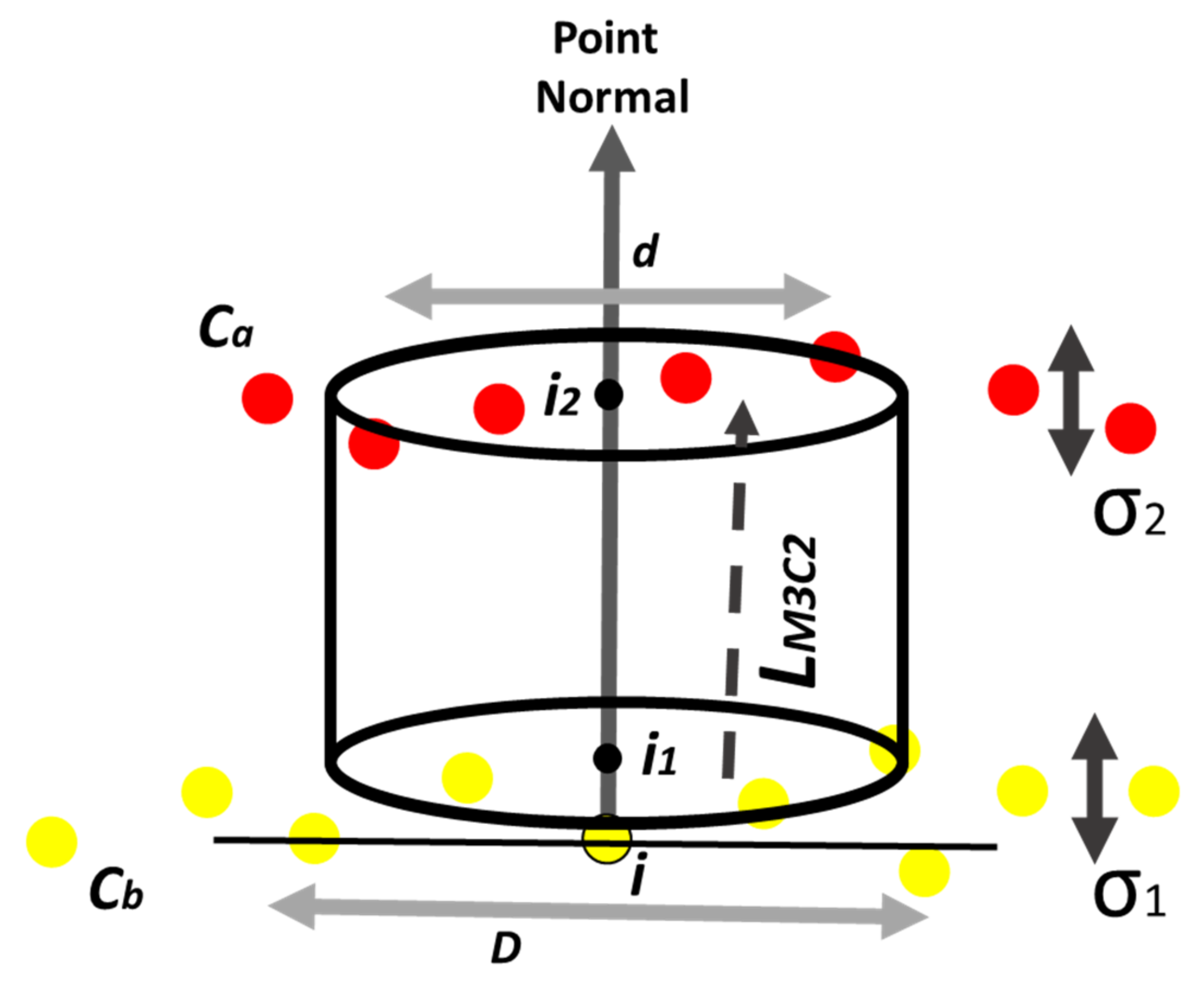

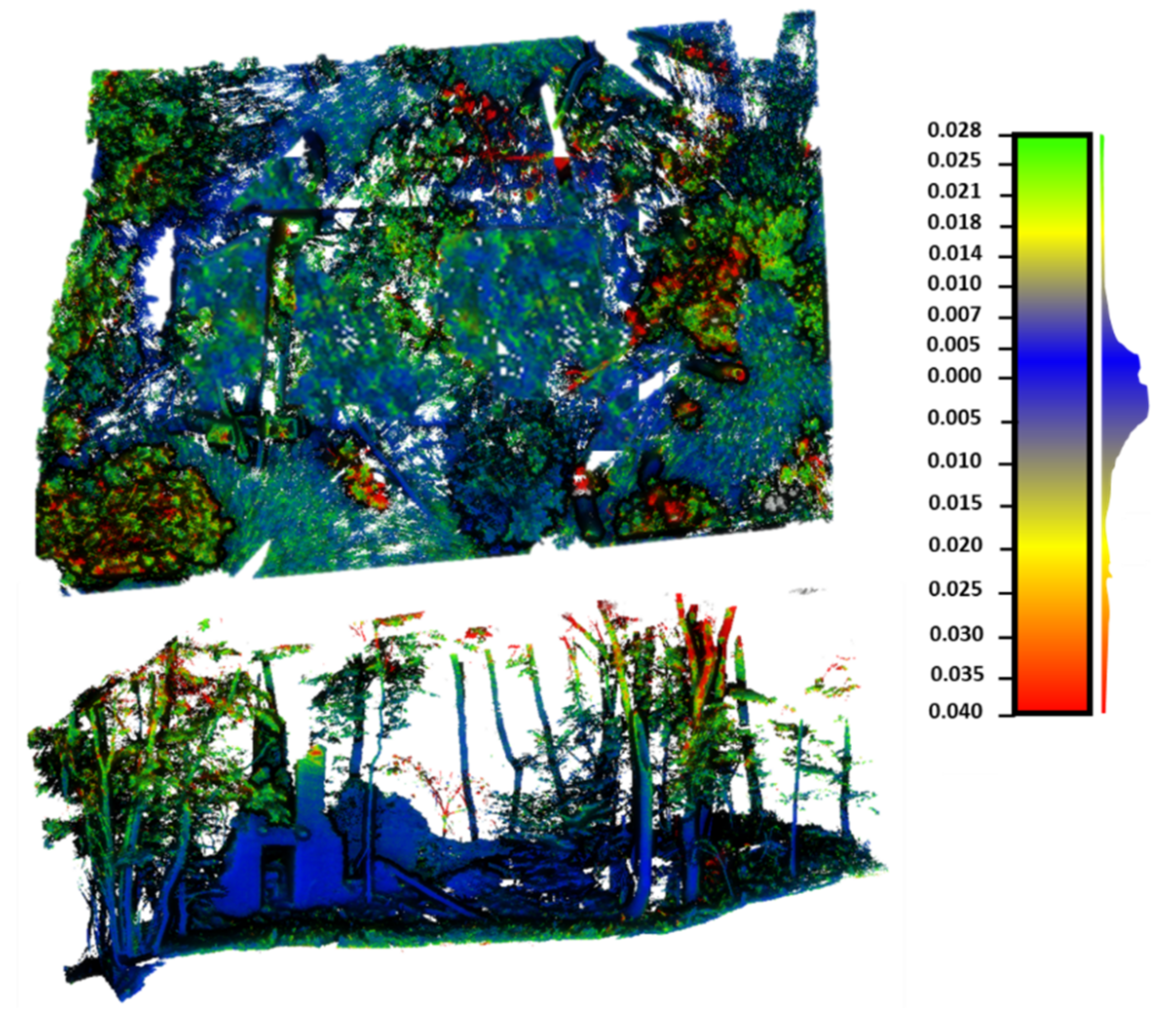

A cloud-to-cloud comparison was carried out to evaluate in more depth the potential of the MMS solution for mapping these spaces. For this purpose, the multiscale model-to-model cloud comparison (M3C2) algorithm was used [29]. This approach estimates the discrepancies between the MMS and TLS point clouds (Figure 14) and is implemented in the open-source software CloudCompare v2.10 [27]. To obtain reliable results, both point clouds were segmented by removing the non-common areas. The comparison of the two point clouds yielded an RMSE of 0.01 m (Figure 15), in line with the RMSE obtained during the alignment phase.
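The core idea of M3C2 can be illustrated with a simplified, single-scale sketch: at each core point, a local normal is estimated from the reference cloud by principal component analysis, a cylinder is projected along that normal, and the distance is the difference between the mean positions of the two clouds along the normal inside the cylinder. This is an approximation for explanation only, not the CloudCompare plugin: it omits multiscale normal selection and the confidence interval (level of detection), orients normals toward a hypothetical `orient` direction, and uses brute-force neighbor searches.

```python
import numpy as np


def m3c2_simplified(cloud1, cloud2, core, normal_scale, proj_scale, max_depth,
                    orient=(0.0, 0.0, 1.0)):
    """Signed distance from cloud1 to cloud2 along local normals at each core point.

    normal_scale: diameter of the neighborhood used for the normal (PCA).
    proj_scale: diameter of the projection cylinder; max_depth: cylinder half-length.
    Returns NaN where a normal or a cylinder average cannot be computed.
    """
    cloud1 = np.asarray(cloud1, dtype=float)
    cloud2 = np.asarray(cloud2, dtype=float)
    orient = np.asarray(orient, dtype=float)
    core = np.asarray(core, dtype=float)
    out = np.full(len(core), np.nan)
    for k, p in enumerate(core):
        nb = cloud1[np.linalg.norm(cloud1 - p, axis=1) <= normal_scale / 2.0]
        if len(nb) < 3:
            continue
        # normal = eigenvector of the smallest eigenvalue of the local covariance
        _, vecs = np.linalg.eigh(np.cov(nb.T))
        n = vecs[:, 0]
        if n @ orient < 0:
            n = -n
        means = []
        for cloud in (cloud1, cloud2):
            rel = cloud - p
            axial = rel @ n                                   # position along the normal
            radial = np.linalg.norm(rel - np.outer(axial, n), axis=1)
            inside = (radial <= proj_scale / 2.0) & (np.abs(axial) <= max_depth)
            means.append(axial[inside].mean() if inside.any() else np.nan)
        out[k] = means[1] - means[0]
    return out
```

Averaging positions inside a cylinder, rather than taking a single nearest neighbor, is what makes the measure less sensitive to point spacing and roughness in both clouds.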

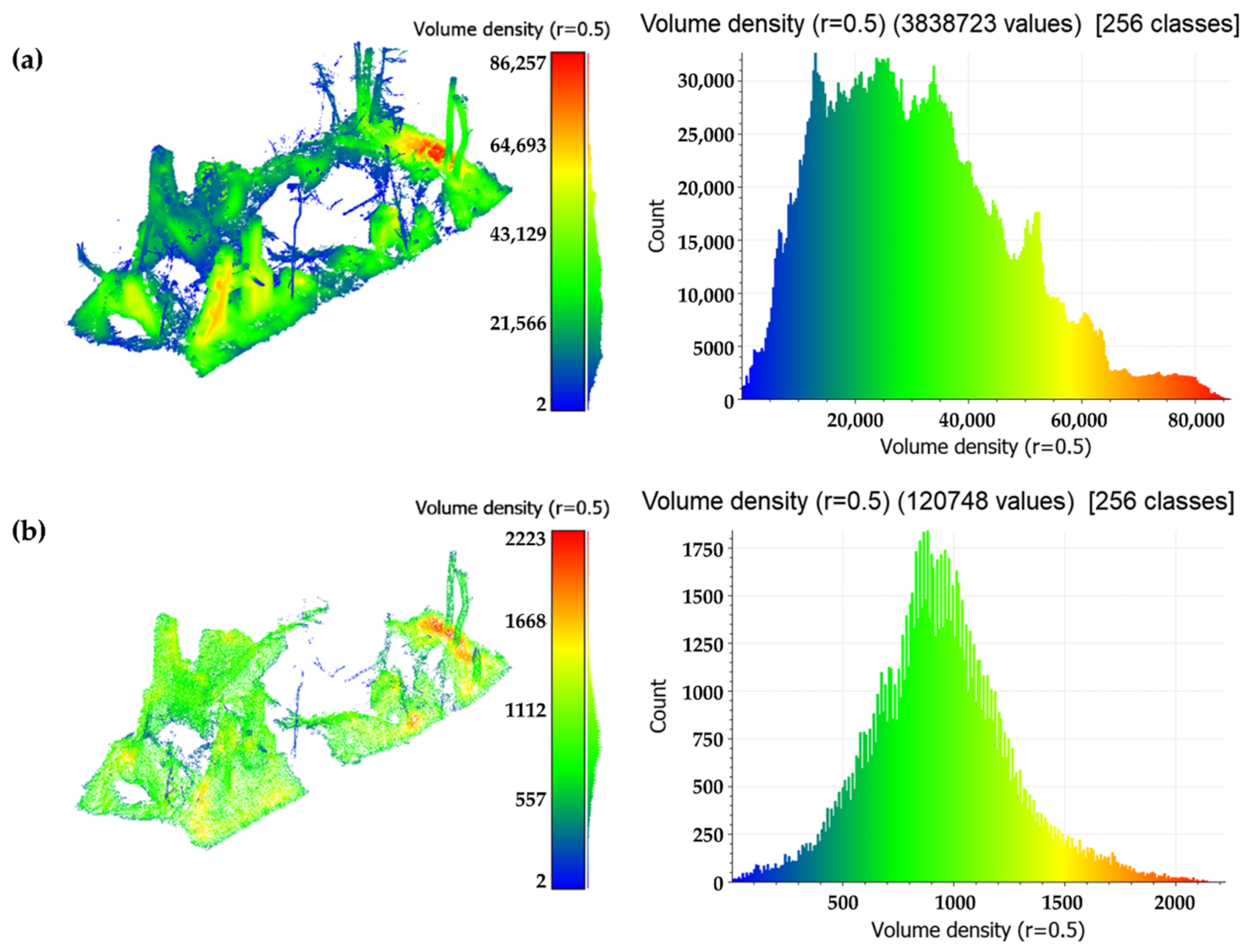

4.2.2. TLS vs. ALS

As for the MMS data, the L1 point cloud was compared with the TLS point cloud, using the latter as the reference. First, the clouds were compared in terms of number of points and density metrics. Point density was computed as the number of neighbors (NoN) detected within a 3D sphere of radius 0.5 m. The analysis was performed on the building area of the survey, identifying a common portion of the clouds (Figure 16). In this case, the L1 data present a Gaussian density distribution with almost 30 times fewer points than the TLS data (Table 6).

Following the density analysis, a point-to-point comparison was carried out, exploiting again the

M3C2 algorithm implemented in CloudCompare software as homologous points cannot be defined. The

M3C2 distance computation calculates the changes between two point clouds along the normal direction of a mean surface at a scale consistent with the local surface variations. If the reference cloud is dense enough, then the nearest neighbor distance will be close to the “true” distance to the underlying surface. The first point-to-point comparison was performed considering the entire region of interest, i.e., the building under the canopy, where the TLS point cloud was used as a reference. Subsequently, point-to-point comparison was performed on different portions of the building (façade and planar section) to provide

M3C2 distance statistics also along the principal direction of the cloud. This allows to analyze the data acquired by the L1 sensor considering the vegetation density of the surrounding environment, which could differently affect the scanned surface. Moreover, dividing the analysis on a different portion allows to obtain a less error-prone statistical value. In

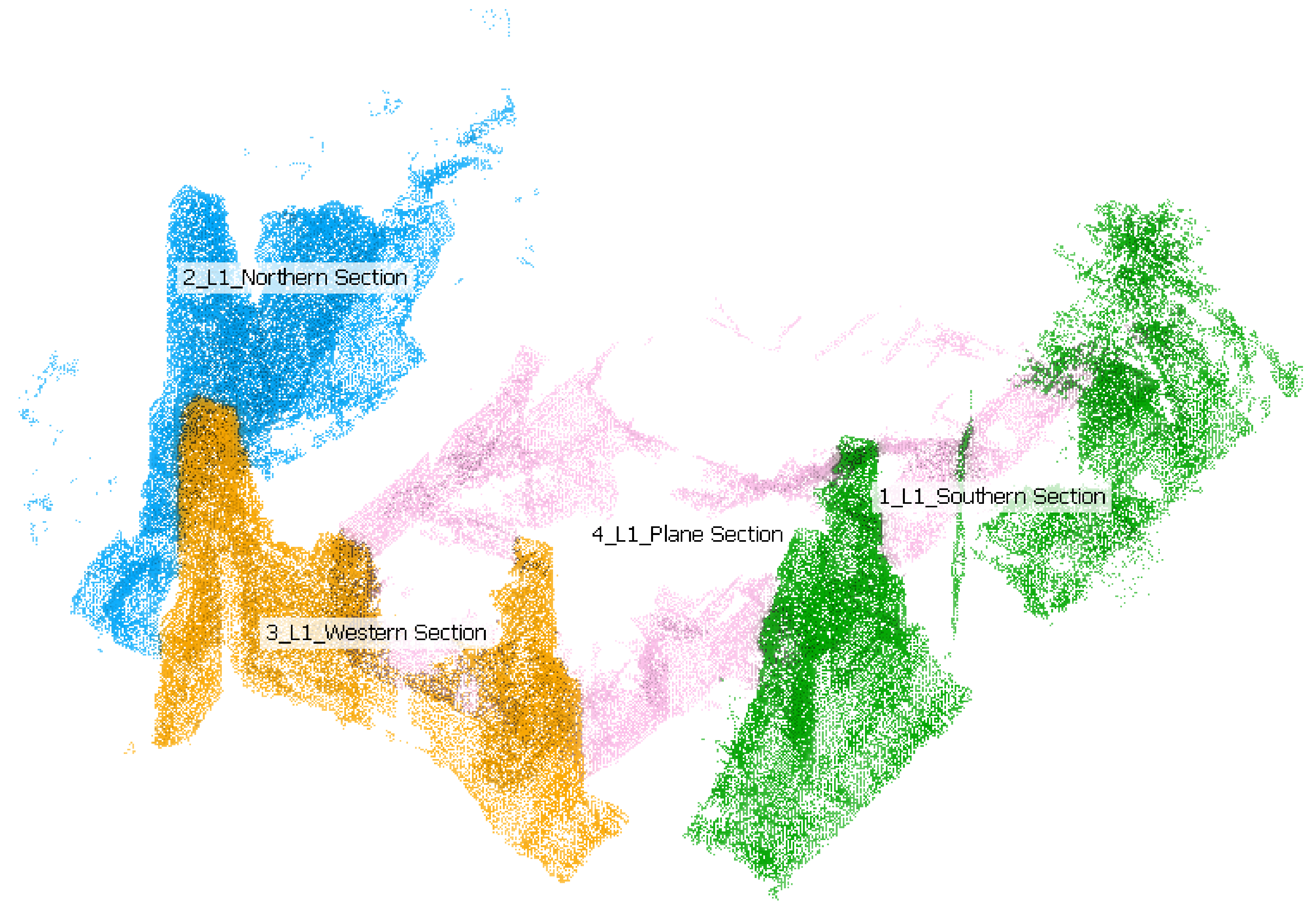

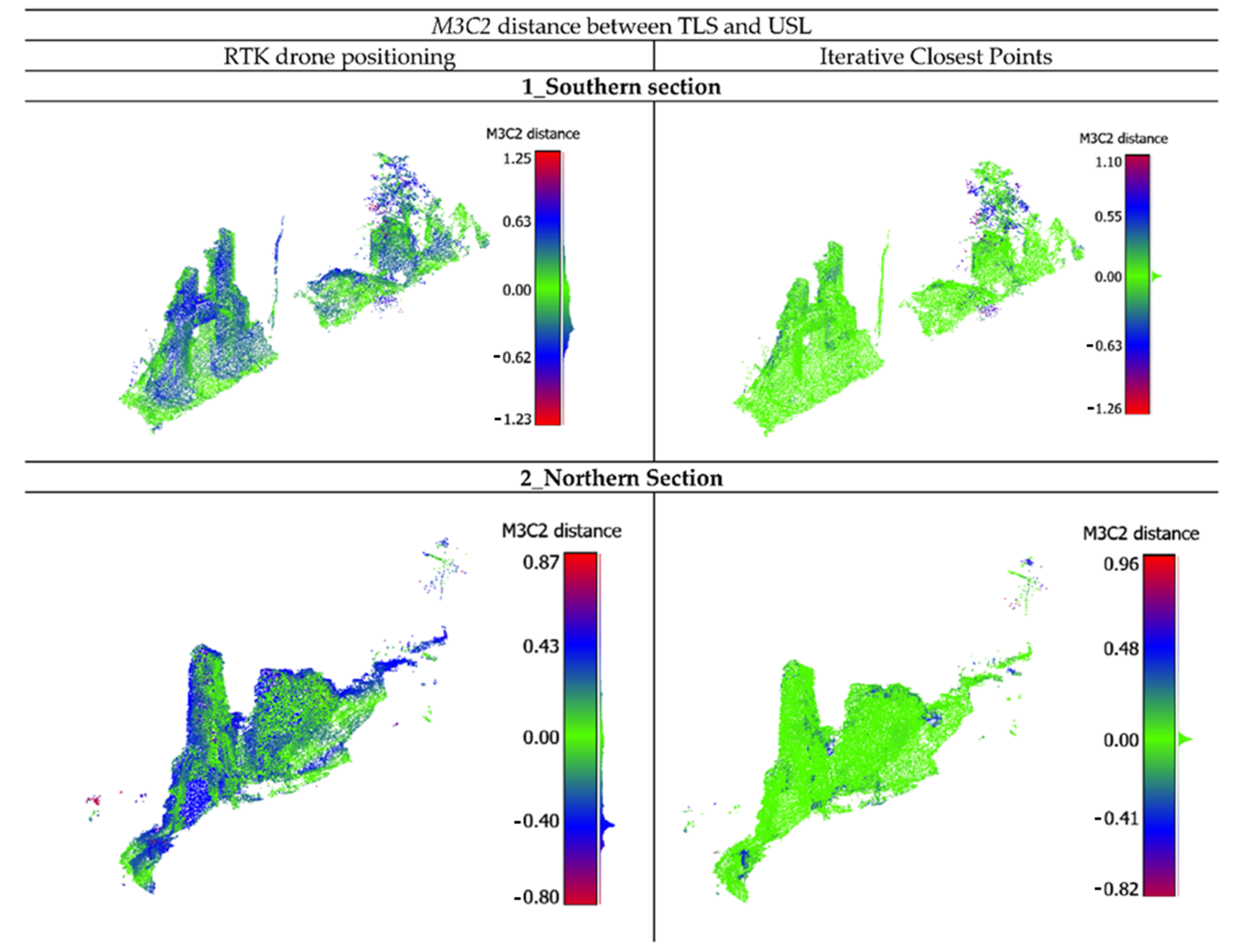

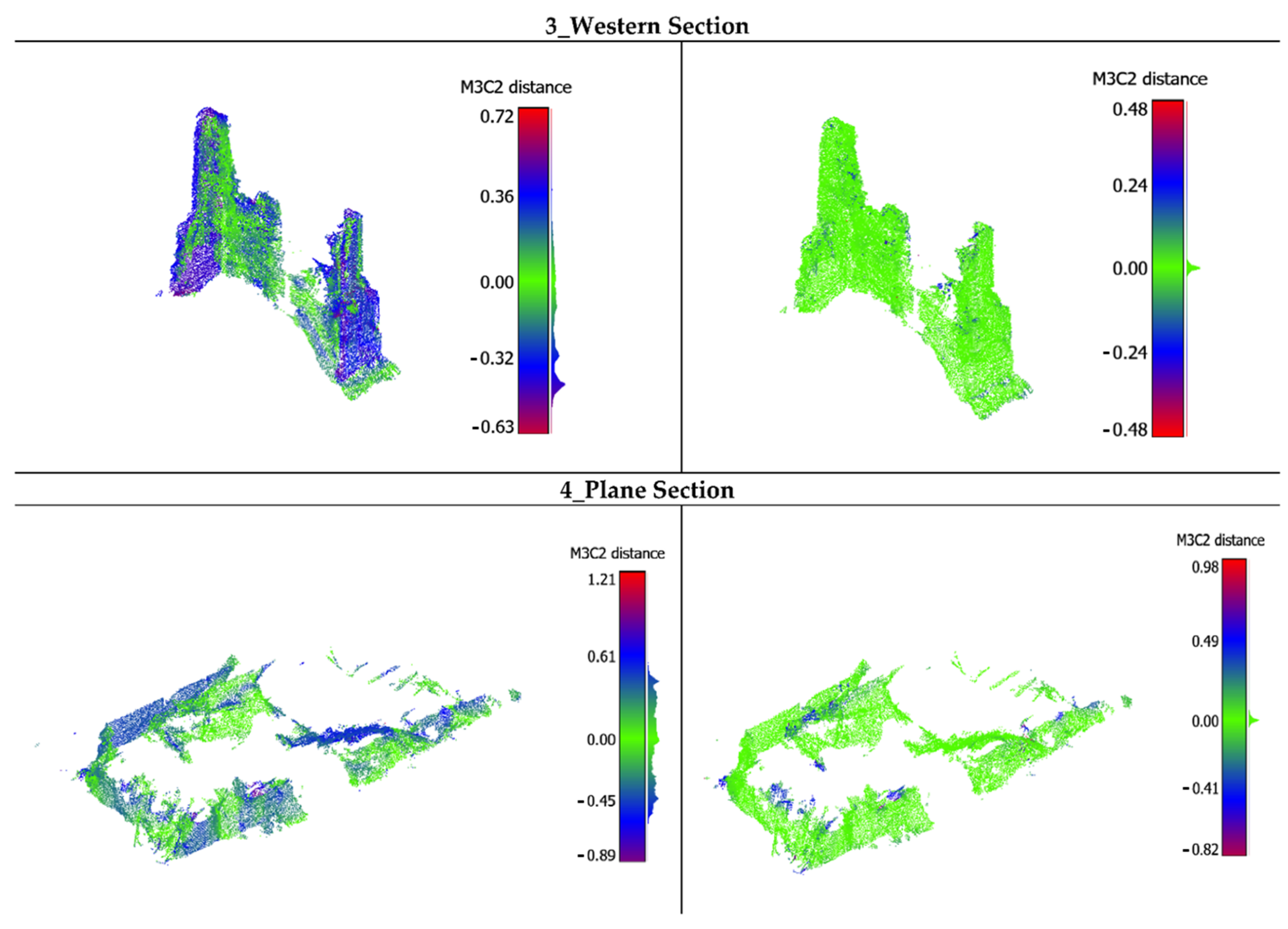

Figure 17 is shown the different regions of interest.

The geo-referenced TLS model and the L1 point cloud were both projected into the UTM cartographic representation (East, North; UTM WGS84 zone 32N, ETRF2000), with elevations transformed from ellipsoidal to orthometric heights using the regular interpolation grids provided by the Istituto Geografico Militare (IGM) (https://www.igmi.org/, accessed on 20 July 2022). Because of the different geo-referencing procedures (GCP-based vs. direct RTK), the L1 data must be evaluated with respect not only to measurement accuracy but also to geo-referencing accuracy. In case (a), M3C2 was applied directly to the L1 data without any pre-processing, while in case (b), M3C2 was preceded by a fine relative registration of the L1 data to the TLS data using the iterative closest point (ICP) procedure. For both analyses, a common portion of the surveyed area was selected and segmented. The alignment parameters were optimized by minimizing the ICP alignment error. In particular, the number of iterations was fixed to the default value of 20, the optimum threshold on the root mean square (RMS) difference was found to be 10⁻⁵ cm, and the final overlap was set to 60%. With these parameters, 106,957 of 120,748 points were selected for registration, and the final RMS was 0.042 cm. The M3C2 distance statistics are reported in Table 7 and visualized in Figure 18.
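The fine registration step can be sketched as a trimmed point-to-point ICP: at each iteration, every moving point is paired with its nearest reference point, only the closest fraction of pairs (the "overlap") is kept, and a rigid transform is re-estimated by SVD. The defaults below mirror the 20 iterations and 60% overlap reported above, and `min_rms_change` plays a role similar to the RMS difference threshold. This is an illustrative assumption, not CloudCompare's implementation, and the brute-force nearest-neighbor search only suits small cropped clouds.

```python
import numpy as np


def _best_rigid(src, dst):
    """Kabsch/SVD rigid transform (R, t) minimizing |src @ R.T + t - dst|."""
    cs, cd = src.mean(axis=0), dst.mean(axis=0)
    U, _, Vt = np.linalg.svd((src - cs).T @ (dst - cd))
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    return R, cd - R @ cs


def _match(cur, reference, n_keep):
    """Nearest reference index per point, indices of the n_keep closest pairs, and their RMS."""
    d2 = ((cur[:, None, :] - reference[None, :, :]) ** 2).sum(axis=2)
    nn = d2.argmin(axis=1)
    best = d2[np.arange(len(cur)), nn]
    keep = np.argsort(best)[:n_keep]
    return nn, keep, float(np.sqrt(best[keep].mean()))


def trimmed_icp(moving, reference, max_iter=20, overlap=0.6, min_rms_change=1e-7):
    """Align `moving` to `reference`; returns (R, t, rms) with moving @ R.T + t ~= reference."""
    moving = np.asarray(moving, dtype=float)
    reference = np.asarray(reference, dtype=float)
    n_keep = max(3, int(round(overlap * len(moving))))
    R_tot, t_tot = np.eye(3), np.zeros(3)
    cur = moving.copy()
    nn, keep, rms = _match(cur, reference, n_keep)
    for _ in range(max_iter):
        R, t = _best_rigid(cur[keep], reference[nn[keep]])
        cur = cur @ R.T + t
        R_tot, t_tot = R @ R_tot, R @ t_tot + t      # compose with previous estimate
        nn, keep, new_rms = _match(cur, reference, n_keep)
        converged = rms - new_rms < min_rms_change
        rms = new_rms
        if converged:
            break
    return R_tot, t_tot, rms
```

Trimming the worst pairs is what lets ICP tolerate parts of the two clouds that do not overlap, such as vegetation seen by only one sensor, but it also means a good starting position (here, the RTK geo-referencing) is needed.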

The M3C2 distance analysis (Table 8) clearly shows the potential of the L1 sensor to penetrate the vegetation and, at the same time, to obtain an accurate survey. The point cloud obtained by direct geo-referencing shows some deviation, with 50% of the points at a distance of less than 24 cm. This value decreases markedly after ICP, to 1 cm. In this case, 95% of the points have a distance of less than 7 cm, which indicates good overall performance of this system. It must be highlighted that the comparison was made in a highly vegetated environment; therefore, these errors are affected by some environmental noise.
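Percentile statements such as "50% of the points at a distance of less than 24 cm" can be derived from the per-point M3C2 distances as follows. This small sketch assumes that the statistics are computed on absolute distances and that undefined (NaN) distances are discarded; the paper does not state either choice explicitly.

```python
import numpy as np


def distance_summary(distances):
    """Mean, median, and 95th percentile of absolute, finite M3C2 distances."""
    d = np.abs(np.asarray(distances, dtype=float))
    d = d[np.isfinite(d)]
    return {
        "mean": float(d.mean()),
        "p50": float(np.percentile(d, 50)),
        "p95": float(np.percentile(d, 95)),
    }


def fraction_within(distances, threshold):
    """Share of valid points whose absolute distance is below `threshold`."""
    d = np.abs(np.asarray(distances, dtype=float))
    d = d[np.isfinite(d)]
    return float(np.mean(d < threshold))
```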

Finally, to provide insight into the penetration capability of the L1 sensor, the three signal returns were analyzed individually, computing the number of points and the cloud density for each return (Table 9). As shown, the L1 is able to collect data beneath the canopy, also reaching a portion of the building, although with some gaps.
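A per-return breakdown like the one above only needs the return number stored for each point in the .las file. The sketch below takes that array as input (with laspy 2.x it could come from `laspy.read(path).return_number`) and reports counts and a simple per-area density. Using points per square meter over a hypothetical footprint area is an assumption for illustration; the paper's density for each return may be computed differently.

```python
import numpy as np


def per_return_stats(return_number, area_m2):
    """Point count and points-per-m^2 for each return number (1 = first return)."""
    rn = np.asarray(return_number)
    stats = {}
    for r in np.unique(rn):
        n = int((rn == r).sum())
        stats[int(r)] = {"points": n, "density_pts_m2": n / area_m2}
    return stats
```

Later returns (2 and 3) are the ones most likely to come from surfaces below the canopy, so their share of the total gives a rough indicator of vegetation penetration.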

5. Conclusions

In the present work, three LiDAR technologies (Faro Focus 3D X130 TLS, Kaarta Stencil 2–16 MMS, and DJI Zenmuse L1 ALS) were tested and compared in order to assess their performance in surveying built heritage in vegetated areas. Each LiDAR surveying technique requires a different field and post-processing methodology owing to its technological features. In the field, speed and ease of data acquisition are fundamental, while in post-processing, computing cost and workflow automation are essential. In this regard, moving LiDAR technologies have a great advantage over TLS: they can be easily deployed in the field, and data acquisition can be completed in a few minutes. Moreover, their processing cost is lower thanks to iterative co-registration algorithms, which update the scans in a dead-reckoning fashion. More specifically, MMSs are more versatile and can be mounted on aerial and ground vehicles or carried by a pedestrian, making it possible to survey a wider range of scenarios. ALS, on the other hand, by exploiting the UAV as a moving platform, can cover large expanses of land, but only from an overhead view. In our specific scenario, i.e., built heritage submerged by dense vegetation, the ALS demonstrated good penetration ability overall, generating a point cloud representative of the object, albeit with some gaps. The ground-based systems performed much better: both TLS and MMS allowed the laser scanner to be positioned or moved around the building, choosing the best vantage points or paths for the specific task. However, a limitation of these terrestrial tools is their inability to acquire the upper portions of the buildings, which results in incomplete object geometries.

Regarding the technical performance of the three LiDAR systems, the DJI Zenmuse L1 performs worst in terms of level of detail, density, number of points, and noise. Although the Kaarta Stencil MMS performs less well than the Faro Focus TLS in terms of the density and resolution of the acquired data, it compensates through its acquisition methodology, in which the operator's movement can increase or decrease the density and amount of acquired data. On the other hand, the MMS lacks an imaging sensor to colorize the point cloud, whereas the L1 captures visible images that also allow a photogrammetric survey.

Concerning processing, the MMS dataset was the fastest to process thanks to the high-level SLAM and loop closure algorithms implemented in the post-processing routine. The ALS dataset can also be processed relatively quickly and, thanks to the UAS RTK positioning, automatically. The TLS required more human effort and processing time (for point cloud registration, filtering, and segmentation).

The resulting point clouds were analyzed and compared in terms of the number of points acquired by the different systems, density, and nearest neighbor distance. The TLS survey is more accurate and provides a higher number of points, but its overall mean density is lower than that of the MMS cloud. The ALS cloud is less dense and has almost 30 times fewer points than the TLS data.

The M3C2 cloud-to-cloud distance algorithm was applied to compare the MMS and ALS clouds with the TLS cloud, used as ground truth. The results highlight that direct geo-referencing based on the RTK positioning of the UAV receiver is not sufficient to obtain reliable data, as the mean difference between the reference TLS cloud (geo-referenced with the geodetic GNSS survey) and the ALS cloud is about 30 cm. Therefore, a ground GNSS survey should always be performed using markers or reflective targets visible to the DJI L1 sensor, to be used as GCPs. Nevertheless, on-the-fly geo-referencing can be an excellent starting point for quickly applying co-registration algorithms. In this work, ICP was applied to the aerial survey results, yielding an average distance between the point clouds of less than 1 cm. The L1 sensor can exploit three different return signals to acquire data in forested and densely vegetated areas. In this work, it was observed that the system is able to collect data beneath the canopy, also reaching part of the building.