Machine Learning and 3D Reconstruction of Materials Surface for Nondestructive Inspection

Abstract

1. Introduction

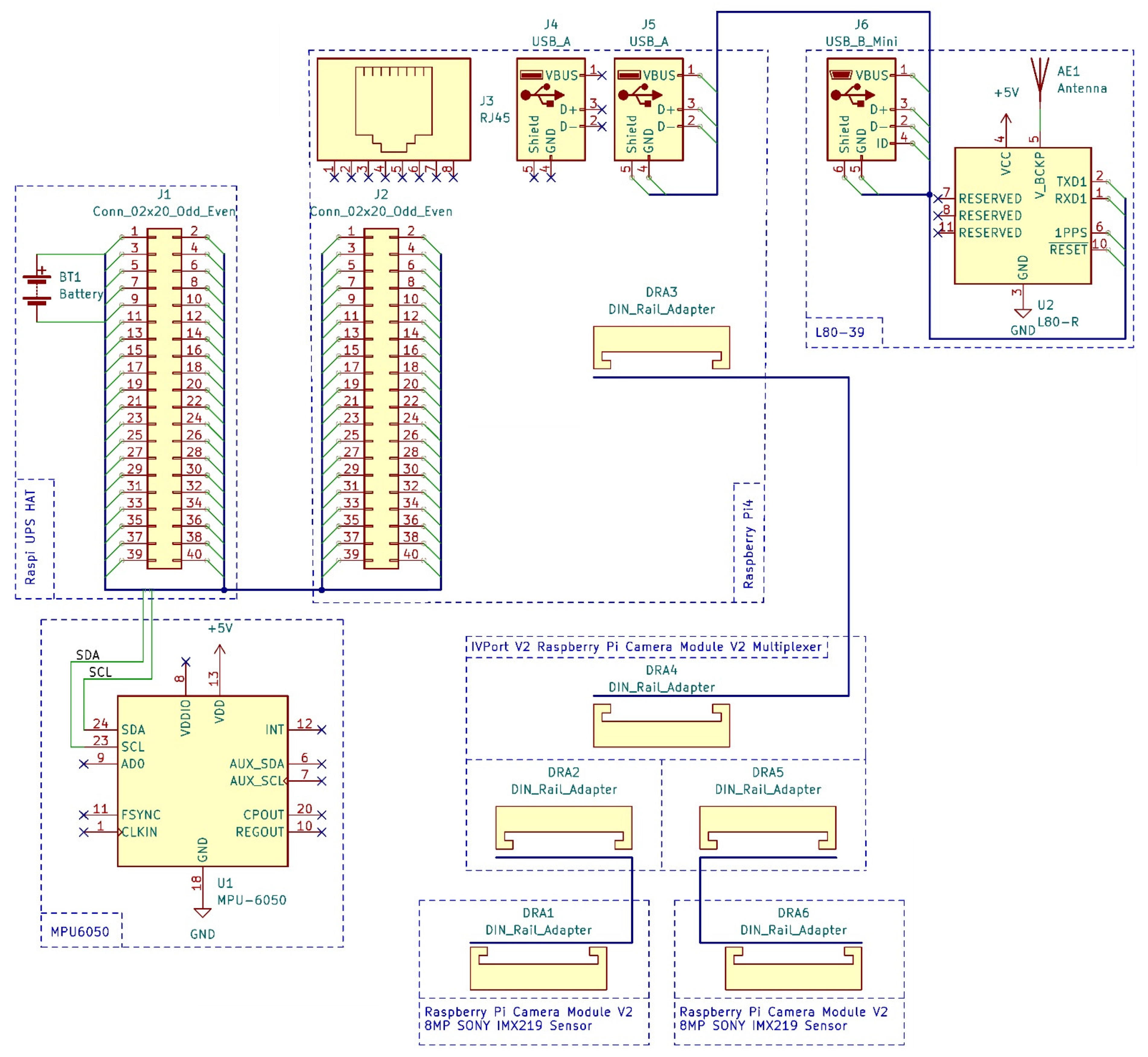

2. Existing Solutions Mini-Review

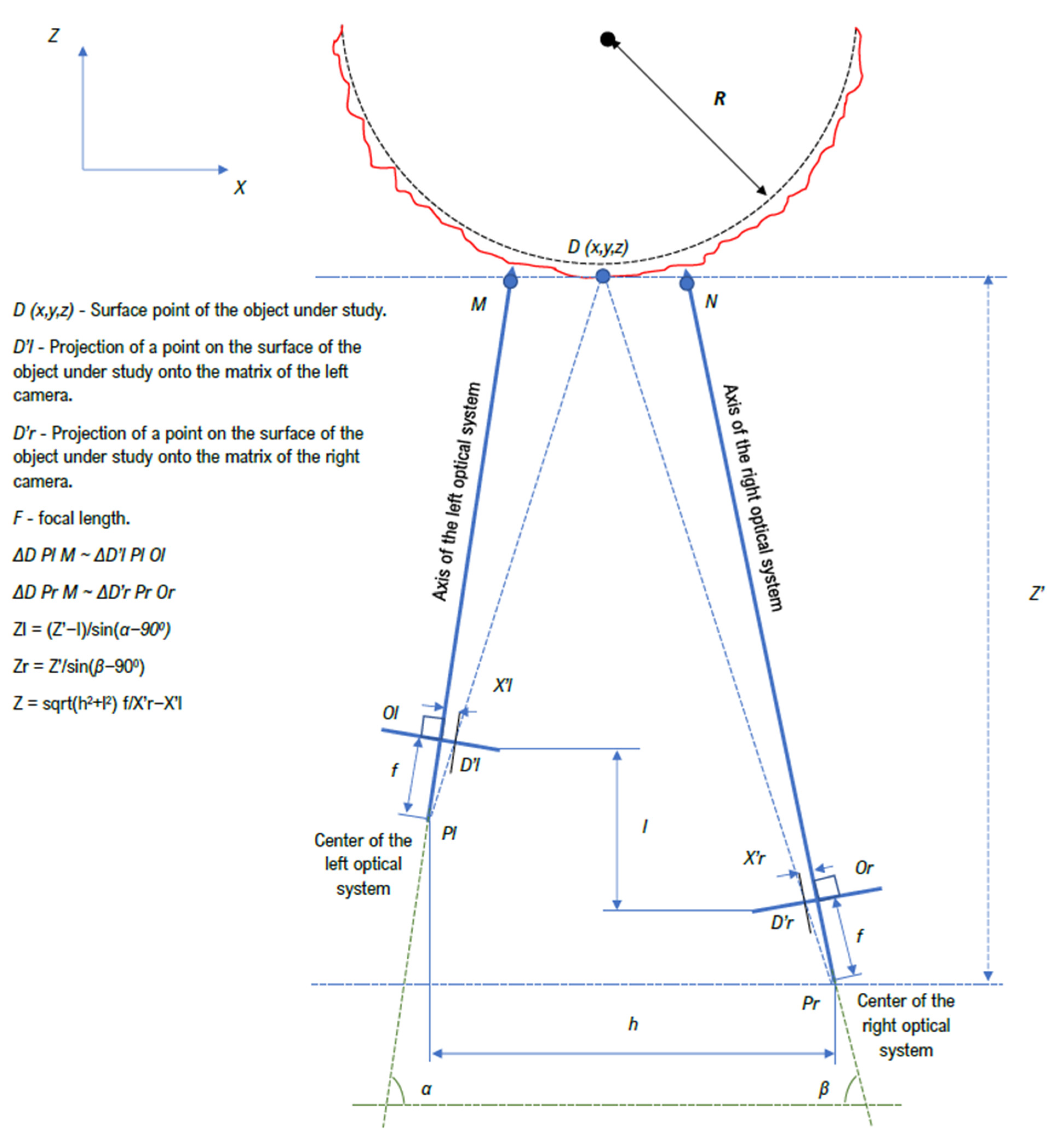

3. Materials and Methods

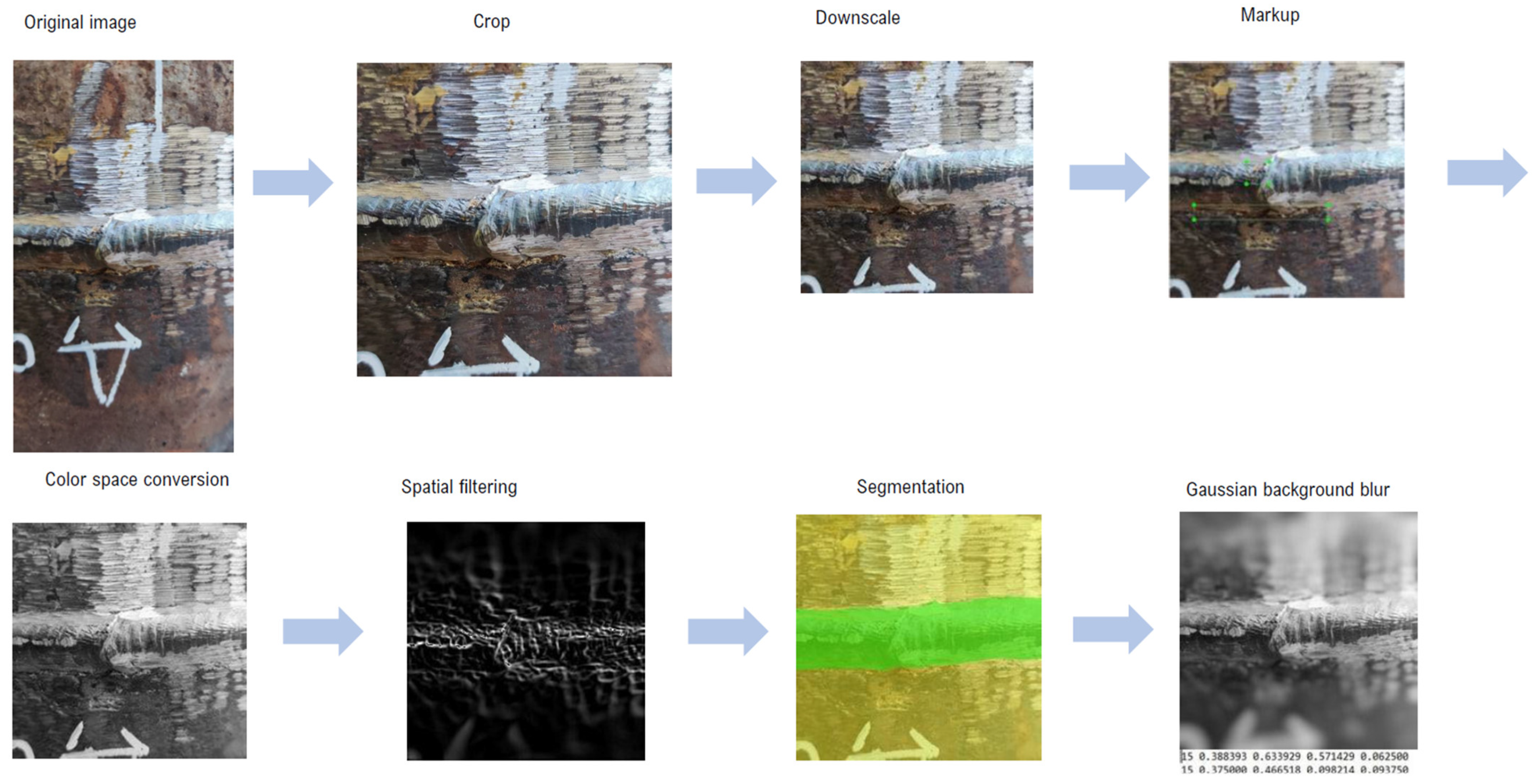

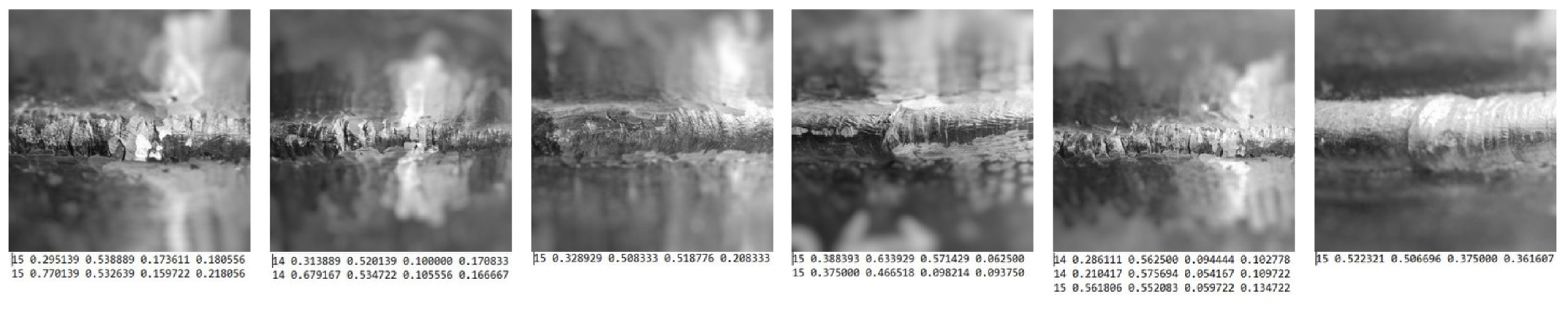

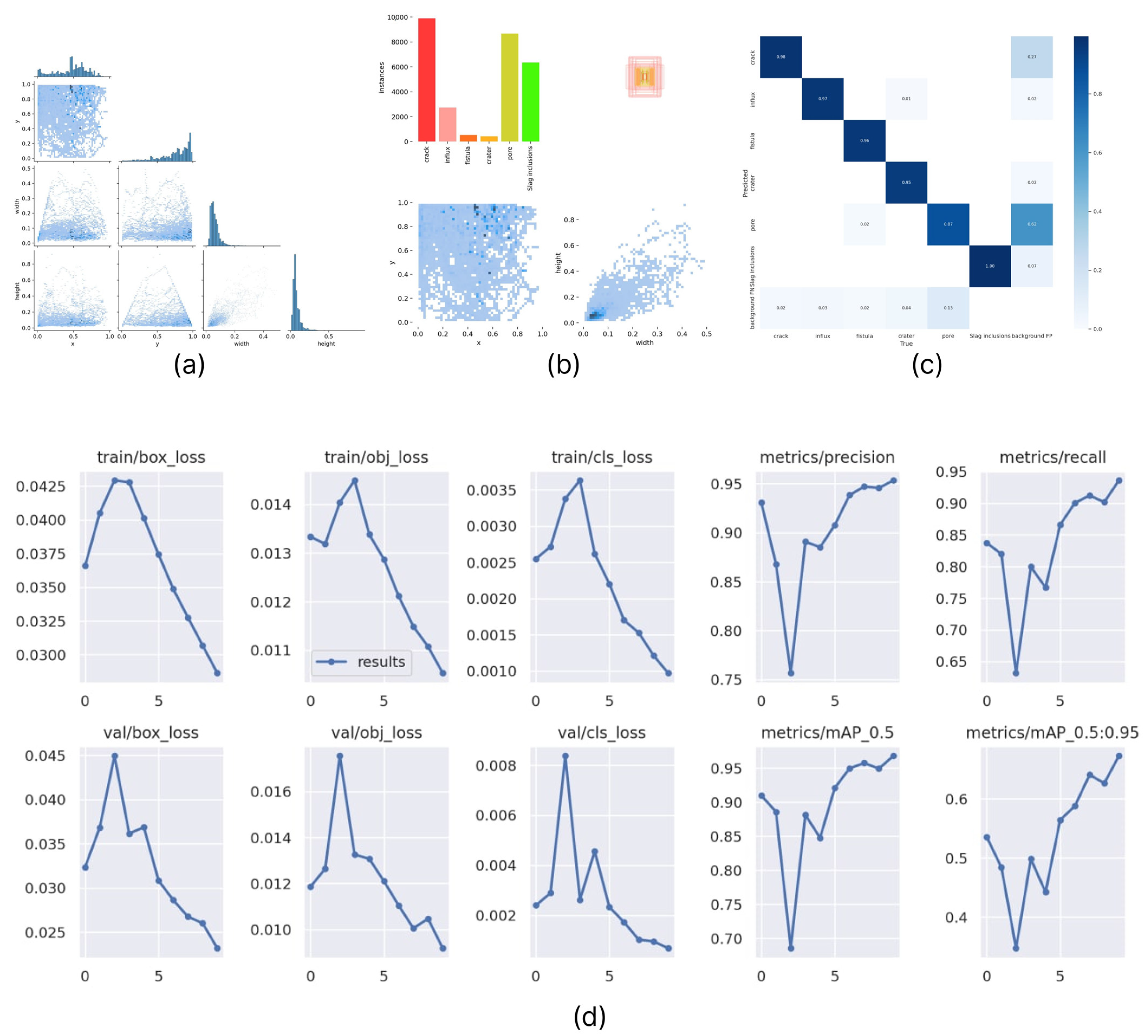

4. Data Preparation

| Algorithm 1. Calculation and presentation of points of a three-dimensional controlled object |
|---|
| procedure CalculatePointCloud(imgL, imgR) |
| INPUT: Two images of calibrated cameras |
| OUTPUT: Point cloud |
| imgL := ConvertColor(imgL, GRAY) |
| imgR := ConvertColor(imgR, GRAY) |
| keypoints_1 := SiftDetectAndCompute(imgL) |
| keypoints_2 := SiftDetectAndCompute(imgR) |
| keypoints_1, keypoints_2 := Match(keypoints_1, keypoints_2) |
| F := FindFundamentalMat(keypoints_1, keypoints_2) |
| pointCloud := Triangulation(F, keypoints_1, keypoints_2) |
| return pointCloud |
| end procedure |
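The final step of Algorithm 1 turns matched keypoints into 3D points. The sketch below illustrates that step only, and it swaps in the simple midpoint method rather than whatever routine the authors used: each matched keypoint is assumed to have already been converted into a viewing ray (camera centre plus direction) using the calibration data. The function names `triangulate_midpoint` and `triangulate_rays`, and the ray representation itself, are hypothetical and do not come from the paper. Feature detection, matching, and fundamental matrix estimation are not reproduced here.

```python
from typing import Iterable, List, Tuple

Vec3 = Tuple[float, float, float]
Ray = Tuple[Vec3, Vec3]  # (camera centre, viewing direction); assumed representation


def _add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def triangulate_midpoint(c1: Vec3, d1: Vec3, c2: Vec3, d2: Vec3) -> Vec3:
    """Midpoint of the shortest segment between rays c1 + s*d1 and c2 + t*d2."""
    w = _sub(c1, c2)
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w), _dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        # Parallel rays never converge, so no depth can be recovered.
        raise ValueError("rays are (nearly) parallel; depth is undefined")
    s = (b * e - c * d) / denom  # parameter of the closest point on ray 1
    t = (a * e - b * d) / denom  # parameter of the closest point on ray 2
    p1 = _add(c1, _scale(d1, s))
    p2 = _add(c2, _scale(d2, t))
    return _scale(_add(p1, p2), 0.5)


def triangulate_rays(pairs: Iterable[Tuple[Ray, Ray]]) -> List[Vec3]:
    """Build a point cloud from matched left/right viewing rays."""
    return [triangulate_midpoint(l[0], l[1], r[0], r[1]) for l, r in pairs]
```

With noise-free rays the two closest points coincide and the midpoint is the exact 3D point. With real matches the rays generally miss each other slightly, and the midpoint serves as a compromise estimate.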

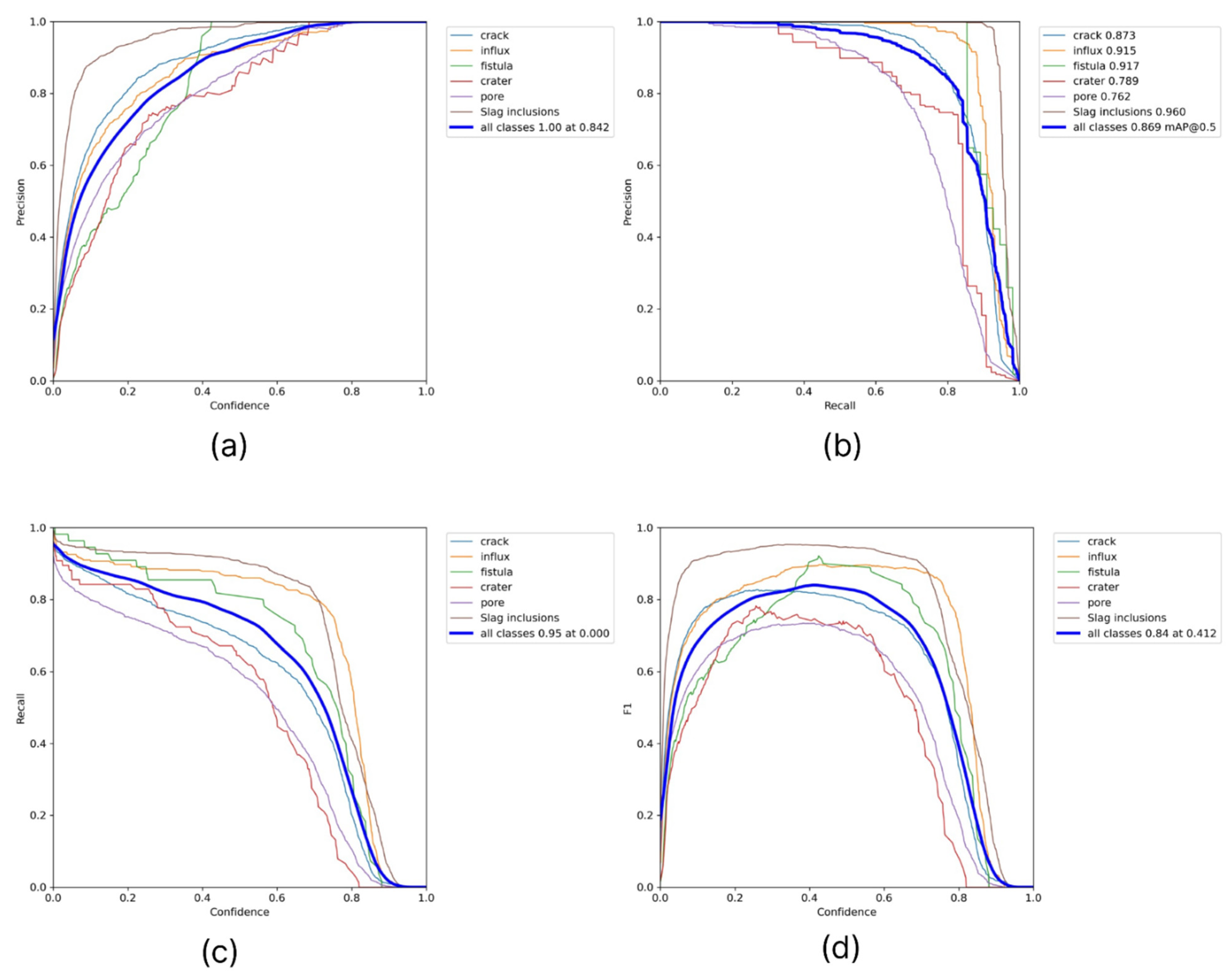

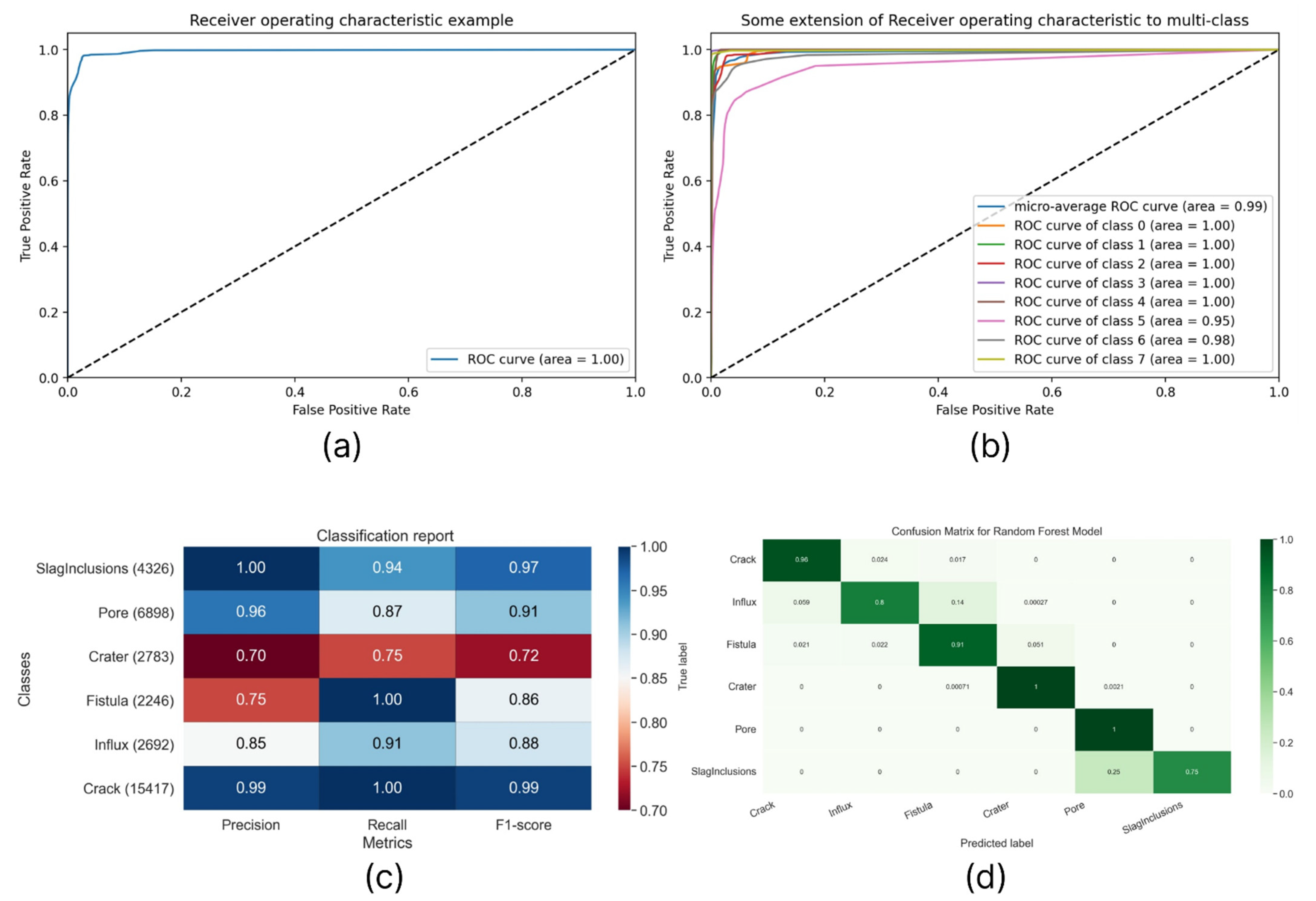

5. Results

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| | x | y | z | nx | ny | nz | Red | Green | Blue | Alpha |
|---|---|---|---|---|---|---|---|---|---|---|
| 0 | −0.27675 | 2.1516 | 3.5621 | 4.520603 | 4.28289 | 3.83560 | 20 | 47 | 103 | 255 |
| 1 | −0.27750 | 2.1557 | 3.5664 | 4.749300 | 3.10760 | 3.18440 | 36 | 35 | 96 | 255 |
| 2 | −0.28250 | 2.1602 | 3.5701 | 3.159200 | 2.98990 | 4.15230 | 45 | 51 | 110 | 255 |
| 3 | −0.28900 | 2.1697 | 3.5709 | 4.062900 | 4.11780 | 2.99978 | 23 | 49 | 99 | 255 |
| 4 | −0.28947 | 2.1697 | 3.5718 | 4.131200 | 4.56719 | 4.36810 | 19 | 37 | 106 | 255 |
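Each row of the table above holds a position (x, y, z), a normal (nx, ny, nz), and an RGBA colour. The source does not say which file format stores these records. The sketch below assumes ASCII PLY, because these column names match the vertex properties conventionally used in that format. The function name `to_ascii_ply` is hypothetical, and the sample rows are the first two rows of the table.

```python
from typing import Iterable, Tuple

# x, y, z, nx, ny, nz, red, green, blue, alpha
Vertex = Tuple[float, float, float, float, float, float, int, int, int, int]


def to_ascii_ply(vertices: Iterable[Vertex]) -> str:
    """Serialise point records to an ASCII PLY string (assumed storage format)."""
    rows = list(vertices)
    header = [
        "ply",
        "format ascii 1.0",
        f"element vertex {len(rows)}",
        "property float x",
        "property float y",
        "property float z",
        "property float nx",
        "property float ny",
        "property float nz",
        "property uchar red",
        "property uchar green",
        "property uchar blue",
        "property uchar alpha",
        "end_header",
    ]
    # One whitespace-separated line per vertex, in header property order.
    body = [" ".join(str(value) for value in row) for row in rows]
    return "\n".join(header + body) + "\n"


# First two rows of the table, with the index column dropped.
sample = [
    (-0.27675, 2.1516, 3.5621, 4.520603, 4.28289, 3.83560, 20, 47, 103, 255),
    (-0.27750, 2.1557, 3.5664, 4.749300, 3.10760, 3.18440, 36, 35, 96, 255),
]
ply_text = to_ascii_ply(sample)
```

A file written from `ply_text` should open in common point cloud viewers, which makes it a convenient way to inspect the reconstructed surface visually.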

| Parameter | Value |
|---|---|
| Number of epochs | 50 |
| Batch size | 64 |
| Learning rate | 0.01 |
| Momentum | 0.937 |
| Weight decay | 0.005 |
| Image caching | Yes |

| Parameter | Value |
|---|---|
| Number of epochs | 25 |
| Batch size | 64 |
| Learning rate | 0.01 |
| Momentum | 0.937 |
| Weight decay | 0.005 |
| Image caching | Yes |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kartashov, O.O.; Chernov, A.V.; Alexandrov, A.A.; Polyanichenko, D.S.; Ierusalimov, V.S.; Petrov, S.A.; Butakova, M.A. Machine Learning and 3D Reconstruction of Materials Surface for Nondestructive Inspection. Sensors 2022, 22, 6201. https://doi.org/10.3390/s22166201
