Abstract

Brain decoding is the process of decoding human cognitive contents from brain activity. However, improving the accuracy of brain decoding remains difficult because of the unique characteristics of brain activity data, such as their small sample size and high dimensionality. This paper therefore proposes a method that effectively uses the brain activity of multiple subjects to improve brain decoding accuracy. Specifically, we distinguish between shared information, which is common to the brain activity of multiple subjects, and individual information, which is specific to each subject's brain activity, and we use both types of information to decode human visual cognition. Both types of information are extracted as features in a latent space using a probabilistic generative model. In the experiment, we used a publicly available dataset with five subjects and evaluated estimation accuracy with a confidence score ranging from 0 to 1, where larger values indicate better performance. The proposed method achieved a confidence score of 0.867 for the best subject and an average of 0.813 over the five subjects, the best among the compared methods. These results show that the proposed method decodes visual cognition more accurately than existing methods that do not distinguish shared information from individual information.

1. Introduction

Brain decoding, which estimates human cognition from brain activity, has been actively studied, aided by recent progress in measuring human brain activity. Measurement methods include the invasive implantable microelectrode array (MEA) [1] and noninvasive methods such as near-infrared spectroscopy [2], electroencephalography [3], functional magnetic resonance imaging (fMRI) [4,5,6,7,8,9,10], and magnetoencephalography (MEG) [11,12]. The advantage of MEA is its robustness to noise during brain activity measurement. However, it requires microelectrodes to be implanted in the subject's body, which imposes a significant physical burden on the subject. Noninvasive methods such as fMRI and MEG, which are less likely to directly harm a subject, are therefore more widely used than invasive methods. fMRI in particular is frequently used because it measures brain activity with high spatial resolution. Compared with MEA, however, fMRI is more sensitive to noise and requires large measurement equipment. MEG offers better temporal resolution than fMRI with reasonable spatial resolution [13,14].

Several positive results have been reported from applying machine learning methods to brain activity recorded with these techniques. For example, methods have been proposed for analyzing emotions from brain activity [15,16,17]. Other techniques generate image captions or reconstruct images from brain activity recorded while subjects view the images [9,18,19,20,21,22]. Image reconstruction has also been attempted from brain activity recorded during mental imagery [20]. Researchers expect that advances in machine-learning-based brain decoding will help reveal the mechanisms of the human brain. Such insight is expected to contribute to a society in which everyone can live comfortably, by enabling effective devices that take brain activity as input. For example, a brain–machine interface (BMI) aims to let humans operate and communicate with external machines directly, without physical movement, which can assist the daily lives of people with disabilities [23,24,25].

The estimation of visual perception from fMRI data has been actively researched in brain decoding to take advantage of the high spatial resolution of fMRI [6,7,8,26]. fMRI data vary depending on the object being viewed [6]. Previous studies [7,26] analyzed visual perception using classical methods such as the support vector machine [27] and Gabor wavelet filters [28]. Visual features extracted from a convolutional neural network (CNN) [29] are related to fMRI data obtained while a subject views an object [8,30]. This relationship suggests that a CNN mimics the visual perception system of the human brain and that CNN-extracted visual features are essential for estimating visual perception. Previous studies have therefore attempted to estimate the CNN-based visual features of images from fMRI data collected while subjects viewed the images [8,31,32,33,34]. In one such study [8], the authors constructed a decoder based on linear regression that learns the relationship between each subject's fMRI data and the visual features of the seen image and can then estimate those visual features from the fMRI data. Although this decoder can estimate visual features, its accuracy strongly depends on the size of the fMRI training set. Measuring fMRI data, however, requires a subject to lie in a confined, narrow space for long periods, so preparing a large amount of fMRI data imposes a psychological and time burden on the subject.

Meanwhile, estimating visual perception from a limited amount of data remains challenging. Some studies [31,32] have used multi-subject fMRI data obtained while multiple subjects viewed the same images. These methods construct a latent space to extract features common to the multi-subject fMRI data (shared features). Because they are based on generative models, they can train models stably using multiple inputs even when the training data are limited. They emphasized shared features as the information common to multi-subject fMRI data. However, they did not consider individual features, which other researchers [8,35] focused on as the subject-specific information in single-subject fMRI data. Because each type of feature contains different information and differs in expressive ability, combining shared and individual features may improve accuracy.

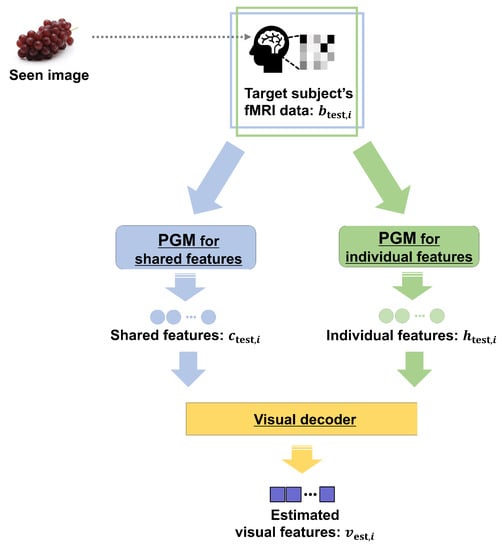

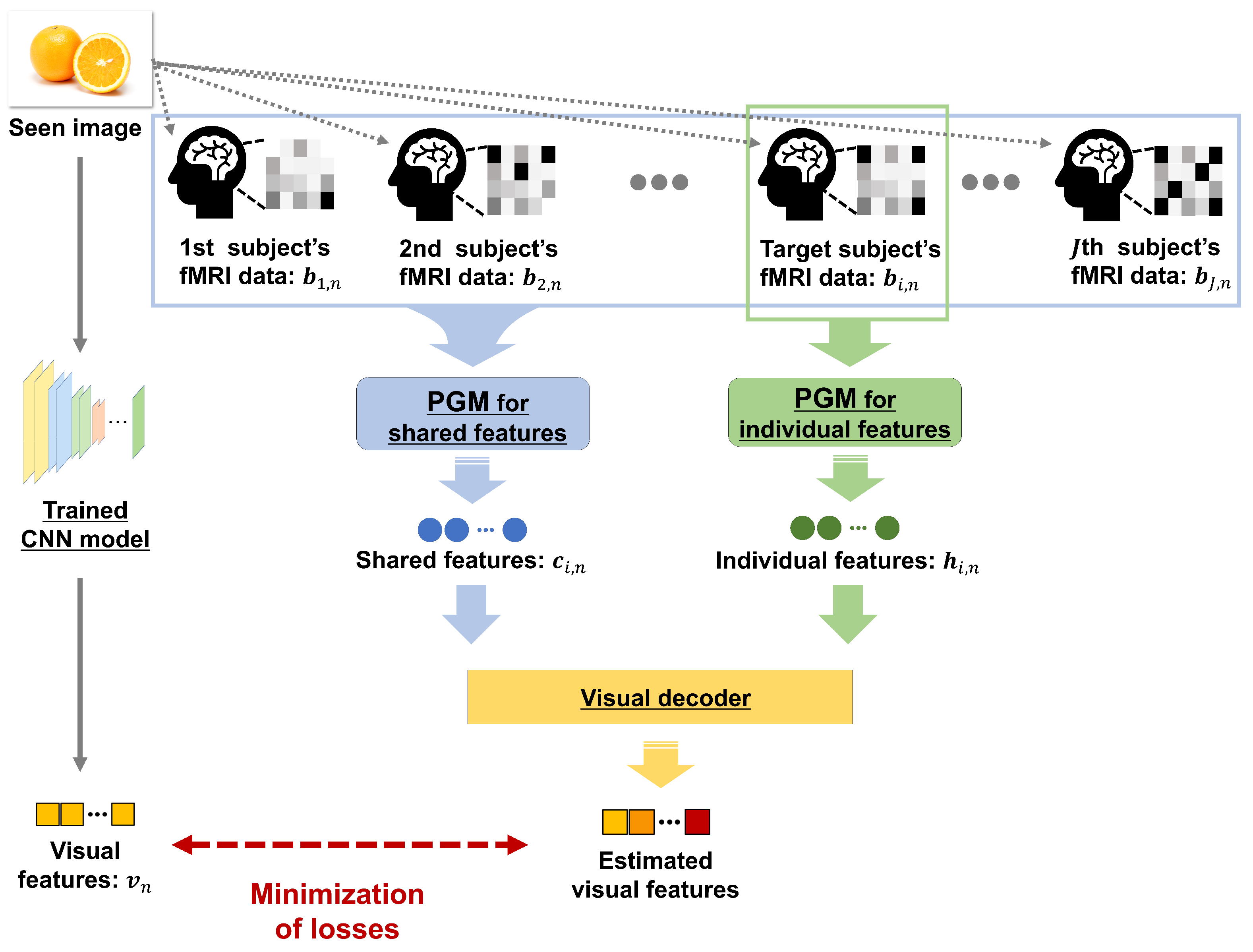

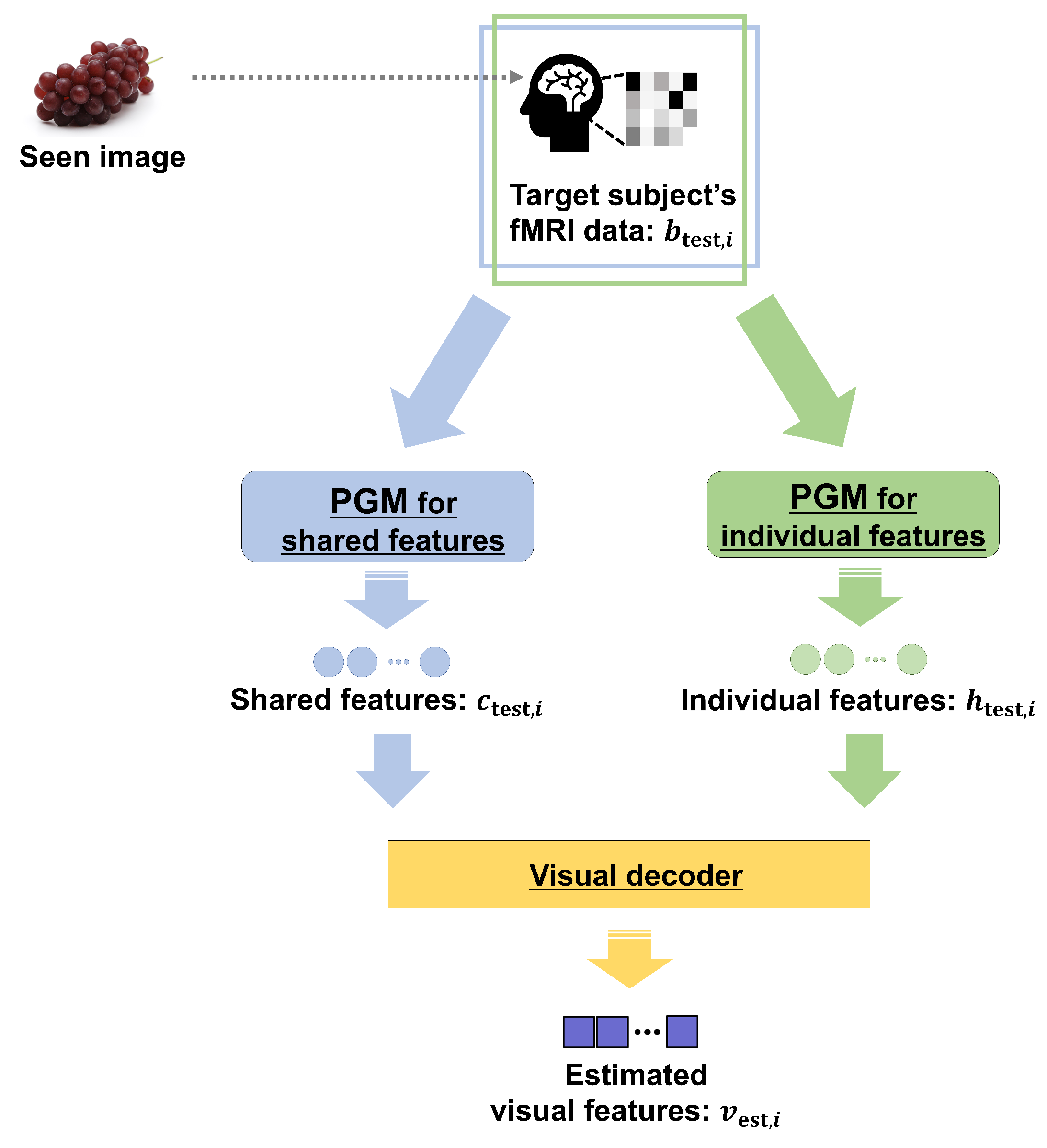

In this study, we propose a novel method for estimating the visual features of a seen image from multi-subject fMRI data. To improve estimation accuracy, we use both multi-subject and single-subject fMRI data. We construct latent spaces from the fMRI data and extract shared and individual features using a generative model. Because the generative model assumes distributions for the extracted features, it can extract effective features from a limited amount of data. We then train decoders that estimate visual features from the extracted shared and individual features. In this way, the decoders can use both common cognitive information from multi-subject fMRI data and subject-specific cognitive information from single-subject fMRI data.
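As a hedged illustration of how a probabilistic generative model can extract shared features from concatenated multi-subject data with the EM algorithm, the sketch below fits a factor-analysis-style model in which every subject's fMRI vector is a linear projection of one shared latent vector plus isotropic, subject-specific noise. The model form and the names `fit_shared_latent_model` and `log_likelihood` are assumptions loosely suggested by the variables listed in Table A1 (concatenated data, mean, and projection matrix; per-subject variances; EM updates), not the authors' implementation. Fitting the same routine to a single subject's data gives a per-subject model of the kind that could supply individual features.

```python
import numpy as np


def log_likelihood(Yc, W, psi):
    """Average marginal log-likelihood of centered data under N(0, W W^T + diag(psi))."""
    N, D = Yc.shape
    C = W @ W.T + np.diag(psi)
    _, logdet = np.linalg.slogdet(C)
    quad = np.einsum("nd,nd->", Yc @ np.linalg.inv(C), Yc) / N
    return -0.5 * (D * np.log(2 * np.pi) + logdet + quad)


def fit_shared_latent_model(blocks, n_latent, n_iter=50, seed=0):
    """Hypothetical EM for y_n = W s_n + mu + eps, with s_n ~ N(0, I) and
    eps ~ N(0, blockdiag(sigma_i^2 I)): one isotropic noise variance per subject.

    blocks: list of (N, d_i) arrays; row n of every block is the response
    of subject i to the same n-th image.
    """
    Y = np.hstack(blocks)                                # concatenated fMRI data (N, D)
    block_id = np.repeat(np.arange(len(blocks)), [b.shape[1] for b in blocks])
    N = Y.shape[0]
    mu = Y.mean(axis=0)                                  # concatenated mean
    Yc = Y - mu
    var_y = (Yc ** 2).mean(axis=0)                       # diagonal of sample covariance
    W = np.random.default_rng(seed).normal(scale=0.1, size=(Y.shape[1], n_latent))
    sigma2 = var_y.mean() * np.ones(len(blocks))         # per-subject noise variances
    history = []
    for _ in range(n_iter):
        psi = sigma2[block_id]                           # noise variance of each column
        history.append(log_likelihood(Yc, W, psi))
        # E-step: posterior covariance M and posterior means E[s_n] (rows of Ez)
        WtPinv = W.T / psi                               # W^T Psi^{-1}, shape (K, D)
        M = np.linalg.inv(np.eye(n_latent) + WtPinv @ W)
        Ez = Yc @ WtPinv.T @ M
        sum_Ess = N * M + Ez.T @ Ez                      # sum over n of E[s_n s_n^T]
        # M-step: projection matrix first, then the tied per-subject variances
        YtEz = Yc.T @ Ez
        W = YtEz @ np.linalg.inv(sum_Ess)
        resid = var_y - np.einsum("dk,dk->d", W, YtEz) / N
        sigma2 = np.array([resid[block_id == i].mean() for i in range(len(blocks))])
    return W, mu, sigma2, history


# Usage on synthetic data: 3 "subjects" observing the same 120 stimuli
rng = np.random.default_rng(1)
S_true = rng.normal(size=(120, 3))
blocks = [S_true @ rng.normal(size=(3, 8)) + rng.normal(scale=0.5, size=(120, 8))
          for _ in range(3)]
W, mu, sigma2, history = fit_shared_latent_model(blocks, n_latent=3, n_iter=30)
```

The recorded marginal log-likelihood should never decrease from one iteration to the next, which is a convenient sanity check for any EM implementation of this kind.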

3. Experimental Results

This section presents the experimental results of image category estimation. Section 3.1 describes the dataset used to evaluate the proposed method, Section 3.2 describes the experimental conditions, Section 3.3 explains the comparison methods, and Section 3.4 presents the experimental results.

3.1. Dataset

In this experiment, we used the fMRI data (approximately 4500-dimensional vectors) published in a previous study [8]. The data consist of the visual cortex activity of five subjects, measured with a Siemens MAGNETOM Prisma scanner (https://www.siemens-healthineers.com/jp/magnetic-resonance-imaging/research-systems/magnetom-prisma (accessed on 10 August 2022)) while the subjects viewed images. In [8], four males and one female aged between 23 and 38 years participated as subjects, and functional localizer [38,39,40] and standard retinotopy [4,41] experiments were conducted to identify the visual cortex of each subject. The seen images were 1200 images from 150 categories in ImageNet [42] (eight images per category), and each image was paired with the corresponding fMRI data.

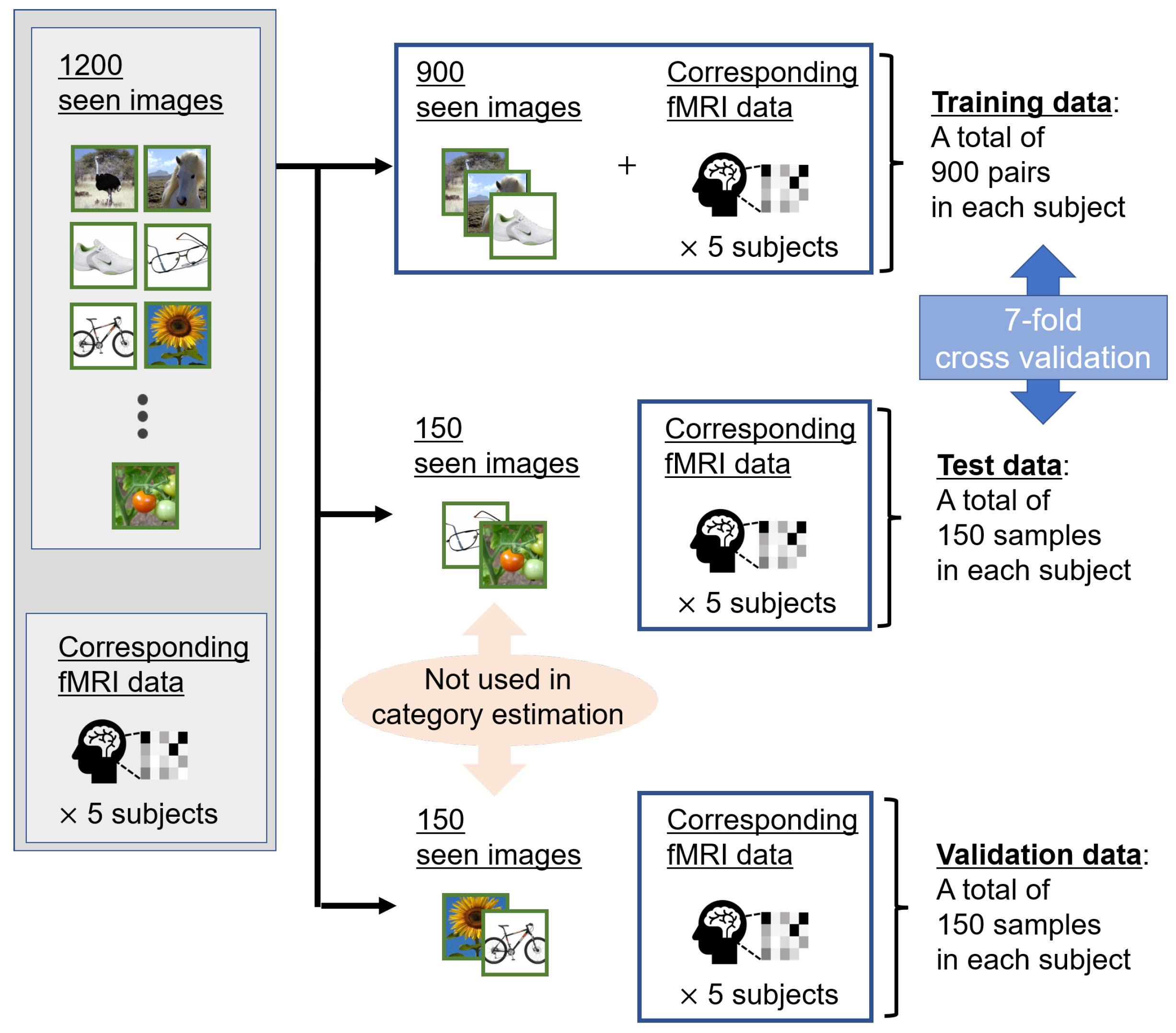

We performed cross-validation to examine the effectiveness of the proposed method without bias. Because acquiring brain activity imposes a significant burden on the subject, it is difficult to prepare many samples for an fMRI dataset. Therefore, as shown in Figure 3, we divided the 1200 pairs into 900, 150, and 150 pairs as training, validation, and test data, respectively, with all categories divided equally among the three sets. In addition, we applied 7-fold cross-validation to the 1050 pairs comprising the training and test data while keeping the validation data fixed. By interchanging the training and test data in this way, as is widely done in machine learning, we could verify the validity of the proposed method even with a small amount of test data.
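The split described above can be sketched as follows. The reading of "equally divided" as one validation image and, in each fold, one test image per category (8 = 6 + 1 + 1) is our assumption, and `make_splits` is a hypothetical helper that works on image indices only.

```python
import numpy as np


def make_splits(n_categories=150, per_category=8, n_folds=7, seed=0):
    """Hypothetical category-balanced split: one image per category is a fixed
    validation sample; the remaining images rotate through the folds so that each
    fold tests one image per category and trains on the rest."""
    assert per_category - 1 == n_folds, "one held-out image per category per fold"
    rng = np.random.default_rng(seed)
    # Row c holds the indices of the images of category c.
    ids = np.arange(n_categories * per_category).reshape(n_categories, per_category)
    ids = rng.permuted(ids, axis=1)                  # shuffle images within each category
    val = ids[:, 0]                                  # fixed validation set (150 images)
    rest = ids[:, 1:]                                # 7 per category: 1050 pairs
    folds = []
    for k in range(n_folds):
        test = rest[:, k]                            # 1 per category: 150 test pairs
        train = np.delete(rest, k, axis=1).ravel()   # 6 per category: 900 training pairs
        folds.append((train, test))
    return val, folds


val, folds = make_splits()
print(len(val), [(len(tr), len(te)) for tr, te in folds][0])  # 150 (900, 150)
```

Across the seven folds every one of the 1050 non-validation images is tested exactly once, while each fold still contains every category in both its training and test parts.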

Figure 3.

Overview of the fMRI datasets of the five subjects. We divided a total of 1200 seen images corresponding to the measured fMRI data into 900, 150, and 150 images as training, test, and validation data, respectively. The validation data were fixed, and 7-fold cross-validation was applied to the 1050 pairs of training and test data. For category estimation, we used candidate visual features averaged over other images belonging to the same category as each seen image; thus, the seen images themselves were not used as candidates for the test and validation data.

First, 4096-dimensional visual features were extracted from VGG19 [43]. VGG19 is typically pre-trained on the 1000 ImageNet categories, and we extracted the visual features from the 4096-dimensional fully connected layer farther from the output. Principal component analysis (PCA) [44] was then applied to the visual features to reduce their high dimensionality and prevent overfitting. We set the cumulative contribution ratio of PCA to 0.8, which reduced the visual features to approximately 70 dimensions.
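A minimal NumPy sketch of keeping the smallest number of principal components whose cumulative contribution ratio reaches 0.8 is shown below. The helper names are hypothetical, a random matrix stands in for the 4096-dimensional VGG19 features, and fitting PCA on the training features only (then reusing the projection) is our assumption; the text does not state which data PCA was fit on. scikit-learn's `PCA(n_components=0.8)` applies a similar rule.

```python
import numpy as np


def fit_pca(X, ratio=0.8):
    """Return (mean, components) keeping the fewest principal components whose
    cumulative explained-variance (contribution) ratio reaches `ratio`."""
    mean = X.mean(axis=0)
    Xc = X - mean
    # Rows of Vt are principal axes; squared singular values are proportional
    # to the variance captured by each axis.
    _, s, Vt = np.linalg.svd(Xc, full_matrices=False)
    explained = s ** 2 / np.sum(s ** 2)
    k = int(np.searchsorted(np.cumsum(explained), ratio) + 1)
    return mean, Vt[:k]


def transform(X, mean, components):
    """Project features onto the retained principal axes."""
    return (X - mean) @ components.T


rng = np.random.default_rng(0)
train_feats = rng.normal(size=(900, 64)) @ rng.normal(size=(64, 64))  # stand-in features
mean, comps = fit_pca(train_feats, ratio=0.8)
reduced = transform(train_feats, mean, comps)
```

Validation and test features would be passed through `transform` with the same `mean` and `comps`, so that no information from held-out data leaks into the projection.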

3.2. Experimental Conditions

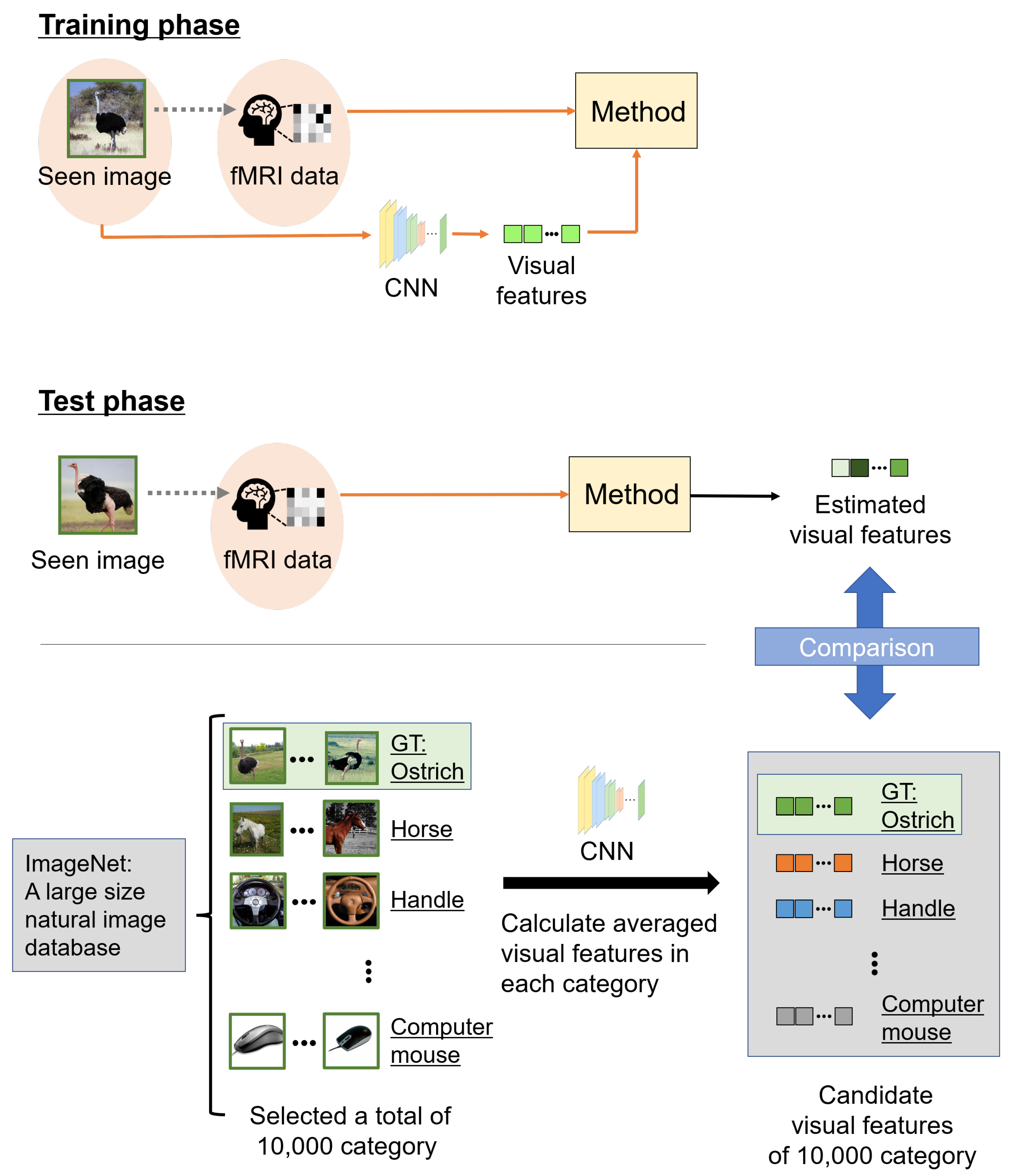

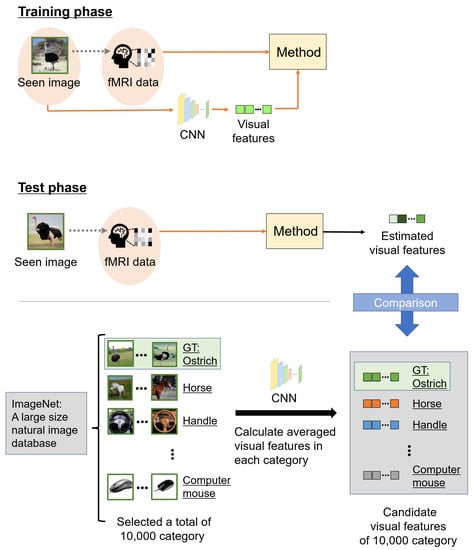

Estimation accuracy was evaluated through image category estimation. Because CNNs are mostly trained for image categorization, category estimation is an appropriate way to evaluate the representation ability of visual features extracted from a pre-trained CNN. However, some categories of the seen images in the fMRI data used in this experiment were not among those used to train the CNN classifier. Therefore, rather than using the classifier output, we evaluated estimation accuracy by comparing visual features. Figure 4 shows an overview of category estimation. We selected 10,000 categories from ImageNet and, for each category, computed the average VGG19 visual features of 5–10 randomly chosen images as the candidate visual features. Note that these 10,000 categories include the 150 image categories of the fMRI data used in the test phase. The correlations between the estimated visual features and the 10,000 candidate visual features were calculated and sorted in descending order, and the rank of the ground-truth (GT) category was defined as the image category rank G. Finally, the confidence category score S was calculated from the image category rank G as follows:

where M represents the total number of image categories, which we set to 10,000 in this experiment. The confidence category score S approaches 1 as the GT category is ranked higher (smaller G) and 0 as it is ranked lower. The confidence category scores were averaged over the 150 test samples, the seven cross-validation folds, and the five subjects, and this average was used as the evaluation metric.
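The ranking procedure can be sketched as below. The mapping S = (M - G)/(M - 1) used in the code is an assumption: it is one linear form that satisfies the stated behavior (S = 1 when the GT category ranks first, S = 0 when it ranks last), and the paper's own equation may differ. The function name and array layout are also hypothetical.

```python
import numpy as np


def confidence_scores(estimated, candidates, gt_index):
    """Rank candidate categories by Pearson correlation with each estimated
    feature vector and convert the GT rank G into a score in [0, 1].

    estimated:  (T, d) estimated visual features for T test samples
    candidates: (M, d) category-averaged candidate visual features
    gt_index:   (T,) row of `candidates` holding each sample's true category
    ASSUMPTION: S = (M - G) / (M - 1); the paper's exact formula may differ.
    """
    def zscore(A):
        return (A - A.mean(axis=1, keepdims=True)) / A.std(axis=1, keepdims=True)

    M, d = candidates.shape
    corr = zscore(estimated) @ zscore(candidates).T / d    # (T, M) Pearson r
    gt_corr = corr[np.arange(len(gt_index)), gt_index]
    # Rank G = 1 + number of candidates correlating more strongly than the GT.
    G = 1 + (corr > gt_corr[:, None]).sum(axis=1)
    return (M - G) / (M - 1)


rng = np.random.default_rng(0)
cands = rng.normal(size=(10, 20))          # 10 hypothetical candidate categories
est = np.vstack([cands[3], -cands[7]])     # a perfect match and an anti-correlated one
print(confidence_scores(est, cands, np.array([3, 7])))  # [1. 0.]
```

Averaging the returned scores over test samples, folds, and subjects (for example with `np.mean`) gives the summary metric described above.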

Figure 4.

Overview of the category estimation scheme. In the training phase, the relationship between seen images and the corresponding fMRI data was learned for each subject. In the test phase, we estimated visual features from fMRI data based on the learned relationship. The visual features to be compared, however, were computed from other images randomly chosen from ImageNet: 5–10 images from each of 10,000 categories selected from ImageNet, which included the 150 image categories of the fMRI data in the test phase. We defined the candidate visual features as the visual features extracted from these images and averaged within each category, and compared them with the visual features estimated from fMRI data. Finally, we calculated the correlations between the estimated and candidate visual features and evaluated estimation accuracy with the seen image category as the ground truth (GT).

3.3. Comparison Methods

We compared the proposed method (hereafter, PM) with several comparison methods (hereafter, CMs) using the evaluation metric above to validate the effectiveness of the PM. We used seven CMs: two that use multi-subject fMRI data and five that use single-subject fMRI data.

- Multi-subject probabilistic generative model (MSPGM):

MSPGM is based on the PGM of the PM and uses multi-subject fMRI data. Visual features are estimated from the shared features using ridge regression [45]. We set the number of latent dimensions to that of the PM and searched for the regularization parameter of ridge regression.

- Multi-view Bayesian generative model for multi-subject fMRI Data (MVBGM-MS):

MVBGM-MS [46] achieved state-of-the-art performance in brain decoding of visual cognitive contents. It uses multi-subject fMRI data, and its generative model estimates visual features via a latent space. To improve accuracy, MVBGM-MS uses not only visual features and multi-subject fMRI data but also semantic features extracted by inputting image category names into Word2vec [47]. Because the PM does not use semantic features, we used MVBGM-MS without semantic features for a fair evaluation. We set the number of latent dimensions in the same manner as in the previous study [46].

- Single-subject probabilistic generative model (SSPGM):

SSPGM is based on the PGM of the PM and uses single-subject fMRI data. Visual features are estimated from the individual features using ridge regression. We set the number of latent dimensions to that of the PM and searched for the regularization parameter of ridge regression.

- Sparse linear regression (SLR):

SLR [8] is a baseline method in brain decoding of visual cognitive contents that directly estimates visual features from fMRI data. We estimated visual features using the voxels of the fMRI data that are highly correlated with the features. Voxels were selected in descending order of correlation, and the total number of selected voxels was treated as a hyperparameter and searched for.

- Canonical correlation analysis (CCA):

CCA [48] is a baseline method for computing a latent space from multi-modal features. Visual features and fMRI data are projected into the latent space, and accuracy is evaluated in that space. We searched for the number of latent dimensions.

- Bayesian CCA (BCCA):

BCCA [49] is an extension of CCA that adopts Bayesian learning. As a generative model, BCCA constructs a latent space from visual features and fMRI data, and visual features can be estimated from fMRI data via this space. We searched for the number of latent dimensions.

- Deep CCA:

Deep CCA [50] is another extension of CCA that adopts deep learning. As in CCA, visual features and fMRI data are projected into the latent space, and accuracy is evaluated in that space. We searched for the number of latent dimensions.
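The voxel preselection used for SLR above can be sketched as follows for a single visual-feature unit. Only the preselection step is illustrated: ranking by absolute correlation is our assumption, and plain least squares stands in for the sparse Bayesian estimation that SLR [8] actually performs. The helper names are hypothetical.

```python
import numpy as np


def select_voxels(X, y, n_voxels):
    """Indices of the n_voxels columns of X (voxels) with the largest absolute
    Pearson correlation to the target feature unit y, in descending order."""
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    r = (Xc.T @ yc) / (np.linalg.norm(Xc, axis=0) * np.linalg.norm(yc) + 1e-12)
    return np.argsort(-np.abs(r))[:n_voxels]


def fit_unit_decoder(X, y, n_voxels):
    """Least-squares fit on the selected voxels plus an intercept
    (a stand-in for SLR, not SLR itself)."""
    idx = select_voxels(X, y, n_voxels)
    A = np.column_stack([X[:, idx], np.ones(len(X))])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return idx, coef


def predict_unit(X, idx, coef):
    """Apply the fitted unit decoder to new fMRI samples."""
    return np.column_stack([X[:, idx], np.ones(len(X))]) @ coef


rng = np.random.default_rng(0)
X = rng.normal(size=(100, 50))                  # 100 samples x 50 synthetic voxels
y = 2 * X[:, 5] - 3 * X[:, 17] + 0.01 * rng.normal(size=100)
idx, coef = fit_unit_decoder(X, y, n_voxels=2)
```

In practice, `n_voxels` would be the hyperparameter searched on the validation data, and one such decoder would be fitted per visual-feature dimension.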

3.4. Results and Discussion

3.4.1. Estimation Performance Evaluation

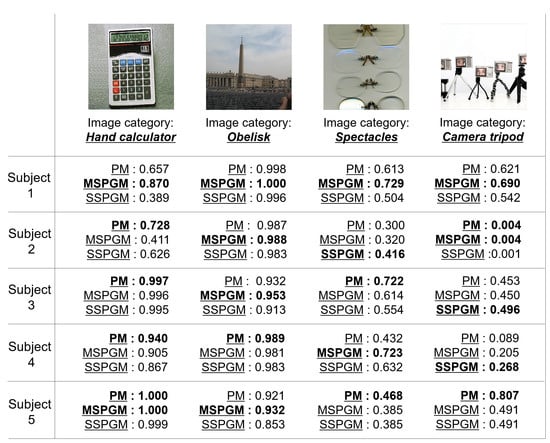

Table 1 shows the category estimation accuracy of the PM and CMs. The evaluation metric is the score averaged over the 150 test images, computed for each subject. These scores range from 0 to 1, and larger values indicate better performance. In the PM, MSPGM, and SSPGM, we set , , and the number of iterations of the EM algorithm to and 10. In addition, in the PM's visual decoder, we searched for each regularization parameter and in .
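If the PM's visual decoder is a linear map from shared and individual features to visual features with a separate L2 penalty on each projection matrix (our assumption, consistent with the two projection matrices and two regularization parameters listed for Section 2.1.2 in Table A1), it can be fitted in closed form as a ridge regression with a block-diagonal penalty. `fit_two_penalty_ridge`, `select_penalties`, the validation-MSE criterion, and the grid values are all hypothetical.

```python
import numpy as np


def fit_two_penalty_ridge(S, Z, V, lam_s, lam_z):
    """Jointly fit V ~ S @ A + Z @ B, penalizing lam_s*||A||^2 + lam_z*||B||^2.
    S: (N, ds) shared features, Z: (N, dz) individual features,
    V: (N, dv) visual features; all assumed centered (no intercept)."""
    F = np.hstack([S, Z])
    penalty = np.concatenate([np.full(S.shape[1], lam_s), np.full(Z.shape[1], lam_z)])
    coef = np.linalg.solve(F.T @ F + np.diag(penalty), F.T @ V)
    return coef[: S.shape[1]], coef[S.shape[1]:]      # A (ds, dv), B (dz, dv)


def select_penalties(S_tr, Z_tr, V_tr, S_va, Z_va, V_va, grid):
    """Grid search over (lam_s, lam_z) by validation mean squared error."""
    best = None
    for lam_s in grid:
        for lam_z in grid:
            A, B = fit_two_penalty_ridge(S_tr, Z_tr, V_tr, lam_s, lam_z)
            err = np.mean((S_va @ A + Z_va @ B - V_va) ** 2)
            if best is None or err < best[0]:
                best = (err, lam_s, lam_z)
    return best[1], best[2]


rng = np.random.default_rng(0)
S, Z = rng.normal(size=(100, 5)), rng.normal(size=(100, 4))  # synthetic features
V = S @ rng.normal(size=(5, 6)) + Z @ rng.normal(size=(4, 6)) + 0.1 * rng.normal(size=(100, 6))
lam_s, lam_z = select_penalties(S[:80], Z[:80], V[:80], S[80:], Z[80:], V[80:],
                                grid=[0.01, 1.0, 100.0])
A, B = fit_two_penalty_ridge(S[:80], Z[:80], V[:80], lam_s, lam_z)
```

Fitting both matrices jointly lets each penalty be tuned independently while accounting for correlations between shared and individual features; the PM may instead fit the two parts differently.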

Table 1.

Confidence category scores were averaged for 150 test images and five subjects in the PM and all CMs (The best scores for each subject, and the averages of all subjects are shown in bold).

As shown in Table 1, the PM's scores for most subjects and its average over the five subjects are superior to those of MVBGM-MS and MSPGM, which are based on multi-subject fMRI data. This superiority indicates the effectiveness of distinguishing between shared and individual features in multi-subject fMRI data. Notably, the PM outperformed MVBGM-MS, the state-of-the-art method. The PM's scores for all subjects and its average are also superior to those of SSPGM, SLR, CCA, Deep CCA, and BCCA, which are based on single-subject fMRI data. Because SSPGM uses the same PGM as the PM but only individual features, the PM's advantage over SSPGM shows the effectiveness of combining shared and individual features. The PM's advantage over SLR, a baseline method, confirms its effectiveness in estimating the visual features of seen images from fMRI data. Moreover, the PM outperformed CCA and Deep CCA, suggesting that it constructs more effective latent spaces than these methods. In particular, the PM substantially outperformed Deep CCA, the only CM that incorporates deep learning, and Deep CCA even scored lower than standard CCA. A possible reason is that deep learning is ill-suited to fMRI data, for which only small sample sizes are available. Finally, the PM outperformed BCCA, another generative model, confirming the superiority of our generative model in extracting shared and individual features.

3.4.2. Qualitative Evaluation

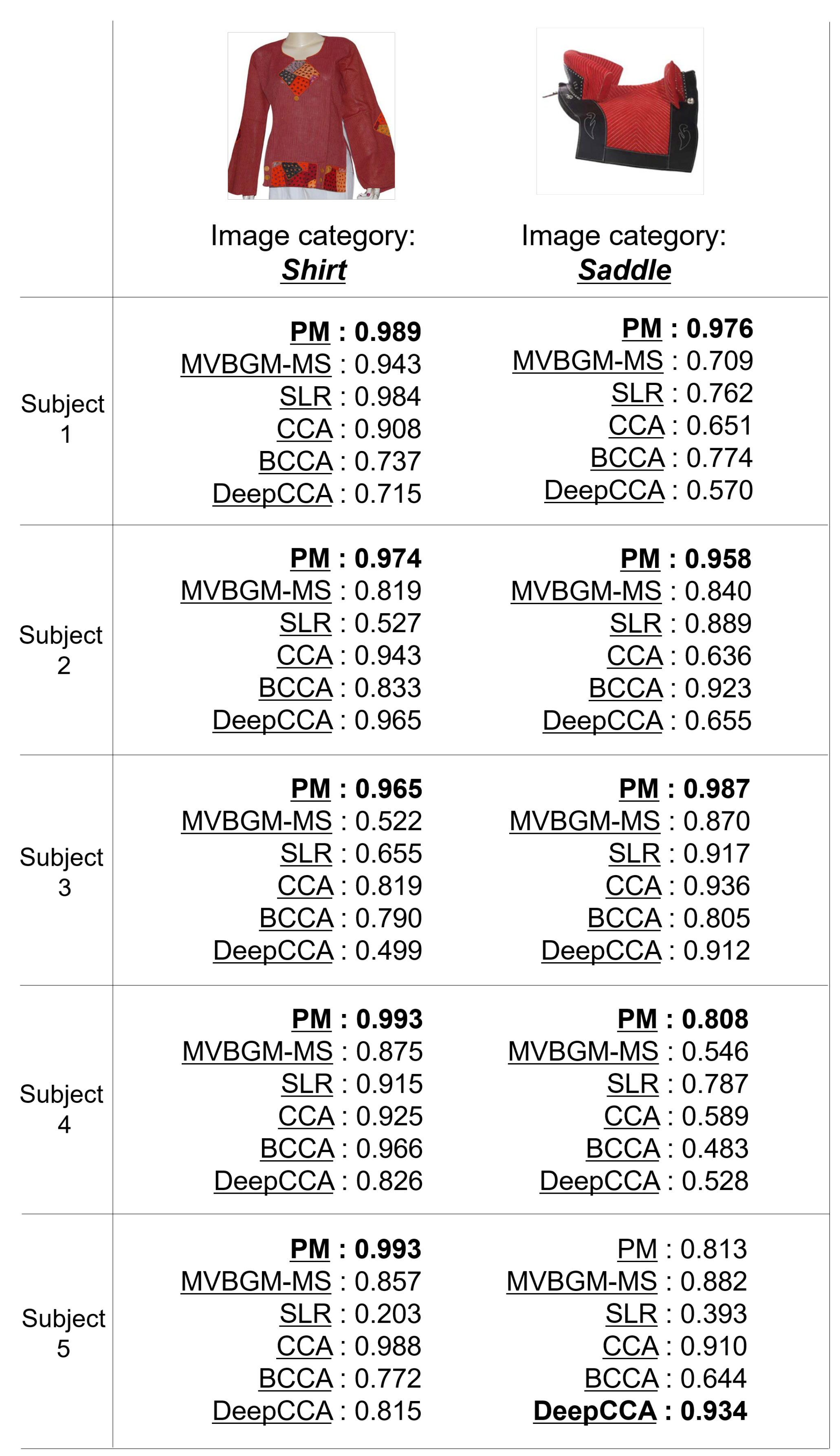

Figure 5 shows the qualitative evaluation of the PM and of MVBGM-MS, SLR, CCA, BCCA, and Deep CCA, which are methods from other studies [8,46,48,49,50,51]. For the image categories “shirt” and “saddle”, the PM achieved the best confidence category scores for most subjects. These results support the effectiveness of the PGM as a feature extractor and of using both shared and individual features.

Figure 5.

Examples of the category estimation results of the PM and five CMs (MVBGM-MS, SLR, CCA, BCCA, and Deep CCA) in the qualitative evaluation. The confidence category scores range from 0 to 1, and the best scores for each subject are shown in bold.

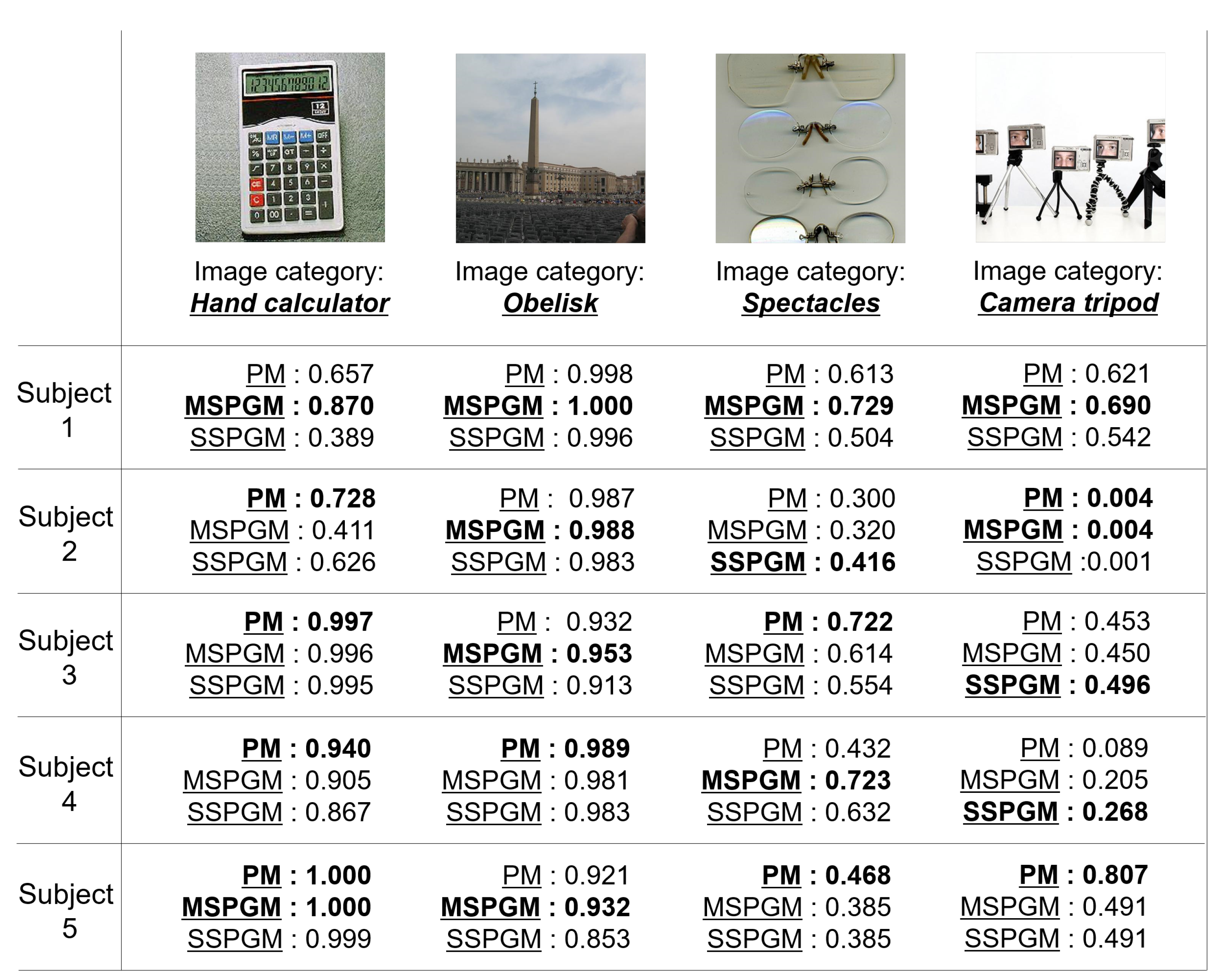

Figure 6 shows the qualitative evaluation of the PM, MSPGM, and SSPGM, which are based on our PGM. For the image category “hand calculator”, the PM achieved the best confidence category scores for most subjects. However, for the image category “obelisk”, MSPGM achieved the best scores for most subjects. These results indicate that although the PGM can extract valid shared features, it may not always extract effective individual features; owing to its characteristics, the PGM appears better suited to extracting shared features from multi-subject fMRI data. For the image categories “spectacles” and “camera tripod”, none of the methods achieved confidence category scores comparable to their averages in Table 1 for most subjects. These images contain multiple objects, and a subject's gaze may not have been focused on a single object during fMRI data acquisition. In addition, part of a human face appears in the “camera tripod” image, which may have affected the subjects' cognition. Category estimation may therefore remain difficult when subjects view images that contain multiple objects or objects unrelated to the image category.

Figure 6.

Examples of the category estimation results of PM and two CMs (MSPGM and SSPGM) based on PGM. The confidence category scores range from 0 to 1, and the best scores for each subject are shown in bold.

4. Conclusions and Future Work

In this article, we proposed a method for estimating visual information from multi-subject fMRI data obtained while subjects observed images. The PM estimated visual features using shared features from multi-subject fMRI data and individual features from single-subject fMRI data. PGMs were constructed for each type of feature from the fMRI data and used as effective feature extractors. In addition, we constructed a visual decoder that estimates visual features from the shared and individual features. The experimental results verified the effectiveness of the proposed approach. Although fMRI data tend to contain more measurement noise and larger individual differences than other biological signals such as eye gaze, this experiment confirmed the effectiveness of combining multi-subject fMRI data. These findings support the use of machine learning for analyzing biological signals whose acquisition is constrained by time and physical burden.

Apart from increasing the sample size by expanding the fMRI dataset, using modalities other than fMRI data may help improve accuracy. In particular, introducing other information that represents an image, such as image captions, is expected to improve the results. For example, visual features could be estimated directly from fMRI data together with features extracted by an image captioning model (caption features), or a latent space combining fMRI data, visual features, and caption features could be constructed to improve expressive ability. The human brain contains regions specialized for object recognition related to image categories and regions related to lower-order information such as object color and shape [41]. Although visual features extracted by a CNN contain information specific to image category classification, they may lack sufficient information on image color and shape. Therefore, introducing image captions, which can describe the colors and shapes in images, is considered an effective way to extract image-related information from fMRI data obtained while subjects view the images.

Author Contributions

Conceptualization, T.H., K.M., T.O. and M.H.; methodology, T.H., K.M., T.O. and M.H.; software, T.H.; validation, T.H., K.M., T.O. and M.H.; data curation, T.H.; writing—original draft preparation, T.H.; writing—review and editing, K.M., T.O. and M.H.; visualization, T.H.; funding acquisition, T.O. and M.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partly supported by JSPS KAKENHI, grant number JP21H03456, and AMED, grant number JP21zf0127004.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

A publicly available dataset was analyzed in this study. These data can be found here: https://github.com/KamitaniLab/GenericObjectDecoding (accessed on 10 August 2022).

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Table A1.

List of variables used in the proposed method in the training and test phases, corresponding to Sections 2.1 and 2.2.


| Symbol | Description |
|---|---|
| Section 2.1.1: Construction of PGM | |
| | fMRI data corresponding to nth image in ith subject |
| | fMRI data in ith subject |
| | Shared features corresponding to nth image |
| | Shared features |
| | Projection matrix that transforms fMRI data in ith subject into shared features |
| I | Identity matrix |
| | Covariance matrix of shared features |
| | Mean of fMRI data in ith subject |
| | Variance of fMRI data in ith subject |
| | Concatenated fMRI data corresponding to nth image for total J subjects |
| | Concatenated mean for total J subjects |
| | Concatenated projection matrix for total J subjects |
| | Error term of shared features |
| | Joint covariance |
| | Expected value of expectation maximization (EM) algorithm |
| | Variance of EM algorithm |
| | Expected value in maximization step of EM algorithm |
| | Updated projection matrix that transforms fMRI data in ith subject |
| | Updated variance of fMRI data in ith subject |
| | Updated covariance matrix of shared features |
| | Estimated shared features corresponding to nth image in ith subject |
| J | Number of subjects |
| N | Number of seen images |
| n | Index of seen images |
| i | Index of subjects |
| | Dimensions of shared features |
| | Dimensions of fMRI data in ith subject |
| | Sum of dimensions for total J subjects |
| | Updated PGM parameters |
| | PGM parameters before update |
| | Individual features corresponding to nth image in ith subject |
| | Individual features in ith subject |
| | Projection matrix that transforms fMRI data in ith subject into individual features |
| | Variance of fMRI data in ith subject |
| | Covariance matrix of individual features |
| | Joint covariance in ith subject |
| | Error term of individual features in ith subject |
| | Dimensions of individual features |
| Section 2.1.2: Construction of visual decoder | |
| | Visual features of nth image |
| | Visual features |
| | Estimated shared features in ith subject |
| | Projection matrix that transforms shared features into visual features in ith subject |
| | Projection matrix that transforms individual features into visual features in ith subject |
| | Regularization parameter corresponding to shared features in ith subject |
| | Regularization parameter corresponding to individual features in ith subject |
| | Dimensions of visual features |
| Section 2.2.1: Extraction of shared and individual features | |
| | fMRI data in ith subject |
| | Shared features in ith subject |
| | Individual features in ith subject |
| Section 2.2.2: Estimation of visual features | |
| | Visual features estimated by visual decoder in ith subject |

References

- Ponce, C.R.; Xiao, W.; Schade, P.F.; Hartmann, T.S.; Kreiman, G.; Livingstone, M.S. Evolving images for visual neurons using a deep generative network reveals coding principles and neuronal preferences. Cell 2019, 177, 999–1009. [Google Scholar] [CrossRef] [PubMed]

- Fazli, S.; Mehnert, J.; Steinbrink, J.; Curio, G.; Villringer, A.; Müller, K.R.; Blankertz, B. Enhanced performance by a hybrid NIRS–EEG brain computer interface. Neuroimage 2012, 59, 519–529. [Google Scholar] [CrossRef] [PubMed]

- Müller, K.R.; Tangermann, M.; Dornhege, G.; Krauledat, M.; Curio, G.; Blankertz, B. Machine learning for real-time single-trial EEG-analysis: From brain–computer interfacing to mental state monitoring. J. Neurosci. Methods 2008, 167, 82–90. [Google Scholar] [CrossRef]

- Engel, S.A.; Rumelhart, D.E.; Wandell, B.A.; Lee, A.T.; Glover, G.H.; Chichilnisky, E.J.; Shadlen, M.N. fMRI of human visual cortex. Nature 1994, 369, 525. [Google Scholar] [CrossRef] [PubMed]

- Engel, S.A.; Glover, G.H.; Wandell, B.A. Retinotopic organization in human visual cortex and the spatial precision of functional MRI. Cereb. Cortex 1997, 7, 181–192. [Google Scholar] [CrossRef] [PubMed]

- Haxby, J.V.; Gobbini, M.I.; Furey, M.L.; Ishai, A.; Schouten, J.L.; Pietrini, P. Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science 2001, 293, 2425–2430. [Google Scholar] [CrossRef]

- Kay, K.N.; Naselaris, T.; Prenger, R.J.; Gallant, J.L. Identifying natural images from human brain activity. Nature 2008, 452, 352–355. [Google Scholar] [CrossRef]

- Horikawa, T.; Kamitani, Y. Generic decoding of seen and imagined objects using hierarchical visual features. Nat. Commun. 2017, 8, 15037. [Google Scholar] [CrossRef]

- Shen, G.; Dwivedi, K.; Majima, K.; Horikawa, T.; Kamitani, Y. End-to-end deep image reconstruction from human brain activity. Front. Comput. Neurosci. 2019, 13, 21. [Google Scholar] [CrossRef]

- Han, K.; Wen, H.; Shi, J.; Lu, K.H.; Zhang, Y.; Fu, D.; Liu, Z. Variational autoencoder: An unsupervised model for encoding and decoding fMRI activity in visual cortex. NeuroImage 2019, 198, 125–136. [Google Scholar] [CrossRef]

- Baillet, S. Magnetoencephalography for brain electrophysiology and imaging. Nat. Neurosci. 2017, 20, 327–339. [Google Scholar] [CrossRef] [PubMed]

- Zarief, C.N.; Hussein, W. Decoding the human brain activity and predicting the visual stimuli from magnetoencephalography (meg) recordings. In Proceedings of the 2019 International Conference on Intelligent Medicine and Image Processing, Bali, Indonesia, 19–22 April 2019; pp. 35–42. [Google Scholar]

- Liljeström, M.; Hulten, A.; Parkkonen, L.; Salmelin, R. Comparing MEG and fMRI views to naming actions and objects. Hum. Brain Mapp. 2009, 30, 1845–1856. [Google Scholar] [CrossRef] [PubMed]

- Taylor, M.; Donner, E.; Pang, E. fMRI and MEG in the study of typical and atypical cognitive development. Neurophysiol. Clin./Clin. Neurophysiol. 2012, 42, 19–25. [Google Scholar]

- Zheng, W.L.; Santana, R.; Lu, B.L. Comparison of classification methods for EEG-based emotion recognition. In Proceedings of the World Congress on Medical Physics and Biomedical Engineering, Toronto, ON, Canada, 7–12 June 2015; pp. 1184–1187. [Google Scholar]

- Zheng, W.L.; Zhu, J.Y.; Lu, B.L. Identifying stable patterns over time for emotion recognition from EEG. IEEE Trans. Affect. Comput. 2017, 10, 417–429. [Google Scholar] [CrossRef]

- Hemanth, D.J.; Anitha, J. Brain signal based human emotion analysis by circular back propagation and Deep Kohonen Neural Networks. Comput. Electr. Eng. 2018, 68, 170–180. [Google Scholar] [CrossRef]

- Matsuo, E.; Kobayashi, I.; Nishimoto, S.; Nishida, S.; Asoh, H. Describing semantic representations of brain activity evoked by visual stimuli. In Proceedings of the 2018 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Miyazaki, Japan, 7–10 October 2018; pp. 576–583. [Google Scholar]

- Takada, S.; Togo, R.; Ogawa, T.; Haseyama, M. Generation of viewed image captions from human brain activity via unsupervised text latent space. In Proceedings of the 2020 IEEE International Conference on Image Processing (ICIP), Abu Dhabi, United Arab Emirates, 25–28 October 2020; pp. 2521–2525. [Google Scholar]

- Shen, G.; Horikawa, T.; Majima, K.; Kamitani, Y. Deep image reconstruction from human brain activity. PLoS Comput. Biol. 2019, 15, e1006633. [Google Scholar] [CrossRef]

- Beliy, R.; Gaziv, G.; Hoogi, A.; Strappini, F.; Golan, T.; Irani, M. From voxels to pixels and back: Self-supervision in natural-image reconstruction from fMRI. In Proceedings of the Advances in Neural Information Processing Systems 32, Annual Conference on Neural Information Processing Systems 2019, Vancouver, BC, Canada, 8–14 December 2019. [Google Scholar]

- Ren, Z.; Li, J.; Xue, X.; Li, X.; Yang, F.; Jiao, Z.; Gao, X. Reconstructing seen image from brain activity by visually-guided cognitive representation and adversarial learning. NeuroImage 2021, 228, 117602. [Google Scholar]

- Nicolelis, M.A. Actions from thoughts. Nature 2001, 409, 403–407. [Google Scholar] [CrossRef]

- Lebedev, M.A.; Nicolelis, M.A. Brain-machine interfaces: Past, present and future. Trends Neurosci. 2006, 29, 536–546. [Google Scholar] [CrossRef]

- Patil, P.G.; Turner, D.A. The development of brain-machine interface neuroprosthetic devices. Neurotherapeutics 2008, 5, 137–146. [Google Scholar] [CrossRef]

- Cox, D.D.; Savoy, R.L. Functional magnetic resonance imaging (fMRI) ‘brain reading’: Detecting and classifying distributed patterns of fMRI activity in human visual cortex. Neuroimage 2003, 19, 261–270. [Google Scholar] [CrossRef]

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297. [Google Scholar] [CrossRef]

- Daugman, J.G. Uncertainty relation for resolution in space, spatial frequency, and orientation optimized by two-dimensional visual cortical filters. JOSA A 1985, 2, 1160–1169.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems 25: 26th Annual Conference on Neural Information Processing Systems 2012, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

- Nonaka, S.; Majima, K.; Aoki, S.C.; Kamitani, Y. Brain Hierarchy Score: Which Deep Neural Networks Are Hierarchically Brain-Like? iScience 2021, 24, 103013.

- Akamatsu, Y.; Harakawa, R.; Ogawa, T.; Haseyama, M. Brain decoding of viewed image categories via semi-supervised multi-view Bayesian generative model. IEEE Trans. Signal Process. 2020, 68, 5769–5781.

- Chen, P.H.C.; Chen, J.; Yeshurun, Y.; Hasson, U.; Haxby, J.; Ramadge, P.J. A reduced-dimension fMRI shared response model. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 460–468.

- Higashi, T.; Maeda, K.; Ogawa, T.; Haseyama, M. Estimation of viewed images using individual and shared brain responses. In Proceedings of the IEEE 9th Global Conference on Consumer Electronics, Kobe, Japan, 13–16 October 2020; pp. 716–717.

- Higashi, T.; Maeda, K.; Ogawa, T.; Haseyama, M. Estimation of Visual Features of Viewed Image From Individual and Shared Brain Information Based on fMRI Data Using Probabilistic Generative Model. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Toronto, ON, Canada, 6–11 June 2021; pp. 1335–1339.

- Papadimitriou, A.; Passalis, N.; Tefas, A. Visual representation decoding from human brain activity using machine learning: A baseline study. Pattern Recognit. Lett. 2019, 128, 38–44.

- Bishop, C.M. Pattern Recognition and Machine Learning; Springer: New York, NY, USA, 2006.

- Dempster, A.P.; Laird, N.M.; Rubin, D.B. Maximum likelihood from incomplete data via the EM algorithm. J. R. Stat. Soc. Ser. B (Methodol.) 1977, 39, 1–22.

- Kourtzi, Z.; Kanwisher, N. Cortical regions involved in perceiving object shape. J. Neurosci. 2000, 20, 3310–3318.

- Kanwisher, N.; McDermott, J.; Chun, M.M. The fusiform face area: A module in human extrastriate cortex specialized for face perception. J. Neurosci. 1997, 17, 4302–4311.

- Epstein, R.; Kanwisher, N. A cortical representation of the local visual environment. Nature 1998, 392, 598–601.

- Sereno, M.I.; Dale, A.; Reppas, J.; Kwong, K.; Belliveau, J.; Brady, T.; Rosen, B.; Tootell, R. Borders of multiple visual areas in humans revealed by functional magnetic resonance imaging. Science 1995, 268, 889–893.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Wold, S.; Esbensen, K.; Geladi, P. Principal component analysis. Chemom. Intell. Lab. Syst. 1987, 2, 37–52.

- Hoerl, A.E.; Kennard, R.W. Ridge regression: Biased estimation for nonorthogonal problems. Technometrics 1970, 12, 55–67.

- Akamatsu, Y.; Harakawa, R.; Ogawa, T.; Haseyama, M. Multi-view Bayesian generative model for multi-subject fMRI data on brain decoding of viewed image categories. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Barcelona, Spain, 4–8 May 2020; pp. 1215–1219.

- Mikolov, T.; Sutskever, I.; Chen, K.; Corrado, G.S.; Dean, J. Distributed representations of words and phrases and their compositionality. In Proceedings of the Advances in Neural Information Processing Systems 26: 27th Annual Conference on Neural Information Processing Systems 2013, Lake Tahoe, NV, USA, 5–10 December 2013.

- Hotelling, H. Relations between two sets of variates. In Breakthroughs in Statistics; Springer: Berlin/Heidelberg, Germany, 1992; pp. 162–190.

- Fujiwara, Y.; Miyawaki, Y.; Kamitani, Y. Modular encoding and decoding models derived from Bayesian canonical correlation analysis. Neural Comput. 2013, 25, 979–1005.

- Andrew, G.; Arora, R.; Bilmes, J.; Livescu, K. Deep canonical correlation analysis. In Proceedings of the 30th International Conference on Machine Learning, Atlanta, GA, USA, 16–21 June 2013; pp. 1247–1255.

- Ek, C.H.; Torr, P.H.; Lawrence, N.D. Gaussian process latent variable models for human pose estimation. In Proceedings of the International Workshop on Machine Learning for Multi-modal Interaction, Brno, Czech Republic, 28–30 June 2007; Springer: Berlin/Heidelberg, Germany, 2007; pp. 132–143.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).