A Detection and Tracking Method Based on Heterogeneous Multi-Sensor Fusion for Unmanned Mining Trucks

Abstract

1. Introduction

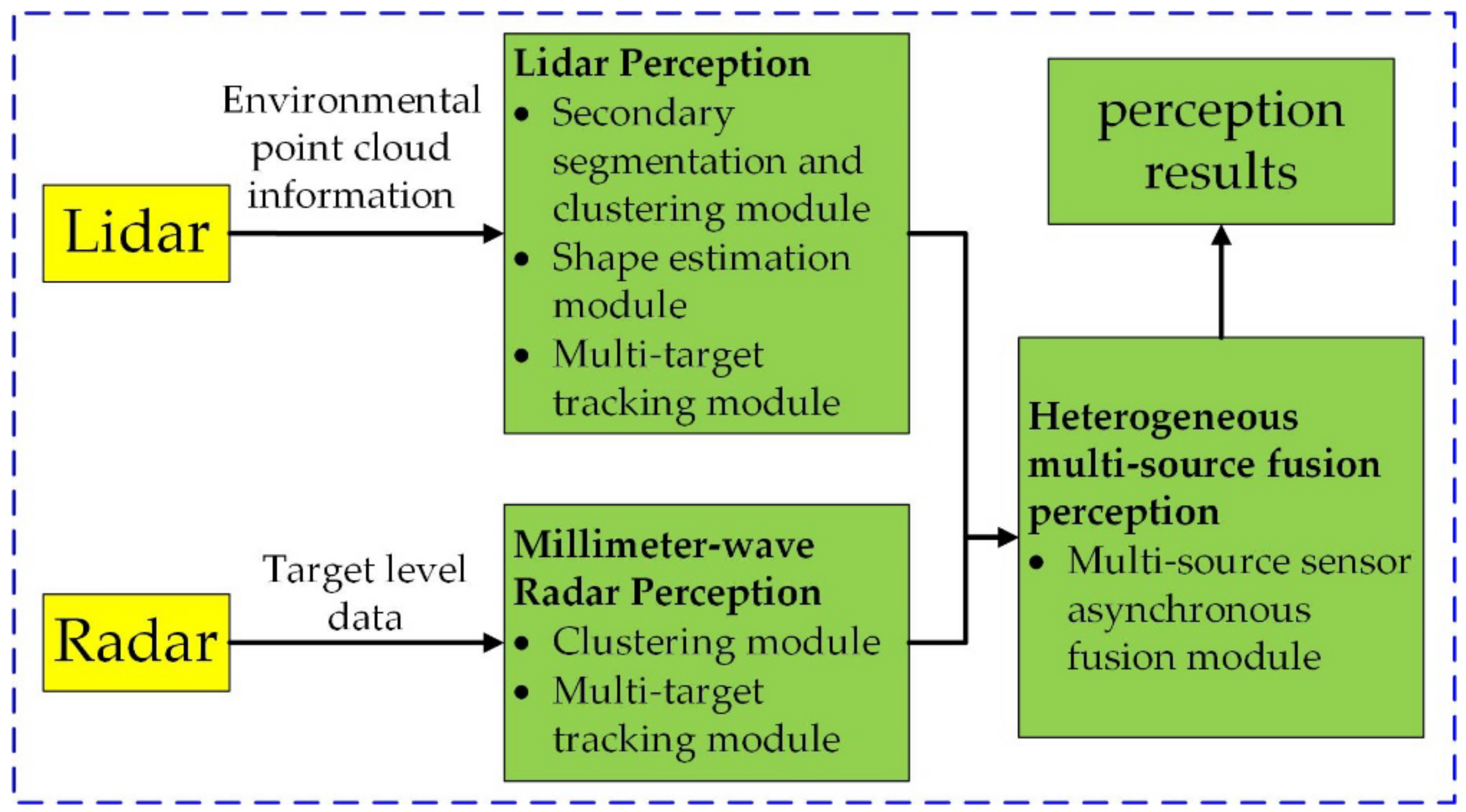

2. Method

2.1. System Framework

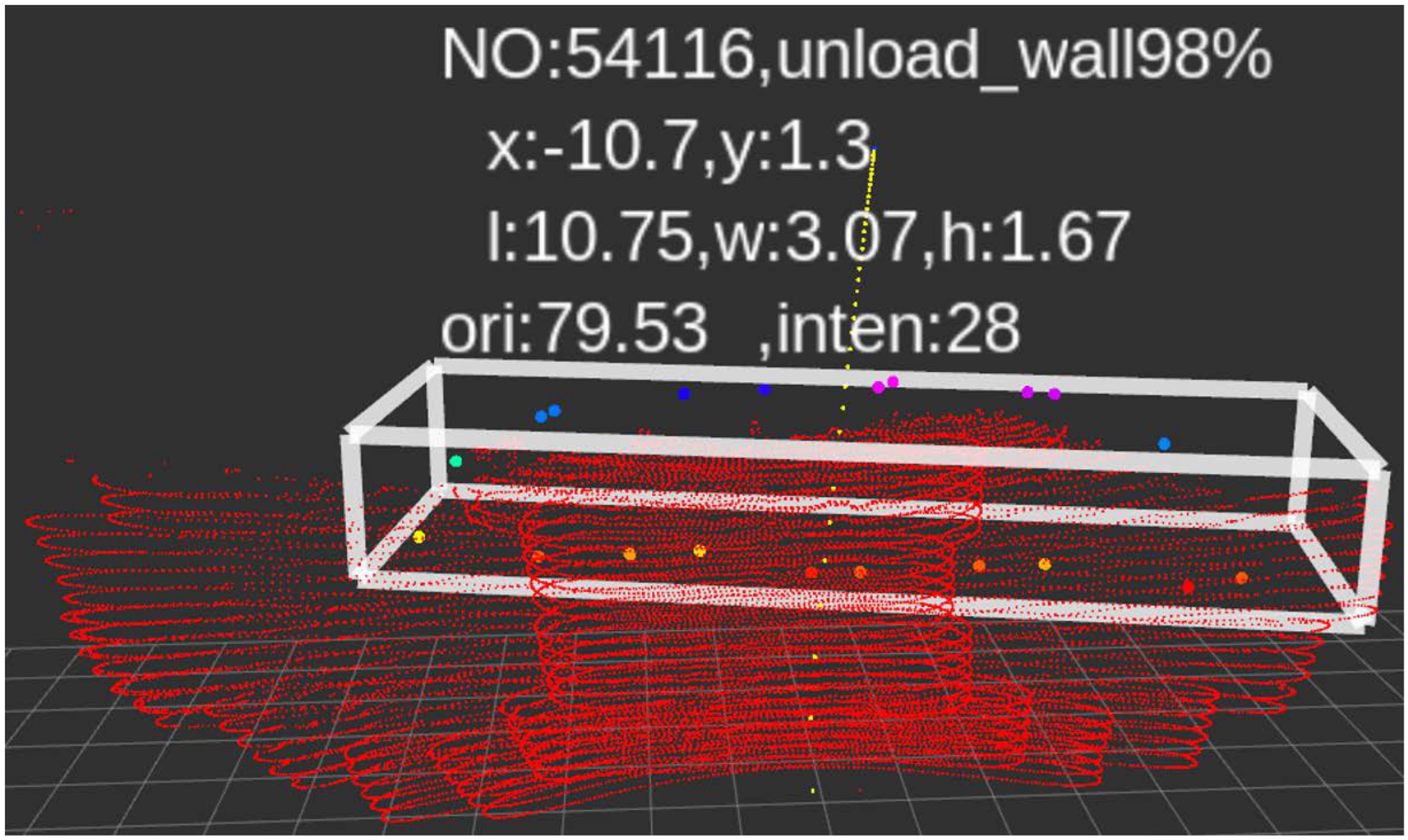

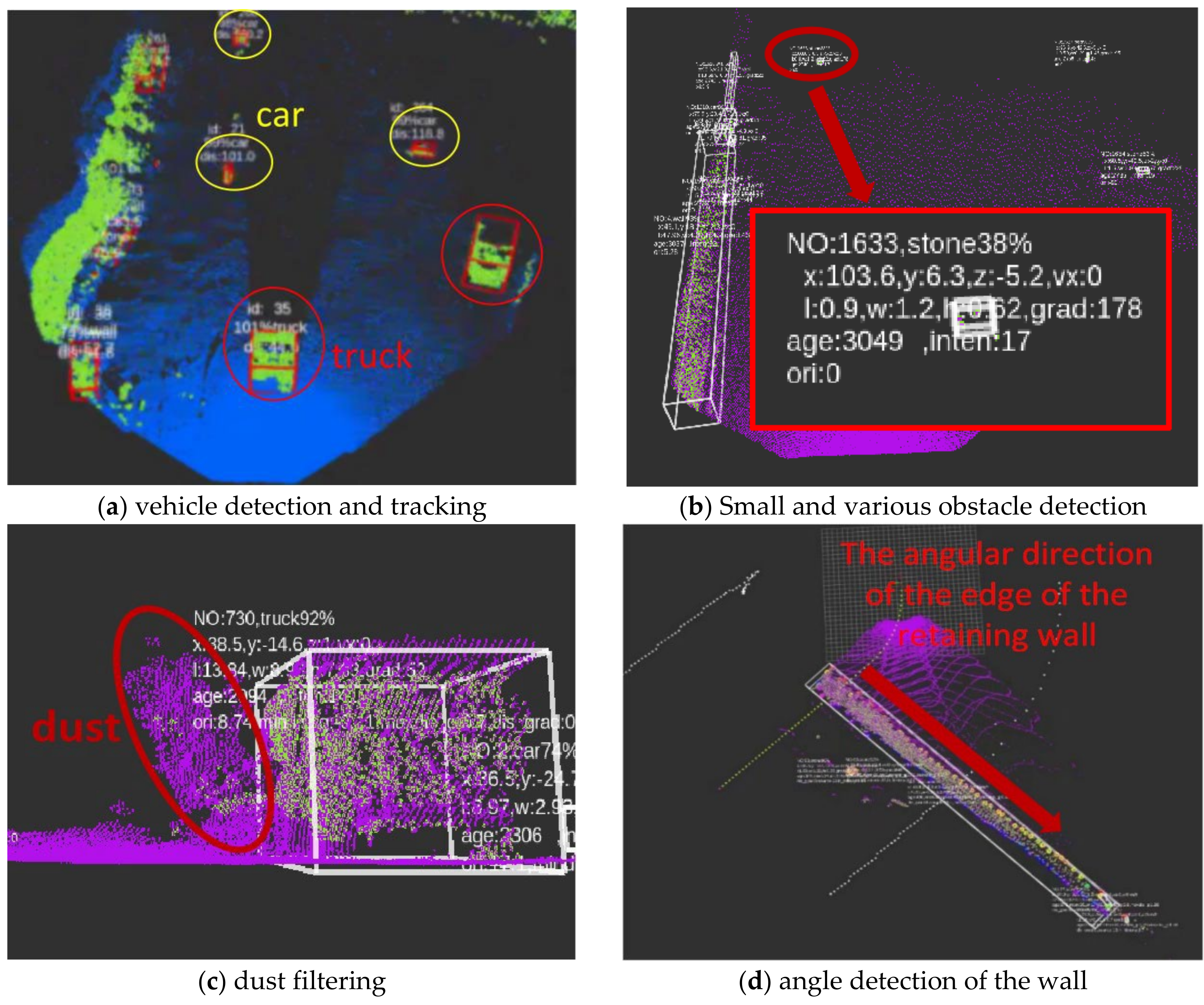

2.1.1. Lidar Perception

2.1.2. Millimeter-Wave Radar Perception

2.1.3. Heterogeneous Multi-Source Fusion Perception

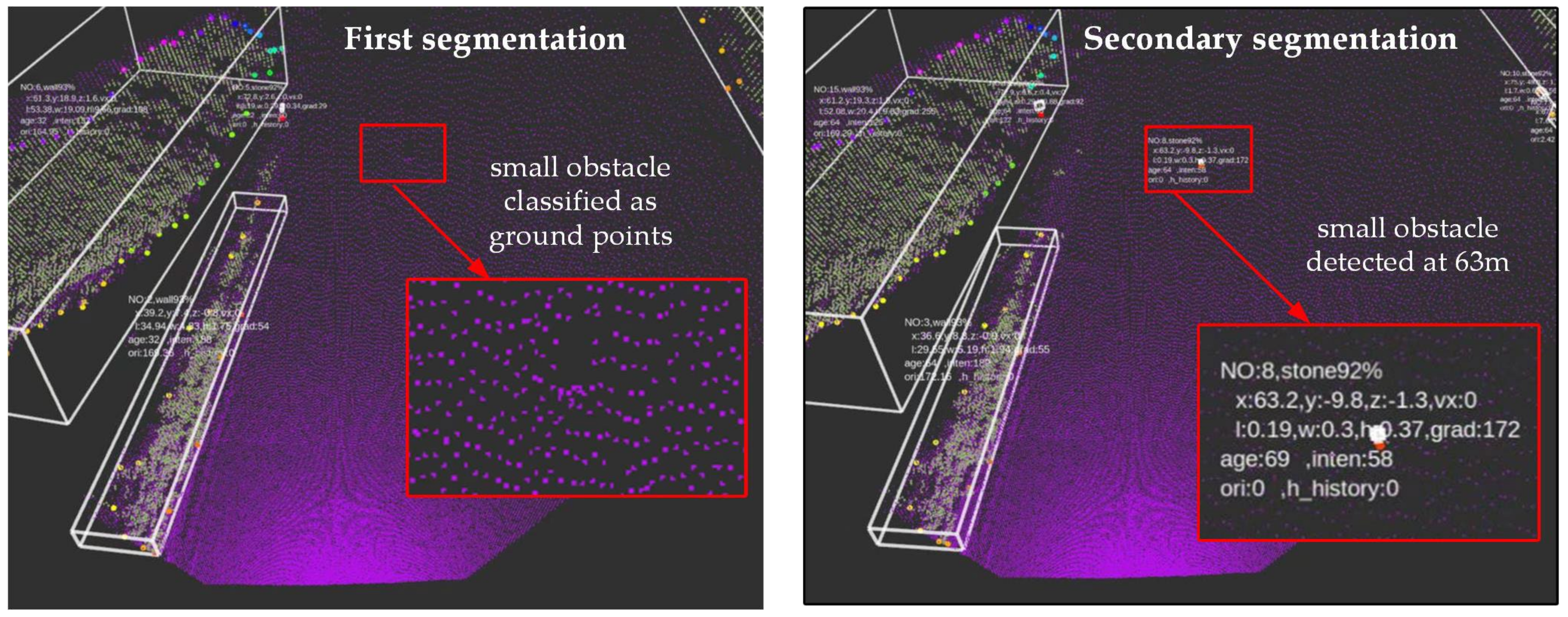

2.2. Secondary Segmentation and Clustering Module

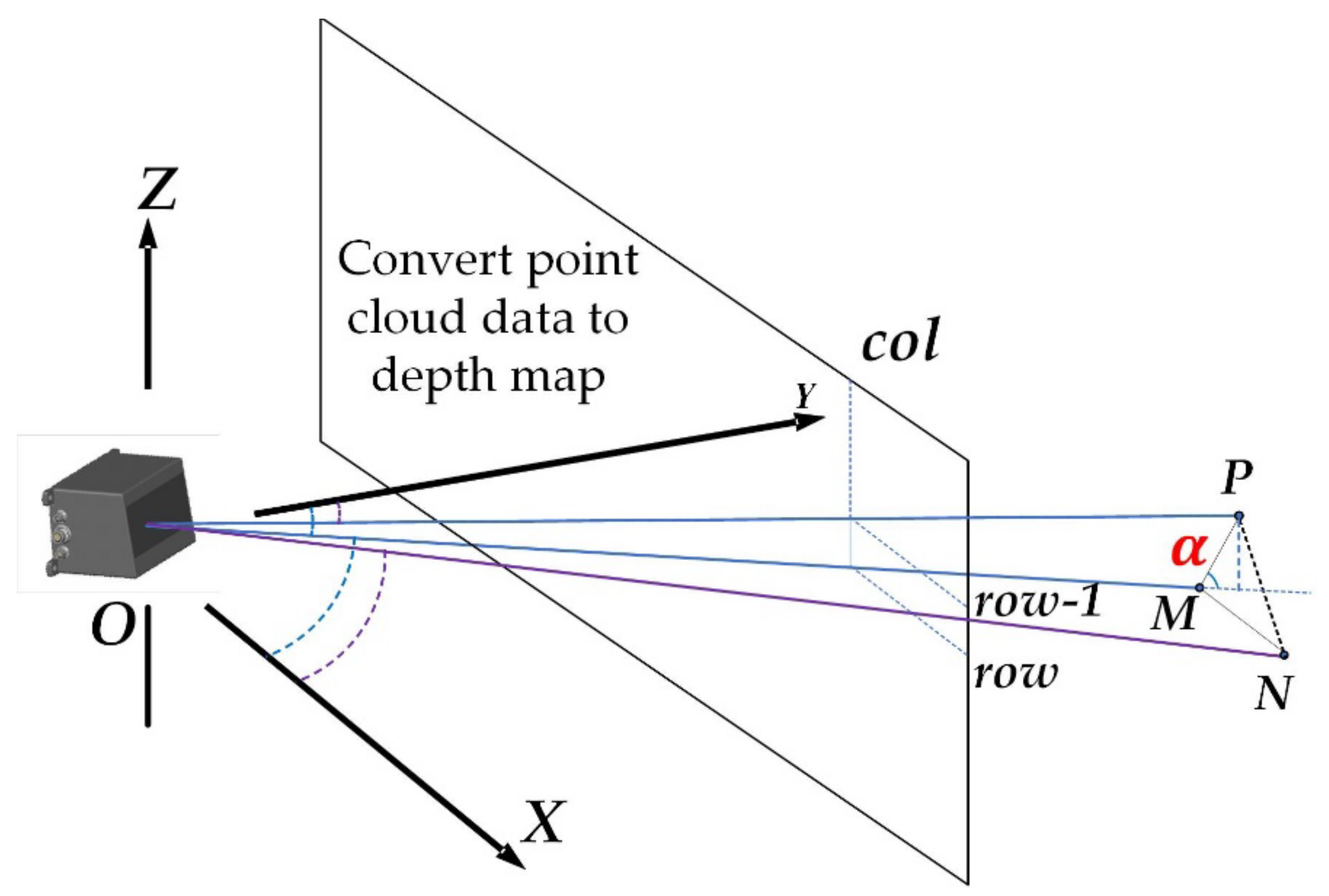

2.2.1. Point Cloud Depth Map Construction

2.2.2. Point Cloud First Segmentation

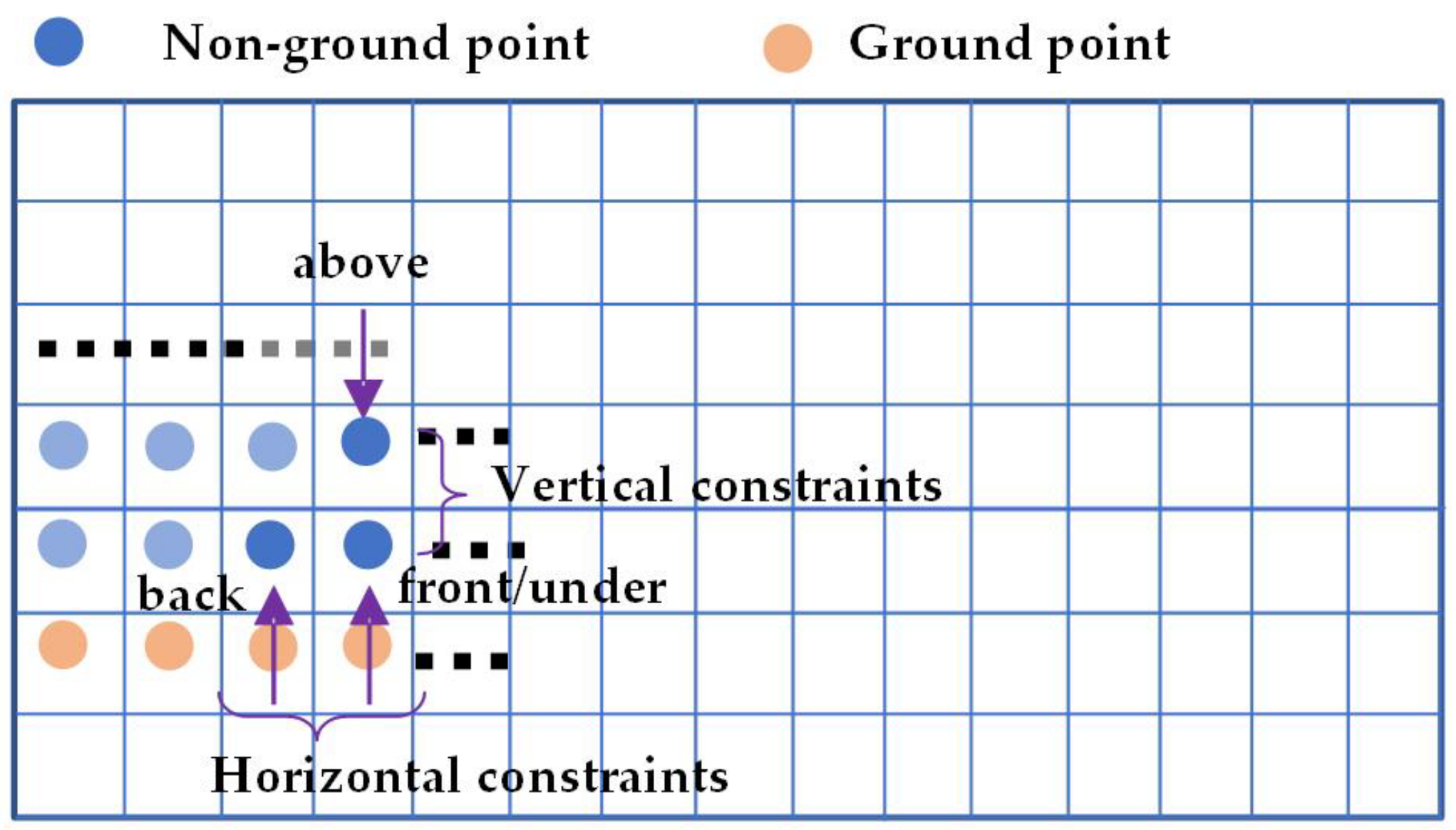

2.2.3. Secondary Segmentation

2.3. Clustering

2.4. Shape Estimation Module

2.5. Multi-Target Tracking Module

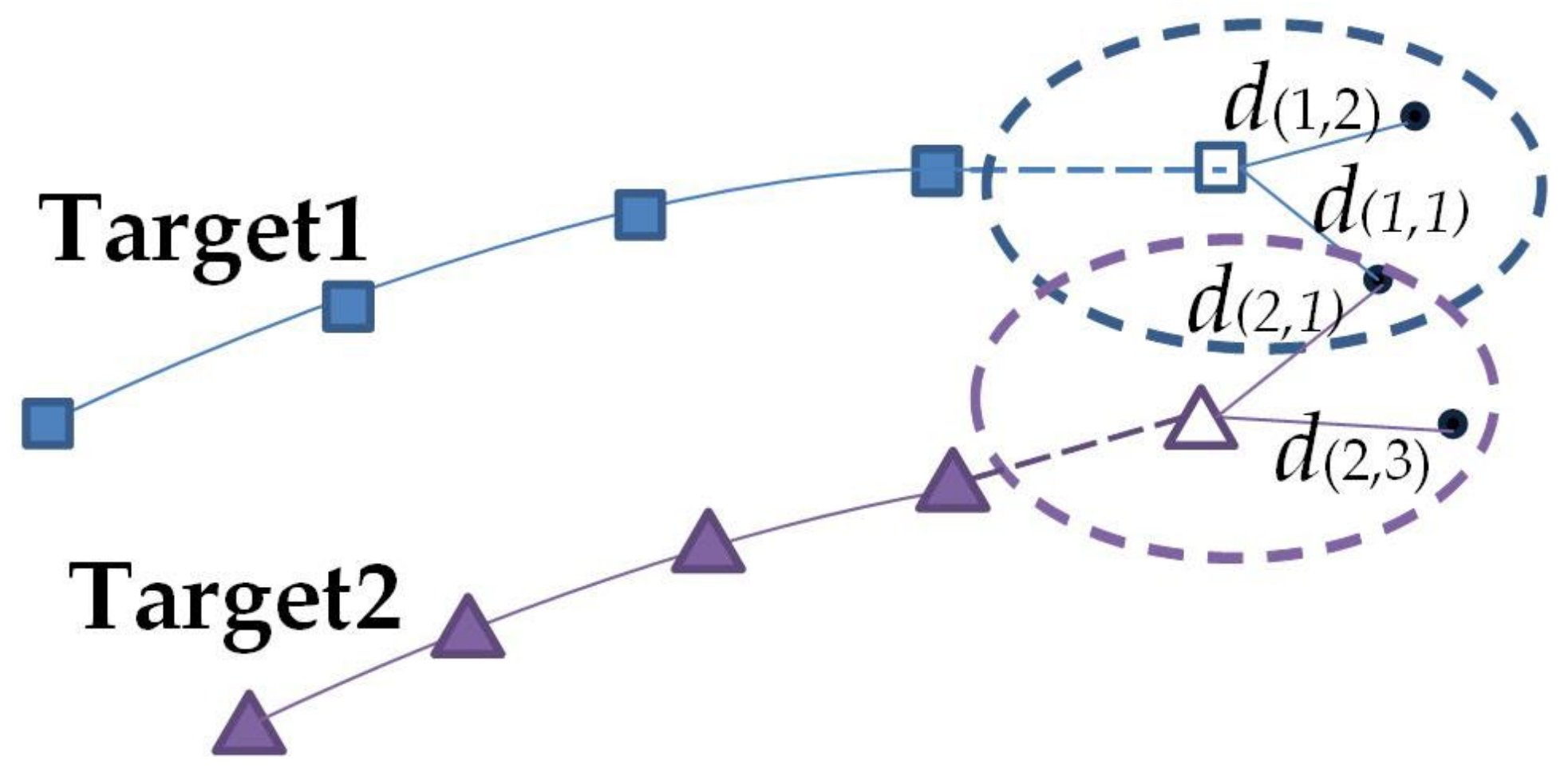

2.5.1. Data Association

2.5.2. Track Management

2.6. Multi-Source Sensor Asynchronous Fusion Module

2.6.1. Time-Space Synchronization of Multi-Source Sensors

2.6.2. Target State Fusion

| Algorithm 1 The fusion algorithm for dust scenes. |
|:---|
| Input: Lidar sensing results, Radar sensing results, matching results, tracking results, dust warning information. |
| Output: Fusion perception results. |
| # Calculate the influence factor value of each item. |
| 1: length influence factor value = obj_length / length_threshold |
| 2: width influence factor value = obj_width / width_threshold |
| 3: height influence factor value = obj_height / height_threshold |
| 4: velocity influence factor value = obj_vel / vel_threshold |
| 5: track influence factor value = obj_track_frames^1.5 / maximum track length |
| 6: source of object influence factor value: only Lidar = 0, only Radar = 1, Lidar and Radar = 2 |
| 7: match influence factor value = obj_match_frames^2 / maximum track length |
| # Set the default value of the influence coefficient corresponding to each influence factor. |
| 8: Default: coeff(length) = coeff(width) = 0.3, coeff(height) = 0.5, coeff(velocity) = 0.5, coeff(track) = 1.0, coeff(source) = 0, coeff(match) = 1.0 |
| # Determine whether a high concentration of dust ahead is blocking the view. |
| # Calculate the confidence of the object. |
| 9: if high concentration dust == true |
| 10: then |
| 11: coeff(source) = 1.0, coeff(velocity) = 1.0, coeff(match) = 0, coeff(track) = 1.5, coeff(height) = 0 |
| 12: obj_confidence = Σ influence factor * coeff(influence factor) |
| 13: if obj_confidence > confidence_threshold |
| 14: then |
| 15: output obj |
| 16: end if |
| 17: else |
| 18: obj_confidence = Σ influence factor * coeff(influence factor), using the default coefficients |
| 19: if obj_confidence > confidence_threshold |
| 20: then |
| 21: output obj |
| 22: end if |
| 23: end if |
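Algorithm 1 can be sketched in Python as below. This is a minimal, non-authoritative sketch rather than the authors' implementation. Both branches of Algorithm 1 share the same weighted sum and threshold test, and only the coefficient set differs, so the sketch moves the dust branch into coefficient selection. The coefficients and factor formulas follow steps 1 to 11. The paper gives no values for length_threshold, width_threshold, height_threshold, vel_threshold, the maximum track length or confidence_threshold, so the `Thresholds` values used here are hypothetical. The names `TrackedObject`, `Thresholds`, `confidence` and `fuse` are illustrative, and using the magnitude of the velocity is an assumption.

```python
from dataclasses import dataclass
from enum import IntEnum


class Source(IntEnum):
    """Source influence factor values (Algorithm 1, step 6)."""
    LIDAR_ONLY = 0
    RADAR_ONLY = 1
    LIDAR_AND_RADAR = 2


@dataclass
class TrackedObject:
    length: float       # bounding-box length
    width: float        # bounding-box width
    height: float       # bounding-box height
    velocity: float     # speed; its magnitude is used (assumption)
    track_frames: int   # number of frames the object has been tracked
    match_frames: int   # number of frames with a Lidar/Radar match
    source: Source


@dataclass
class Thresholds:
    # Hypothetical normalisation constants: the paper does not publish values.
    length: float
    width: float
    height: float
    velocity: float
    max_track_length: float
    confidence: float


# Default influence coefficients (step 8).
DEFAULT_COEFFS = {"length": 0.3, "width": 0.3, "height": 0.5, "velocity": 0.5,
                  "track": 1.0, "source": 0.0, "match": 1.0}

# Coefficients replaced when high-concentration dust blocks the view (step 11).
DUST_OVERRIDES = {"source": 1.0, "velocity": 1.0, "match": 0.0,
                  "track": 1.5, "height": 0.0}


def influence_factors(obj: TrackedObject, th: Thresholds) -> dict:
    """Steps 1-7: one normalised value per influence item."""
    return {
        "length": obj.length / th.length,
        "width": obj.width / th.width,
        "height": obj.height / th.height,
        "velocity": abs(obj.velocity) / th.velocity,
        "track": obj.track_frames ** 1.5 / th.max_track_length,
        "source": float(obj.source),
        "match": obj.match_frames ** 2 / th.max_track_length,
    }


def coefficients(dust_ahead: bool) -> dict:
    """Steps 8-11: defaults, with the dust overrides applied when needed."""
    coeffs = dict(DEFAULT_COEFFS)  # copy so the defaults are never mutated
    if dust_ahead:
        coeffs.update(DUST_OVERRIDES)
    return coeffs


def confidence(obj: TrackedObject, th: Thresholds, dust_ahead: bool) -> float:
    """Steps 12 and 18: weighted sum of the influence factors."""
    coeffs = coefficients(dust_ahead)
    factors = influence_factors(obj, th)
    return sum(value * coeffs[name] for name, value in factors.items())


def fuse(objects: list, th: Thresholds, dust_ahead: bool) -> list:
    """Steps 13-15 and 19-21: keep objects above the confidence threshold."""
    return [o for o in objects if confidence(o, th, dust_ahead) > th.confidence]


if __name__ == "__main__":
    th = Thresholds(length=10.0, width=5.0, height=5.0, velocity=10.0,
                    max_track_length=100.0, confidence=2.0)
    truck = TrackedObject(5.0, 2.5, 2.5, 5.0, track_frames=4, match_frames=5,
                          source=Source.LIDAR_AND_RADAR)
    print(round(confidence(truck, th, dust_ahead=False), 2))  # 1.13
    print(round(confidence(truck, th, dust_ahead=True), 2))   # 2.92
    print(len(fuse([truck], th, False)), len(fuse([truck], th, True)))  # 0 1
```

With these illustrative numbers, the same Lidar-and-Radar object scores 1.13 with the default coefficients and 2.92 under dust. The dust coefficients shift weight away from Lidar-dependent cues (height, match history) toward the source, velocity and track-age factors. As a result, the object clears a threshold of 2.0 only when the dust warning is active.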

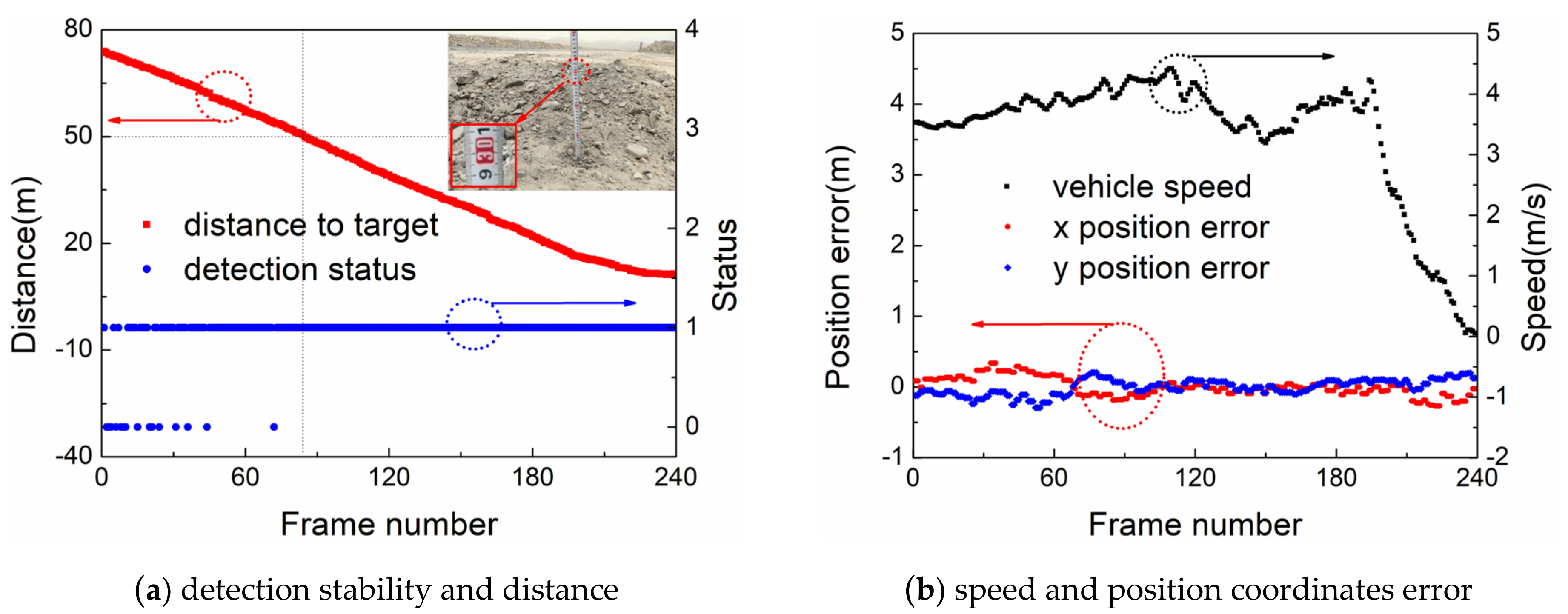

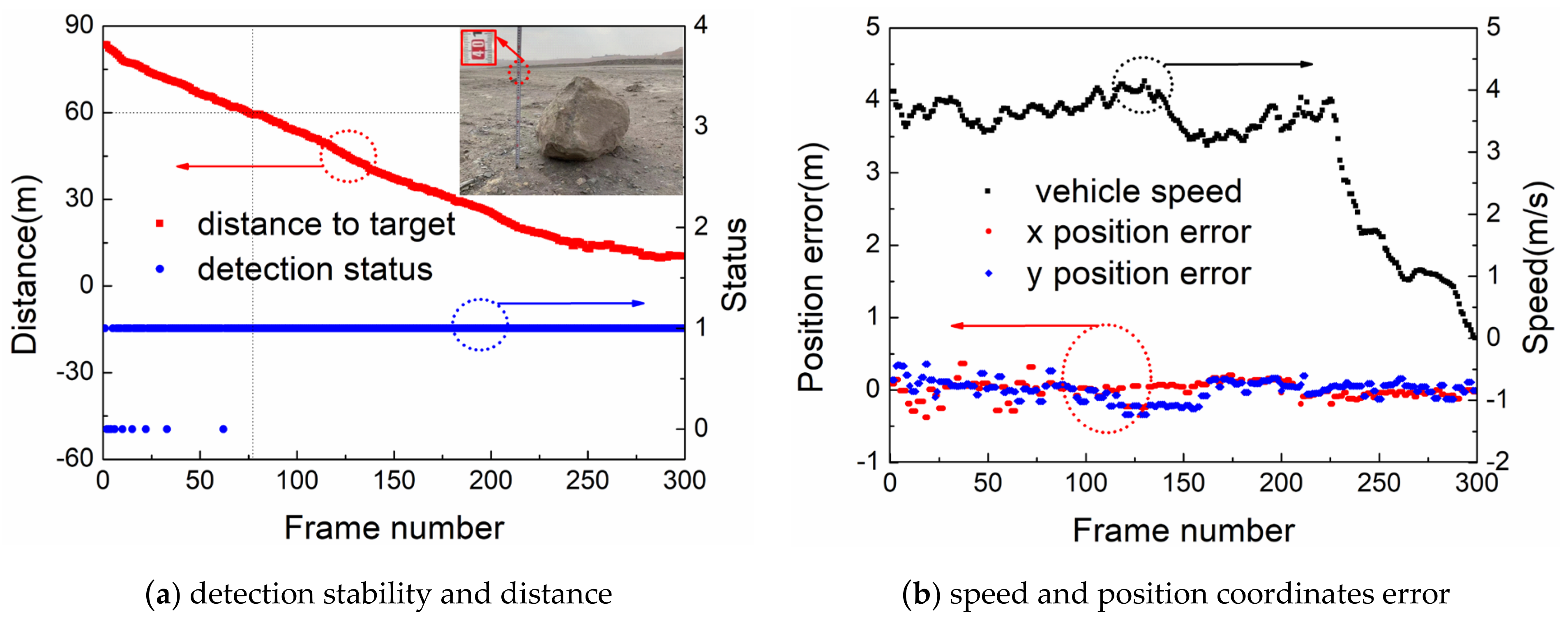

3. Experiments and Analysis

4. Discussion

4.1. Results

4.2. Time Analysis

5. Conclusion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest



© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, H.; Pan, W.; Hu, Y.; Li, C.; Yuan, X.; Long, T. A Detection and Tracking Method Based on Heterogeneous Multi-Sensor Fusion for Unmanned Mining Trucks. Sensors 2022, 22, 5989. https://doi.org/10.3390/s22165989


Chicago/Turabian Style: Liu, Haitao, Wenbo Pan, Yunqing Hu, Cheng Li, Xiwen Yuan, and Teng Long. 2022. "A Detection and Tracking Method Based on Heterogeneous Multi-Sensor Fusion for Unmanned Mining Trucks." Sensors 22, no. 16: 5989. https://doi.org/10.3390/s22165989
