Image Enhancement of Maritime Infrared Targets Based on Scene Discrimination

Abstract

1. Introduction

- Existing methods do not account for the particular circumstances of maritime rescue. The target is usually embedded in a wave background, and targets exhibit different characteristics under different wave clutter intensities, so they cannot all be processed by a single method.

- The information in the maritime target area is relatively weak, so the target details must be enhanced while noise interference is suppressed.

- The target size is uncertain. Larger targets have more complex contours, while smaller targets generally appear as dim points, which complicates target feature extraction.

- Maritime rescue operations require the target to be located and continuously tracked. Essential background features must therefore be preserved to avoid image distortion.

- 1.

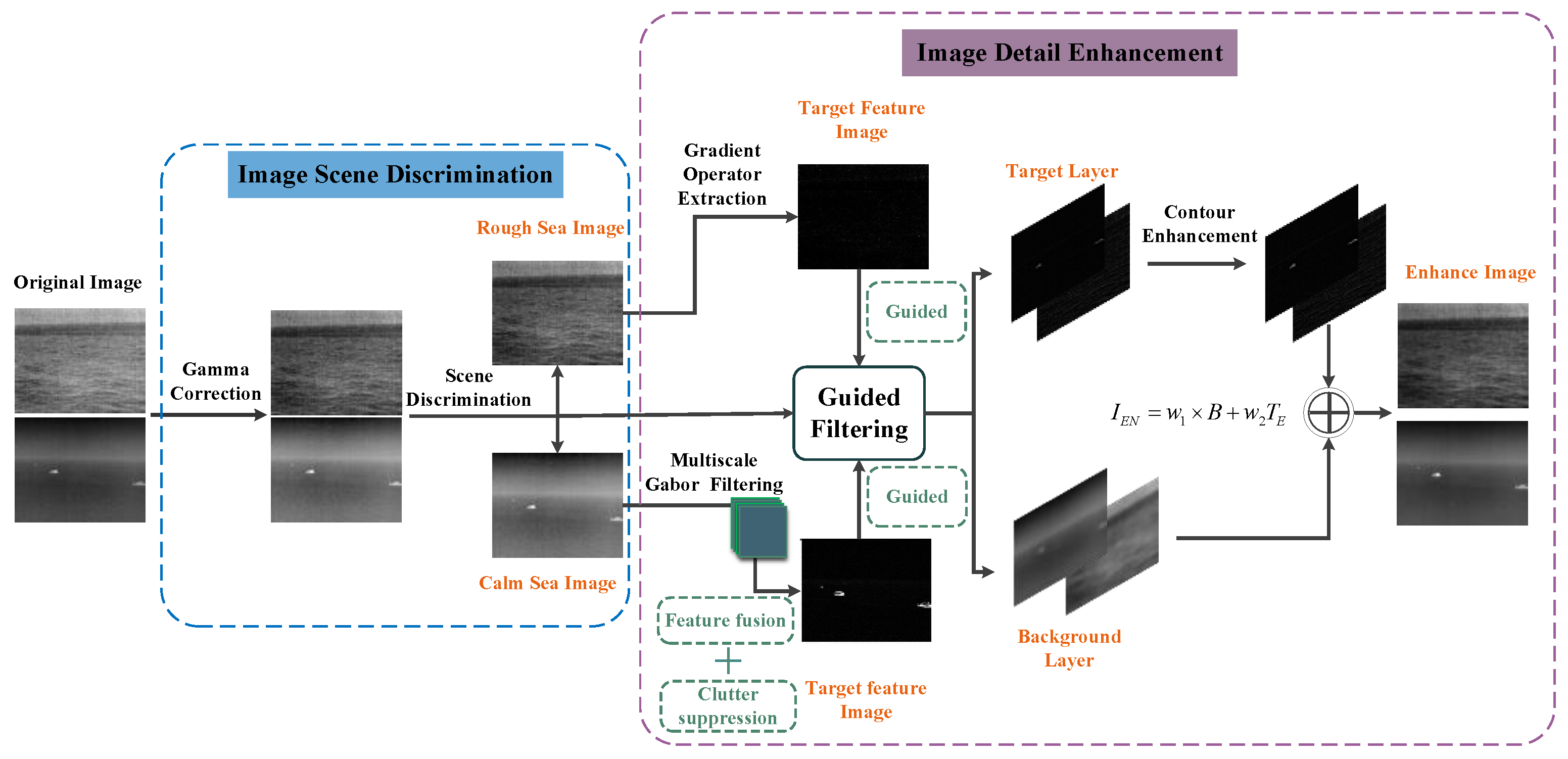

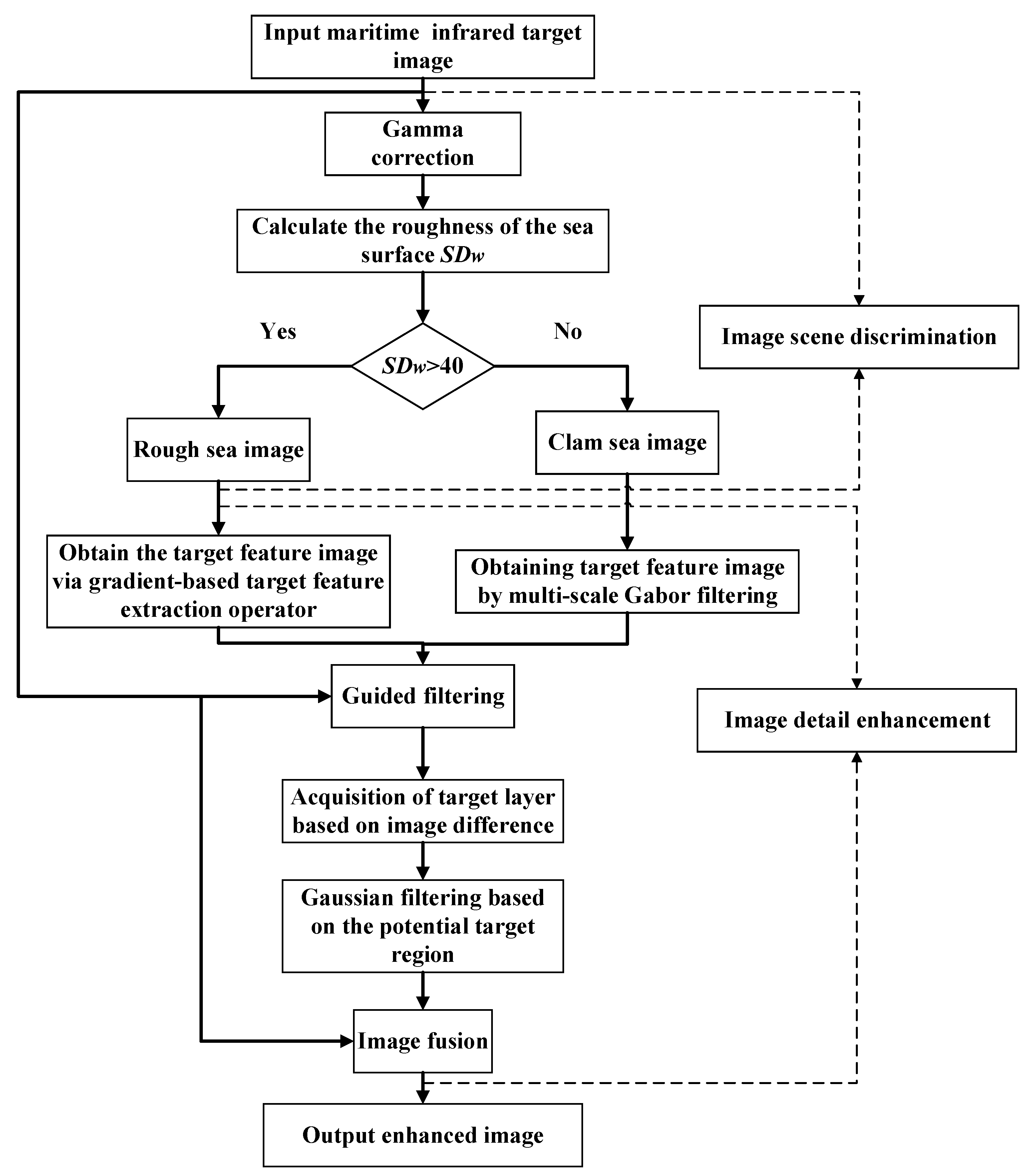

- For the first problem, an in-depth analysis of the relationship between the characteristics of the target and the sea clutter to improve the generalization of the algorithm to the sea environment, according to the texture roughness of the waves in the local sea area, the scene of the image can be adaptively discriminated, the image is divided into a calm sea image with smooth sea surface and rough sea image with large sea clutter.

- 2.

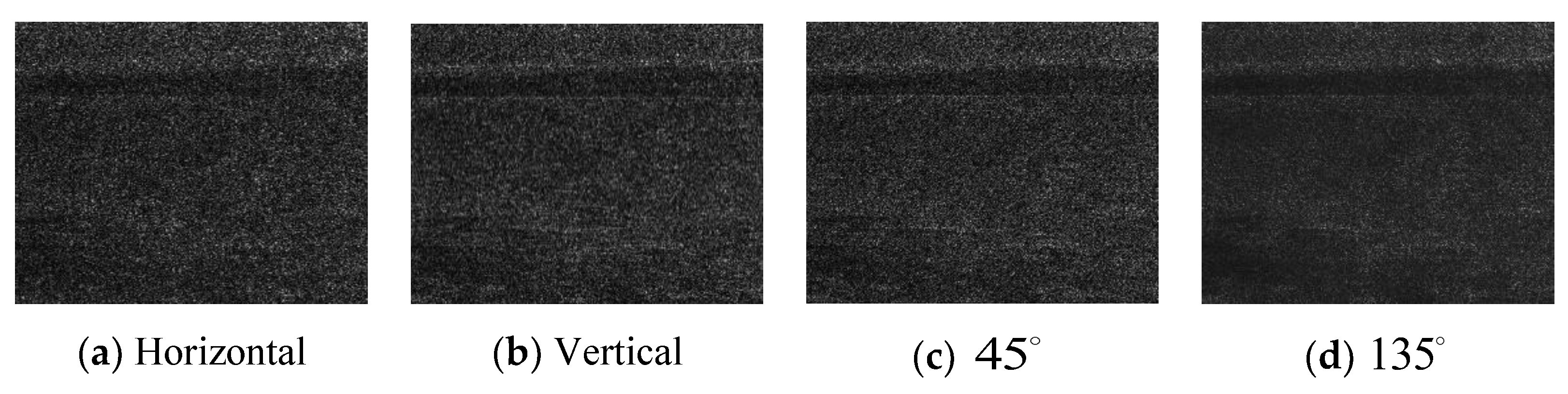

- For the second and third problems, according to the difference between the target and background texture features in the two types of scenes, the target features in the two types of images are extracted by the imaginary part of the Gabor filter at a specific scale and the gradient-based target feature operator proposed in this paper, respectively, set different clutter suppression and feature fusion strategies, obtain the target feature image of multi-scale fusion and only enhance the target features.

3. For the fourth problem, the target feature image is used as the guidance image for guided filtering, and a target layer with texture similar to the guidance image is extracted from the original image. This avoids the image distortion to which multi-scale feature extraction is prone.

4. Finally, based on the principle of thermal conduction in infrared imaging, the blurred background around the target contour is extracted by Gaussian filtering over the potential target area. This blurred background is removed from the target layer by a difference operation, and the result is fused with the background layer using appropriate weights, which preserves the natural environmental characteristics of the background.
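The calm-sea branch of the second contribution relies on the imaginary (odd, sine-carrier) part of a Gabor filter. The following Python sketch shows how such a response can be computed over several orientations; it is an illustration under stated assumptions, not the authors' implementation. The kernel parameters (`sigma`, `wavelength`, `gamma`, `n_orient`), the helper names, and the max-over-orientations fusion are hypothetical, because the specific scale and the paper's clutter suppression and multi-scale fusion rules are not given in this section.

```python
import numpy as np


def gabor_imag_kernel(sigma, theta, wavelength, gamma=0.5):
    """Imaginary (odd, sine-carrier) part of a 2-D Gabor kernel."""
    half = int(np.ceil(3 * sigma))              # kernel covers about +/- 3 sigma
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    # Rotate coordinates so the sine carrier oscillates along direction theta.
    x_r = x * np.cos(theta) + y * np.sin(theta)
    y_r = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(x_r ** 2 + (gamma * y_r) ** 2) / (2 * sigma ** 2))
    return envelope * np.sin(2 * np.pi * x_r / wavelength)


def filter2d(img, kernel):
    """Same-size 2-D correlation with edge padding (odd kernel sizes only)."""
    kh, kw = kernel.shape
    padded = np.pad(img, ((kh // 2, kh // 2), (kw // 2, kw // 2)), mode="edge")
    windows = np.lib.stride_tricks.sliding_window_view(padded, (kh, kw))
    return np.einsum("ijkl,kl->ij", windows, kernel)


def gabor_feature(img, sigma=2.0, wavelength=8.0, n_orient=8):
    """Hypothetical fusion: per-pixel max of |odd Gabor response| over orientations."""
    img = np.asarray(img, dtype=float)
    thetas = np.arange(n_orient) * np.pi / n_orient
    responses = [np.abs(filter2d(img, gabor_imag_kernel(sigma, t, wavelength)))
                 for t in thetas]
    return np.max(responses, axis=0)
```

Because the odd kernel sums to zero, flat regions (such as a smooth sea surface) give no response, while contours in any of the sampled directions do. Taking the absolute value also makes the correlation-versus-convolution sign convention irrelevant.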
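Contributions 3 and 4 can be read as one pipeline. The original image is guided-filtered with the target feature image as guidance, the Gaussian-blurred halo is removed from the resulting target layer by differencing, and the remainder is fused with a background layer. The sketch below uses the standard box-filter guided filter of He et al. The potential-target mask rule, the definition of the background layer (original minus the de-blurred target detail), and all parameter values (`r`, `eps`, `sigma`, `mask_thresh`, `w_target`) are assumptions rather than the authors' settings.

```python
import numpy as np


def box_mean(img, r):
    """Mean over a (2r+1) x (2r+1) window with edge padding; output has img's shape."""
    k = 2 * r + 1
    padded = np.pad(img, r, mode="edge")
    return np.lib.stride_tricks.sliding_window_view(padded, (k, k)).mean(axis=(-2, -1))


def guided_filter(guide, src, r, eps):
    """Guided filter (He et al.): output is locally a linear function of `guide`."""
    mean_i = box_mean(guide, r)
    mean_p = box_mean(src, r)
    var_i = box_mean(guide * guide, r) - mean_i ** 2
    cov_ip = box_mean(guide * src, r) - mean_i * mean_p
    a = cov_ip / (var_i + eps)                  # per-window linear coefficient
    b = mean_p - a * mean_i
    return box_mean(a, r) * guide + box_mean(b, r)


def gaussian_blur(img, sigma):
    """Gaussian smoothing with a separable, normalized kernel and edge padding."""
    r = int(np.ceil(3 * sigma))
    x = np.arange(-r, r + 1)
    g = np.exp(-x ** 2 / (2 * sigma ** 2))
    g /= g.sum()
    padded = np.pad(img, r, mode="edge")
    windows = np.lib.stride_tricks.sliding_window_view(padded, (2 * r + 1, 2 * r + 1))
    return np.einsum("ijkl,k,l->ij", windows, g, g)


def fuse(original, feature, r=4, eps=1e-3, sigma=2.0, mask_thresh=0.5, w_target=2.0):
    original = np.asarray(original, dtype=float)
    feature = np.asarray(feature, dtype=float)
    # 1. Target layer: the part of the original whose texture follows the feature image.
    target = guided_filter(feature, original, r, eps)
    # 2. Potential target area (hypothetical rule: normalized feature above a threshold).
    f = feature - feature.min()
    mask = f / f.max() > mask_thresh if f.max() > 0 else np.zeros(f.shape, dtype=bool)
    # 3. Difference operation: remove the Gaussian-blurred halo inside the target area.
    detail = np.where(mask, target - gaussian_blur(target, sigma), 0.0)
    # 4. Assumed background layer: everything except the de-blurred target detail.
    background = original - detail
    return background + w_target * detail
```

With `w_target=1.0` this sketch returns the original image unchanged, which is a convenient sanity check. Values above 1 boost only the de-blurred detail inside the masked target area and leave the background untouched.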

2. Local Gradient Saliency and Multi-Directional Texture Features

2.1. Local Gradient Saliency

2.2. Orientation Texture Feature of Target

- 1.

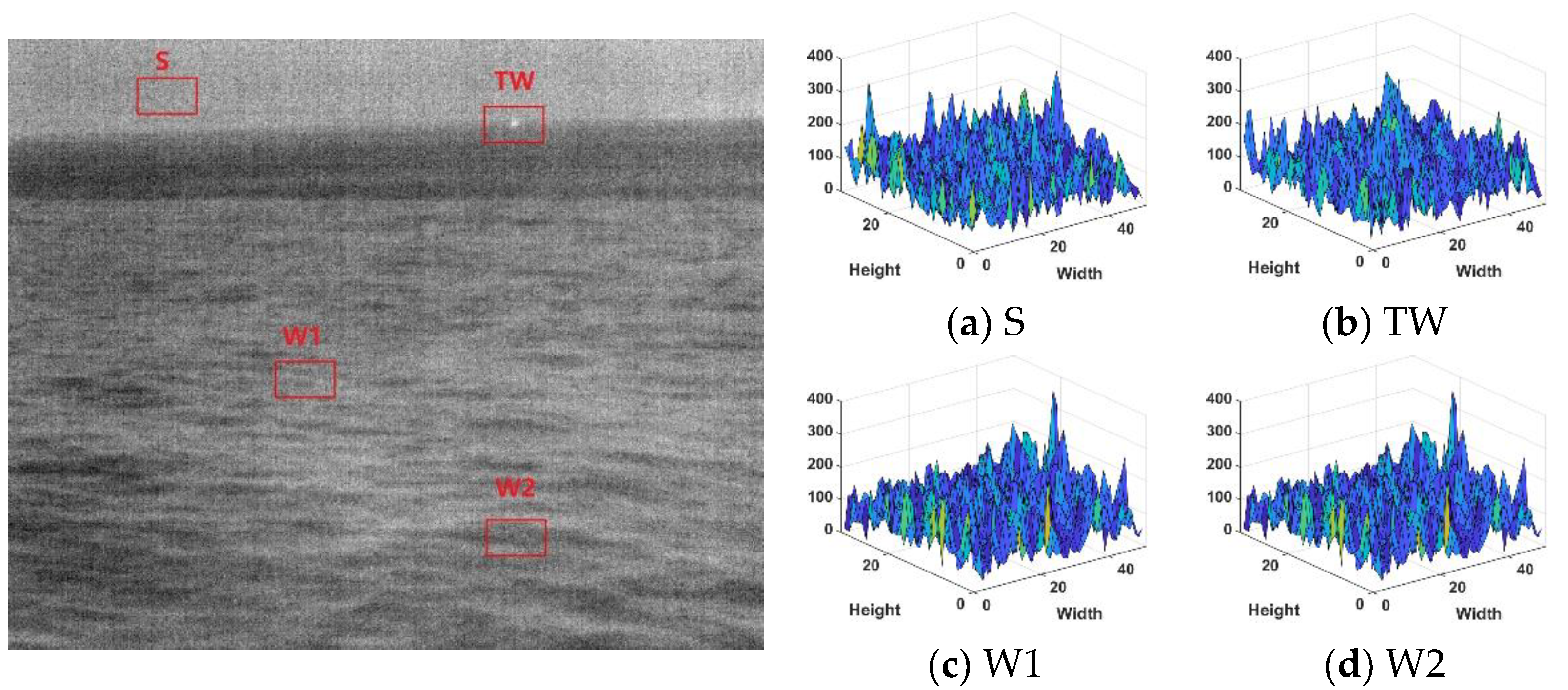

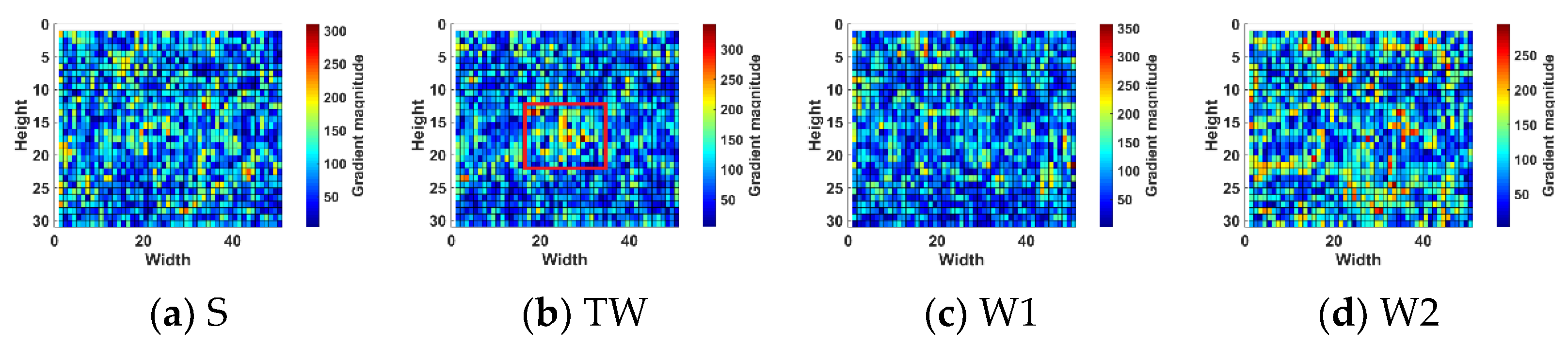

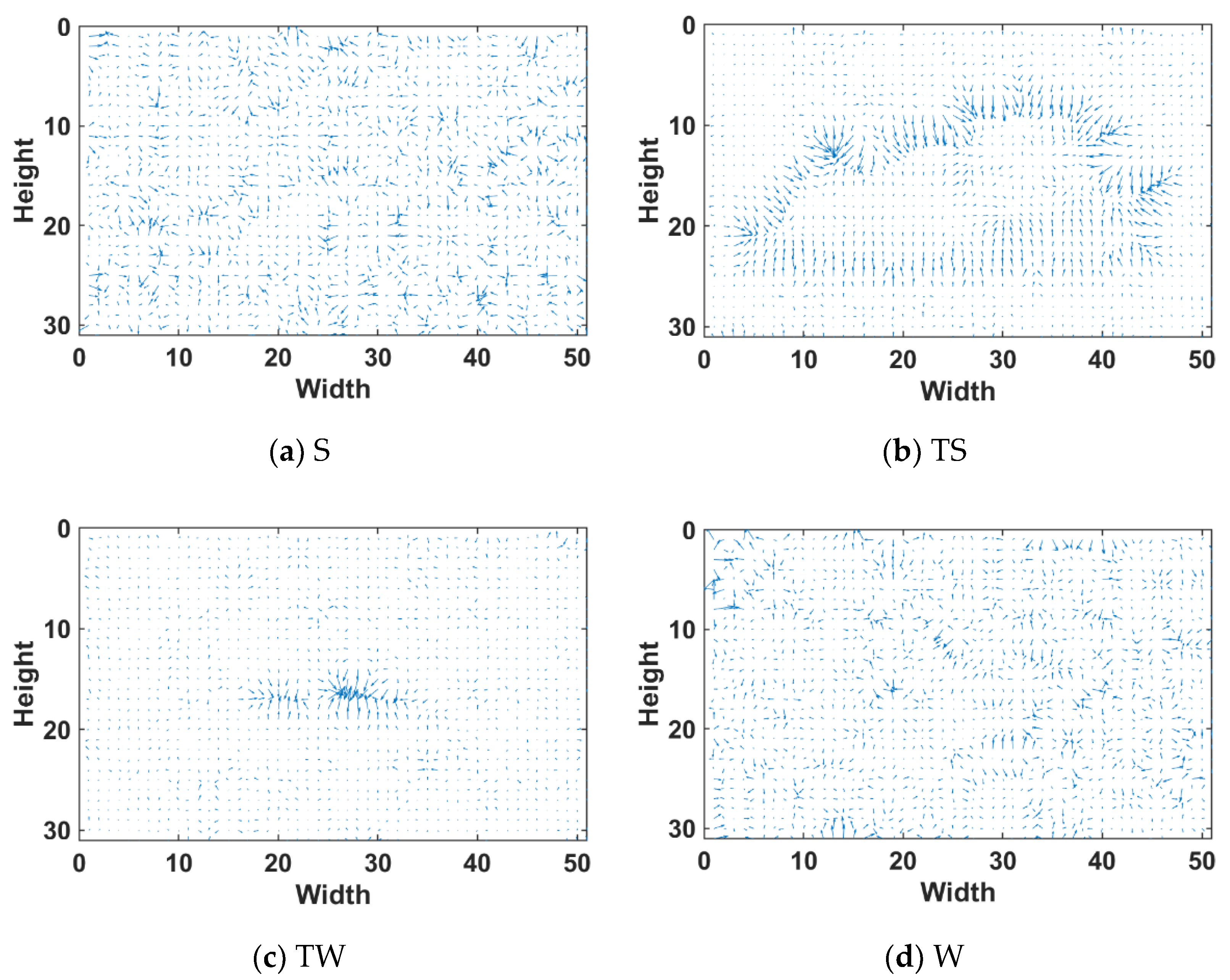

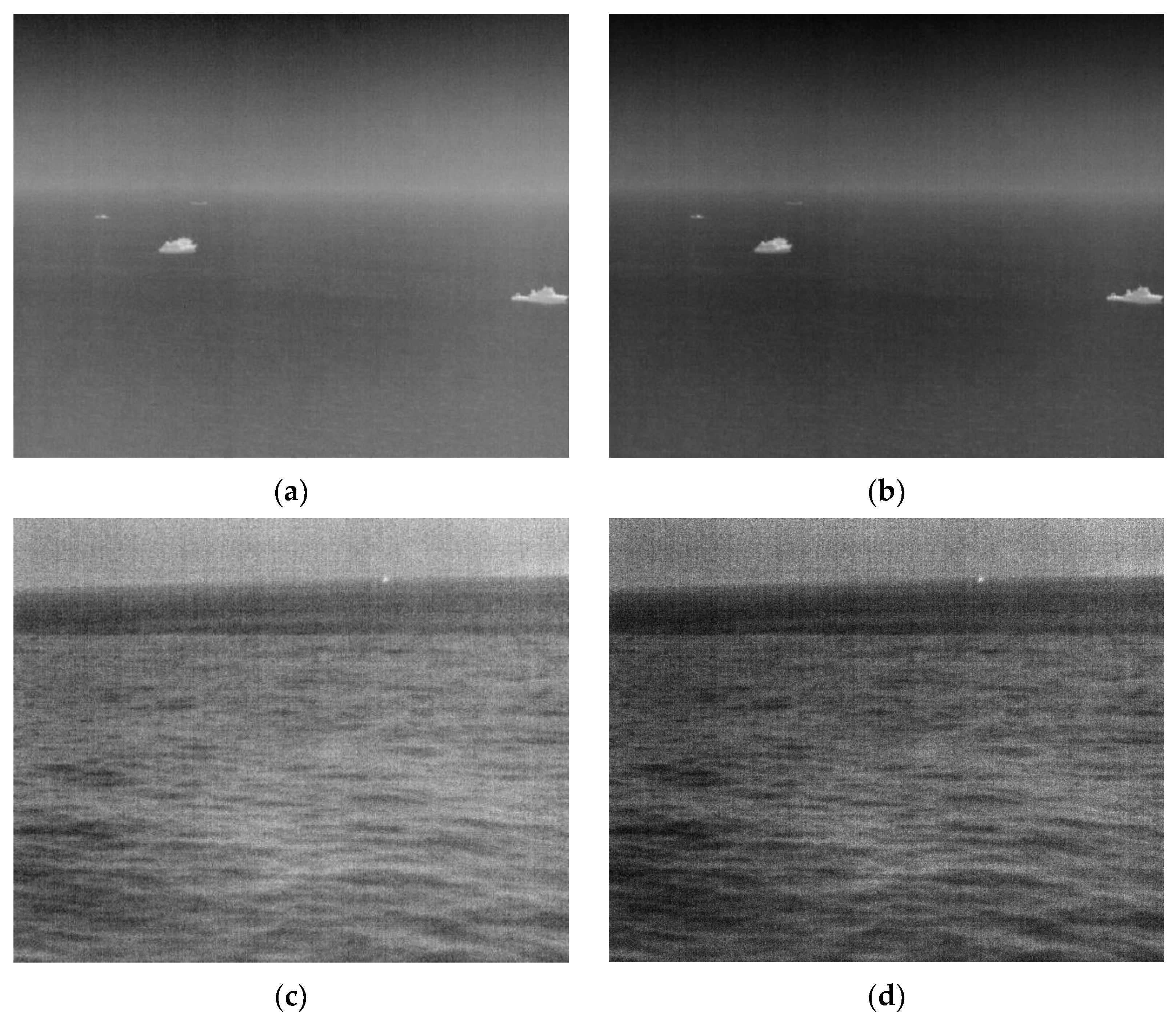

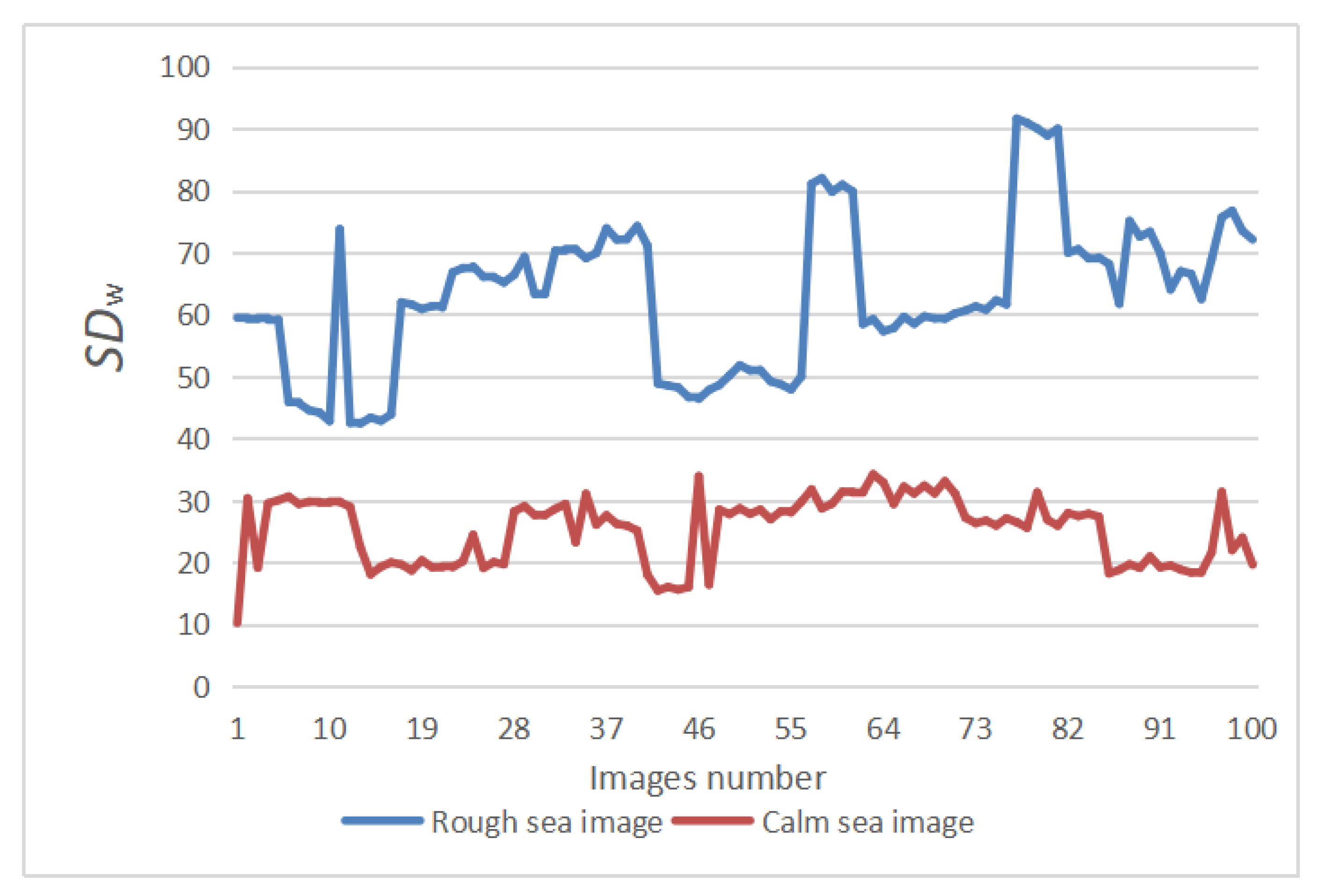

- Compared with the calm sea image, the rough sea image has a more significant fluctuation in the local gradient magnitude of the sea surface area, the sea surface is rougher, and the texture information is richer.

2. Targets in calm sea images are less disturbed by wave clutter, but their contours are more complex, which is the main difficulty in enhancing them. Because the target contour extends in multiple directions, it can be fully extracted through multi-scale feature extraction.

3. Targets in rough sea images are mostly dim point targets disturbed by waves. The difficulty of enhancement lies in suppressing background clutter during feature extraction. The directional gradient of the target is highly salient, which helps suppress clutter.
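Observation 1 suggests a simple roughness statistic for the scene discrimination step: the fluctuation of the local gradient magnitude over the sea surface. The Python sketch below measures it as the mean, over non-overlapping blocks, of the standard deviation of the gradient magnitude. The block size, the statistic itself, the helper names, and the `threshold` value are hypothetical. The paper's actual discrimination criterion is not reproduced in this section, and a usable threshold would depend on the sensor's intensity range.

```python
import numpy as np


def gradient_magnitude(img):
    """Per-pixel gradient magnitude from central differences."""
    gy, gx = np.gradient(np.asarray(img, dtype=float))
    return np.hypot(gx, gy)


def roughness(img, block=16):
    """Hypothetical roughness score: mean over non-overlapping blocks of the
    standard deviation of the local gradient magnitude."""
    g = gradient_magnitude(img)
    h = (g.shape[0] // block) * block            # crop to whole blocks
    w = (g.shape[1] // block) * block
    blocks = g[:h, :w].reshape(h // block, block, w // block, block)
    return float(blocks.std(axis=(1, 3)).mean())


def classify_scene(img, threshold=2.0, block=16):
    """Label an image 'rough' or 'calm'; `threshold` is an assumed, sensor-dependent value."""
    return "rough" if roughness(img, block) > threshold else "calm"
```

A smooth intensity ramp has a constant gradient magnitude and is labelled calm, whereas strong pixel-to-pixel fluctuation, as on a wave-covered surface, drives the score up.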
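Observation 3 states that the directional gradient of a dim point target is salient enough to help suppress clutter. One generic way to illustrate this is a minimum directional contrast. This is not the gradient-based operator proposed in this paper, whose definition is not included here. A point target is brighter than its surroundings in every direction, whereas an elongated wave crest is not brighter along its own orientation, so taking the minimum over eight directions suppresses the crest. The step `d` and the helper name are hypothetical.

```python
import numpy as np

# Eight unit directions (dy, dx): vertical, horizontal and diagonal.
DIRECTIONS = [(-1, 0), (1, 0), (0, -1), (0, 1), (-1, -1), (-1, 1), (1, -1), (1, 1)]


def directional_min_contrast(img, d=3):
    """Minimum over eight directions of (center - neighbour at step d), clipped at 0.

    A point target is brighter than its surroundings in every direction, so the
    minimum stays positive; an elongated wave crest has zero contrast along its
    own orientation, so the minimum drops to zero there.
    """
    img = np.asarray(img, dtype=float)
    h, w = img.shape
    padded = np.pad(img, d, mode="edge")
    diffs = []
    for dy, dx in DIRECTIONS:
        # padded[d + i + dy*d, d + j + dx*d] is the neighbour of pixel (i, j).
        oy, ox = d + dy * d, d + dx * d
        diffs.append(img - padded[oy:oy + h, ox:ox + w])
    return np.clip(np.min(diffs, axis=0), 0.0, None)
```

For a single bright pixel the response equals its contrast, while a bright straight line yields zero everywhere.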

3. Algorithm Principle

3.1. Scene Discrimination

3.2. Image Detail Enhancement

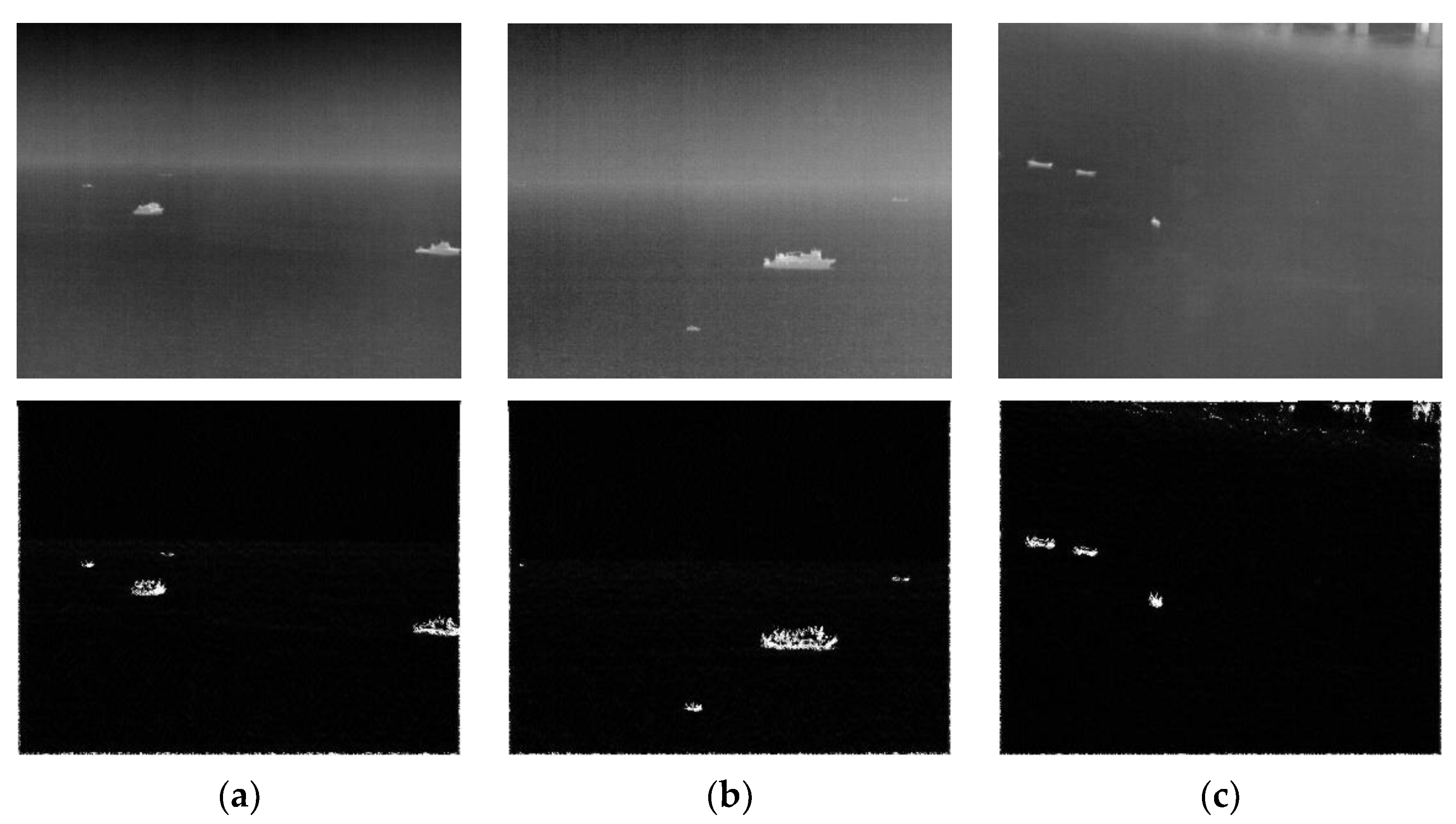

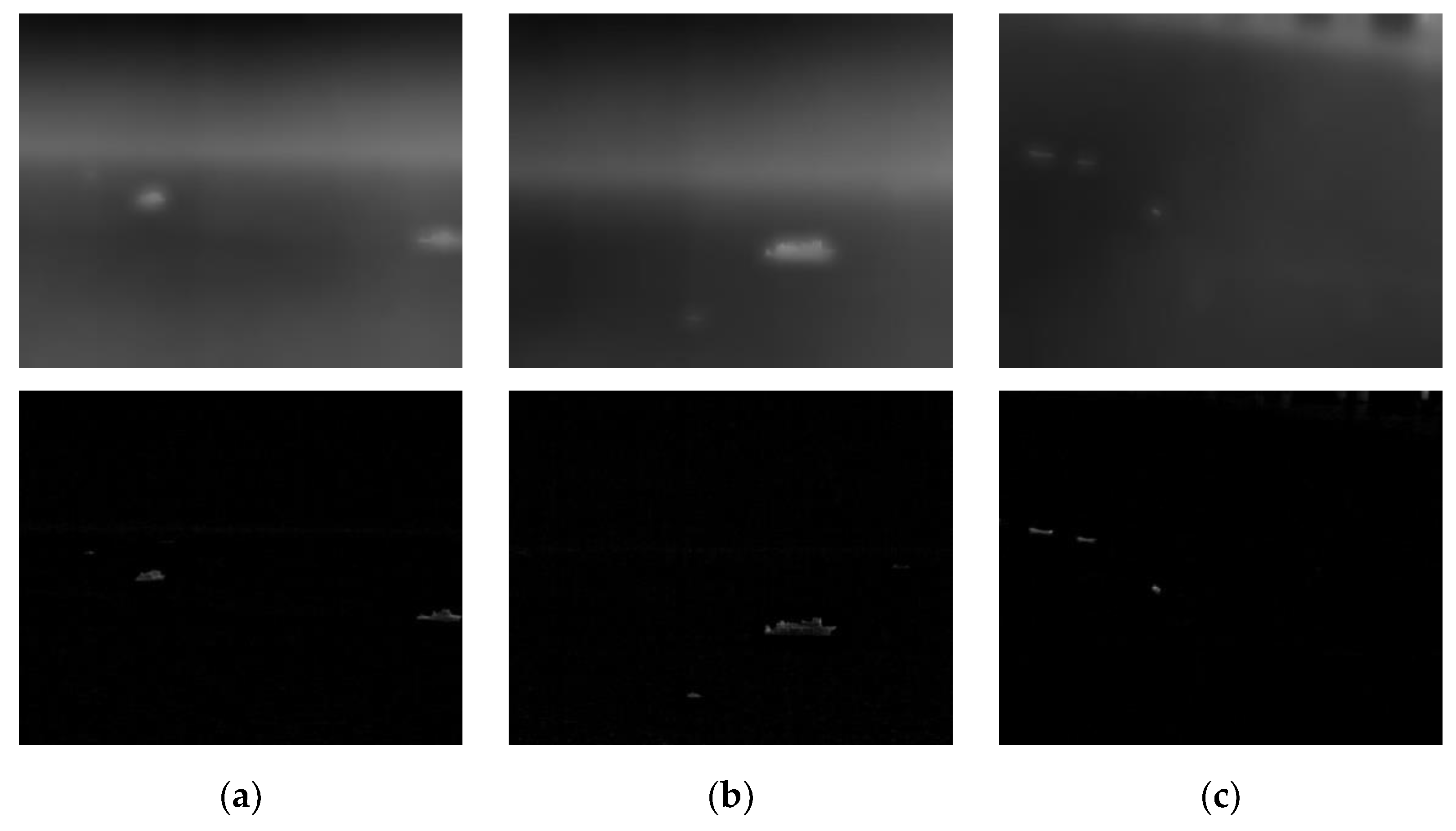

3.2.1. Calm Sea Images

3.2.2. Rough Sea Images

4. Experimental Analysis and Results

4.1. Experiment Settings

4.1.1. Experimental Data

4.1.2. Evaluation Metrics

4.1.3. Contrast Method

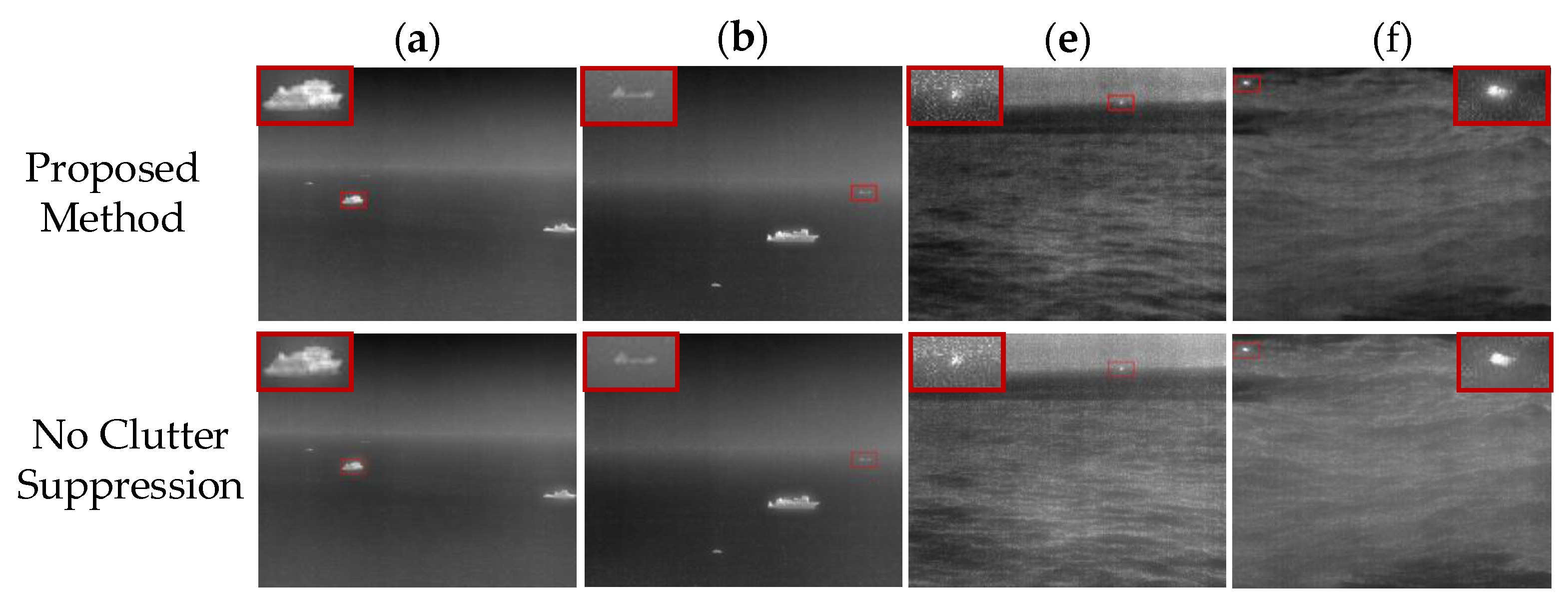

4.2. Ablation Study

4.3. Comparison of Experimental Results

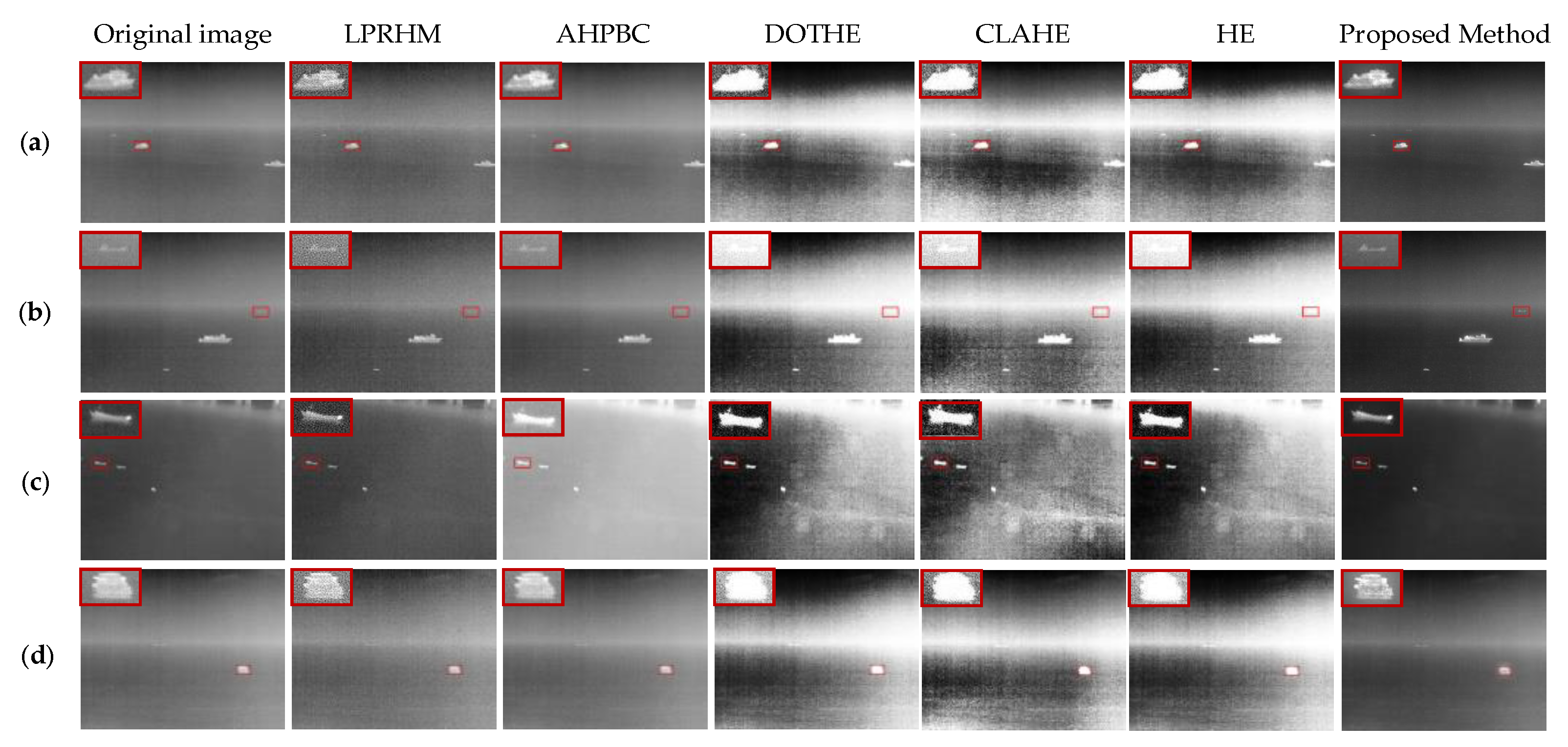

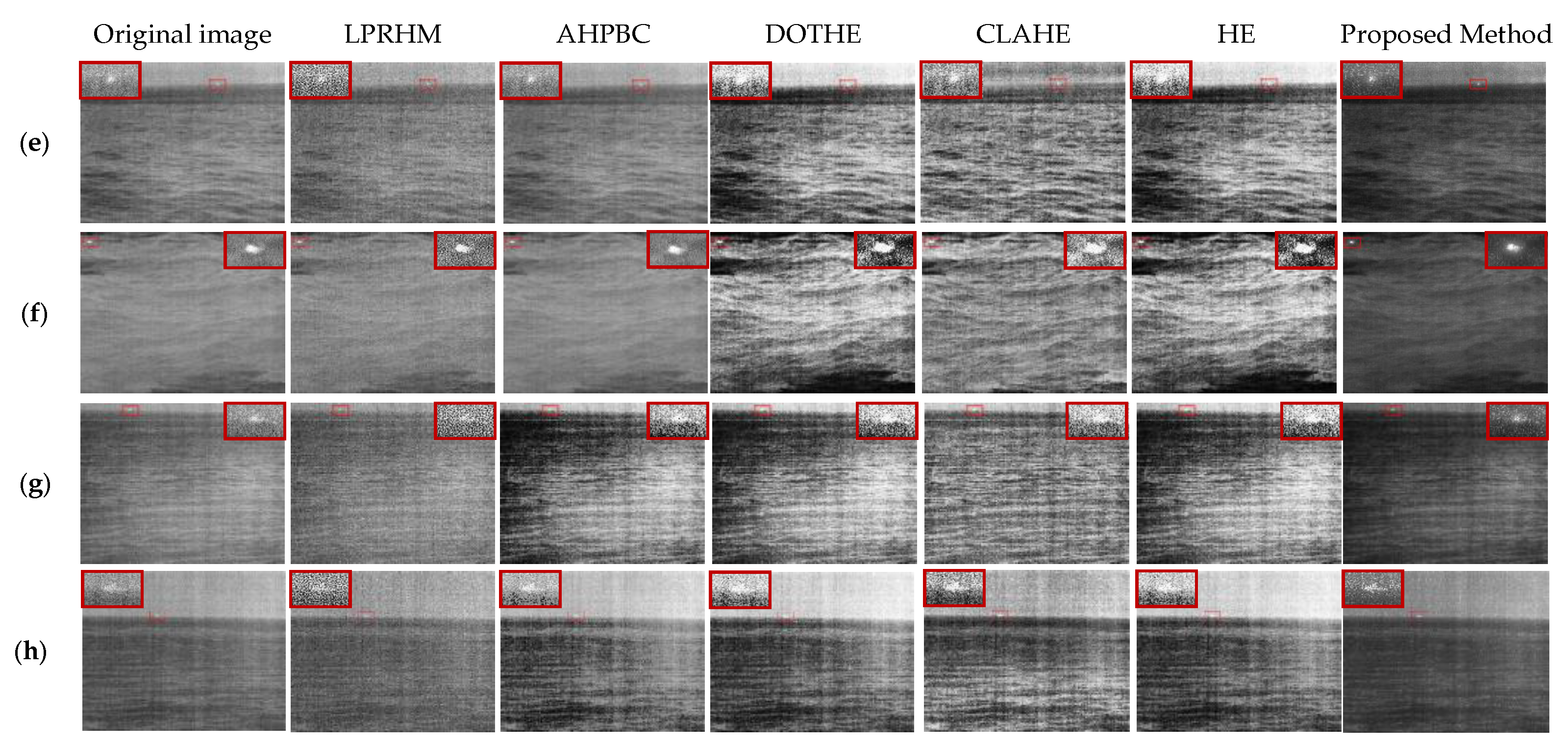

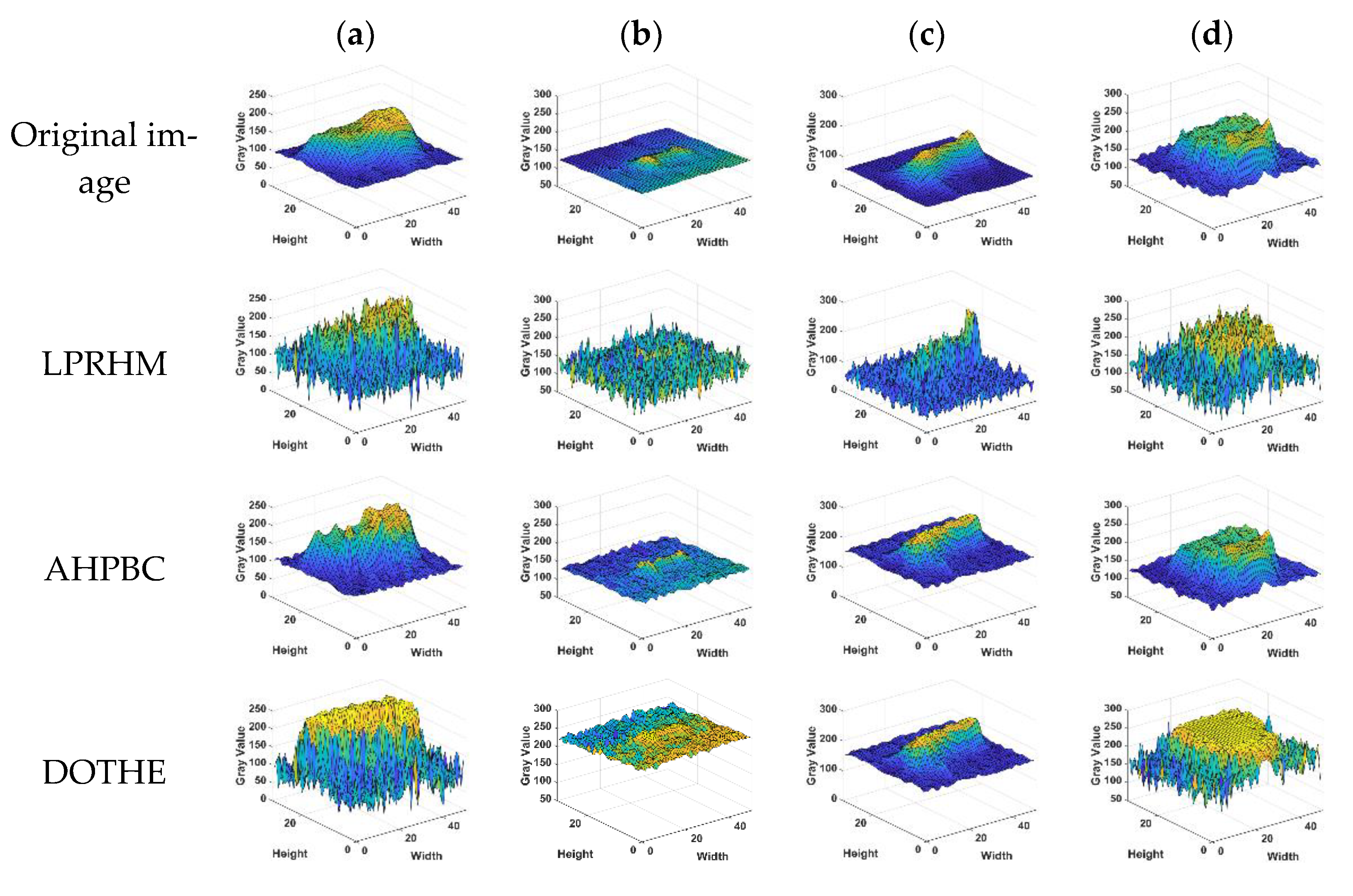

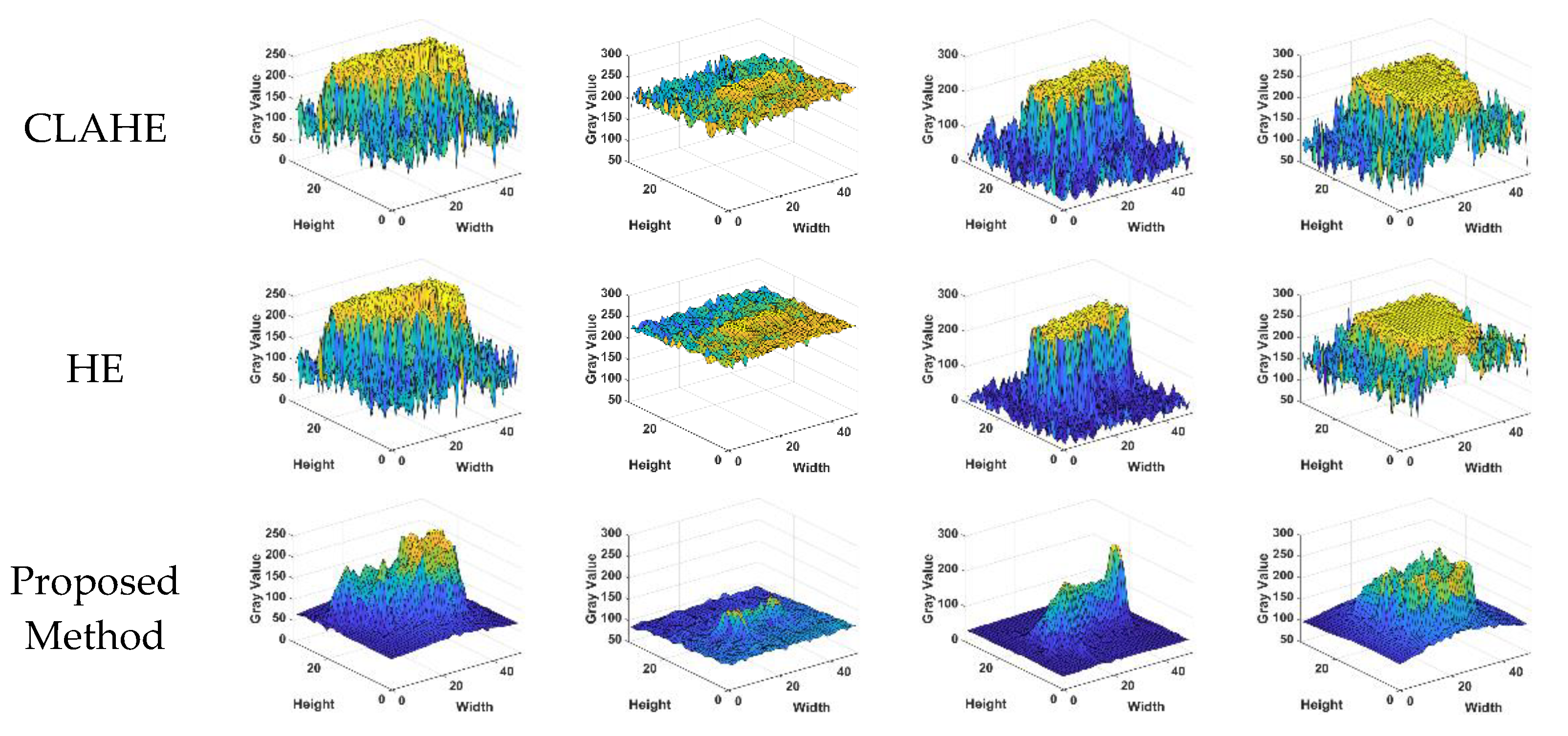

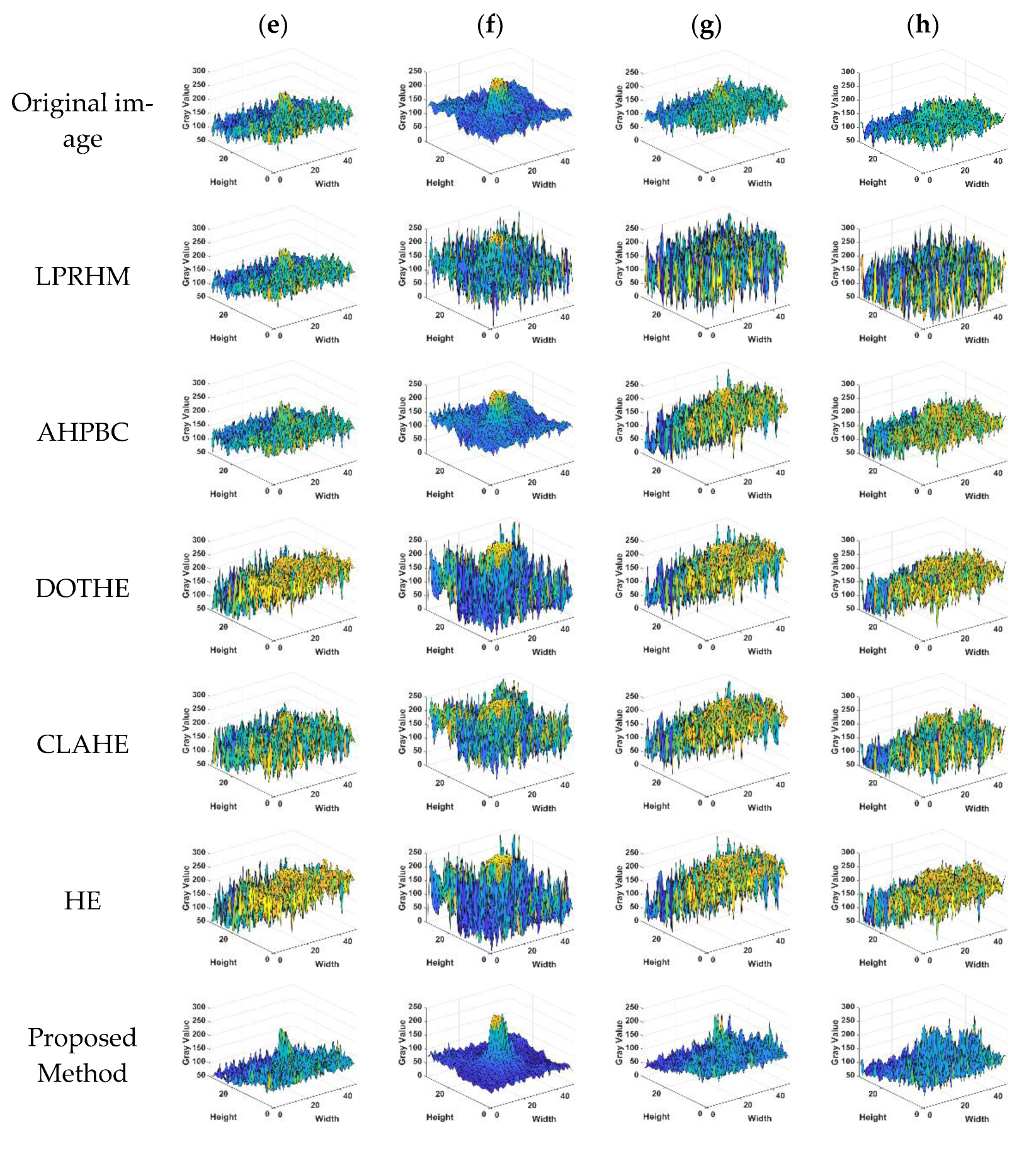

4.3.1. Qualitative Comparison

4.3.2. Quantitative Comparison

4.3.3. Running Time

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Image | Method | BSF | |
|---|---|---|---|
| (a) | Proposed Method | 0.1951 | 1.0870 |
| | No clutter suppression | 0.2046 | 0.9978 |
| (b) | Proposed Method | 0.1558 | 1.0859 |
| | No clutter suppression | 0.1663 | 0.9915 |
| (e) | Proposed Method | 0.2352 | 1.0296 |
| | No clutter suppression | 0.3928 | 0.6785 |
| (f) | Proposed Method | 0.2150 | 1.1421 |
| | No clutter suppression | 0.3857 | 1.1203 |

| Image | Method | BSF | | LSBR |
|---|---|---|---|---|
| (a) | Original image | 0.4003 | - | 45.0666 |
| | LPRHM | 0.4135 | 0.7405 | 50.0929 |
| | AHPBC | 0.4264 | 0.9964 | 47.8712 |
| | DOTHE | 0.4039 | 0.3304 | 46.1790 |
| | CLAHE | 0.4030 | 0.3341 | 46.9209 |
| | HE | 0.3944 | 0.3257 | 46.2043 |
| | Proposed Method | 0.1951 | 1.0870 | 48.1291 |
| (b) | Original image | 0.3355 | - | 52.0933 |
| | LPRHM | 0.3529 | 0.7152 | 56.2390 |
| | AHPBC | 0.3793 | 0.9990 | 53.7169 |
| | DOTHE | 0.3985 | 0.3406 | 51.9819 |
| | CLAHE | 0.4281 | 0.3693 | 52.4233 |
| | HE | 0.3907 | 0.3350 | 51.7469 |
| | Proposed Method | 0.1558 | 1.0859 | 55.8531 |
| (c) | Original image | 0.2564 | - | 43.4092 |
| | LPRHM | 0.2659 | 0.7141 | 48.7776 |
| | AHPBC | 0.8101 | 0.9853 | 41.9225 |
| | DOTHE | 0.4111 | 0.2412 | 40.1073 |
| | CLAHE | 0.4792 | 0.2812 | 41.3876 |
| | HE | 0.3985 | 0.2377 | 39.8709 |
| | Proposed Method | 0.0991 | 1.0750 | 44.4311 |
| (d) | Original image | 0.5182 | - | 41.6032 |
| | LPRHM | 0.5110 | 0.7649 | 47.3336 |
| | AHPBC | 0.5210 | 0.9959 | 44.7392 |
| | DOTHE | 0.3957 | 0.4030 | 44.0986 |
| | CLAHE | 0.4201 | 0.4330 | 43.1713 |
| | HE | 0.3882 | 0.3966 | 45.0032 |
| | Proposed Method | 0.2941 | 1.0104 | 44.0418 |

| Image | Method | BSF | | LSBR |
|---|---|---|---|---|
| (e) | Original image | 0.5610 | - | 46.9881 |
| | LPRHM | 0.4413 | 0.4940 | 42.8645 |
| | AHPBC | 0.5617 | 0.9647 | 50.5993 |
| | DOTHE | 0.4015 | 0.4422 | 42.9489 |
| | CLAHE | 0.4596 | 0.5064 | 46.2738 |
| | HE | 0.3923 | 0.4357 | 42.9340 |
| | Proposed Method | 0.2352 | 1.0296 | 49.9856 |
| (f) | Original image | 0.6199 | - | 46.6623 |
| | LPRHM | 0.5551 | 0.4826 | 46.0825 |
| | AHPBC | 0.6291 | 1.0015 | 49.0523 |
| | DOTHE | 0.4009 | 0.3009 | 42.6890 |
| | CLAHE | 0.5380 | 0.4382 | 44.5729 |
| | HE | 0.3935 | 0.2973 | 42.5791 |
| | Proposed Method | 0.2150 | 1.1421 | 47.6391 |
| (g) | Original image | 0.5611 | - | 47.9276 |
| | LPRHM | 0.4363 | 0.4818 | 42.6438 |
| | AHPBC | 0.4498 | 0.4557 | 42.3627 |
| | DOTHE | 0.4009 | 0.4379 | 42.4675 |
| | CLAHE | 0.4477 | 0.4921 | 43.7997 |
| | HE | 0.3927 | 0.4313 | 42.2609 |
| | Proposed Method | 0.2363 | 1.0306 | 50.9625 |
| (h) | Original image | 0.4939 | - | 45.2540 |
| | LPRHM | 0.4075 | 0.5717 | 42.8781 |
| | AHPBC | 0.5191 | 0.6064 | 46.5587 |
| | DOTHE | 0.4023 | 0.5326 | 44.4906 |
| | CLAHE | 0.4385 | 0.5761 | 44.3757 |
| | HE | 0.3950 | 0.5241 | 44.3416 |
| | Proposed Method | 0.3534 | 1.0701 | 47.7911 |

| | LPRHM | AHPBC | DOTHE | CLAHE | HE | Proposed Method |
|---|---|---|---|---|---|---|
| Average running time (s) | 0.0268 | 27.7543 | 0.2352 | 0.1960 | 0.0066 | 1.3298 |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Jiang, Y.; Dong, L.; Liang, J. Image Enhancement of Maritime Infrared Targets Based on Scene Discrimination. Sensors 2022, 22, 5873. https://doi.org/10.3390/s22155873
