UAV Localization Algorithm Based on Factor Graph Optimization in Complex Scenes

Abstract

1. Introduction

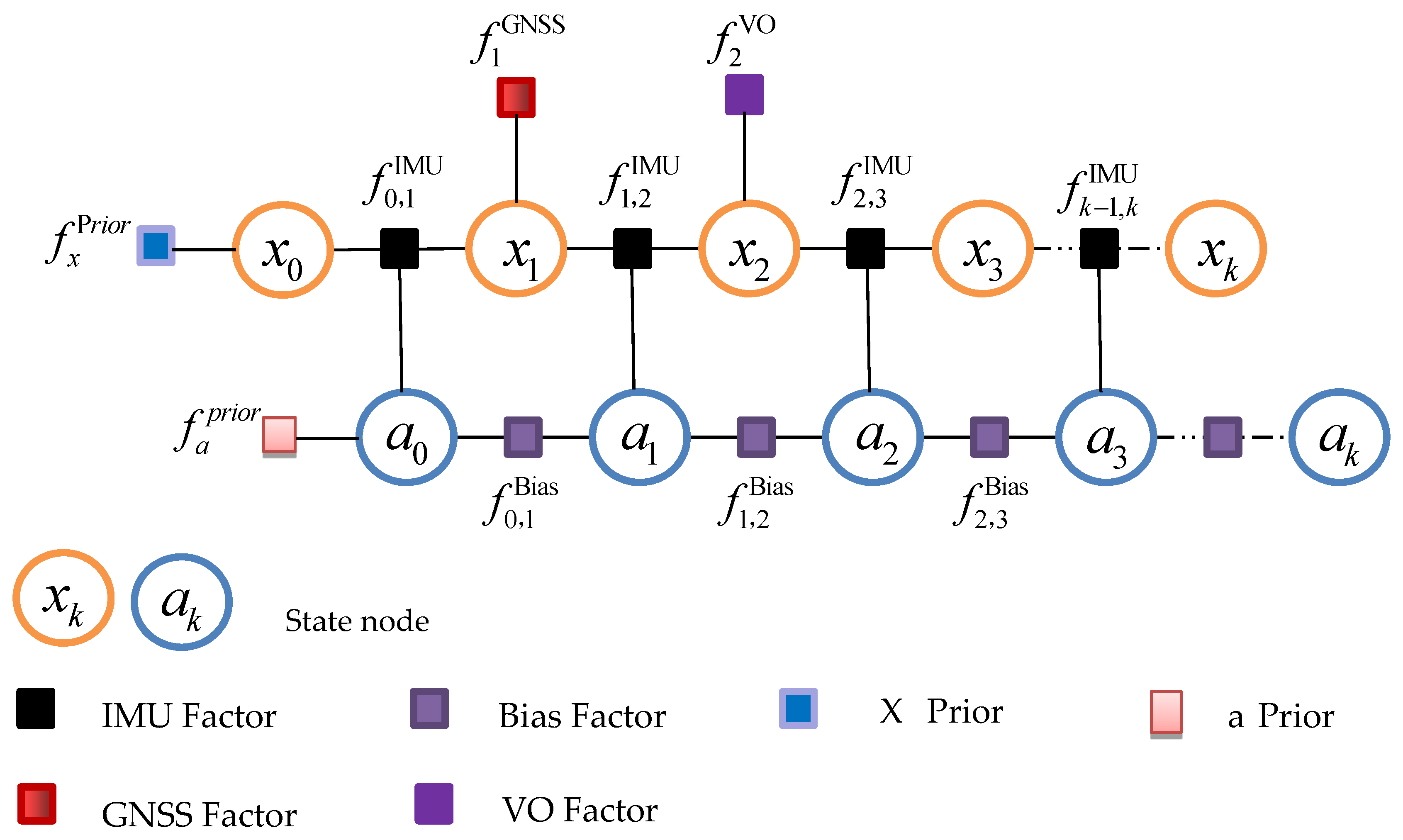

2. Factor Graph Model

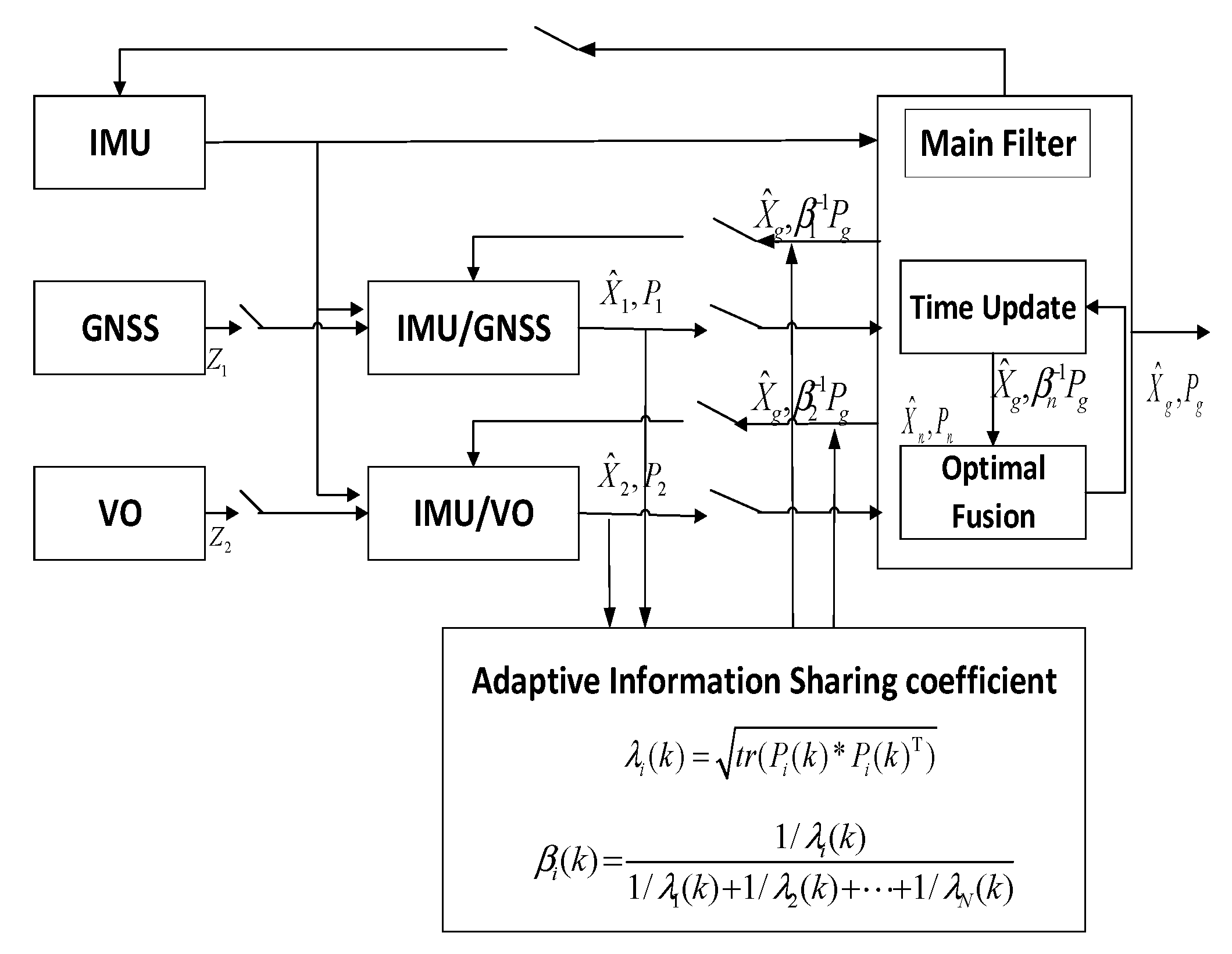

2.1. Factor Graph Algorithm

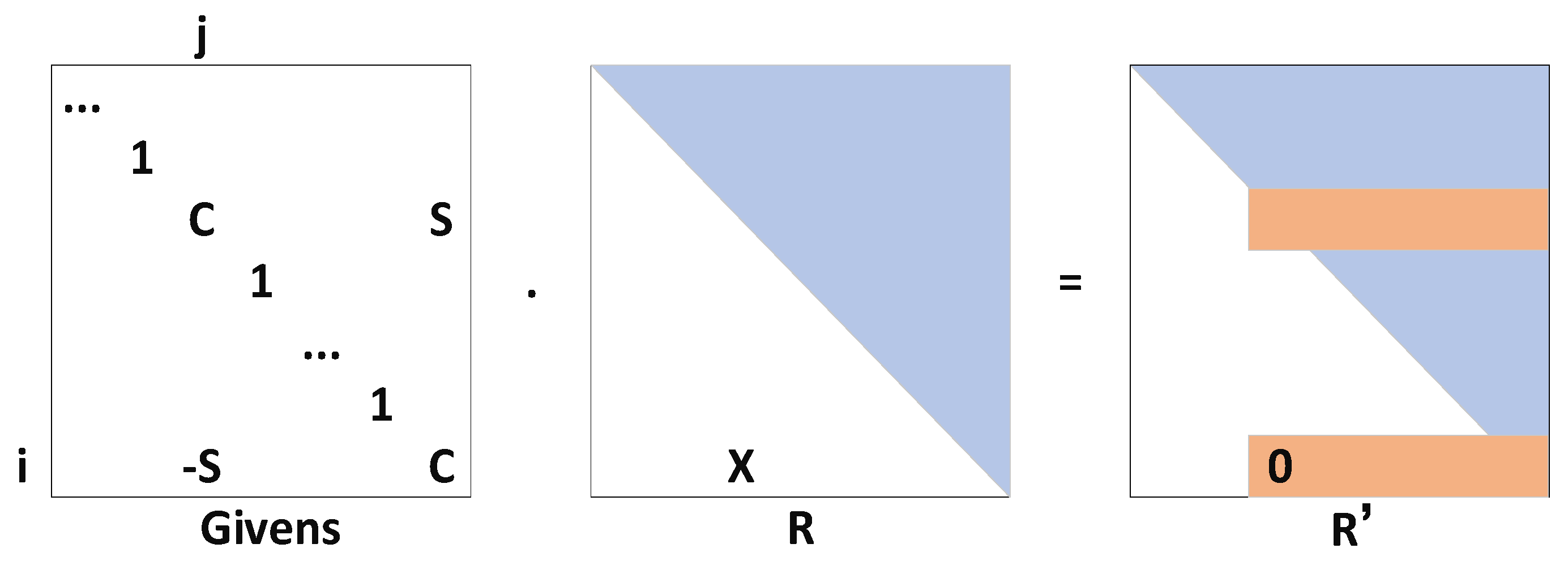

2.2. Incremental Smoothing and Mapping (iSAM)

3. Factor Graph Multi-Source Fusion Model Framework

3.1. IMU Pre-Integration Factor

3.2. IMU Bias Factor

3.3. GNSS Factor

3.4. VO Factor

4. Federated Kalman Filter and Adaptive Federated Kalman Filter (AFKF)

5. Simulation Experiment Verification

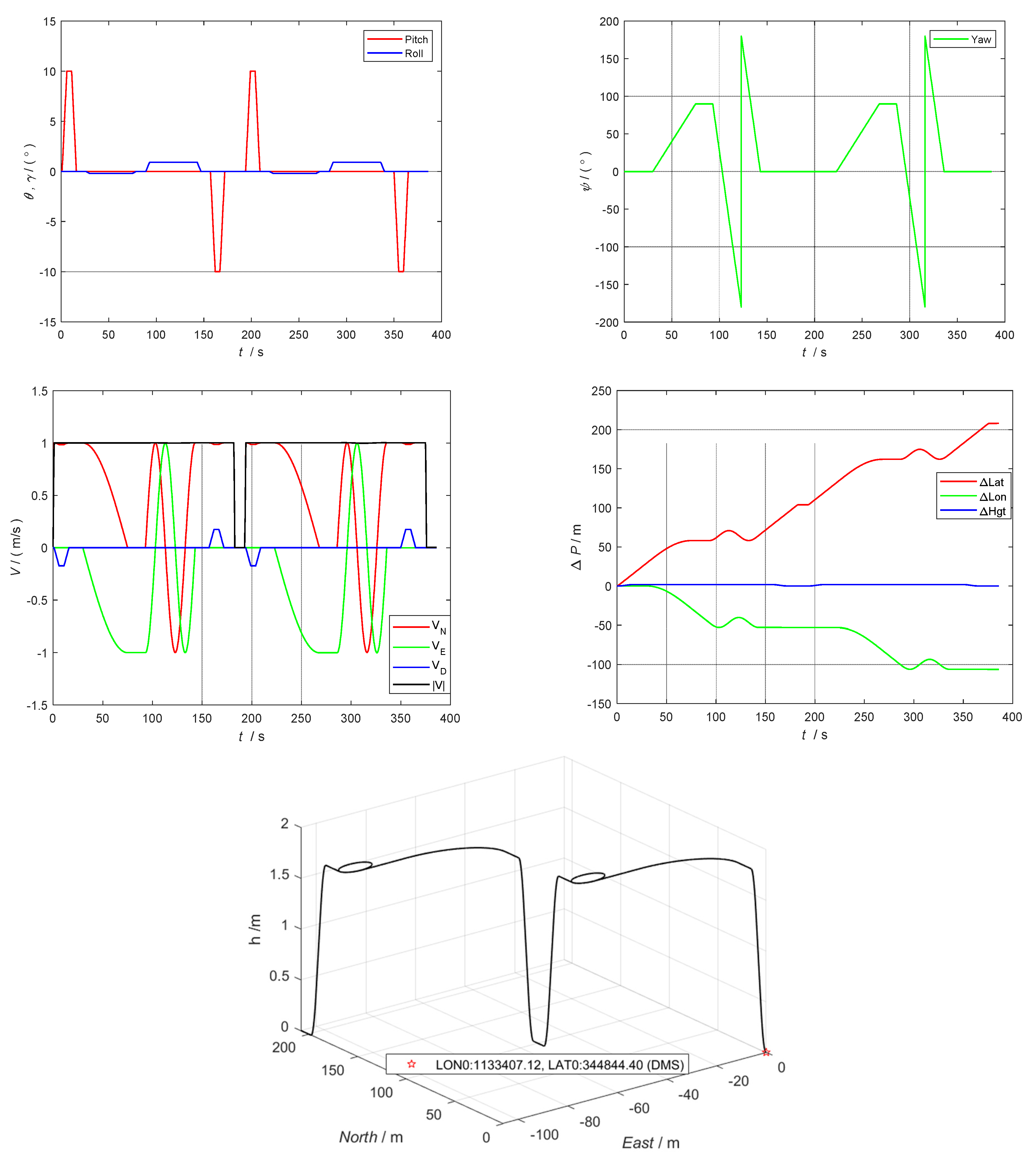

5.1. Trajectory Simulation Settings

5.2. Simulation Parameter Settings

5.3. Simulation Scene Settings

5.4. Experimental Results and Discussion

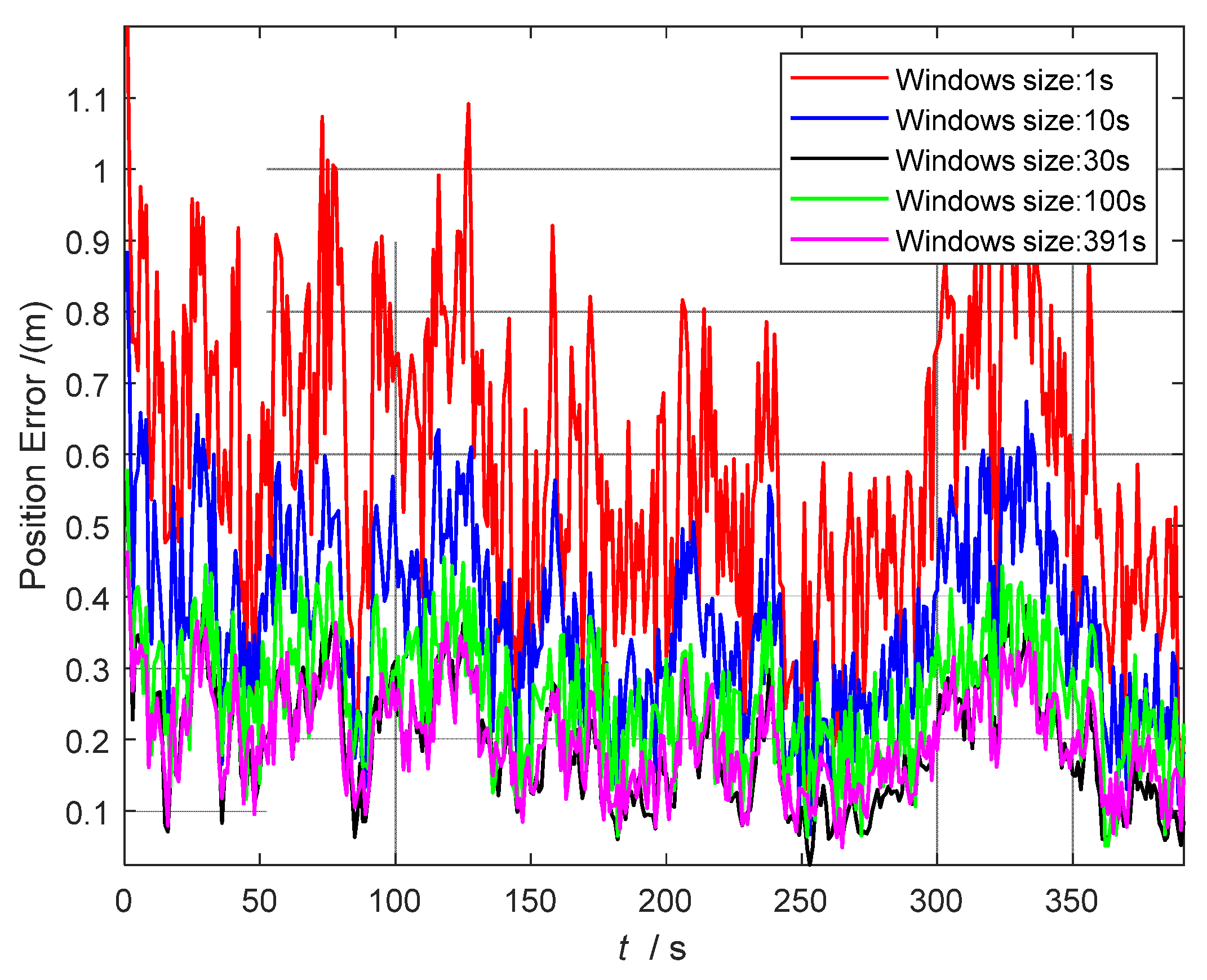

5.4.1. Comparative Analysis of FGO Sliding Window Size

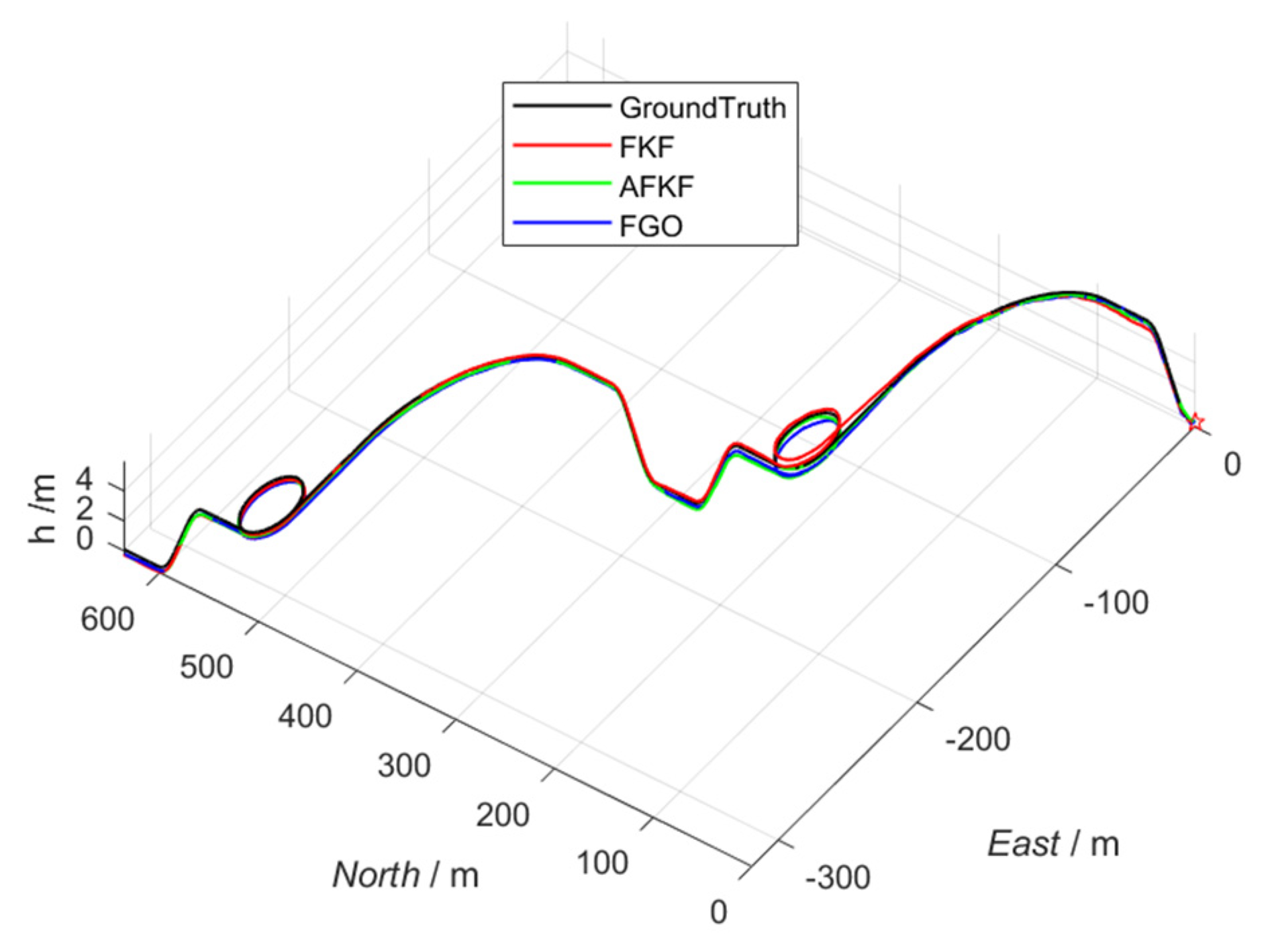

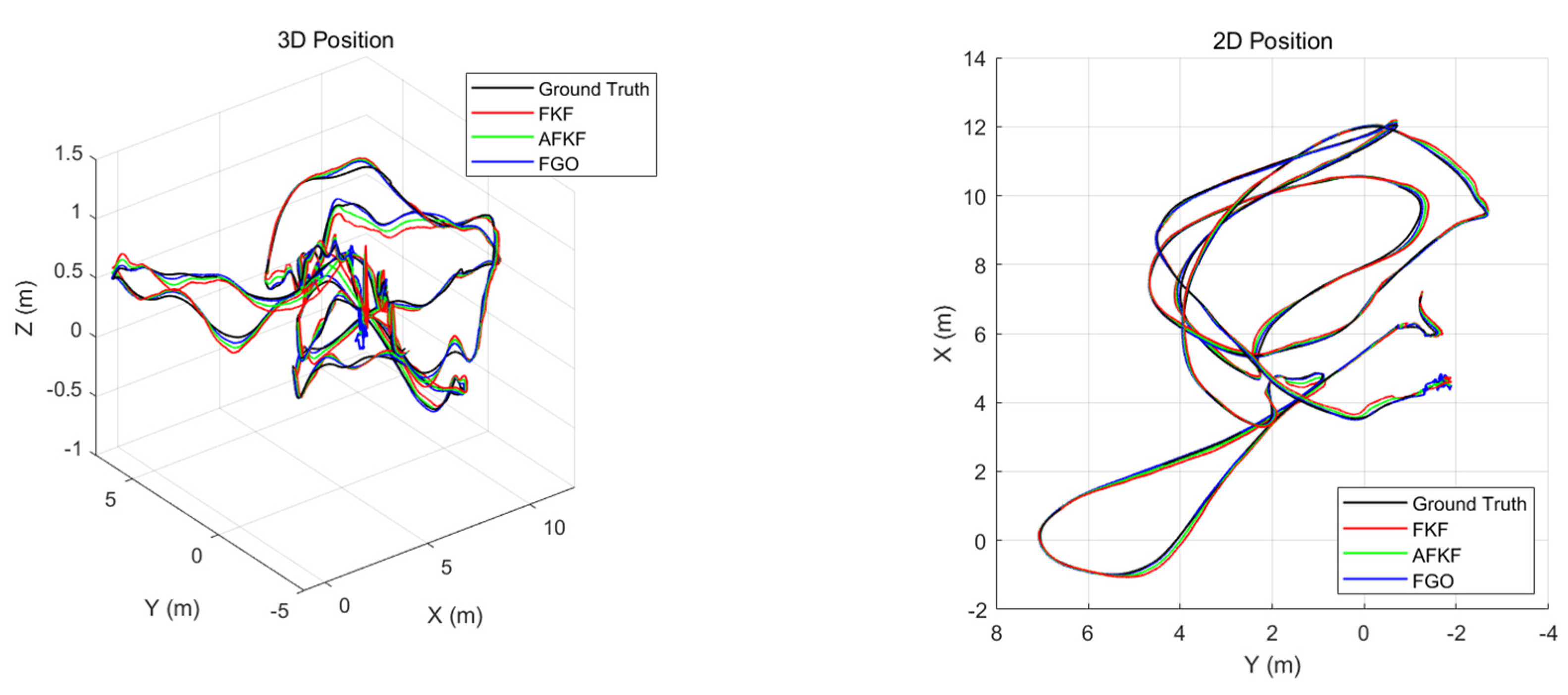

5.4.2. Track Comparison Results

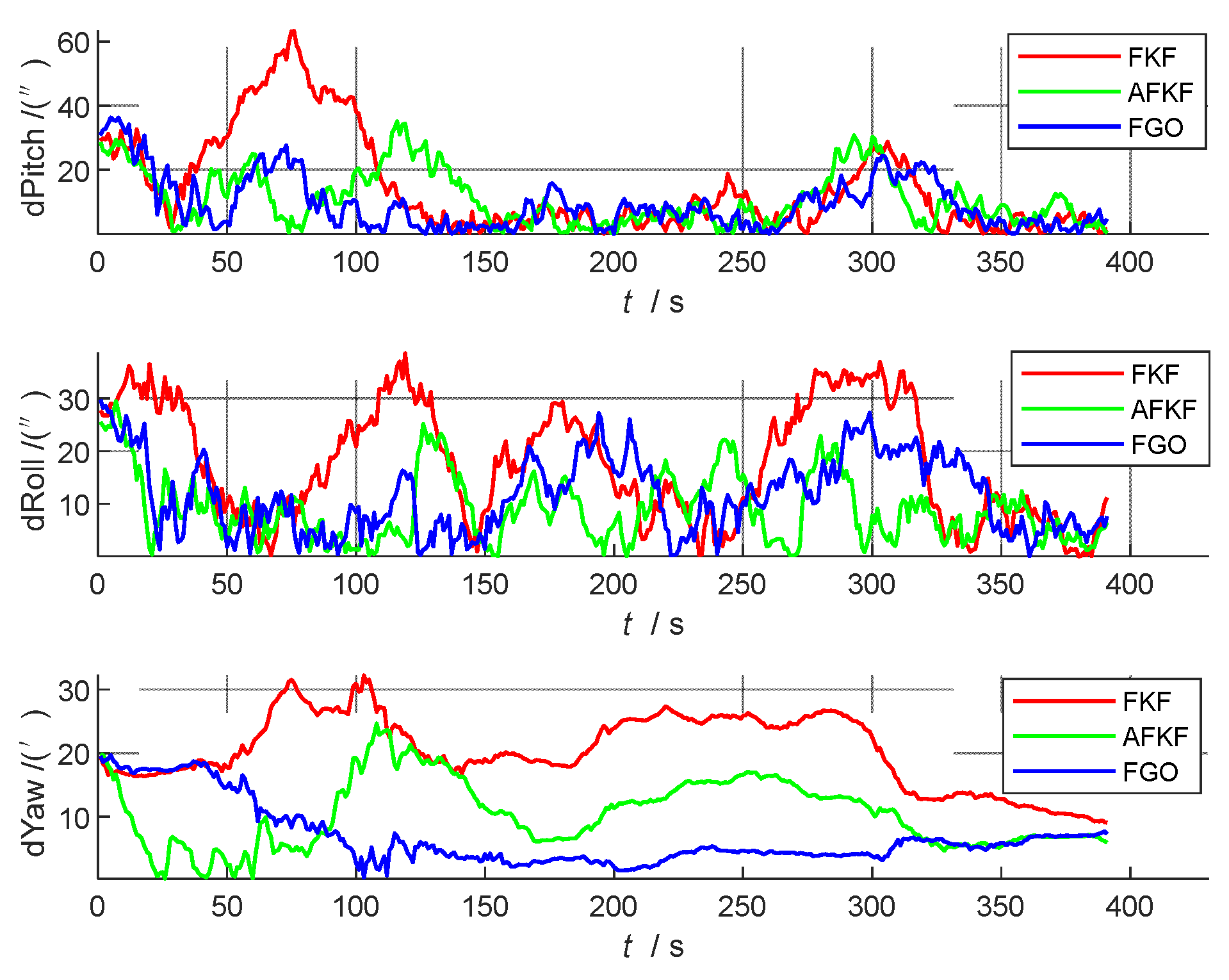

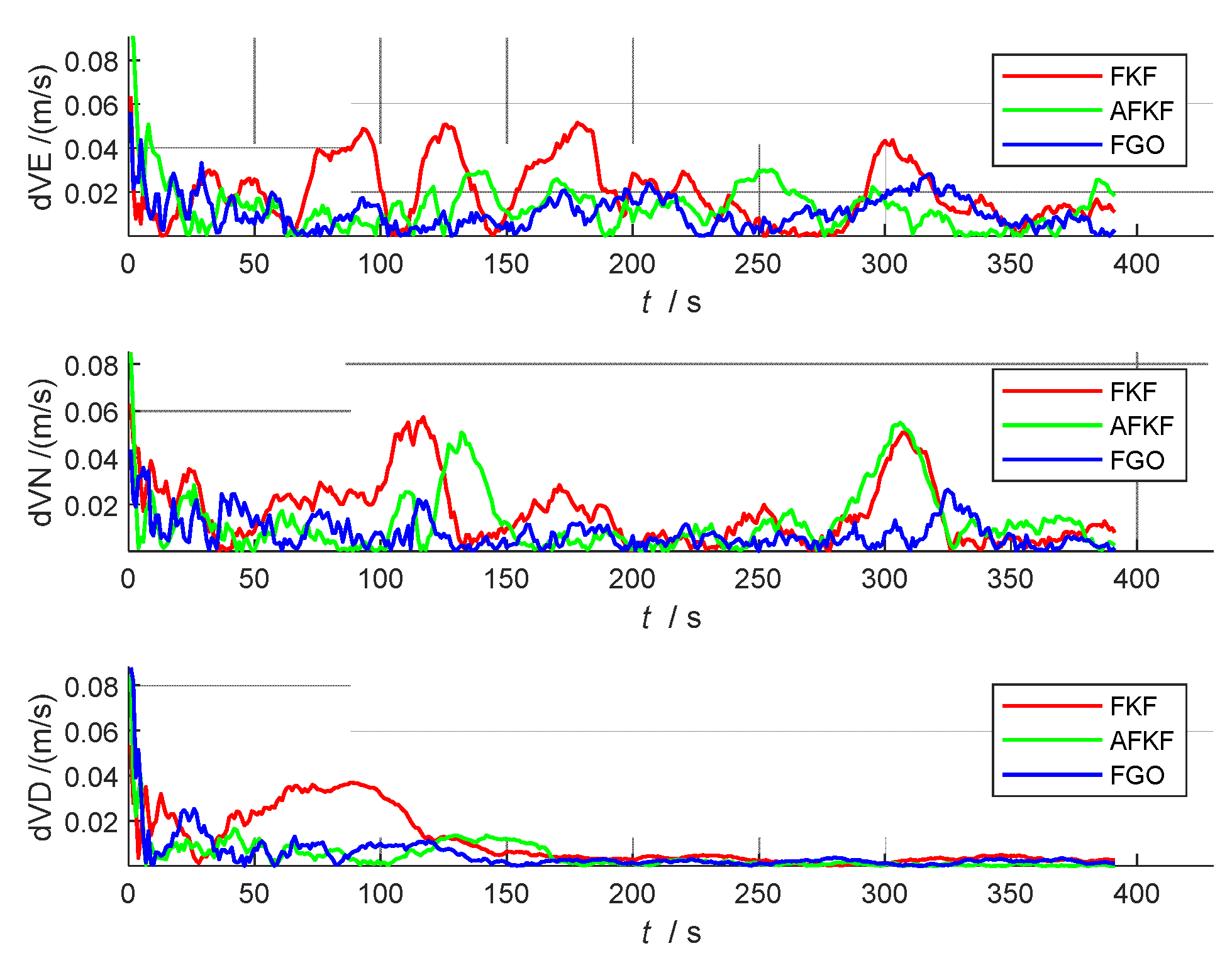

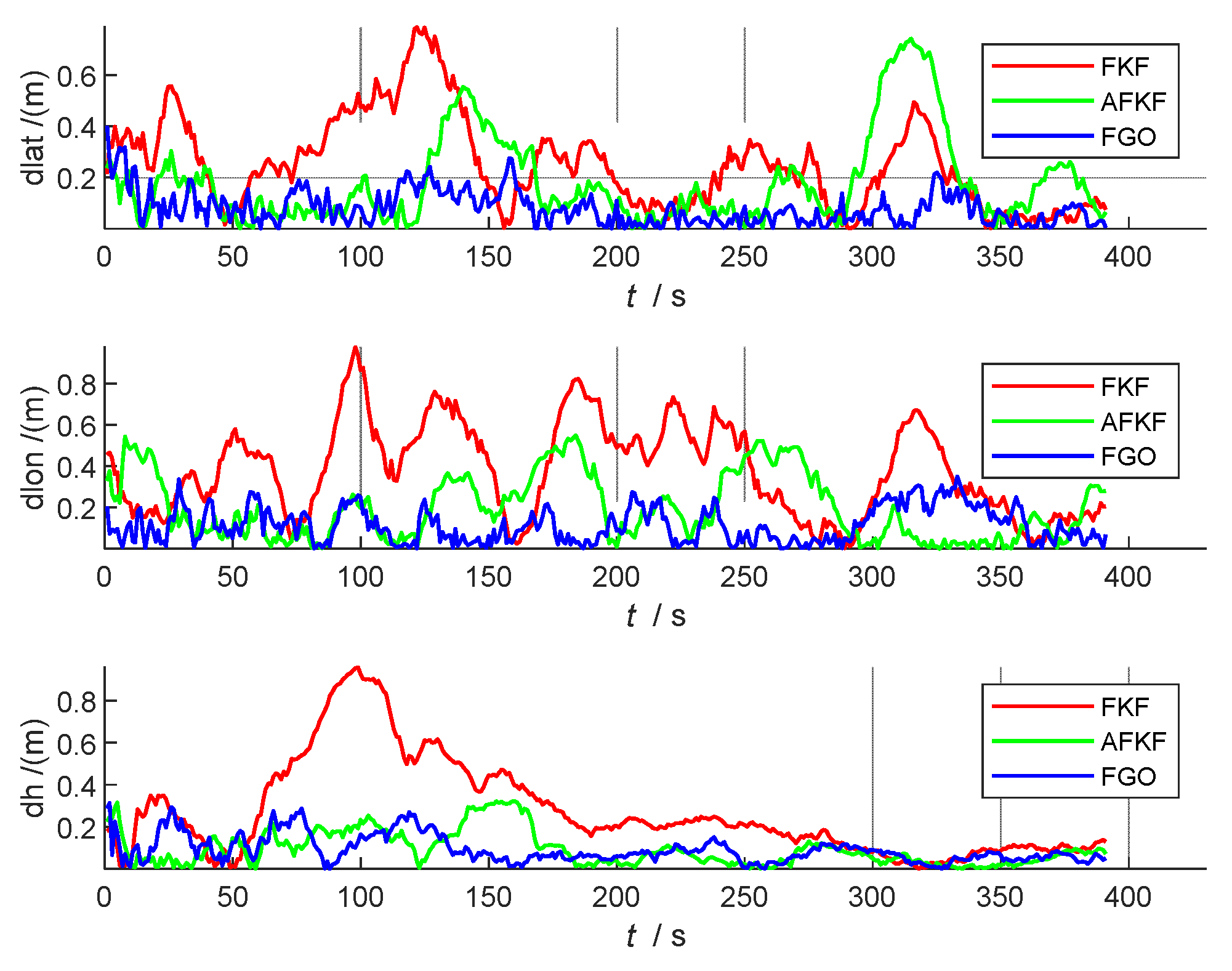

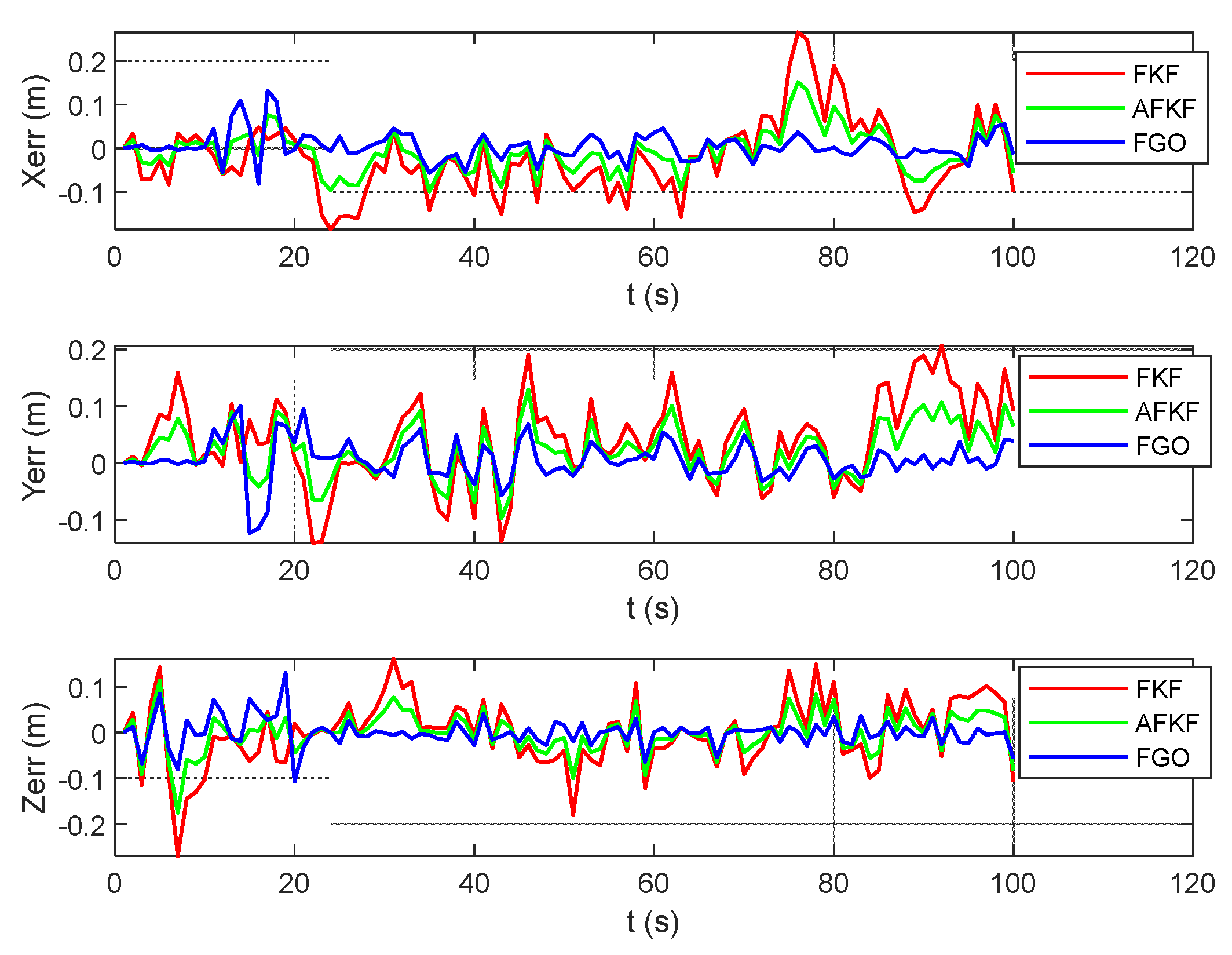

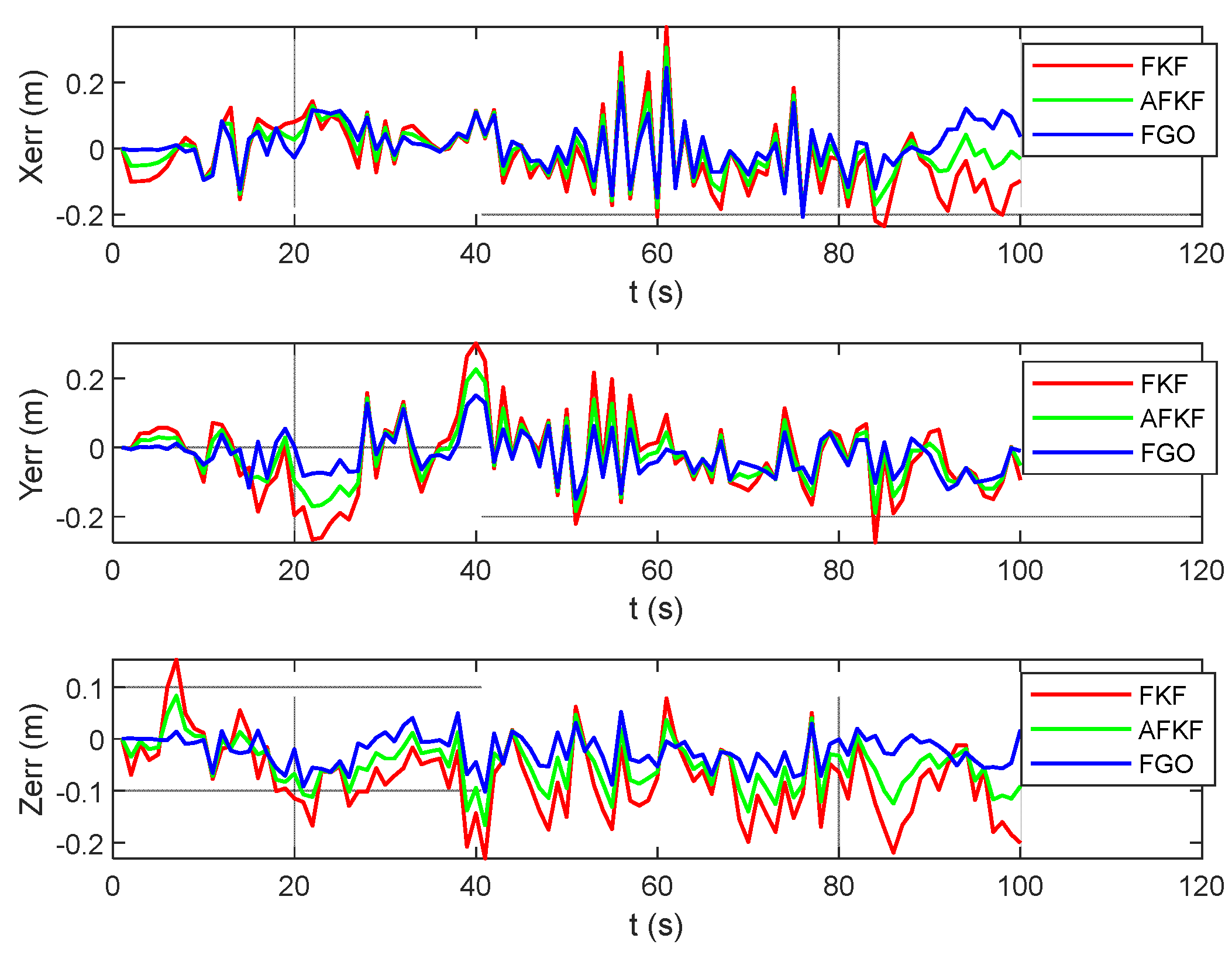

5.4.3. Error Analysis and Precision Statistics

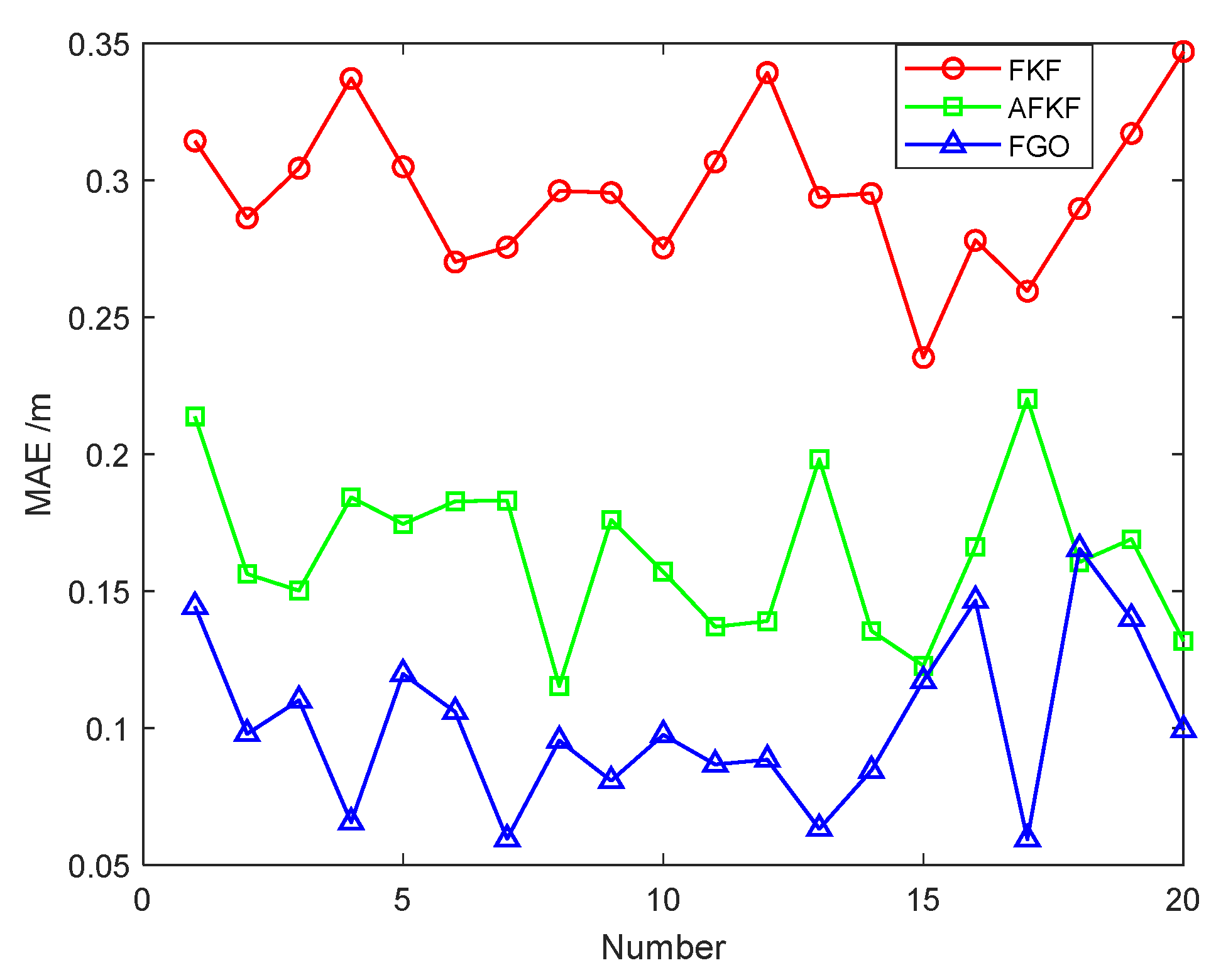

5.4.4. Statistical Analysis of Multiple Experiments

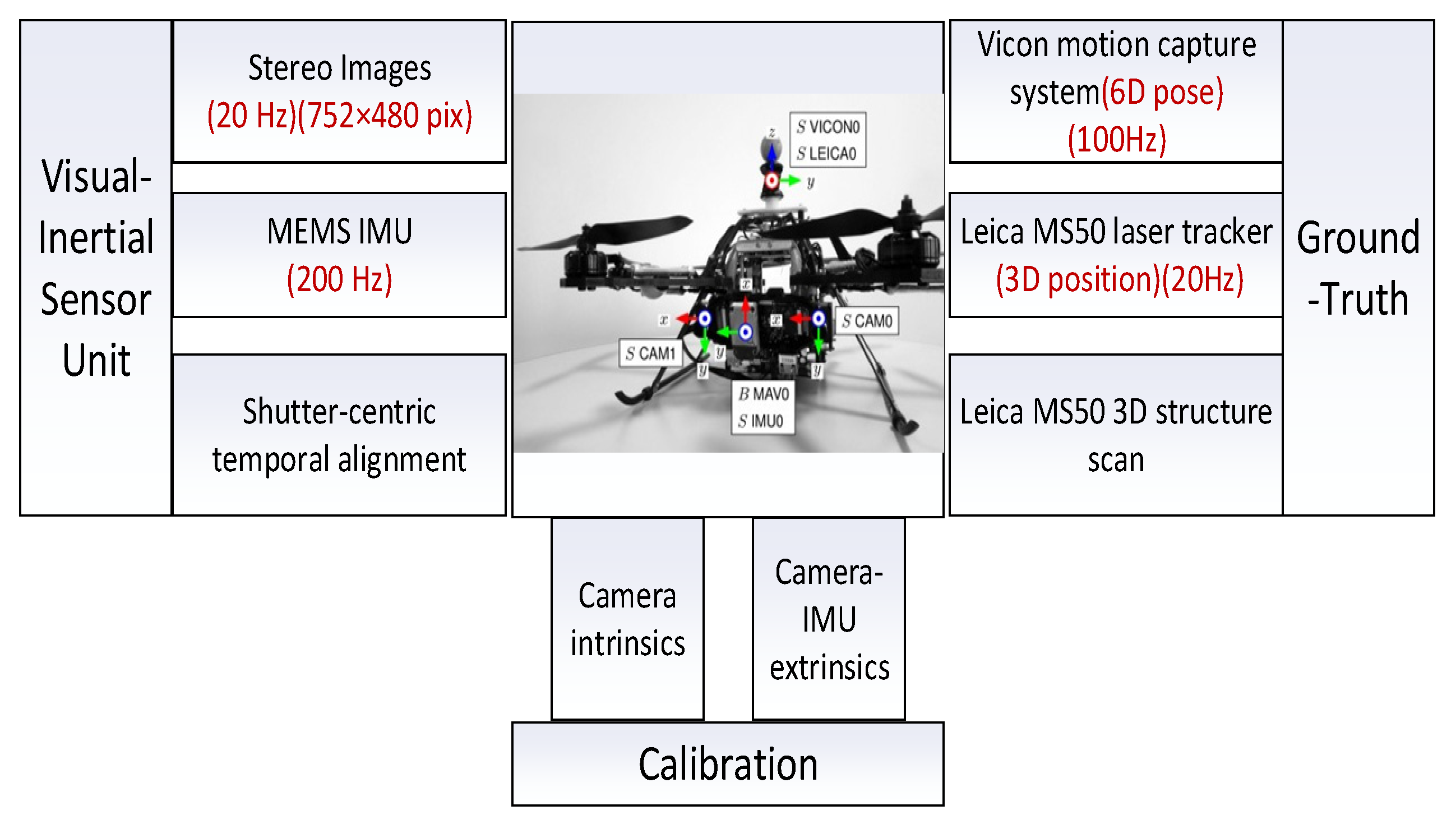

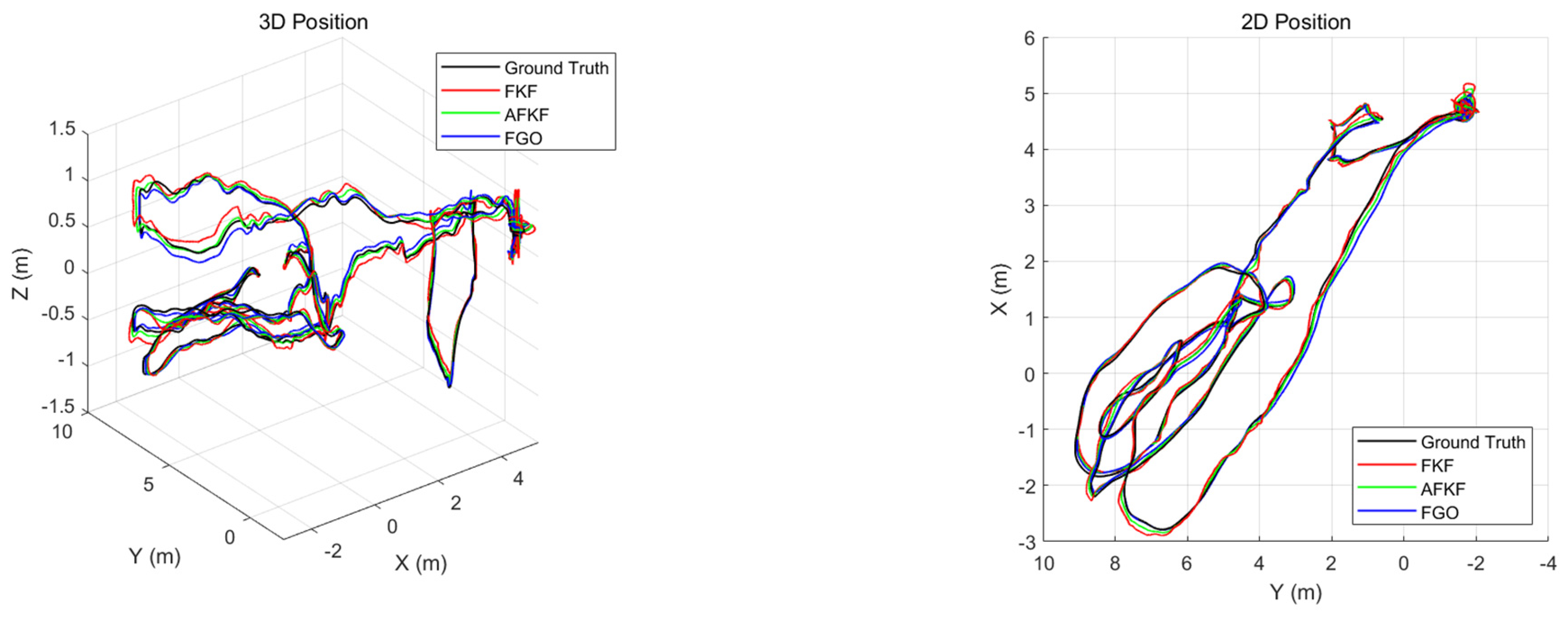

6. Dataset Validation

6.1. MH_01_Easy Scene

6.2. MH_03_Medium Scene

6.3. V1_03_Difficult Scene

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Sensor Type | Parameter | | Value |
|---|---|---|---|
| IMU | Gyro error (x-, y-, z-) | bias | |
| IMU | Gyro error (x-, y-, z-) | random walk | |
| IMU | Accelerometer error (x-, y-, z-) | bias | |
| IMU | Accelerometer error (x-, y-, z-) | random walk | |
| IMU | Frequency | | 100 Hz |
| GNSS | Location (longitude, latitude, altitude) | | [1 m; 1 m; 2 m] |
| GNSS | Speed (north, east, down) | | [0.1 m/s; 0.1 m/s; 0.1 m/s] |
| GNSS | Frequency | | 1 Hz |
| VO | Location (x-, y-, z-) | | [0.5 m; 0.5 m; 0.5 m] |
| VO | Attitude (pitch-, yaw-, roll-) | | [0.5°; 0.5°; 0.5°] |
| VO | Frequency | | 1 Hz |

| Sensor Type | 40~170 s | 410~500 s |
|---|---|---|
| VO | | |
| GNSS | 20 × | |

| Window Size (s) | 1 | 10 | 30 | 100 | 391 |
|---|---|---|---|---|---|
| Position Error (m) | 0.24 | 0.17 | 0.12 | 0.10 | 0.09 |
| Time Used (s) | 5.6971 × 10⁻² | 6.9302 × 10⁻² | 7.4211 × 10⁻² | 9.8083 × 10⁻² | 1.23402 × 10⁻¹ |
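The trade-off in the window-size table above can be made explicit by normalising both rows to the smallest window. The following is a minimal sketch that only re-expresses the tabulated numbers as ratios; the variable and function names are illustrative and not taken from the paper.

```python
# Values transcribed from the window-size table above.
window_s = [1, 10, 30, 100, 391]
position_error_m = [0.24, 0.17, 0.12, 0.10, 0.09]
time_used_s = [5.6971e-2, 6.9302e-2, 7.4211e-2, 9.8083e-2, 1.23402e-1]


def relative_to_first(values):
    """Return each value divided by the first entry of the list."""
    return [v / values[0] for v in values]


# Ratios relative to the 1 s window (hypothetical helper names).
error_ratio = relative_to_first(position_error_m)
time_ratio = relative_to_first(time_used_s)

for w, e, t in zip(window_s, error_ratio, time_ratio):
    print(f"window {w:>3} s: error x{e:.2f}, time x{t:.2f}")
```

Read this way, the largest window reduces the tabulated position error to under 40% of the 1 s value while roughly doubling the tabulated time.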

| Algorithm | Error | dLat (m) | dLon (m) | dH (m) | dVN (m/s) | dVE (m/s) | dVD (m/s) | dPitch (′) | dYaw (′) | dRoll (′) |
|---|---|---|---|---|---|---|---|---|---|---|
| FKF | AME | 0.25 | 0.37 | 0.27 | 0.02 | 0.02 | 0.01 | 14.55 | 17.66 | 20.33 |
| FKF | RMSE | 0.31 | 0.44 | 0.36 | 0.02 | 0.02 | 0.02 | 20.91 | 20.70 | 21.07 |
| FKF | STD | 0.18 | 0.22 | 0.23 | 0.06 | 0.06 | 0.02 | 19.47 | 19.18 | 15.53 |
| AFKF | AME | 0.18 | 0.19 | 0.09 | 0.01 | 0.01 | 0.00 | 11.06 | 8.98 | 10.43 |
| AFKF | RMSE | 0.25 | 0.25 | 0.12 | 0.02 | 0.02 | 0.01 | 13.86 | 10.93 | 11.67 |
| AFKF | STD | 0.17 | 0.15 | 0.08 | 0.03 | 0.05 | 0.02 | 13.90 | 10.98 | 6.68 |
| FGO | AME | 0.08 | 0.11 | 0.09 | 0.01 | 0.01 | 0.01 | 9.33 | 11.59 | 6.81 |
| FGO | RMSE | 0.10 | 0.14 | 0.11 | 0.01 | 0.01 | 0.01 | 12.31 | 13.52 | 8.31 |
| FGO | STD | 0.07 | 0.08 | 0.06 | 0.01 | 0.01 | 0.01 | 12.34 | 11.60 | 3.99 |

| Number | FKF | AFKF | FGO |
|---|---|---|---|
| 1 | 0.3144 | 0.2138 | 0.1444 |
| 2 | 0.2862 | 0.1563 | 0.0979 |
| 3 | 0.3044 | 0.1502 | 0.1101 |
| 4 | 0.3372 | 0.1843 | 0.0656 |
| 5 | 0.3049 | 0.1745 | 0.1198 |
| 6 | 0.2702 | 0.1828 | 0.1059 |
| 7 | 0.2756 | 0.1831 | 0.0595 |
| 8 | 0.2962 | 0.1154 | 0.0954 |
| 9 | 0.2955 | 0.1760 | 0.0807 |
| 10 | 0.2753 | 0.1571 | 0.0975 |
| 11 | 0.3067 | 0.1370 | 0.0867 |
| 12 | 0.3393 | 0.1390 | 0.0885 |
| 13 | 0.2938 | 0.1982 | 0.0632 |
| 14 | 0.2952 | 0.1355 | 0.0845 |
| 15 | 0.2353 | 0.1227 | 0.1172 |
| 16 | 0.2781 | 0.1662 | 0.1465 |
| 17 | 0.2595 | 0.2201 | 0.0594 |
| 18 | 0.2897 | 0.1606 | 0.1655 |
| 19 | 0.3171 | 0.1690 | 0.1400 |
| 20 | 0.3470 | 0.1317 | 0.0993 |
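The 20 runs in the table above can be summarised per algorithm with a few lines of Python. This is a minimal sketch: the extract does not state which error quantity or unit the table reports, so the numbers are treated as unitless per-run scores where lower is better (an assumption), and the variable names are illustrative.

```python
from statistics import mean, stdev

# Per-run values transcribed from the table above (runs 1 to 20).
runs = {
    "FKF": [0.3144, 0.2862, 0.3044, 0.3372, 0.3049, 0.2702, 0.2756,
            0.2962, 0.2955, 0.2753, 0.3067, 0.3393, 0.2938, 0.2952,
            0.2353, 0.2781, 0.2595, 0.2897, 0.3171, 0.3470],
    "AFKF": [0.2138, 0.1563, 0.1502, 0.1843, 0.1745, 0.1828, 0.1831,
             0.1154, 0.1760, 0.1571, 0.1370, 0.1390, 0.1982, 0.1355,
             0.1227, 0.1662, 0.2201, 0.1606, 0.1690, 0.1317],
    "FGO": [0.1444, 0.0979, 0.1101, 0.0656, 0.1198, 0.1059, 0.0595,
            0.0954, 0.0807, 0.0975, 0.0867, 0.0885, 0.0632, 0.0845,
            0.1172, 0.1465, 0.0594, 0.1655, 0.1400, 0.0993],
}

# Mean and sample standard deviation over the 20 runs, per algorithm.
summary = {name: (mean(vals), stdev(vals)) for name, vals in runs.items()}

# Count the runs in which FGO has the smallest value of the three.
fgo_best = sum(
    1
    for fkf, afkf, fgo in zip(runs["FKF"], runs["AFKF"], runs["FGO"])
    if fgo < min(fkf, afkf)
)

for name, (m, s) in summary.items():
    print(f"{name}: mean={m:.4f}, sample std={s:.4f}")
print(f"FGO lowest in {fgo_best} of {len(runs['FGO'])} runs")
```

On the tabulated data, FGO has the lowest value in 19 of the 20 runs; run 18 is the exception, where AFKF is slightly lower.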

| Sensor Type | Parameter | Value |
|---|---|---|
| Camera | Resolution | |
| Camera | intrinsics | |
| Camera | distortion_coefficients | |
| IMU | gyroscope_noise_density | |
| IMU | gyroscope_random_walk | |
| IMU | accelerometer_noise_density | |
| IMU | accelerometer_random_walk | |
| | Camera-IMU extrinsics | |

| Scenes | Sensor Type | Value | Period |
|---|---|---|---|
| MH_01_easy | GNSS | | 40–100 s |
| MH_01_easy | VO | | 140–180 s |
| MH_03_medium | GNSS | | 20–40 s |
| MH_03_medium | VO | | 60–90 s |
| V1_03_difficult | GNSS | | 20–40 s |
| V1_03_difficult | VO | | 70–90 s |

| Experimental Scene | Algorithm | Position Error x (m) | Position Error y (m) | Position Error z (m) |
|---|---|---|---|---|
| MH_01_easy | FKF | 0.07 | 0.07 | 0.07 |
| MH_01_easy | AFKF | 0.06 | 0.06 | 0.05 |
| MH_01_easy | FGO | 0.04 | 0.04 | 0.03 |
| MH_03_medium | FKF | 0.09 | 0.08 | 0.07 |
| MH_03_medium | AFKF | 0.07 | 0.06 | 0.05 |
| MH_03_medium | FGO | 0.05 | 0.05 | 0.03 |
| V1_03_difficult | FKF | 0.12 | 0.14 | 0.11 |
| V1_03_difficult | AFKF | 0.10 | 0.11 | 0.08 |
| V1_03_difficult | FGO | 0.09 | 0.09 | 0.05 |
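To compare the algorithms with a single number per scene, the per-axis errors in the table above can be combined into a Euclidean norm and expressed relative to FKF. This is a minimal sketch under a stated assumption: combining the axes as a root sum of squares is only meaningful if the tabulated values are RMS-type errors, which this extract does not confirm. The helper names `norm3` and `reduction_vs_fkf` are hypothetical.

```python
import math

# Per-axis position errors (x, y, z) in metres, transcribed from the table above.
errors = {
    "MH_01_easy": {
        "FKF": (0.07, 0.07, 0.07),
        "AFKF": (0.06, 0.06, 0.05),
        "FGO": (0.04, 0.04, 0.03),
    },
    "MH_03_medium": {
        "FKF": (0.09, 0.08, 0.07),
        "AFKF": (0.07, 0.06, 0.05),
        "FGO": (0.05, 0.05, 0.03),
    },
    "V1_03_difficult": {
        "FKF": (0.12, 0.14, 0.11),
        "AFKF": (0.10, 0.11, 0.08),
        "FGO": (0.09, 0.09, 0.05),
    },
}


def norm3(xyz):
    """Euclidean norm of a per-axis error triple (assumes RMS-type errors)."""
    return math.sqrt(sum(c * c for c in xyz))


def reduction_vs_fkf(scene, algorithm):
    """Fractional reduction of the combined error relative to FKF in one scene."""
    base = norm3(errors[scene]["FKF"])
    return 1.0 - norm3(errors[scene][algorithm]) / base


for scene in errors:
    for algorithm in ("AFKF", "FGO"):
        r = reduction_vs_fkf(scene, algorithm)
        print(f"{scene:>16} {algorithm:>4}: {100 * r:.1f}% lower than FKF")
```

Under that assumption, FGO shows a larger reduction than AFKF in all three scenes.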

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Dai, J.; Liu, S.; Hao, X.; Ren, Z.; Yang, X. UAV Localization Algorithm Based on Factor Graph Optimization in Complex Scenes. Sensors 2022, 22, 5862. https://doi.org/10.3390/s22155862
