An Effective Camera-to-Lidar Spatiotemporal Calibration Based on a Simple Calibration Target

Abstract

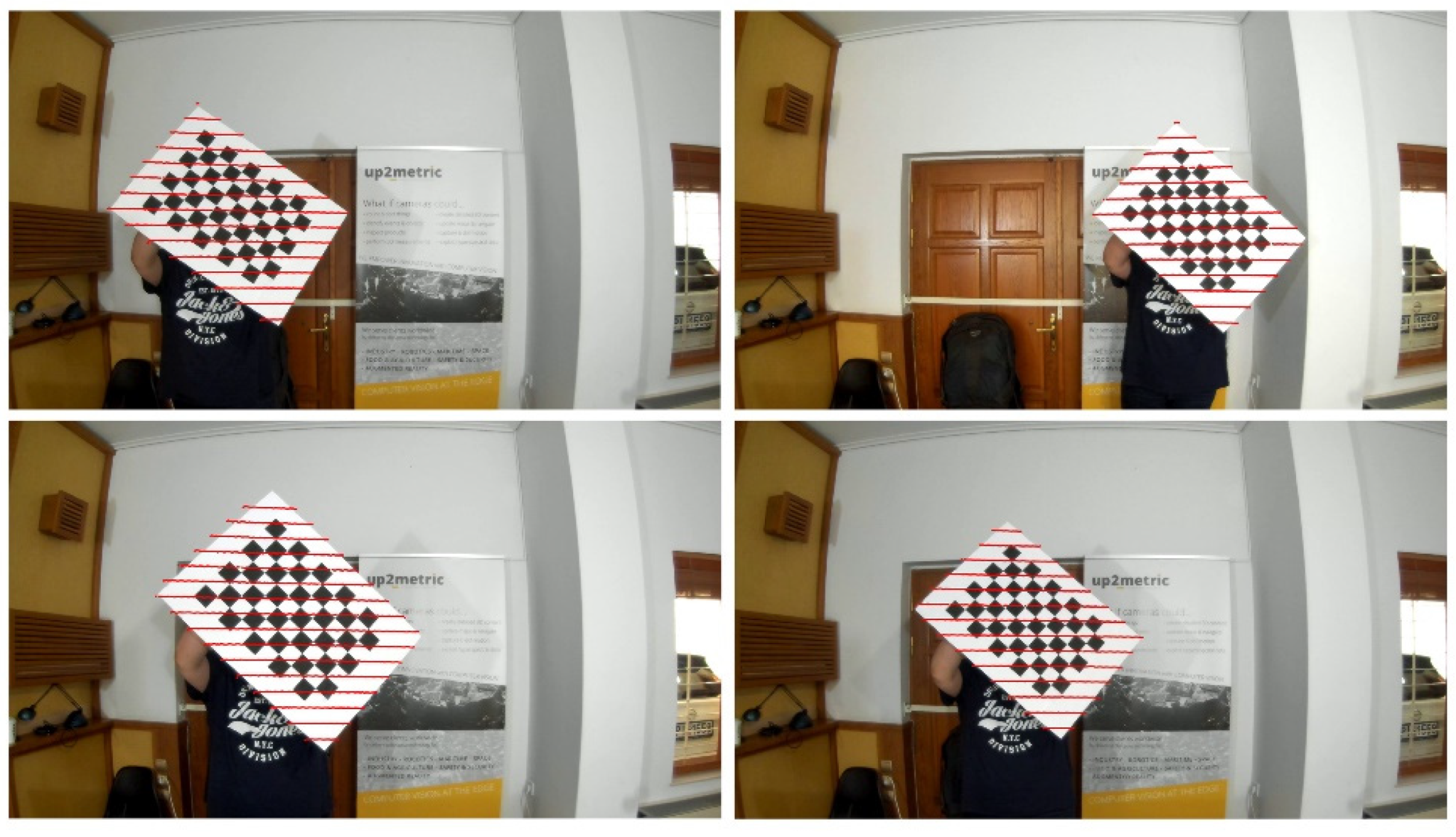

1. Introduction

- (i) The achievable accuracy of object-based calibration techniques is more controllable by the user than that of targetless approaches. An extra capture of the target at a new viewing position may significantly improve the calibration result with practically no overhead in capture and processing time.

- (ii)

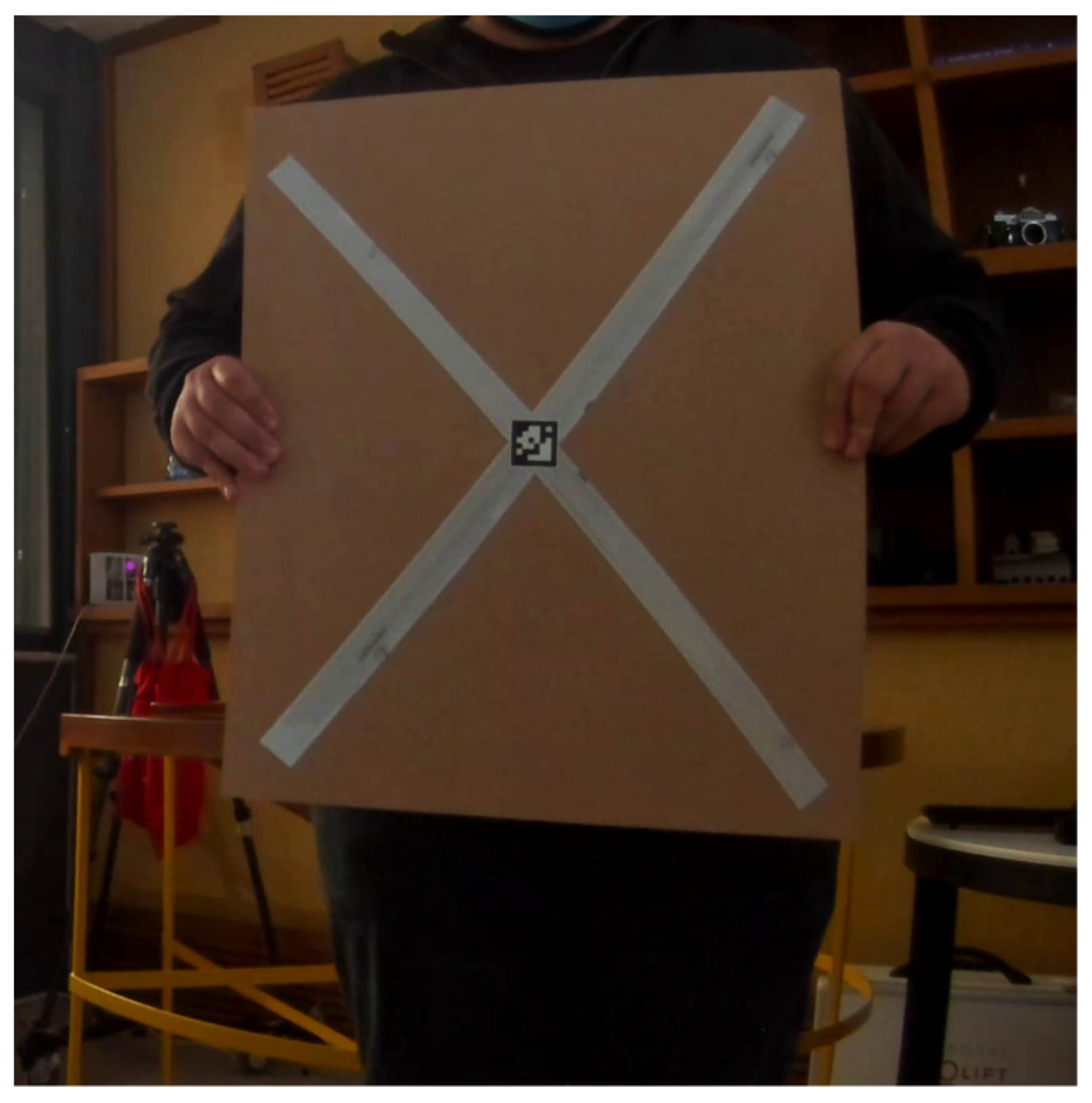

- The construction of the target should be simple for non-specialized users and should include readily available and low-cost materials. Planar boards are prevalent calibration targets; however, complicated patterns, such as those with circular holes [11,17], require elaborate construction and specialized equipment. Additionally, the size of the proposed target should be kept relatively small compared with the existing calibration approaches of planar targets, allowing a more versatile calibration procedure for outdoor and indoor environments.

- (iii) The dimensions of the calibration object are arbitrary (an unstructured object), in contrast to most target-based approaches (e.g., [7,11,12,14,17]). In this way, the construction of the target remains as simple as possible, and the calibration results are not affected by manufacturing defects or inaccurate measurements of the target.

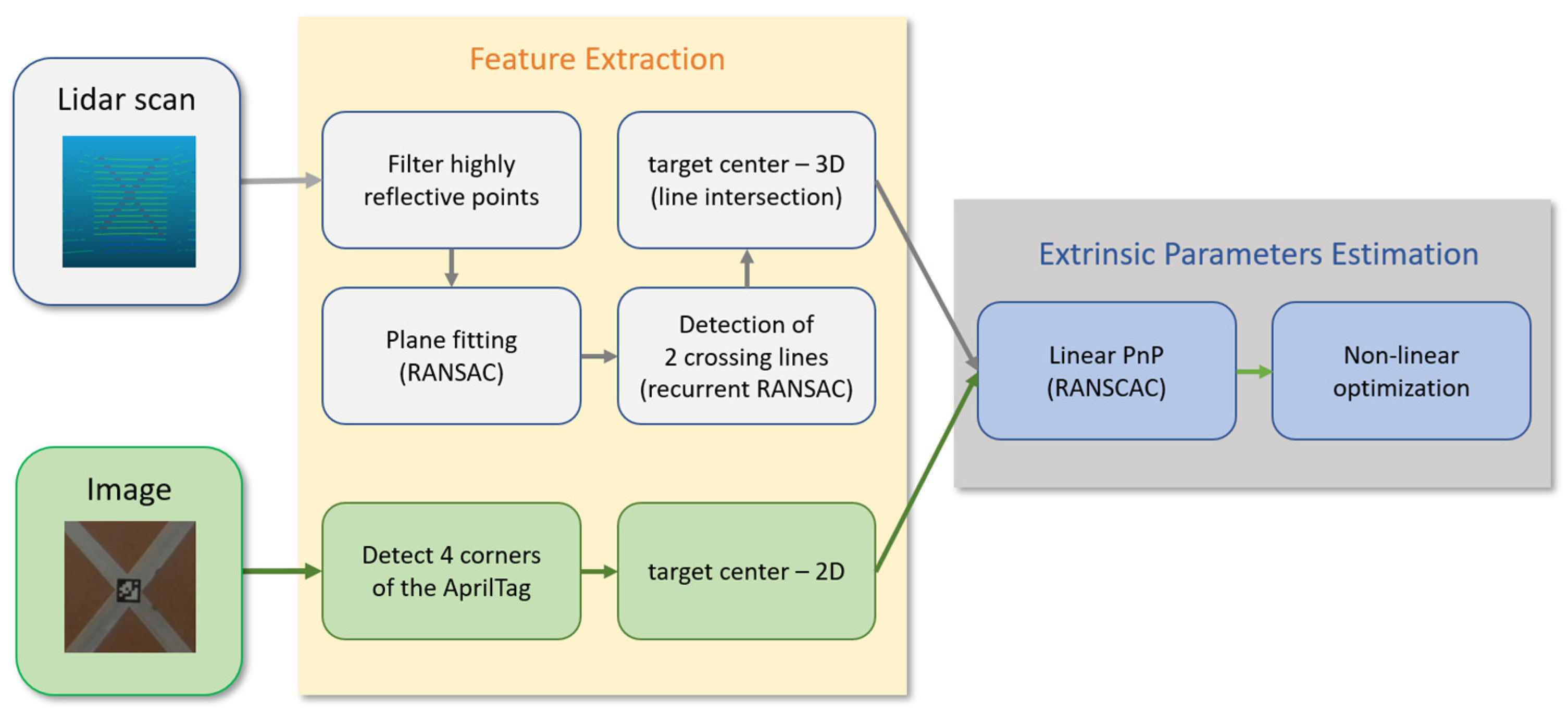

2. Methodology

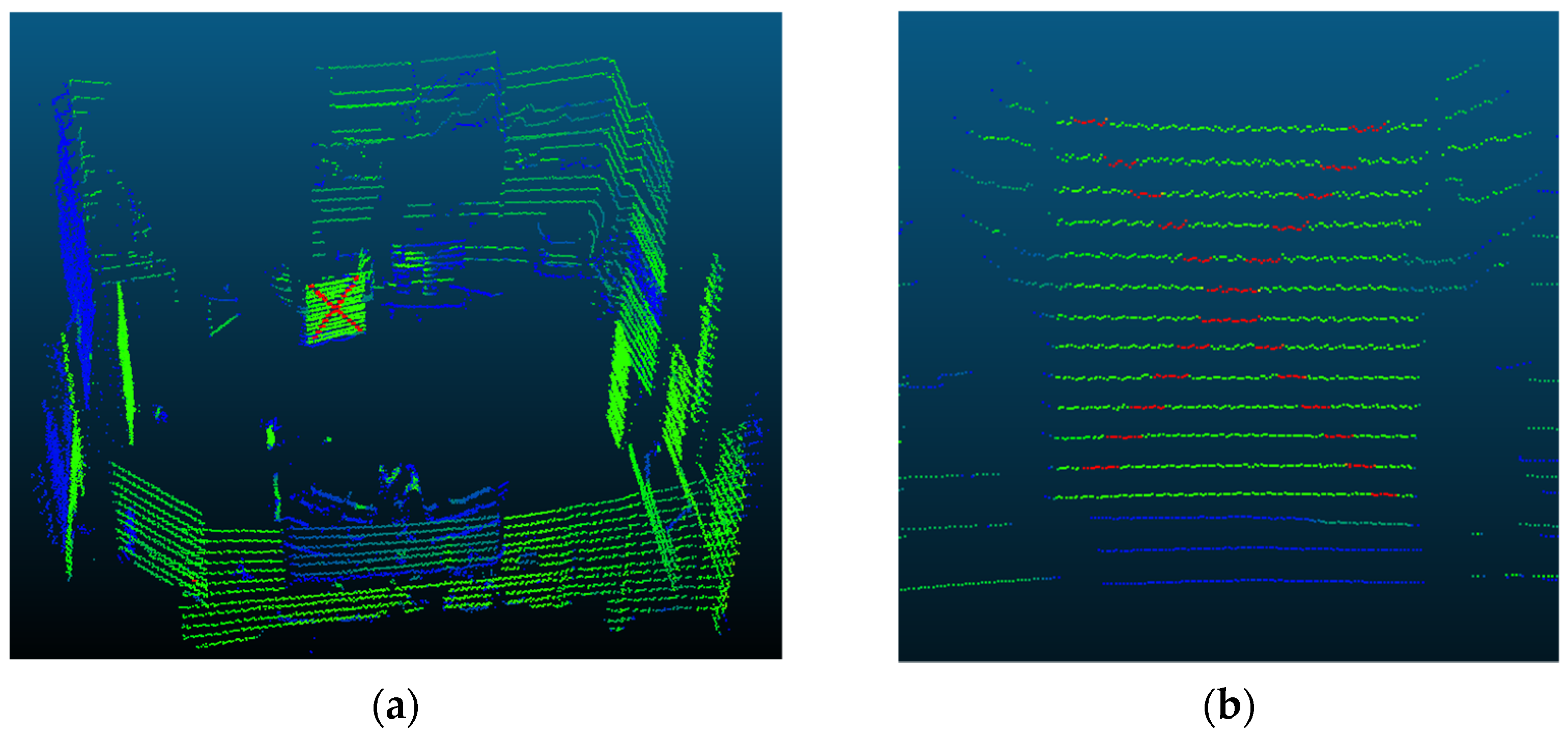

2.1. Feature Points Extraction

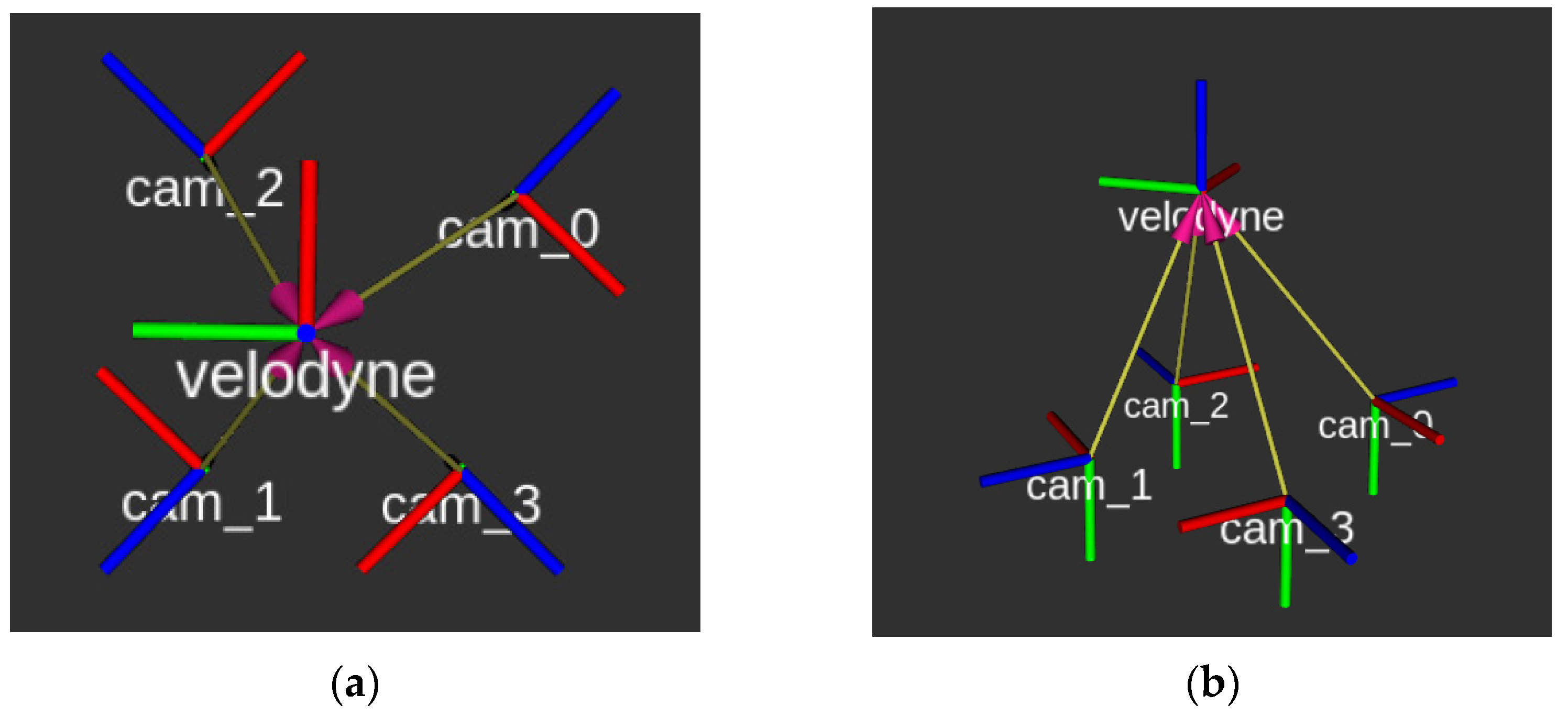

2.2. Geometrical Calibration

2.3. Temporal Calibration

3. Calibration Workflow

4. Evaluation and Further Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Puente, I.; González-Jorge, H.; Martínez-Sánchez, J.; Arias, P. Review of mobile mapping and surveying technologies. Measurement 2013, 46, 2127–2145.

- Wang, Y.; Chen, Q.; Zhu, Q.; Liu, L.; Li, C.; Zheng, D. A survey of mobile laser scanning applications and key techniques over urban areas. Remote Sens. 2019, 11, 1540.

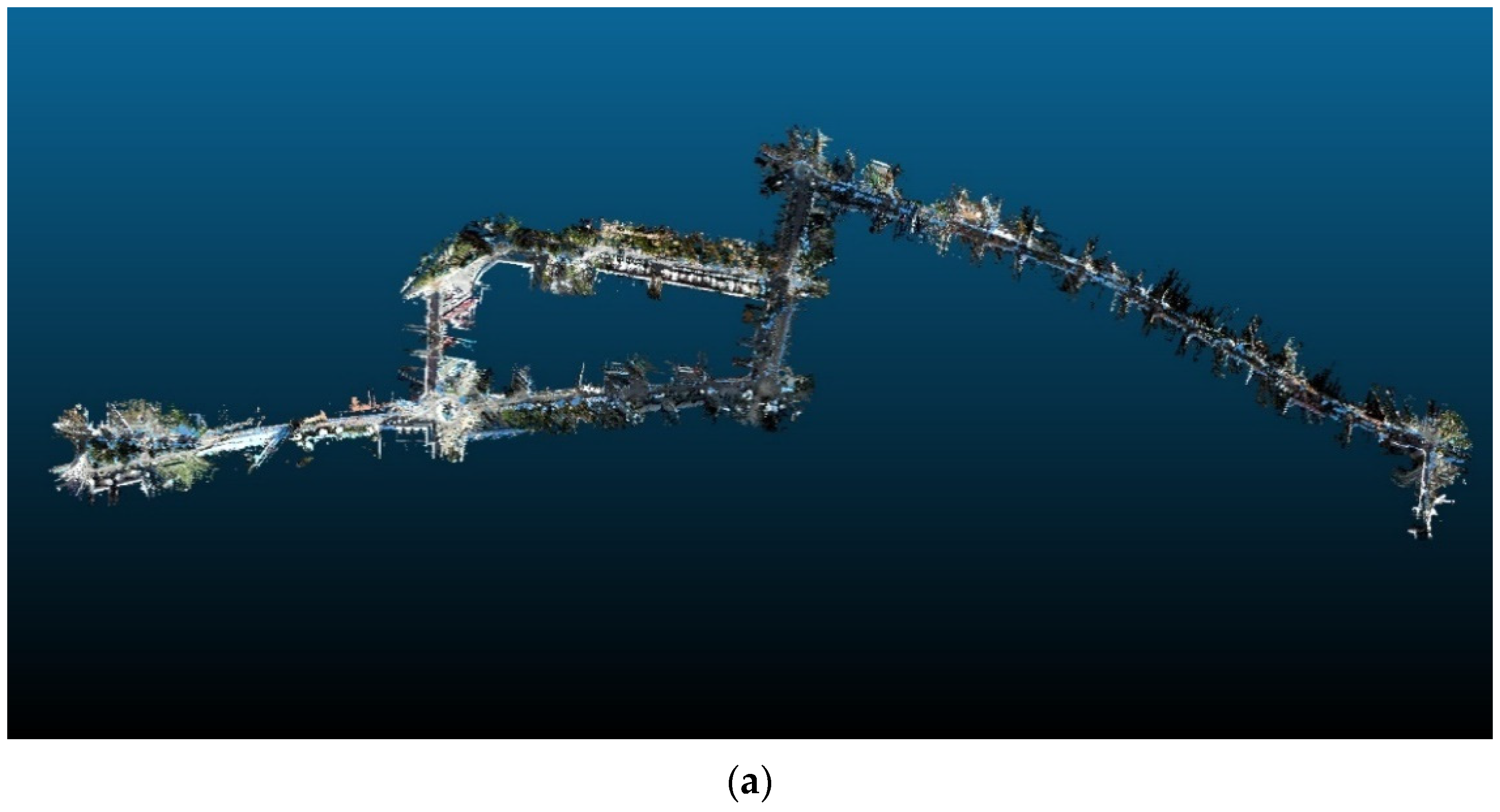

- Kalisperakis, I.; Mandilaras, T.; El Saer, A.; Stamatopoulou, P.; Stentoumis, C.; Bourou, S.; Grammatikopoulos, L. A Modular Mobile Mapping Platform for Complex Indoor and Outdoor Environments. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 43, 243–250.

- Unnikrishnan, R.; Hebert, M. Fast Extrinsic Calibration of a Laser Rangefinder to a Camera; Robotics Institute: Pittsburgh, PA, USA, 2005; Technical Report; CMU-RI-TR-05-09.

- Scaramuzza, D.; Harati, A.; Siegwart, R. Extrinsic self calibration of a camera and a 3d laser range finder from natural scenes. In Proceedings of the 2007 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Diego, CA, USA, 29 October–2 November 2007; IEEE: Piscataway, NJ, USA, 2007; pp. 4164–4169.

- Zhang, Q.; Pless, R. Extrinsic calibration of a camera and laser range finder (improves camera calibration). In Proceedings of the 2004 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Sendai, Japan, 28 September–2 October 2004; IEEE: Piscataway, NJ, USA, 2004; Volume 3, pp. 2301–2306.

- Pandey, G.; McBride, J.; Savarese, S.; Eustice, R. Extrinsic calibration of a 3d laser scanner and an omnidirectional camera. IFAC Proc. Vol. 2010, 43, 336–341.

- Debattisti, S.; Mazzei, L.; Panciroli, M. Automated extrinsic laser and camera inter-calibration using triangular targets. In Proceedings of the 2013 IEEE Intelligent Vehicles Symposium (IV), Gold Coast, Australia, 23–26 June 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 696–701.

- Park, Y.; Yun, S.; Won, C.S.; Cho, K.; Um, K.; Sim, S. Calibration between color camera and 3D LIDAR instruments with a polygonal planar board. Sensors 2014, 14, 5333.

- Pereira, M.; Silva, D.; Santos, V.; Dias, P. Self calibration of multiple LIDARs and cameras on autonomous vehicles. Robot. Auton. Syst. 2016, 83, 326–337.

- Guindel, C.; Beltrán, J.; Martín, D.; García, F. Automatic extrinsic calibration for lidar-stereo vehicle sensor setups. In Proceedings of the 2017 IEEE 20th International Conference on Intelligent Transportation Systems (ITSC), Yokohama, Japan, 16–19 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–6.

- Dhall, A.; Chelani, K.; Radhakrishnan, V.; Krishna, K.M. LiDAR-camera calibration using 3D-3D point correspondences. arXiv 2017, arXiv:1705.09785.

- Kim, E.S.; Park, S.Y. Extrinsic calibration between camera and LiDAR sensors by matching multiple 3D planes. Sensors 2019, 20, 52.

- Zhou, L.; Li, Z.; Kaess, M. Automatic extrinsic calibration of a camera and a 3d lidar using line and plane correspondences. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 5562–5569.

- Matlab Lidar Camera Calibrator. Available online: https://www.mathworks.com/help/lidar/ref/lidarcameracalibrator-app.html (accessed on 20 June 2022).

- Geiger, A.; Moosmann, F.; Car, Ö.; Schuster, B. Automatic camera and range sensor calibration using a single shot. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation, Saint Paul, MN, USA, 14–18 May 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 3936–3943.

- Beltrán, J.; Guindel, C.; García, F. Automatic extrinsic calibration method for lidar and camera sensor setups. In IEEE Transactions on Intelligent Transportation Systems; IEEE: Piscataway, NJ, USA, 2022; pp. 1–13.

- Moghadam, P.; Bosse, M.; Zlot, R. Line-based extrinsic calibration of range and image sensors. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 3685–3691.

- Pandey, G.; McBride, J.R.; Savarese, S.; Eustice, R.M. Automatic extrinsic calibration of vision and lidar by maximizing mutual information. J. Field Robot. 2015, 32, 696–722.

- Lv, X.; Wang, S.; Ye, D. CFNet: LiDAR-camera registration using calibration flow network. Sensors 2021, 21, 8112.

- Park, C.; Moghadam, P.; Kim, S.; Sridharan, S.; Fookes, C. Spatiotemporal camera-LiDAR calibration: A targetless and structureless approach. IEEE Robot. Autom. Lett. 2020, 5, 1556–1563.

- OpenCV Camera Calibration. Available online: https://docs.opencv.org/4.x/dc/dbb/tutorial_py_calibration.html (accessed on 20 June 2022).

- Douskos, V.; Grammatikopoulos, L.; Kalisperakis, I.; Karras, G.; Petsa, E. FAUCCAL: An open source toolbox for fully automatic camera calibration. In Proceedings of the XXII CIPA Symposium on Digital Documentation, Interpretation & Presentation of Cultural Heritage, Kyoto, Japan, 11–15 October 2009.

- Grammatikopoulos, L.; Adam, K.; Petsa, E.; Karras, G. Camera calibration using multiple unordered coplanar chessboards. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 42, 59–66.

- Wang, J.; Olson, E. AprilTag 2: Efficient and robust fiducial detection. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea, 9–14 October 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 4193–4198.

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

- ROS Camera Calibration. Available online: http://wiki.ros.org/camera_calibration (accessed on 20 June 2022).

- Matlab Camera Calibrator. Available online: https://www.mathworks.com/help/vision/ref/cameracalibrator-app.html (accessed on 20 June 2022).

- Quan, L.; Lan, Z. Linear n-point camera pose determination. IEEE Trans. Pattern Anal. Mach. Intell. 1999, 21, 774–780.

- Perspective-n-Point (PnP) Pose Computation. Available online: https://docs.opencv.org/4.x/d5/d1f/calib3d_solvePnP.html (accessed on 20 June 2022).

- Labbé, M.; Michaud, F. RTAB-Map as an open-source lidar and visual simultaneous localization and mapping library for large-scale and long-term online operation. J. Field Robot. 2019, 36, 416–446.

| Camera Id | cam_0 | cam_1 | cam_2 | cam_3 |
|---|---|---|---|---|
| σ₀ (pixel) | 3.7 | 4.4 | 3.2 | 4.1 |
| ΔX (cm) | 3.7 | −3.3 | 3.4 | −2.8 |
| ΔY (cm) | −3.5 | 3.6 | 2.7 | −2.3 |
| ΔZ (cm) | −7.0 | −7.4 | −7.2 | −7.3 |
| omega (deg) | 179.70 | 1.14 | −0.52 | −179.92 |
| phi (deg) | 46.81 | −48.19 | 43.77 | −44.05 |
| kappa (deg) | 89.94 | −88.50 | −89.32 | 89.38 |
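
As an illustration of how the per-camera values in the table above could be turned into a usable transform, the following minimal sketch assembles a 4×4 homogeneous matrix from one column of the table. It rests on assumptions that the table itself does not state: that ΔX, ΔY, ΔZ are the translation components between camera and lidar, and that the angles follow the common photogrammetric composition R = Rx(omega)·Ry(phi)·Rz(kappa). The function names `rotation_from_opk` and `extrinsic_matrix` are hypothetical.

```python
import math


def matmul3(a, b):
    """Multiply two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def rotation_from_opk(omega_deg, phi_deg, kappa_deg):
    """Build R = Rx(omega) @ Ry(phi) @ Rz(kappa).

    ASSUMPTION: this axis order and sign convention are illustrative only;
    the table does not state which convention the angles follow.
    """
    o, p, k = (math.radians(a) for a in (omega_deg, phi_deg, kappa_deg))
    rx = [[1.0, 0.0, 0.0],
          [0.0, math.cos(o), -math.sin(o)],
          [0.0, math.sin(o), math.cos(o)]]
    ry = [[math.cos(p), 0.0, math.sin(p)],
          [0.0, 1.0, 0.0],
          [-math.sin(p), 0.0, math.cos(p)]]
    rz = [[math.cos(k), -math.sin(k), 0.0],
          [math.sin(k), math.cos(k), 0.0],
          [0.0, 0.0, 1.0]]
    return matmul3(matmul3(rx, ry), rz)


def extrinsic_matrix(omega_deg, phi_deg, kappa_deg, dx_cm, dy_cm, dz_cm):
    """Assemble a 4x4 homogeneous transform; translation is converted cm -> m."""
    r = rotation_from_opk(omega_deg, phi_deg, kappa_deg)
    t = [dx_cm / 100.0, dy_cm / 100.0, dz_cm / 100.0]
    return [r[0] + [t[0]], r[1] + [t[1]], r[2] + [t[2]], [0.0, 0.0, 0.0, 1.0]]


# Values copied from the cam_0 column of the table above.
T_cam0 = extrinsic_matrix(179.70, 46.81, 89.94, 3.7, -3.5, -7.0)
```

If the actual convention differs (for example, a different rotation order or the inverse direction of the transform), only `rotation_from_opk` would need to change.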

| | Zhou Appr. | Our Appr. |
|---|---|---|
| Num of checkpoints | 22 | 22 |
| RMSE_x (pixel) | 14.2 | 6.3 |
| RMSE_y (pixel) | 16.4 | 9.6 |
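
For readers who want to reproduce figures of the kind reported in the table above, the sketch below computes per-axis RMSE from checkpoint pairs. It assumes the usual definition (root mean square of the pixel differences between projected and measured image positions, taken separately in x and y). The function name `rmse_per_axis` and the sample coordinates are hypothetical and are not data from the paper.

```python
import math


def rmse_per_axis(projected, measured):
    """Return (rmse_x, rmse_y) in pixels for paired 2D image points.

    projected: (x, y) positions of checkpoints projected into the image
    measured:  (x, y) positions of the same checkpoints measured in the image
    """
    if not projected or len(projected) != len(measured):
        raise ValueError("need two non-empty point lists of equal length")
    n = len(projected)
    sum_sq_x = sum((p[0] - m[0]) ** 2 for p, m in zip(projected, measured))
    sum_sq_y = sum((p[1] - m[1]) ** 2 for p, m in zip(projected, measured))
    return math.sqrt(sum_sq_x / n), math.sqrt(sum_sq_y / n)


# Hypothetical checkpoints, for illustration only.
projected_pts = [(100.0, 200.0), (150.0, 250.0)]
measured_pts = [(103.0, 196.0), (147.0, 254.0)]
rmse_x, rmse_y = rmse_per_axis(projected_pts, measured_pts)
```

Reporting the two axes separately, as the table does, makes it easier to spot a directional bias that a single combined RMSE would hide.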

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Grammatikopoulos, L.; Papanagnou, A.; Venianakis, A.; Kalisperakis, I.; Stentoumis, C. An Effective Camera-to-Lidar Spatiotemporal Calibration Based on a Simple Calibration Target. Sensors 2022, 22, 5576. https://doi.org/10.3390/s22155576
