Abstract

While recent deep-learning-based stereo-matching networks have shown outstanding advances, some challenges remain unsolved. First, most state-of-the-art stereo models employ 3D convolutions for 4D cost volume aggregation, which limits their deployment in resource-limited mobile environments owing to heavy computation and memory consumption. Although some efficient networks exist, most of them still require too much computation to run in real time on mobile computing devices. Second, most stereo networks supervise cost volumes only indirectly, through a disparity regression loss computed after the softargmax function. This causes problems in ambiguous regions, such as object boundaries, because many unreasonable cost distributions can yield the same regressed disparity, which results in overfitting. A few works address this problem by generating an artificial cost distribution from the ground truth disparity value alone, which is insufficient to fully regularize the cost volume. To address these problems, we first propose an efficient multi-scale sequential feature fusion network (MSFFNet). Specifically, we connect multi-scale SFF modules in parallel with a cross-scale fusion function to generate a set of cost volumes with different scales. These cost volumes are then effectively combined using the proposed interlaced concatenation method. Second, we propose an adaptive cost-volume-filtering (ACVF) loss function that directly supervises our estimated cost volume. The proposed ACVF loss constrains the cost volume using two probability distributions: one generated from the ground truth disparity map and one estimated by a more accurate teacher network. Results of several experiments on representative stereo-matching datasets show that our proposed method is more efficient than previous methods.
Our network architecture has fewer parameters and generates reasonable disparity maps faster than existing state-of-the-art stereo models. Concretely, our network achieves 1.01 EPE with a runtime of 42 ms, 2.92 M parameters, and 97.96 G FLOPs on the Scene Flow test set. Compared with PSMNet, our method is 89% faster and 7% more accurate with 45% fewer parameters.

1. Introduction

Estimating depth from a stereo image pair has been a fundamental and classic computer vision problem for decades [1]. Stereo matching aims to estimate the correspondence between the pixels of a rectified stereo image pair. If a pixel at $(x, y)$ in the reference left image matches a pixel at $(x - d, y)$ in the target right image, the horizontal difference $d$ between the corresponding pixels is the disparity. Using the disparity $d$, the camera focal length $f$, and the distance between the cameras (baseline) $B$, the depth of the pixel can be calculated as $z = fB/d$. Because 3D depth information is essential for various real-world applications, including robot navigation [2], augmented/virtual reality (AR/VR) [3,4], autonomous driving [5], and network security [6,7], reliable real-time stereo matching on restricted hardware is important.
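The depth relation above can be checked numerically. The following is a minimal sketch; the focal length and baseline are hypothetical values chosen for round numbers, not parameters of any dataset used in this paper.

```python
def depth_from_disparity(d: float, f: float, baseline: float) -> float:
    """Depth z = f * B / d for a rectified stereo pair.

    d: disparity in pixels, f: focal length in pixels, baseline: B in metres.
    """
    if d <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return f * baseline / d


# Hypothetical camera: f = 700 px, B = 0.5 m
print(depth_from_disparity(35.0, 700.0, 0.5))  # 10.0
print(depth_from_disparity(70.0, 700.0, 0.5))  # 5.0
```

Doubling the disparity halves the depth; equivalently, a fixed disparity error causes a larger depth error for distant objects, whose disparities are small.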

Since the introduction of the seminal MC-CNN [8], convolutional neural networks (CNNs) have been used to learn strong feature representations [8,9] for computing the matching cost of corresponding patches in the input stereo image pair. Consequently, they achieved considerably higher accuracy than traditional stereo-matching algorithms. However, because most of them adopt handcrafted cost aggregation methods and are not fully end-to-end networks, they suffer from errors in ambiguous regions such as textureless regions.

DispNet [10] presented the first end-to-end stereo-matching network, which builds a 3D cost volume with a correlation layer followed by 2D convolution layers to estimate a disparity map, and showed a significant improvement in accuracy over previous patch-wise CNNs [8,9]. Instead of a 3D cost volume from a correlation layer, refs. [11,12] built a 4D cost volume by concatenating the left and right feature maps along the disparity levels. By processing this cost volume with a series of 3D convolution layers, they achieved better accuracy than 3D cost-volume-based methods. Although networks that process a 4D cost volume with 3D convolutions achieve state-of-the-art accuracy, their heavy computation and memory consumption prevent them from running in real time in mobile environments.
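To make the difference between the two cost-volume types concrete, the following NumPy sketch builds both from a pair of feature maps. It is an illustrative simplification, not the implementation of DispNet [10], GC-Net [11], or PSMNet [12]: the correlation is a channel-averaged dot product without a patch window, and positions where the shifted right feature would fall outside the image are left at zero.

```python
import numpy as np


def correlation_cost_volume(fl, fr, max_disp):
    """3D cost volume (D, H, W): channel-averaged dot product between left
    features and right features shifted by each candidate disparity."""
    c, h, w = fl.shape
    cost = np.zeros((max_disp, h, w), dtype=fl.dtype)
    for d in range(max_disp):
        # left column x is compared with right column x - d
        cost[d, :, d:] = (fl[:, :, d:] * fr[:, :, :w - d]).mean(axis=0)
    return cost


def concat_cost_volume(fl, fr, max_disp):
    """4D cost volume (2C, D, H, W): left and shifted right features stacked."""
    c, h, w = fl.shape
    cost = np.zeros((2 * c, max_disp, h, w), dtype=fl.dtype)
    for d in range(max_disp):
        cost[:c, d, :, d:] = fl[:, :, d:]
        cost[c:, d, :, d:] = fr[:, :, :w - d]
    return cost


rng = np.random.default_rng(0)
fl = rng.standard_normal((4, 3, 8))  # (C, H, W) left features
fr = rng.standard_normal((4, 3, 8))  # (C, H, W) right features
print(correlation_cost_volume(fl, fr, 5).shape)  # (5, 3, 8)
print(concat_cost_volume(fl, fr, 5).shape)       # (8, 5, 3, 8)
```

The 4D volume keeps all 2C feature channels for every disparity, which is why it needs (expensive) 3D convolutions; the correlation volume reduces each disparity to a single value, so 2D convolutions suffice.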

To address this problem, various efficient stereo-matching networks [13,14,15,16,17] have recently been proposed. Although these networks are significantly more efficient than previous networks, most of them still require too much computation to run in real time on mobile computing devices. Those that are light enough to run in mobile environments suffer a significant decrease in accuracy.

On the other hand, since GC-Net [11] proposed the softargmin function, which robustly calculates continuous disparity values with subpixel precision from a cost distribution, it has been adopted in most stereo-matching networks to regress a continuous disparity map. Most of these networks are trained end to end with a loss function defined on the difference between the predicted disparity map and the ground truth disparity map, so the cost distribution is learned only through indirect supervision. Because this indirect supervision admits many unreasonable cost distributions as long as the regressed disparity is correct, learning with such flexible cost volumes leads to overfitting [18]. Thus, direct supervision is required to regularize the cost volume toward a probability distribution that peaks at the true disparity, especially at pixels where a multi-modal probability distribution is predicted, such as object boundaries [19].
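The following toy example, with made-up five-bin distributions, illustrates why a loss on the regressed disparity alone supervises the cost volume only indirectly: a sharp unimodal distribution and an ambiguous bimodal one regress to exactly the same disparity, so a disparity loss cannot tell them apart.

```python
import numpy as np


def softargmax(prob):
    """Expected disparity sum_d d * p(d) for a probability vector over d = 0..D-1."""
    disparities = np.arange(prob.shape[-1])
    return float((prob * disparities).sum(axis=-1))


unimodal = np.array([0.0, 0.0, 1.0, 0.0, 0.0])  # all mass at d = 2
bimodal = np.array([0.5, 0.0, 0.0, 0.0, 0.5])   # mass split between d = 0 and d = 4

print(softargmax(unimodal), softargmax(bimodal))  # 2.0 2.0
```

Both distributions receive zero disparity loss if the true disparity is 2, even though the bimodal one places no probability near the true value.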

A few works address this problem. AcfNet [18] adds a direct constraint to the predicted cost volume by generating a unimodal probability distribution that peaks at the ground truth disparity and filtering the predicted cost volume with it. CDN [19] addresses the problem by directly taking the mode as the predicted disparity value, without a regression function such as the softargmin operation. However, these methods still regularize the cost volume insufficiently, because an artificial cost distribution generated only from the ground truth disparity value does not contain information such as the similarity among different disparities [20,21,22,23].

In this paper, to solve the problems mentioned above, we propose a new efficient network architecture called the Multi-scale Sequential Feature Fusion Network (MSFFNet) and a new loss function called the adaptive cost-volume-filtering (ACVF) loss for direct cost volume supervision. MSFFNet is built from the proposed Multi-scale Sequential Feature Fusion (MSFF) module, which connects SFF modules [17] of different scales in parallel to obtain an efficient and accurate multi-scale architecture. For higher efficiency, only the smallest scale in the MSFF module processes the entire disparity search range; the other scales process only the odd disparities of the full range. Cross-scale fusion [24] is applied at the end of the MSFF module to compensate for the information missing at each scale and to let the feature maps of different scales interact with each other. Finally, to effectively combine the cost volumes generated at different scales, we present an interlaced cost concatenation method.

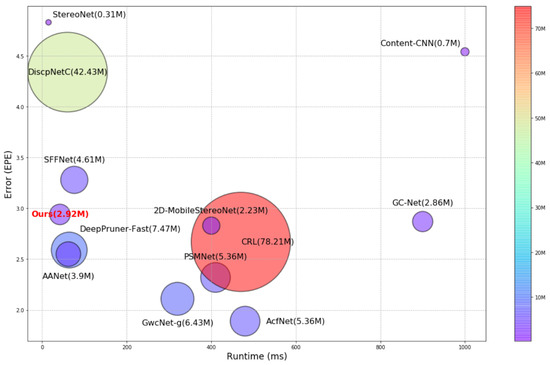

Moreover, the proposed ACVF loss constrains the estimated cost distribution using both the ground truth disparity map and the probability distribution map generated by a more accurate teacher network. Specifically, a unimodal distribution is generated from the ground truth disparity value [18], and the distribution generated by the teacher network is used for knowledge distillation [21,22,23], which transfers the dark knowledge of the cumbersome teacher network to our network, which acts as the student. However, the teacher network is inaccurate at some pixels, and these pixels can negatively affect the student network. To avoid such negative effects of distillation [22], the teacher's distribution is transferred adaptively, using the ratio between the errors of the teacher network and our network at each pixel. Because the proposed loss function requires no additional parameters or computation at inference, it provides the benefit of direct cost-volume supervision while maintaining the efficiency of our network. As shown in Figure 1, our network predicts reasonably accurate disparity maps with an extremely fast runtime and fewer parameters than other efficient stereo-matching networks.

Figure 1.

Visualization of runtime, accuracy, and number of parameters of stereo-matching networks, including Content-CNN [9], GC-Net [11], PSMNet [12], StereoNet [13], GwcNet-g [15], DeepPruner-Fast [26], AANet [16], AcfNet [18], DispNetC [10], CRL [27], SFFNet [17], 2D-MobileStereoNet [28], and our proposed method, on the KITTI 2015 leaderboard [25].

The main contributions of this paper are summarized as follows:

- We propose an efficient multi-scale stereo-matching network, MSFFNet, that connects multi-scale SFF modules in parallel and effectively generates a cost volume using the proposed interlaced cost concatenation method.

- We propose an adaptive cost-volume-filtering loss that adaptively filters the estimated cost volume using a ground truth disparity map and an accurate teacher network for direct supervision of the estimated cost volume.

- We achieved competitive results on the Scene Flow [10] and KITTI 2015 [25] test sets. The proposed MSFFNet has only 2.92 M parameters and a runtime of 42 ms, which shows that our method is efficient and reasonably accurate compared with other stereo-matching networks.

The remainder of this paper is organized as follows. Section 2 introduces related works. Section 3 describes the methodology and implementation of the proposed method in detail. Section 4 presents various experiments, including comparisons with previous methods and ablation studies. Finally, Section 5 concludes the paper.

2. Related Works

Our proposed method includes an efficient network architecture for stereo matching and a new loss function that filters the cost volume to increase accuracy. Thus, this section discusses related works on efficient stereo-matching networks and on cost volume filtering separately. Table 1 summarizes representative related works in terms of cost volume type, network architecture, cost volume filtering, and the datasets used for training, such as Scene Flow [10] and KITTI [25,29].

Table 1.

A comparison of relevant stereo-matching networks.

2.1. Efficient Stereo-Matching Networks

Since the first end-to-end stereo-matching network [10] was proposed, stereo-matching networks have usually generated a single-scale cost volume for matching cost computation. Refs. [10,27,33,34] constructed a 3D cost volume using a correlation operation between corresponding left and right features. Although much of the feature information useful for cost aggregation is lost through correlation, this approach has an advantage in computational cost because the resulting 3D cost volume is processed with 2D convolutions. Meanwhile, refs. [11,12] generated a 4D cost volume by concatenating two corresponding features and achieved higher accuracy than 3D cost-volume-based methods. However, 3D convolutions are required to process the 4D cost volume, leading to a significant increase in computing resources and runtime.

There is thus a trade-off between efficiency and accuracy in the choice of cost volume dimension, and various efficient stereo-matching networks have been studied extensively in recent years [14,16]. To process the 4D cost volume with 3D convolutions efficiently, several networks adopt a coarse-to-fine architecture that leverages multi-scale pyramid cost volumes. This approach can enhance accuracy without expensive computation and memory consumption. Moreover, multi-scale cost volumes make better use of the spatial information of the stereo image pair. Networks using multi-scale cost volumes can be classified into sequential and parallel methods according to how the multi-scale feature maps are connected.

Sequential networks [14,31,32] generate and aggregate the cost volume over the full disparity range only at the initial, coarsest scale. Then, to correct the errors of the disparity map from the previous scale, the succeeding networks warp the right feature map using that disparity map and process residuals that cover only a small offset range around it. The rough disparity map from the coarsest scale is thus iteratively refined, by adding the residual to the previous disparity, through the succeeding networks down the pyramid to the original resolution. Because the cost of aggregation with 3D convolutions is proportional to the height, width, and disparity range of the cost volume, all of which shrink with the scale, it grows cubically with the resolution of the pyramid level; restricting full-range aggregation to the coarsest scale therefore considerably reduces computation and memory consumption. However, the sequential method has the following disadvantages. Cost aggregation performed only at the first stage, with the smallest resolution, is insufficient to estimate accurate disparities in regions with sharp details. In addition, refinement over a limited offset range in the succeeding stages can propagate errors when an error is larger than the offset.
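A quick count makes the scaling argument concrete. The sketch below counts the cells (height × width × disparity) of a cost volume at several pyramid levels; the work of a 3D convolution with fixed kernel and channel sizes is proportional to this count. The 256 × 512 crop and the 192-pixel maximum disparity are borrowed from the training setup in Section 4.2 purely as example values.

```python
def cost_volume_cells(height, width, max_disp, scale_denominator):
    """Number of (disparity, y, x) cells of a cost volume at 1/scale_denominator
    resolution, where the disparity range shrinks together with the image."""
    k = scale_denominator
    return (height // k) * (width // k) * (max_disp // k)


for k in (4, 8, 16):
    print(k, cost_volume_cells(256, 512, 192, k))
# 4 393216
# 8 49152
# 16 6144
```

Each halving of resolution divides the count by eight, so aggregating the full disparity range at 1/16 scale is 64 times cheaper than at 1/4 scale.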

Parallel networks [16,30] perform aggregation and estimate a disparity map at each scale of the multi-scale cost volume. By integrating the cost volumes of all scales, these methods capture both robust global features and detailed local feature representations, and they use these rich representations to regularize cost volumes and predict disparity maps with high accuracy. Despite this accuracy advantage, they still require a large amount of computation because cost aggregation is performed at all scales, although the computation is lower than that of single-scale networks [10,17].

Our network efficiently combines the sequential and parallel methods. To make our network more efficient than other parallel networks, we process the entire disparity search range only at the initial, coarsest scale, similar to sequential methods, while the higher-resolution scales process only half of the full disparity range. In addition, using a new interlaced concatenation method, we effectively combine the multi-scale cost volumes into a single cost volume that covers the entire disparity range.

2.2. Cost Volume Filtering

Most deep-learning-based stereo-matching networks use loss functions based on the difference between the true and predicted disparities because they treat stereo matching as a regression problem. However, only a few methods supervise the cost volume directly. Because the disparity is regressed from the cost volume, learning the cost volume indirectly through the disparity regression loss easily causes overfitting [18,19], especially around edge regions.

To mitigate this problem, cost volume filtering, which imposes direct constraints on the cost volume, was first proposed in AcfNet [18]. AcfNet adds a cost-volume-filtering module that filters the estimated cost volume with a unimodal distribution peaking at the ground truth disparity, thereby supervising the cost volume directly. CDN [19] also addresses this problem by proposing a stereo-matching network architecture that can estimate arbitrary disparity values, so that the mode can be chosen directly as the disparity without a regression step.

Unlike refs. [18,19], which use cost distributions artificially generated from the ground truth disparity map, we directly supervise the cost volume through a loss function that includes two kinds of filtering: one using a cost volume generated from the ground truth disparity map, and one using a cost volume estimated by a more accurate teacher network via knowledge distillation.

3. Proposed Method

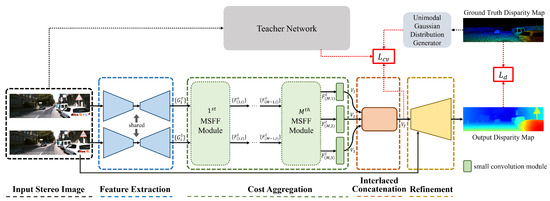

Figure 2 presents an overview of the proposed network. Given a rectified stereo image pair, each image is fed into a U-Net [35] feature extractor to generate multi-scale feature maps. Using these feature maps as inputs, a cost aggregation module aggregates the matching costs and generates multi-scale cost volumes; the proposed MSFF module generates them efficiently by connecting SFF [17] modules of different scales in parallel. The final cost volume for disparity regression is obtained by combining these cost volumes with the proposed interlaced concatenation method. Finally, an initial disparity map is regressed from the cost volume with the softargmax function, then hierarchically upsampled and refined with a refinement network [13] to produce the final disparity map. To train the network, we define a loss function consisting of a disparity regression loss and an adaptive cost-volume-filtering (ACVF) loss. To supervise the cost volume, the proposed ACVF loss uses both the ground truth disparity map and the probability distribution map generated by a more accurate teacher network. Each part is explained in detail in the following subsections.

Figure 2.

An overview of the proposed method. Paths drawn with dotted arrows are used only during training.

3.1. Feature Extractor

Given a rectified stereo image pair $I_l$ and $I_r$, each image is fed into a U-Net [35] feature extractor to generate feature maps $F_l^i, F_r^i \in \mathbb{R}^{C \times H_i \times W_i}$, respectively. Here, $i \in \{1, 2, 3\}$ is the scale index, and $W_i$ and $H_i$ are $1/2^{5-i}$ times the width and height of the original input image, respectively. The channel size $C$ is fixed to 32 for all scales. Similar to [14,16], $F_l^i$ and $F_r^i$ are extracted from different positions of the decoder of the feature extractor, so that the spatial resolutions of the feature maps for $i = 1, 2, 3$ are 1/16, 1/8, and 1/4 of the input image resolution, respectively.

3.2. Cost Aggregation

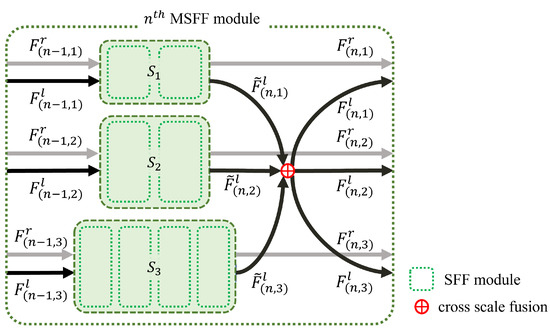

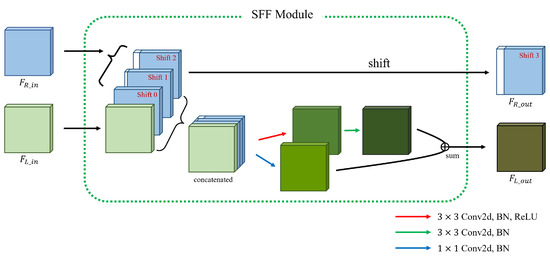

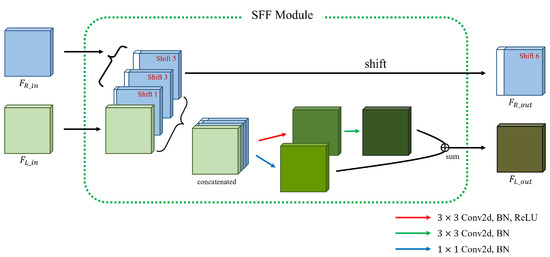

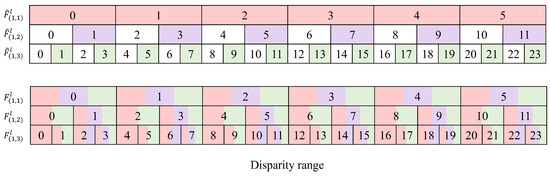

From the multi-scale feature maps $F_l^i$ and $F_r^i$ obtained by the feature extractor, the proposed cost aggregation module generates cost volumes with different spatial resolutions. To this end, we propose the MSFF module as the building block of the cost aggregation module. Concretely, the cost aggregation module consists of a series of $M$ MSFF modules followed by a small convolution layer. As shown in Figure 3, each MSFF module consists of SFF modules [17] at different scales and a cross-scale fusion operation that combines them so that they share information across scales. Specifically, the $n$th MSFF module takes the left and right feature maps output by the $(n-1)$th module and generates new left and right feature maps for the next MSFF module; the inputs of the first MSFF module are the feature maps $F_l^i$ and $F_r^i$ from the feature extractor. Inside the $n$th MSFF module, the SFF branch at each scale $i$ generates intermediate left and right feature maps from its inputs. To avoid heavy computation and memory consumption, only the branch at the smallest scale ($i = 1$) processes the entire disparity range, whereas the branches at $i = 2$ and $i = 3$ process only the odd disparities of the full search range. In more detail, only the smallest-scale branch processes the entire disparity range, which amounts to 1/16 of the maximum disparity range, through two SFF modules [17]. Thus, as shown in Figure 4, the input right feature map is shifted to the right by only one pixel at a time in these SFF modules. However, as shown in Figure 5, unlike the original SFF module [17], the SFF modules of the two larger scales process only odd disparities by shifting their right feature maps 2 pixels to the right at a time. This halves the computation and parameters of these branches compared with processing the entire disparity range at those scales.

Figure 3.

Details of the proposed MSFF module.

Figure 4.

Details of a single SFF module in the smallest-scale (1/16) branch.

Figure 5.

Details of a single SFF module in the 1/8-scale and 1/4-scale branches.

Next, the output feature map $F^{i,n}$ of scale $i$ in the $n$th MSFF module is obtained by fusing the intermediate feature maps $\tilde{F}^{k,n}$ ($k = 1, 2, 3$) produced by the SFF branches with the cross-scale fusion function $\mathcal{F}_i$, which is defined as

$$F^{i,n} = \mathcal{F}_i\left(\tilde{F}^{1,n}, \tilde{F}^{2,n}, \tilde{F}^{3,n}\right) = \sum_{k=1}^{3} f_{k \to i}\left(\tilde{F}^{k,n}\right), \tag{1}$$

where $f_{k \to i}$ is a function for the adaptive fusion of multi-scale features, similar to [24], defined as

$$f_{k \to i} = \begin{cases} I, & k = i, \\ (k - i) \text{ stride-2 convolutions}, & k > i, \\ \text{bilinear upsampling by } 2^{\,i-k} \text{ followed by a convolution}, & k < i, \end{cases} \tag{2}$$

where $I$ is the identity function. For $k > i$, a series of $(k - i)$ convolutions with stride 2 downsamples the feature map by a factor of $2^{k-i}$ to make the scales consistent; for $k < i$, bilinear upsampling makes the scales consistent and is followed by a convolution. Note that $\mathcal{F}_i$ fuses the features from different scales so that they share information. By fusing these feature maps, information about disparities that are absent at one scale is compensated for by the other scales. That is, each feature map obtains global and robust semantic information covering broader spatial and disparity ranges from the smaller-scale features, and precise local information for fine details from the larger-scale features.
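The structure of the fusion function described above can be sketched in NumPy as follows. This is only a shape-level illustration under stated assumptions: the learned stride-2 convolutions are replaced by 2 × 2 average pooling, the bilinear upsampling followed by a convolution is replaced by nearest-neighbour repetition, and the scales are indexed from 0 (coarsest, 1/16) to 2 (finest, 1/4) rather than from 1 to 3.

```python
import numpy as np


def downsample2(x):
    """Stand-in for one stride-2 convolution: 2 x 2 average pooling,
    (C, H, W) -> (C, H/2, W/2)."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))


def upsample(x, factor):
    """Stand-in for bilinear upsampling plus convolution: nearest-neighbour repetition."""
    return x.repeat(factor, axis=1).repeat(factor, axis=2)


def fuse_to_scale(features, i):
    """Cross-scale fusion into scale i: sum over k of f_{k->i}(features[k]).

    features[k] has shape (C, H_k, W_k); index 0 is the coarsest scale and each
    higher index doubles the spatial resolution.
    """
    out = np.zeros_like(features[i], dtype=float)
    for k, x in enumerate(features):
        if k == i:
            y = x                          # identity
        elif k > i:
            y = x
            for _ in range(k - i):         # (k - i) stride-2 steps
                y = downsample2(y)
        else:
            y = upsample(x, 2 ** (i - k))  # coarser -> finer
        out += y
    return out


rng = np.random.default_rng(0)
feats = [rng.standard_normal((32, 4 * 2 ** k, 8 * 2 ** k)) for k in range(3)]
print([fuse_to_scale(feats, i).shape for i in range(3)])
```

Summation (rather than concatenation) keeps the channel count fixed at every scale, which is what lets the fused maps feed the next MSFF module unchanged.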

Figure 6 illustrates the disparity range covered in the first MSFF module, where white bins represent absent disparity values that are not included in the feature maps. Owing to the scale difference, a disparity value $d$ at scale $i = 1$ (1/16) corresponds to $2d$ at scale $i = 2$ (1/8) and to $4d$ at scale $i = 3$ (1/4). In the first MSFF module, the feature map from the smallest-scale branch covers six consecutive disparity values at the 1/16 scale, because each SFF module in this branch covers three disparity values and two SFF modules are connected in series. The 1/8-scale branch covers the corresponding disparity range at its own scale using two SFF modules, but without the even disparity values. Similarly, the 1/4-scale branch covers the corresponding range at the 1/4 scale with only odd disparity values. As shown in Figure 6, after cross-scale fusion, no disparity value is absent from any fused feature map $F^{i,1}$, because the missing even disparities are compensated by fusing information from the other scales.
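The compensation argument can be checked with plain set arithmetic. The sketch assumes, for illustration only, that in the first MSFF module the 1/16-scale branch covers disparities 0 to 5 and that the finer branches cover only the odd disparities of the corresponding ranges at their own scales (0 to 11 at 1/8 and 0 to 23 at 1/4); these exact ranges are assumptions.

```python
def covered_disparities(coarse_range=6):
    """Disparities present at the 1/8 and 1/4 scales before and after fusion.

    Assumption (illustrative only): the 1/16 branch covers 0..coarse_range-1,
    and the 1/8 and 1/4 branches cover only the odd disparities of the
    corresponding 2x and 4x ranges.
    """
    r16 = set(range(coarse_range))
    r8 = {d for d in range(2 * coarse_range) if d % 2 == 1}
    r4 = {d for d in range(4 * coarse_range) if d % 2 == 1}
    # A disparity d at 1/16 corresponds to 2d at 1/8 and 4d at 1/4;
    # a disparity d at 1/8 corresponds to 2d at 1/4.
    fused8 = r8 | {2 * d for d in r16}
    fused4 = r4 | {2 * d for d in r8} | {4 * d for d in r16}
    return r8, fused8, r4, fused4


r8, fused8, r4, fused4 = covered_disparities()
print(sorted(set(range(12)) - r8))      # [0, 2, 4, 6, 8, 10]
print(sorted(set(range(12)) - fused8))  # []
print(sorted(set(range(24)) - fused4))  # []
```

Under these assumptions, the even disparities missing at the finer scales are exactly the ones supplied by the coarser scales.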

Figure 6.

Details of multi-scale features before and after cross-scale fusion in the disparity domain.

Note that, owing to the property of the SFF module [17], the left output feature map $F_l^{i,M}$ of the last MSFF module contains the aggregated cost distribution. Thus, the output cost volume $C_i$ at each scale is obtained from $F_l^{i,M}$ using a series of two convolutions that make the channel dimension of $C_i$ equal to the number of disparities processed at that scale. Specifically, the number of channels of $C_1$ equals the full disparity range at its own scale, whereas those of $C_2$ and $C_3$ are half of the disparity ranges at their scales.

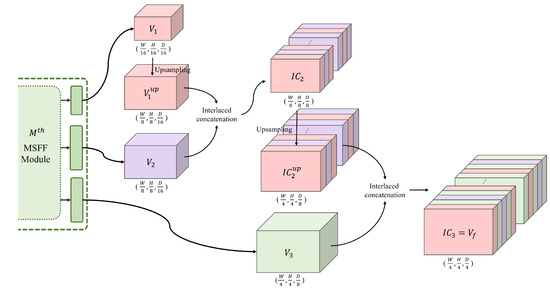

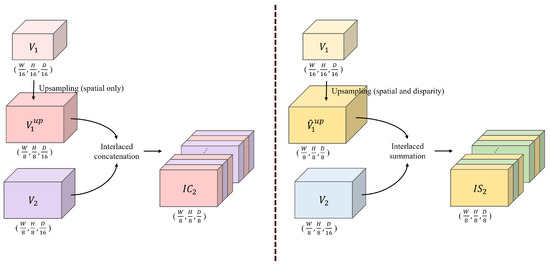

3.3. Interlaced Cost Volume

We propose an interlaced concatenation method to combine the multi-scale cost volumes $C_i \in \mathbb{R}^{D_i \times H_i \times W_i}$ and produce the final cost volume for disparity regression. Here, $i$ is the scale index, and $W_i$ and $H_i$ are $1/2^{5-i}$ times the width and height of the input image, respectively. $D_1$, $D_2$, and $D_3$ are $1/16$, $1/16$, and $1/8$ times the maximum disparity search range $D_{\max}$, respectively. As shown in Figure 7, $C_1^{\uparrow}$ is obtained by upsampling the cost volume $C_1$ only in the spatial domain to match the spatial resolution of $C_2$, while keeping the channel dimension. Then, $C_1^{\uparrow}$ and $C_2$ are interlaced along the channel direction to generate an interlaced cost volume $\hat{C}_2 \in \mathbb{R}^{2D_2 \times H_2 \times W_2}$, whose value at spatial position $(x, y)$ and channel $c$ is defined by

$$\hat{C}_2(c, y, x) = \begin{cases} C_1^{\uparrow}(n, y, x), & c = 2n, \\ C_2(n, y, x), & c = 2n + 1, \end{cases} \tag{3}$$

where $n$ denotes the channel index of $C_1^{\uparrow}$ and $C_2$. With this interlaced concatenation, the disparity channels from the coarser scale are placed at the even disparities. The final cost volume $C_{\text{final}} \in \mathbb{R}^{(D_{\max}/4) \times H_3 \times W_3}$, which is also interlaced, is generated in the same manner from $\hat{C}_2$ and $C_3$.
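The interlaced concatenation can be sketched in NumPy as follows. Nearest-neighbour repetition stands in for the spatial upsampling (the interpolation mode is an assumption here), and the example sizes assume a maximum disparity of 192 and a 256 × 512 input, which gives cost volumes with 12, 12, and 24 channels at the 1/16, 1/8, and 1/4 scales.

```python
import numpy as np


def interlace(coarse, fine):
    """Interlaced concatenation of two cost volumes (channels-first layout).

    coarse: (n, H/2, W/2); channel k is disparity k at the coarser scale,
            i.e. the even disparity 2k at the finer scale.
    fine:   (n, H, W); channel k is the odd disparity 2k + 1.
    Returns (2n, H, W): even channels from the upsampled coarse volume, odd from fine.
    """
    n, h, w = fine.shape
    if coarse.shape[0] != n:
        raise ValueError("both volumes must have the same number of channels")
    # Spatial-only upsampling by 2; the channel (disparity) dimension is unchanged.
    up = coarse.repeat(2, axis=1).repeat(2, axis=2)
    if up.shape != (n, h, w):
        raise ValueError("coarse volume must be half the spatial size of fine")
    out = np.empty((2 * n, h, w), dtype=fine.dtype)
    out[0::2] = up    # channel 2k     <- coarse channel k (even disparities)
    out[1::2] = fine  # channel 2k + 1 <- fine channel k   (odd disparities)
    return out


rng = np.random.default_rng(0)
c1 = rng.standard_normal((12, 16, 32))   # 1/16 scale, all 12 disparities
c2 = rng.standard_normal((12, 32, 64))   # 1/8 scale, 12 odd disparities
c3 = rng.standard_normal((24, 64, 128))  # 1/4 scale, 24 odd disparities
c2_hat = interlace(c1, c2)
final = interlace(c2_hat, c3)
print(c2_hat.shape, final.shape)  # (24, 32, 64) (48, 64, 128)
```

Applying the same function twice yields a 48-channel volume at 1/4 resolution, one channel per disparity in 0 to 47, without any branch having processed all 48 disparities at full cost.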

Figure 7.

Process of interlaced concatenation.

3.4. Disparity Regression and Refinement

For each pixel, $C_{\text{final}}$ contains a $(D_{\max}/4)$-length vector of matching costs for the disparity range $\{0, 1, \ldots, D_{\max}/4 - 1\}$. This vector is converted to a probability vector using the softmax operation, where the probability of disparity $d$ is defined as

$$p(d) = \frac{\exp(c_d)}{\sum_{d'=0}^{D_{\max}/4 - 1} \exp(c_{d'})}, \tag{4}$$

where $c_d$ is the matching cost in $C_{\text{final}}$ for disparity $d$. To estimate an initial disparity map $\hat{D}$ that contains a continuous disparity value for each pixel, the following softargmax function [11] is applied:

$$\hat{d} = \sum_{d=0}^{D_{\max}/4 - 1} d \cdot p(d), \tag{5}$$

where $p(d)$ is the probability of candidate disparity $d$. The estimated disparity is thus a weighted sum, as shown in Equation (5). This regression-based formulation produces more robust disparity values with subpixel precision than classification-based stereo-matching methods [11].
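The softmax and softargmax steps above can be applied to a whole cost volume at once. The NumPy sketch below assumes a channels-first (D, H, W) layout and subtracts the per-pixel maximum before exponentiating, a standard numerical-stability step that does not change the softmax result.

```python
import numpy as np


def disparity_from_cost(cost):
    """(D, H, W) matching costs -> (H, W) continuous disparity via softmax + softargmax."""
    shifted = cost - cost.max(axis=0, keepdims=True)  # stability only
    prob = np.exp(shifted)
    prob /= prob.sum(axis=0, keepdims=True)           # softmax over disparities
    d = np.arange(cost.shape[0], dtype=float).reshape(-1, 1, 1)
    return (prob * d).sum(axis=0)                     # expected disparity


cost = np.full((8, 1, 1), -50.0)
cost[2] = cost[3] = 0.0  # two equally strong candidates
print(disparity_from_cost(cost))  # close to 2.5, a sub-pixel estimate
```

Because the output is an expectation over disparity indices, equally strong neighbouring candidates produce a fractional disparity, which is the source of the sub-pixel precision.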

To obtain a final disparity map $D$ with increased accuracy, we apply a refinement module to the initial disparity map, similar to [13]. In this module, the initial disparity map is upsampled to the same scale as the (correspondingly resized) left input image and concatenated with it as the input of the refinement module, which then applies a series of convolution layers to generate the final disparity map.

3.5. Loss Function

As shown in Figure 2, to train the network, we employ two loss functions: a disparity regression loss and an ACVF loss. The loss functions are described in detail in the following subsections.

3.5.1. Disparity Regression Loss

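The first loss function is the disparity regression loss $\mathcal{L}_{disp}$, which is adopted in most networks [12,16,26] and is defined as

$$\mathcal{L}_{disp} = \frac{1}{N} \sum_{j=1}^{N} \mathrm{smooth}_{L_1}\left(d^{gt}_j - d_j\right), \tag{6}$$

where $d^{gt}_j$ and $d_j$ are the ground truth disparity and the estimated disparity of the $j$th pixel, respectively, and $N$ is the total number of pixels in the ground truth disparity map $D^{gt}$ and in $D$. The smooth $L_1$ loss function is widely used owing to its robustness and low sensitivity to outliers, and is defined as

$$\mathrm{smooth}_{L_1}(x) = \begin{cases} 0.5x^2, & |x| < 1, \\ |x| - 0.5, & \text{otherwise}. \end{cases} \tag{7}$$

Note that $\mathcal{L}_{disp}$ in Equation (6) is fully differentiable and useful for smooth disparity estimation.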
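A NumPy sketch of the disparity regression loss above is given below. The optional `valid` mask is an assumption added for sparse ground truth such as KITTI, where pixels without ground truth would be excluded from the mean.

```python
import numpy as np


def smooth_l1(x):
    """Elementwise smooth L1: 0.5 * x^2 if |x| < 1, else |x| - 0.5."""
    ax = np.abs(x)
    return np.where(ax < 1.0, 0.5 * x ** 2, ax - 0.5)


def disparity_loss(d_gt, d_pred, valid=None):
    """Mean smooth-L1 disparity error over all pixels, or over `valid` ones if given."""
    err = smooth_l1(d_gt - d_pred)
    return float(err.mean() if valid is None else err[valid].mean())


print(disparity_loss(np.array([1.0, 3.0]), np.array([0.5, 1.0])))  # 0.8125
```

The quadratic branch gives small gradients near zero error, while the linear branch caps the gradient magnitude at 1 for large errors, which is the robustness property mentioned above.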

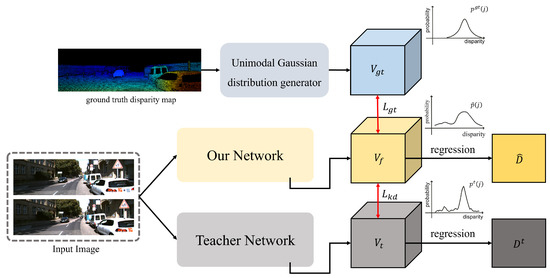

3.5.2. Adaptive Cost-Volume-Filtering Loss

As shown in Figure 8, to directly supervise the estimated cost volume, we filter it using two types of cost volumes: $P^{gt}$, generated from the ground truth disparity map, and $P^{t}$, generated by a more accurate teacher network. We use both because they are generated in different ways and serve different purposes. $P^{gt}$ is a unimodal distribution that peaks at the ground truth disparity value; thus, it helps filter the estimated cost volume to reduce bias errors. In contrast, because $P^{t}$ is the cost volume estimated by the teacher network, it helps reduce variance errors [22].

Figure 8.

Process of adaptive cost volume filtering.

Cost Volume Filtering Using Ground Truth Disparity

To filter the cost volume using the ground truth disparity map, we generate a unimodal cost volume similar to [18]. Given a ground truth disparity map $D^{gt}$, we first downsample it by a factor of four to generate $D^{gt}_{1/4}$, which has the same spatial resolution as $C_{\text{final}}$. Then, the unimodal distribution value for pixel $j$ and disparity $d$ is defined using the softmax operation as follows:

$$P^{gt}_j(d) = \frac{\exp\left(-|d - d^{gt}_j| / \sigma\right)}{\sum_{d'} \exp\left(-|d' - d^{gt}_j| / \sigma\right)}, \tag{8}$$

where $d^{gt}_j$ is the ground truth disparity of the $j$th pixel of $D^{gt}_{1/4}$, and $\sigma$ is the variance, which controls the sharpness of the distribution. According to Equation (8), this Laplacian unimodal distribution peaks near the true disparity value.

With the estimated probability distribution map $\hat{P}$, obtained from the cost volume $C_{\text{final}}$ by the softmax operation, and the probability distribution $P^{gt}$ generated from the ground truth disparity map, we define a unimodal cost-volume-filtering loss $\mathcal{L}_{uni}$ as the KL divergence between them:

$$\mathcal{L}_{uni} = \frac{1}{N} \sum_{j=1}^{N} \sum_{d} P^{gt}_j(d) \log \frac{P^{gt}_j(d)}{\hat{P}_j(d)}, \tag{9}$$

where $P^{gt}_j(d)$ and $\hat{P}_j(d)$ are the probability values of $P^{gt}$ and $\hat{P}$, respectively, for the $j$th pixel and disparity $d$, and $N$ is the total number of pixels in $D^{gt}_{1/4}$ and $\hat{P}$.
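The unimodal target and its KL-divergence loss can be sketched in NumPy as below. Two details are assumptions not stated in the text: the ground truth is downsampled by strided (nearest) sampling, and its values are divided by 4 so that they are expressed in disparity units of the 1/4-scale cost volume. A small epsilon keeps the logarithms finite.

```python
import numpy as np


def downsample_gt(d_gt, factor=4):
    """Hypothetical helper: strided subsampling of a full-resolution disparity map,
    with values divided by `factor` to express them in 1/factor-scale pixels."""
    return d_gt[::factor, ::factor] / factor


def laplacian_unimodal(d_gt, num_disp, sigma):
    """P_gt(d) proportional to exp(-|d - d_gt| / sigma), normalized over d; d_gt: (H, W)."""
    d = np.arange(num_disp).reshape(-1, 1, 1)
    logits = -np.abs(d - d_gt[None]) / sigma
    logits -= logits.max(axis=0, keepdims=True)  # stability; softmax unchanged
    p = np.exp(logits)
    return p / p.sum(axis=0, keepdims=True)


def kl_per_pixel(p, q, eps=1e-12):
    """sum_d p(d) * log(p(d) / q(d)) for (D, H, W) distributions -> (H, W)."""
    return (p * (np.log(p + eps) - np.log(q + eps))).sum(axis=0)


def unimodal_filtering_loss(p_est, d_gt_quarter, sigma):
    """Mean KL divergence between the unimodal target and the estimated distribution."""
    p_gt = laplacian_unimodal(d_gt_quarter, p_est.shape[0], sigma)
    return float(kl_per_pixel(p_gt, p_est).mean())


d_q = downsample_gt(np.full((8, 8), 12.0))   # (2, 2) map of 3.0
p_gt = laplacian_unimodal(d_q, 8, sigma=1.0)
uniform = np.full((8, 2, 2), 1.0 / 8)
print(unimodal_filtering_loss(uniform, d_q, 1.0))  # positive: uniform is far from the target
```

The loss is zero only when the estimated distribution matches the Laplacian target, so, unlike the disparity loss, it penalizes the bimodal case shown in the introduction.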

Cost Volume Filtering Using Knowledge Distillation

Using only the Laplacian unimodal cost distribution generated from the ground truth disparity is not sufficient to effectively regularize the cost volume of the proposed network. For some pixels at object boundaries, a multi-modal distribution would be more appropriate to help the network estimate an accurate disparity [19]. However, an artificially generated distribution does not contain useful information about non-true disparities, such as the correlation among different disparities. Unlike [18,19], which use artificially generated distributions, we perform knowledge distillation [21] to regularize the cost volume. The distribution generated by the more accurate teacher network contains dark knowledge even for non-true disparities, which helps further regularize the cost distributions at such pixels [20,21,22,23]. Thus, given a probability distribution map $P^{t}$ from the teacher network, the cost-volume-filtering loss using knowledge distillation via a weighted KL divergence is defined as follows:

$$\mathcal{L}_{kd} = \frac{1}{N} \sum_{j=1}^{N} w_j \sum_{d} P^{t}_j(d) \log \frac{P^{t}_j(d)}{\hat{P}_j(d)}, \tag{10}$$

where $P^{t}_j(d)$ and $\hat{P}_j(d)$ are the probability values of the teacher network and the estimated cost volume at pixel $j$ for disparity $d$, respectively. Here, $w_j$ is a weighting factor of pixel $j$, defined similar to [22] as follows:

$$w_j = 1 - \exp\left(-\frac{e^{s}_j}{e^{t}_j}\right), \quad e^{t}_j = \left|d^{t}_j - d^{gt}_j\right|, \quad e^{s}_j = \left|d^{s}_j - d^{gt}_j\right|, \tag{11}$$

where $d^{t}_j$ and $d^{s}_j$ are the disparity values of the $j$th pixel obtained from $P^{t}$ and $\hat{P}$, respectively, by the softargmax operation. The absolute differences $e^{t}_j$ and $e^{s}_j$ are computed at pixel $j$ with respect to the ground truth disparity map $D^{gt}_{1/4}$, so the weight $w_j$ is determined by the ratio of the errors of the two networks. This weighting factor avoids the negative effects of distillation at pixels where the teacher network is less accurate than our network (the student). At the $j$th pixel, when the teacher network predicts a disparity with a higher error than that of the proposed network, the weight decreases, and vice versa. Thus, the knowledge of the teacher network is transferred adaptively according to the ratio between the errors of the teacher and our network at each pixel [22].
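The weighted distillation term can be sketched in NumPy under explicit assumptions: the per-pixel weight takes the form $w_j = 1 - \exp(-e^{s}_j / e^{t}_j)$, following the weighted-soft-label idea of [22] with disparity errors in place of classification losses; a small epsilon guards against division by zero when the teacher is exact; and the weight is treated as a constant (in an autograd framework it would typically be detached from the graph). The `one_hot` helper is hypothetical and only builds toy inputs.

```python
import numpy as np


def soft_argmax(p):
    """Expected disparity for (D, H, W) probabilities -> (H, W)."""
    d = np.arange(p.shape[0]).reshape(-1, 1, 1)
    return (p * d).sum(axis=0)


def adaptive_weight(p_teacher, p_student, d_gt, eps=1e-6):
    """Assumed weight w = 1 - exp(-e_s / e_t): small where the teacher is worse."""
    e_t = np.abs(soft_argmax(p_teacher) - d_gt)
    e_s = np.abs(soft_argmax(p_student) - d_gt)
    return 1.0 - np.exp(-e_s / (e_t + eps))


def kd_filtering_loss(p_teacher, p_student, d_gt, eps=1e-12):
    """Per-pixel weighted KL(P_t || P_hat), averaged over pixels."""
    w = adaptive_weight(p_teacher, p_student, d_gt)
    kl = (p_teacher * (np.log(p_teacher + eps) - np.log(p_student + eps))).sum(axis=0)
    return float((w * kl).mean())


def one_hot(d, num_disp=5):
    """Hypothetical helper: a (num_disp, 1, 1) distribution with all mass at d."""
    p = np.zeros((num_disp, 1, 1))
    p[d] = 1.0
    return p


gt = np.array([[2.0]])
print(adaptive_weight(one_hot(2), one_hot(4), gt))  # teacher exact: weight near 1
print(adaptive_weight(one_hot(4), one_hot(2), gt))  # student exact: weight 0
```

With this weighting, a pixel where the teacher is wrong and the student is already right contributes nothing to the distillation loss, which is the behaviour described above.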

Now, the ACVF loss is defined by summing $\mathcal{L}_{uni}$ and $\mathcal{L}_{kd}$ as follows:

$$\mathcal{L}_{ACVF} = \lambda_{uni}\,\mathcal{L}_{uni} + \lambda_{kd}\,\mathcal{L}_{kd}, \tag{12}$$

where $\lambda_{uni}$ and $\lambda_{kd}$ are the weighting factors for $\mathcal{L}_{uni}$ and $\mathcal{L}_{kd}$, respectively. Note that $\mathcal{L}_{ACVF}$ combines two cost-volume-filtering losses: $\mathcal{L}_{uni}$, the loss between $P^{gt}$ and $\hat{P}$, and $\mathcal{L}_{kd}$, the loss between $P^{t}$ and $\hat{P}$. This loss is reminiscent of the soft-label loss used with knowledge distillation for classification, where methods usually combine a cross-entropy loss on a one-hot ground truth label with a knowledge distillation loss from a teacher network to reduce both bias and variance errors [22]. Unlike in classification, to regress continuous disparity values we use a unimodal distribution generated from the ground truth disparity instead of a one-hot distribution. In addition, for each pixel, we adaptively weight the knowledge distillation loss according to the ratio between the errors of the teacher and student networks, similar to [22].

3.5.3. Total Loss Function

To train the proposed network end to end, we employ two types of losses: the disparity regression loss in Equation (6) and the ACVF loss in Equation (12). The final total loss combines them as

$$\mathcal{L}_{total} = \mathcal{L}_{disp} + \lambda\,\mathcal{L}_{ACVF}, \tag{13}$$

where $\lambda$ is a hyperparameter that balances the two losses.

4. Experiments and Results

4.1. Datasets and Evaluation Metrics

To evaluate the performance of the proposed method, we conducted various comparative experiments using two stereo datasets, Scene Flow and KITTI 2015. In addition, ablation studies were conducted on the Scene Flow dataset with various settings.

- Scene Flow [10]: A large-scale synthetic dataset with dense ground truth disparity maps. It contains 35,454 training image pairs and 4370 test image pairs, which are used directly for training and evaluation. The end-point error (EPE), the average disparity error in pixels of the estimated disparity map, was used as the evaluation metric.

- KITTI: KITTI 2015 [25] and KITTI 2012 [29] are real-world datasets of outdoor scenes captured from a driving car. KITTI 2015 contains 200 training stereo image pairs with sparse ground truth disparity maps and 200 test image pairs. KITTI 2012 contains 194 training image pairs with sparse ground truth disparity maps and 195 test image pairs. The 3-pixel error (3PE), the percentage of pixels with a disparity error larger than three pixels, was used as the evaluation metric.
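Both metrics are simple to compute. The NumPy sketch below assumes, as is common for KITTI, that a ground truth value of 0 marks pixels without ground truth. Note that the official KITTI 2015 outlier metric (D1) additionally requires the error to exceed 5% of the true disparity; this sketch follows the simpler 3-pixel definition given above.

```python
import numpy as np


def epe(d_pred, d_gt, valid):
    """End-point error: mean absolute disparity error (pixels) over valid pixels."""
    return float(np.abs(d_pred - d_gt)[valid].mean())


def three_pixel_error(d_pred, d_gt, valid, thresh=3.0):
    """Percentage of valid pixels whose absolute disparity error exceeds `thresh`."""
    err = np.abs(d_pred - d_gt)[valid]
    return float(100.0 * (err > thresh).mean())


d_gt = np.array([10.0, 20.0, 30.0, 0.0])  # 0 marks "no ground truth" (sparse map)
d_pred = np.array([11.0, 25.0, 30.0, 7.0])
valid = d_gt > 0
print(epe(d_pred, d_gt, valid))                # 2.0
print(three_pixel_error(d_pred, d_gt, valid))  # about 33.3 (one of three valid pixels)
```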

4.2. Implementation Details

We trained the network end-to-end using the PyTorch [36] framework, with Adam [37] (, ) as the optimizer. For the Scene Flow dataset, we trained the network from scratch for 120 epochs with a batch size of 12 on all training pairs (35,454 image pairs) on an NVIDIA GeForce RTX GPU, and evaluated it on the test set (4370 image pairs). For preprocessing, the raw input images were randomly cropped to 256 × 512 and normalized using ImageNet [38] statistics (mean: [0.485, 0.456, 0.406]; std: [0.229, 0.224, 0.225]). The learning rate started at 0.001 and was halved at the 20th, 40th, and 60th epochs. This dataset was used for the ablation studies and for pretraining before KITTI fine-tuning. For KITTI, we first fine-tuned the Scene Flow-pretrained model on the combined KITTI 2012 and 2015 training sets for 1000 epochs, and then fine-tuned it for an additional 1000 epochs on the KITTI 2015 training set. For the combined KITTI 2012 and 2015 training sets, input images were randomly cropped to 336 × 960; the initial learning rate was 0.0001 and was halved at the 200th, 400th, and 600th epochs. The same normalization as for Scene Flow was used for preprocessing. The loss weights in Equation (12) were set to and , and the value in Equation (13) was set to . The maximum disparity at the original input image size was set to 192. The number M of MSFF modules in MSFFNet is set to , which covers the full disparity range of 192 at of the input image size, because a single MSFF module covers a disparity range of 24. For the teacher network, we used GwcNet-g [15], and its cost volume was taken before the softargmax regression that produces the final disparity map.
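The schedule and preprocessing above can be sketched as follows. In PyTorch the step schedule corresponds to `torch.optim.lr_scheduler.MultiStepLR(optimizer, milestones=[20, 40, 60], gamma=0.5)`; the plain-Python `step_lr` below reproduces the same rule so that it can be checked without a GPU. Cropping the same window from both views and the disparity map keeps horizontal disparities valid. The helper `num_msff_modules` only restates the module-count arithmetic; its `downsample` argument is deliberately left without a default because the working resolution is not recoverable here, and any value passed to it is illustrative. All function names are hypothetical.

```python
import math
import numpy as np

# ImageNet statistics quoted in the text.
IMAGENET_MEAN = np.array([0.485, 0.456, 0.406])
IMAGENET_STD = np.array([0.229, 0.224, 0.225])

def step_lr(epoch, base_lr=1e-3, milestones=(20, 40, 60), gamma=0.5):
    # Halve the rate at each milestone; epochs are 0-indexed, matching
    # torch.optim.lr_scheduler.MultiStepLR stepped once per epoch.
    return base_lr * gamma ** sum(epoch >= m for m in milestones)

def random_crop_and_normalize(left, right, disp, crop_hw=(256, 512), rng=None):
    """Crop the same random window from a stereo pair and its disparity map,
    then normalize the images with ImageNet statistics.
    left, right: (H, W, 3) uint8; disp: (H, W) float."""
    rng = np.random.default_rng() if rng is None else rng
    h, w = disp.shape
    ch, cw = crop_hw
    y = int(rng.integers(0, h - ch + 1))
    x = int(rng.integers(0, w - cw + 1))

    def norm(img):
        patch = img[y:y + ch, x:x + cw].astype(np.float64) / 255.0
        return ((patch - IMAGENET_MEAN) / IMAGENET_STD).transpose(2, 0, 1)  # (3, ch, cw)

    return norm(left), norm(right), disp[y:y + ch, x:x + cw]

def num_msff_modules(max_disp, downsample, range_per_module=24):
    # HYPOTHETICAL helper: modules needed so that stacked 24-level ranges cover
    # max_disp / downsample disparity levels at the working resolution.
    return math.ceil(max_disp / downsample / range_per_module)
```

For the KITTI stage, the same helpers would be called with `base_lr=1e-4`, `milestones=(200, 400, 600)`, and `crop_hw=(336, 960)`.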

4.3. Comparative Result

4.3.1. Scene Flow Dataset

We compared the performance of our method with that of other stereo-matching networks on the Scene Flow test set. Table 2 presents the comparative results of our network and various 2D/3D convolution-based networks, where “Ours” denotes the EPE of the disparity map predicted by the proposed network. As shown in Table 2, MSFFNet outperforms all other 2D convolution-based models [10,17,27,28] and some 3D convolution-based models [12,13]. Among the 2D convolution networks, MSFFNet achieves a lower EPE than SFFNet [17], which introduced the SFF module. Among the 3D convolution networks, MSFFNet is more accurate than both PSMNet [12] and StereoNet [13].

Table 2.

Comparison of EPEs of various methods on the Scene Flow test set.

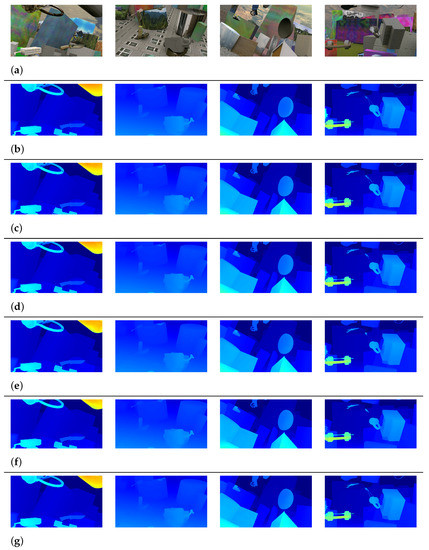

Figure 9 shows a qualitative comparison of MSFFNet and other networks [12,17,26,28] on the Scene Flow test set. Our network predicts disparity maps comparable to those of other 2D/3D convolution networks in most regions.

Figure 9.

Qualitative comparison on the Scene Flow test set. (a) Input image. (b) Ground-truth disparity map. (c) PSMNet [12]. (d) DeepPruner-Fast [26]. (e) SFFNet [17]. (f) 2D-MobileStereoNet [28]. (g) Ours.

4.3.2. KITTI-2015 Dataset

We compared the performance of our method with other methods on the KITTI 2015 stereo benchmark [25]. Table 3 compares various indicators, including runtime, 3PE, number of parameters, and FLOPs. In this table, bg, fg, and all denote the percentage of error pixels averaged over the background pixels, foreground pixels, and all ground truth pixels, respectively, and Noc (%) and All (%) denote the percentage of error pixels in non-occluded regions and over all pixels, respectively. As shown in Table 3, compared with most 3D convolution-based networks, our method runs faster and requires fewer parameters and FLOPs while achieving comparable accuracy. In particular, it runs faster and has fewer parameters than both AANet [16], which connects multi-scale networks in parallel, and AcfNet [18], which directly supervises the predicted cost volume. Among the 2D convolution-based methods, MSFFNet is the fastest and requires the fewest FLOPs while achieving comparable accuracy. Compared with SFFNet [17] in particular, our method is better on every indicator: it is more accurate and requires fewer parameters and FLOPs.

Table 3.

Quantitative comparison results on the KITTI 2015 test set.

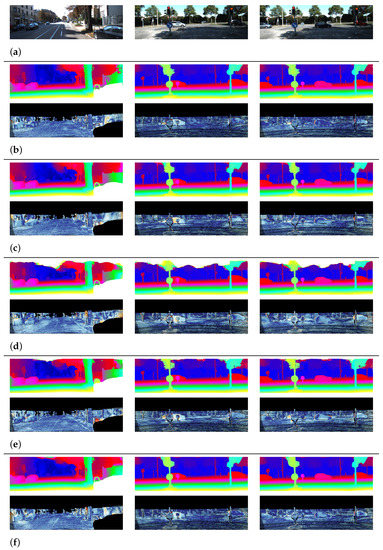

Figure 10 shows qualitative comparison results of MSFFNet and other networks [12,17,26,28] on the KITTI 2015 benchmark [25]. All results were obtained from the KITTI 2015 evaluation server. For each network, the predicted disparity map and its error map are shown for the left input image. In the error maps, pixels closer to red indicate larger errors. This comparison shows that our method generates disparity maps comparable to those of other methods across various scenes.

Figure 10.

Qualitative comparison on the KITTI-2015 test set. (a) Input Image. (b) PSMNet [12]. (c) DeepPruner-Fast [26]. (d) SFFNet [17]. (e) 2D-MobileStereoNet [28]. (f) Ours.

4.4. Ablation Study

As shown in Table 4, we conducted various experiments with a controlled setup on the Scene Flow dataset to verify the effectiveness of the proposed method. We first examined the effectiveness of the interlaced concatenation method and then that of the ACVF loss. All methods listed in Table 4 use the disparity regression loss in Equation (6) as the baseline loss function for training.

Table 4.

Ablation study results of the network architecture and the adaptive cost-volume-filtering loss on the Scene Flow test set.

4.4.1. Effect of Interlaced Cost Concatenation

To investigate the effectiveness of the proposed interlaced concatenation method defined in Equation (3), we conducted two experiments on the Scene Flow dataset, one with the interlaced concatenation method (“Concatenation”) and one with a simple summation method (“Summation”), as listed in Table 4. Figure 11 illustrates the details of the two methods. The proposed concatenation method upsamples the cost volume to obtain only in the spatial domain, without changing the channel dimension. Subsequently, interlaced concatenation of the upsampled and is performed using Equation (3) to generate the fused cost volume . In contrast, the naive summation method upsamples the cost volume in both the spatial and disparity domains to produce , which has the same size as the cost volume . Then, is obtained by simple summation, defined as

where the values at odd disparities in are computed by summing the elements of and those of at odd disparities, and the values at even disparities of are taken directly from the interpolated cost volume . The “Summation” variant uses instead of to build the cost volumes.
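The two variants compared in Table 4 can be contrasted with a small NumPy sketch. Equation (3) is not reproduced here, so the following details are assumptions: the coarse volume has half the spatial resolution of the fine volume, both have the same number D of disparity channels, the coarse costs occupy the even slots of the fused 2D-channel volume, and upsampling is nearest-neighbour in space and linear along disparity. All function names are hypothetical.

```python
import numpy as np

def upsample_spatial(cost, factor=2):
    # Nearest-neighbour spatial upsampling of a (D, H, W) volume; the disparity
    # (channel) dimension is left unchanged. A real network may use bilinear.
    return cost.repeat(factor, axis=1).repeat(factor, axis=2)

def interlaced_concat(coarse, fine):
    """'Concatenation': coarse (D, H/2, W/2) and fine (D, H, W) -> (2D, H, W).
    ASSUMED ordering: coarse costs fill even disparity slots, fine costs odd ones."""
    up = upsample_spatial(coarse)
    out = np.empty((2 * fine.shape[0],) + fine.shape[1:], dtype=fine.dtype)
    out[0::2] = up
    out[1::2] = fine
    return out

def upsample_disparity_linear(cost):
    # (D, H, W) -> (2D, H, W): even slots copy the input, odd slots average
    # neighbouring disparities (clamped at the last one).
    nxt = np.concatenate([cost[1:], cost[-1:]], axis=0)
    out = np.empty((2 * cost.shape[0],) + cost.shape[1:], dtype=cost.dtype)
    out[0::2] = cost
    out[1::2] = 0.5 * (cost + nxt)
    return out

def interlaced_sum(coarse, fine):
    """'Summation' baseline: upsample coarse spatially and along disparity,
    then add the fine costs at the odd disparity slots."""
    out = upsample_disparity_linear(upsample_spatial(coarse))
    out[1::2] += fine
    return out
```

In this toy form, every odd slot produced by `interlaced_sum` mixes the fine cost with an average of two neighbouring coarse costs, whereas `interlaced_concat` passes the fine costs through unchanged, which mirrors the smoothing argument given in this subsection for the lower EPE of “Concatenation”.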

Figure 11.

Details of interlaced concatenation and interlaced summation.

Table 4 shows that the proposed concatenation method achieves a lower EPE (1.098) than the simple summation method (1.174) on the Scene Flow test set. Interpolating along the disparity dimension smooths the cost at each odd disparity, which distorts the cost of when the two are summed. In contrast, the proposed concatenation method preserves the disparity channels of each cost volume and therefore produces more accurate results.

4.4.2. Effect of Adaptive Cost-Volume-Filtering Loss

The proposed ACVF loss in Equation (12) consists of two components: using the ground truth disparity map and using the teacher network. To verify the effect of each component, we added the components to the training objective one at a time. Starting from the baseline, in which MSFFNet is trained with only the disparity regression loss in Equation (6), we added each component of the ACVF loss individually and finally added both. As shown in Table 4, each component improves the EPE on the Scene Flow test set relative to the baseline. Because adds a constraint to the predicted cost volume that reduces bias errors, using only reduces the EPE by . Meanwhile, helps reduce variance errors, and using it reduces the EPE by . Adaptively combining both components achieves the best EPE of , which is lower by than that of the baseline.

5. Conclusions

In this paper, we presented MSFFNet, an efficient network for stereo matching. The network consists of a series of MSFF modules that efficiently connect multi-scale SFF modules in parallel to generate multi-scale cost volumes. In addition, we presented an interlaced concatenation method that combines multi-scale cost volumes, each covering only part of the disparity range, into a final cost volume that covers the full disparity range. To train the network, we proposed an ACVF loss function that regularizes the estimated cost volume using both the ground truth disparity map and the probability distribution generated by a more accurate teacher network. This loss adaptively combines the two terms based on the error ratio between the disparity maps of the teacher network and the proposed network. The results of various comparative experiments show that the proposed method is more efficient than previous methods: our network has fewer parameters and runs faster while maintaining reasonable accuracy. For example, our network achieves 1.01 EPE with a 42 ms runtime, 2.92 M parameters, and 97.96 G FLOPs on the Scene Flow test set. It is 89% faster and 7% more accurate, with 45% fewer parameters, than PSMNet [12], a representative 3D convolution-based method, on the Scene Flow test set. Compared with SFFNet [17], a representative 2D convolution-based method, our method achieves a 44% faster runtime, 10% better accuracy, and 36% fewer parameters.

Despite these improvements in various aspects, the proposed network is limited in that its accuracy is lower than that of some heavy 3D convolution-based networks. In future work, we plan to improve the accuracy of our method by training it with a more accurate teacher model. We believe that our method sheds light on the development of practical stereo-matching networks that generate accurate depth information in real time. In addition, our method can be adopted in a variety of applications that require real-time 3D depth information.

Author Contributions

Conceptualization, S.J. and Y.S.H.; software, S.J.; validation, Y.S.H.; investigation, S.J.; writing—original draft preparation, S.J.; writing—review and editing, Y.S.H.; supervision, Y.S.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the BK21 FOUR program of the National Research Foundation of Korea funded by the Ministry of Education (NRF5199991014091) and in part by the Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (2022R1F1A1065702).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors would like to thank the anonymous reviewers for their constructive comments and recommendations.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| CNN | Convolutional Neural Network |
| SFF module | Sequential Feature Fusion module |
| SFFNet | Sequential Feature Fusion Network |
| MSFF module | Multi-scale Sequential Feature Fusion module |
| MSFFNet | Multi-scale Sequential Feature Fusion Network |
| ACVF | Adaptive Cost Volume Filtering |
| EPE | End Point Error |
| 3PE | 3-Pixel Error |
| FLOPs | Floating-Point Operations |

References

1. Scharstein, D.; Szeliski, R. A taxonomy and evaluation of dense two-frame stereo correspondence algorithms. Int. J. Comput. Vis. 2002, 47, 7–42.

2. Huang, J.; Tang, S.; Liu, Q.; Tong, M. Stereo matching algorithm for autonomous positioning of underground mine robots. In Proceedings of the International Conference on Robots & Intelligent System, Changsha, China, 26–27 May 2018; pp. 40–43.

3. Zenati, N.; Zerhouni, N. Dense stereo matching with application to augmented reality. In Proceedings of the IEEE International Conference on Signal Processing and Communications, Dubai, United Arab Emirates, 24–27 November 2007; pp. 1503–1506.

4. El Jamiy, F.; Marsh, R. Distance estimation in virtual reality and augmented reality: A survey. In Proceedings of the IEEE International Conference on Electro Information Technology, Brookings, SD, USA, 20–22 May 2019; pp. 63–68.

5. Chen, C.; Seff, A.; Kornhauser, A.; Xiao, J. DeepDriving: Learning affordance for direct perception in autonomous driving. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 2722–2730.

6. Wong, A.; Mundhra, M.; Soatto, S. Stereopagnosia: Fooling stereo networks with adversarial perturbations. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021; Volume 35, pp. 2879–2888.

7. Kwon, H.; Kim, Y. BlindNet backdoor: Attack on deep neural network using blind watermark. Multimed. Tools Appl. 2022, 81, 6217–6234.

8. Zbontar, J.; LeCun, Y. Stereo matching by training a convolutional neural network to compare image patches. J. Mach. Learn. Res. 2016, 17, 2287–2318.

9. Luo, W.; Schwing, A.G.; Urtasun, R. Efficient deep learning for stereo matching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 5695–5703.

10. Mayer, N.; Ilg, E.; Hausser, P.; Fischer, P.; Cremers, D.; Dosovitskiy, A.; Brox, T. A large dataset to train convolutional networks for disparity, optical flow, and scene flow estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4040–4048.

11. Kendall, A.; Martirosyan, H.; Dasgupta, S.; Henry, P.; Kennedy, R.; Bachrach, A.; Bry, A. End-to-end learning of geometry and context for deep stereo regression. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 66–75.

12. Chang, J.R.; Chen, Y.S. Pyramid stereo matching network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 5410–5418.

13. Khamis, S.; Fanello, S.; Rhemann, C.; Kowdle, A.; Valentin, J.; Izadi, S. StereoNet: Guided hierarchical refinement for real-time edge-aware depth prediction. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 573–590.

14. Wang, Y.; Lai, Z.; Huang, G.; Wang, B.H.; Van Der Maaten, L.; Campbell, M.; Weinberger, K.Q. Anytime stereo image depth estimation on mobile devices. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 5893–5900.

15. Guo, X.; Yang, K.; Yang, W.; Wang, X.; Li, H. Group-wise correlation stereo network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3273–3282.

16. Xu, H.; Zhang, J. AANet: Adaptive aggregation network for efficient stereo matching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 1959–1968.

17. Jeong, J.; Jeon, S.; Heo, Y.S. An efficient stereo matching network using sequential feature fusion. Electronics 2021, 10, 1045.

18. Zhang, Y.; Chen, Y.; Bai, X.; Yu, S.; Yu, K.; Li, Z.; Yang, K. Adaptive unimodal cost volume filtering for deep stereo matching. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 12926–12934.

19. Garg, D.; Wang, Y.; Hariharan, B.; Campbell, M.; Weinberger, K.Q.; Chao, W.L. Wasserstein distances for stereo disparity estimation. Adv. Neural Inf. Process. Syst. 2020, 33, 22517–22529.

20. Yuan, L.; Tay, F.E.; Li, G.; Wang, T.; Feng, J. Revisiting knowledge distillation via label smoothing regularization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 3903–3911.

21. Hinton, G.; Vinyals, O.; Dean, J. Distilling the knowledge in a neural network. arXiv 2015, arXiv:1503.02531.

22. Zhou, H.; Song, L.; Chen, J.; Zhou, Y.; Wang, G.; Yuan, J.; Zhang, Q. Rethinking soft labels for knowledge distillation: A bias-variance tradeoff perspective. arXiv 2021, arXiv:2102.00650.

23. Zhao, B.; Cui, Q.; Song, R.; Qiu, Y.; Liang, J. Decoupled knowledge distillation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 11953–11962.

24. Sun, K.; Xiao, B.; Liu, D.; Wang, J. Deep high-resolution representation learning for human pose estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5693–5703.

25. Menze, M.; Geiger, A. Object scene flow for autonomous vehicles. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3061–3070.

26. Duggal, S.; Wang, S.; Ma, W.C.; Hu, R.; Urtasun, R. DeepPruner: Learning efficient stereo matching via differentiable PatchMatch. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 4384–4393.

27. Pang, J.; Sun, W.; Ren, J.S.; Yang, C.; Yan, Q. Cascade residual learning: A two-stage convolutional neural network for stereo matching. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Venice, Italy, 22–29 October 2017; pp. 887–895.

28. Shamsafar, F.; Woerz, S.; Rahim, R.; Zell, A. MobileStereoNet: Towards lightweight deep networks for stereo matching. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 2417–2426.

29. Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.

30. Shen, Z.; Dai, Y.; Rao, Z. MSMD-Net: Deep stereo matching with multi-scale and multi-dimension cost volume. arXiv 2020, arXiv:2006.12797.

31. Gao, Q.; Zhou, Y.; Li, G.; Tong, T. Compact StereoNet: Stereo disparity estimation via knowledge distillation and compact feature extractor. IEEE Access 2020, 8, 192141–192154.

32. Mao, Y.; Liu, Z.; Li, W.; Dai, Y.; Wang, Q.; Kim, Y.T.; Lee, H.S. UASNet: Uncertainty adaptive sampling network for deep stereo matching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 6311–6319.

33. Liang, Z.; Feng, Y.; Guo, Y.; Liu, H.; Chen, W.; Qiao, L.; Zhou, L.; Zhang, J. Learning for disparity estimation through feature constancy. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2811–2820.

34. Yang, G.; Zhao, H.; Shi, J.; Deng, Z.; Jia, J. SegStereo: Exploiting semantic information for disparity estimation. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 636–651.

35. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

36. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An imperative style, high-performance deep learning library. Adv. Neural Inf. Process. Syst. 2019, 32, 8026–8037.

37. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. In Proceedings of the International Conference on Learning Representations (Poster), San Diego, CA, USA, 7–9 May 2015.

38. Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

39. Zhang, F.; Prisacariu, V.; Yang, R.; Torr, P.H. GA-Net: Guided aggregation net for end-to-end stereo matching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 185–194.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).