Automated Chicago Classification for Esophageal Motility Disorder Diagnosis Using Machine Learning

Abstract

1. Introduction

1.1. Background

1.2. Related Works

1.3. Research Gap

1.4. Contribution

2. Materials and Methods

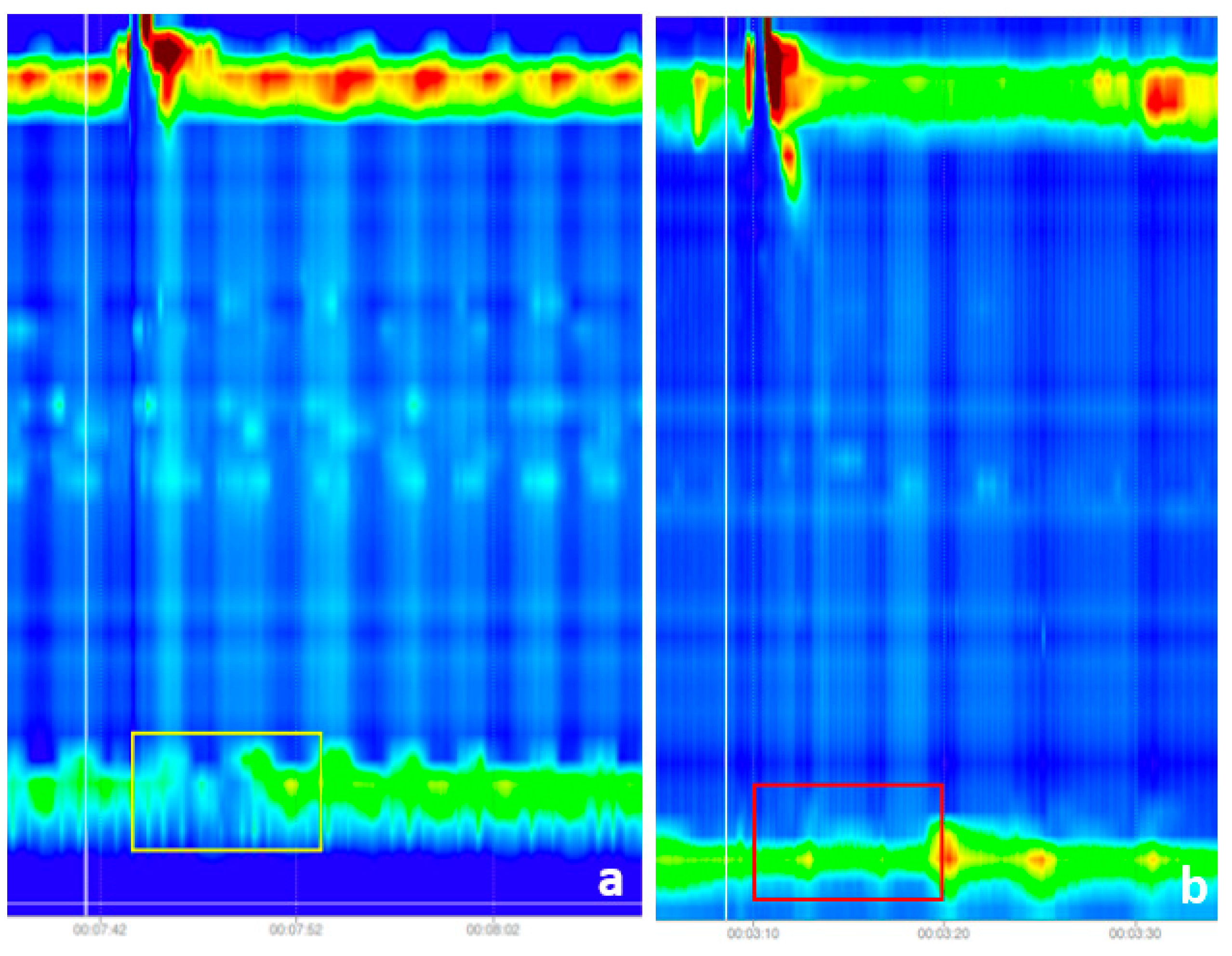

2.1. Raw Data Analysis

- Panesophageal pressurization (n = 256)

- Premature contractions (n = 27)

- Weak contractions (n = 54)

- Fragmented contractions (n = 58)

- DCI (distal contractile integral) greater than 8000 mmHg·cm·s (n = 21)

- Failed peristalsis (n = 1119)
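Tallying the class counts above makes the imbalance explicit. The six classes total 1,535 images. Failed peristalsis alone accounts for about 73% of them, while premature contractions and DCI > 8000 mmHg·cm·s each account for less than 2%. The short Python sketch below only restates the listed counts. The dictionary keys are illustrative names, and nothing in it describes how the authors handled the imbalance.

```python
# Number of swallow images per class, as listed in Section 2.1.
CLASS_COUNTS = {
    "Panesophageal pressurization": 256,
    "Premature contractions": 27,
    "Weak contractions": 54,
    "Fragmented contractions": 58,
    "DCI > 8000 mmHg·cm·s": 21,
    "Failed peristalsis": 1119,
}


def class_shares(counts):
    """Return each class's fraction of the total number of images."""
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()}


if __name__ == "__main__":
    shares = class_shares(CLASS_COUNTS)
    print(f"Total images: {sum(CLASS_COUNTS.values())}")
    # Print the classes from most to least frequent.
    for name, share in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
        print(f"{name:30s} {share:6.1%}")
```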

2.2. Input Image Preprocessing

Algorithm 1: Decision tree for HRM classification

- Image pre-processing and input dataset preparation: in this step, we removed noise from the images by cropping out the unusable regions, selected the region of interest (especially for the IRP classification), and scaled and normalized the images to match the input requirements of the two CNN architectures, DenseNet121 and InceptionV3.

- Feature extraction: in this step, we used the DenseNet121 model to extract the features for the swallowing disorder classification task and the InceptionV3 model to extract the features for the IRP classification.

- Classification of the images: in this step, the custom fully connected layers that we added on top of the DenseNet121 and InceptionV3 models classify the extracted features. More information can be found in the next paragraphs.

- Decision tree for the Chicago classification: a custom decision tree that works on batches of images (10 images per batch) and takes as input the IRP class and the swallowing disorder class. Its output is the diagnosed esophageal motility disorder. A detailed description of this decision tree is given in Algorithm 1.
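The pre-processing step can be sketched as follows, assuming the HRM plots are available as NumPy arrays. The text does not specify the crop box, the resize method, or the normalization scheme. The crop box, nearest-neighbour resizing, and min-max scaling to [0, 1] used here are therefore illustrative choices. The sizes 224 × 224 and 299 × 299 are the default input sizes of the Keras DenseNet121 and InceptionV3 implementations and may differ from the sizes the authors used.

```python
import numpy as np

# Default input sizes (height, width) of the Keras DenseNet121 and InceptionV3
# implementations; the sizes used in the study are not stated.
INPUT_SIZE = {"densenet121": (224, 224), "inceptionv3": (299, 299)}


def crop(image, top, bottom, left, right):
    """Keep only the region of interest: rows [top, bottom), columns [left, right)."""
    return image[top:bottom, left:right]


def resize_nearest(image, height, width):
    """Nearest-neighbour resize of an H x W (x C) array to height x width."""
    rows = np.arange(height) * image.shape[0] // height
    cols = np.arange(width) * image.shape[1] // width
    # Broadcasting the row and column indices selects a height x width grid.
    return image[rows[:, None], cols]


def min_max_normalize(image):
    """Scale pixel values linearly to [0, 1]; a constant image maps to zeros."""
    image = image.astype(np.float32)
    lo, hi = image.min(), image.max()
    if hi == lo:
        return np.zeros_like(image)
    return (image - lo) / (hi - lo)


def preprocess(image, roi, backbone):
    """Crop to a (hypothetical) ROI box, resize for the backbone, then normalize."""
    height, width = INPUT_SIZE[backbone]
    return min_max_normalize(resize_nearest(crop(image, *roi), height, width))
```

For example, `preprocess(plot, (40, 560, 80, 720), "inceptionv3")` returns a 299 × 299 array, with any channel axis preserved, whose values lie in [0, 1]. The ROI box in this call is a made-up value.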

3. Results

3.1. Solution Pipeline

- Achalasia type I: if no images were classified as panesophageal pressurization

- Achalasia type II: if at least 2 images were classified as panesophageal pressurization

- Achalasia type III: if at least 3 images were classified as premature contractions

- EGJ outflow obstruction: if none of the above rules applies

- Distal esophageal spasm: if at least 3 images were classified as premature contractions

- Hypercontractile esophagus: if at least 2 images were classified as DCI greater than 8000 mmHg·cm·s

- Ineffective esophageal motility: if at least 5 images were classified as weak contractions

- Fragmented peristalsis: if at least 5 images were classified as fragmented contractions

- Normal esophageal motility: if none of the above rules applies
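The rules above can be sketched as a small function that counts the per-image predictions in one batch of 10 and returns a diagnosis. Two details are assumptions because the list does not state them. First, following the Chicago classification, the achalasia subtypes and EGJ outflow obstruction are considered only when the IRP is classified as elevated, and the remaining disorders only when it is normal. Second, the rules are checked in a fixed order, with the achalasia type III rule placed first in its branch. Without that ordering, a batch with no panesophageal pressurization would always be labeled type I, and type III would be unreachable. The string labels are hypothetical names for the classes in Section 2.1, plus a `"normal"` label for swallows that fall in none of them.

```python
from collections import Counter
from typing import Sequence

BATCH_SIZE = 10  # the decision tree works on batches of 10 images


def chicago_diagnosis(irp_elevated: bool, swallow_labels: Sequence[str]) -> str:
    """Map one batch of per-image swallow predictions to a motility diagnosis.

    Labels are hypothetical: "pressurization", "premature", "weak",
    "fragmented", "dci_over_8000", "failed", and "normal".
    """
    if len(swallow_labels) != BATCH_SIZE:
        raise ValueError(f"expected {BATCH_SIZE} labels, got {len(swallow_labels)}")
    counts = Counter(swallow_labels)  # labels absent from the batch count as 0

    if irp_elevated:
        # Assumed branch for elevated IRP: achalasia subtypes and EGJ outflow obstruction.
        if counts["premature"] >= 3:
            return "Achalasia type III"
        if counts["pressurization"] >= 2:
            return "Achalasia type II"
        if counts["pressurization"] == 0:
            return "Achalasia type I"
        return "EGJ outflow obstruction"

    # Assumed branch for normal IRP: peristaltic disorders, checked in the listed order.
    if counts["premature"] >= 3:
        return "Distal esophageal spasm"
    if counts["dci_over_8000"] >= 2:
        return "Hypercontractile esophagus"
    if counts["weak"] >= 5:
        return "Ineffective esophageal motility"
    if counts["fragmented"] >= 5:
        return "Fragmented peristalsis"
    return "Normal esophageal motility"
```

For example, a batch with a normal IRP in which six images are predicted as weak contractions maps to ineffective esophageal motility.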

3.2. Metrics

- Accuracy: The ratio of correct classifications to the total number of instances. The automatic classification made by the neural network was compared with the diagnosis of human experts.

- Precision: The fraction of positive classifications that are correct, i.e., true positives divided by the sum of true and false positives.

- Recall: The fraction of actual positives in the dataset that are correctly detected, i.e., true positives divided by the sum of true positives and false negatives.

- F1-Score: The harmonic mean of Precision and Recall.

- Confusion Matrix: A table that summarizes the predictions of a classifier by counting the correct and incorrect predictions separately for each class.
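A minimal, dependency-free sketch of how these metrics follow from a confusion matrix is shown below. It assumes that rows hold the true (expert) class and columns the predicted class. Precision, recall, and F1 are computed per class, treating that class as the positive one. Libraries such as scikit-learn offer equivalent functions; the sketch simply spells out the definitions.

```python
def metrics_from_confusion(matrix):
    """Compute accuracy and per-class (precision, recall, F1) from a square
    confusion matrix whose rows are true classes and columns predicted classes."""
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    if total == 0:
        raise ValueError("confusion matrix is empty")
    correct = sum(matrix[k][k] for k in range(n))  # diagonal = correct predictions

    per_class = []
    for k in range(n):
        tp = matrix[k][k]
        fp = sum(matrix[i][k] for i in range(n)) - tp  # predicted k, true class differs
        fn = sum(matrix[k]) - tp                        # true class k, predicted otherwise
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        # F1 is the harmonic mean of precision and recall.
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        per_class.append((precision, recall, f1))
    return correct / total, per_class
```

For the 3-class matrix `[[8, 1, 1], [0, 9, 1], [2, 0, 8]]`, accuracy is 25/30, and class 0 has a precision and recall of 0.8 each.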

3.3. Integrated Relaxation Pressure Classification Results

- Accuracy: 96.87%

- Precision: 100.00%

- Recall: 80.00%

- F1-score: 88.88%
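As a consistency check, the F1-score can be recovered from the reported precision $P$ and recall $R$ as their harmonic mean:

```latex
F_1 = \frac{2PR}{P + R} = \frac{2 \times 1.00 \times 0.80}{1.00 + 0.80} = \frac{1.60}{1.80} \approx 0.889
```

This agrees with the reported 88.88% to within rounding.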

3.4. Swallowing Disorders Classification

3.5. Esophageal Motility Disorders Classification

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Pandolfino, J.E.; Fox, M.R.; Bredenoord, A.J.; Kahrilas, P.J. High-resolution manometry in clinical practice: Utilizing pressure topography to classify oesophageal motility abnormalities. Neurogastroenterol. Motil. Off. J. Eur. Gastrointest. Motil. Soc. 2009, 21, 796–806.

- Fox, M.R.; Sweis, R.; Yadlapati, R.; Pandolfino, J.; Hani, A.; Defilippi, C.; Jan, T.; Rommel, N. Chicago classification version 4.0© technical review: Update on standard high-resolution manometry protocol for the assessment of esophageal motility. Neurogastroenterol. Motil. Off. J. Eur. Gastrointest. Motil. Soc. 2021, 33, e14120.

- Kou, W.; Carlson, D.A.; Baumann, A.J.; Donnan, E.; Luo, Y.; Pandolfino, J.E.; Etemadi, M. A deep-learning-based unsupervised model on esophageal manometry using variational autoencoder. Artif. Intell. Med. 2021, 112, 102006.

- Kou, W.; Carlson, D.A.; Baumann, A.J.; Donnan, E.N.; Schauer, J.M.; Etemadi, M.; Pandolfino, J.E. A multi-stage machine learning model for diagnosis of esophageal manometry. Artif. Intell. Med. 2022, 124, 102233.

- Kou, W.; Galal, G.O.; Klug, M.W.; Mukhin, V.; Carlson, D.A.; Etemadi, M.; Kahrilas, P.J.; Pandolfino, J.E. Deep learning-based artificial intelligence model for identifying swallow types in esophageal high-resolution manometry. Neurogastroenterol. Motil. 2021, 34, e14290.

- Jungheim, M.; Busche, A.; Miller, S.; Schilling, N.; Schmidt-Thieme, L.; Ptok, M. Calculation of upper esophageal sphincter restitution time from high resolution manometry data using machine learning. Physiol. Behav. 2016, 165, 413–424.

- Lee, T.H.; Lee, J.S.; Hong, S.J.; Lee, J.S.; Jeon, S.R.; Kim, W.J.; Kim, H.G.; Cho, J.Y.; Kim, J.O.; Cho, J.H.; et al. High-resolution manometry: Reliability of automated analysis of upper esophageal sphincter relaxation parameters. Turk. J. Gastroenterol. Off. J. Turk. Soc. Gastroenterol. 2014, 25, 473–480.

- Czako, Z.; Surdea-Blaga, T.; Sebestyen, G.; Hangan, A.; Dumitrascu, D.L.; David, L.; Chiarioni, G.; Savarino, E.; Popa, S.L. Integrated Relaxation Pressure Classification and Probe Positioning Failure Detection in High-Resolution Esophageal Manometry Using Machine Learning. Sensors 2021, 22, 253.

- Kim, J.H.; Kim, S.E.; Cho, Y.K.; Lim, C.H.; Park, M.I.; Hwang, J.W.; Jang, J.S.; Oh, M.; Motility Study Club of Korean Society of Neurogastroenterology and Motility. Factors Determining the Inter-observer Variability and Diagnostic Accuracy of High-resolution Manometry for Esophageal Motility Disorders. J. Neurogastroenterol. Motil. 2018, 24, 506.

- Fox, M.R.; Pandolfino, J.E.; Sweis, R.; Sauter, M.; Abreu Y Abreu, A.T.; Anggiansah, A.; Bogte, A.; Bredenoord, A.J.; et al. Inter-observer agreement for diagnostic classification of esophageal motility disorders defined in high-resolution manometry. Dis. Esophagus Off. J. Int. Soc. Dis. Esophagus 2015, 28, 711–719.

- Bogte, A.; Bredenoord, A.J.; Oors, J.; Siersema, P.D.; Smout, A.J. Reproducibility of esophageal high-resolution manometry. Neurogastroenterol. Motil. Off. J. Eur. Gastrointest. Motil. Soc. 2011, 23, e271–e276.

- Yu, X.; Zeng, N.; Liu, S.; Zhang, Y.D. Utilization of DenseNet201 for diagnosis of breast abnormality. Mach. Vis. Appl. 2019, 30, 1135–1144.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- Lin, M.; Chen, Q.; Yan, S. Network in network. arXiv 2013, arXiv:1312.4400.

- Lu, J.; Behbood, V.; Hao, P.; Zuo, H.; Xue, S.; Zhang, G. Transfer learning using computational intelligence: A survey. Knowl.-Based Syst. 2015, 80, 14–23.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems 25, Lake Tahoe, NV, USA, 3–6 December 2012.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Li, C.; Liu, Z.; Ren, J.; Wang, W.; Xu, J. A Feature Optimization Approach Based on Inter-Class and Intra-Class Distance for Ship Type Classification. Sensors 2020, 20, 5429.

- Jell, A.; Kuttler, C.; Ostler, D.; Hüser, N. How to Cope with Big Data in Functional Analysis of the Esophagus. Visc. Med. 2020, 36, 439–442.

- Frigo, A.; Costantini, M.; Fontanella, C.G.; Salvador, R.; Merigliano, S.; Carniel, E.L. A Procedure for the Automatic Analysis of High-Resolution Manometry Data to Support the Clinical Diagnosis of Esophageal Motility Disorders. IEEE Trans. Biomed. Eng. 2018, 65, 1476–1485.

- Wang, Z.; Hou, M.; Yan, L.; Dai, Y.; Yin, Y.; Liu, X. Deep learning for tracing esophageal motility function over time. Comput. Methods Programs Biomed. 2021, 207, 106212.

- Mielens, J.D.; Hoffman, M.R.; Ciucci, M.R.; McCulloch, T.M.; Jiang, J.J. Application of classification models to pharyngeal high-resolution manometry. J. Speech Lang. Hear. Res. JSLHR 2012, 55, 892–902.

- Geng, Z.; Hoffman, M.R.; Jones, C.A.; McCulloch, T.M.; Jiang, J.J. Three-dimensional analysis of pharyngeal high-resolution manometry data. Laryngoscope 2013, 123, 1746–1753.

- Hoffman, M.R.; Mielens, J.D.; Omari, T.I.; Rommel, N.; Jiang, J.J.; McCulloch, T.M. Artificial neural network classification of pharyngeal high-resolution manometry with impedance data. Laryngoscope 2013, 123, 713–720.

| Author (Year) | Number of Patients | Characteristics | Main Purpose | Outcomes | Technology |
|---|---|---|---|---|---|
| Kou et al. [3] (2021) | 2161 | A generative model based on a variational autoencoder was developed for automatic diagnosis from raw esophageal manometry data. The purpose was to model and understand swallow-level data that would later be used to develop study-level models for automatic diagnosis. | To identify the swallow type. There were 6 swallow types (normal, weak, failed, fragmented, premature, or hypercontractile) and 3 pressurization types (normal, compartmental pressurization, panesophageal pressurization). | Overall accuracy on the train/validation/test datasets was 0.64/0.63/0.64 for predicting the 6-class swallow type and 0.87/0.86/0.87 for predicting the 3-class swallow pressurization. | DL |
| Kou et al. [4] (2022) | 1741 | Swallow-level stage: 3 models based on convolutional neural networks (CNNs) were developed to predict swallow type and swallow pressurization (classification models) and integrated relaxation pressure (regression model). Study-level stage: a rule-based model (combined with probabilities), an XGBoost model, and an artificial neural network (ANN). | To diagnose esophageal motility disorders. Model-predicted swallow-level outcomes formed the input data of the study-level models in training and validation. The blended models were weighted by precision scores. | The best performance on the test dataset among the blended models was 0.81 for top-1 prediction and 0.92 for top-2 prediction (xgb+ann-1). | Combines DL and ML |
| Kou et al. [5] (2021) | 1741 | An AI-based system that automatically classifies swallow types from raw HREM data. | To automatically classify swallow types: normal, hypercontractile, weak-fragmented, failed, and premature. | Swallow-type accuracies on the train/validation/test datasets of 0.86/0.81/0.83. | DL |
| Frigo et al. [20] (2018) | 226 | Created a physio-mechanical model of esophageal function and a database of parameters from healthy subjects and from patients with different motility disorders. In the first step, the relationships between the identified model parameters and the pathologies were established; in the second step, a decision support system was developed. | Patients' parameters are compared with the database, and the group with the highest similarity index is chosen. | Correct diagnosis in 86% of cases. | Rule-based model |
| Wang et al. [21] (2021) | 229 | A DL computational model leveraging three-dimensional convolution and bidirectional convolutional long short-term memory was used for automatic HREM diagnosis. | To identify whether esophageal function was normal or showed a minor or major motility disorder. No final diagnosis of motility disorders was made. | Overall accuracy of 91.32%, with 90.5% sensitivity and 95.87% specificity. | DL |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Surdea-Blaga, T.; Sebestyen, G.; Czako, Z.; Hangan, A.; Dumitrascu, D.L.; Ismaiel, A.; David, L.; Zsigmond, I.; Chiarioni, G.; Savarino, E.; Leucuta, D.C.; Popa, S.L. Automated Chicago Classification for Esophageal Motility Disorder Diagnosis Using Machine Learning. Sensors 2022, 22, 5227. https://doi.org/10.3390/s22145227