Abstract

Robotics has been successfully applied in the design of collaborative robots for assistance to people with motor disabilities. However, man-machine interaction is difficult for those who suffer severe motor disabilities. The aim of this study was to test the feasibility of a low-cost robotic arm control system with an EEG-based brain-computer interface (BCI). The BCI system relies on the Steady State Visually Evoked Potentials (SSVEP) paradigm. A cross-platform application was developed in C++. This C++ platform, together with the open-source software Openvibe, was used to control a Stäubli robot arm model TX60. Communication between Openvibe and the robot was carried out through the Virtual Reality Peripheral Network (VRPN) protocol. EEG signals were acquired with the 8-channel Enobio amplifier from Neuroelectrics. For the processing of the EEG signals, Common Spatial Pattern (CSP) filters and a Linear Discriminant Analysis (LDA) classifier were used. Five healthy subjects tried the BCI. This work allowed the communication and integration of a well-known BCI development platform such as Openvibe with the specific control software of a robot arm such as the Stäubli TX60 using the VRPN protocol. It can be concluded from this study that it is possible to control the robotic arm with an SSVEP-based BCI that uses a reduced number of dry electrodes to facilitate the use of the system.

1. Introduction

Robotics has been successfully applied to help people with disabilities perform different tasks. Many individuals suffer motor limitations as a consequence of strokes, traumas, muscular dystrophies, cerebral palsies, or various neurodegenerative diseases such as amyotrophic lateral sclerosis (ALS). For these patients, daily activities such as reaching and moving objects can be an important problem. Assistance or collaborative robots have been developed to help subjects with motor limitations in performing daily tasks and to allow them a greater degree of autonomy. However, the handling of these robots through buttons or joysticks remains an obstacle for many users affected by motor dysfunctions.

Brain-computer interfaces (BCIs) are an interesting alternative for robot control by people with severe motor limitations. BCIs are non-muscular communication and control systems that a person can use to communicate their intentions and act on the environment from measurements of brain activity [1,2,3,4,5,6,7,8]. The term was introduced by Jacques Vidal in 1973 [9], and in 1999 the definition of a BCI system was formalized during the first international meeting on BCI technology [8].

BCIs include sensors that record brain activity and software that processes this information in order to interact with the environment by means of actuators. In the majority of implementations, non-invasive BCIs based on the acquisition of EEG signals are used [10].

The potential of BCIs as an assistive technology was the main driver for their development. Patients with limited communication and movement capabilities can benefit from this technology, whose applications include communication protocols such as spellers [11,12,13,14], control of robot arms and neuroprostheses [15,16,17,18,19], control of motorized wheelchairs [20,21,22,23], home automation systems [24,25], and virtual reality [26]. With these applications, patients with paralysis can restore interaction with their environment [8].

Different paradigms have been used in BCI applications based on EEG for robot control, such as Motor Imagery (MI) [19,27,28,29,30,31,32,33], P300 evoked potentials [14,34,35], or Steady-State Visually Evoked Potentials (SSVEP) [36,37,38,39,40,41,42,43,44,45,46,47,48].

In [16], a rehabilitation application based on the control of a robot arm performs part-grasping tasks using an MI paradigm. The application allows two-dimensional control of a robot arm. Imagined movements of the left hand, the right hand, and both hands, together with relaxation, move the robot to the left, right, up, and down [17].

With the SSVEP paradigm, different studies have been performed to control a robotic arm or hand, seeking that people with muscular or neuromuscular disorders can interact and communicate with their environment [47,49]. The tasks performed with a robotic hand have been opening or closing the hand [50] or giving the order for the robot hand to make a gesture (greeting, approval, or disapproval) [51].

Combinations of two or more paradigms have also been proposed to control a robotic arm such as P300/SSVEP [52,53], SSVEP/mVEP [54], SSVEP/MI/Electromyography (EMG) [55], SSVEP/Facial gestures [56], control of a robotic arm and a wheelchair by SSVEP/cervical movements [57], SSVEP/EOG [58], SSVEP/Eye [59], SSVEP/Computer Vision [60], SSVEP/MI [55], and SSVEP/P300/MI [61].

Existing studies of the application of BCI systems to the control of assistive devices are limited to pilot tests, and their use outside the laboratory has not been generalized [49,62,63]. Some of the drawbacks that prevent the widespread use of BCIs in patients are the high cost of hardware and development of the BCI [62], the laborious preparation of the interface (i.e., placement of the electrodes to acquire the EEG signal) [10], and the need for training on the part of the subject in the use of the BCI and its calibration [64].

The use of open-source software (OSS) has made it possible to implement low-cost BCI applications without having to pay for user licenses. There are different types of OSS that can be implemented in projects related to BCIs [65,66]. These software platforms receive brain signals and allow scenarios to be designed that interact with external or simulation devices. Likewise, these platforms allow the processing of electroencephalographic signals, including filtering, feature extraction, and classification [67]. Some examples of commercial and free software platforms with which interaction between users and devices can be carried out for the implementation of a BCI are Matlab [68,69,70], Labview [71,72], Openvibe [73,74,75], BCI2000 [76,77,78], BCI++ [79], and OpenBCI [80]. Tools for offline analysis of EEG signals have also been developed, such as those developed in Matlab by the Swartz Center of Computational Neuroscience (SCCN) [81].

In this study, Openvibe is used to acquire, filter, process, classify, and visualize EEG signals in the development of the BCI application. Scenarios are designed with toolboxes and can run in real time. Openvibe is a platform developed in C++ by the Institut National de Recherche en Informatique et en Automatique (INRIA) [73], and it runs under the Linux and Windows operating systems. It is licensed under the Affero General Public License (AGPL), a copyleft license derived from the GNU General Public License and designed for cooperation within the research community.

Openvibe has been used for multiple applications and with different types of paradigms, such as motor imagery to control a robotic arm or a robotic hand [30,31], motor imagery in neural plasticity with a wrist exoskeleton [32], the P300 paradigm for the control of an electric wheelchair [23], P300 for the control of a manipulator robot [35], surface electromyographic signals for the control of a functional electrical stimulator (FES) [82], neurorehabilitation [28,33,83], music [84], mobile robots [85], or processing with motor imagery or P300 [86,87,88,89] with different amplifiers [90].

Different types of interfaces or amplifiers have been used to obtain electroencephalographic signals through the Openvibe platform, such as Neurosky under the motor imagery paradigm [29] or Emotiv with the P300 paradigm [90]. An Enobio amplifier from Neuroelectrics is used in this study. Enobio has been used for the acquisition and processing of EEG signals in research related to different BCI applications, such as subjective behaviors in marketing [91] and other activities [92,93,94,95,96].

This work implements and tests a BCI application based on scalp EEG for the control of a robot arm with minimum requirements at the hardware and software levels from the point of view of the programmer and the user. These design requirements are addressed with the use of open-source BCI software. A cross-platform application is developed to interface Openvibe and the proprietary software that controls the Stäubli robot arm.

With these design specifications, the SSVEP paradigm has been selected. Compared to the other paradigms commonly used in EEG BCIs, it provides greater communication speed [7,10,97,98], requires less training time for the subject [10,55,97,98,99], and can be operated with fewer electrodes placed on the occipital region [46,48,50,51,100,101,102,103]. The SSVEP signal appears in the visual cortex when the subject observes intermittent stimuli [104], and its response depends on the subject’s attention [105,106,107] and the size, shape, and frequency of the stimulus [7,19,108].

Minimum requirements are also considered for the BCI experimental subject to facilitate the use of the control system. In order to simplify the acquisition of the EEG signal, dry electrodes are chosen, avoiding the use of electroconductive gel. To also improve the readiness of implementation, just eight electrodes are selected.

The SSVEP-based BCI system designed has been tested with five healthy subjects without previous experience in BCIs. The results suggest that the control of the robot arm through the integration of an open BCI software platform with the software program developed to control the robot is feasible. The proposed system allows controlling the robot arm with a level of demand acceptable to the user.

The prototype developed is presented in the following sections. EEG signal processing and communication between the robot arm and the BCI application are described. The performance of the participants with the BCI is analyzed and compared with previous studies in the area. Finally, the results are discussed, and the conclusions of the study are presented.

2. Materials and Methods

2.1. System Description

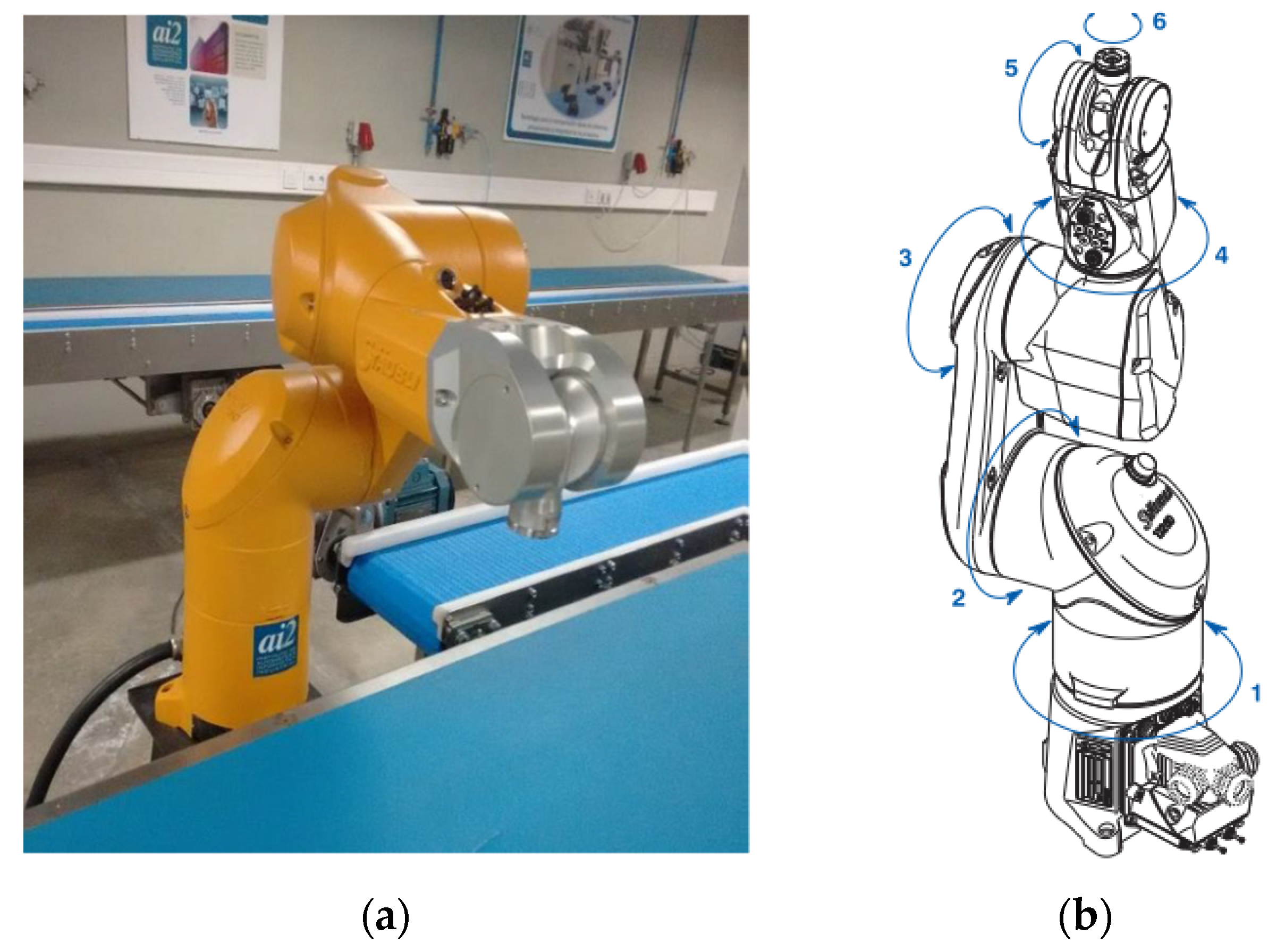

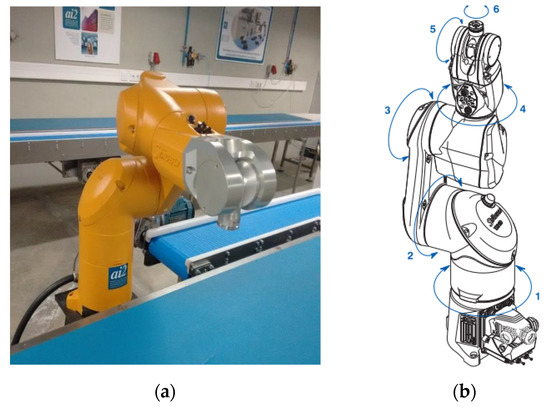

The SSVEP BCI control system proposed in this work is composed of two main subsystems: the SSVEP processing system and the robot system. EEG signals are wirelessly transmitted from the EEG Enobio amplifier. The EEG signal is then processed to obtain the control signal for the robotic arm. The SSVEP BCI communicates with the robotic arm via a TCP/IP communications protocol. The robotic arm is a six-axis industrial manipulator model TX60 from Stäubli [109]. It is a light-duty robot arm with a nominal load of 3.5 kg and a maximum load of 9 kg in certain positions, and its maximum reach is 670 mm (Figure 1).

Figure 1.

Robot Stäubli TX60. (a) robot arm in the lab; (b) degrees of freedom scheme.

As previously stated, among the different software platforms available for the acquisition, processing, and classification of EEG signals [76,110,111,112], Openvibe has been selected [97,98,113]. This software was developed by INRIA [113] in order to design, test, and use brain-computer interfaces. Its programming is based on block diagrams and allows the EEG signals to be acquired, filtered, conditioned, classified, and visualized. Openvibe is written in C++, so it allows quick and easy integration of the communication library with the robot.

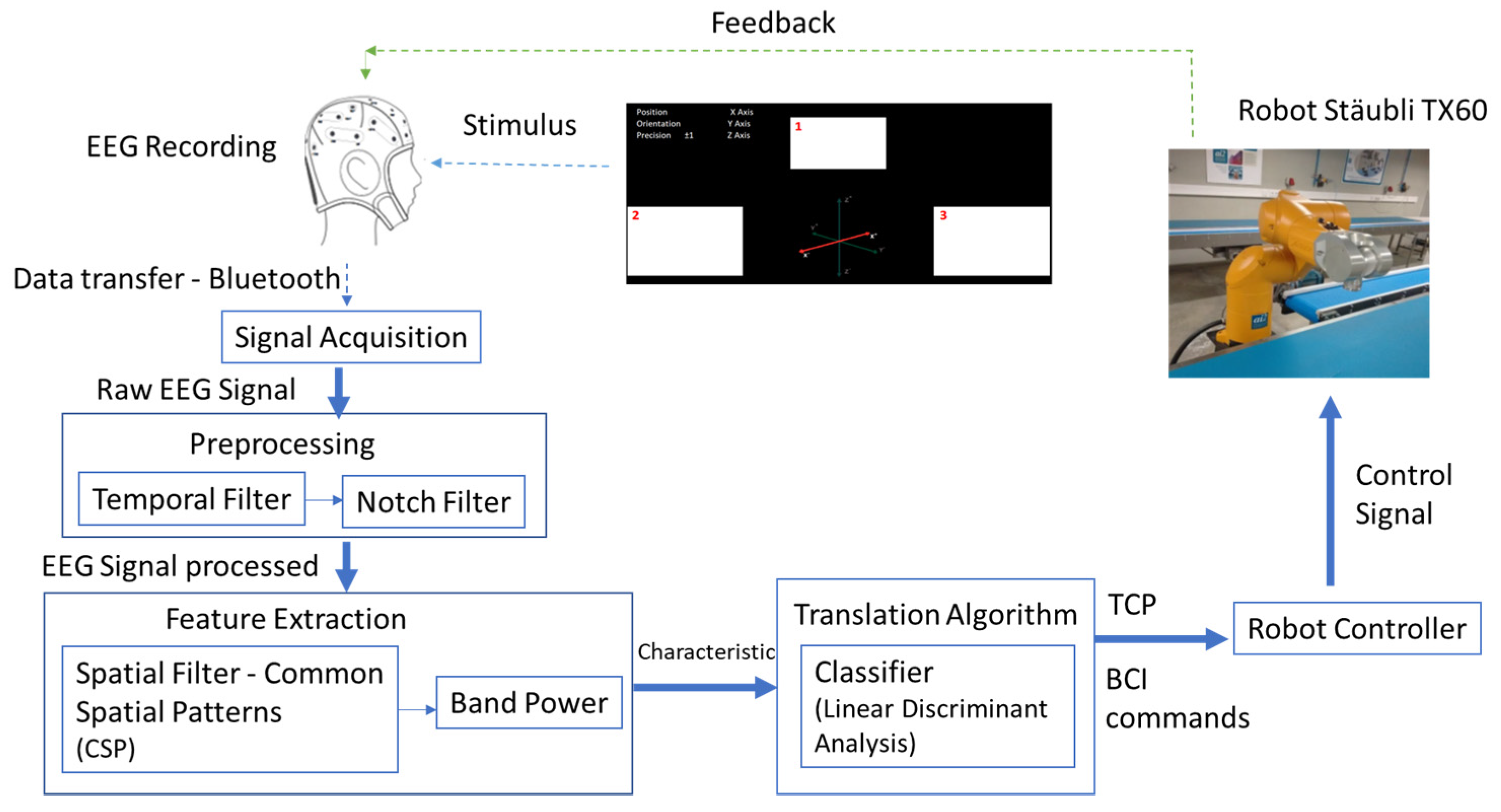

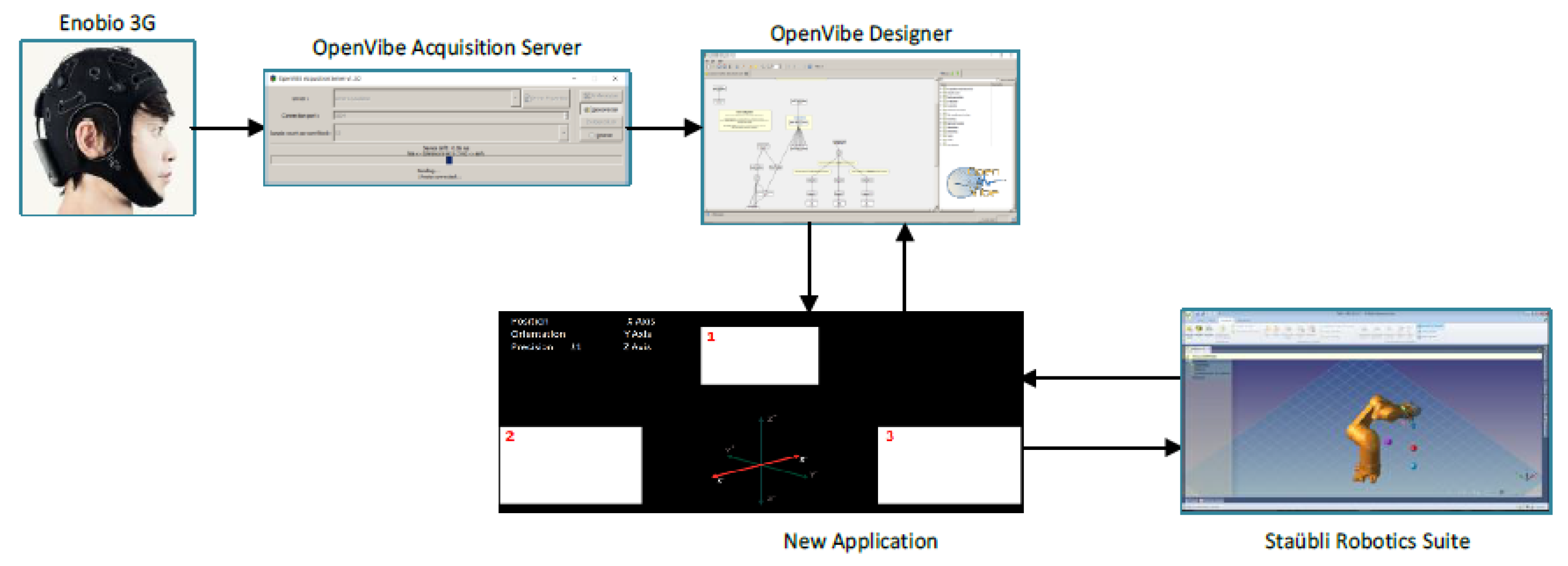

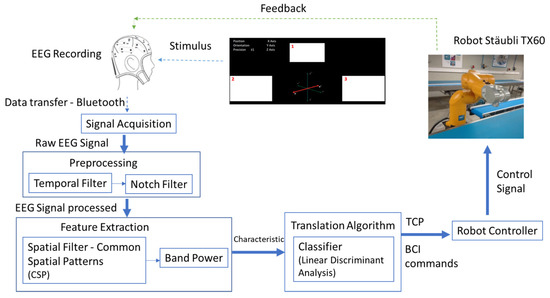

Figure 2 shows the architecture of the SSVEP BCI control system. The different modules are explained in the following sections.

Figure 2.

SSVEP-BCI methodology for robotic arm control.

2.2. Stimulus Generation

Stimulus generation for the elicitation of the SSVEP can be based on light-emitting diodes (LEDs) [114] or monitors [46,51,101,103,108,115]. In this study, intermittent visual stimuli are presented on the computer screen.

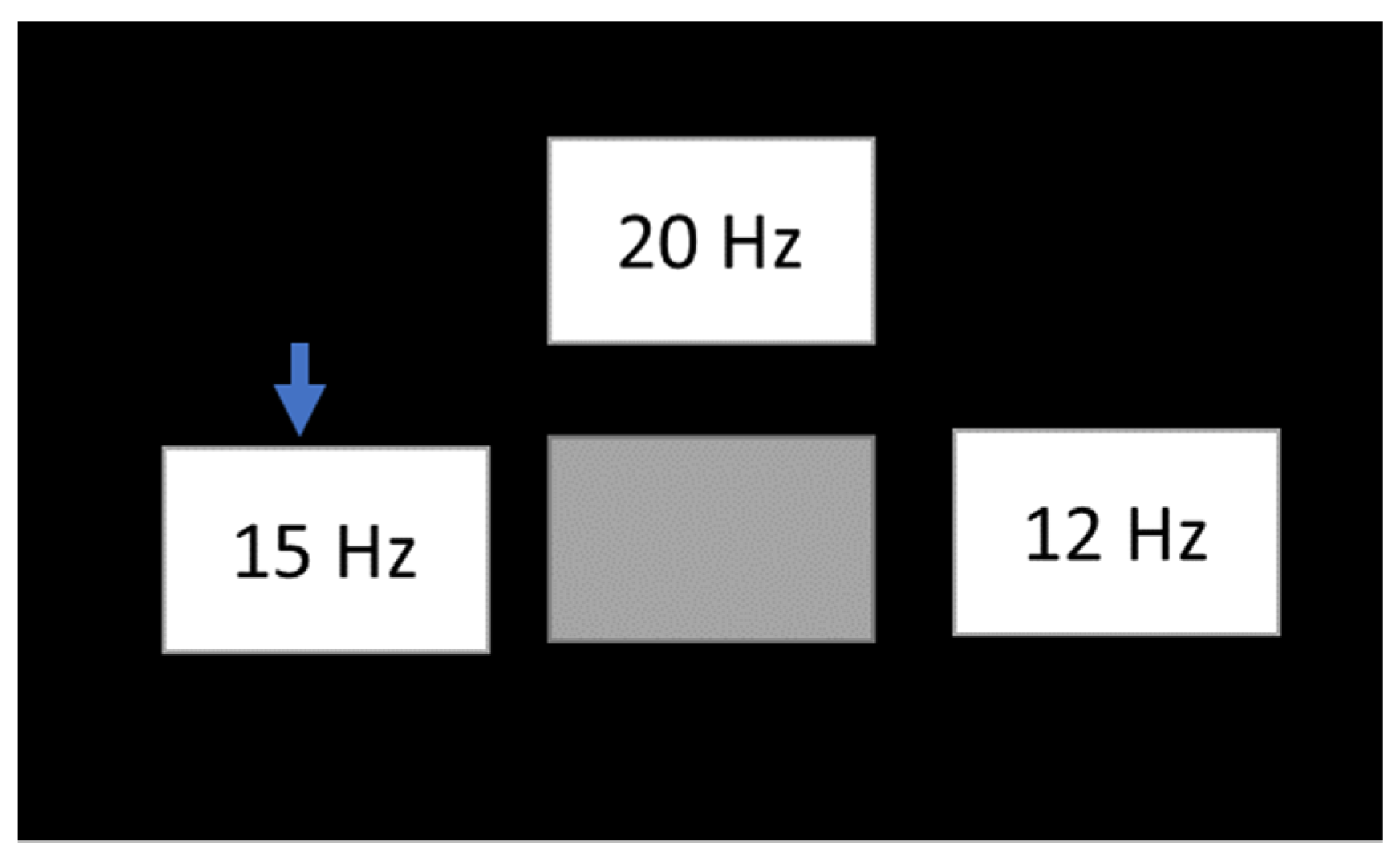

The stimulus type is a white square on a black background [115,116,117]. The size and the location of the stimuli on the screen are configured in Openvibe. The frequencies used are 12, 15, and 20 Hz [114]. These frequencies are integer fractions (1/5, 1/4, and 1/3) of the 60 Hz refresh rate of the LCD screen (Figure 3).
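The relation between the stimulus frequencies and the screen refresh rate can be checked with a few lines of arithmetic. The following is a minimal sketch, not part of the Openvibe scenario; the function name `frames_per_cycle` is hypothetical and is introduced only for illustration.

```python
REFRESH_HZ = 60  # LCD refresh rate stated in the text


def frames_per_cycle(stimulus_hz, refresh_hz=REFRESH_HZ):
    """Return the whole number of screen frames in one flicker cycle.

    Raises ValueError if the frequency does not divide the refresh rate,
    because such a stimulus cannot be drawn with a constant period.
    """
    if refresh_hz % stimulus_hz != 0:
        raise ValueError(f"{stimulus_hz} Hz does not divide {refresh_hz} Hz")
    return refresh_hz // stimulus_hz


cycles = {f: frames_per_cycle(f) for f in (12, 15, 20)}
print(cycles)  # {12: 5, 15: 4, 20: 3}
```

Each of the three frequencies spans a whole number of frames (5, 4, and 3), which is what allows the screen to render them with a stable period.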

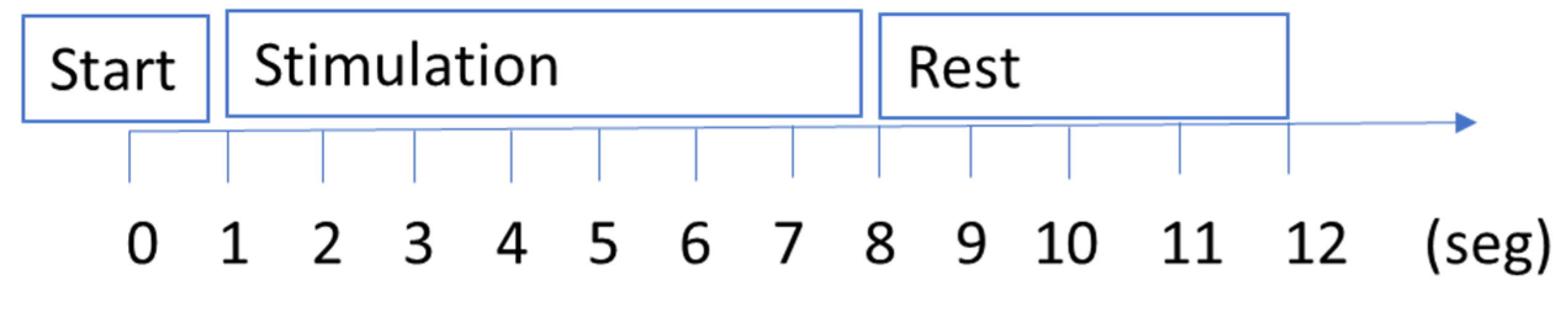

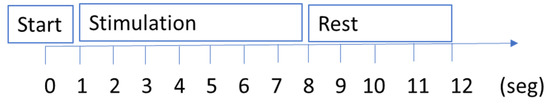

Figure 3.

Timing of a single SSVEP trial.

The experimental procedure to train the BCI spatial filters and classifier has 32 trials arranged in 8 runs. Every run has four trials. Every trial has a length of 12 s and consists of three sections: (1) stimulus presentation (arrow positioning), (2) visualization period, and (3) rest period and stimulus change.

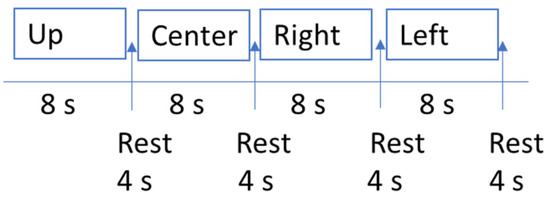

The duration of the stimulus is set to 7 s, the duration between stimuli or interval time of trials is set to 4 s, and the delay of the stimulus is 1 s (Figure 3). The stimuli are shown in sequence, and each stimulus is repeated eight times (Figure 4).
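The timing figures above can be cross-checked with a short sketch. It assumes that the four targets of each run are the three flickering squares plus the non-flickering center square; the class labels and the function `build_schedule` are hypothetical names used only for this illustration.

```python
import random

DELAY_S, STIMULUS_S, REST_S = 1, 7, 4  # durations given in the text
# Hypothetical labels: three flickering squares plus the center square (assumed).
CLASSES = ("top_20Hz", "left_15Hz", "right_12Hz", "center_rest")
N_RUNS = 8


def build_schedule(seed=0):
    """Return a list of runs; each run is a random ordering of the four targets."""
    rng = random.Random(seed)
    return [rng.sample(CLASSES, k=len(CLASSES)) for _ in range(N_RUNS)]


schedule = build_schedule()
trial_length_s = DELAY_S + STIMULUS_S + REST_S
n_trials = sum(len(run) for run in schedule)
total_s = n_trials * trial_length_s
print(trial_length_s, n_trials, total_s)  # 12 32 384
```

Under these assumptions, every target appears once per run and eight times overall, and a complete training session lasts 384 s.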

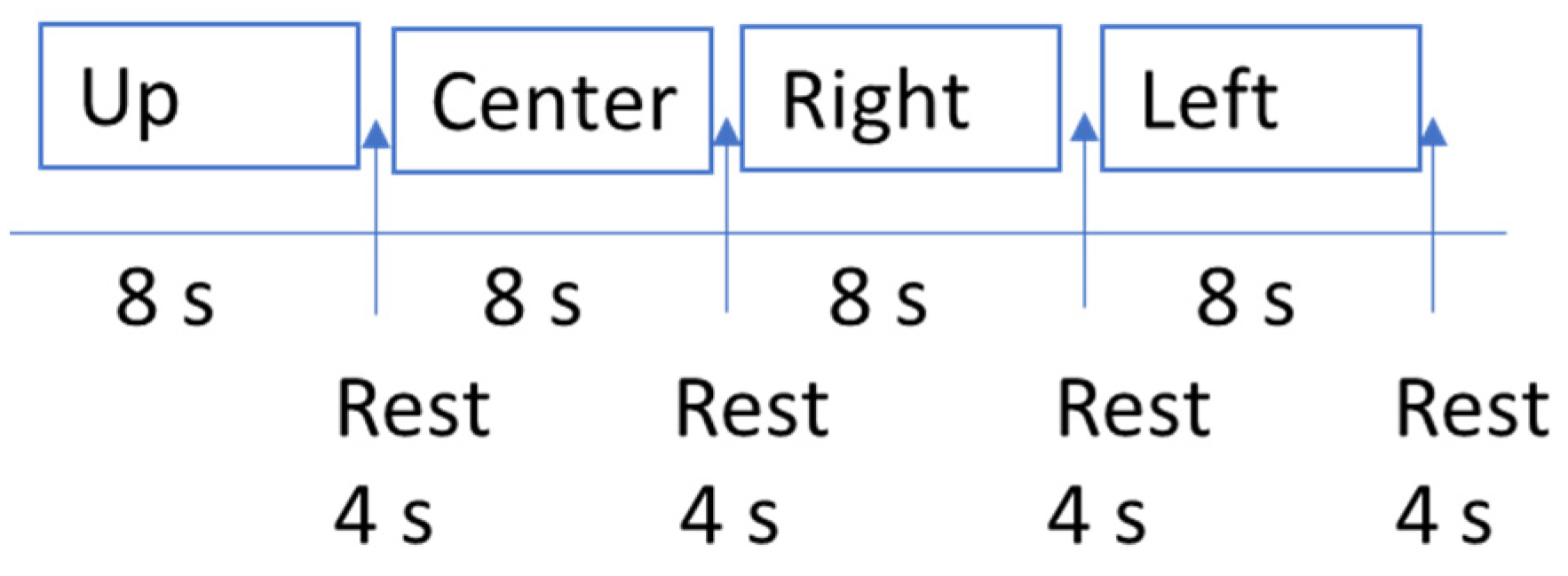

Figure 4.

Time duration for starting on stimulation frequency and resting period in one run.

Subjects observed on an LCD screen the three stimuli placed on the top, right, and left parts of the computer screen. The center square has the same color as the black background. The execution begins with the positioning of the arrow on one of the four squares, and then the stimuli begin to oscillate at 20 Hz (upper box), 15 Hz (left box), and 12 Hz (right box). While they flicker, the subject must focus on the indicated square for 7 s. Then, the stimuli stop flashing, and the arrow is repositioned on one of the other squares. The sequence is random in each run (Figure 5).

Figure 5.

Stimuli frequencies.

2.3. Signal Acquisition

Several factors have been taken into account when selecting hardware and software components for the EEG-based BCI. Given the interest in a compact and portable solution for BCI control, the Enobio digital amplifier from Neuroelectrics [118] was selected to acquire the EEG signals. The Enobio amplifier was developed for BCI research. It was chosen for its wireless technology and dry electrodes, which facilitate the experimental setup.

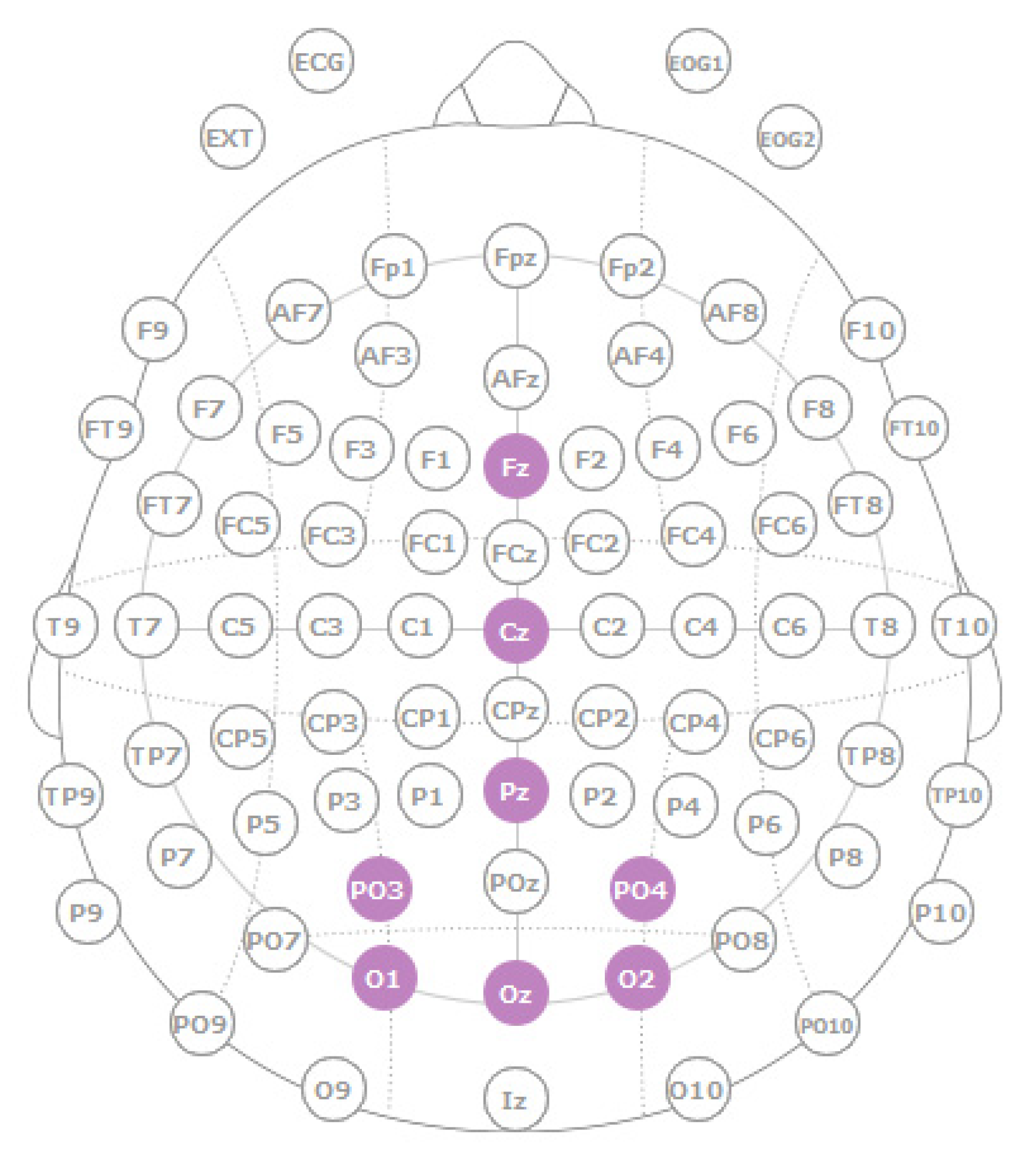

The EEG signal was acquired through channels O1, O2, Oz, PO3, PO4, Pz, Cz, and Fz around the occipital area according to the standard 10–20 electrode location system (Figure 6). Ground and reference electrodes were placed on the subject’s earlobe. The EEG signal was recorded at a sampling rate of 500 Hz and band-pass filtered between 2 and 100 Hz, with the 50 Hz notch filter activated. The sampled and amplified EEG signal is then sent to the computer via Bluetooth.

Figure 6.

Electrode disposition according to the international system 10–20.

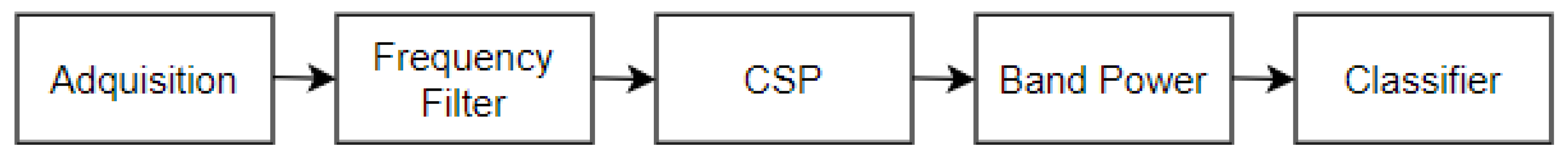

2.4. Signal Processing

The EEG signal is sent from Enobio to Openvibe through the Openvibe Acquisition Server module [113]. The EEG signal is processed in five steps: preprocessing, feature extraction, classification, command translation, and feedback to the BCI user. The processing scenario is programmed in Openvibe with block diagrams that allow the signals to be acquired, filtered, conditioned, classified, and visualized (Figure 7).

Figure 7.

Signal Processing Procedure.

Considering that the cognitive activity of interest in this study is in the range of 0.2–40 Hz, a fourth-order Butterworth band-pass filter between 6 and 40 Hz was applied to the EEG signal. According to [119], the SSVEP paradigm is less sensitive to artifacts than other typical BCI paradigms, due to its high signal-to-noise ratio (SNR) and robustness. In [120], SSVEPs are reported to be little affected by artifacts such as blinking and facial-muscle EMG. As one of the objectives of this work was to investigate practical applications of BCI systems in non-clinical settings, SSVEP was selected because of its high SNR. Nevertheless, appropriate processing and filtering of artifacts must be conducted in every BCI system.

For feature extraction, we use a spatial approach [121]. A common spatial patterns (CSP) filter selects the best characteristics from the EEG signal. The CSP algorithm produces spatial filters that maximize the variance of bandpass-filtered EEG signals from one class while minimizing their variance for the other class [122,123,124,125,126].

The power spectrum was extracted in the frequency bands of interest: 19.75–20.25 Hz for the 20 Hz flashing frequency, 14.75–15.25 Hz for the 15 Hz flashing frequency, and 11.75–12.25 Hz for the 12 Hz flashing frequency. For single-trial data (7 s length), a sliding window with a length of 0.5 s and a step of 0.1 s was applied to extract the signal features. A logarithmic mapping was applied to the power average, as it helps improve the classification performance [127].
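The epoching and log-power step can be illustrated with a small standard-library sketch. It is not the Openvibe implementation: it assumes that 0.1 s is the step between consecutive 0.5 s windows, takes the band-pass filtering as already done, and uses a synthetic 12 Hz sine in place of real EEG. The function names are hypothetical.

```python
import math

FS = 500        # sampling rate in Hz (from the text)
WINDOW_S = 0.5  # window length in seconds
STEP_S = 0.1    # assumed step between consecutive windows


def sliding_windows(n_samples, fs=FS, window_s=WINDOW_S, step_s=STEP_S):
    """Return (start, stop) sample indices of every full window in the epoch."""
    win = round(window_s * fs)
    step = round(step_s * fs)
    return [(s, s + win) for s in range(0, n_samples - win + 1, step)]


def log_band_power(samples):
    """Log of the mean squared amplitude of an already band-filtered window."""
    power = sum(x * x for x in samples) / len(samples)
    return math.log(power)


# Synthetic 7 s "trial": a unit-amplitude 12 Hz sine standing in for filtered EEG.
trial = [math.sin(2 * math.pi * 12 * n / FS) for n in range(7 * FS)]
windows = sliding_windows(len(trial))
features = [log_band_power(trial[a:b]) for a, b in windows]
print(len(features))  # 66
```

With these settings, one 7 s trial yields 66 feature values per filtered channel and frequency band.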

In order to classify the features extracted, a linear discriminant analysis (LDA) classifier was used. The aim of LDA is to adjust a hyperplane that can separate the data representing the different classes [67,128]. This classifier is popular and efficient for BCI. For each condition, the training set was used to select the features and to train the LDA classifier on these features. Then, the trained LDA classifier was used to classify the features extracted from the test set [129,130,131].
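The idea of fitting a separating hyperplane can be made concrete with a deliberately simplified example. The sketch below trains a two-class LDA on two-dimensional features with equal class priors; the actual system has more classes and features and relies on the Openvibe classifier boxes, whose internals are not described here. All names and data points are hypothetical.

```python
def mean(vectors):
    """Component-wise mean of a list of 2-D points."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(2)]


def train_lda(class0, class1):
    """Fit a two-class LDA on 2-D features; return (weights, bias)."""
    m0, m1 = mean(class0), mean(class1)
    # Pooled within-class scatter matrix [[a, b], [b, d]].
    a = b = d = 0.0
    for data, m in ((class0, m0), (class1, m1)):
        for x in data:
            dx, dy = x[0] - m[0], x[1] - m[1]
            a += dx * dx
            b += dx * dy
            d += dy * dy
    det = a * d - b * b
    diff = (m1[0] - m0[0], m1[1] - m0[1])
    # w = S^-1 (m1 - m0), using the closed-form inverse of a 2x2 matrix.
    w = ((d * diff[0] - b * diff[1]) / det, (-b * diff[0] + a * diff[1]) / det)
    # The hyperplane passes through the midpoint of the class means.
    mid = ((m0[0] + m1[0]) / 2, (m0[1] + m1[1]) / 2)
    bias = -(w[0] * mid[0] + w[1] * mid[1])
    return w, bias


def predict(model, x):
    """Return 1 if x falls on the class-1 side of the hyperplane, else 0."""
    w, bias = model
    return 1 if w[0] * x[0] + w[1] * x[1] + bias > 0 else 0


# Hypothetical log-power features for two stimulus classes.
class0 = [(1.0, 2.0), (1.5, 1.8), (0.8, 2.4), (1.2, 2.1)]
class1 = [(3.0, 0.5), (3.4, 0.9), (2.8, 0.2), (3.1, 0.7)]
model = train_lda(class0, class1)
print(predict(model, (0.9, 2.2)), predict(model, (3.2, 0.4)))  # 0 1
```

A multi-class problem such as the one in this study can be handled by combining several binary classifiers of this kind, for example in a one-versus-rest arrangement, although the strategy used in the scenario is not specified in the text.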

Cross-validation was used in this study to validate the LDA classifier. The idea was to repeatedly divide the set of trials in a BCI timeline into two non-overlapping sets, one used for training and the other for testing. Cross-validation is typically used in Openvibe in a range from 4 to 10 partitions. In this case a 10-fold cross-validation method was used. The LDA classifier was trained on 90% of the feature vectors and tested on 10%, 10 times.
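The 90%/10% partitioning can be sketched as follows. This is a generic k-fold split over contiguous blocks of feature vectors, written for illustration only; Openvibe performs the partitioning internally, and the count of 100 feature vectors is a hypothetical value.

```python
def k_fold_indices(n_items, k=10):
    """Yield (train, test) index lists for k contiguous, non-overlapping folds."""
    base, extra = divmod(n_items, k)
    start = 0
    for fold in range(k):
        # Spread any remainder over the first folds so sizes differ by at most 1.
        size = base + (1 if fold < extra else 0)
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_items))
        yield train, test
        start += size


folds = list(k_fold_indices(100, k=10))
print(len(folds), len(folds[0][0]), len(folds[0][1]))  # 10 90 10
```

Each feature vector is used for testing exactly once and for training in the remaining nine folds.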

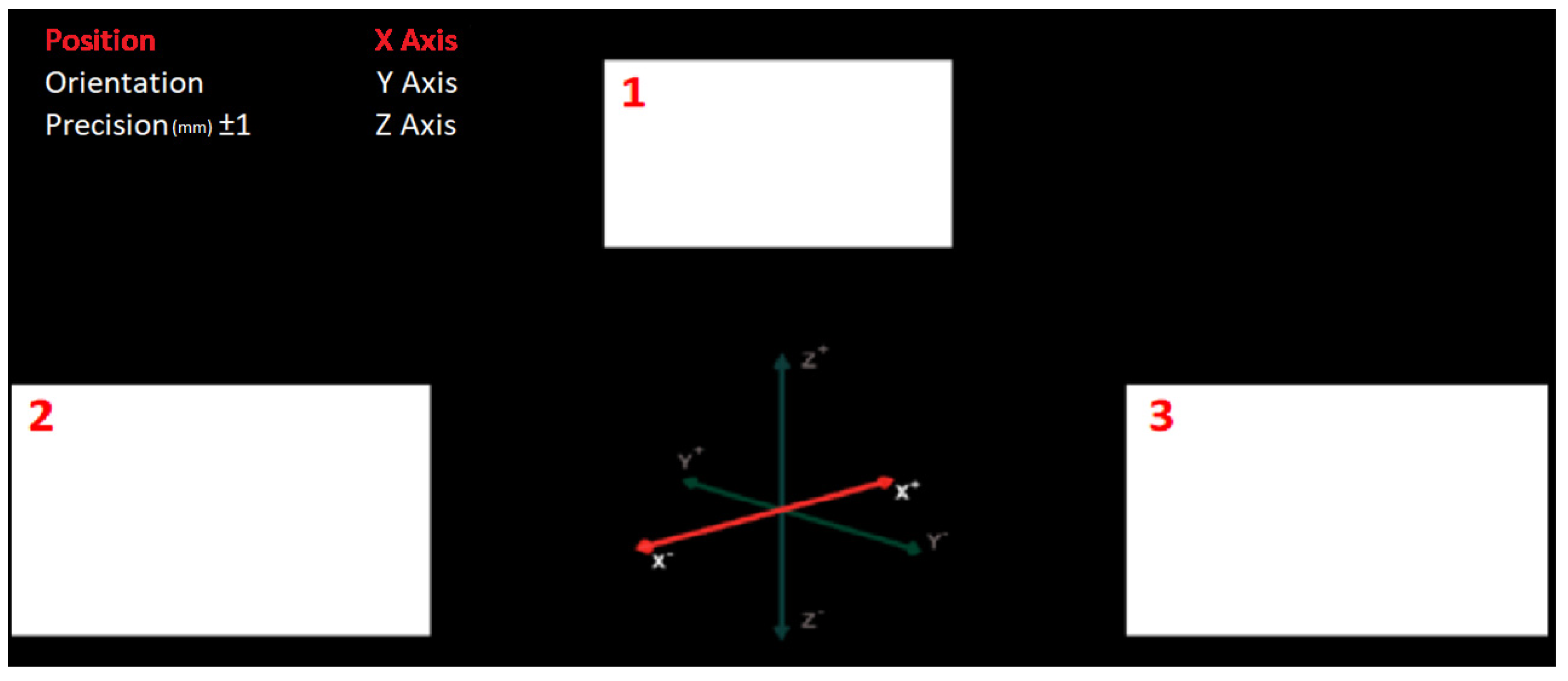

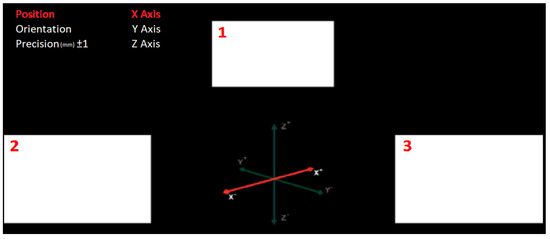

2.5. GUI for Robotic Arm Control

To control the robotic arm, six degrees of freedom are available in position (linear control) and orientation (angular control). The graphical user interface (GUI) designed for the control of the robot arm is shown in Figure 8. Both in the upper left corner of the application screen and on the coordinate axes in the center, the user can visualize the active degree of freedom to control.

Figure 8.

GUI for the control of the robotic arm.

The GUI shows the three visual stimuli used to elicit the SSVEP. Stimulus 1, which oscillates at 20 Hz, changes the active degree of freedom: when position control is selected, it cycles through the three main axes (X, Y, and Z), and when orientation control is selected, it cycles through the three main angles (alpha, beta, and gamma). Stimulus 2, whose frequency is 15 Hz, applies a negative increment to the active position or angle, while stimulus 3, programmed at a frequency of 12 Hz, applies a positive increment.

The size of each increment depends on the selected precision and on the degree of freedom being controlled; it is expressed in millimeters for linear movement and in degrees for angular movement. The subject can vary the millimeters or degrees of movement of the robot with the precision indicator located in the upper left corner of the GUI.
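The mapping from detected stimulus to robot command described above can be summarized as a small state machine. The sketch below is illustrative only and does not reproduce the authors' C++ application; the class `ArmCommandState` and its members are hypothetical names.

```python
AXES = {"position": ("X", "Y", "Z"), "orientation": ("alpha", "beta", "gamma")}


class ArmCommandState:
    """Tracks the active degree of freedom and accumulates pose increments."""

    def __init__(self, mode="position", precision=1.0):
        self.mode = mode            # "position" (mm) or "orientation" (degrees)
        self.precision = precision  # step size chosen with the precision indicator
        self.axis_index = 0
        self.pose = {name: 0.0 for names in AXES.values() for name in names}

    @property
    def active_axis(self):
        return AXES[self.mode][self.axis_index]

    def on_detected_frequency(self, hz):
        """Apply the command associated with the classified stimulus."""
        if hz == 20:    # stimulus 1: cycle to the next degree of freedom
            self.axis_index = (self.axis_index + 1) % 3
        elif hz == 15:  # stimulus 2: negative increment
            self.pose[self.active_axis] -= self.precision
        elif hz == 12:  # stimulus 3: positive increment
            self.pose[self.active_axis] += self.precision
        else:
            raise ValueError(f"unexpected stimulus frequency: {hz}")


state = ArmCommandState(mode="position", precision=5.0)
for hz in (12, 12, 20, 15):
    state.on_detected_frequency(hz)
print(state.active_axis, state.pose["X"], state.pose["Y"])  # Y 10.0 -5.0
```

In this example, two selections of the 12 Hz stimulus move X by +10 mm, one selection of the 20 Hz stimulus switches to Y, and one selection of the 15 Hz stimulus moves Y by -5 mm.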

2.6. Robot Communication Module

Figure 9 shows the block diagram for the robot arm control. Openvibe Acquisition Server acquires the EEG signal from Enobio. Openvibe Designer is used for the treatment of the received signal and the classification of the subject’s intention. Through the Virtual Reality Peripheral Network (VRPN), the application designed in Openvibe is connected with an external application developed in Visual Studio. The VRPN protocol has two servers, the Analog VRPN Server and the Button VRPN Server. The analog server is capable of receiving a connection from an analog client and sending analog signals. The Button VRPN Server is simply a digital server that, on receiving the connection from a digital client, can send logical signals similar to the mechanism of a button. In this study, digital servers (Button VRPN Server) have been used to carry out actions (Table 1). The protocol used is TCP/IP, which guarantees the order and reception of the data sent and also allows communication to start and end in a controlled manner.

Figure 9.

Control signal flowchart.

Table 1.

VRPN communication tags.

In Visual Studio, a program has been developed that receives the output of the classifier in Openvibe Designer and elaborates the control action to act on the robot through a series of events. This program sends the robot the modification of the position or orientation according to the precision and wishes of the subject.

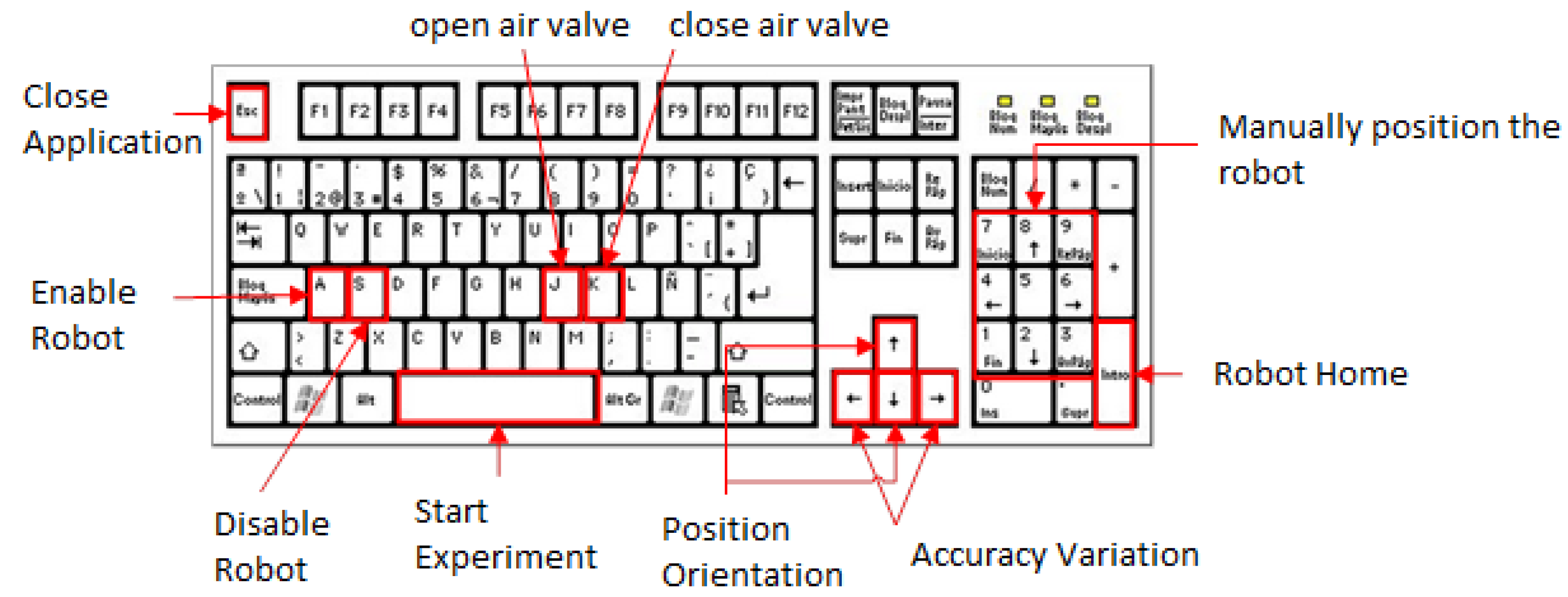

In addition, keyboard-configured security controls have been implemented that allow the experimenter to directly control the execution of the program. Figure 10 summarizes the selected set of keys and their use. The application is launched with the Space key. Once started, the robot is disabled for safety. The programmer can enable or disable the robot’s movements using the appropriate keys. It should be noted that disabling the robot does not cut communication, so it is a useful tool for debugging the response obtained.

Figure 10.

Combination of softkeys and their functionality.

The J and K keys allow the opening and closing of a small pneumatic solenoid valve. Thanks to this external drive, the robot can be equipped with a claw to carry out pick and place or similar tasks. With the arrows, it is possible to navigate through the menu. The user can select whether to control the position or orientation of the robot, as well as the precision (in millimeters or degrees). Nine final positions have been programmed for the robot so that they maintain the same height level and vary their position on the plane. In this way, objects can be reached with greater speed in the programmed tasks. Finally, the Enter key returns the robot to its initial position.

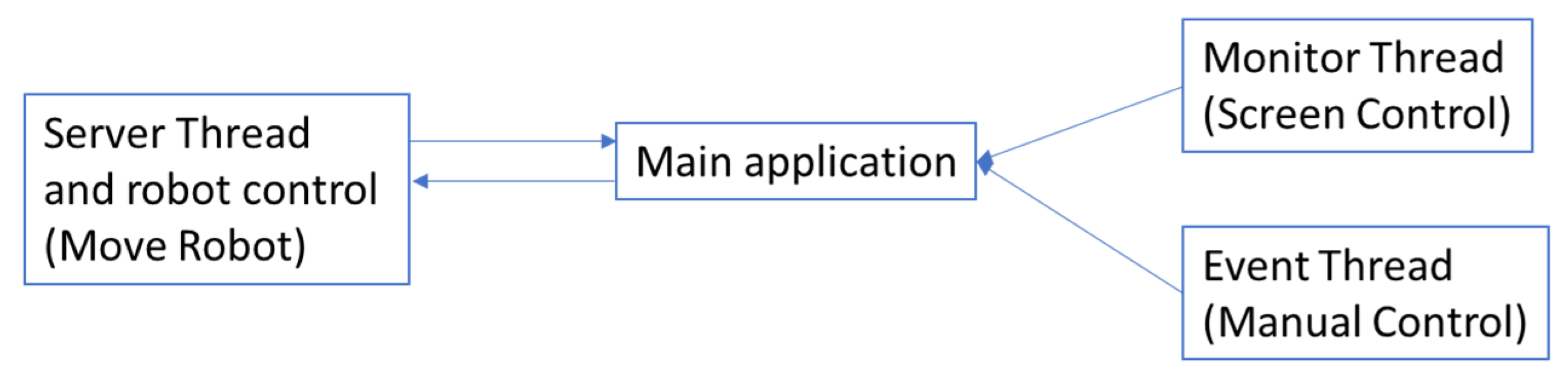

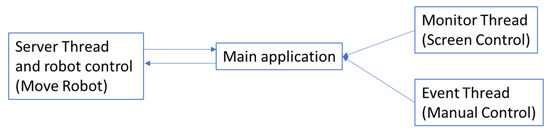

Concurrent programming has been used to guarantee the correct operation of the program. The robot needs to be constantly communicating, so it must always have an active server. Furthermore, the application must guarantee that the stimuli maintain the same blinking frequency to avoid false positives and even erroneous control actions. Finally, the screen is continually refreshing itself, and there are a number of external events coming from the keyboard that must be attended to, with actions taken accordingly. For this reason, the main application runs sequentially alongside three execution threads (Figure 11):

Figure 11.

Program execution threads.

- Server thread, in charge of making, maintaining, and recovering the connection with the robot.

- Monitor thread, in charge of refreshing the screen every time there is a modification in it.

- Event thread, responsible for managing all events external to the application and coming from the keyboard.
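The three-thread structure listed above can be sketched in a few lines. This is an illustrative Python analogue, not the authors' Visual Studio program: the robot connection is replaced by a flag, and the function `run_application`, the key names `"enable"`, `"disable"`, and `"quit"`, and the queues are hypothetical.

```python
import queue
import threading


def run_application(key_events):
    """Process a list of key names with three cooperating threads.

    Returns the ordered list of screen refreshes and the final shared state.
    """
    keys = queue.Queue()     # keyboard events consumed by the event thread
    refresh = queue.Queue()  # change notifications consumed by the monitor thread
    shutdown = threading.Event()
    lock = threading.Lock()
    state = {"connected": False, "robot_enabled": False}
    screen_log = []

    def server():
        # Stand-in for making and maintaining the robot connection.
        with lock:
            state["connected"] = True
        shutdown.wait()
        with lock:
            state["connected"] = False

    def monitor():
        # Redraw only when something changed; None is the stop sentinel.
        while (change := refresh.get()) is not None:
            screen_log.append(change)

    def events():
        while True:
            key = keys.get()
            if key == "quit":
                shutdown.set()
                refresh.put(None)
                return
            if key in ("enable", "disable"):
                with lock:
                    state["robot_enabled"] = key == "enable"
                refresh.put(key)

    threads = [threading.Thread(target=f) for f in (server, monitor, events)]
    for t in threads:
        t.start()
    # A final "quit" is always appended so that the threads terminate.
    for key in list(key_events) + ["quit"]:
        keys.put(key)
    for t in threads:
        t.join()
    return screen_log, state


log, final_state = run_application(["enable", "disable", "enable"])
print(log, final_state["robot_enabled"])  # ['enable', 'disable', 'enable'] True
```

Keeping the keyboard handling, the screen refresh, and the connection in separate threads means that a slow operation in one of them does not block the other two, which is the motivation given in the text.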

2.7. Subjects

A total of five healthy volunteers (three males and two females; aged 19–30 years) with normal or corrected-to-normal vision participated in this study. The participants were students from the Universitat Politècnica de València. None of them had previous experience with BCIs. A medical history of epilepsy or the intake of psychoactive drugs were exclusion criteria for this experiment, and none of the participants was rejected for these causes.

Informed consent was obtained from all individual participants included in the study. Subjects were informed about the experimental procedure. Subjects were sitting in front of an LCD screen. They were instructed to focus their attention on the stimulus indicated on the computer screen. They were also instructed to avoid muscle and eye movements and to have a comfortable and relaxed position throughout the experiment.

2.8. Experimental Procedure

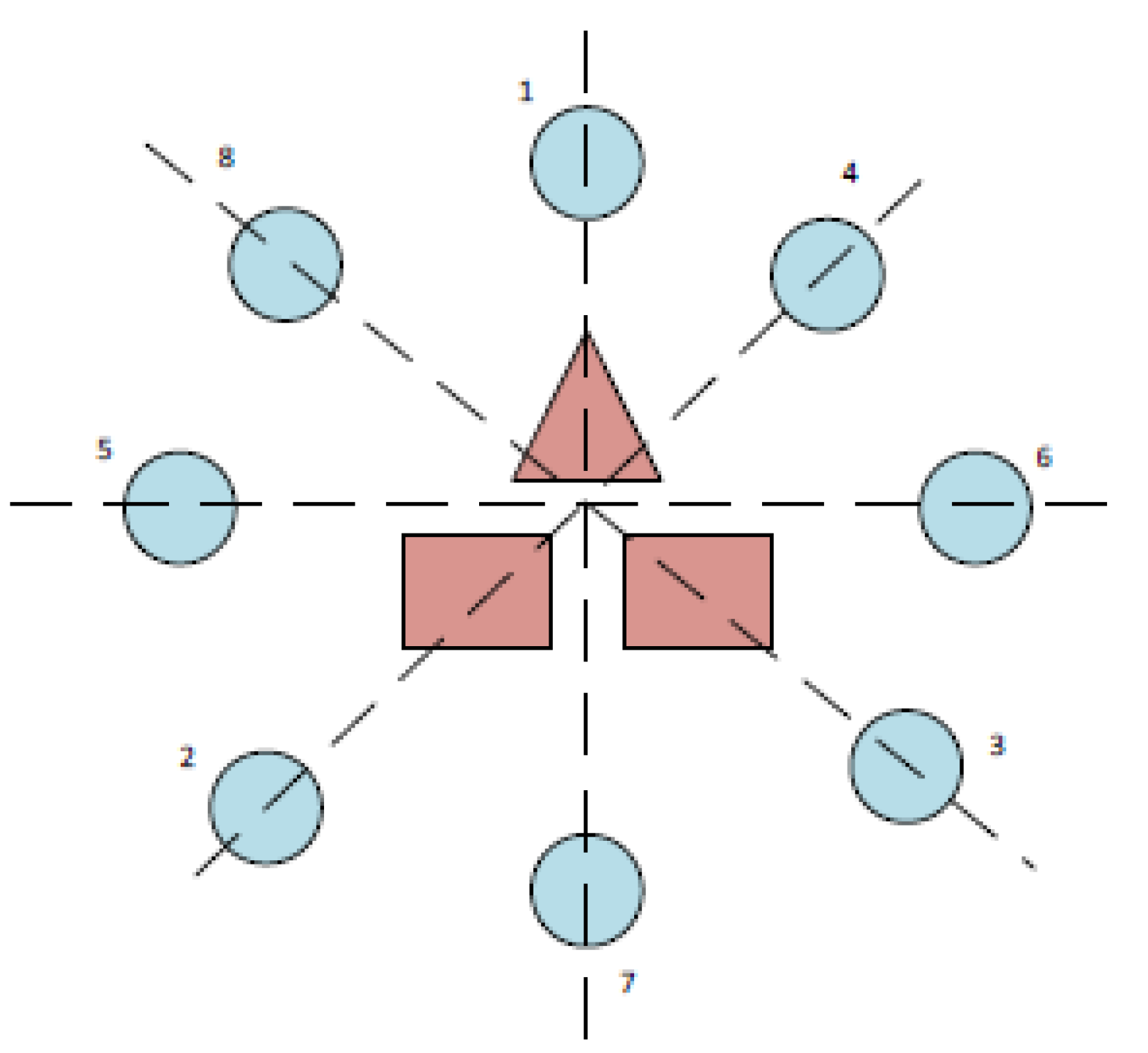

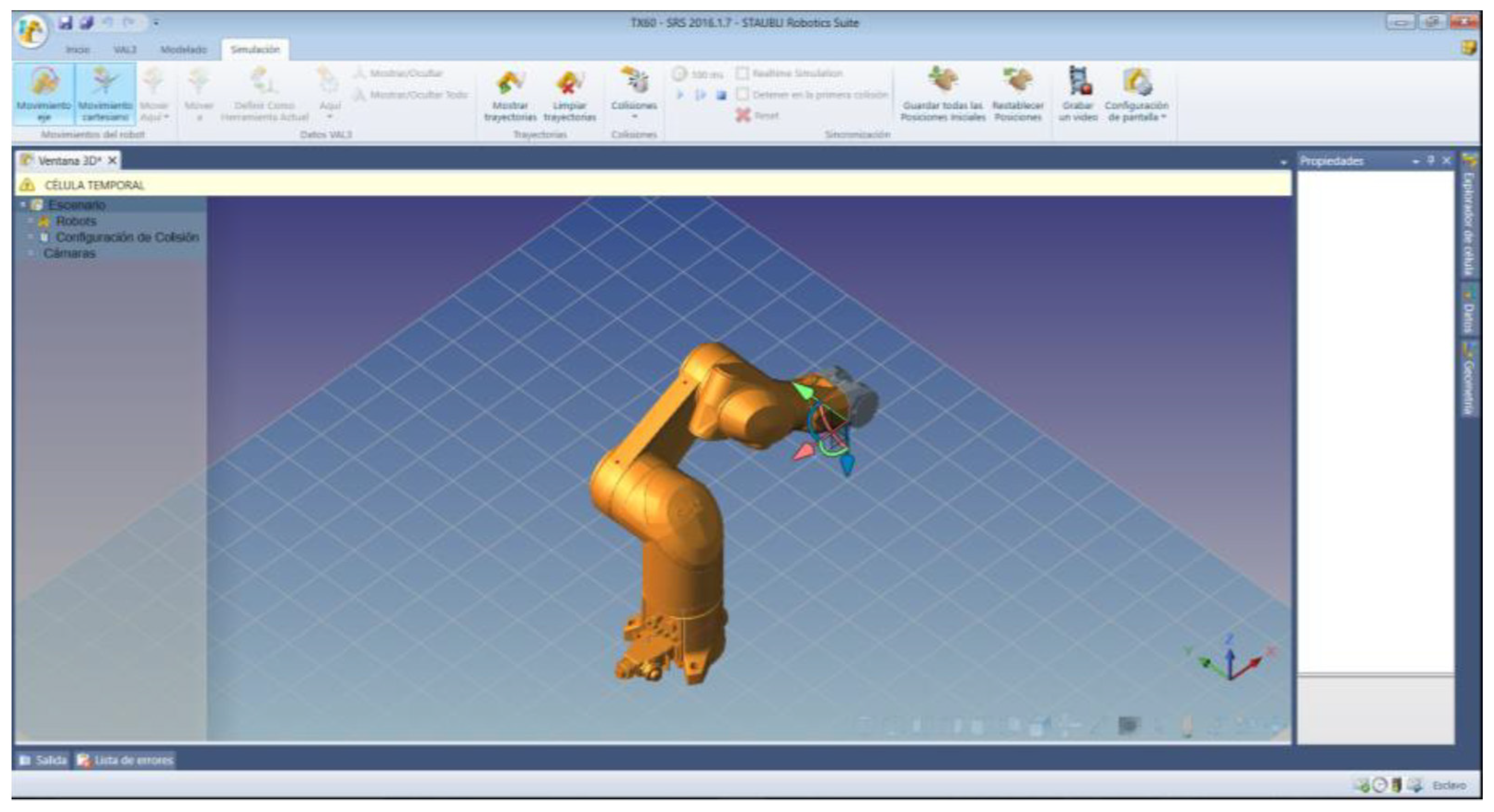

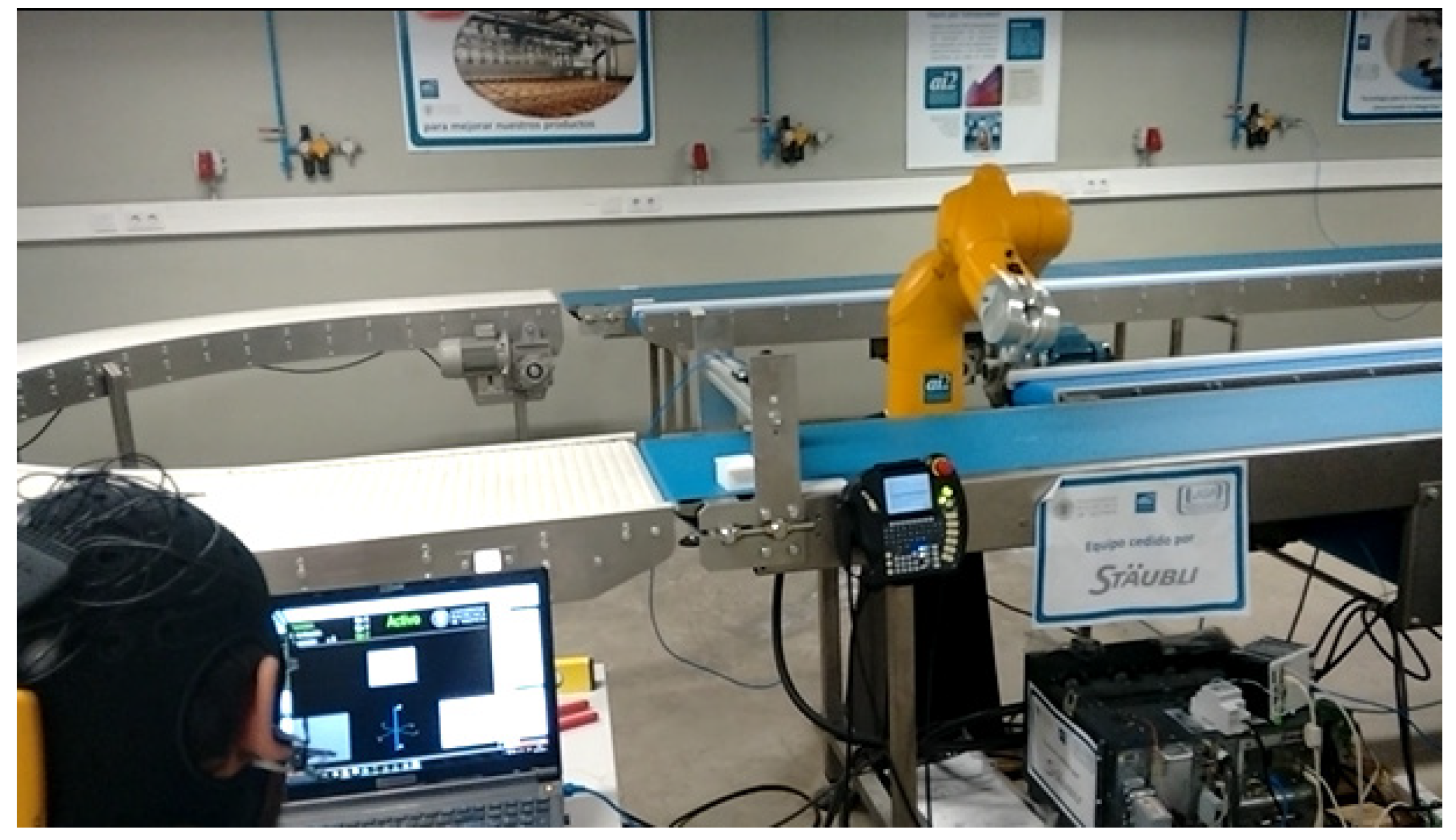

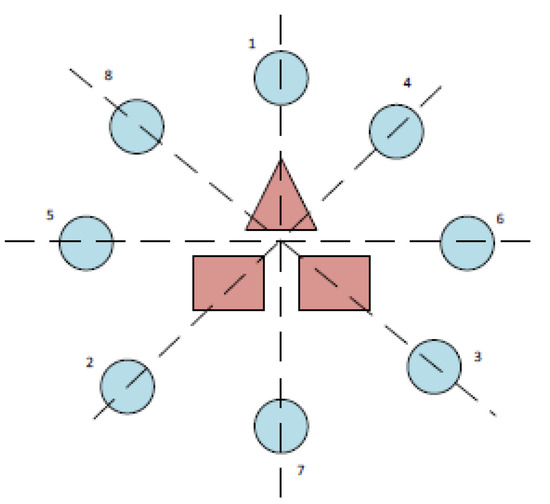

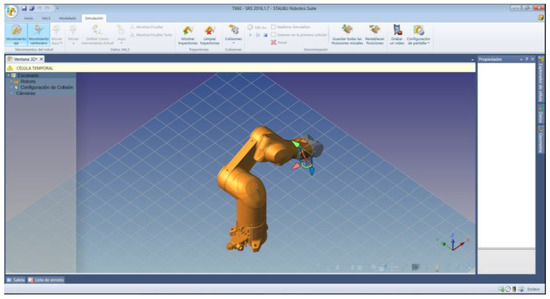

The experimental procedure followed the recommendations established in [132]. The experimental subjects have to guide the robot arm to eight positions in a 360° range according to the sequence of numbers shown in Figure 12. The controlled movement is the rotation of the extreme joint of the robot, corresponding to degree of freedom 6 in Figure 1b. The movement is visualized in the Stäubli simulation environment (Figure 13) and carried out by the sixth articulation of the robot arm. The subjects must rotate this articulation to the angular positions indicated by a series of circular targets that appear sequentially. To do this, the 12 Hz visual stimulus produces clockwise rotation and the 15 Hz visual stimulus produces counter-clockwise rotation. Once the required rotation is reached, the target is confirmed by activating the triangular stimulus that oscillates at a frequency of 20 Hz.

Figure 12.

Position of the targets and order of appearance.

Figure 13.

Stäubli Robotic Suite environment showing the rotation of the extreme joint of the robot.

Given that targets are distributed every 45°, Table 2 reflects the optimal theoretical movement sequence of the task. The triangular stimulus is initially aimed at the first target, so no turn is necessary. In order to reach the second target, a counter-clockwise movement of 135° is required, which corresponds to 45 theoretical steps in the program. To complete the test, a minimum of 285 turning movements is required, not counting the target confirmations. The experimental setup is shown in Figure 14.
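The step counts quoted above imply a fixed rotation per command, which can be made explicit with a short calculation. The sketch below infers the step size from the 135° and 45-step figures in the text; the function name is hypothetical.

```python
DEGREES_PER_STEP = 135 / 45  # 3 degrees, inferred from the figures in the text


def steps_for_rotation(angle_deg):
    """Number of unit movements needed to rotate by angle_deg (either direction)."""
    return round(abs(angle_deg) / DEGREES_PER_STEP)


print(DEGREES_PER_STEP)         # 3.0
print(steps_for_rotation(135))  # 45
print(steps_for_rotation(45))   # 15
print(285 * DEGREES_PER_STEP)   # 855.0
```

Under this inference, moving between adjacent targets takes 15 steps, and the 285-step minimum corresponds to 855° of accumulated rotation over the whole task.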

Table 2.

Minimum number of movements required for the test.

Figure 14.

SSVEP BCI control of the Stäubli robotic arm.

3. Results

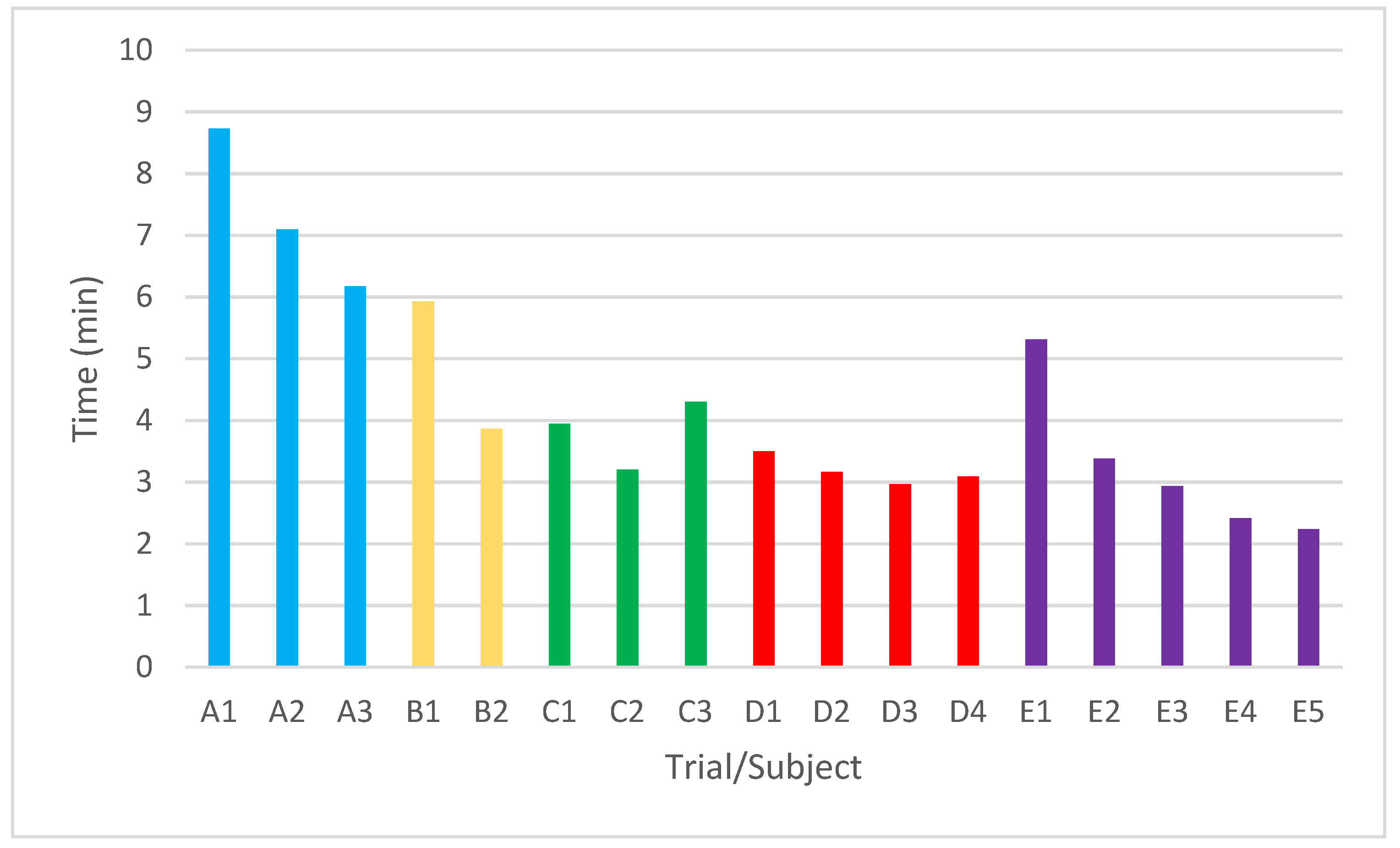

Table 3 shows the average time it takes each subject to complete the task. The average has been calculated over the number of trials carried out by each subject. Subjects C, D, and E finished every experimental run in just over three minutes. These subjects are capable of selecting about three targets per minute, including the combined actions of reaching and confirming every requested rotation. Subjects A and B did not achieve good control of the BCI system and did not complete the full number of trials in the experiment.

Table 3.

Comparative results between subjects.

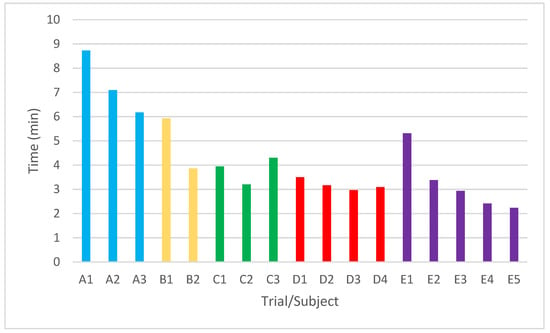

Figure 15 shows the evolution of learning for each subject, the time spent in each attempt, and the attempts made by each one. It is concluded that subjects A, B, D, and E had a continuous improvement in the task from their first attempt to the last attempt. In subject A the trial time decreased by 29.28%, in subject B by 34.84%, in subject D by 11.64%, and subject E reduced the time by 57.80%. Subject C decreased his time from the first to the second attempt, but in the last attempt, it increased by 8.89%, possibly due to the accumulated fatigue from task repetitions.

Figure 15.

Evolution of the time to complete the task in each attempt of the five subjects.

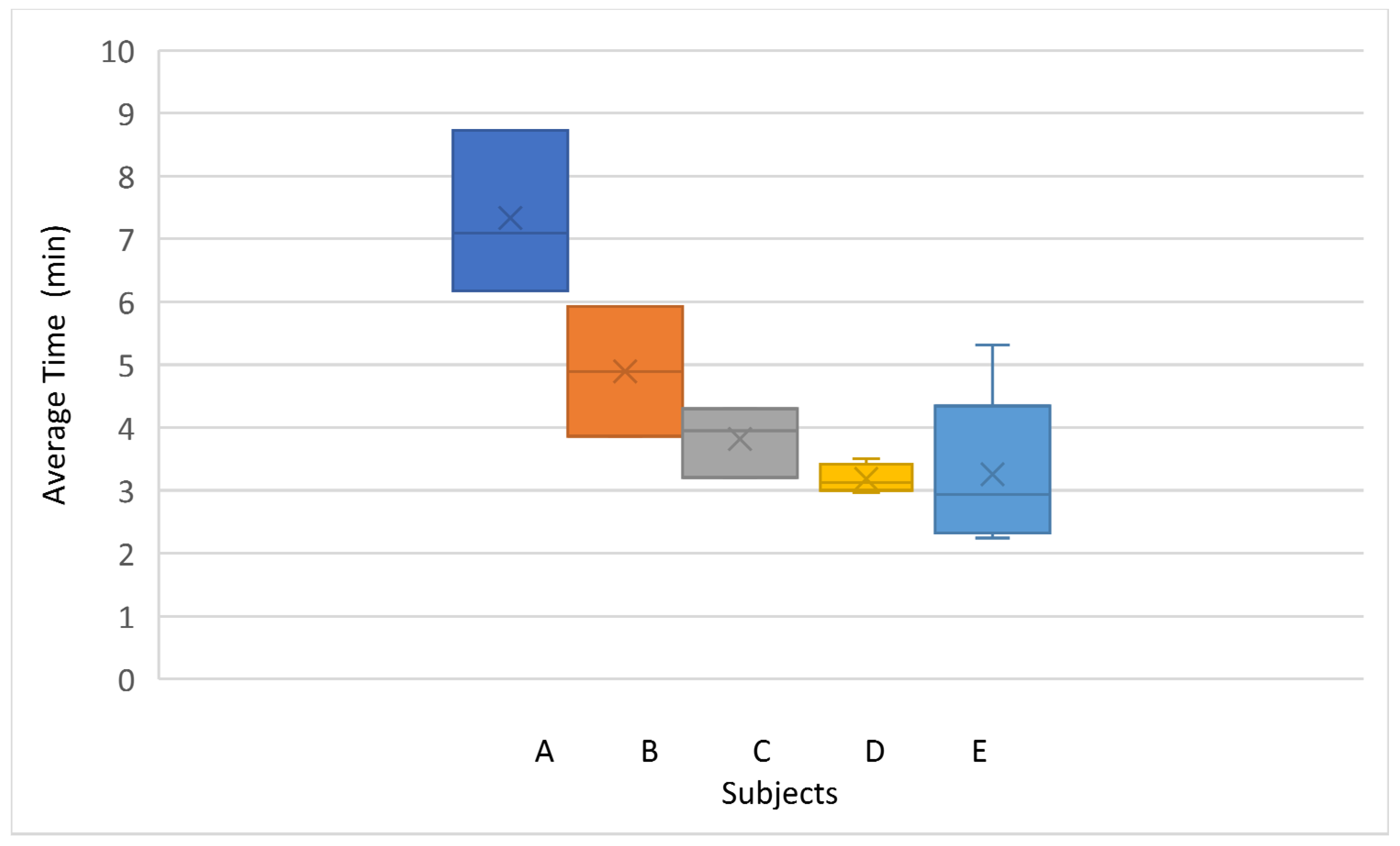

Figure 16 shows the average time of the attempts made by each subject. The best average time was obtained by subject D with four attempts and a total time of 3.18 min.

Figure 16.

Average total time to complete task per subject.

Table 4 compares the average success rate of the subjects after performing the experimental tasks according to the minimum number of movements required shown in Table 2. The success rate has been evaluated according to Equation (1). The average, as in the previous case, has been calculated based on the number of trials carried out.

Trial Success (%) = (theoretical movements / experimental movements) × 100 (1)
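Equation (1) can be written as a one-line function. In the sketch below, the theoretical minimum of 285 movements is taken from the text, while the experimental count of 570 is a hypothetical value chosen only to illustrate the calculation.

```python
THEORETICAL_MOVEMENTS = 285  # minimum number of turning movements (Table 2)


def trial_success(experimental_movements, theoretical=THEORETICAL_MOVEMENTS):
    """Trial success in percent, as defined by Equation (1)."""
    if experimental_movements < theoretical:
        raise ValueError("a completed trial cannot use fewer movements than the minimum")
    return theoretical / experimental_movements * 100


print(trial_success(285))  # 100.0
print(trial_success(570))  # 50.0 (hypothetical trial with twice the minimum)
```

A subject who needs twice the minimum number of movements therefore scores 50%, which is the threshold separating the two groups of subjects in the results.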

Table 4.

Success rate comparison between subjects.

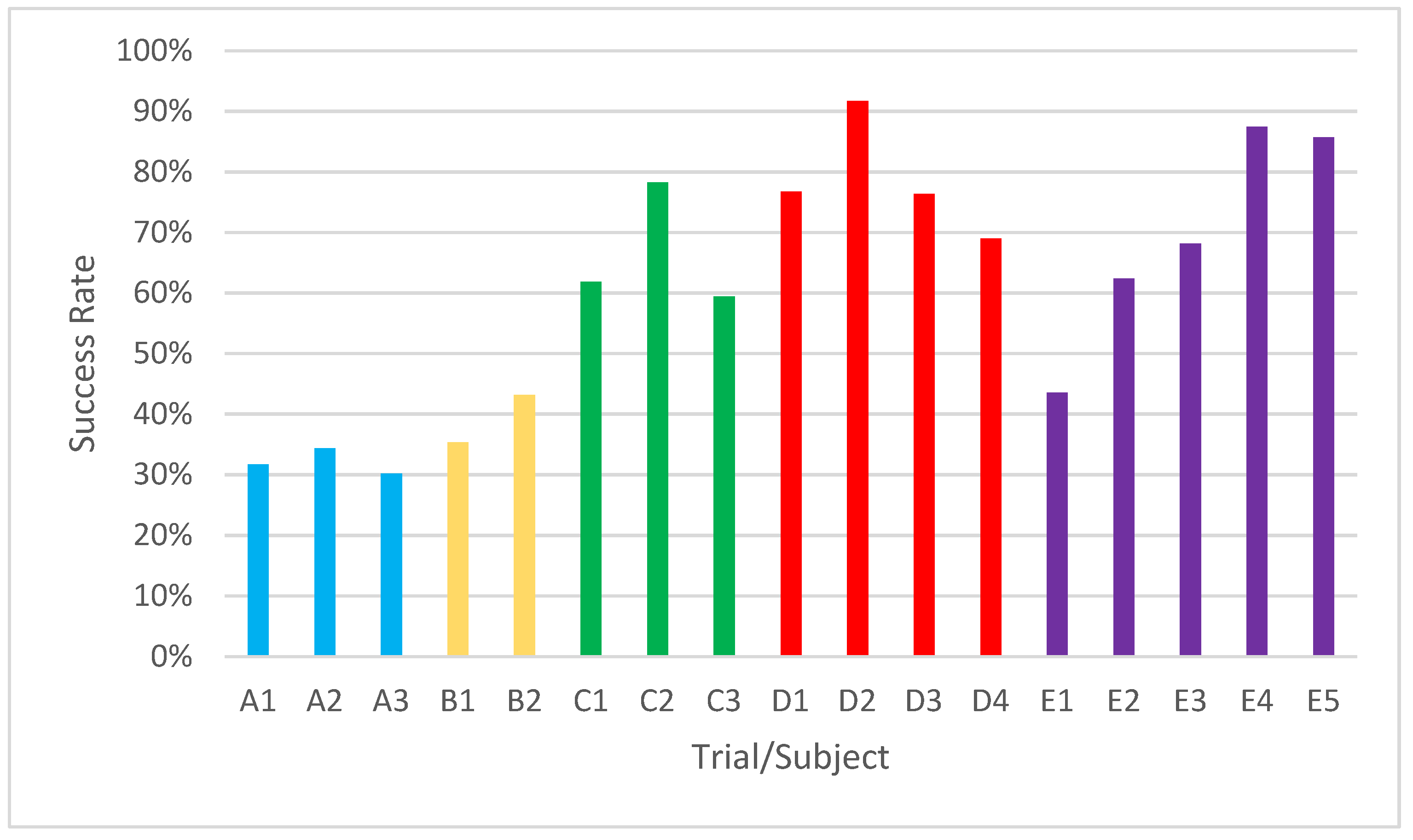

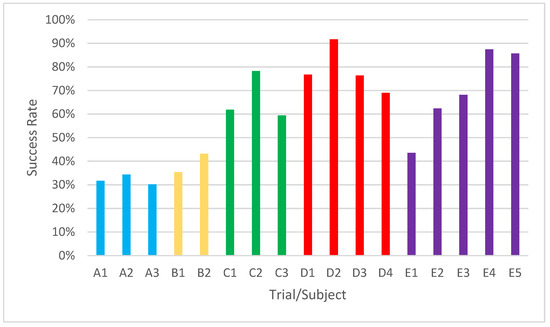

Figure 17 shows the percentage of movements calculated with respect to the theoretical minimum number of movements. Subjects A and B did not have a good performance. Their percentage for each attempt was below 50%. Subjects C, D, and E had performances above this value, with subject C achieving a peak performance in one of his attempts of 78.3%, subject D of 91.68% and subject E of 87.5%.

Figure 17.

Percentage of success of each subject for each attempt.

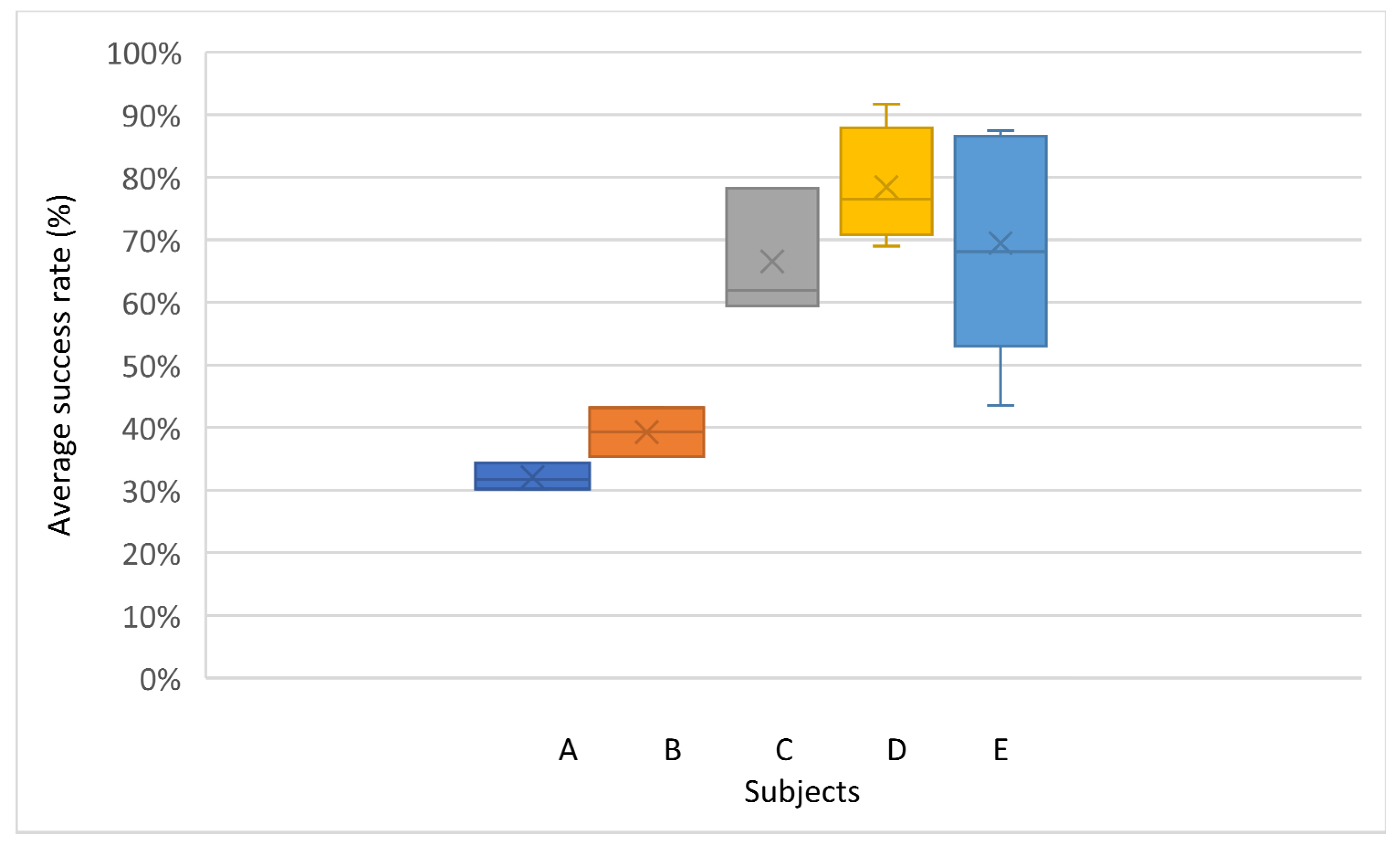

Figure 18 shows that the average success percentage was less than 40% in subjects A and B, between 60% and 70% for subjects C and E, and above 70% for subject D.

Figure 18.

Distribution and average percentage of success of each subject.

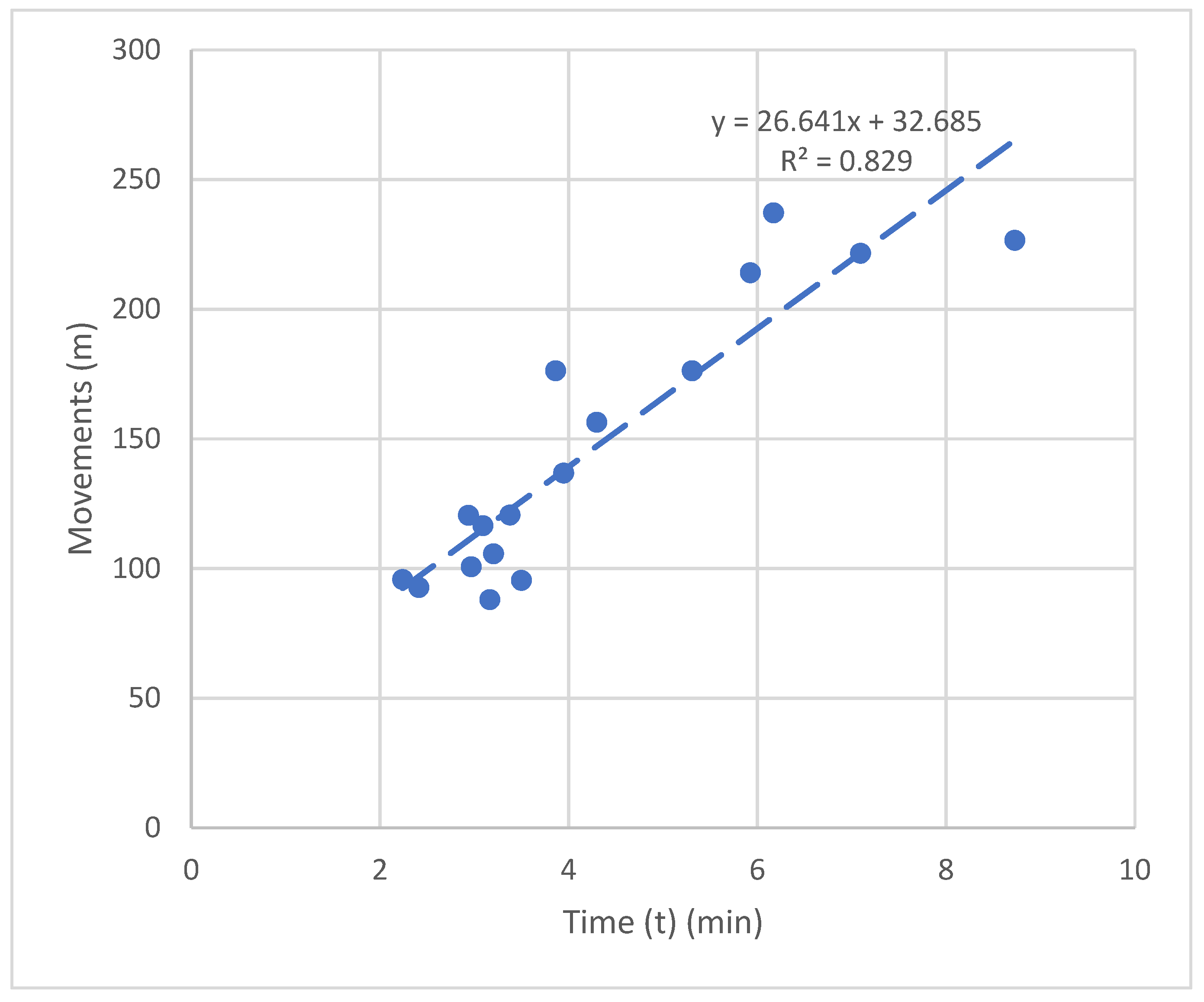

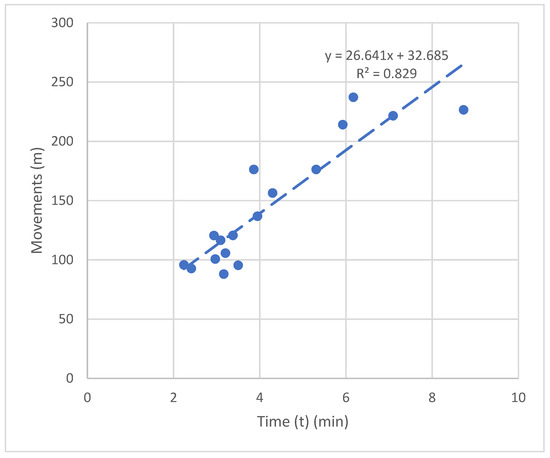

In Figure 19, the number of movements performed is displayed as a function of the time spent in completing the task.

Figure 19.

Relationship between time and the number of total movements completed.

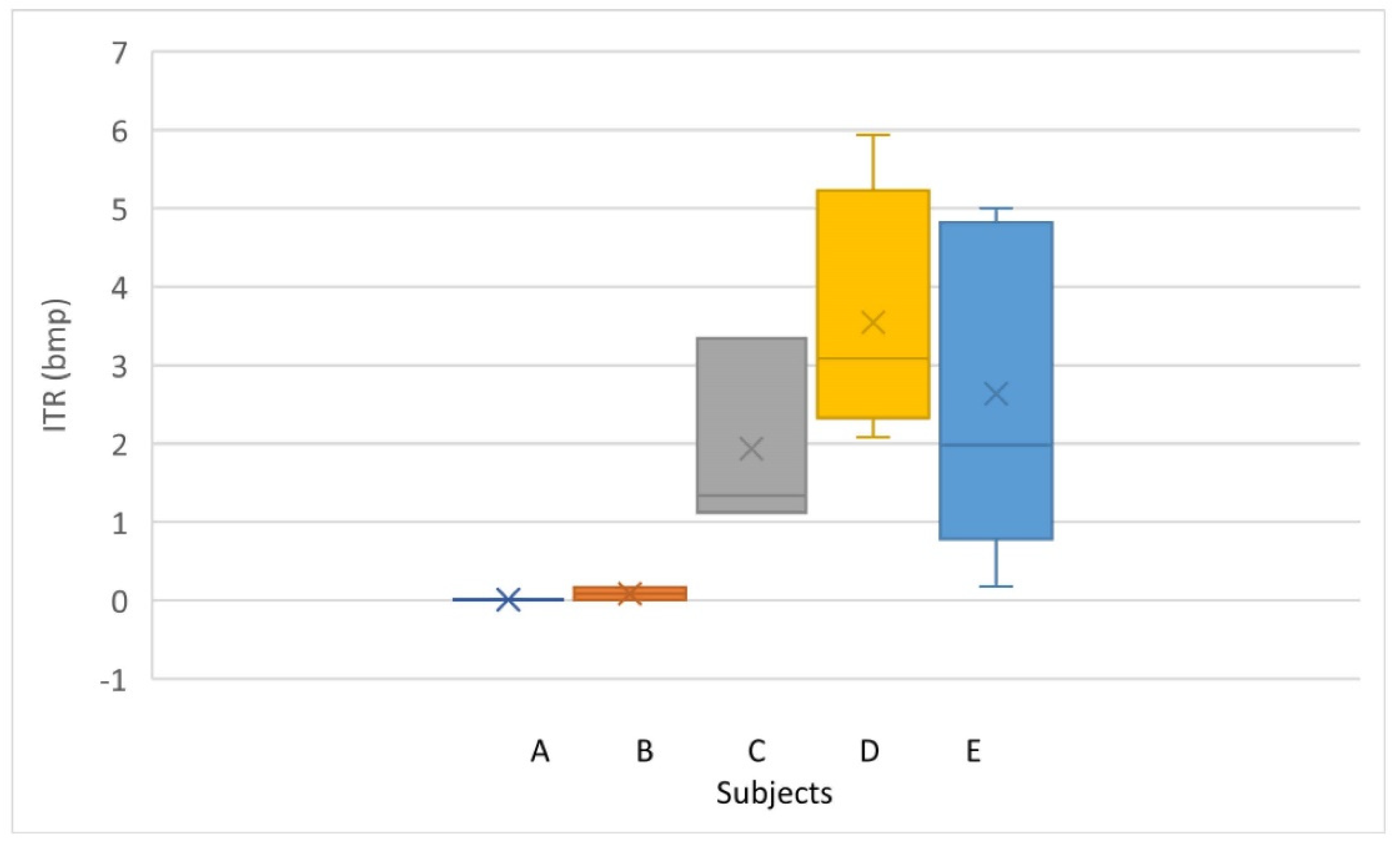

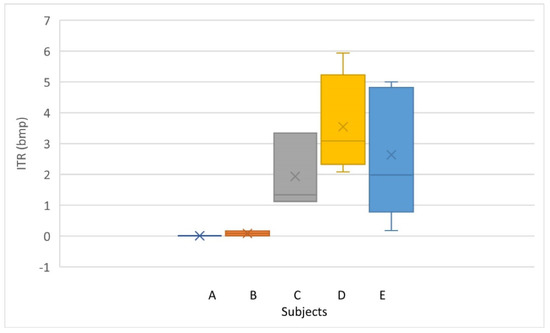

The information transfer rate (ITR) was used to evaluate the performance of the BCI system [8,133]. The calculation was made according to Equation (2), where T is equal to the stimulus time (7 s) plus the gaze change time (4 s); N is the number of stimuli, which in this case was three, and P is the precision of the classification (Table 4).
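The expression of Equation (2) is not reproduced in the text above. Assuming it is the standard Wolpaw definition, B = log2(N) + P·log2(P) + (1 − P)·log2((1 − P)/(N − 1)) bits per selection, scaled by 60/T to obtain bit/min, the calculation can be sketched as follows. The function name is hypothetical.

```python
import math


def itr_bits_per_min(p, n=3, t_seconds=11.0):
    """Wolpaw information transfer rate in bit/min.

    p: selection accuracy in (0, 1]; n: number of targets;
    t_seconds: time per selection (7 s stimulus + 4 s gaze change).
    """
    bits = math.log2(n)
    if 0.0 < p < 1.0:
        bits += p * math.log2(p) + (1 - p) * math.log2((1 - p) / (n - 1))
    elif p != 1.0:
        raise ValueError("p must be in (0, 1]")
    return bits * 60.0 / t_seconds


print(round(itr_bits_per_min(0.9168), 2))  # 5.94
```

With P = 91.68%, N = 3, and T = 11 s, this assumed formula gives 5.94 bit/min, which agrees with the best value reported in this section and supports the assumption.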

Table 5 and Figure 20 show the ITR of each subject in each trial, as well as the mean value achieved. The best subject had a classification accuracy of 91.68% (Table 4) with an ITR of 5.94 bit/min.

Table 5.

ITR for every subject and trial.

Figure 20.

Distribution and average ITR of each subject.

4. Discussion

The influence of the spatial filtering stage of the EEG signal with respect to the precision of the classifier has been analyzed. For this, three Laplacian filters [134,135] with the weights indicated in Table 6 have been compared with two CSP filters of two and eight dimensions. The CSP algorithm results in spatial filters that maximize the variance of the EEG signals corresponding to one class and minimize it for the other class [122,136].

Table 6.

Laplacian filter weights.

Table 7 shows the comparison of the spatial filters applied in an LDA classifier. The value obtained is the precision value of the classifier in each subject. From the comparison of the precision measure of the classifier, it can be observed that a significant improvement is obtained with the CSP filters compared to the Laplacian ones. The best response was obtained with a CSP filter of dimension 8, although the difference with respect to the CSP filter of two dimensions is not very significant.

Table 7.

Spatial filter comparison.

The study carried out is compared with other similar studies below. Out of the seventeen reviewed references, this study is the only one that worked with the Enobio interface with eight dry electrodes. The studies on robotic applications acquired signals from the occipital/parietal brain areas. Many interfaces have been used for signal acquisition, such as Enobio (Neuroelectrics) with 32 electrodes [40], Epoc Headset (Emotiv) [51,101,137], Ultracortex (OpenBCI) [45,47], Biosemi [103], Neuracle [46,48], BioRadio (Great Lakes NeuroTechnologies) [39], DSI (Wearable sensing) [42], Mindo 4S (National Chiao Tung University Brain Research Center) [43,138], EEG amplifier Nuamps Express (Neuroscan) [44], and NeuSen W8 (Neuracle) [41]. See Table 8 for a summary.

Table 8.

Robotic application, acquisition device and electrode characteristics.

The references reviewed used a combination of frequencies in the low and medium-range [46,47,48,50,103,137] or in the low range [51,101], while the present study used frequencies in the medium range. This study used a black/white stimulus color as in [51], but with different frequencies, while other studies used stimulus color such as red, blue, and purple, or they used other types of stimuli such as images or names. See Table 9 for a summary.

Table 9.

Stimuli characteristics.

This study presents a preprocessing and feature extraction process similar to [51]. Both studies used Butterworth fourth-order filters, Common Spatial Pattern (CSP) filters and Band Power (BP) extraction. See Table 10 for a summary.

Table 10.

Signal processing characteristics.
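As a rough illustration of the Band Power (BP) extraction step mentioned above, the sketch below computes a log band-power feature from one epoch of a signal that is assumed to have already been band-pass and spatially filtered. The function names, the log(1 + power) compression, the 500 Hz sampling rate, and the synthetic 12 Hz sine epochs are assumptions made for illustration; they are not taken from the processing scenario used in the study.

```python
import math


def log_band_power(epoch):
    """Return log(1 + mean squared amplitude) of one filtered epoch."""
    if not epoch:
        raise ValueError("epoch must contain at least one sample")
    power = sum(x * x for x in epoch) / len(epoch)
    return math.log1p(power)


def sine_epoch(freq_hz, amplitude, fs_hz=500, seconds=1.0):
    """Synthetic stand-in for a filtered EEG epoch (illustrative only)."""
    n_samples = int(fs_hz * seconds)
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / fs_hz)
            for i in range(n_samples)]


# A stronger response at the stimulus frequency gives a larger feature value.
attended = sine_epoch(12.0, amplitude=2.0)
ignored = sine_epoch(12.0, amplitude=0.5)
features = [log_band_power(attended), log_band_power(ignored)]
```

In a pipeline of this kind, one such value per spatially filtered channel and per stimulus frequency would typically form the feature vector passed to the classifier.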

This study used Linear Discriminant Analysis (LDA) as the classifier, while other studies used classifiers such as Support Vector Machines (SVM) [39,42] or Canonical Correlation Analysis (CCA) [43,45]. Although other classifiers reached accuracies of 85.56% to 95.5%, the LDA classifier gave good results for three of the five subjects in this study. Given the task objectives, a classification accuracy above 80% can be considered acceptable. The three subjects who were able to control the BCI reached a peak average accuracy of 86%, within a range of 78.30% to 91.68%.

In subsequent studies, the authors intend to improve the classifier training method by coding a wrapper solution as proposed in [139]. This method consists of explicitly defining, for each fold, which time segments of the signal belong to the training set and which to the test set, and using the same segmentation to train all of the supervised learning components in all the signal processing scenarios. The authors also intend to compare different classifiers in future studies.
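The idea of a fixed, explicit fold segmentation can be sketched as follows. This is a minimal illustration, not the wrapper proposed in [139]: the function name `make_folds` and the choice of contiguous test blocks are assumptions. The point it shows is that the segmentation is computed once and then handed unchanged to every trainable stage (for example, a spatial filter and a classifier), so that no stage is trained on segments that are later used for testing.

```python
def make_folds(n_segments, n_folds):
    """Split segment indices 0..n_segments-1 into contiguous test blocks.

    Returns a list of (train_indices, test_indices) pairs, one per fold.
    """
    if not 2 <= n_folds <= n_segments:
        raise ValueError("need 2 <= n_folds <= n_segments")
    base, extra = divmod(n_segments, n_folds)
    folds, start = [], 0
    for k in range(n_folds):
        # The first `extra` folds receive one additional segment.
        stop = start + base + (1 if k < extra else 0)
        test = list(range(start, stop))
        train = [i for i in range(n_segments) if i < start or i >= stop]
        folds.append((train, test))
        start = stop
    return folds


# Computed once, then reused by every supervised component.
folds = make_folds(n_segments=10, n_folds=5)
for train, test in folds:
    # Hypothetical usage: fit the spatial filter on `train`, fit the
    # classifier on the same `train`, and evaluate both on `test`.
    assert not set(train) & set(test)
```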

The trials were carried out with subjects who had no prior experience with BCI applications, so better results could likely be achieved with perseverance and further trials. This learning process can be observed in Figure 15 for every participant in the study.

The performance of the participants can be affected by several variables of the study, such as the stimulus type and color, the use of LEDs instead of a computer screen to present the intermittent stimulus, or the choice of electrode combination over the occipital cortex. The authors evaluated the effect of some of these parameters in a previous study [114]. That work concluded that both white and red can be used for medium-frequency intermittent stimuli and both green and red for low-frequency stimuli, while at high frequency there are no differences between colors. For this reason, white stimuli were used in this study.

The subjects perceived the task as feasible, although it required concentration. Participants agreed that the application carried out their wishes except on rare occasions, providing a sense of control. Regarding the dry electrodes, all participants agreed that placement was simple and that the pain was bearable.

The main limitations of the present work are related to the use of dry electrodes and the number of participants. The use of wet electrodes can improve the quality of the EEG signals obtained and, consequently, the success rate of the experimental trials. However, the aim of this study was to build a BCI system that is easy to apply, with few electrodes, and comfortable to use, avoiding the nuisance of applying electro-conductive gel. The future success of BCI systems applied to, for instance, assistive robotics or videogames depends on this ease of use, where the user can put on the electrodes without external assistance.

Regarding the subjects, the results showed that although a majority learned and improved over the course of the trials, for 40% of the subjects (two of five) the precision rate was below 50%. This percentage is close to the reported rate of BCI illiteracy [140], in which subjects are unable to use the BCI. In future studies, the authors intend to increase the number of experimental subjects to corroborate the obtained results.

Finally, it is important to continue investigating this paradigm, for example by incorporating new functionalities, other EEG signal analysis algorithms, different stimuli, an embedded application, or LEDs in place of the screen.

5. Conclusions

The SSVEP paradigm was used to guide an industrial robot arm through the analysis and processing of EEG data acquired with an Enobio amplifier with dry electrodes. A cross-platform application was developed in C++. This platform, together with the open-source software Openvibe, can control a Stäubli robot arm model TX60. Additional security controls through the keyboard were implemented.

Five healthy subjects tried the BCI. Two of them were unable to control the BCI device successfully. This proportion agrees with previous results on BCI illiteracy rates [140,141]. For the subjects who were able to control the BCI (subjects C, D, and E), an average success rate of around 71.5% (peak performance average of 86%) was obtained in the application, with an average task completion time of around 3 min. This means that these subjects can perform a different action about every 23 s with a reliability of more than 70% using eight dry EEG electrodes.
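The figures quoted above can be related with a short calculation. The sketch below is only a back-of-the-envelope reading of the reported values: it assumes that the 71.5% success rate can be treated as a per-action probability, which the text does not state explicitly, and the variable and function names are illustrative.

```python
def actions_per_task(task_seconds, seconds_per_action):
    """Number of actions that fit into one task at a fixed action interval."""
    return task_seconds / seconds_per_action


task_seconds = 3 * 60       # "around 3 min" average task completion time
seconds_per_action = 23     # one action about every 23 s
success_rate = 0.715        # "around 71.5% success" (assumed per action)

n_actions = actions_per_task(task_seconds, seconds_per_action)
expected_correct = n_actions * success_rate
print(f"{n_actions:.1f} actions per task, "
      f"about {expected_correct:.1f} carried out as intended")
# prints "7.8 actions per task, about 5.6 carried out as intended"
```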

The black and white colors used for the SSVEP stimulus gave good results, confirming that these colors are acceptable for an experiment such as the one carried out.

The main conclusion of the study is that it is possible to control the robotic arm in real time with a cross-platform application developed in C++, using openly available tools to develop an SSVEP-based BCI. The BCI operates with a reduced number of dry electrodes to facilitate the use of the system.

Author Contributions

Conceptualization, E.Q. and J.D.; methodology, J.D. and E.Q.; software, J.D. and N.C.; validation, J.D. and N.C.; formal analysis, N.C., E.Q. and E.G.; investigation, J.D., E.Q., N.C. and E.G.; resources, E.Q., N.C. and E.G.; data curation, J.D.; writing—original draft preparation, J.D. and E.Q.; writing—review and editing, E.Q. and N.C.; supervision, E.Q. and E.G.; project administration, E.Q. and E.G.; funding acquisition, E.Q. and E.G. All authors have read and agreed to the published version of the manuscript.

Funding

Funding for open access charge: Universitat Politècnica de València.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this paper:

| BCI | Brain-computer interface |
| CSP | Common spatial patterns filter |
| EEG | Electroencephalography |
| EMG | Electromyography |
| GUI | Graphical user interface |
| ITR | Information transfer rate |
| LCD | Liquid crystal display |
| LDA | Linear discriminant analysis |
| mVEP | Motion-onset visual evoked potential |
| MI | Motor imagery |
| P300 | Event-related potential component |
| SPSS | Statistical Package for the Social Sciences |
| SSVEP | Steady-state visual evoked potentials |
| TCP/IP | Transmission Control Protocol/Internet Protocol |
| UDP | User datagram protocol |
| VEP | Visual evoked potential |
| VRPN | Virtual reality peripheral network |

References

- Lotte, F.; Nam, C.S.; Nijholt, A. Introduction: Evolution of Brain-Computer Interfaces. In Brain-Computer Interface Handbook: Technological and Theoretical Advances; Nam, C.S., Nijholt, A., Lotte, F., Eds.; CRC Press: Oxford, UK, 2018; pp. 1–8. ISBN 9781351231954.

- Wolpaw, J.R.; Wolpaw, E.W. Brain-Computer Interfaces: Something New under the Sun. Brain-Comput. Interfaces Princ. Pract. 2012, 3, 123–125.

- Klein, E.; Nam, C.S. Neuroethics and Brain-Computer Interfaces (BCIs). Brain-Comput. Interfaces 2016, 3, 123–125.

- Nijholt, A.; Nam, C.S. Arts and Brain-Computer Interfaces (BCIs). Brain-Comput. Interfaces 2015, 2, 57–59.

- Schalk, G.; Brunner, P.; Gerhardt, L.A.; Bischof, H.; Wolpaw, J.R. Brain-Computer Interfaces (BCIs): Detection Instead of Classification. J. Neurosci. Methods 2008, 167, 51–62.

- Lotte, F. A Tutorial on EEG Signal Processing Techniques for Mental State Recognition in Brain-Computer Interfaces. In Guide to Brain-Computer Music Interfacing; Miranda, E.R., Castet, J., Eds.; Springer: London, UK, 2014; ISBN 978-1-4471-6583-5.

- Nicolas-Alonso, L.F.; Gomez-Gil, J. Brain Computer Interfaces, a Review. Sensors 2012, 12, 1211–1279.

- Wolpaw, J.R.; Birbaumer, N.; McFarland, D.J.; Pfurtscheller, G.; Vaughan, T.M. Brain-Computer Interfaces for Communication and Control. Clin. Neurophysiol. 2002, 113, 767–791.

- Vidal, J.J. Toward Direct Brain-Computer Communication. Annu. Rev. Biophys. Bioeng. 1973, 2, 157–180.

- Nam, C.S.; Choi, I.; Wadeson, A.; Whang, M. Brain Computer Interface. An Emerging Interaction Technology. In Brain Computer Interfaces Handbook: Technological and Theoretical Advances; Pergamon Press: Oxford, UK, 2018; pp. 11–52.

- Placidi, G.; Petracca, A.; Spezialetti, M.; Iacoviello, D. A Modular Framework for EEG Web Based Binary Brain Computer Interfaces to Recover Communication Abilities in Impaired People. J. Med. Syst. 2016, 40, 34.

- Tang, J.; Xu, M.; Han, J.; Liu, M.; Dai, T.; Chen, S.; Ming, D. Optimizing SSVEP-Based BCI System towards Practical High-Speed Spelling. Sensors 2020, 20, 4186.

- Mannan, M.M.N.; Kamran, M.A.; Kang, S.; Choi, H.S.; Jeong, M.Y. A Hybrid Speller Design Using Eye Tracking and SSVEP Brain–Computer Interface. Sensors 2020, 20, 891.

- Zhou, Z.; Yin, E.; Liu, Y.; Jiang, J.; Hu, D. A Novel Task-Oriented Optimal Design for P300-Based Brain-Computer Interfaces. J. Neural Eng. 2014, 11, 56003.

- Collinger, J.L.; Wodlinger, B.; Downey, J.E.; Wang, W.; Tyler-Kabara, E.C.; Weber, D.J.; McMorland, A.J.C.; Velliste, M.; Boninger, M.L.; Schwartz, A.B. High-Performance Neuroprosthetic Control by an Individual with Tetraplegia. Lancet 2013, 381, 557–564.

- Kai, K.A.; Guan, C.; Sui, G.C.K.; Beng, T.A.; Kuah, C.; Wang, C.; Phua, K.S.; Zheng, Y.C.; Zhang, H. A Clinical Study of Motor Imagery-Based Brain-Computer Interface for Upper Limb Robotic Rehabilitation. In Proceedings of the 31st Annual International Conference of the IEEE Engineering in Medicine and Biology Society: Engineering the Future of Biomedicine, Minneapolis, MN, USA, 3–6 September 2009; pp. 5981–5984.

- Meng, J.; Zhang, S.; Bekyo, A.; Olsoe, J.; Baxter, B.; He, B. Noninvasive Electroencephalogram Based Control of a Robotic Arm for Reach and Grasp Tasks. Sci. Rep. 2016, 6, 38565.

- Hortal, E.; Planelles, D.; Costa, A.; Iáñez, E.; Úbeda, A.; Azorín, J.M.; Fernández, E. SVM-Based Brain-Machine Interface for Controlling a Robot Arm through Four Mental Tasks. Neurocomputing 2015, 151, 116–121.

- Quiles, E.; Suay, F.; Candela, G.; Chio, N.; Jiménez, M.; Álvarez-kurogi, L. Low-Cost Robotic Guide Based on a Motor Imagery Brain–Computer Interface for Arm Assisted Rehabilitation. Int. J. Environ. Res. Public Health 2020, 17, 699.

- Iturrate, I.; Antelis, J.M.; Kübler, A.; Minguez, J. A Noninvasive Brain-Actuated Wheelchair Based on a P300 Neurophysiological Protocol and Automated Navigation. IEEE Trans. Robot. 2009, 25, 614–627.

- Singla, R.; Khosla, A.; Jha, R. Influence of Stimuli Color on Steady-State Visual Evoked Potentials Based BCI Wheelchair Control. J. Biomed. Sci. Eng. 2013, 06, 1050–1055.

- Lamti, H.; Khelifa, M.M.; Alimi, A.; Gorce, P. Effect of Fatigue on Ssvep During Virtual Wheelchair Navigation. J. Theor. Appl. Inf. Technol. 2014, 65, 1–10.

- Piña-Ramirez, O.; Valdes-Cristerna, R.; Yanez-Suarez, O. Scenario Screen: A Dynamic and Context Dependent P300 Stimulator Screen Aimed at Wheelchair Navigation Control. Comput. Math. Methods Med. 2018, 2018, 7108906.

- Edlinger, G.; Holzner, C.; Guger, C.; Groenegress, C.; Slater, M. Brain-Computer Interfaces for Goal Orientated Control of a Virtual Smart Home Environment. In Proceedings of the 4th International IEEE/EMBS Conference on Neural Engineering, Antalya, Turkey, 29 April–2 May 2009; pp. 463–465.

- Yang, D.; Nguyen, T.-H.; Chung, W.-Y. A Bipolar-Channel Hybrid Brain-Computer Interface System for Home Automation Control Utilizing Steady-State Visually Evoked Potential and Eye-Blink Signals. Sensors 2020, 20, 5474.

- Bevilacqua, V.; Tattoli, G.; Buongiorno, D.; Loconsole, C.; Leonardis, D.; Barsotti, M.; Frisoli, A.; Bergamasco, M. A Novel BCI-SSVEP Based Approach for Control of Walking in Virtual Environment Using a Convolutional Neural Network. In Proceedings of the 2014 International Joint Conference on Neural Networks, Beijing, China, 6–11 July 2014; pp. 4121–4128.

- Pfurtscheller, G.; Flotzinger, D.; Kalcher, J. Brain-Computer Interface-a New Communication Device for Handicapped Persons. J. Microcomput. Appl. 1993, 16, 293–299.

- Braun, N.; Kranczioch, C.; Liepert, J.; Dettmers, C.; Zich, C.; Büsching, I.; Debener, S. Motor Imagery Impairment in Postacute Stroke Patients. Neural Plast. 2017, 2017, 4653256.

- Hasbulah, M.H.; Jafar, F.A.; Nordin, M.H.; Yokota, K. Brain-Controlled for Changing Modular Robot Configuration by Employing Neurosky’s Headset. Int. J. Adv. Comput. Sci. Appl. 2019, 10, 617.

- Herath, H.M.K.K.M.B.; de Mel, W.R. Controlling an Anatomical Robot Hand Using the Brain-Computer Interface Based on Motor Imagery. Adv. Hum-Comput. Interact. 2021, 2021, 5515759.

- Gillini, G.; di Lillo, P.; Arrichiello, F. An Assistive Shared Control Architecture for a Robotic Arm Using EEG-Based BCI with Motor Imagery. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Prague, Czech Republic, 27 September–1 October 2021; pp. 4132–4137.

- Jochumsen, M.; Janjua, T.A.M.; Arceo, J.C.; Lauber, J.; Buessinger, E.S.; Kæseler, R.L. Induction of Neural Plasticity Using a Low-Cost Open Source Brain-Computer Interface and a 3D-Printed Wrist Exoskeleton. Sensors 2021, 21, 572.

- Shen, X.; Wang, X.; Lu, S.; Li, Z.; Shao, W.; Wu, Y. Research on the Real-Time Control System of Lower-Limb Gait Movement Based on Motor Imagery and Central Pattern Generator. Biomed. Signal. Processing Control 2022, 71, 102803.

- Krusienski, D.J.; Sellers, E.W.; McFarland, D.J.; Vaughan, T.M.; Wolpaw, J.R. Toward Enhanced P300 Speller Performance. J. Neurosci. Methods 2008, 167, 15–21.

- Malki, A.; Yang, C.; Wang, N.; Li, Z. Mind Guided Motion Control of Robot Manipulator Using EEG Signals. In Proceedings of the 2015 5th International Conference on Information Science and Technology, Sanya, Hainan, 14–15 November 2015.

- Middendorf, M.; McMillan, G.; Calhoun, G.; Jones, K.S. Brain-Computer Interfaces Based on the Steady-State Visual-Evoked Response. IEEE Trans. Rehabil. Eng. 2000, 8, 211–214.

- Sandesh, R.S.; Venkatesan, N. Steady State VEP-Based BCI to Control a Five-Digit Robotic Hand Using LabVIEW. Int. J. Biomed. Eng. Technol. 2022, 38, 109–127.

- Wang, Z.; Yang, B.; Wang, W.; Zhang, D.; Gu, X. Brain-Controlled Robotic Arm Grasping System Based on Adaptive TRCA. In Proceedings of the ACM International Conference Proceeding Series; Association for Computing Machinery: New York, NY, USA, 2021; pp. 363–367.

- Karunasena, S.P.; Ariyarathna, D.C.; Ranaweera, R.; Wijayakulasooriya, J.; Kim, K.; Dassanayake, T. Single-Channel EEG SSVEP-Based BCI for Robot Arm Control. In Proceedings of the 2021 IEEE Sensors Applications Symposium, SAS 2021, Sundsvall, Sweden, 2–4 August 2021.

- Sharma, K.; Maharaj, S.K. Continuous and Spontaneous Speed Control of a Robotic Arm Using SSVEP. In Proceedings of the 9th IEEE International Winter Conference on Brain-Computer Interface, Gangwon, Korea, 22–24 February 2021.

- Chen, Z.; Li, J.; Liu, Y.; Tang, P. A Flexible Meal Aid Robotic Arm System Based on SSVEP. In Proceedings of the 2020 IEEE International Conference on Progress in Informatics and Computing, Shanghai, China, 18–20 December 2020.

- Zhang, D.; Yang, B.; Gao, S.; Gu, X. Brain-Controlled Robotic Arm Based on Adaptive FBCCA. In Proceedings of the Communications in Computer and Information Science, Salta, Argentina, 4–8 October 2021; Volume 1369.

- Lin, C.T.; Chiu, C.Y.; Singh, A.K.; King, J.T.; Ko, L.W.; Lu, Y.C.; Wang, Y.K. A Wireless Multifunctional SSVEP-Based Brain-Computer Interface Assistive System. IEEE Trans. Cogn. Dev. Syst. 2019, 11, 375–383.

- Kaseler, R.L.; Leerskov, K.; Struijk, L.N.S.A.; Dremstrup, K.; Jochumsen, M. Designing a Brain Computer Interface for Control of an Assistive Robotic Manipulator Using Steady State Visually Evoked Potentials. In Proceedings of the IEEE International Conference on Rehabilitation Robotics, Toronto, ON, Canada, 24–28 June 2019; Volume 2019.

- Tabbal, J.; Mechref, K.; El-Falou, W. Brain Computer Interface for Smart Living Environment. In Proceedings of the 2018 9th Cairo International Biomedical Engineering Conference, Hilton Pyramids, Egypt, 20–22 December 2018.

- Chen, X.; Zhao, B.; Wang, Y.; Xu, S.; Gao, X. Control of a 7-DOF Robotic Arm System with an SSVEP-Based BCI. Int. J. Neural Syst. 2018, 28, 1850018.

- Pelayo, P.; Murthy, H.; George, K. Brain-Computer Interface Controlled Robotic Arm to Improve Quality of Life. In Proceedings of the 2018 IEEE International Conference on Healthcare Informatics (ICHI), New York, NY, USA, 4–7 June 2018; pp. 398–399.

- Chen, X.; Zhao, B.; Gao, X. Noninvasive Brain-Computer Interface Based High-Level Control of a Robotic Arm for Pick and Place Tasks. In Proceedings of the ICNC-FSKD 2018, 14th International Conference on Natural Computation, Fuzzy Systems and Knowledge Discovery, Huangshan, China, 28–30 July 2018; pp. 1193–1197.

- van Erp, J.; Lotte, F.; Tangermann, M. Brain-Computer Interfaces: Beyond Medical Applications. Comput. (Long Beach Calif) 2012, 45, 26–34.

- Al-maqtari, M.T.; Taha, Z.; Moghavvemi, M. Steady State-VEP Based BCI for Control Gripping of a Robotic Hand. In Proceedings of the International Conference for Technical Postgraduates 2009, TECHPOS 2009, Kuala Lumpur, Malaysia, 14–15 December 2009; pp. 1–3.

- Meattini, R.; Scarcia, U.; Melchiorri, C.; Belpaeme, T. Gestural Art: A Steady State Visual Evoked Potential (SSVEP) Based Brain Computer Interface to Express Intentions through a Robotic Hand. In Proceedings of the 23rd IEEE International Symposium on Robot and Human Interactive Communication, Edinburgh, UK, 25–29 August 2014; pp. 211–216.

- Çaǧlayan, O.; Arslan, R.B. Robotic Arm Control with Brain Computer Interface Using P300 and SSVEP. In Proceedings of the IASTED International Conference on Biomedical Engineering, Innsbruck, Austria, 13–15 February 2013; pp. 141–144.

- Lüth, T.; Ojdanić, D.; Friman, O.; Prenzel, O.; Gräser, A. Low Level Control in a Semi-Autonomous Rehabilitation Robotic System via a Brain-Computer Interface. In Proceedings of the 2007 IEEE 10th the International Conference on Rehabilitation Robotics, Noordwijk, The Netherlands, 12–15 June 2007; pp. 721–728.

- Rakshit, A.; Ghosh, S.; Konar, A.; Pal, M. A Novel Hybrid Brain-Computer Interface for Robot Arm Manipulation Using Visual Evoked Potential. In Proceedings of the 2017 9th International Conference on Advances in Pattern Recognition, ICAPR 2017, Bangalore, India, 27–30 December 2017; pp. 404–409.

- Gao, Q.; Dou, L.; Belkacem, A.N.; Chen, C. Noninvasive Electroencephalogram Based Control of a Robotic Arm for Writing Task Using Hybrid BCI System. BioMed. Res. Int. 2017, 2017, 8316485.

- Peyton, G.; Hoehler, R.; Pantanowitz, A. Hybrid BCI for Controlling a Robotic Arm over an IP Network. In IFMBE Proceedings; Lacković, I., Vasic, D., Eds.; Springer International Publishing: Cham, Switzerland, 2015; Volume 45, pp. 517–520. ISBN 978-3-319-11127-8.

- Achic, F.; Montero, J.; Penaloza, C.; Cuellar, F. Hybrid BCI System to Operate an Electric Wheelchair and a Robotic Arm for Navigation and Manipulation Tasks. Proc. IEEE Workshop Adv. Robot. Its Soc. Impacts ARSO 2016, 2016, 249–254.

- Zhu, Y.; Li, Y.; Lu, J.; Li, P. A Hybrid BCI Based on SSVEP and EOG for Robotic Arm Control. Front. Neurorobot. 2020, 14, 583641.

- Postelnicu, C.C.; Girbacia, F.; Voinea, G.D.; Boboc, R. Towards Hybrid Multimodal Brain Computer Interface for Robotic Arm Command. Int. Conf. Hum-Comput. Interact. 2019, 11580, 460–471.

- Chen, X.; Zhao, B.; Wang, Y.; Gao, X. Combination of High-Frequency SSVEP-Based BCI and Computer Vision for Controlling a Robotic Arm. J. Neural. Eng. 2019, 16, 26012.

- Choi, W.-S.; Yeom, H.-G. Studies to Overcome Brain–Computer Interface Challenges. Appl. Sci. 2022, 12, 2598.

- Huggins, J.E.; Guger, C.; Allison, B.; Anderson, C.W.; Batista, A.; Brouwer, A.-M.; Brunner, C.; Chavarriaga, R.; Fried-Oken, M.; Gunduz, A.; et al. Workshops of the Fifth International Brain-Computer Interface Meeting: Defining the Future. Brain-Comput. Interfaces 2014, 1, 27–49.

- Mihajlovic, V.; Grundlehner, B.; Vullers, R.; Penders, J. Wearable, Wireless EEG Solutions in Daily Life Applications: What Are We Missing? IEEE J. Biomed. Health Inform. 2015, 19, 6–21.

- Lightbody, G.; Galway, L.; McCullagh, P. The Brain Computer Interface: Barriers to Becoming Pervasive. In Pervasive Health. Human–Computer Interaction Series; Holzinger, A., Ziefle, M., Röcker, C., Eds.; Routledge: London, UK, 2014; pp. 101–129.

- Silva, C.R.; de Araújo, R.S.; Albuquerque, G.; Moioli, R.C.; Brasil, F.L. Interfacing Brains to Robotic Devices: A VRPN Communication Application. Braz. Congr. Biomed. Eng. 2019, 70, 597–603.

- Olchawa, R.; Man, D. Development of the BCI Device Controlling C++ Software, Based on Existing Open Source Projects. Control. Comput. Eng. Neurosci. 2021, 1362, 60–71.

- Aljalal, M.; Ibrahim, S.; Djemal, R.; Ko, W. Comprehensive Review on Brain-Controlled Mobile Robots and Robotic Arms Based on Electroencephalography Signals. Intell. Serv. Robot. 2020, 13, 539–563.

- Leeb, R.; Friedman, D.; Müller-Putz, G.R.; Scherer, R.; Slater, M.; Pfurtscheller, G. Self-Paced (Asynchronous) BCI Control of a Wheelchair in Virtual Environments: A Case Study with a Tetraplegic. Comput. Intell. Neurosci. 2007, 2007, 79642.

- Yendrapalli, K.; Tammana, S.S.N.P.K. The Brain Signal Detection for Controlling the Robot. Int. J. Sci. Eng. Technol. 2014, 3, 1280–1283.

- Delorme, A.; Makeig, S. EEGLAB: An Open Source Toolbox for Analysis of Single-Trial EEG Dynamics. J. Neurosci. Methods 2004, 134, 9–21.

- Ortner, R.; Allison, B.Z.; Korisek, G.; Gaggl, H.; Pfurtscheller, G. An SSVEP BCI to Control a Hand Orthosis for Persons with Tetraplegia. IEEE Trans. Neural Syst. Rehabil. Eng. 2011, 19, 1–5.

- Cauvery, N.K. Brain Controlled Wheelchair for Disabled. Int. J. Comput. Sci. Eng. Inf. Technol. Res. 2014, 4, 42075.

- Renard, Y.; Lotte, F.; Gibert, G.; Congedo, M.; Maby, E.; Delannoy, V.; Bertrand, O.; Lécuyer, A. OpenViBE: An Open-Source Software Platform to Design, Test, and Use Brain–Computer Interfaces in Real and Virtual Environments. Presence 2010, 19, 35–53.

- Singala, K.V.; Trivedi, K.R. Connection Setup of Openvibe Tool with EEG Headset, Parsing and Processing of EEG Signals. In Proceedings of the International Conference on Communication and Signal Processing, Melmaruvathur, India, 6–8 April 2016.

- Minin, A.; Syskov, A.; Borisov, V. Hardware-Software Integration for EEG Coherence Analysis. In Proceedings of the 2019 Ural Symposium on Biomedical Engineering, Radioelectronics and Information Technology, Yekaterinburg, Russia, 25–26 April 2019.

- Schalk, G.; Mcfarland, D.J.; Hinterberger, T.; Birbaumer, N.; Wolpaw, J.R. BCI2000: A General-Purpose Brain-Computer Interface (BCI) System. IEEE Trans. Biomed. Eng. 2004, 51, 1034–1043.

- Escolano, C.; Antelis, J.M.; Minguez, J. A Telepresence Mobile Robot Controlled with a Noninvasive Brain-Computer Interface. IEEE Trans. Syst. Man Cybern. Part B Cybern. 2012, 42, 793–804.

- Appriou, A.; Pillette, L.; Trocellier, D.; Dutartre, D.; Cichocki, A.; Lotte, F. BioPyC, an Open-Source Python Toolbox for Offline Electroencephalographic and Physiological Signals Classification. Sensors 2021, 21, 5740.

- Perego, P.; Maggi, L.; Parini, S.; Andreoni, G. BCI++: A New Framework for Brain Computer Interface Application. In Proceedings of the 18th International Conference on Software Engineering and Data Engineering 2009, SEDE 2009, Washington, DC, USA, 22–24 June 2009; pp. 37–41.

- Durka, P.J.; Kus, R.; Zygierewicz, J.; Michalska, M.; Milanowski, P.; Labecki, M.; Spustek, T.; Laszuk, D.; Duszyk, A.; Kruszynski, M. User-Centered Design of Brain-Computer Interfaces: OpenBCI.Pl and BCI Appliance. Bull. Pol. Acad. Sci. Technol. Sci. 2012, 60, 427–431.

- Delorme, A.; Mullen, T.; Kothe, C.; Acar, Z.A.; Bigdely-Shamlo, N.; Vankov, A.; Makeig, S. EEGLAB, SIFT, NFT, BCILAB, and ERICA: New Tools for Advanced EEG Processing. Comput. Intell. Neurosci. 2011, 2011, 130714.

- Toledo-Peral, C.L.; Gutiérrez-Martínez, J.; Mercado-Gutiérrez, J.A.; Martín-Vignon-Whaley, A.I.; Vera-Hernández, A.; Leija-Salas, L. SEMG Signal Acquisition Strategy towards Hand FES Control. J. Healthc. Eng. 2018, 2018, 2350834.

- Li, C.; Jia, T.; Xu, Q.; Ji, L.; Pan, Y. Brain-Computer Interface Channel-Selection Strategy Based on Analysis of Event-Related Desynchronization Topography in Stroke Patients. J. Healthc. Eng. 2019, 2019, 3817124.

- Tiraboschi, M.; Avanzani, F.; Boccignone, G. Listen to Your Mind’s (He)Art: A System for Affective Music Generation Via Brain-Computer Interface. In Proceedings of the 18th Sound and Music Computing Conference, Online, 29 June–1 July 2021; pp. 1–8.

- Ghoslin, B.; Nandikolla, V.K. Design of Omnidirectional Robot Using Hybrid Brain Computer Interface. In Proceedings of the ASME International Mechanical Engineering Congress and Exposition, Virtual, 16–19 November 2020; Volume 5: Biomedical and Biotechnology. p. V005T05A070.

- Wannajam, S.; Thamviset, W. Brain Wave Pattern Recognition of Two-Task Imagination by Using Single-Electrode EEG. In Advances in Intelligent Systems and Computing; Springer: Berlin/Heidelberg, Germany, 2019; Volume 769.

- Tariq, M.; Trivailo, P.M.; Simic, M. Motor Imagery Based EEG Features Visualization for BCI Applications. In Procedia Computer Science; Elsevier: Amsterdam, The Netherlands, 2018; Volume 126.

- Barros, E.S.; Neto, N. Classification Procedure for Motor Imagery EEG Data. In Proceedings of the Augmented Cognition: Intelligent Technologies, 12th International Conference, AC 2018, Held as Part of HCI International 2018, Las Vegas, NV, USA, 15–20 July 2018; Schmorrow, D.D., Fidopiastis, C.M., Eds.; Springer: Cham, Switzerland, 2018; Volume 10915, pp. 201–211.

- Plechawska-Wójcik, M.; Kaczorowska, M.; Michalik, B. Comparative Analysis of Two-Group Supervised Classification Algorithms in the Study of P300-Based Brain-Computer Interface. MATEC Web Conf. 2019, 252, 3010.

- Fouad, I.A. A Robust and Reliable Online P300-Based BCI System Using Emotiv EPOC + Headset. J. Med. Eng. Technol. 2021, 45, 94–114.

- Izadi, B.; Ghaedi, A.; Ghasemian, M. Neuropsychological Responses of Consumers to Promotion Strategies and the Decision to Buy Sports Products. Asia Pac. J. Mark. Logist. 2021, 34, 1203–1221.

- Babiker, A.; Faye, I. A Hybrid EMD-Wavelet EEG Feature Extraction Method for the Classification of Students’ Interest in the Mathematics Classroom. Comput. Intell. Neurosci. 2021, 2021, 6617462.

- Peiqing, H. Multidimensional State Data Reduction and Evaluation of College Students’ Mental Health Based on SVM. J. Math. 2022, 2022, 4961203.

- Teixeira, A.R.; Rodrigues, I.; Gomes, A.; Abreu, P.; Rodríguez-Bermúdez, G. Using Brain Computer Interaction to Evaluate Problem Solving Abilities. In Lecture Notes in Computer; Springer: Berlin/Heidelberg, Germany, 2021; Volume 12776.

- Rajendran, V.G.; Jayalalitha, S.; Adalarasu, K. EEG Based Evaluation of Examination Stress and Test Anxiety among College Students. IRBM 2021, 1–13.

- Sun, G.; Wen, Z.; Ok, D.; Doan, L.; Wang, J.; Chen, Z.S. Detecting Acute Pain Signals from Human EEG. J. Neurosci. Methods 2021, 347, 108964.

- Zhao, J.; Li, W.; Mao, X.; Li, M. SSVEP-Based Experimental Procedure for Brain-Robot Interaction with Humanoid Robots. J. Vis. Exp. 2015, 2015, 53558.

- Martišius, I.; Damaševičius, R. A Prototype SSVEP Based Real Time BCI Gaming System. Comput. Intell. Neurosci. 2016, 2016, 3861425.

- Gao, X.; Xu, D.; Cheng, M.; Gao, S. A BCI-Based Environmental Controller for the Motion-Disabled. IEEE Trans. Neural Syst. Rehabil. Eng. 2003, 11, 137–140.

- Norcia, A.M.; Appelbaum, L.G.; Ales, J.M.; Cottereau, B.R.; Rossion, B. The Steady State VEP in Research. J. Vis. 2015, 15, 1–46.

- Cáceres, C.A.; Rosário, J.M.; Amaya, D. Approach to Assistive Robotics Based on an EEG Sensor and a 6-DoF Robotic Arm. Int. Rev. Mech. Eng. 2016, 10, 253–260.

- McFarland, D.J.; Wolpaw, J.R. EEG-Based Brain–Computer Interfaces. Curr. Opin. Biomed. Eng. 2017, 4, 194–200.

- Bakardjian, H.; Tanaka, T.; Cichocki, A. Brain Control of Robotic Arm Using Affective Steady-State Visual Evoked Potentials. In Proceedings of the 5th IASTED International Conference Human-Computer Interaction, Maui, HI, USA, 23–25 August 2010; pp. 264–270.

- Herrmann, C.S. Human EEG Responses to 1–100 Hz Flicker: Resonance Phenomena in Visual Cortex and Their Potential Correlation to Cognitive Phenomena. Exp. Brain Res. 2001, 137, 346–353. [Google Scholar] [CrossRef]

- Müller, M.M.; Hillyard, S. Concurrent Recording of Steady-State and Transient Event-Related Potentials as Indices of Visual-Spatial Selective Attention. Clin. Neurophysiol. 2000, 111, 1544–1552. [Google Scholar] [CrossRef]

- Ko, L.; Chikara, R.K.; Lee, Y.; Lin, W. Exploration of User’s Mental State Changes during Performing Brain–Computer Interface. Sensors 2020, 20, 3169. [Google Scholar] [CrossRef] [PubMed]

- Candela, G.; Quiles, E.; Chio, N.; Suay, F. Chapter # 13 Attentional Variables and BCI Performance: Comparing Two Strategies. In Psychology Applications & Developments IV; inSciencePress: Lisboa, Portugal, 2018; pp. 130–139. [Google Scholar]

- Zhu, D.; Bieger, J.; Molina, G.G.; Aarts, R.M. A Survey of Stimulation Methods Used in SSVEP-Based BCIs. Comput. Intell. Neurosci. 2010, 2010, 1–12.

- Staubli, Robots Industriales de 6 Ejes TX60. Available online: https://www.staubli.com/es/ (accessed on 24 June 2022).

- Brunner, P.; Schalk, G. BCI Software. In Brain–Computer Interfaces Handbook Technological and Theoretical Advances; CRC Press: New York, NY, USA, 2018; pp. 321–342. ISBN 9781351231954.

- BCI2000. Available online: http://www.schalklab.org/research/bci2000 (accessed on 16 March 2022).

- Matlab. Available online: https://www.mathworks.com/products/matlab.html (accessed on 16 March 2022).

- Openvibe. Available online: http://openvibe.inria.fr (accessed on 16 March 2022).

- Floriano, A.; Diez, P.F.; Bastos-Filho, T.F. Evaluating the Influence of Chromatic and Luminance Stimuli on SSVEPs from Behind-the-Ears and Occipital Areas. Sensors 2018, 18, 615.

- Duart, X.; Quiles, E.; Suay, F.; Chio, N.; García, E.; Morant, F. Evaluating the Effect of Stimuli Color and Frequency on SSVEP. Sensors 2021, 21, 117.

- Cao, T.; Wan, F.; Mak, P.U.; Mak, P.-I.; Vai, M.I.; Hu, Y. Flashing Color on the Performance of SSVEP-Based Brain-Computer Interfaces. In Proceedings of the 2012 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, San Diego, CA, USA, 28 August–1 September 2012; pp. 1819–1822.

- Tello, R.M.; Müller, S.M.; Bastos, T.F.; Ferreira, A. Evaluation of Different Stimuli Color for an SSVEP-Based BCI. In Proceedings of the XXIV Congresso Brasileiro De Engenharia Biomédica-CBEB 2014, Uberlândia, Brazil, 13–17 October 2014; pp. 25–28.

- Neuroelectrics. Enobio Products. Available online: http://www.neuroelectrics.com (accessed on 16 March 2022).

- Işcan, Z.; Nikulin, V.V. Steady State Visual Evoked Potential (SSVEP) Based Brain-Computer Interface (BCI) Performance under Different Perturbations. PLoS ONE 2018, 13, e0191673.

- Perlstein, W.M.; Cole, M.A.; Larson, M.; Kelly, K.; Seignourel, P.; Keil, A. Steady-State Visual Evoked Potentials Reveal Frontally-Mediated Working Memory Activity in Humans. Neurosci. Lett. 2003, 342, 191–195.

- Jin, J.; Xiao, R.; Daly, I.; Miao, Y.; Wang, X.; Cichocki, A. Internal Feature Selection Method of CSP Based on L1-Norm and Dempster-Shafer Theory. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 4814–4825.

- Lotte, F.; Guan, C. Regularizing Common Spatial Patterns to Improve BCI Designs: Unified Theory and New Algorithms. IEEE Trans. Biomed. Eng. 2011, 58, 355–362.

- Lotte, F.; Bougrain, L.; Cichocki, A.; Clerc, M.; Congedo, M.; Rakotomamonjy, A.; Yger, F. A Review of Classification Algorithms for EEG-Based Brain-Computer Interfaces: A 10 Year Update. J. Neural Eng. 2018, 15, 31005.

- Lotte, F.; Congedo, M. EEG Feature Extraction. In Brain–Computer Interfaces 1; John Wiley & Sons, Ltd.: Hoboken, NJ, USA, 2016; pp. 127–143. ISBN 9781119144977.

- Falzon, O.; Camilleri, K.; Muscat, J. Complex-Valued Spatial Filters for SSVEP-Based BCIs with Phase Coding. IEEE Trans. Biomed. Eng. 2012, 59, 2486–2495.

- Bialas, P.; Milanowski, P. A High Frequency Steady-State Visually Evoked Potential Based Brain Computer Interface Using Consumer-Grade EEG Headset. In Proceedings of the 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, EMBC 2014, Chicago, IL, USA, 26–30 August 2014; pp. 5442–5445.

- Chu, Y.; Zhao, X.; Han, J.; Zhao, Y.; Yao, J. SSVEP Based Brain-Computer Interface Controlled Functional Electrical Stimulation System for Upper Extremity Rehabilitation. In Proceedings of the 2014 IEEE International Conference on Robotics and Biomimetics, Bali, Indonesia, 5–10 December 2014; pp. 2244–2249.

- Touyama, H. A Study on EEG Quality in Physical Movements with Steady-State Visual Evoked Potentials. In Proceedings of the 2010 Annual International Conference of the IEEE Engineering in Medicine and Biology, Buenos Aires, Argentina, 31 August–4 September 2010; pp. 4217–4220.

- Carvalho, S.N.; Costa, T.B.S.; Uribe, L.F.S.; Soriano, D.C.; Yared, G.F.G.; Coradine, L.C.; Attux, R. Comparative Analysis of Strategies for Feature Extraction and Classification in SSVEP BCIs. Biomed. Signal Process. Control 2015, 21, 34–42.

- Fabiani, G.E.; McFarland, D.J.; Wolpaw, J.R.; Pfurtscheller, G. Conversion of EEG Activity into Cursor Movement by a Brain-Computer Interface (BCI). IEEE Trans. Neural Syst. Rehabil. Eng. 2004, 12, 331–338.

- Duda, R.O.; Hart, P.E.; Stork, D.G. Pattern Classification, 2nd ed.; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2016.

- Maby, E. Practical Guide to Performing an EEG Experiment. In Brain-Computer Interfaces 2; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2016; pp. 163–177.

- Speier, W.; Arnold, C.; Pouratian, N. Evaluating True BCI Communication Rate through Mutual Information and Language Models. PLoS ONE 2013, 8, e78432.

- McFarland, D.J.; McCane, L.M.; David, S.V.; Wolpaw, J.R. Spatial Filter Selection for EEG-Based Communication. Electroencephalogr. Clin. Neurophysiol. 1997, 103, 386–394.

- Carvalhaes, C.; de Barros, J.A. The Surface Laplacian Technique in EEG: Theory and Methods. Int. J. Psychophysiol. 2015, 97, 174–188.

- Falzon, O.; Camilleri, K.P. Multi-Colour Stimuli to Improve Information Transfer Rates in SSVEP-Based Brain-Computer Interfaces. In Proceedings of the 2013 6th International IEEE/EMBS Conference on Neural Engineering (NER), San Diego, CA, USA, 6–8 November 2013; pp. 443–446.

- Çiǧ, H.; Hanbay, D.; Tüysüz, F. Robot Arm Control with for SSVEP-Based Brain Signals in Brain Computer Interface|SSVEP Tabanli Beyin Bilgisayar Arayüzü Ile Robot Kol Kontrolü. In Proceedings of the IDAP 2017—International Artificial Intelligence and Data Processing Symposium, Malatya, Turkey, 16–17 September 2017.

- Chiu, C.Y.; Singh, A.K.; Wang, Y.K.; King, J.T.; Lin, C.T. A Wireless Steady State Visually Evoked Potential-Based BCI Eating Assistive System. In Proceedings of the International Joint Conference on Neural Networks, Anchorage, AK, USA, 14–19 May 2017; Volume 2017.

- Openvibe Tutorial. Available online: http://openvibe.inria.fr/tutorial-how-to-cross-validate-better (accessed on 25 June 2022).

- Jeunet, C.; Lotte, F.; N’Kaoua, B. Human Learning for Brain-Computer Interfaces. In Brain-Computer Interfaces 1; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2016; pp. 233–250.

- Vidaurre, C.; Blankertz, B. Towards a Cure for BCI Illiteracy. Brain Topogr. 2010, 23, 194–198.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).