A Temperature Compensation Method for a Six-Axis Force/Torque Sensor Utilizing Ensemble hWOA-LSSVM Based on Improved Trimmed Bagging

Abstract

1. Introduction

2. Basic Algorithm

2.1. Least-Squares Support Vector Machine (LSSVM)

- w is the normal vector of the hyperplane,

- b is the bias that determines the distance between the hyperplane and the origin,

- φ(·) is the mapping function that maps the input data into a higher-dimensional space.

- I is the identity matrix.
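As a minimal sketch of how an LSSVM regressor is commonly fitted, the NumPy code below builds the standard LSSVM linear system with an RBF kernel and solves it for the bias and the dual coefficients. The function names (`rbf_kernel`, `lssvm_fit`, `lssvm_predict`), the parameter names `gamma` (regularization) and `sigma` (kernel width), and the toy sine data are assumptions made for illustration; they are not the notation or the implementation used in this paper.

```python
import numpy as np

def rbf_kernel(A, B, sigma):
    """Gaussian (RBF) kernel matrix between the rows of A and the rows of B."""
    sq = np.sum(A**2, axis=1)[:, None] + np.sum(B**2, axis=1)[None, :] - 2.0 * A @ B.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * sigma**2))

def lssvm_fit(X, y, gamma, sigma):
    """Solve [[0, 1^T], [1, K + I/gamma]] [b; alpha] = [0; y]."""
    n = X.shape[0]
    K = rbf_kernel(X, X, sigma)
    M = np.zeros((n + 1, n + 1))
    M[0, 1:] = 1.0                      # equality constraint: sum(alpha) = 0
    M[1:, 0] = 1.0                      # bias column
    M[1:, 1:] = K + np.eye(n) / gamma   # kernel matrix plus ridge term
    rhs = np.concatenate(([0.0], y))
    sol = np.linalg.solve(M, rhs)
    return sol[0], sol[1:]              # bias b, dual coefficients alpha

def lssvm_predict(X_train, b, alpha, X_new, sigma):
    """Evaluate the fitted model at the rows of X_new."""
    return rbf_kernel(X_new, X_train, sigma) @ alpha + b

# Hypothetical toy data: a noisy sine curve.
rng = np.random.default_rng(0)
X = np.linspace(-1.0, 1.0, 40).reshape(-1, 1)
y = np.sin(3.0 * X[:, 0]) + 0.01 * rng.standard_normal(40)

b, alpha = lssvm_fit(X, y, gamma=100.0, sigma=0.3)
y_hat = lssvm_predict(X, b, alpha, X, sigma=0.3)
```

Training reduces to a single linear solve, so the only quantities left to tune are the two hyperparameters, here called `gamma` and `sigma`.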

2.2. The Hybrid Whale Optimization Algorithm (hWOA)

2.2.1. Whale Optimization Algorithm

- D′ indicates the distance between the search agent and the target (the best position obtained so far),

- b is a random constant that defines the shape of the spiral,

- l is a random number in [−1, 1].
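The position updates of the standard WOA can be sketched as follows. This is an illustration under stated assumptions rather than the authors' code: the coefficients `A` and `C` are drawn as scalars per agent, `b` is fixed to 1.0, the control parameter `a` decreases linearly from 2 to 0, and the `sphere` objective, the bounds, and the population of 20 agents over 30 iterations are hypothetical settings.

```python
import numpy as np

def woa_step(X, best, a, rng, b=1.0):
    """One WOA position update for all agents (rows of X)."""
    n, _ = X.shape
    X_new = np.empty_like(X)
    for i in range(n):
        r1, r2, p = rng.random(3)
        A = 2.0 * a * r1 - a
        C = 2.0 * r2
        if p < 0.5:
            # |A| < 1: encircle the best agent; otherwise explore around a random agent
            target = best if abs(A) < 1.0 else X[rng.integers(n)]
            D = np.abs(C * target - X[i])
            X_new[i] = target - A * D
        else:
            # spiral update around the best position found so far
            l = rng.uniform(-1.0, 1.0)
            D_prime = np.abs(best - X[i])
            X_new[i] = D_prime * np.exp(b * l) * np.cos(2.0 * np.pi * l) + best
    return X_new

def sphere(x):
    """Hypothetical test objective: sum of squares."""
    return float(np.sum(x**2))

rng = np.random.default_rng(1)
lo, hi = -5.0, 5.0
X = rng.uniform(lo, hi, size=(20, 2))
best = min(X, key=sphere).copy()
initial_best = sphere(best)

T_max = 30
for t in range(T_max):
    a = 2.0 * (1.0 - t / T_max)                       # linearly decreasing control parameter
    X = np.clip(woa_step(X, best, a, rng), lo, hi)    # keep agents inside the search space
    cand = min(X, key=sphere)
    if sphere(cand) < sphere(best):
        best = cand.copy()
final_best = sphere(best)
```

Because the best position is replaced only when a candidate improves on it, `final_best` can never exceed `initial_best` in this sketch.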

2.2.2. The Simulated Annealing Algorithm (SA)

Initialization

- is the position of search agent in the ith iteration;

- T and indicate the current temperature and initial maximum temperature, respectively;

- k is a constant in [0, 1] that controls how quickly the temperature decreases.
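The role of the temperature parameters can be illustrated with a small sketch of a simulated annealing acceptance test and cooling step. The geometric schedule (multiplying the temperature by k at each step) and the Metropolis acceptance rule are the textbook choices and are assumptions here, since the exact update used in this paper is not reproduced in this text. The names `accept`, `cool`, and `T0`, and the numeric values, are hypothetical.

```python
import math
import random

def accept(delta, temperature, rng):
    """Metropolis rule: always accept improvements, sometimes accept worse moves."""
    if delta <= 0.0:
        return True
    return rng.random() < math.exp(-delta / temperature)

def cool(temperature, k):
    """Geometric cooling (assumed): the temperature shrinks by the factor k."""
    return k * temperature

rng = random.Random(0)
T0 = 100.0   # hypothetical initial maximum temperature
k = 0.9      # hypothetical cooling constant in [0, 1]

T = T0
schedule = []
for _ in range(5):
    schedule.append(T)
    T = cool(T, k)
```

At a high temperature almost any move is accepted, which helps the search escape local optima. As the temperature falls, worse moves are accepted less and less often.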

Annealing

Termination

2.2.3. Hybridization

| Algorithm 1: Hybrid Whale Optimization Algorithm | |
|---|---|
| Inputs: | Initialize the population of whales , |
| | Initialize the parameters and |
| Outputs: | The optimal position |
| Process: | |
| 1. | Calculate fitness of all search agents |
| 2. | while (t < maximum number of iterations) or (stop condition): |
| 3. | Update p and |
| 4. | for each search agent: |
| 5. | if : |
| 6. | if : |
| 7. | Update the position of the current search agent by Equation (11) |
| 8. | else if : |
| 9. | Select a random search agent |
| 10. | Update the position of the current search agent by Equation (15) |
| 11. | end |
| 12. | else if : |
| 13. | Update the position of the current search agent by Equation (14) |
| 14. | end if |
| 15. | if : |
| 16. | else if : |
| 17. | if : do nothing |
| 18. | else if : |
| 19. | end if |
| 20. | end if |
| 21. | end for |
| 22. | Check if any search agent travels beyond the search space and amend it |
| 23. | |
| 24. | % Update the system temperature by Equation (18) |
| 25. | end while |
| 26. | return |

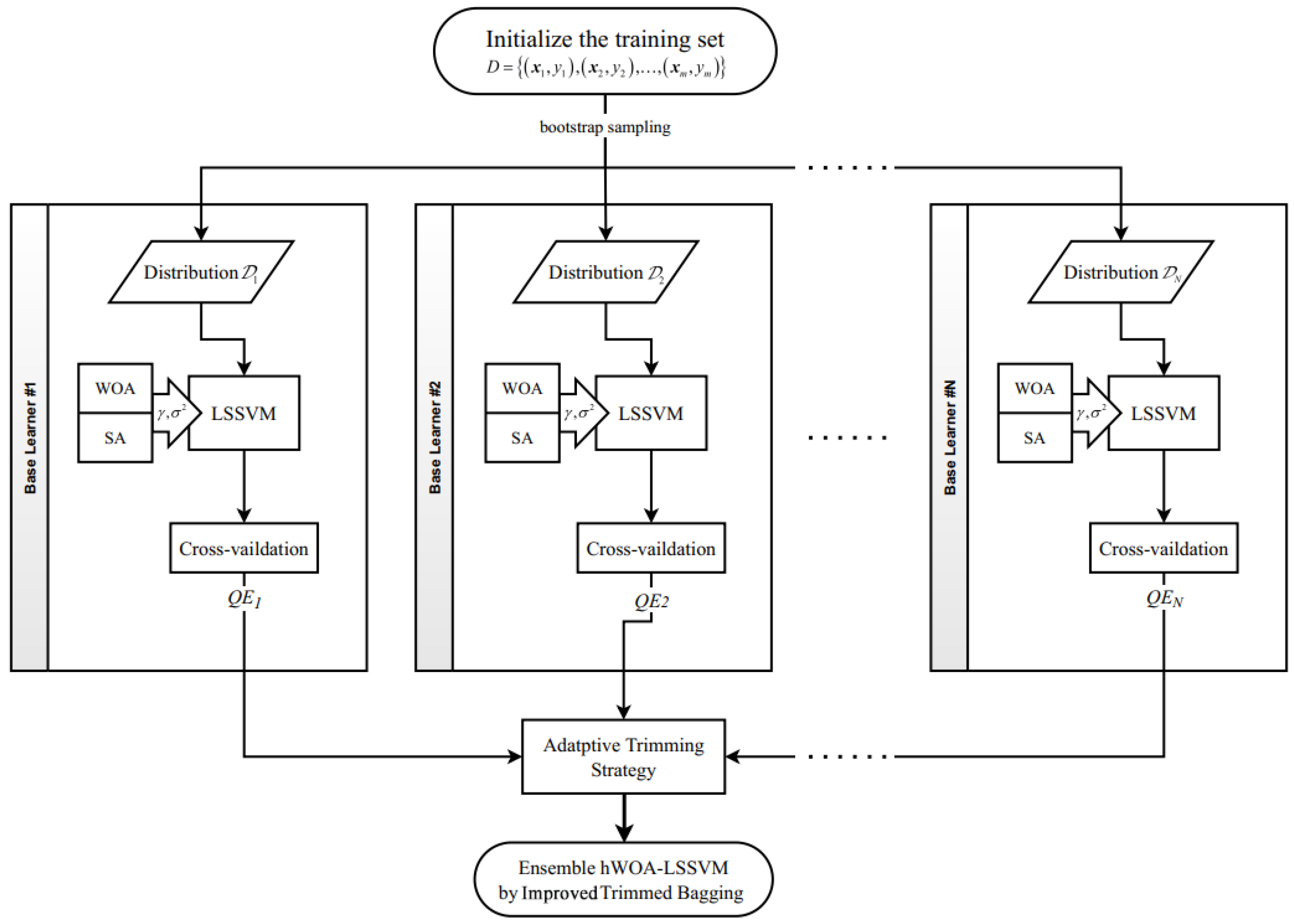

2.3. Improved Trimmed Bagging

- The algorithm begins by applying bootstrap sampling to the given dataset. Letting D denote the original training dataset, we obtain T bootstrap distributions, where T indicates the number of base learners.

- Train all base learners on the corresponding subsets, that is, train the base learning algorithm on dataset D under each bootstrap distribution to obtain the ith trained learner, for i = 1, …, T.

- Calculate the quoted error (QE) of each base learner using out-of-bag (OOB) sampling.

- Sort all base learners in ascending order of their QEs.

- Trim off the “worst” learners according to the trimming portion, then average the outputs of the remaining learners to obtain the ensemble learner.

| Algorithm 2: Improved Trimmed Bagging Algorithm | |
|---|---|
| Inputs: | Dataset D, |
| | Base learning algorithm , |
| | The trimming portion , |
| | Number of base learners T |
| Outputs: | The ensemble learner |
| Process: | |
| 1. | Initialize the distributions by applying bootstrap sampling over dataset D |
| 2. | Initialize the out-of-bag samples corresponding to each distribution |
| 3. | for |
| 4. | %Train the base learning algorithm by distribution |
| 5. | %Calculate quoted error of current learner |
| 6. | end |
| 7. | Sort all learners in ascending order of quoted error. |
| 8. | Find the optimal trim portion by Equations (23)–(26). |
| 9. | Calculate the weight of each base learner by Equation (27). |
| 10. | %Average on the remaining base learners |
| 11. | return |
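The sort, trim, and average steps can be sketched as below. This is plain trimmed bagging with a fixed trimming portion and a uniform average; the adaptive trim portion of Equations (23)–(26) and the weights of Equation (27) are not reproduced here. The function name `trimmed_average` and the example predictions and errors are hypothetical.

```python
import numpy as np

def trimmed_average(predictions, errors, trim_portion):
    """Drop the worst learners by error and average the predictions of the rest.

    predictions: array of shape (n_learners, n_samples)
    errors:      array of shape (n_learners,), one error per learner
    """
    predictions = np.asarray(predictions, dtype=float)
    errors = np.asarray(errors, dtype=float)
    order = np.argsort(errors)  # ascending: best learner first
    n_keep = max(1, int(round(len(errors) * (1.0 - trim_portion))))
    kept = order[:n_keep]
    return predictions[kept].mean(axis=0), kept

# Four hypothetical learners; the third one is clearly the worst.
preds = np.array([[1.0, 2.0], [1.2, 2.2], [5.0, 9.0], [0.8, 1.8]])
errs = np.array([0.10, 0.20, 3.00, 0.15])
ensemble, kept = trimmed_average(preds, errs, trim_portion=0.25)
```

With a trimming portion of 0.25, one of the four learners is discarded (the one with error 3.00), and the ensemble output is the mean of the remaining three.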

3. Hybrid WOA-LSSVM Ensembled by Improved Trimmed Bagging

- We prepared the datasets with a high–low temperature experiment. The input vector was formed by a series of ambient temperatures (T), and the voltage signal (V) of the strain gage sensor constituted the output vector. Then, 80% of the original data were randomly selected for training and the remaining 20% were reserved for testing. More details about the datasets are given in the High–Low Temperature Experiment section.

- We divided the training set into N distributions by bootstrap sampling and obtained the base learners by training an LSSVM on each distribution. Two parameters of the LSSVM, the regularization parameter and the width of the RBF kernel, were tuned by hWOA in this process.

- We calculated the quoted error (QE) of each base learner according to Equation (21) using out-of-bag (OOB) sampling; then, we determined the optimal trim portion and the weight of each base learner with the adaptive trim strategy.

- We assembled the final model by trimming off the worst base learners and taking the weighted average of the outputs of the remaining ones, according to the optimal trim portion and the weights.
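The data preparation in the first two steps (an 80/20 random split followed by bootstrap sampling with out-of-bag sets) can be sketched as follows. The helper names, the dataset size of 100 samples, and the random seed are hypothetical; the count of 10 bootstrap subsets is only an illustrative setting.

```python
import numpy as np

def train_test_split_indices(n, train_fraction, rng):
    """Randomly split the indices 0..n-1 into training and test parts."""
    perm = rng.permutation(n)
    n_train = int(round(train_fraction * n))
    return perm[:n_train], perm[n_train:]

def bootstrap_with_oob(train_idx, rng):
    """Sample with replacement; indices that are never drawn form the OOB set."""
    boot = rng.choice(train_idx, size=len(train_idx), replace=True)
    oob = np.setdiff1d(train_idx, boot)
    return boot, oob

rng = np.random.default_rng(42)
train_idx, test_idx = train_test_split_indices(100, 0.8, rng)

# One (bootstrap, out-of-bag) pair per base learner.
subsets = [bootstrap_with_oob(train_idx, rng) for _ in range(10)]
```

Each base learner would be trained on its `boot` indices and scored on its `oob` indices, so the error estimate uses samples that learner has never seen.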

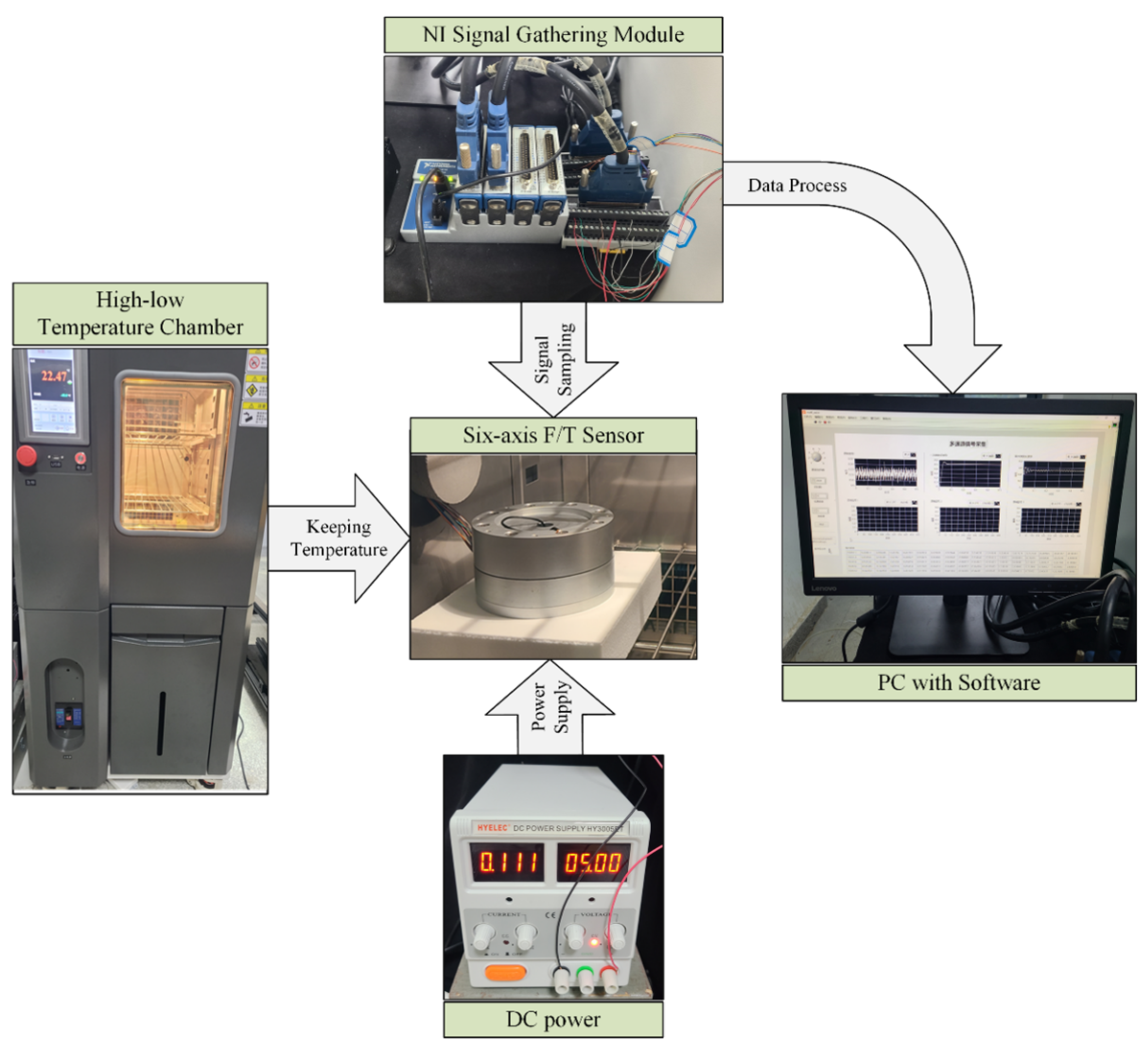

4. Experiment

4.1. Calibration and Decoupling

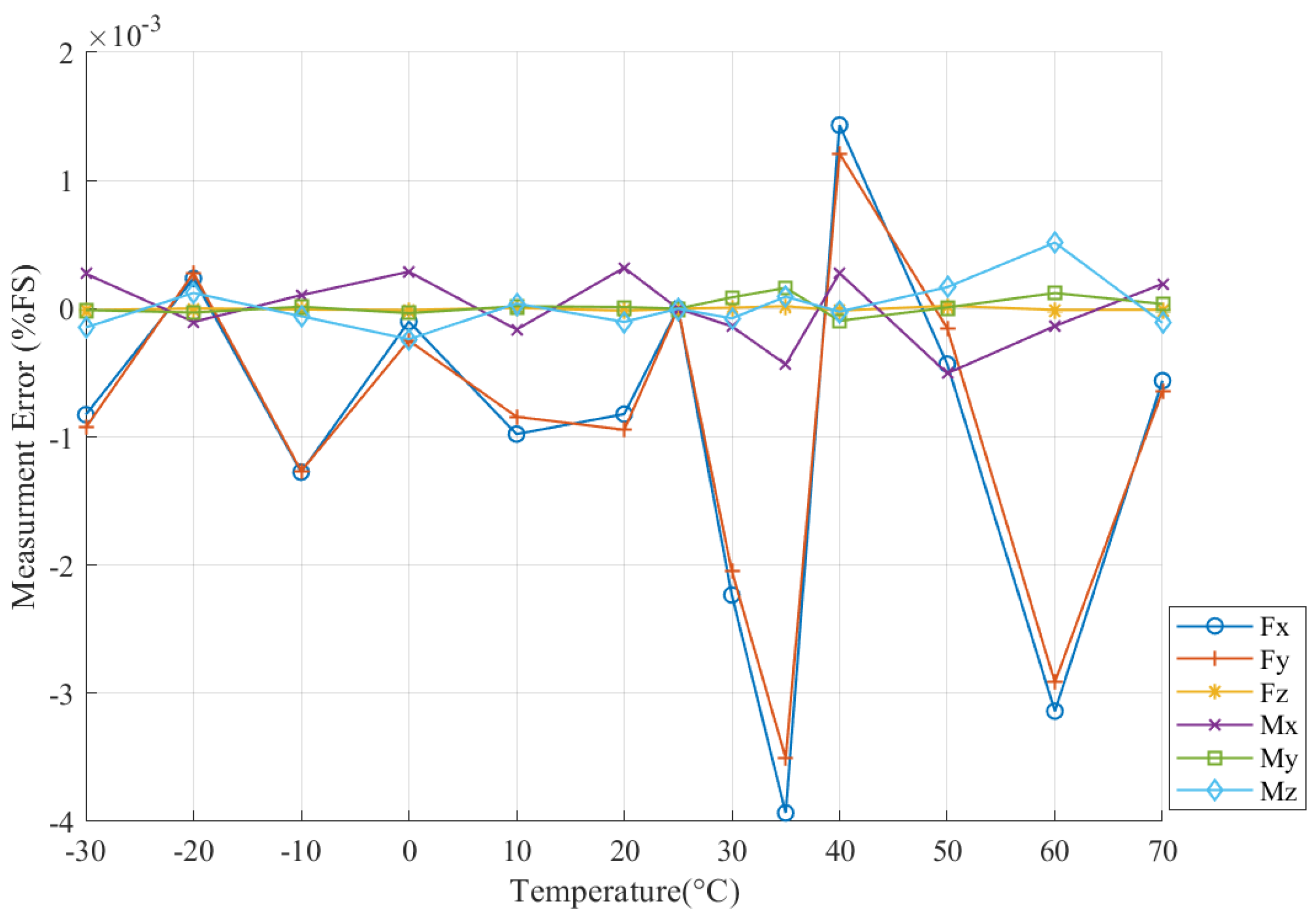

4.2. High–Low Temperature Experiment

- is the measured value of the F/T sensor under T °C;

- denotes the measured value at the calibration temperature, which is 25 °C here;

- denotes the full scale of the corresponding dimension.
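The three quantities listed above suggest an error expressed relative to full scale. Since the original expression is not reproduced in this text, the formula in the sketch below (deviation from the calibration-temperature reading, divided by full scale, in percent) is an assumption, and the function name and the numeric values are hypothetical.

```python
def temperature_error_percent_fs(value_at_t, value_at_calibration, full_scale):
    """Deviation from the calibration-temperature reading, in percent of full scale."""
    return (value_at_t - value_at_calibration) / full_scale * 100.0

# Hypothetical readings: 603 N at temperature T, 600 N at 25 °C, 600 N full scale.
err = temperature_error_percent_fs(603.0, 600.0, 600.0)
```

Normalizing by full scale makes the errors of the force and torque dimensions comparable even though their units and ranges differ.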

4.3. F/T Sensor Temperature Compensation

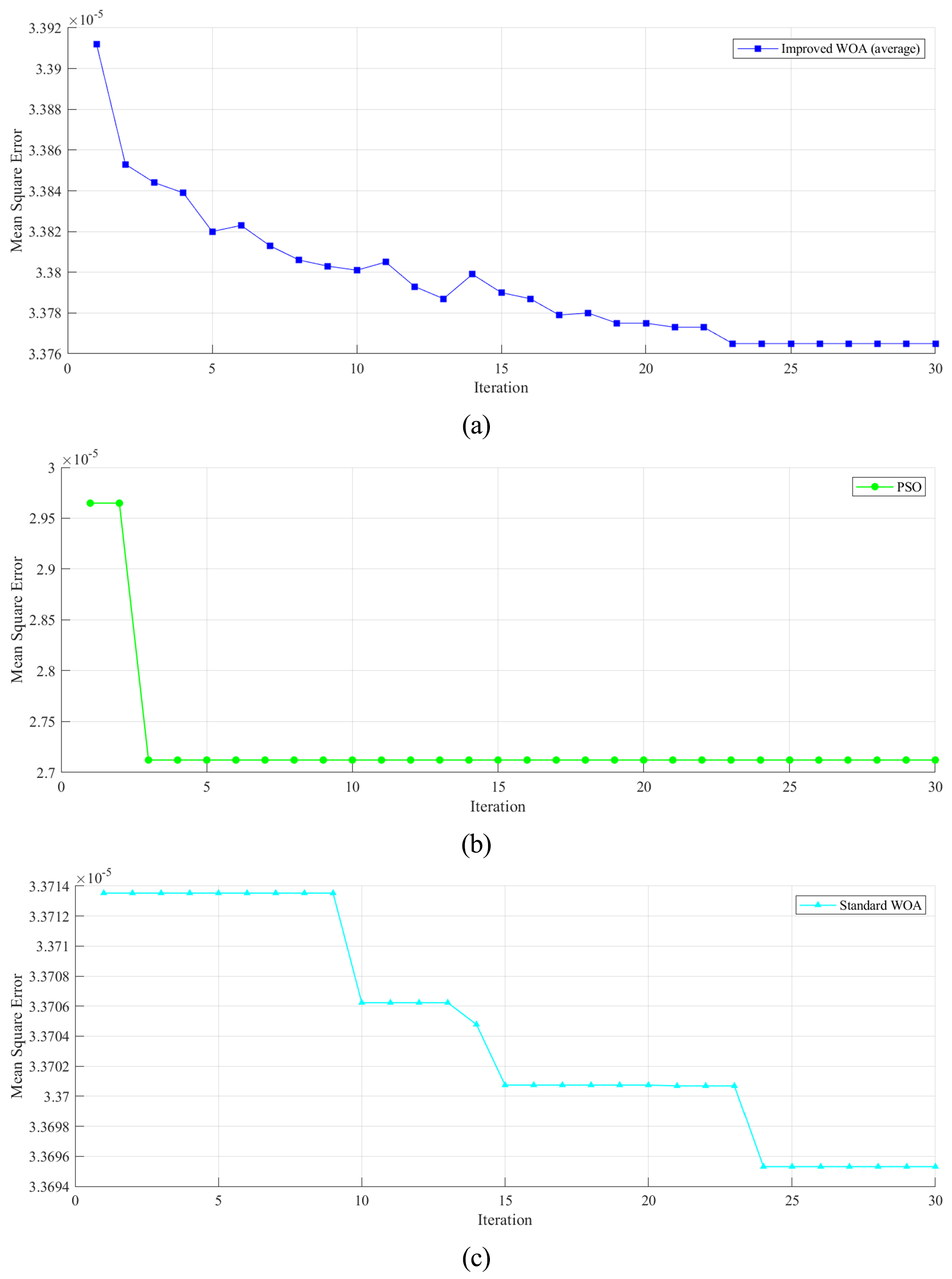

4.3.1. Model Training

4.3.2. Compensation

4.4. Compensation Results and Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Dimensions | Load Points | Units |
|---|---|---|
| Fx | −600, −400, −200, 0, 200, 400, 600 | N |
| Fy | −600, −400, −200, 0, 200, 400, 600 | N |
| Fz | 0, 200, 600, 800, 1000 | N |
| Mx | −30, −20, −10, 0, 10, 20, 30 | N·m |
| My | −30, −20, −10, 0, 10, 20, 30 | N·m |
| Mz | −30, −20, −10, 0, 10, 20, 30 | N·m |

| Parameters | Std-SVR | EhW-LSSVM | PSO-LSSVM | WOA-LSSVM | WOA-RBFNN |
|---|---|---|---|---|---|
| | - | [0.01, 300] | [0.01, 300] | - | [0.01, 300] |
| | 50 | [1, 1000] | [1, 1000] | [1, 1000] | [1, 1000] |
| Maximum iteration | - | 30 | 30 | 30 | 30 |
| Count of search agents | - | 20 | 20 | 20 | 20 |
| Count of base learners | - | 10 | - | - | - |


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, X.; Gao, L.; Cao, H.; Sun, Y.; Jiang, M.; Zhang, Y. A Temperature Compensation Method for a Six-Axis Force/Torque Sensor Utilizing Ensemble hWOA-LSSVM Based on Improved Trimmed Bagging. Sensors 2022, 22, 4809. https://doi.org/10.3390/s22134809
