Abstract

The emerging areas of IoT and sensor networks bring many new software applications on a daily basis. To keep up with the ever-changing expectations of clients and the competitive market, the software must be updated. The changes may cause unintended consequences, necessitating retesting, i.e., regression testing, before release. The efficiency and efficacy of regression testing techniques can be improved with the use of optimization approaches. This paper proposes an improved quantum-behaved particle swarm optimization approach for regression testing. First, the algorithm is improved by employing a fix-up mechanism to perform perturbation for the combinatorial test case prioritization (TCP) problem. Second, a dynamic contraction-expansion coefficient is used to accelerate the convergence. This is followed by an adaptive test case selection strategy to choose the modification-revealing test cases. Finally, the superfluous test cases are removed. Furthermore, the algorithm’s robustness is analyzed for fault as well as statement coverage. The empirical results reveal that the proposed algorithm performs better than the Genetic Algorithm, Bat Algorithm, Grey Wolf Optimization, Particle Swarm Optimization and its variants for prioritizing test cases. The findings show that inclusivity, test selection percentage and cost reduction percentage are higher in the case of fault coverage compared to statement coverage, but at the cost of a higher fault detection loss (approx. 7%) at the test case reduction stage.

1. Introduction

With the advent of healthcare applications and the tremendous amount of information processing, there is a need for fault handling [1]. Therefore, software testing is becoming essential for safety-critical systems, e.g., IoT devices and the sensor networks connected with them in one way or another, where failure may lead to loss of money and life. It is an important part of the software development lifecycle since it ensures that the software is of high quality, and it accounts for around half of the entire cost [2]. Testing during the evolution and maintenance phases becomes more important to assure the software’s dependability, because the software is continually updated and maintained to satisfy changing needs and to stay competitive in the market. Re-executing all of the test cases to guarantee that the quality is not affected is known as regression testing [3]. Complete retesting accounts for around eighty percent of the entire maintenance cost [4]. Moreover, it is difficult to test each upgraded version of software nowadays: software becomes more complex with frequent upgrades, and the amount of time and effort required for regression testing increases. Test case reduction, selection and prioritization strategies can help solve these bottleneck problems [5].

1. Test Case Prioritization (TCP): It ranks the test cases based on some predefined goals, such as maximum code coverage, fault coverage and requirements coverage. For a given test suite $T$, its set of permutations $PT$ and a function $f$ from $PT$ to the real numbers, it finds $T' \in PT$ such that $(\forall T'' \in PT)(T'' \neq T')\,[f(T') \geq f(T'')]$ [2]. In other words, it aims to identify the ordering $T'$ from $PT$ whose value of $f$ is at least as large as that of any other ordering in $PT$. The coverage rate is represented by $f$, which scores the performance of a permutation as a real number.

2. Test Case Selection (TCS): It chooses the essential test cases that are linked with the update of the software. In other words, it finds a subset $T'$ of $T$ for testing the modified version $P'$ of the program $P$ [5].

3. Test Case Reduction (TCR): It focuses on removing redundant test cases by finding a representative test case set $T'$ from $T$ that satisfies the test requirements set $R = \{r_1, \ldots, r_n\}$ for the defined coverage of the program [2].

TCP is the most commonly used of these three strategies by researchers because it does not eliminate or pick test cases. Instead, it simply rearranges them such that the most critical ones are checked first. The significance of these test cases is determined by a number of factors, such as code coverage, fault coverage, requirement priority or critical components [3]. TCS and TCR, on the other hand, may leave out certain crucial test cases that can be useful for upcoming versions of the product [2]. Finding the best order for the test cases, as well as the best way to limit or choose them, is an NP-hard problem [6].

Optimization strategies can be used to successfully overcome these issues. Nature-inspired algorithms have been successfully employed to solve difficult optimization problems in many domains [7], and they can likewise improve the cost-effectiveness of regression testing. Nature-inspired algorithms appeal to researchers because of their basic structure and ease of use. The methods are theoretically built by modeling natural events [3]. These algorithms are broadly classified into three classes: biology-inspired, physics/chemistry-inspired and social-phenomena-inspired algorithms. These techniques have also been applied in regression testing [2]. The most often used algorithms are evolutionary algorithms and swarm intelligence-based algorithms from the biology-inspired family of nature-inspired approaches [8].

PSO algorithms have been used by researchers for solving regression testing problems. We have also used similar approaches in our previous works. For example, Dragonfly was hybridized with PSO for prioritizing the test cases using fault coverage information. It reduced the test suite to 87–96%, which thereby removed some of the critical test cases. Therefore, tri-level regression testing was performed by layering the test case selection in between the test case prioritization and reduction. The promising results of the nature-inspired algorithms on statement coverage motivated us to validate the results on fault coverage as well. As a result, this work analyzes the effect of fault and statement coverage criteria on the performance of the technique. It also suggests a swarm-intelligence-based algorithm, Quantum-behaved Particle Swarm Optimization (QPSO), and its improved version, IQPSO, for tri-level regression testing to improve the quality of results. The main contributions of this research are:

- Improved QPSO Algorithm to solve the TCP for fault and statement coverage criteria.

- Extended the algorithm for selecting the modification-revealing test cases using historical information and further reduction of the test suite size.

- Performance analysis of the algorithms using different testing goals, i.e., code coverage and fault coverage.

- Verified robustness of the proposed algorithm against Genetic Algorithm (GA), Bat Algorithm (BAT), Grey Wolf Optimization (GWO), Particle Swarm Optimization (PSO), Adaptive PSO (AdPSO) and the hybrid of PSO with Gravitational Search Algorithm (PSOGSA) and Dragonfly Algorithm (DAPSO).

The nature-inspired algorithms prioritize test cases based on the most extensively used criteria: statement and fault coverage. The modification-revealing test cases are included with the help of the adaptive test case selection method. It takes into account test case history and picks failed test cases based on probabilistic potentials. Since the high inclusiveness comes at the expense of a large test selection percentage, TCR is introduced to minimize the test suite size by removing duplicate test cases. The empirical results show that the proposed technique works for both fault and statement coverage. Inclusivity, test selection percentage and cost reduction percentage are higher in the case of fault coverage compared to statement coverage, but at the cost of a slightly higher fault detection loss at the test case reduction stage.

The paper is organized as follows: Section 2 describes the research work done in the application of PSO algorithms for solving regression testing problems. Section 3 presents the working mechanism of the basic PSO and QPSO. The proposed algorithms are discussed in Section 4. Section 5 and Section 6 present the experimental setup and results analysis. The paper is concluded in Section 7.

2. Literature Review

A review of the literature on the applications of nature-inspired algorithms for regression testing is presented in this section. Li et al. [4] compared search-based methods to traditional algorithms and found that the search space is better explored with GA. This prompted more research into the use of nature-inspired algorithms. For example, Zhang et al. [9] used a distance-based and index-based implementation of ACO to prioritize test cases, and the results were superior to GA, PSO and RS. Using the Cuckoo Search Algorithm (CSA), a new fix-up mechanism for permutation encoding was developed to address the TCP problem [3]. CSA was also used to reduce the test suite for configuration-aware software testing [10].

Mohapatra and Prasad [11] employed Ant Colony Optimization on Java programs and compared its performance, in terms of reduced suite size and complexity, with traditional techniques. A quantum-inspired ACO approach for test suite reduction was developed by Zhang et al. [12]. The suggested method outperformed previous ACOs in terms of the percentage decrease in size. NSGA-II was employed by Mondal et al. [13] for TCS, taking test suite diversity and code coverage as fitness metrics. With a maximum time limit of 20%, it was discovered that diversity enhanced the defect detection rate by up to 16 percent.

Several researchers have employed PSO, such as Khatibsyarbini et al. [14], who used string distances to arrange the test instances and validated it on the real-world TSL dataset. To choose test cases based on redundancy, binary constraint PSO and its hybrid variants with local search algorithms were developed [15]. PSO was implemented with local search to select test cases, having goals of increased branch coverage and lower costs [16]. Because of the positive findings, PSO was also combined with harmony search, which performed better than NSGA-II [17]. Correia [18] developed a test suite diversity diagnosability measure, and the results were improved by applying local search algorithms with PSO to maximize requirement coverage while lowering associated costs.

The test case reduction was also implemented with TCP by hybridizing the PSO with the Dragonfly Algorithm. The observations suggested that hybrid algorithms outperformed other search algorithms [6]. Tri-level regression testing was proposed to prioritize, select and minimize the test cases based on statement coverage. It was observed that the hybrid of PSO with Gravitational Search Algorithm (PSOGSA) outperformed GA, PSO and GSA [5]. The test suite was minimized using hybrid PSO and the Firefly Algorithm, considering the fault coverage [19]. The modified condition decision coverage criteria were employed as fitness measures in PSO for prioritizing the test cases [20]. Deneke et al. [21] also proposed the PSO Algorithm for reducing the test suite based on requirement coverage and cost. Samad et al. [22] proposed multi-objective PSO for optimizing the code and fault coverage and cost. Agrawal and Kaur selected the test cases using the fault information with the application of PSO [23]. Table 1 shows the application of PSO Algorithms for regression testing methods along with their optimization criteria.

Table 1.

Summary of PSO Algorithms used in Regression Testing.

PSO algorithms have become one of the state-of-the-art algorithms and show promising results in various domains, e.g., reduction of CO emissions in air baggage systems [24]. The original PSO, on the other hand, had issues, such as getting trapped in local optima and premature convergence [25]. According to the findings, upgraded and hybrid versions of PSO outperformed PSO for complicated systems [15]. One such algorithm is Quantum-behaved PSO (QPSO). It is based on quantum mechanics in which particles can travel through a large search space for global convergence [26]. This method has shown good results in a variety of applications, such as cancer classification [27], feature extraction ([28,29,30]) and constrained engineering problems [31], and others [32]. However, it has not been investigated in the TCP domain, which might be due to the fact that it was originally designed for continuous problems. Therefore, to shift infeasible solutions into feasible ones, we suggested a discrete QPSO method based on an adaptation strategy. It does, however, have significant drawbacks, such as early convergence. As a result, we have improved it with a dynamic contraction-expansion coefficient to speed up the performance in the last iterations [31].

Besides this, we have extended the algorithm for selecting the modification-revealing test cases from the current best solution of TCP. It is occasionally necessary to reduce the test suite by removing redundancy because of time limits; thus, we employed the TCR approach in the end. Our key focus in this research is on performing regression testing in three steps, including TCP, TCS and TCR processes for fault and statement coverage. In addition, the effect of different testing criteria on the overall performance of the algorithms was analyzed. The observations suggest that the tri-level regression technique is effective for both coverage criteria. The proposed algorithm, IQPSO, is not significantly different from PSOGSA statistically; however, its variance and mean fitness values are better.

3. Preliminaries

This section briefly explains the working mechanism of Particle Swarm Optimization (PSO) and Quantum-behaved PSO.

3.1. Particle Swarm Optimization

PSO is inspired by collective behavior, such as flocking, swarming and herding. Each particle changes its flight based on its own or its companions’ previous flight experience. Each particle, based on its own experience, is aware of the location of food, which is referred to as the personal best position ($P$). Simultaneously, the particle has knowledge of the swarm’s best-discovered position, the global best position ($G$). This phenomenon is reproduced in order to solve real-world issues. In other words, the swarm is made up of particles that fly randomly in the solution space with velocity $v_i$ at position $x_i$ and change positions based on personal experience, social behavior and cognitive behavior [33]. The velocity and position of each particle $i$ at generation $t+1$ are defined mathematically as:

$$v_i^{t+1} = w\,v_i^{t} + c_1 r_1 \left(P_i^{t} - x_i^{t}\right) + c_2 r_2 \left(G^{t} - x_i^{t}\right) \tag{1}$$

$$x_i^{t+1} = x_i^{t} + v_i^{t+1} \tag{2}$$

where $w$ is the inertia weight used to regulate the impact of the prior velocity; $c_1$ and $c_2$ are the constants used to adjust the attraction towards the cognitive and social components; and $r_1$ and $r_2$ are uniform random values in the range $[0, 1]$.
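The PSO update described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation used in the experiments: the function name `pso_step` and the default values of `w`, `c1` and `c2` are hypothetical, and positions are kept as plain lists of real numbers.

```python
import random


def pso_step(positions, velocities, pbest, gbest, w=0.7, c1=1.5, c2=1.5, rng=random):
    """Apply one velocity/position update to every particle.

    positions, velocities, pbest: one list of floats per particle.
    gbest: list of floats (the swarm's best position).
    The default w, c1, c2 are illustrative assumptions only.
    """
    new_positions, new_velocities = [], []
    for x, v, p in zip(positions, velocities, pbest):
        next_v, next_x = [], []
        for j in range(len(x)):
            r1, r2 = rng.random(), rng.random()  # uniform values in [0, 1)
            vj = (w * v[j]
                  + c1 * r1 * (p[j] - x[j])       # pull towards personal best
                  + c2 * r2 * (gbest[j] - x[j]))  # pull towards global best
            next_v.append(vj)
            next_x.append(x[j] + vj)
        new_velocities.append(next_v)
        new_positions.append(next_x)
    return new_positions, new_velocities
```

With `c1 = c2 = 0` the step reduces to pure inertia, which is a convenient sanity check when experimenting with the parameters.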

3.2. Quantum-Behaved PSO

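A more robust variant of PSO, called QPSO, was created [25], as PSO cannot ensure global convergence [32]. It determines the quantum-behaved particles’ route, assuming that $N$ particles with specified energy and potential are well-centered in each dimension of the $n$-dimensional Hilbert search space. The $j$th component of the $i$th particle’s position at iteration $t+1$ is given by the Monte Carlo technique:

$$x_{ij}^{t+1} = p_{ij}^{t} \pm \frac{L_{ij}^{t}}{2}\,\ln\!\left(\frac{1}{u_{ij}^{t}}\right), \qquad L_{ij}^{t} = 2\beta\,\left|mbest_{j}^{t} - x_{ij}^{t}\right| \tag{3}$$

where $u_{ij}^{t}$ is a uniformly distributed value between 0 and 1, $p_{ij}^{t}$ is the individual’s local attractor, $\beta$ is the contraction-expansion coefficient and $mbest_{j}^{t}$ is the mean best position defined in Equation (7). As a result, the particle’s location in the QPSO Algorithm may be calculated as follows:

$$x_{ij}^{t+1} = p_{ij}^{t} \pm \beta\,\left|mbest_{j}^{t} - x_{ij}^{t}\right|\,\ln\!\left(\frac{1}{u_{ij}^{t}}\right) \tag{4}$$

In each generation, the particles travel around the local attractor $p_{ij}^{t}$, which is formed with the $P$ and $G$ optimal locations as follows:

$$p_{ij}^{t} = \varphi_{ij}^{t}\,P_{ij}^{t} + \left(1 - \varphi_{ij}^{t}\right) G_{j}^{t} \tag{5}$$

$$\varphi_{ij}^{t} = \frac{c_1 r_1}{c_1 r_1 + c_2 r_2} \tag{6}$$

The next generation’s particle position distribution is computed using the mean of the $P$ best locations of the particles:

$$mbest^{t} = \frac{1}{N}\sum_{i=1}^{N} P_{i}^{t} \tag{7}$$

The fundamental difference between the PSO and the QPSO is twofold: (1) a large search space owing to the exponential distribution of the particles; and (2) the particle’s distance from its partners is considered, whereas in PSO, particles move freely to converge to the global best. Another benefit is that it only has one parameter, $\beta$, which must be managed for convergence and whose value is reduced linearly over the $T_{\max}$ iterations using the equation:

$$\beta^{t} = \beta_{\max} - \left(\beta_{\max} - \beta_{\min}\right)\frac{t}{T_{\max}} \tag{8}$$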

Because it is simple to use and has been tried and tested on a variety of applications [32], we sought to apply the QPSO method to a discrete optimization problem in this study and compare its performance to that of state-of-the-art techniques.
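For readers who prefer code, the QPSO mechanics of this subsection (mean best position, local attractor, logarithmic jump and linearly decreasing coefficient) can be sketched as follows. It is a continuous-space sketch under assumptions: the names `qpso_step` and `linear_beta` are hypothetical, the attractor weight is drawn uniformly from [0, 1) as a common simplification, and the bounds 0.5 and 1.0 for the coefficient are illustrative defaults rather than the settings of this study.

```python
import math
import random


def linear_beta(t, max_iter, beta_min=0.5, beta_max=1.0):
    """Contraction-expansion coefficient decreasing linearly from beta_max to beta_min."""
    return beta_max - (beta_max - beta_min) * t / max_iter


def qpso_step(positions, pbest, gbest, beta, rng=random):
    """One QPSO position update for every particle (no velocity is kept)."""
    n, dim = len(positions), len(gbest)
    # Mean of the personal best positions, one value per dimension.
    mbest = [sum(p[j] for p in pbest) / n for j in range(dim)]
    new_positions = []
    for x, p in zip(positions, pbest):
        next_x = []
        for j in range(dim):
            phi = rng.random()  # assumption: uniform attractor weight
            attractor = phi * p[j] + (1.0 - phi) * gbest[j]
            u = 1.0 - rng.random()  # in (0, 1], so log(1 / u) is finite
            jump = beta * abs(mbest[j] - x[j]) * math.log(1.0 / u)
            # The sign of the jump is chosen with equal probability.
            next_x.append(attractor + jump if rng.random() < 0.5 else attractor - jump)
        new_positions.append(next_x)
    return new_positions
```

Because the result is real-valued, a repair step is still needed before the vector can be read as a test case ordering.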

4. Proposed Work

This section explains the improved QPSO Algorithm, which is described in three stages. First, it incorporates the asexual reproduction operator (ARO) into the population update. Second, an adaptive contraction-expansion coefficient is used to alleviate the issue of stagnation. Finally, the adaptive TCS method is used to choose the test cases, followed by the TCR technique for minimizing the size of the test suite, as follows:

4.1. Population Update

Appropriate mapping increases the algorithm’s speed and efficacy, so the real numbers are converted to a permutation series by applying the asexual reproduction method. The fix-up process creates a link between real numbers and test case sequences, such that the current solution acquires the parent solution’s properties by forming the bud from the parent while keeping the offspring’s feasible values (larva). To do so, the algorithm recalculates the position and rounds the output to natural numbers. Don’t-care conditions (*) are used to replace out-of-range and duplicate values [3].
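A minimal Python sketch of such a fix-up step is given below. It rests on assumptions that go beyond the description above: test cases are numbered 1..n, the function name `fix_up` is hypothetical, and the don't-care slots are filled with the missing test cases in the order in which they appear in the parent permutation.

```python
def fix_up(real_position, parent_order):
    """Repair a real-valued position into a valid permutation of 1..n.

    real_position: list of floats produced by the continuous update.
    parent_order: a valid permutation of 1..n (the parent solution).
    """
    n = len(parent_order)
    seen = set()
    larva = []
    for value in real_position:
        candidate = round(value)  # round to the nearest natural value
        if 1 <= candidate <= n and candidate not in seen:
            seen.add(candidate)
            larva.append(candidate)  # feasible value kept from the offspring
        else:
            larva.append(None)       # don't-care (*): out of range or duplicate
    # Bud: the test cases still missing, taken in the parent's order (assumption).
    bud = iter(tc for tc in parent_order if tc not in seen)
    return [value if value is not None else next(bud) for value in larva]
```

For instance, `fix_up([2.7, 0.2, 3.1, 7.9, 1.4], [5, 4, 3, 2, 1])` keeps the feasible values 3 and 1 and fills the three don't-care slots from the parent, giving `[3, 5, 4, 2, 1]`.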

4.2. Dynamic Contraction-Expansion Coefficient ($\beta$)

The value of the contraction-expansion coefficient $\beta$ in QPSO indicates the population’s search radius. The bigger the value, the wider the particle search range; conversely, the smaller the value, the narrower the search range. The evolution velocity coefficient $\delta$ is introduced to adaptively alter $\beta$ [34]:

Since the global optimal solution is always replaced by a solution with superior fitness as the iterations advance, $0 < \delta \leq 1$. The fitness value of the global best varies significantly when the value of $\delta$ is small: the evolution speeds up because the particles are still far from the ideal location, so $\beta$ needs to be increased to ensure quick optimization. With a large $\delta$, the evolution slows down, so the particle search range, and hence $\beta$, is reduced for better optimization. The solution converges and the evolution stops at $\delta = 1$. Therefore, Equation (8) is replaced with:

Here, $\beta_{\min}$ and $\beta_{\max}$ are the minimum and maximum values of $\beta$, and $\lambda$ is the weight of the evolution velocity coefficient. Further, the position difference used in the update approaches zero as the iterations proceed, so this value is replaced with a mutation operator built from two particles, $r$ and $s$, selected randomly from the population [34]. It is mathematically formulated as:
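As an illustration only, the qualitative behavior described in this subsection can be sketched in Python. Both formulas in the code are assumptions chosen to match that description (a ratio of successive global-best fitness values that lies in (0, 1], and a coefficient that is large when the ratio is small and falls towards its minimum as the ratio approaches 1); they should not be read as the update equations of the proposed algorithm. The function names, the default bounds and the default weight are hypothetical.

```python
def evolution_velocity(prev_best_fitness, curr_best_fitness):
    """Assumed evolution velocity: smaller / larger of two successive best fitness values.

    Returns a value in [0, 1]; 1 means the global best did not change.
    """
    low, high = sorted((abs(prev_best_fitness), abs(curr_best_fitness)))
    return 1.0 if high == 0 else low / high


def dynamic_beta(delta, beta_min=0.5, beta_max=1.0, weight=1.0):
    """Assumed adaptive coefficient: wide search for small delta, narrow for delta near 1."""
    beta = beta_max - weight * delta * (beta_max - beta_min)
    return min(beta_max, max(beta_min, beta))  # keep beta inside its bounds


# Example: the best fitness improved from 80 to 100 between two iterations.
delta = evolution_velocity(80.0, 100.0)
beta = dynamic_beta(delta)
```

Clamping the result keeps the coefficient inside its bounds even when the weight is chosen larger than 1.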

4.3. Adaptive Test Case Selection

The test case selection approach proposed in [5] takes into account the statements that the test cases cover in the modified version and the impact of failed test cases. It is a dynamic algorithm that picks test cases depending on their pass or fail information after each iteration of the TCP step. During the selection process, it requires exact execution information, which is quite important in uncovering errors. It is called adaptive as it revises the fault detection capabilities of unallocated test cases and chooses test cases based on existing and earlier historical data. The technique is described as:

Before picking the test case $t$, the potential of a statement $s$ is the chance of $s$ having additional errors. $p$ and $q$ are constants with values between 0 and 1 that measure the influence of the pass or fail status on the potential of any statement $s$; $q$ is set high since the goal is to acquire a larger proportion of failed test cases than passed ones. Equation (13) is also used to update the priority of the unallocated test cases $t$:

The test case $t$ with the highest priority is chosen, and the potentials are updated using the chosen test case’s state. The priority of the unassigned test cases is also updated depending on the revised potentials. In other words, the unassigned test cases’ fault detection capacity is recalculated. The shortlisting sequence is based on the most recent data obtained, and it picks the test case $t$ that has the highest rank under the modified priorities. If test cases are tied, the initial copy of the test case order is used to break the tie. The set of unallocated test cases is then updated by removing the selected test case from it. The previous steps are continued until the stopping requirements are fulfilled, i.e., enough test cases have been selected to achieve 100 percent statement and fault coverage, as presented in Algorithm 1. As the algorithm contains two loops that run up to the size $n$ of the test suite, the time complexity of the algorithm is $O(n^2)$.

Algorithm 1. Adaptive Test Case Selection (ATCS) Algorithm.
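The selection loop described in this subsection can be sketched in Python as follows. This is a simplified sketch, not Algorithm 1 itself: the update rule (multiplying the potential of every statement covered by the chosen test by `q` if it failed and by `p` if it passed), the priority as the sum of potentials, the stopping rule on statement coverage only, and the values `p=0.5` and `q=0.9` are all assumptions made for illustration, as is the function name `adaptive_selection`.

```python
def adaptive_selection(order, coverage, failed, p=0.5, q=0.9):
    """Pick test cases from a prioritized order until all statements are covered.

    order: prioritized list of test ids (used to break ties).
    coverage: dict mapping test id -> set of covered statements.
    failed: set of test ids that failed on the modified version.
    p, q: assumed scaling factors for passed / failed tests (0 < p < q < 1).
    """
    all_statements = set().union(*coverage.values())
    potential = {s: 1.0 for s in all_statements}  # chance of remaining errors
    rank = {t: i for i, t in enumerate(order)}
    unallocated = list(order)
    selected, covered = [], set()
    while unallocated and covered != all_statements:
        # Priority = summed potential of covered statements; ties go to the
        # test case that comes first in the original order.
        best = max(unallocated,
                   key=lambda t: (sum(potential[s] for s in coverage[t]), -rank[t]))
        unallocated.remove(best)
        selected.append(best)
        covered |= coverage[best]
        factor = q if best in failed else p
        for s in coverage[best]:
            potential[s] *= factor  # revise potentials from the pass/fail state
    return selected
```

Each pass of the `while` loop scans the remaining test cases once, so the sketch makes on the order of n² priority evaluations for a suite of n test cases.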

4.4. Test Case Reduction (TCR)

To reduce suite size and cost, the current best solution of every generation is checked for duplicates, and the first test cases in the ordering that completely cover the faults/statements are chosen. This technique has the advantage of exposing how the test cases are ranked precisely [6]. The pseudo-code of TCR is given in Algorithm 2. Since it contains one loop over the $n$ test cases and another over the $m$ faults or statements, the time complexity of the algorithm is $O(nm)$.
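A minimal Python sketch of this reduction step is shown below. It is an interpretation under assumptions rather than Algorithm 2 itself: a test case is treated as a duplicate when it covers nothing that earlier test cases in the ordering have not already covered, and the function name `reduce_suite` is hypothetical.

```python
def reduce_suite(order, coverage):
    """Keep the earliest test cases of a prioritized order that give full coverage.

    order: prioritized list of test ids.
    coverage: dict mapping test id -> set of covered faults or statements.
    """
    required = set().union(*coverage.values())
    kept, covered = [], set()
    for test in order:
        if covered == required:
            break                      # everything is covered; drop the rest
        if coverage[test] - covered:   # contributes at least one new item
            kept.append(test)
            covered |= coverage[test]
    return kept
```

Because the scan follows the prioritized order, a better ordering directly yields a smaller reduced suite.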

Algorithm 3 presents an Improved IQPSO Algorithm consisting of two loops, and . It also contains ATCS and TCR algorithms, so the overall complexity of the algorithm is .

Algorithm 2. Test Case Reduction Algorithm.

Algorithm 3. IQPSO Algorithm.

5. Experimental Setup

This section outlines the empirical study, including research questions, datasets, evaluation metrics and the algorithms with which the proposed algorithm is compared. The formulated research questions are:

RQ1. What is the performance of the proposed algorithm for TCP?

The objective is to see if the suggested algorithm outperforms others. It also identifies which algorithm produces the best results, as well as the effect of various testing settings on algorithm performance.

RQ2. What is the performance of the proposed algorithm for TCS?

The goal is to investigate the efficiency of the provided strategies for the ATCS method, namely, test selection percentage, inclusivity of test cases and reduction in cost percentage.

RQ3. What is the performance of the proposed algorithm for TCR?

The aim is to compare the effectiveness of the suggested algorithm with that of the other methods, to figure out which testing criterion improves TCR, and to see how it impacts the coverage and fault detection capabilities of the test suite.

5.1. Experimental Design

PSO, QPSO and the latest variants of PSO, i.e., PSOGSA [5], DAPSO [6] and Adaptive PSO (AdPSO) [35], are the algorithms considered for comparison. Apart from these, the algorithm is also validated against state-of-the-art algorithms, such as GA, BAT and the recently proposed Grey Wolf Optimization (GWO) [36]. These methods were implemented in MATLAB R2017 on a Dell laptop with an Intel i5 CPU, Windows 11 and 8 GB of RAM. Due to their stochastic nature, each algorithm was run 30 times. The algorithms were applied to different versions of three Java applications (jtopas, ant and jmeter) pulled from the Software-artifact Infrastructure Repository (SIR) [37]. Table 2 provides more information.

Table 2.

Subject Programs.

The performance of the algorithms is influenced by parameter choices [38]. As a result, we carefully choose the parameters based on a comprehensive review of related works as well as a trial-and-error process for determining optimal values. Table 3 also contains the data retrieved using the Taguchi approach.

Table 3.

Parameter settings of the algorithms.

5.2. Performance Measures

The following performance measures were used to validate the efficiency and efficacy of these algorithms:

5.2.1. Test Case Prioritization

To assess the robustness of the proposed technique, the test cases were selected using two separate testing criteria: fault and statement coverage. As a result, the commonly used fitness measurements and effectiveness measures are defined as follows:

Average Percentage of Fault Detection (APFD) measures how quickly a prioritized test suite detects faults. It is a weighted average of the percentage of faults detected, based on where the detecting test cases are positioned in the test suite [39]. It is computed as follows:

$$APFD = \left(1 - \frac{TF_1 + TF_2 + \cdots + TF_m}{n\,m} + \frac{1}{2n}\right) \times 100$$

The position of the first test case that detects fault $i$ is denoted by $TF_i$, and the number of faults covered by the $n$ test cases is denoted by $m$. Its value lies between 0 and 100, with greater being better. The Average Percentage of Statement Coverage (APSC) is calculated in the same way as the APFD.
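The APFD computation can be made concrete with a short Python sketch. It uses the standard APFD formula scaled to the 0 to 100 range; the function name `apfd` and the toy fault matrix are illustrative assumptions. Passing statements instead of faults gives the analogous APSC value.

```python
def apfd(order, detects):
    """Average Percentage of Fault Detection of a test ordering, in percent.

    order: list of test ids in execution order.
    detects: dict mapping test id -> set of faults (or statements) it reveals.
    """
    n = len(order)
    first_position = {}
    for position, test in enumerate(order, start=1):
        for fault in detects.get(test, ()):
            first_position.setdefault(fault, position)  # keep the earliest position
    m = len(first_position)
    if n == 0 or m == 0:
        raise ValueError("need at least one test case and one detected fault")
    return 100.0 * (1.0 - sum(first_position.values()) / (n * m) + 1.0 / (2 * n))


# Toy example: four test cases, three faults.
detects = {"A": {1}, "B": {1, 2}, "C": {3}, "D": set()}
original = apfd(["A", "B", "C", "D"], detects)   # 62.5
improved = apfd(["B", "C", "A", "D"], detects)   # higher: faults are found earlier
```

In the toy example, moving the test case that reveals two faults to the front raises the score, which is exactly the effect a prioritization algorithm tries to maximize.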

5.2.2. Test Case Selection and Reduction

Test selection percentage, cost reduction percentage, fault detection percentage and coverage loss percentage are commonly used efficacy measures. In addition to these, the inclusivity measure is also used for TCS as follows:

Test Selection Percentage (TSP): It is the percentage of the test suite that is selected:

$$TSP = \frac{n_s}{n} \times 100$$

where $n_s$ indicates the number of test cases selected from the $n$ test cases.

Inclusivity (I): The extracted test cases divided by the total test cases gives the inclusivity measure.

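Fault Detection Loss Percentage (FDLP): The ratio of the faults not covered by the minimized test suite to the total faults covered by the original suite [40]:

$$FDLP = \frac{\text{faults not covered by the minimized suite}}{\text{faults covered by the original suite}} \times 100$$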

Cost Reduction Percentage (CRP): It is the percentage of the test suite’s cost that is saved when the cost of the reduced suite, $C_r$, is compared to the original suite’s cost, $C$:

$$CRP = \frac{C - C_r}{C} \times 100$$
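The selection and reduction measures are simple ratios, as the following Python sketch shows. The function names and the example figures are hypothetical and chosen only to make the arithmetic easy to follow.

```python
def selection_percentage(selected_count, total_count):
    """TSP: share of the original test suite that was selected, in percent."""
    return 100.0 * selected_count / total_count


def fault_detection_loss_percentage(original_faults, reduced_faults):
    """FDLP: share of originally detected faults missed by the reduced suite."""
    lost = set(original_faults) - set(reduced_faults)
    return 100.0 * len(lost) / len(original_faults)


def cost_reduction_percentage(original_cost, reduced_cost):
    """CRP: share of the original execution cost that is saved."""
    return 100.0 * (original_cost - reduced_cost) / original_cost


# Hypothetical figures: 20 of 80 tests kept, 1 of 4 faults lost, cost 200 -> 150.
tsp = selection_percentage(20, 80)
fdlp = fault_detection_loss_percentage({1, 2, 3, 4}, {1, 2, 3})
crp = cost_reduction_percentage(200.0, 150.0)
```

All three values come out as 25.0 for these figures, which makes it easy to check an implementation by hand.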

6. Results and Analysis

This section experimentally assesses the proposed algorithm for TCP using statement and fault coverage criteria. TCS and TCR have been studied for their effects on fault coverage loss, statement coverage loss and cost benefits. To determine the experimental results of a software program, the cumulative average of all its iterations was employed. The performance metrics for each version were calculated using the average of 30 runs. For fitness metrics, boxplots and convergence curves are also shown. A one-way ANOVA test at the 0.05 significance level was used to analyze the algorithms’ output statistically. If the p-value was less than 0.05, the null hypothesis was rejected, suggesting that the difference between the algorithms was statistically significant. Further, Tukey’s simultaneous test was used to evaluate the pair-wise comparison of the methods.
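To make the statistical step concrete, the F statistic of a one-way ANOVA can be computed with a few lines of plain Python. This sketch only illustrates the arithmetic on made-up numbers; the function name `one_way_anova_f` is hypothetical, and obtaining the p-value additionally requires the F distribution, for which a statistics library (for example, `scipy.stats.f_oneway`) would normally be used.

```python
def one_way_anova_f(groups):
    """Return the one-way ANOVA F statistic for a list of samples (one per algorithm)."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    # Variation explained by differences between the group means.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variation left inside each group.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    return (ss_between / (k - 1)) / (ss_within / (n_total - k))


# Made-up fitness values for three algorithms, three runs each.
f_stat = one_way_anova_f([[1, 2, 3], [2, 3, 4], [5, 6, 7]])
```

A large F value relative to the critical value at the chosen significance level indicates that at least one algorithm's mean differs, after which a pair-wise test identifies which ones.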

6.1. Performance Analysis of TCP (RQ 1)

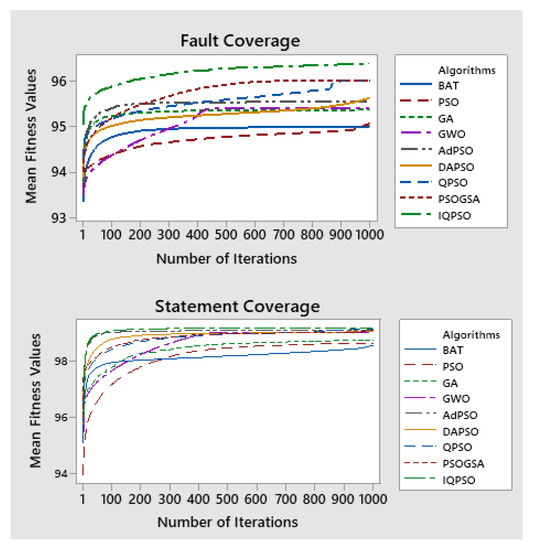

Table 4 shows the mean fitness and variance of the performance metrics, as well as their corresponding Tukey group ranks, for all of the programs. The observations state that, for both statement and fault criteria, IQPSO is statistically different (p-value less than 0.05) from all other nature-inspired algorithms except PSOGSA. Moreover, there is no significant difference between the means of (1) PSOGSA and QPSO, (2) AdPSO and DAPSO, (3) AdPSO and GWO and (4) GA and PSO for both criteria. The convergence curves for one of the versions of the subject programs are illustrated in Figure 1. They show that the proposed IQPSO Algorithm reaches high-quality solutions for both criteria.

Table 4.

Comparisons of the algorithms for TCP over fault and statement coverages.

Figure 1.

Convergence curves of algorithms for fault and statement coverage criteria of TCP.

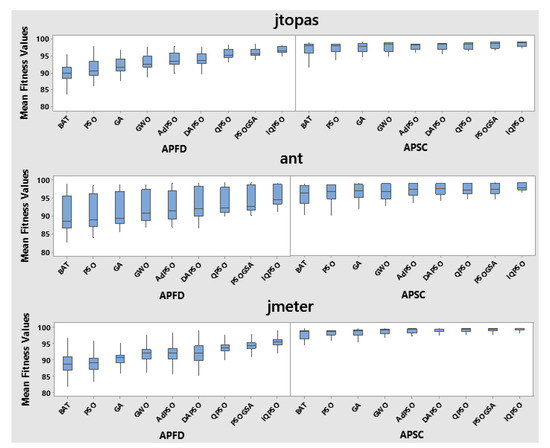

It was also observed that most of the algorithms had equivalent variance in the case of statement coverage. The boxplots in Figure 2 also show that the variation in algorithmic performance is higher for fault coverage than for statement coverage. This is because faults are dispersed across the entire software, whereas most test cases cover almost the same statements. Therefore, the statement coverage boxplots are more compressed than the fault coverage boxplots. Overall, the proposed IQPSO is superior to all other algorithms in terms of variance.

Figure 2.

Boxplots of algorithms for fault and statement coverages of TCP.

6.2. Performance Analysis of TCS (RQ 2)

The performance of the test case selection is evaluated using test selection percentage, inclusivity and cost reduction percentages as follows:

6.2.1. Test Selection Percentage (TSP)

The study across all versions revealed a random pattern, indicating that all of the algorithms behave similarly, so it cannot be determined which algorithm is superior to the others. However, according to the program-wise analysis, DAPSO produced the best TSP for two out of three programs in both coverages (see Table 5). It can be observed that IQPSO, PSOGSA, QPSO and AdPSO are better than PSO, GWO, GA and BAT for fault coverage. On the other hand, GA, GWO and PSO performed better than IQPSO, PSOGSA, AdPSO, QPSO and BAT in the case of statement coverage. IQPSO performed better for ant, which includes a significant number of test cases and statements. As a result, it can be said that the improved approach may perform better on large programs. It was also observed that the selection percentage is lower in the case of fault coverage than in the case of statement coverage.

Table 5.

Comparisons of the algorithms for TCS over fault and statement coverages.

6.2.2. Inclusivity (I)

All the algorithms are capable of incorporating over 78% and 76% of the test cases in statement and fault coverage, respectively. The proposed algorithm worked well for statement coverage, followed by PSOGSA, QPSO, DAPSO, AdPSO, PSO, GWO, GA and BAT. However, IQPSO and PSOGSA performed least well in the case of fault coverage, where the algorithms can be ranked by performance as AdPSO, BAT, PSO, GA, QPSO, DAPSO, GWO, IQPSO and PSOGSA. The ATCS method picks a larger number of test cases in the case of statement coverage than in the case of fault coverage. Table 5 also shows that the fault coverage criterion is better for the inclusiveness of the test cases. The inclusivity of variable-state test cases is critical since they necessitate extra care because they do not produce the same results for all versions. In other words, the ATCS method is based on modification coverage, so fault coverage is the more appropriate choice for the inclusivity of the test cases over statement coverage.

6.2.3. Cost Reduction Percentage (CRP)

TCS achieved a cost reduction of 6.93–30.26% and 18.78–48.73% for the statement and fault coverage criteria, respectively. In the case of ant and jmeter, DAPSO delivers the best cost reduction percentage in most of the cases, whereas IQPSO outperformed DAPSO for statement coverage in ant and fault coverage in jtopas. In other words, DAPSO, GA, GWO and PSO performed better than the PSO variants in statement coverage, whereas IQPSO is the first runner-up for fault coverage after DAPSO (see Table 5). It was also discovered that the TSP and the CRP have an inverse relationship: the lower the number of tests in the suite, the higher the CRP.

6.3. Performance Analysis of TCR (RQ 3)

The performance of the test case reduction is analyzed by calculating the test selection percentage, cost reduction percentage and fault detection loss percentages as follows:

6.3.1. Test Selection Percentage (TSP)

Table 6 shows that all the methods perform almost equally well when it comes to reducing the test suite. Nonetheless, the proposed approach performed better than the other nature-inspired algorithms for both coverages. Comparatively, BAT had a higher selection percentage. TSP was larger for statement coverage than for fault coverage. This is because there was a lot of redundancy in statement coverage, whereas the faults were spread over the whole program; as a result, APSC had a slightly higher TSP than APFD.

Table 6.

Comparisons of the algorithms for TCR over fault and statement coverages.

6.3.2. Fault Detection Loss Percentage (FDLP)

The incorporation of ATCS helped the TCR in reducing the suite size with complete statement coverage and minimized the fault loss too. The findings reveal that the direct application of TCR gave quite a high fault loss, i.e., between 5% and 40% [40]. AdPSO outperformed the other methods for statement (0–0.318%) as well as fault coverages (0–2.887%). Table 6 shows that IQPSO had the least loss in statement coverage compared to the other algorithms, except jtopas, where DAPSO worked better. The observations also depict that the fault loss in APFD (0–8.134%) was higher compared to that in APSC (0–1.121%). The reason for this is that the faults are spread over the software. Hence, the fault coverage is lost by removing certain statement redundancy. It may be deduced that the loss of coverage and the reduction in test suite size are inversely proportionate.

6.3.3. Cost Reduction Percentage (CRP)

The experimental findings show that the cost reduction for fault coverage was greater than for statement coverage, as the test suite was also reduced further. It was also observed that cost reduction was inversely related to the test selection percentage, i.e., the larger the decrease in test suite size, the lower the running cost. TCM costs were estimated to be roughly 60 and 40 percent lower than TCS costs for statement and fault coverage, respectively. Table 6 clearly shows that IQPSO achieved the best CRP of all the algorithms, followed by PSOGSA, QPSO and DAPSO. GA, PSO, GWO and AdPSO had nearly identical performances. Overall, IQPSO demonstrated superior search capabilities for solving the regression testing problem in all three subject programs.

7. Conclusions

In this paper, we proposed an improved QPSO algorithm for regression testing and validated it against GA, GWO, BAT, PSO and its variants DAPSO, PSOGSA and AdPSO. The empirical results show that the proposed algorithm, IQPSO, has a comparatively low variance for statement and fault coverage. Further, the adaptive test selection approach successfully identified 77–96% of the test cases for both fault and statement coverage. The study also revealed that the adaptive test selection percentage for fault coverage was 40–60% lower than for statement coverage, with high inclusivity. IQPSO performed better than all other algorithms in terms of test case reduction and cost reduction percentage. The algorithms showed a difference of approximately 7% in fault detection capability loss for fault coverage over statement coverage. In the future, we will strive to reduce this fault detection loss to almost zero and validate the algorithm's results in a variety of large-scale real-world applications. We also intend to investigate alternative variants of QPSO through modification and hybridization to further improve inclusivity and the algorithm's performance.

Author Contributions

Data curation, A.B.; Formal analysis, A.B. and A.A.; Funding acquisition, L.A.G.; Investigation, A.B.; Methodology, A.B.; Project administration, A.A. and L.A.G.; Resources, A.B.; Software, A.B.; Supervision, A.A.; Validation, A.B.; Visualization, A.B., A.A. and S.R.; Writing—original draft, A.B.; Writing—review and editing, A.B., A.A., S.R. and L.A.G. All authors have read and agreed to the published version of the manuscript.

Funding

The APC was funded by the Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2022R178), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

Abbreviations and Nomenclature

Abbreviations

| Abbreviation | Definition |
|---|---|
| ACO | Ant Colony Optimization |
| AdPSO | Adaptive Particle Swarm Optimization |
| ANOVA | Analysis of Variance |
| APFD | Average Percentage of Fault Detection |
| APSC | Average Percentage of Statement Coverage |
| ARO | Asexual Reproduction Operator |
| ATCS | Adaptive Test Case Selection |
| BAT | Bat Algorithm |
| CRP | Cost Reduction Percentage |
| CSA | Cuckoo Search Algorithm |
| DA | Dragonfly Algorithm |
| FDLP | Fault Detection Loss Percentage |
| FP | Fault Position Array |
| GA | Genetic Algorithm |
| GWO | Grey Wolf Optimization |
| I | Inclusivity |
| IQPSO | Improved Quantum-behaved Particle Swarm Optimization |
| MR | Modification-Revealing test cases |
| PSO | Particle Swarm Optimization |
| QPSO | Quantum-behaved Particle Swarm Optimization |
| SIR | Software Infrastructure Repository |
| TCP | Test Case Prioritization |
| TCR | Test Case Reduction |
| TCS | Test Case Selection |
| TFP | Test Fault Matrix |
| TRP | Test Reduction Percentage |

Nomenclature

| Symbol | Description |
|---|---|
| | test case of the test suite |
| | permuted test suite |
| | fitness function |
| R | requirement set |
| | position of a particle |
| | velocity of a particle |
| | personal best particles |
| G | global best particles |
| w | inertia weight |
| | social and cognitive components |
| a | local attractor |
| u | uniform random number |
| | contraction-expansion coefficient |
| | mean best of personal best particles |
| | random number |
| | maximum number of iterations |
| | Gaussian distribution of x |
| | evolution velocity coefficient |
| | fitness value of global best particle |
| | potential of statement |
| s | statement covered by test case |
| | priority of test case |
| | selected test suite |
| | reduced test suite |
| | maximum capacity of test suite to be selected |
| | indices of reduced array |
| | maximum number of population |
| | crossover probability |
| | mutation probability |
| A | loudness of bat |
| | pulse emission rate of bat |
| | minimum and maximum frequency of bats |
| n | number of test cases |
| m | number of faults |
| | selected test cases |
| | extracted modification-revealing test cases |
| | total modification-revealing test cases |
| | number of faults not covered |
| | total faults covered |
| | reduced cost of test suite |
| | total cost of test suite |
| | potential coefficients |

References

1. Webert, H.; Döß, T.; Kaupp, L.; Simons, S. Fault Handling in Industry 4.0: Definition, Process and Applications. Sensors 2022, 22, 2205.
2. Yoo, S.; Harman, M. Regression testing minimization, selection and prioritization: A survey. Softw. Testing Verif. Reliab. 2012, 22, 67–120.
3. Bajaj, A.; Sangwan, O.P. Discrete cuckoo search algorithms for test case prioritization. Appl. Soft Comput. 2021, 110, 107584.
4. Li, Z.; Harman, M.; Hierons, R.M. Search algorithms for regression test case prioritization. IEEE Trans. Softw. Eng. 2007, 33, 225–237.
5. Bajaj, A.; Sangwan, O.P. Tri-level regression testing using nature-inspired algorithms. Innov. Syst. Softw. Eng. 2021, 17, 1–16.
6. Bajaj, A.; Abraham, A. Prioritizing and Minimizing Test Cases Using Dragonfly Algorithms. Int. J. Comput. Inf. Syst. Ind. Manag. Appl. 2021, 13, 62–71.
7. Shaukat, N.; Ahmad, A.; Mohsin, B.; Khan, R.; Khan, S.U.D.; Khan, S.U.D. Multiobjective Core Reloading Pattern Optimization of PARR-1 Using Modified Genetic Algorithm Coupled with Monte Carlo Methods. Sci. Technol. Nucl. Install. 2021, 2021, 1802492.
8. Fister, J.I.; Yang, X.S.; Fister, I.; Brest, J.; Fister, D. A brief review of nature-inspired algorithms for optimization. arXiv 2013, arXiv:1307.4186, 116–122.
9. Zhang, W.; Qi, Y.; Zhang, X.; Wei, B.; Zhang, M.; Dou, Z. On test case prioritization using ant colony optimization algorithm. In Proceedings of the 2019 IEEE 21st International Conference on High Performance Computing and Communications; IEEE 17th International Conference on Smart City; IEEE 5th International Conference on Data Science and Systems (HPCC/SmartCity/DSS), Zhangjiajie, China, 10–12 August 2019; pp. 2767–2773.
10. Ahmed, B.S. Test case minimization approach using fault detection and combinatorial optimization techniques for configuration-aware structural testing. Eng. Sci. Technol. Int. J. 2016, 19, 737–753.
11. Mohapatra, S.K.; Prasad, S. Test case reduction using ant colony optimization for object oriented program. Int. J. Electrical. Comput. Eng. 2015, 5, 2088–8708.
12. Zhang, Y.N.; Yang, H.; Lin, Z.K.; Dai, Q.; Li, Y.F. A test suite reduction method based on novel quantum ant colony algorithm. In Proceedings of the 2017 4th International Conference on Information Science and Control Engineering (ICISCE), Changsha, China, 21–23 July 2017; pp. 825–829.
13. Mondal, D.; Hemmati, H.; Durocher, S. Exploring test suite diversification and code coverage in multi-objective test case selection. In Proceedings of the 2015 IEEE 8th International Conference on Software Testing, Verification and Validation (ICST), Graz, Austria, 13–17 April 2015; pp. 1–10.
14. Khatibsyarbini, M.; Isa, M.A.; Jawawi, D.N.A. Particle swarm optimization for test case prioritization using string distance. Adv. Sci. Lett. 2018, 24, 7221–7226.
15. De Souza, L.S.; Prudêncio, R.B.; Barros, F.D.A.; Aranha, E.H.D.S. Search based constrained test case selection using execution effort. Expert Syst. Appl. 2013, 40, 4887–4896.
16. De Souza, L.S.; Prudêncio, R.B.; Barros, F.D.A. A hybrid binary multi-objective particle swarm optimization with local search for test case selection. In Proceedings of the Brazilian conference on intelligent systems, Sao Paulo, Brazil, 18–22 October 2014; pp. 414–419.
17. De Souza, L.S.; Prudêncio, R.B.C.; De Barros, F.A. A hybrid particle swarm optimization and harmony search algorithm approach for multi-objective test case selection. J. Braz. Comput. Soc. 2015, 21, 1–20.
18. Correia, D. An industrial application of test selection using test suite diagnosability. In Proceedings of the 2019 27th ACM Joint Meeting on European Software Engineering Conference and Symposium on the Foundations of Software Engineering, Tallinn, Estonia, 26–30 August 2019; pp. 1214–1216.
19. Bharathi, M. Hybrid particle swarm and ranked firefly metaheuristic optimization-based software test case minimization. Int. J. Appl. Metaheuristic Comput. 2022, 13, 1–20.
20. Nayak, G.; Ray, M. Modified condition decision coverage criteria for test suite prioritization using particle swarm optimization. Int. J. Intell. Comput. Cybern. 2019, 12, 425–443.
21. Deneke, A.; Assefa, B.G.; Mohapatra, S.K. Test suite minimization using particle swarm optimization. Mater. Today Proc. 2022, 1–5.
22. Samad, A.; Mahdin, H.B.; Kazmi, R.; Ibrahim, R.; Baharum, Z. Multiobjective Test Case Prioritization Using Test Case Effectiveness: Multicriteria Scoring Method. Sci. Program. 2021, 2021, 9988987.
23. Agrawal, A.P.; Kaur, A. A comprehensive comparison of ant colony and hybrid particle swarm optimization algorithms through test case selection. In Data Engineering and Intelligent Computing; Springer: Singapore, 2018; pp. 397–405.
24. Lodewijks, G.; Cao, Y.; Zhao, N.; Zhang, H. Reducing CO2 Emissions of an Airport Baggage Handling Transport System Using a Particle Swarm Optimization Algorithm. IEEE Access 2021, 9, 121894–121905.
25. Sun, J.; Xu, W.; Feng, B. A global search strategy of quantum-behaved particle swarm optimization. In Proceedings of the IEEE Conference on Cybernetics and Intelligent Systems, Singapore, 1–3 December 2004; pp. 111–116.
26. Lukemire, J.; Mandal, A.; Wong, W.K. d-qpso: A quantum-behaved particle swarm technique for finding d-optimal designs with discrete and continuous factors and a binary response. Technometrics 2019, 61, 77–87.
27. Iliyasu, A.M.; Fatichah, C. A Quantum Hybrid PSO Combined with Fuzzy k-NN Approach to Feature Selection and Cell Classification in Cervical Cancer Detection. Sensors 2017, 17, 2935.
28. Peng, C.; Yan, J.; Duan, S.; Wang, L.; Jia, P.; Zhang, S. Enhancing Electronic Nose Performance Based on a Novel QPSO-KELM Model. Sensors 2016, 16, 520.
29. Guo, X.; Peng, C.; Zhang, S.; Yan, J.; Duan, S.; Wang, L.; Jia, P.; Tian, F. A Novel Feature Extraction Approach Using Window Function Capturing and QPSO-SVM for Enhancing Electronic Nose Performance. Sensors 2015, 15, 15198–15217.
30. Wen, T.; Yan, J.; Huang, D.; Lu, K.; Deng, C.; Zeng, T.; Yu, S.; He, Z. Feature Extraction of Electronic Nose Signals Using QPSO-Based Multiple KFDA Signal Processing. Sensors 2018, 18, 388.
31. dos Santos Coelho, L. Gaussian quantum-behaved particle swarm optimization approaches for constrained engineering design problems. Expert Syst. Appl. 2010, 37, 1676–1683.
32. Omkar, S.N.; Khandelwal, R.; Ananth, T.V.S.; Naik, G.N.; Gopalakrishnan, S. Quantum behaved particle swarm optimization (QPSO) for multi-objective design optimization of composite structures. Expert Syst. Appl. 2009, 36, 11312–11322.
33. Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95-International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; pp. 1942–1948.
34. Guo, X.; Song, X.; Zhou, J.T. A synergic quantum particle swarm optimisation for constrained combinatorial test generation. IET Softw. 2022, 16, 279–300.
35. Nabi, S.; Ahmad, M.; Ibrahim, M.; Hamam, H. AdPSO: Adaptive PSO-Based Task Scheduling Approach for Cloud Computing. Sensors 2022, 22, 920.
36. Gupta, D.; Gupta, V. Test Suite Prioritization Using Nature Inspired Meta-Heuristic Algorithms. In Intelligent Systems Design and Applications; Advances in Intelligent Systems and Computing; Madureira, A., Abraham, A., Gamboa, D., Novais, P., Eds.; Springer: Cham, Switzerland, 2017; Volume 557.
37. Do, H.; Mirarab, S.; Tahvildari, L.; Rothermel, G. The effects of time constraints on test case prioritization: A series of controlled experiments. IEEE Trans. Softw. Eng. 2010, 36, 593–617.
38. Bajaj, A.; Sangwan, O.P. Study the impact of parameter settings and operators role for genetic algorithm based test case prioritization. In Proceedings of the International Conference on Sustainable Computing in Science, Technology and Management, Jaipur, India, 26–28 February 2019; pp. 1564–1569.
39. Elbaum, S.; Malishevsky, A.G.; Rothermel, G. Test case prioritization: A family of empirical studies. IEEE Trans. Softw. Eng. 2002, 28, 159–182.
40. Bajaj, A.; Sangwan, O.P.; Abraham, A. Improved novel bat algorithm for test case prioritization and minimization. Soft Comput. 2022, 6, 1–27.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).