Implementation of Omni-D Tele-Presence Robot Using Kalman Filter and Tricon Ultrasonic Sensors

Abstract

1. Introduction

1.1. Motivation

1.2. Related Work and Challenges

1.3. Novelty and Contributions

- We present a tele-presence robot (TPR) that uses WebRTC for efficient video transmission. The corresponding details are given in Section 3.3.

- We design an online portal in hypertext markup language (HTML) that can be accessed from anywhere in the world, and we integrate this portal with the proposed tele-presence robot.

- We evaluate the performance of WebRTC for three different video resolutions, i.e., 320 × 280, 820 × 460, and 900 × 590 pixels.

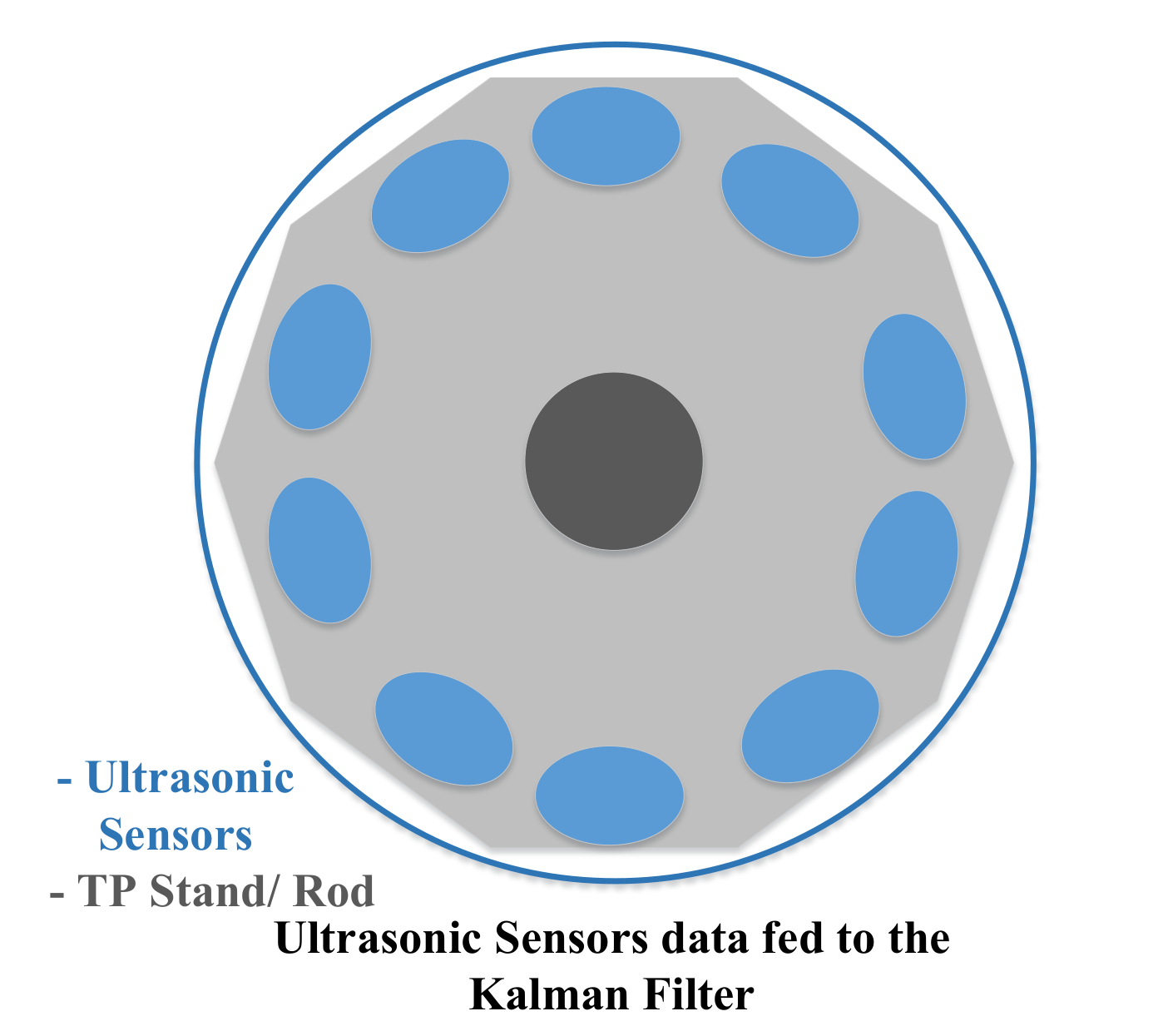

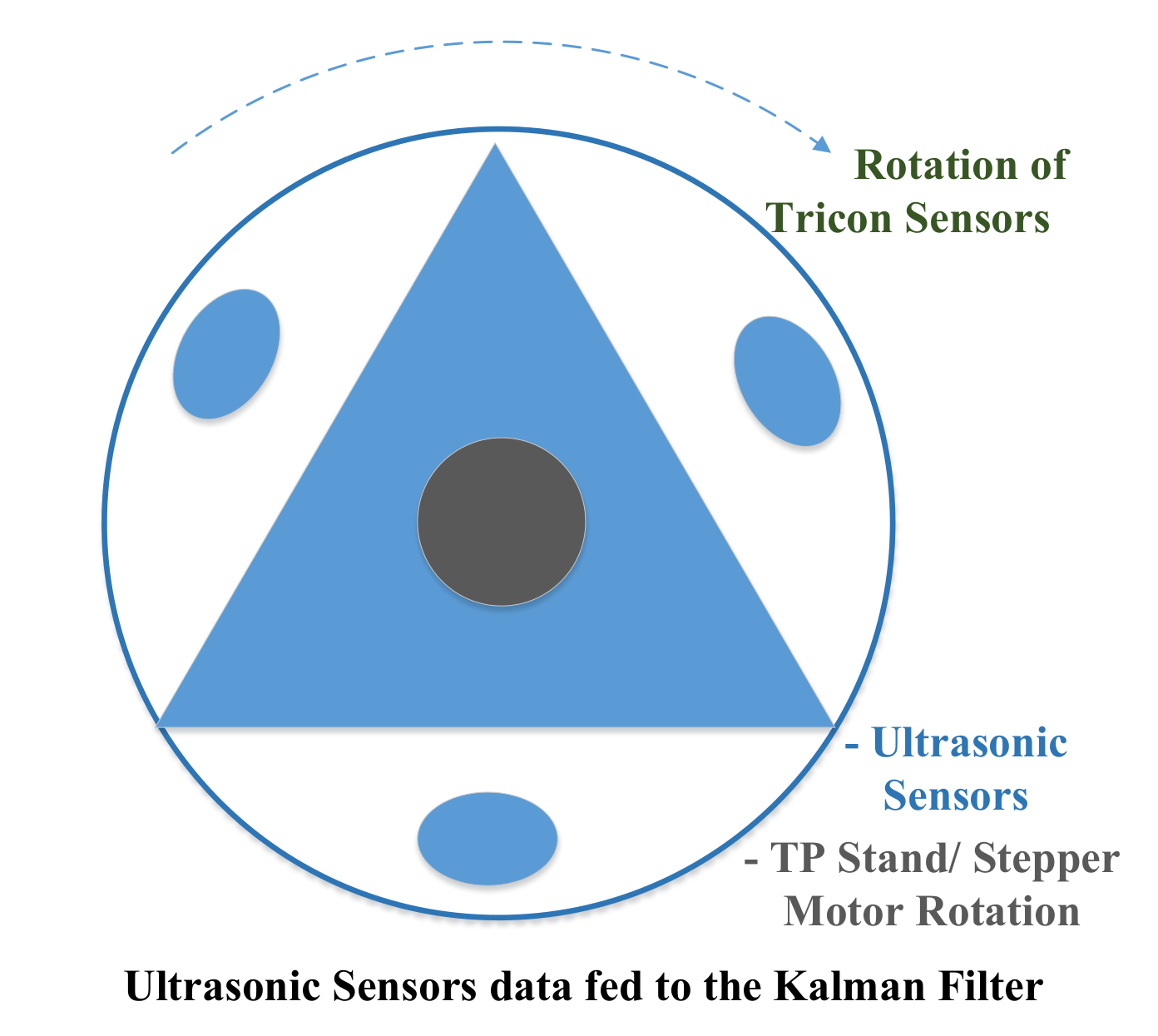

- We propose a Tricon sensor algorithm for the Kalman filter to improve the robustness of collision avoidance.

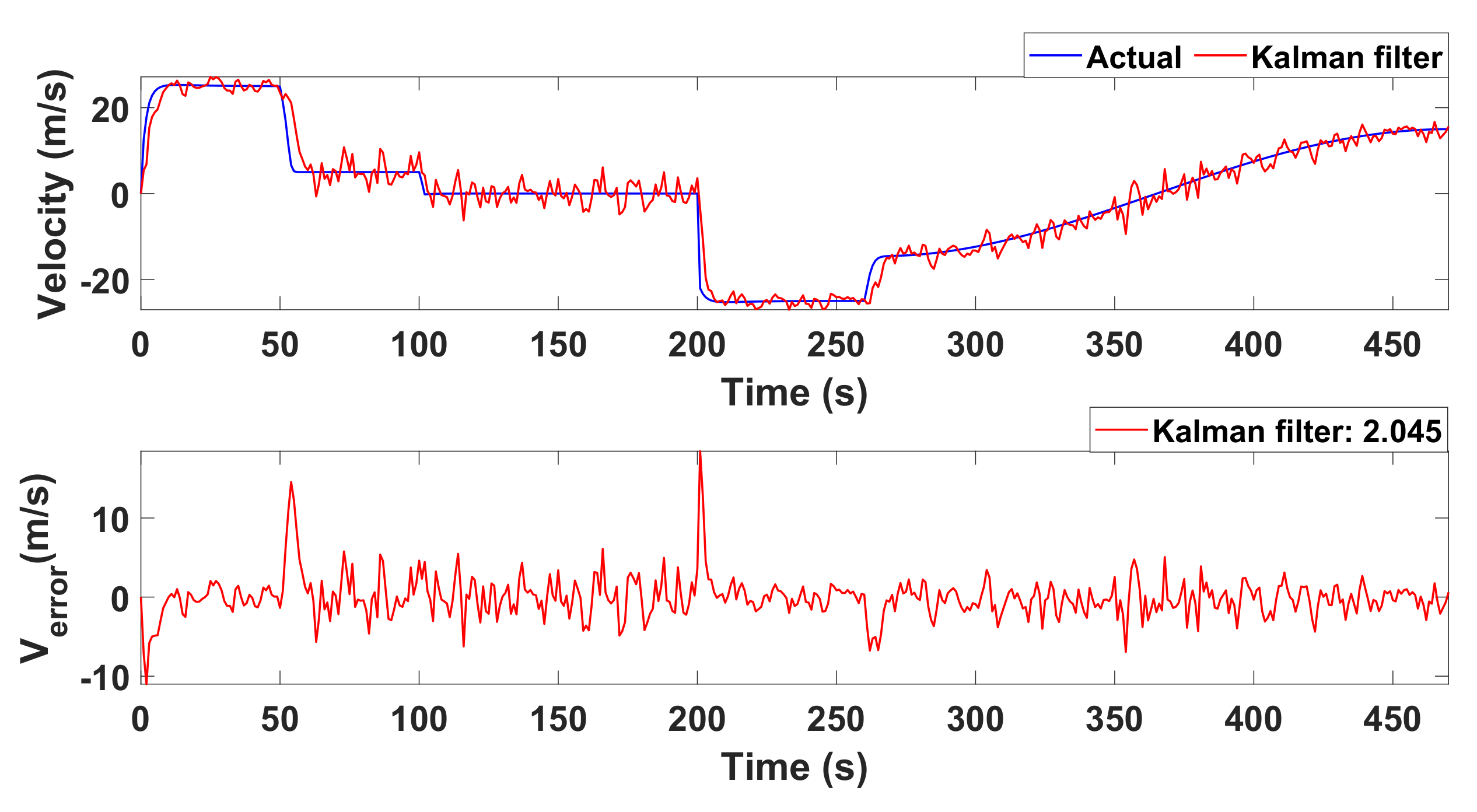

- We simulate the TPR with the Kalman filter on crucial paths/hurdles to estimate the parameters, i.e., velocity and position.

- We implement the TPR in hardware, test it in a real environment, and compare its performance with the simulated results.
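
To make the velocity and position estimation named in the contributions more concrete, the following is a minimal sketch of a one-dimensional constant-velocity Kalman filter. It is not the authors' implementation: the state model, the function name `kalman_cv`, and the noise parameters `q` and `r` are illustrative assumptions, since the robot's actual model and tuning are not given in this section.

```python
def kalman_cv(measurements, dt=0.1, q=0.01, r=0.25):
    """Sketch of a 1-D constant-velocity Kalman filter (assumed model).

    measurements: noisy position readings, one per time step.
    dt: sample period; q: process-noise variance added to each state;
    r: measurement-noise variance. All values here are hypothetical.
    Returns a list of (position, velocity) estimates.
    """
    # State x = [position, velocity]; P is its 2x2 covariance.
    x = [measurements[0], 0.0]
    P = [[1.0, 0.0], [0.0, 1.0]]
    estimates = []
    for z in measurements:
        # Predict: x <- F x, P <- F P F^T + Q, with F = [[1, dt], [0, 1]].
        x = [x[0] + dt * x[1], x[1]]
        p00 = P[0][0] + dt * (P[1][0] + P[0][1]) + dt * dt * P[1][1] + q
        p01 = P[0][1] + dt * P[1][1]
        p10 = P[1][0] + dt * P[1][1]
        p11 = P[1][1] + q
        # Update: only position is measured, so H = [1, 0].
        s = p00 + r                    # innovation variance
        k0, k1 = p00 / s, p10 / s      # Kalman gain
        y = z - x[0]                   # innovation
        x = [x[0] + k0 * y, x[1] + k1 * y]
        # P <- (I - K H) P
        P = [[(1 - k0) * p00, (1 - k0) * p01],
             [p10 - k1 * p00, p11 - k1 * p01]]
        estimates.append((x[0], x[1]))
    return estimates
```

Feeding the function position readings, for example `kalman_cv([0.1 * k for k in range(200)], dt=0.1)`, yields a velocity estimate alongside the smoothed position even though only position is measured. This is the property that makes the filter useful for collision avoidance with range sensors.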

2. Preliminaries

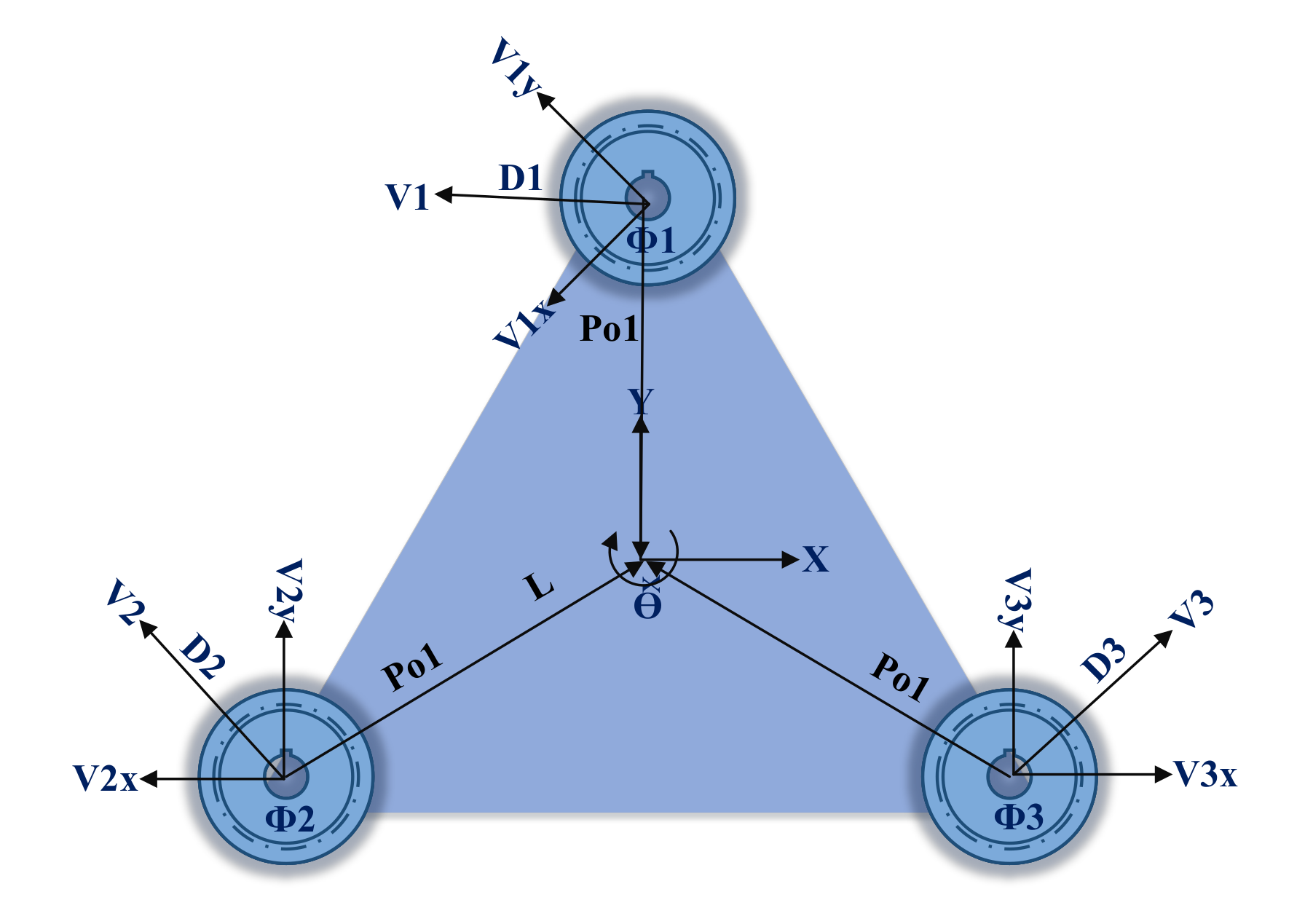

2.1. Modeling of Omnidirectional Wheels

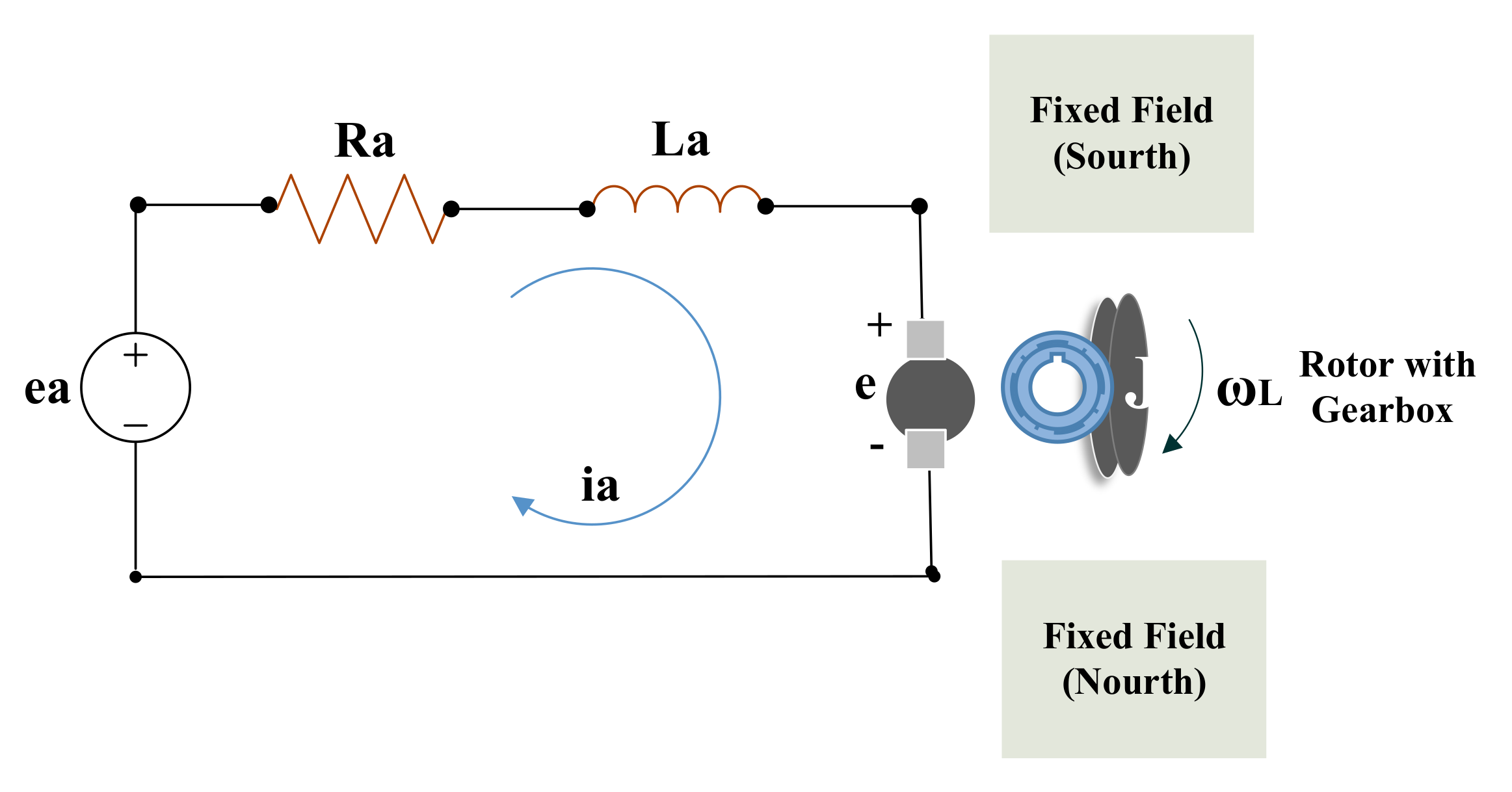

2.2. DC Motor Modeling

2.3. Kalman Filter

3. Proposed Design of Omnidirectional Tele-Presence Robot

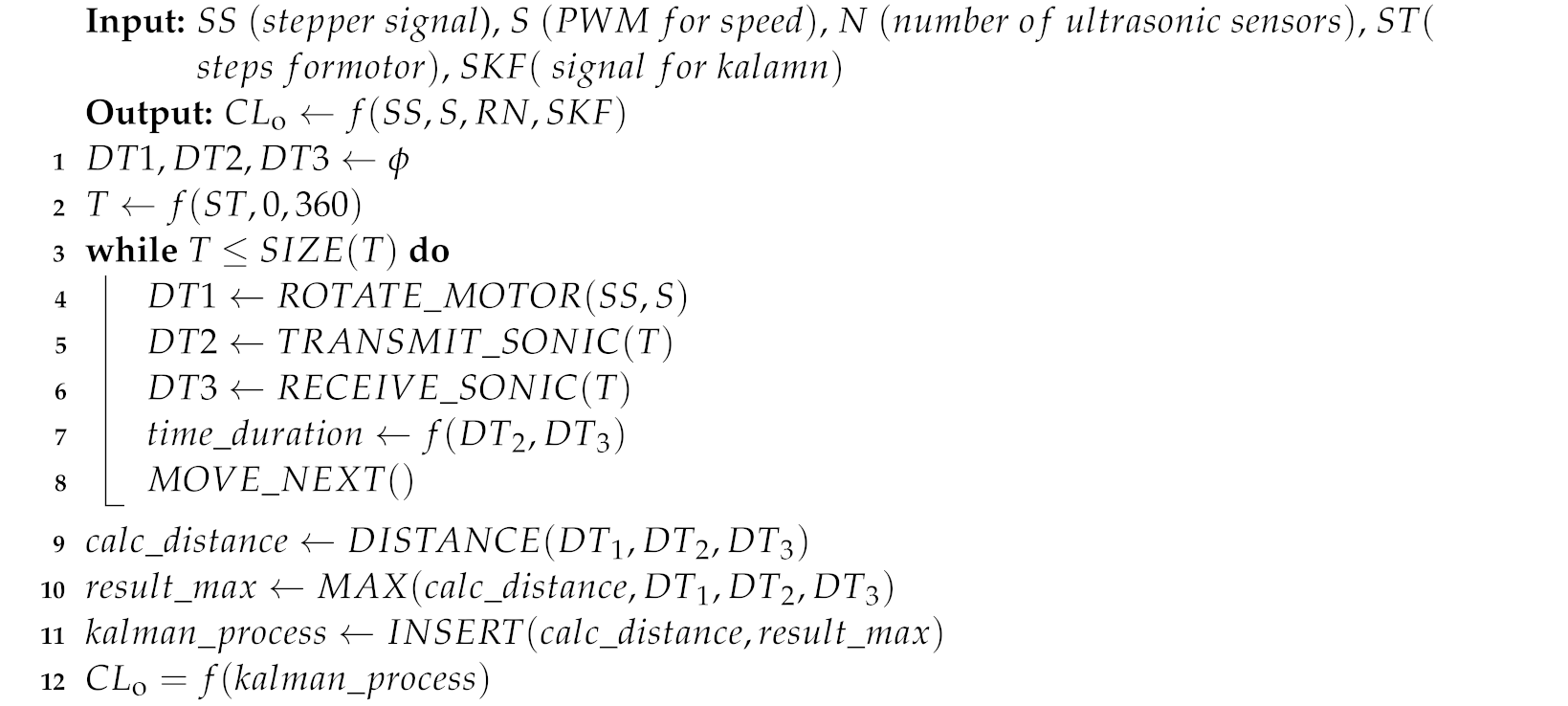

3.1. Our Proposed Algorithm for Tricon Ultrasonic Sensors

Algorithm 1. Proposed Tricon algorithm for the ultrasonic sensors.

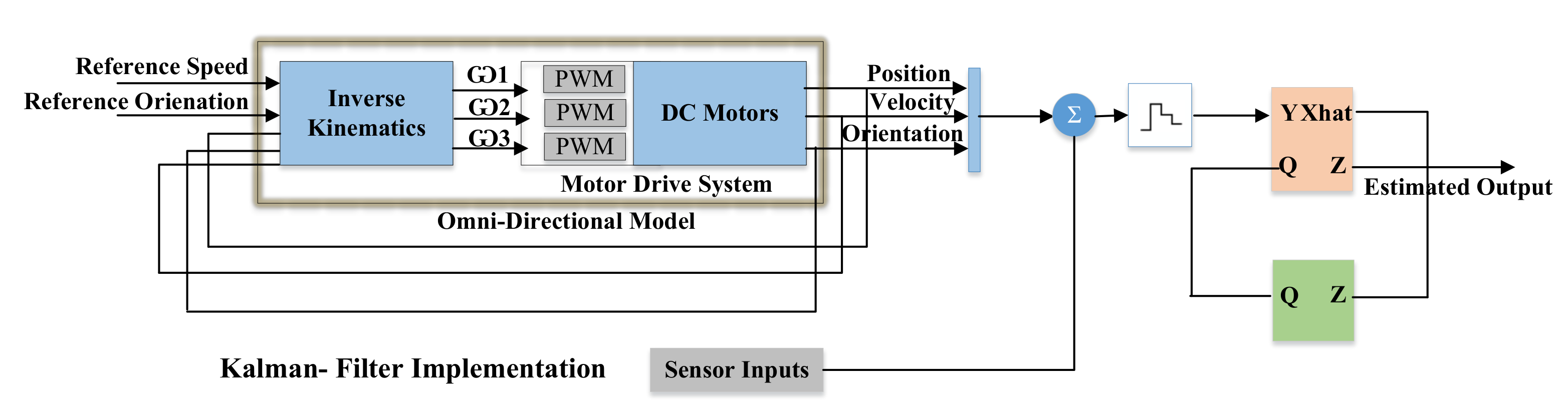

3.2. Control and Integration of Kalman Filter in TPR

3.3. WebRTC Integration in the Tele-Presence Robot (TPR)

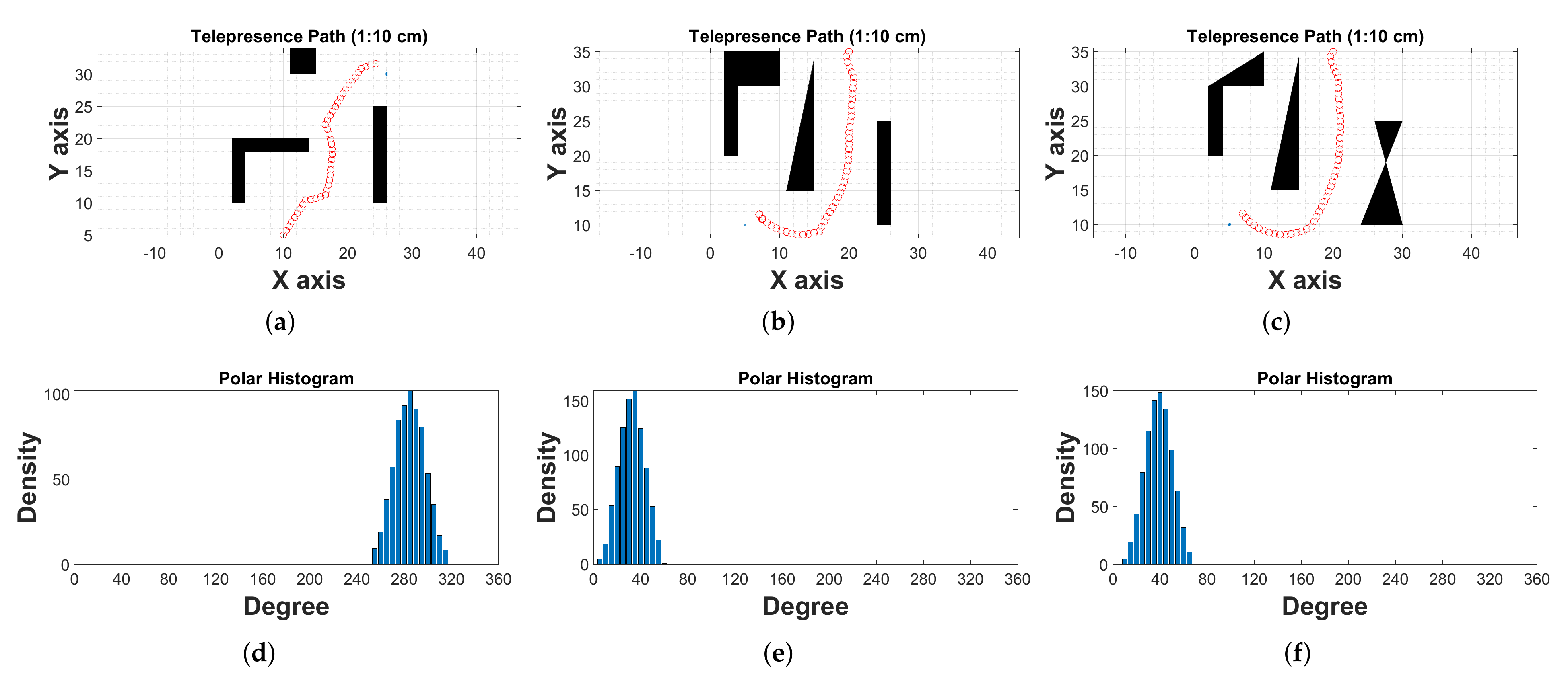

4. Simulation Results

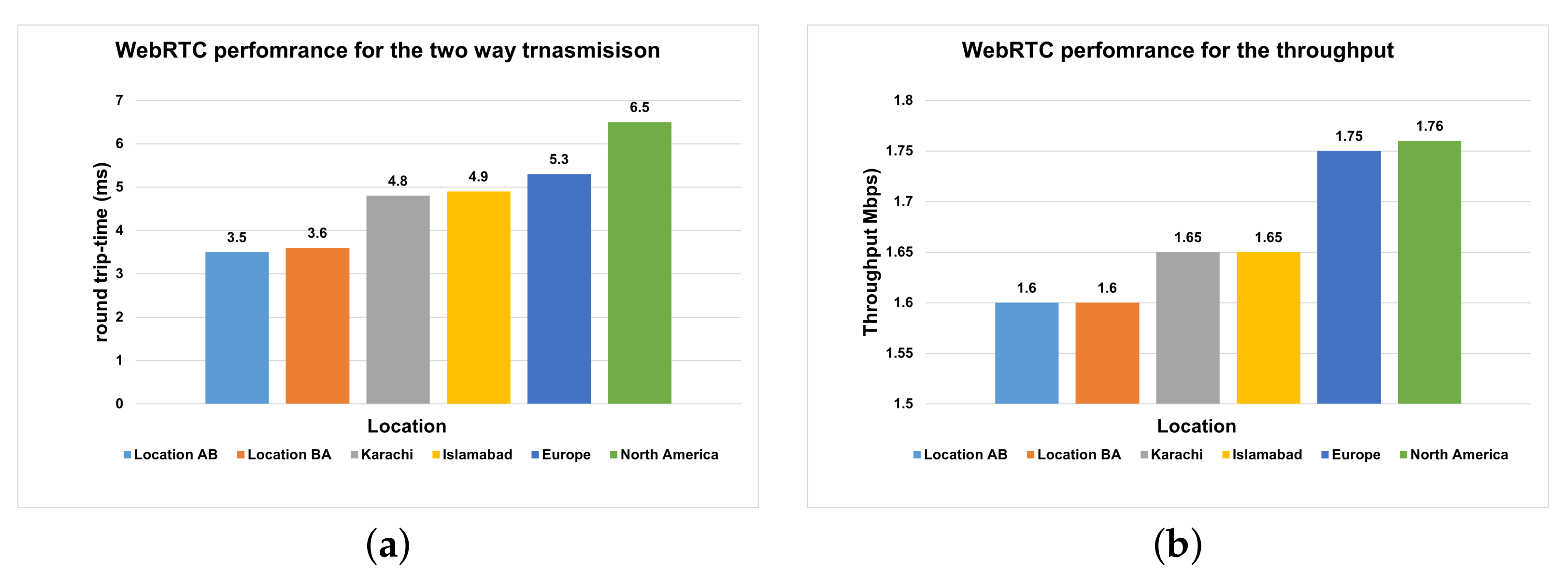

4.1. Performance Evaluation of WebRTC

4.2. Simulation of Controller along with Kalman Filter

5. Hardware Demonstrations and Testing of TPR

5.1. Hardware Demonstration

5.2. Testing of the Tele-Presence Robot

5.3. Significance of the Proposed TPR

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Constants | Description | Value |
|---|---|---|
| | Equivalent viscous damping | 1.2 × Nm s/rad |
| | Sum of the motor inertia | 3.35 × kg· m |
| | Resistance of motor rotor | 340.0 |
| | Armature constant | 0.009540 |
| | Inductance of motor rotor | 0.120 mH |

| API | Resolution | Encode (ms) | Avg. Delay (ms) | Throughput (Mbps) |
|---|---|---|---|---|
| WebRTC | 320 × 280 | | | |
| WebRTC | 820 × 460 | | | |
| WebRTC | 900 × 590 | | | |

| Components | Rating/Model/Value |
|---|---|
| Chassis | 16 gauge alloy |
| Microprocessor | Raspberry Pi Model B |
| Ultrasonic sensors | 1.2 V |
| Accelerometer | MPU6050 |
| Battery (Li-Po) | 11.40 V, 6 A |
| Motor driver | BTN7971 |
| Motors (DC gear) with encoders | FAULHABER 3557K012C |
| Router | DNS/Internet connectivity |
| Omnidirectional wheels | 3-way |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tariq, H.; Rashid, M.; Javed, A.; Riaz, M.A.; Sinky, M.; Zia, M.Y.I. Implementation of Omni-D Tele-Presence Robot Using Kalman Filter and Tricon Ultrasonic Sensors. Sensors 2022, 22, 3948. https://doi.org/10.3390/s22103948
