Strategies for Generating Footsteps of Biped Robots in Narrow Sight

Abstract

1. Introduction

2. Plane Detection

2.1. Point Cloud Filtering

- Voxel grid filter using an octree: This filter reduces the computational load by reducing the number of points. The space containing the point cloud is divided into cubes of equal edge length, and the centroid of all points inside each cube is calculated. All points except these centroids are then removed, so that each occupied cube is represented by a single point. Because this process requires quickly finding the points that belong to each cube, we use an octree structure for fast point search. An octree is a hierarchical data structure for 3D spatial subdivision that recursively divides each volume into eight sub-volumes until the cubes reach a specified resolution length [21].

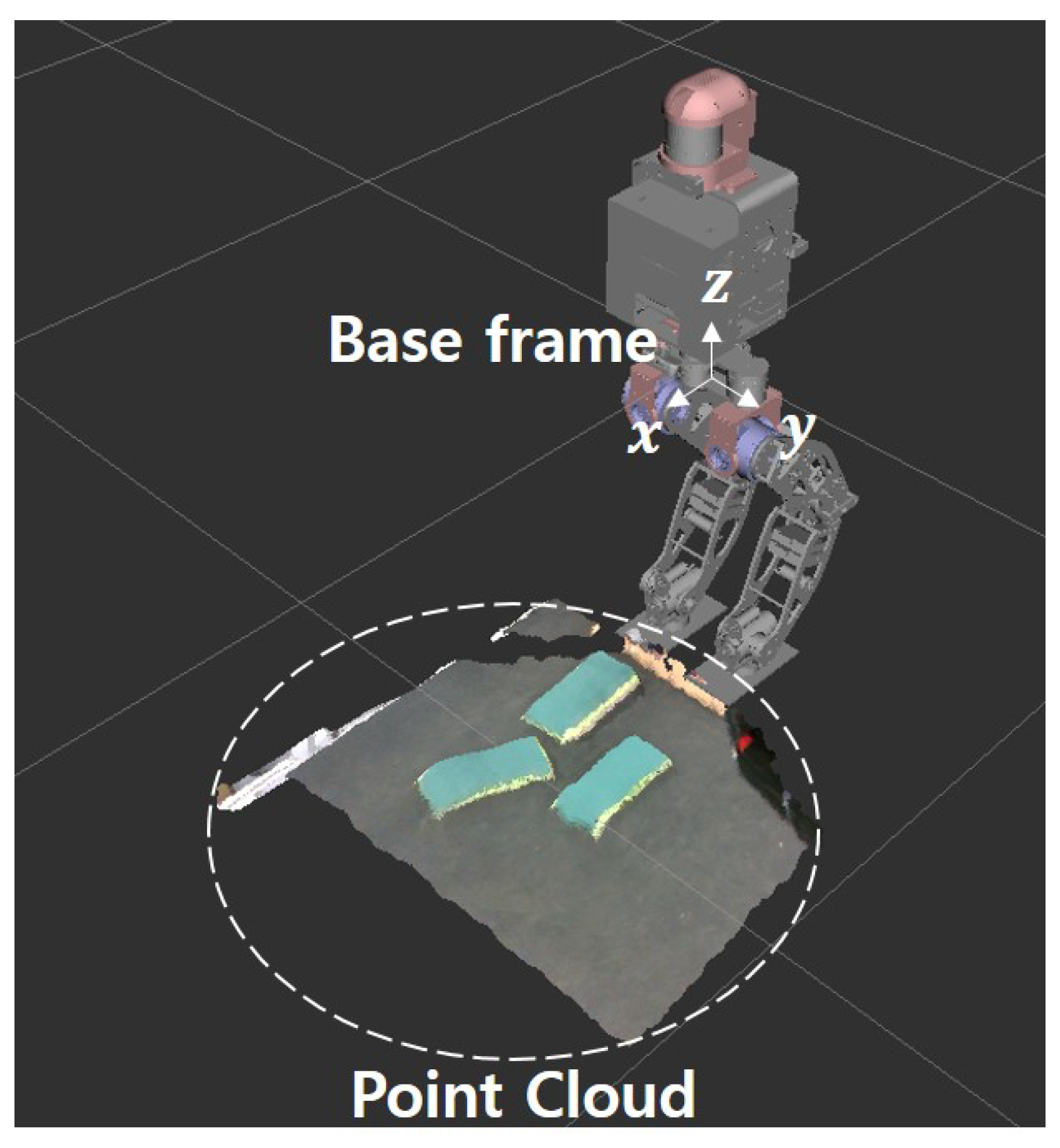

- Transformation: The point cloud acquired from the camera is expressed in the camera's coordinate system, which is not intuitive to interpret. Therefore, we transform every point into the base frame representing the robot and recalculate its position in that frame (Figure 2).

- Pass-through filter: This filter keeps only the points inside a region of interest and removes all others. We reduce the computational load by specifying a range for each axis in 3D space and keeping only the points whose coordinates fall within all of these ranges.

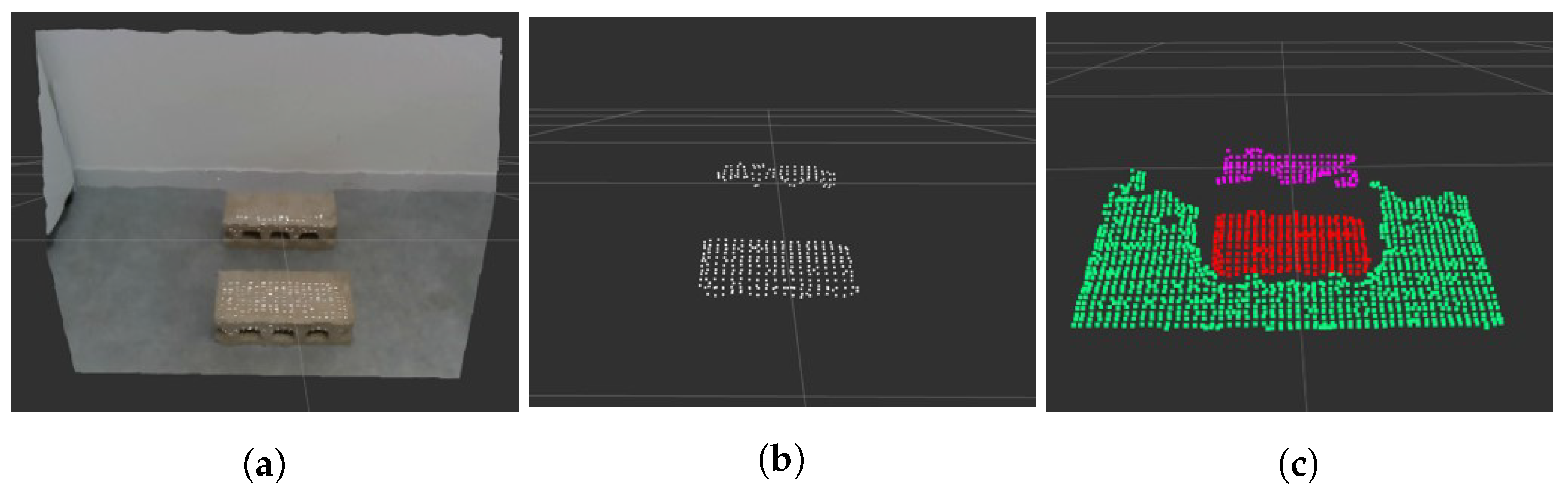
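The three filtering steps above can be sketched in plain Python as follows. This is a minimal illustration, not the authors' implementation: a dictionary keyed by integer voxel indices stands in for the octree (both give fast access to the points inside each cube). The camera-to-base rotation assumes the common optical-frame convention (z forward, x right, y down) and a base frame with x forward, y left and z up; this rotation, the 0.8 m camera height, the 0.1 m cube size and the pass-through limits are hypothetical values chosen only for the example.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]


def voxel_grid_filter(points: Sequence[Point], leaf: float) -> List[Point]:
    """Replace the points inside each occupied cube of edge `leaf` by their centroid."""
    # Hash map from integer voxel index to [sum_x, sum_y, sum_z, count];
    # used here instead of an octree for brevity.
    acc: Dict[Tuple[int, int, int], List[float]] = defaultdict(lambda: [0.0, 0.0, 0.0, 0.0])
    for x, y, z in points:
        key = (math.floor(x / leaf), math.floor(y / leaf), math.floor(z / leaf))
        s = acc[key]
        s[0] += x
        s[1] += y
        s[2] += z
        s[3] += 1.0
    return [(sx / n, sy / n, sz / n) for sx, sy, sz, n in acc.values()]


def transform(points: Sequence[Point], rot: Sequence[Sequence[float]], trans: Point) -> List[Point]:
    """Express points in the base frame: p_base = R * p_cam + t."""
    return [
        tuple(sum(rot[i][j] * p[j] for j in range(3)) + trans[i] for i in range(3))
        for p in points
    ]


def pass_through(points: Sequence[Point], limits: Sequence[Tuple[float, float]]) -> List[Point]:
    """Keep points whose x, y and z each lie inside the matching [min, max] range."""
    return [p for p in points if all(lo <= c <= hi for c, (lo, hi) in zip(p, limits))]


# Hypothetical extrinsics: optical frame (z fwd, x right, y down) -> base (x fwd, y left, z up),
# with the camera origin 0.8 m above the base frame origin.
CAM_TO_BASE_R = ((0.0, 0.0, 1.0), (-1.0, 0.0, 0.0), (0.0, -1.0, 0.0))
CAM_TO_BASE_T = (0.0, 0.0, 0.8)
# Hypothetical region of interest in the base frame (metres): x, y and z ranges.
ROI = ((0.0, 2.0), (-0.5, 0.5), (-0.2, 0.2))

if __name__ == "__main__":
    cloud_cam = [(0.02, 0.78, 1.05), (0.04, 0.76, 1.03), (0.55, 0.75, 2.55)]
    down = voxel_grid_filter(cloud_cam, 0.1)
    filtered = pass_through(transform(down, CAM_TO_BASE_R, CAM_TO_BASE_T), ROI)
    print(filtered)  # one point near (1.04, -0.03, 0.03)
```

In this toy cloud, the first two points share a cube and are merged into one centroid; the third point survives down-sampling but lies outside the region of interest once expressed in the base frame.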

2.2. Random Sample Consensus (RANSAC)

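- Parameters: RANSAC requires the number of sampling iterations and a distance threshold. The distance threshold sets the boundary between inliers and outliers: a point whose distance to a candidate plane is within the threshold is counted as an inlier, and any other point as an outlier.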

- Plane normal: We prevent the robot from walking on planes with a large inclination in roll or pitch. RANSAC estimates the coefficients a, b, c, and d of the plane model ax + by + cz + d = 0, whose normal vector is (a, b, c). The angle between this normal vector and the z-axis unit vector (0, 0, 1) is then calculated in the reference frame shown in Figure 2. If the angle exceeds 15°, the robot considers the plane too steep to walk on and excludes it.
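The two RANSAC parameters and the slope test can be illustrated with a small pure-Python sketch. It is a textbook RANSAC loop (sample three points, fit a plane, count the points within the distance threshold, keep the model with the most inliers) rather than the library implementation the authors used; the function names, the fixed random seed and the synthetic data are assumptions for illustration. The normal is normalized so that |ax + by + cz + d| equals the point-to-plane distance, and |c| is used in the slope test because RANSAC may return a normal pointing either up or down.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]
Plane = Tuple[float, float, float, float]  # (a, b, c, d) of ax + by + cz + d = 0


def plane_from_points(p1: Point, p2: Point, p3: Point) -> Optional[Plane]:
    """Plane through three points with a unit normal, or None if they are collinear."""
    u = [p2[i] - p1[i] for i in range(3)]
    v = [p3[i] - p1[i] for i in range(3)]
    n = (u[1] * v[2] - u[2] * v[1], u[2] * v[0] - u[0] * v[2], u[0] * v[1] - u[1] * v[0])
    norm = math.sqrt(n[0] ** 2 + n[1] ** 2 + n[2] ** 2)
    if norm < 1e-12:
        return None
    a, b, c = n[0] / norm, n[1] / norm, n[2] / norm
    return a, b, c, -(a * p1[0] + b * p1[1] + c * p1[2])


def ransac_plane(points: Sequence[Point], iterations: int, threshold: float,
                 seed: int = 0) -> Tuple[Optional[Plane], List[int]]:
    """Return the plane with the most inliers and the indices of those inliers."""
    rng = random.Random(seed)
    pts = list(points)
    best_plane: Optional[Plane] = None
    best_inliers: List[int] = []
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(pts, 3))
        if plane is None:
            continue
        a, b, c, d = plane
        # With a unit normal, |ax + by + cz + d| is the point-to-plane distance.
        inliers = [i for i, (x, y, z) in enumerate(pts)
                   if abs(a * x + b * y + c * z + d) <= threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers


def tilt_deg(plane: Plane) -> float:
    """Angle between the plane normal (a, b, c) and the z-axis unit vector (0, 0, 1)."""
    a, b, c, _ = plane
    cos_angle = abs(c) / math.sqrt(a * a + b * b + c * c)  # |c|: normal may point up or down
    return math.degrees(math.acos(min(1.0, cos_angle)))


def is_walkable(plane: Plane, max_tilt_deg: float = 15.0) -> bool:
    """Reject planes tilted more than max_tilt_deg (15 degrees in the text)."""
    return tilt_deg(plane) <= max_tilt_deg
```

For example, calling `ransac_plane(points, iterations=100, threshold=0.01)` on a flat 5 × 5 grid of points with a few points lifted above it returns the grid plane with all 25 grid points as inliers, and `is_walkable` accepts that plane.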

2.3. Euclidean Clustering

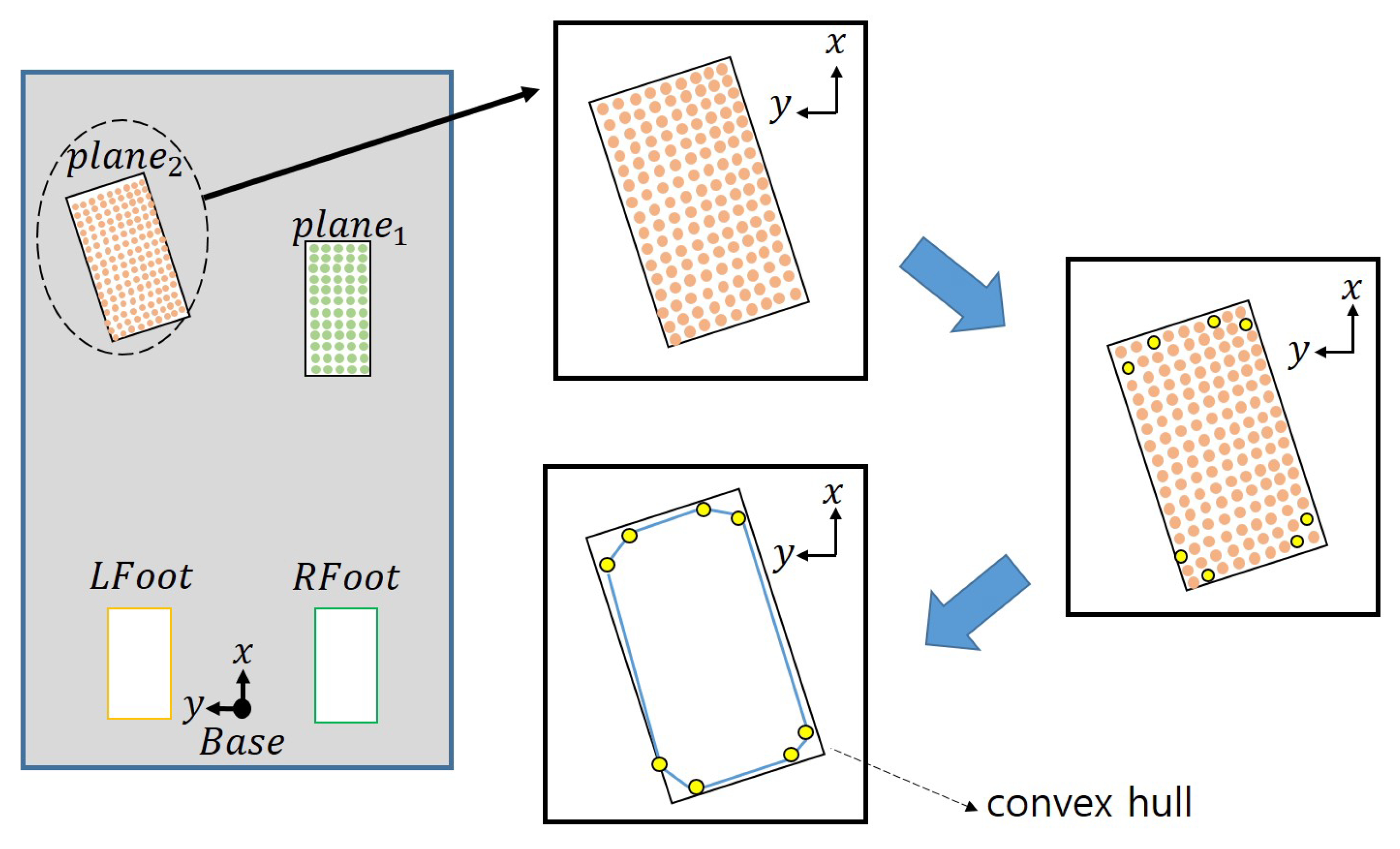

2.4. Calculating the Centroid and Orientation

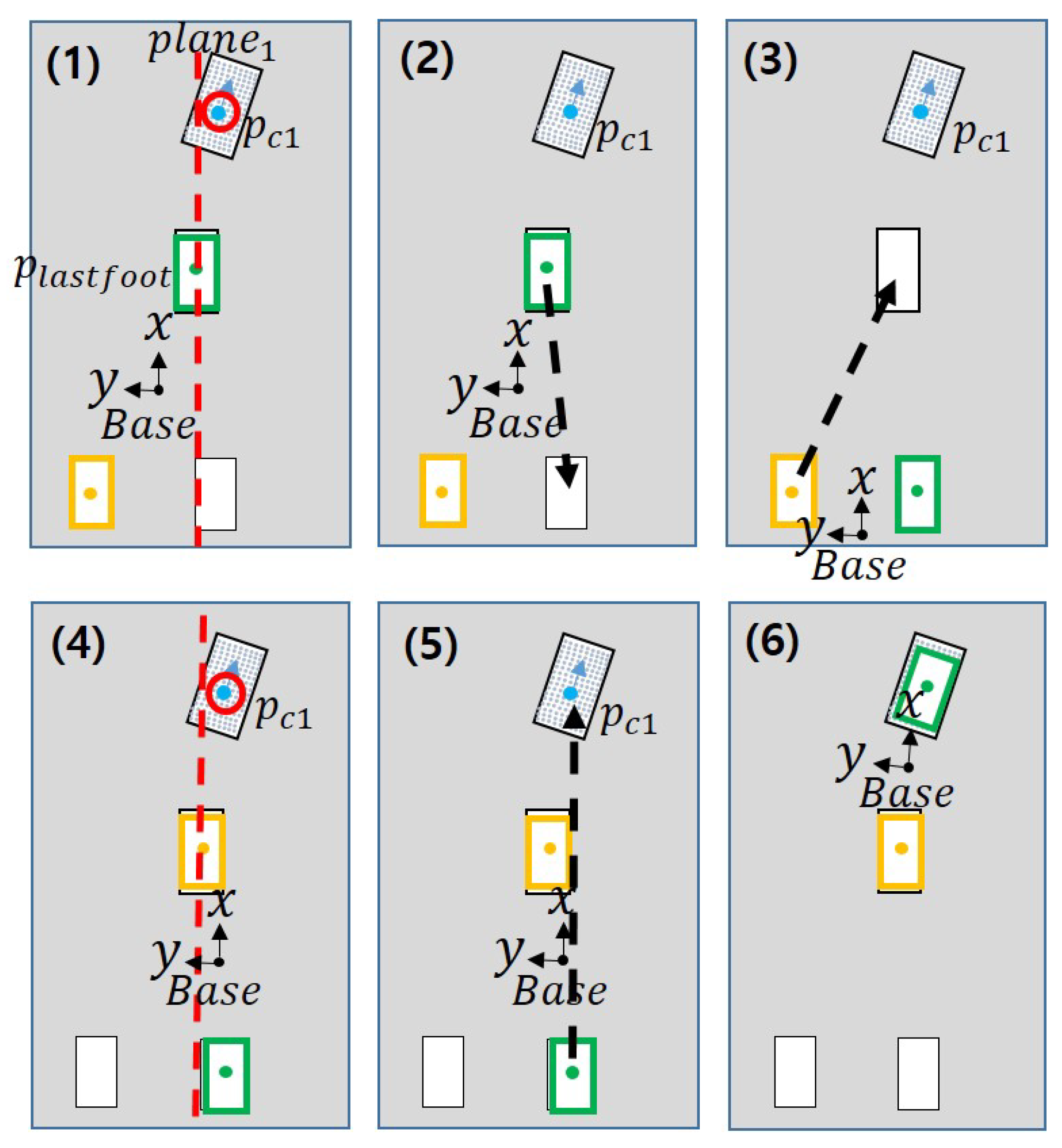

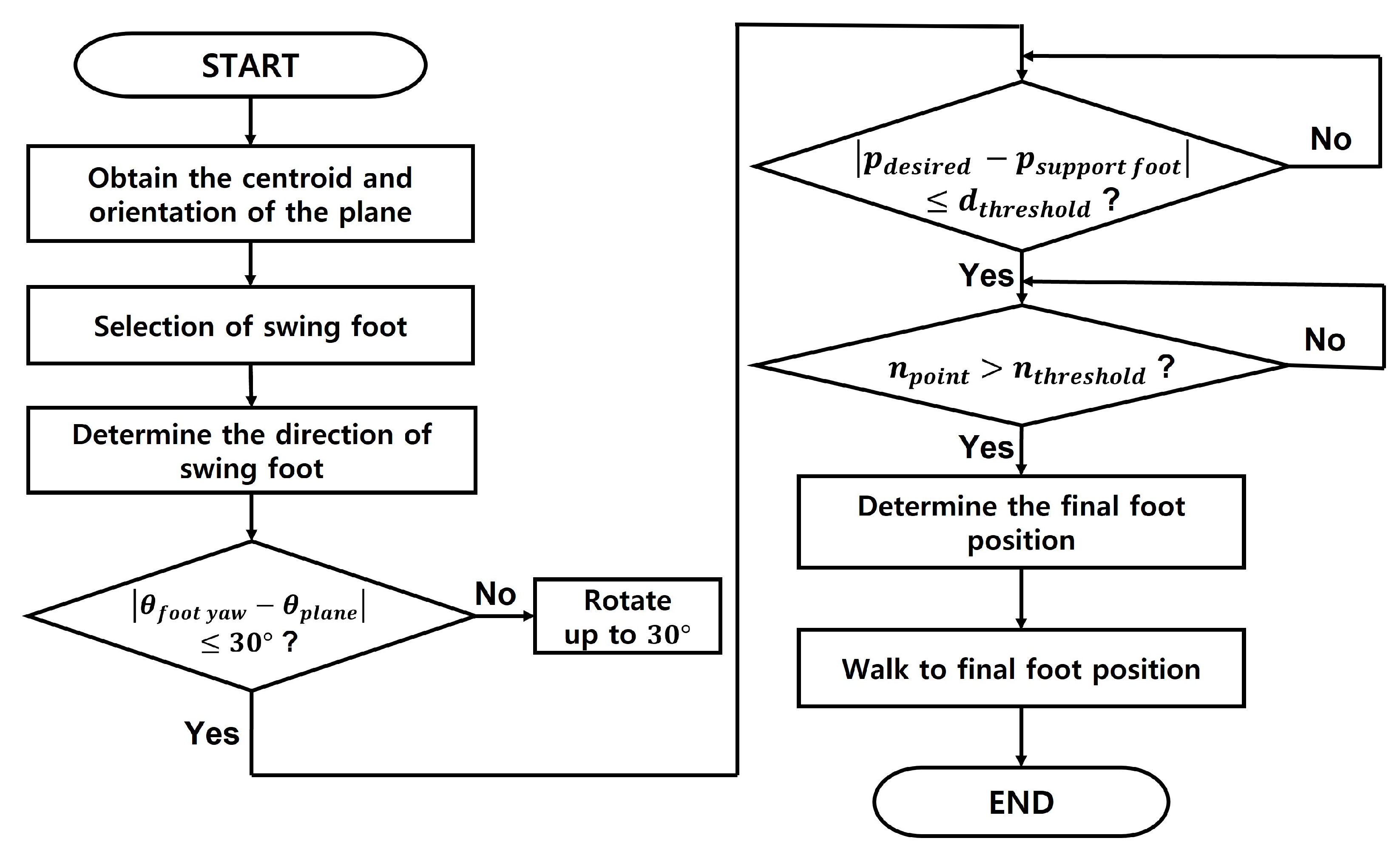

3. Moving Strategy in a Limited Area

- Calculate the center point and orientation of each plane using the method described in Section 2.4.

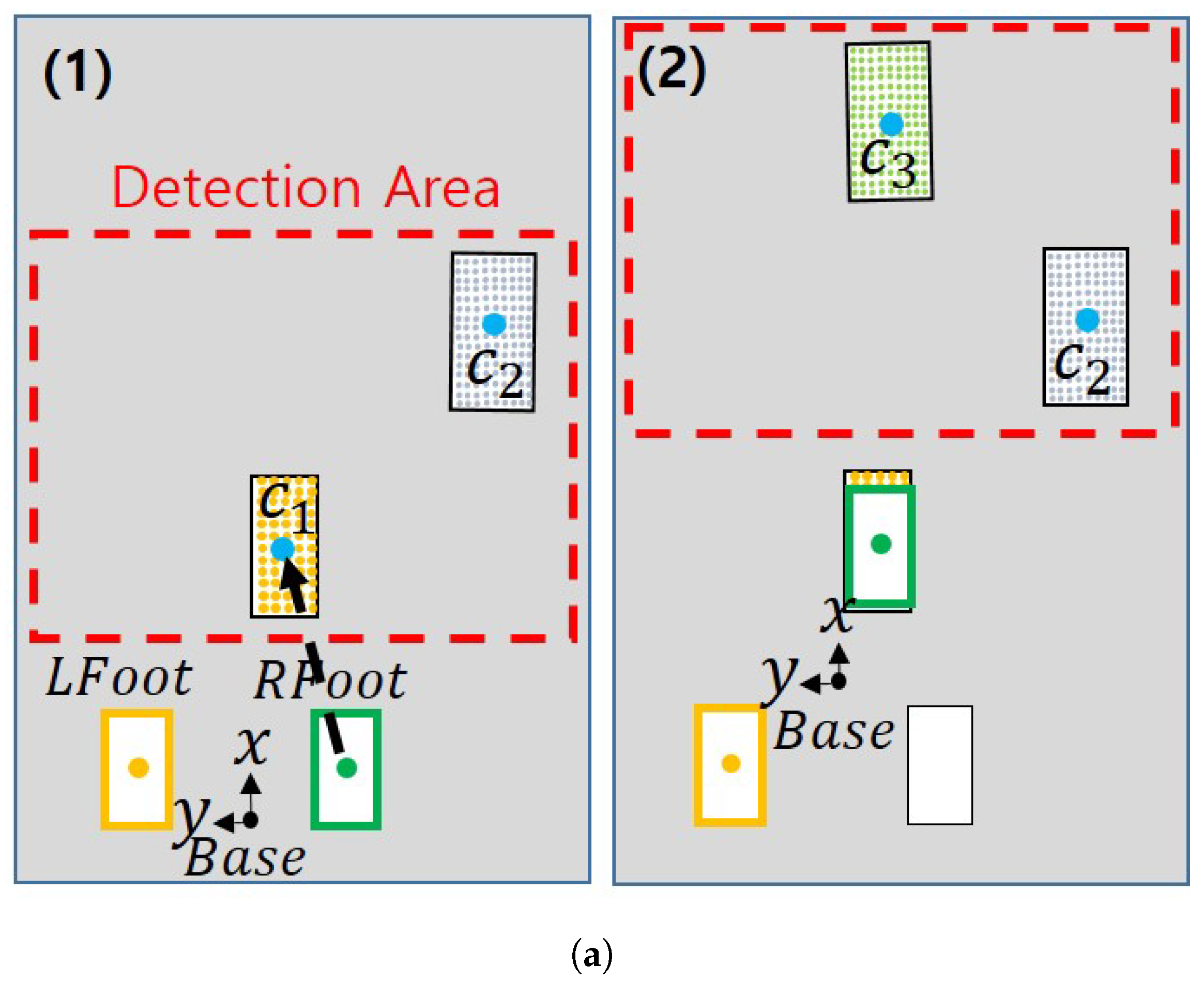

- Sort the planes in order of their distance from the base frame, and check the y-coordinate of the center point of the first (closest) plane. Depending on which side of the base frame this value lies, the swing foot is set to the left or the right foot.

- The yaw of the swing foot is set to the yaw of the first plane. If the difference between the yaw of the swing foot and that of the current foot exceeds a threshold (e.g., 30°), the swing-foot yaw is limited so that the difference equals the threshold.

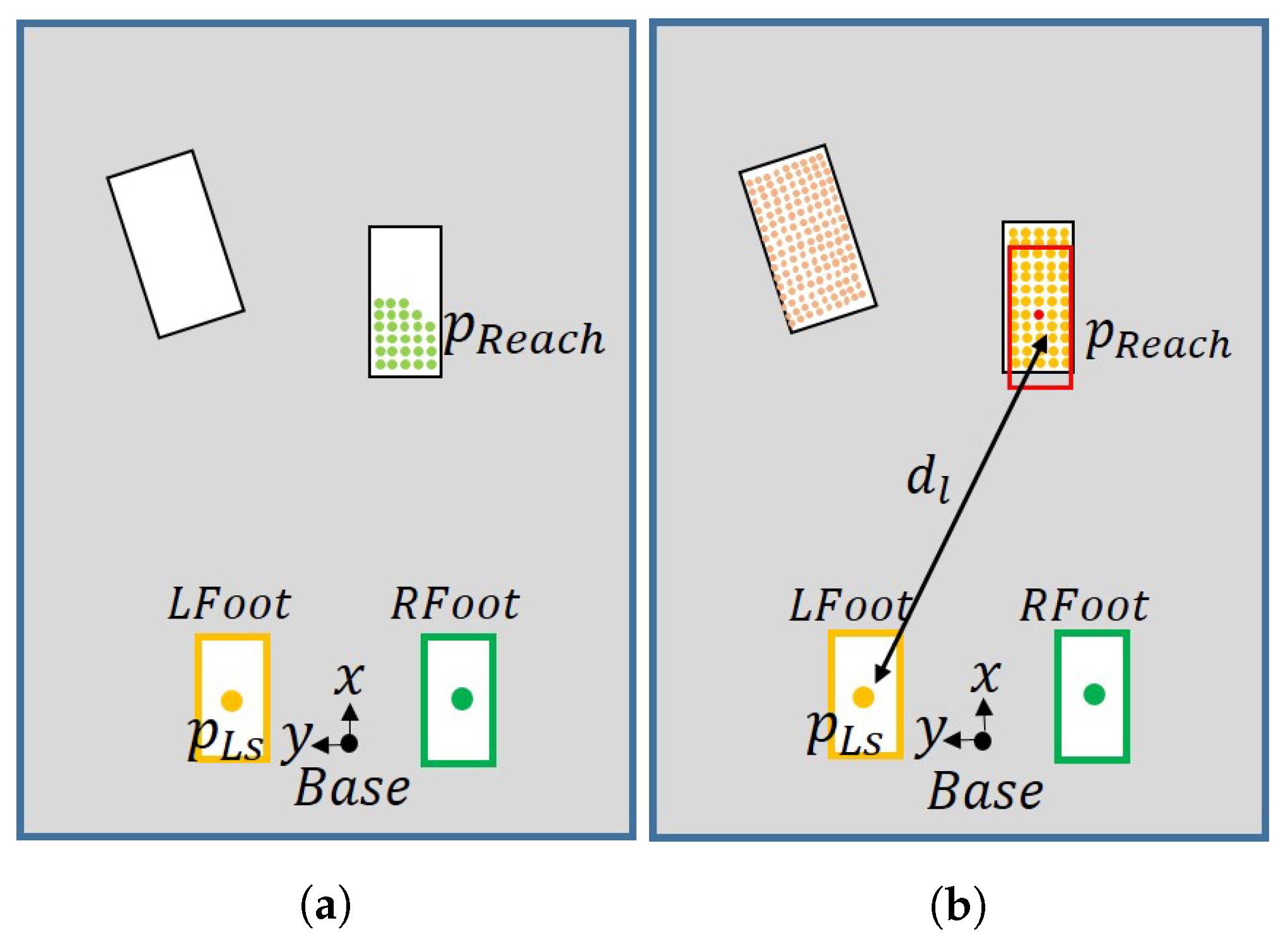
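The side and yaw rules in the two steps above might look like this in code. The sketch assumes a base frame whose y-axis points to the robot's left, so a positive y-coordinate of the plane center selects the left foot; it also wraps angle differences into [-π, π) so that, for example, yaws of 170° and -170° are treated as 20° apart. The function names and the handling of the case y = 0 are hypothetical.

```python
import math


def swing_foot_side(plane_center_y: float) -> str:
    """Pick the swing foot from the side of the base frame on which the first plane lies.

    Assumes the base frame's y-axis points to the robot's left.
    """
    return "left" if plane_center_y > 0.0 else "right"


def wrap_angle(angle: float) -> float:
    """Wrap an angle in radians into [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


def swing_foot_yaw(plane_yaw: float, current_foot_yaw: float,
                   max_diff: float = math.radians(30.0)) -> float:
    """Use the plane's yaw, limited to within max_diff of the current foot's yaw."""
    diff = wrap_angle(plane_yaw - current_foot_yaw)
    diff = max(-max_diff, min(max_diff, diff))  # clamp the yaw change to the threshold
    return wrap_angle(current_foot_yaw + diff)
```

With the default 30° threshold, a plane yawed 50° relative to the current foot yields a swing-foot yaw of 30°, while a plane yawed 10° is followed exactly.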

- To determine the maximum distance the foot can travel, check whether the distance between the center points of the support foot and the swing foot exceeds a threshold.

- Draw a foot-sized rectangle centered on each candidate point obtained in the previous step, and count the points of the detected plane region that fall inside it. Keep the candidates for which this overlap count is greater than a threshold.

- Among the candidates that satisfy these conditions, the one with the largest overlap count is chosen as the final target position of the swing foot, and the robot steps there. If two or more candidates share the maximum count, the one with the greatest travel distance is selected.
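The footprint-overlap test and the final selection can be sketched as follows. The candidate points are taken as given (they would come from the maximum-distance step), the detected plane region is represented as a list of 2D points in the base frame, and the foot dimensions, yaw and threshold are hypothetical parameters. Ranking candidates by the tuple (overlap count, distance from the support foot) selects the highest overlap first and breaks ties in favour of the longer step.

```python
import math
from typing import Optional, Sequence, Tuple

XY = Tuple[float, float]


def count_in_footprint(center: XY, yaw: float, length: float, width: float,
                       region: Sequence[XY]) -> int:
    """Count plane-region points inside a foot-sized rectangle at `center`, rotated by `yaw`."""
    cx, cy = center
    c, s = math.cos(yaw), math.sin(yaw)
    n = 0
    for px, py in region:
        dx, dy = px - cx, py - cy
        # Rotate the offset into the foot frame (x along the foot's length).
        fx = c * dx + s * dy
        fy = -s * dx + c * dy
        if abs(fx) <= length / 2 and abs(fy) <= width / 2:
            n += 1
    return n


def select_step(candidates: Sequence[XY], support: XY, yaw: float, length: float,
                width: float, region: Sequence[XY], min_count: int) -> Optional[XY]:
    """Pick the candidate with the most overlap; ties go to the longest step."""
    best: Optional[XY] = None
    best_key: Optional[Tuple[int, float]] = None
    for cand in candidates:
        count = count_in_footprint(cand, yaw, length, width, region)
        if count <= min_count:  # the overlap must be strictly greater than the threshold
            continue
        dist = math.hypot(cand[0] - support[0], cand[1] - support[1])
        key = (count, dist)  # compared lexicographically: count first, then distance
        if best_key is None or key > best_key:
            best, best_key = cand, key
    return best
```

`select_step` returns `None` when no candidate passes the threshold, which leaves it to the caller to decide how to handle a missing foothold.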

- Compare the position of the last swing foot with the centroid of the first plane, as shown in Figure 8(1). If the plane's center point lies to the left of the last swing foot along the y-axis, set the next swing foot to the left foot.

- Check whether the last swing foot and the swing foot determined in the previous step are the same foot.

- If they are the same foot (Figure 8, where both are the right foot), the last swing foot returns to its previous position, and the other foot becomes the next swing foot and moves to the position the last swing foot had stepped to.

- If they are not the same (Figure 9), the calculated swing foot is used as the next swing foot.
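The replanning rule can be summarized as a small decision function. This is a hypothetical sketch: feet are identified by the strings "left" and "right", the base frame's y-axis is assumed to point to the robot's left, and a target of `None` means that the stepping-position procedure described earlier in this section is run for the chosen foot.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

XY = Tuple[float, float]


@dataclass
class Replan:
    retract_last: bool         # first move the last swing foot back to its previous position
    next_foot: str             # "left" or "right"
    next_target: Optional[XY]  # fixed target, or None to run the stepping procedure


def other_foot(foot: str) -> str:
    return "right" if foot == "left" else "left"


def replan(last_foot: str, last_target: XY, plane_centroid: XY) -> Replan:
    """Decide the next swing foot after the view changes (y-axis assumed to point left)."""
    # Compare the first plane's centroid with the last swing foot along y.
    calculated = "left" if plane_centroid[1] > last_target[1] else "right"
    if calculated == last_foot:
        # Same foot again: undo the last step and send the other foot to that position.
        return Replan(True, other_foot(last_foot), last_target)
    # Different foot: simply use the calculated swing foot.
    return Replan(False, calculated, None)
```

For instance, if the right foot has just stepped to (0.3, -0.1) and the next plane lies further to the right, the right foot is retracted and the left foot is sent to (0.3, -0.1).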

Selecting the Proper Stepping Foot Position on a Plane

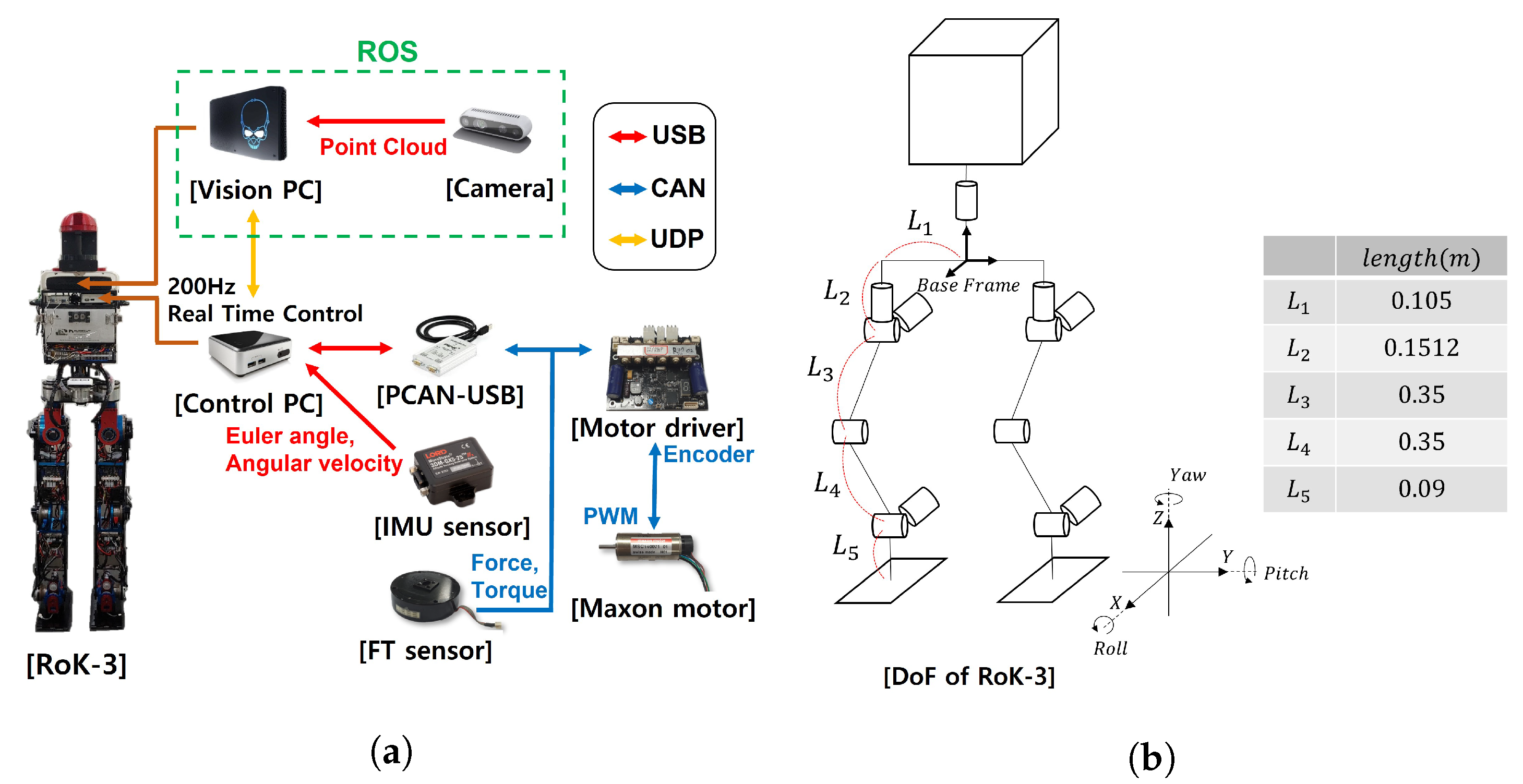

4. An Overview of Rok-3

4.1. Hardware of Rok-3

4.2. System Architecture

5. Experiments

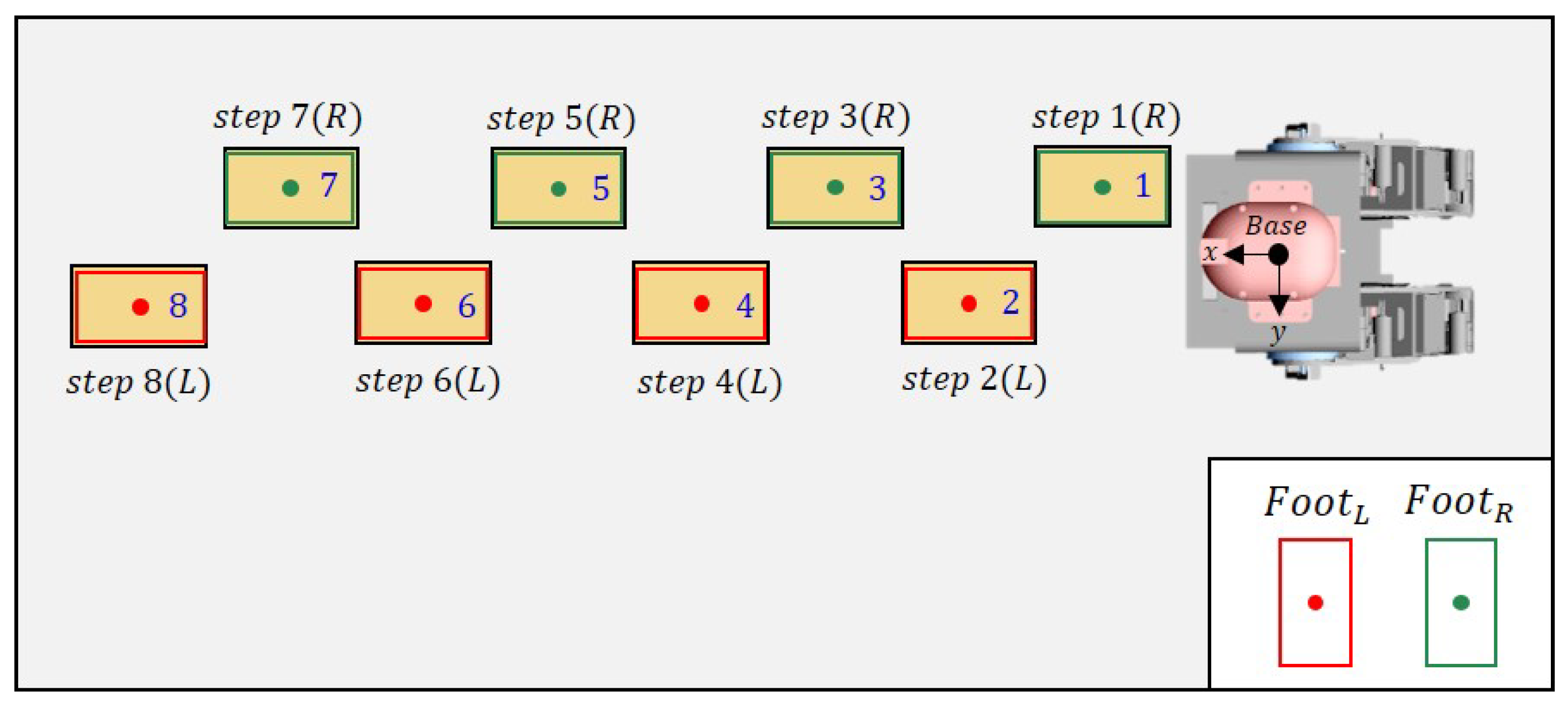

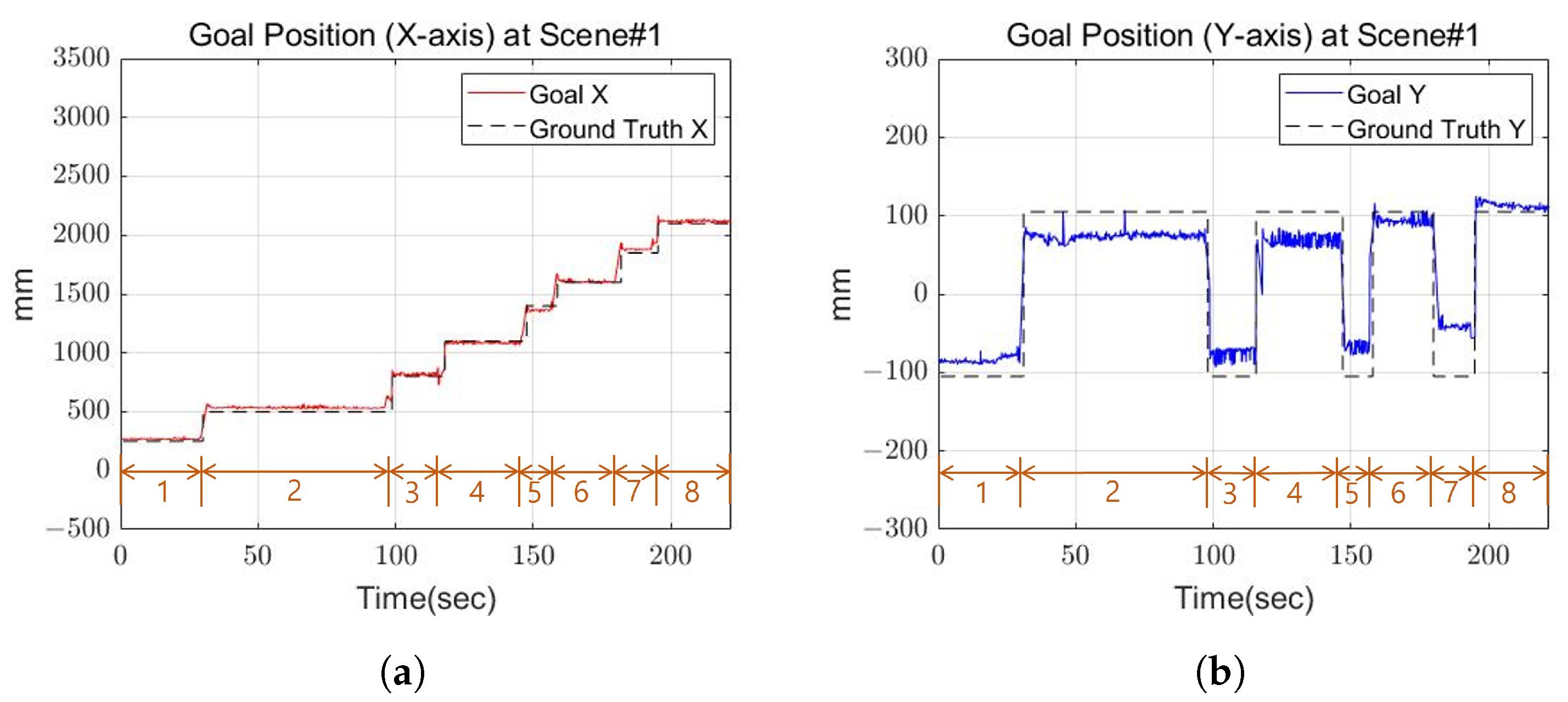

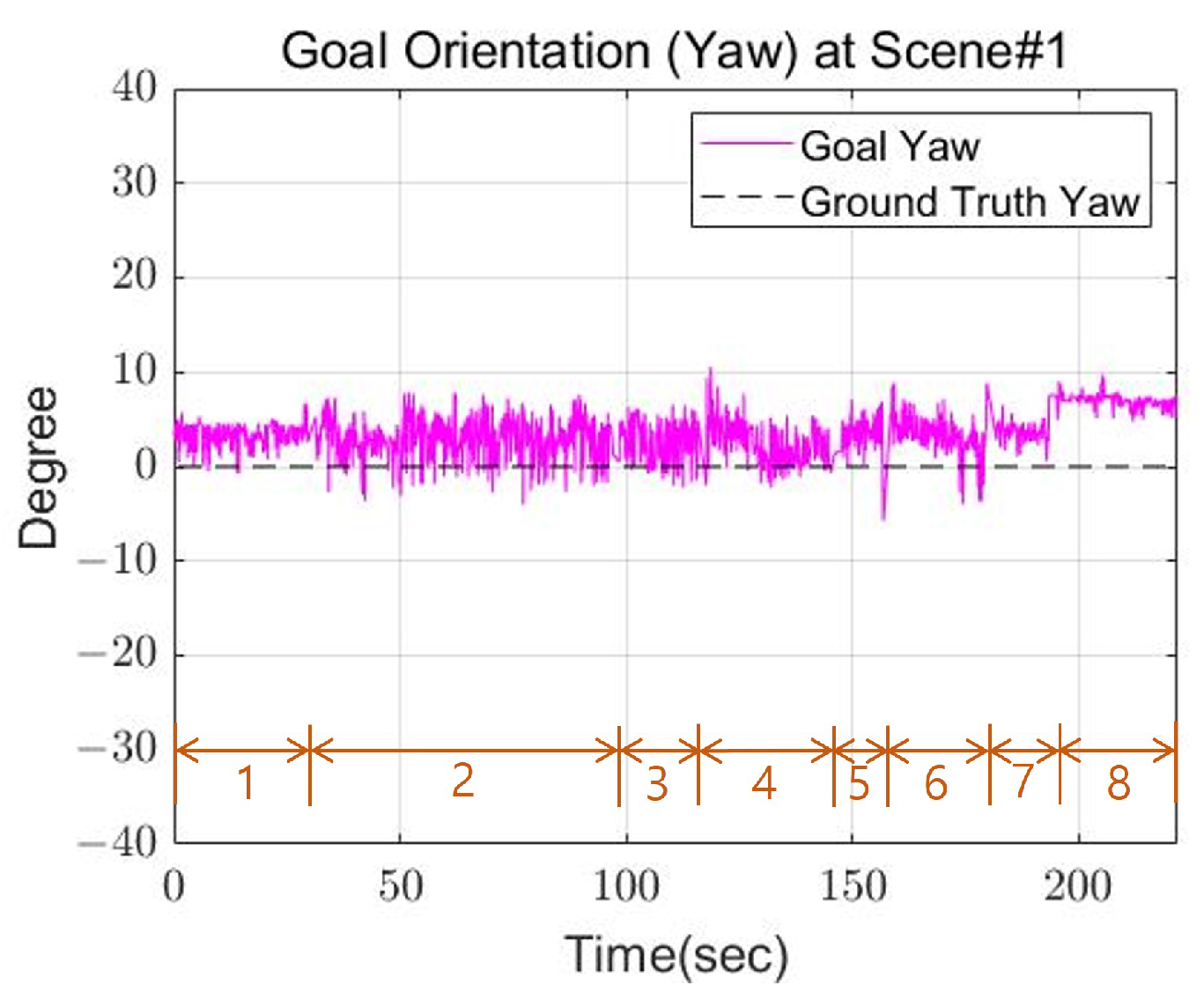

5.1. Scene 1: Walking Straight

5.2. Scene 2: Replanning

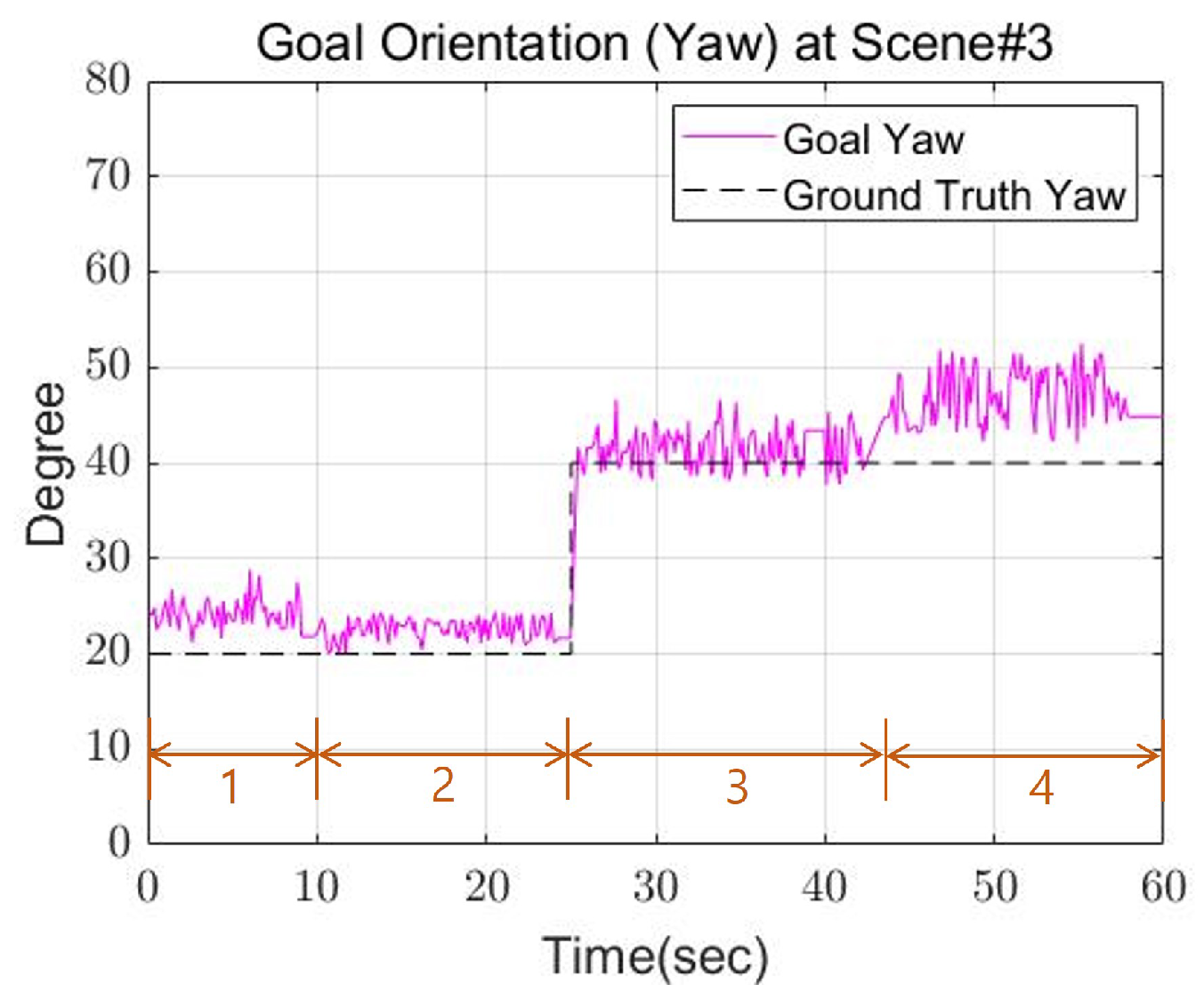

5.3. Scene 3: Turning

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Nagatani, K.; Kiribayashi, S.; Okada, Y.; Otake, K.; Yoshida, K.; Tadokoro, S.; Nishimura, T.; Yoshida, T.; Koyanagi, E.; Fukushima, M.; et al. Emergency response to the nuclear accident at the Fukushima Daiichi Nuclear Power Plants using mobile rescue robots. J. Field Robot. 2013, 30, 44–63.

- Nisticò, Y.; Fahmi, S.; Pallottino, L.; Semini, C.; Fink, G. On Slip Detection for Quadruped Robots. Sensors 2022, 22, 2967.

- Ozkan-Aydin, Y.; Goldman, D.I. Self-reconfigurable multilegged robot swarms collectively accomplish challenging terradynamic tasks. Sci. Robot. 2021, 6, eabf1628.

- Chestnutt, J.; Michel, P.; Nishiwaki, K.; Kuffner, J.; Kagami, S. An intelligent joystick for biped control. In Proceedings of the 2006 IEEE International Conference on Robotics and Automation (ICRA 2006), Orlando, FL, USA, 15–19 May 2006; IEEE: Piscataway, NJ, USA, 2006; pp. 860–865.

- Crews, S.; Travers, M. Energy management through footstep selection for bipedal robots. IEEE Robot. Autom. Lett. 2020, 5, 5485–5493.

- Deits, R.; Tedrake, R. Footstep planning on uneven terrain with mixed-integer convex optimization. In Proceedings of the 2014 IEEE-RAS International Conference on Humanoid Robots, Madrid, Spain, 18–20 November 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 279–286.

- Deepak, B.; Parhi, D.R.; Raju, B. Advance particle swarm optimization-based navigational controller for mobile robot. Arab. J. Sci. Eng. 2014, 39, 6477–6487.

- Cormen, T.H.; Leiserson, C.E.; Rivest, R.L.; Stein, C. Introduction to Algorithms, 2nd ed.; MIT Press and McGraw-Hill: Cambridge, MA, USA, 2001; pp. 595–601.

- DARPA Robotics Challenge Website. Available online: http://archive.darpa.mil/roboticschallenge/ (accessed on 6 May 2021).

- Stumpf, A.; Kohlbrecher, S.; Conner, D.C.; von Stryk, O. Supervised footstep planning for humanoid robots in rough terrain tasks using a black box walking controller. In Proceedings of the 2014 IEEE-RAS International Conference on Humanoid Robots, Madrid, Spain, 18–20 November 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 287–294.

- Chestnutt, J.; Lau, M.; Cheung, G.; Kuffner, J.; Hodgins, J.; Kanade, T. Footstep planning for the Honda ASIMO humanoid. In Proceedings of the 2005 IEEE International Conference on Robotics and Automation, Barcelona, Spain, 18–22 April 2005; IEEE: Piscataway, NJ, USA, 2005; pp. 629–634.

- Michel, P.; Chestnutt, J.; Kuffner, J.; Kanade, T. Vision-guided humanoid footstep planning for dynamic environments. In Proceedings of the 5th IEEE-RAS International Conference on Humanoid Robots, Tsukuba, Japan, 5 December 2005; IEEE: Piscataway, NJ, USA, 2005; pp. 13–18.

- Karkowski, P.; Oßwald, S.; Bennewitz, M. Real-time footstep planning in 3D environments. In Proceedings of the 2016 IEEE-RAS 16th International Conference on Humanoid Robots (Humanoids), Cancun, Mexico, 15–17 November 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 69–74.

- Kanoulas, D.; Stumpf, A.; Raghavan, V.S.; Zhou, C.; Toumpa, A.; Von Stryk, O.; Caldwell, D.G.; Tsagarakis, N.G. Footstep Planning in Rough Terrain for Bipedal Robots Using Curved Contact Patches. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–9.

- Griffin, R.J.; Wiedebach, G.; McCrory, S.; Bertrand, S.; Lee, I.; Pratt, J. Footstep planning for autonomous walking over rough terrain. In Proceedings of the 2019 IEEE-RAS 19th International Conference on Humanoid Robots (Humanoids), Toronto, ON, Canada, 15–17 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 9–16.

- Okada, K.; Ogura, T.; Haneda, A.; Inaba, M. Autonomous 3D walking system for a humanoid robot based on visual step recognition and 3D foot step planner. In Proceedings of the 2005 IEEE International Conference on Robotics and Automation, Barcelona, Spain, 18–22 April 2005; IEEE: Piscataway, NJ, USA, 2005; pp. 623–628.

- Cupec, R.; Schmidt, G.; Lorch, O. Experiments in vision-guided robot walking in a structured scenario. In Proceedings of the IEEE International Symposium on Industrial Electronics (ISIE 2005), Dubrovnik, Croatia, 20–23 June 2005; pp. 1581–1586.

- Yagi, M.; Lumelsky, V. Local on-line planning in biped robot locomotion amongst unknown obstacles. Robotica 2000, 18, 389–402.

- LaValle, S.M. Rapidly-Exploring Random Trees: A New Tool for Path Planning; Technical Report TR 98–11; Computer Science Department, Iowa State University: Ames, IA, USA, 1998.

- PCL Walkthrough. Available online: https://pcl.readthedocs.io/projects/tutorials/en/latest/walkthrough.html (accessed on 5 May 2022).

- Hornung, A.; Wurm, K.M.; Bennewitz, M.; Stachniss, C.; Burgard, W. OctoMap: An efficient probabilistic 3D mapping framework based on octrees. Auton. Robot. 2013, 34, 189–206.

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

- Bertrand, S.; Lee, I.; Mishra, B.; Calvert, D.; Pratt, J.; Griffin, R. Detecting usable planar regions for legged robot locomotion. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 4736–4742.

- Xenomai: Real-Time Framework for Linux. Available online: http://xenomai.org (accessed on 5 May 2022).

- Cho, B.K.; Kim, J.Y. Dynamic posture stabilization of a biped robot SUBO-1 on slope-changing grounds. Int. J. Precis. Eng. Manuf. 2018, 19, 1003–1009.

- Cho, B.K.; Ahn, D.; Jun, Y.; Oh, P. A posture balance controller for a humanoid robot using state and disturbance-observer-based state feedback. J. Intell. Robot. Syst. 2019, 95, 331–349.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yoon, S.-J.; Cho, B.-K. Strategies for Generating Footsteps of Biped Robots in Narrow Sight. Sensors 2022, 22, 3817. https://doi.org/10.3390/s22103817
